---

license: other

---

## Introduction

Aquila is a large language model independently developed by BAAI. Starting from the Aquila model, we applied a multi-stage training process of continued pre-training, SFT (Supervised Fine-Tuning), and RL (Reinforcement Learning) to produce the AquilaMed-RL model. The model has professional capabilities in the medical field and, with GPT-4 as the judge, achieves a significant win rate against the reference answers in annotated data. AquilaMed-RL can perform medical triage, answer medication inquiries, and handle general Q&A. We will open-source the SFT and RL data required to train the model, and we will release a technical report detailing our methods for building a medical-domain model, to support the open-source community.

## Model Details

The training process of the model is described in our technical report: https://github.com/FlagAI-Open/industry-application/blob/main/Aquila_med_tech-report.pdf

## Evaluation

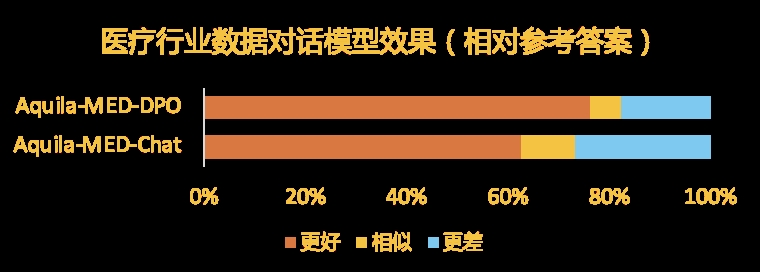

Using GPT-4 for evaluation, the win rates of our model compared to the reference answers in the annotated validation dataset are as follows.
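A win rate of this kind can be computed from per-example judgments. The following is a minimal sketch, not the evaluation code used for this model: the label names (`"win"`, `"tie"`, `"loss"`), the function name `win_rate`, and the optional half-credit for ties are all assumptions made for illustration. The technical report is the authority on how the reported numbers were obtained.

```python
from collections import Counter

VALID_LABELS = {"win", "tie", "loss"}  # hypothetical label names


def win_rate(judgments, count_ties_as_half=False):
    """Return the share of comparisons won by the model.

    judgments: iterable of "win", "tie", or "loss", one label per
    validation example, as assigned by the judge.
    """
    counts = Counter(judgments)
    unknown = set(counts) - VALID_LABELS
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        raise ValueError("no judgments to score")
    wins = counts["win"]
    if count_ties_as_half:
        wins += 0.5 * counts["tie"]
    return wins / total


# Made-up labels purely for illustration; these are not results from the model.
example = ["win", "win", "tie", "loss"]
print(win_rate(example))                           # 0.5
print(win_rate(example, count_ties_as_half=True))  # 0.625
```

Whether ties count as half a win changes the headline number, so it is worth stating which convention is used when comparing win rates across reports.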

## Usage

Once you have downloaded the model locally, you can use the following code for inference.

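```python
import torch
from transformers import AutoModelForCausalLM, AutoTokenizer, AutoConfig

# Path to the locally downloaded model directory.
model_dir = "xxx"

tokenizer = AutoTokenizer.from_pretrained(model_dir, trust_remote_code=True)
config = AutoConfig.from_pretrained(model_dir, trust_remote_code=True)
model = AutoModelForCausalLM.from_pretrained(
    model_dir, config=config, trust_remote_code=True
)
model.cuda()
model.eval()

# Chat prompt template with a fixed system message.
template = "<|im_start|>system\nYou are a helpful assistant in medical domain.<|im_end|>\n<|im_start|>user\n{question}<|im_end|>\n<|im_start|>assistant\n"

text = "我肚子疼怎么办?"  # "What should I do if my stomach hurts?"
item_instruction = template.format(question=text)

inputs = tokenizer(item_instruction, return_tensors="pt").to("cuda")
input_ids = inputs["input_ids"]
prompt_length = len(input_ids[0])

# Greedy decoding; max_length counts prompt tokens plus generated tokens.
generate_output = model.generate(
    input_ids=input_ids, do_sample=False, max_length=1024, return_dict_in_generate=True
)

# Keep only the newly generated tokens, dropping the prompt.
response_ids = generate_output.sequences[0][prompt_length:]
predicts = tokenizer.decode(
    response_ids, skip_special_tokens=True, clean_up_tokenization_spaces=True
)
print("predict:", predicts)

"""
predict: 肚子疼可能是多种原因引起的,例如消化不良、胃炎、胃溃疡、胆囊炎、胰腺炎、肠道感染等。如果疼痛持续或加重,或者伴随有呕吐、腹泻、发热等症状,建议尽快就医。如果疼痛轻微,可以尝试以下方法缓解:
1. 饮食调整:避免油腻、辛辣、刺激性食物,多喝水,多吃易消化的食物,如米粥、面条、饼干等。
2. 休息:避免剧烈运动,保持充足的睡眠。
3. 热敷:用热水袋或毛巾敷在肚子上,可以缓解疼痛。
4. 药物:可以尝试一些非处方药,如布洛芬、阿司匹林等,但请务必在医生的指导下使用。
如果疼痛持续或加重,或者伴随有其他症状,建议尽快就医。
希望我的回答对您有所帮助。如果您还有其他问题,欢迎随时向我提问。

English translation of the output above:
Stomach pain can have many causes, such as indigestion, gastritis, gastric ulcers, cholecystitis, pancreatitis, or intestinal infection. If the pain persists or worsens, or is accompanied by vomiting, diarrhea, fever, or similar symptoms, seek medical attention as soon as possible. If the pain is mild, you can try the following to relieve it:
1. Dietary adjustment: avoid greasy, spicy, and irritating foods; drink plenty of water and eat easily digestible foods such as rice porridge, noodles, and crackers.
2. Rest: avoid strenuous exercise and get enough sleep.
3. Hot compress: applying a hot water bottle or towel to the abdomen can relieve the pain.
4. Medication: you can try some over-the-counter drugs such as ibuprofen or aspirin, but be sure to use them under a doctor's guidance.
If the pain persists or worsens, or is accompanied by other symptoms, seek medical attention as soon as possible.
I hope my answer is helpful. If you have any other questions, feel free to ask me at any time.
"""
```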
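The prompt string in the inference code wraps a system message, the user question, and an open assistant turn in `<|im_start|>` / `<|im_end|>` markers. As a small sketch, the same string can be built by a helper so that the question (and, if desired, the system message) is passed as an argument. The function name `build_prompt` and the `system` parameter are illustrative assumptions; this card does not say whether the model was trained with any other system message, so the default is the one shown in the original template.

```python
# Default system message copied from the template in the usage example.
SYSTEM_PROMPT = "You are a helpful assistant in medical domain."


def build_prompt(question: str, system: str = SYSTEM_PROMPT) -> str:
    """Assemble a single-turn prompt in the format used by the usage example."""
    return (
        f"<|im_start|>system\n{system}<|im_end|>\n"
        f"<|im_start|>user\n{question}<|im_end|>\n"
        "<|im_start|>assistant\n"
    )


prompt = build_prompt("我肚子疼怎么办?")
print(prompt)
```

The returned string can be passed to the tokenizer in place of `item_instruction`. The prompt ends with an open assistant turn, so the tokens the model generates are the reply itself.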

## License

The Aquila series of open-source models is licensed under the [BAAI Aquila Model Licence Agreement](./BAAI-Aquila-Model-License -Agreement.pdf).

## Citation

If you find our work helpful, please cite it.

```
@article{Aquila-Med-LLM,
  title={Aquila-Med LLM: Pioneering Full-Process Open-Source Medical Language Models},
  year={2024}
}
```