---

license: apache-2.0

datasets:

- Chrisneverdie/sports_llm

language:

- en

pipeline_tag: text-generation

tags:

- sports

---

#### Do not run inference from the model card widget; it will not work!

#### Use this model Space instead:

https://huggingface.co/spaces/Chrisneverdie/SportsDPT

This model is fine-tuned on QA pairs, so a plain text-completion prompt will probably result in an error.

Questions unrelated to sports may suffer from poor performance.

The model may still produce incorrect information, so treat it as a toy domain model.

# FirstSportsELM

### The first ever Sports Expert Language Model

Created by Chris Zexin Chen, Sean Xie, and Chengxi Li.

Email for questions: zc2404@nyu.edu

GitHub: https://github.com/chrischenhub/FirstSportsELM

As avid sports enthusiasts, we’ve consistently observed a gap in the market for a dedicated large language model tailored to the sports domain. This research stems from our intrigue about the potential of a language model that is exclusively trained and fine-tuned on sports-related data. We aim to assess its performance against generic language models, thus delving into the unique nuances and demands of the sports industry.

The model architecture comes from Andrej Karpathy's nanoGPT: https://github.com/karpathy/nanoGPT

Here is an example QA from SportsDPT

## Model Checkpoint File

https://drive.google.com/drive/folders/1PSYYWdUWiM5t0KTtlpwQ1YXBWRwV1JWi?usp=sharing

*Put FineTune_ckpt.pt in finetune/model/ if you wish to run inference.*

## Pretrain Data

https://drive.google.com/drive/folders/1bZvWxLnmCDYJhgMDaWumr33KbyDKQUki?usp=sharing

*train.bin ~8.4 GB/4.5B tokens, val.bin ~4.1 MB/2M tokens*
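The sizes above work out to roughly 2 bytes per token, which matches how nanoGPT's data preparation scripts store token IDs (as `uint16`). Here is a minimal sketch for sanity-checking a downloaded file under that assumption. `count_tokens` and `peek_tokens` are hypothetical helpers, not part of this repository, and the little-endian byte order is also an assumption.

```python
import os
import struct

TOKEN_BYTES = 2  # assumption: each token ID is stored as a little-endian uint16


def count_tokens(path):
    """Return the number of tokens in a flat binary token file."""
    size = os.path.getsize(path)
    if size % TOKEN_BYTES != 0:
        raise ValueError(f"{path}: size {size} is not a multiple of {TOKEN_BYTES} bytes")
    return size // TOKEN_BYTES


def peek_tokens(path, n=8):
    """Read up to the first n token IDs without loading the whole file."""
    with open(path, "rb") as f:
        raw = f.read(n * TOKEN_BYTES)
    count = len(raw) // TOKEN_BYTES
    return list(struct.unpack(f"<{count}H", raw[: count * TOKEN_BYTES]))
```

For example, `count_tokens("train.bin")` should land near 4.5 billion if the download is complete and the 2-byte assumption holds.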

## Pretrain

To replicate our model, use train.bin and val.bin from this drive; both are already preprocessed and ready for training.

We trained on a 4xA100 40GB node for 30 hours and reached a validation loss of ~2.36. Once you have set up the environment, run the following:

```$ torchrun --standalone --nproc_per_node=4 train.py config/train_gpt2.py```

You can adjust the parameters in train_gpt2.py. We ran two experiments, and the first one failed badly.

The second run succeeded, and its parameters are all stored in pretrain/train_gpt2.py.

## Fine Tune

We used thousands of GPT-4-generated sports QA pairs to fine-tune our model.

1. Generate Tags, Questions and Responses from GPT-4

*python FineTuneDataGeneration.py api_key Numtag NumQuestion NumParaphrase NumAnswer*

* api_key: your OpenAI API key

* Numtag: number of tags, default 50, optional

* NumQuestion: number of questions, default 16, optional

* NumParaphrase: number of question paraphrases, default 1, optional

* NumAnswer: number of answers, default 2, optional

2. Convert Json to TXT and Bin for fine-tuning

*python Json2Bin.py*

3. Fine Tune OmniSportsGPT

*python train.py FineTuneConfig.py*
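The argument list in step 1 (one required key followed by four optional positional integers) can be illustrated with `argparse`. This is only a sketch of that calling convention using the defaults listed above. It is not the actual parsing code in `FineTuneDataGeneration.py`, and `build_parser` is a hypothetical name.

```python
import argparse


def build_parser():
    """Parser mirroring the documented positional arguments and defaults."""
    parser = argparse.ArgumentParser(prog="FineTuneDataGeneration.py")
    parser.add_argument("api_key", help="API key (required)")
    # nargs="?" makes each positional optional, falling back to its default.
    parser.add_argument("Numtag", nargs="?", type=int, default=50)
    parser.add_argument("NumQuestion", nargs="?", type=int, default=16)
    parser.add_argument("NumParaphrase", nargs="?", type=int, default=1)
    parser.add_argument("NumAnswer", nargs="?", type=int, default=2)
    return parser


if __name__ == "__main__":
    args = build_parser().parse_args()
    print(args.Numtag, args.NumQuestion, args.NumParaphrase, args.NumAnswer)
```

Because the arguments are positional, overriding a later value (for example `NumAnswer`) means supplying every value before it as well.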

## Ask Your Question!

1. Inference

*python Inference.py YourQuestionHere*

*python DefaultAnswer.py*

*python RandomGPT2ChatBot.py*

2. Plot Result

*python plot.py*

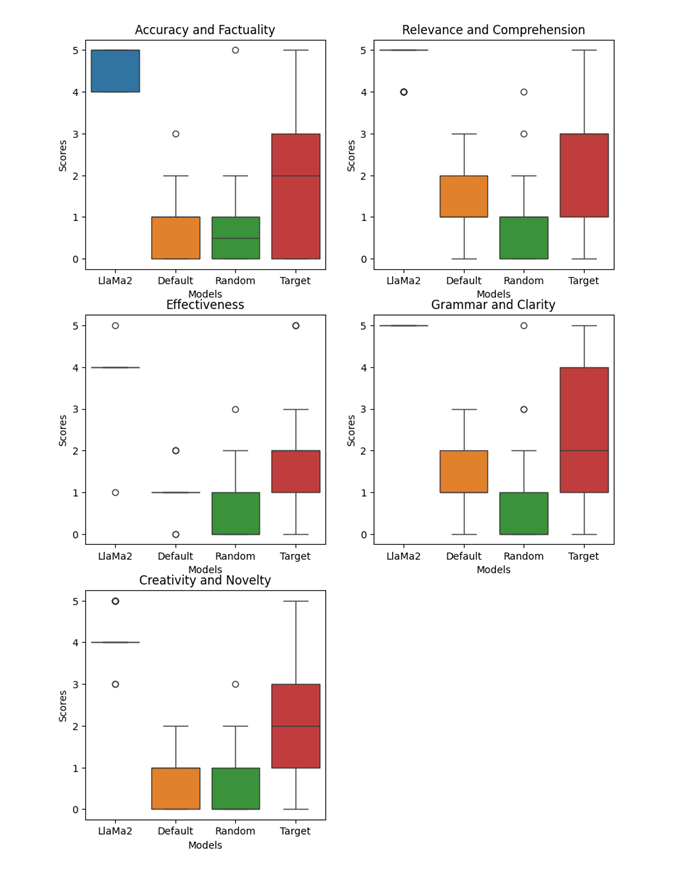

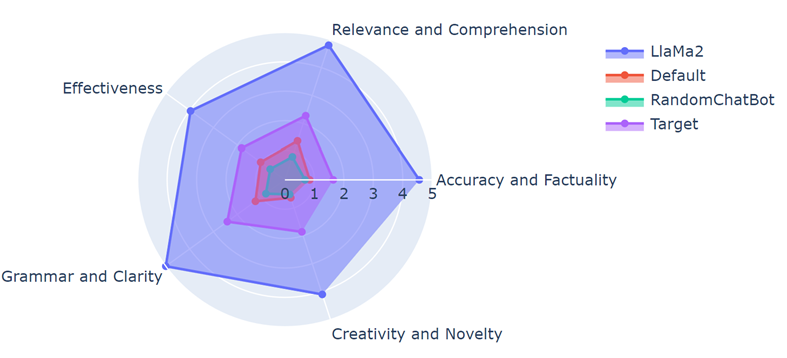

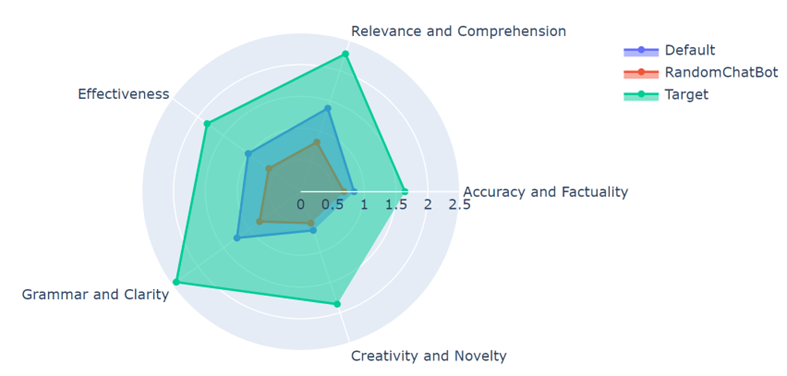
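To ask several questions in one go, the inference command can be wrapped in a small loop. The sketch below assumes that `Inference.py` accepts the whole question as a single command-line argument and prints its answer to standard output. Neither is confirmed here, and `inference_command` and `ask_all` are hypothetical helpers.

```python
import subprocess
import sys


def inference_command(question, script="Inference.py"):
    """Build the argv list for one question, passed as a single argument."""
    return [sys.executable, script, question]


def ask_all(questions, script="Inference.py"):
    """Run the script once per question and collect whatever it prints."""
    answers = {}
    for question in questions:
        result = subprocess.run(
            inference_command(question, script),
            capture_output=True,
            text=True,
            check=True,  # raise if the script exits with an error
        )
        answers[question] = result.stdout.strip()
    return answers
```

Passing the arguments as a list avoids shell quoting issues with multi-word questions.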

## Benchmark

Target: SportsDPT

Default: GPT-2 replica fine-tuned on sports QA

Random: GPT-2-size language model fine-tuned on general QA

Llama2: Llama 2 7B fine-tuned on general QA

## Cost

The entire pretraining and fine-tuning process cost around 250 USD: ~$200 in GPU rentals and ~$50 in OpenAI API usage.
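As a rough cross-check, these figures can be combined with the 4-GPU, 30-hour pretraining run reported earlier. The sketch assumes the whole GPU budget is spread over at least those 120 GPU-hours, so the resulting rate is an upper bound rather than a quoted rental price.

```python
GPUS = 4              # 4xA100 node used for pretraining
HOURS = 30            # reported pretraining wall-clock time
GPU_SPEND_USD = 200   # approximate GPU rental cost
API_SPEND_USD = 50    # approximate OpenAI API cost

gpu_hours = GPUS * HOURS
# Any additional GPU time (failed run, fine-tuning) would lower this rate.
rate_upper_bound = GPU_SPEND_USD / gpu_hours
api_share = API_SPEND_USD / (GPU_SPEND_USD + API_SPEND_USD)

print(f"{gpu_hours} GPU-hours, at most ~${rate_upper_bound:.2f} per GPU-hour")
print(f"API share of total: {api_share:.0%}")
```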