Adding README

Browse files

README.md

CHANGED

|

@@ -1,3 +1,80 @@

|

|

| 1 |

---

|

| 2 |

license: apache-2.0

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 3 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

license: apache-2.0

|

| 3 |

+

library_name: tfhub

|

| 4 |

+

language: en

|

| 5 |

+

tags:

|

| 6 |

+

- text

|

| 7 |

+

- sentence-similarity

|

| 8 |

+

- use

|

| 9 |

+

- universal-sentence-encoder

|

| 10 |

+

- tensorflow

|

| 11 |

---

|

| 12 |

+

|

| 13 |

+

# Overview

|

| 14 |

+

|

| 15 |

+

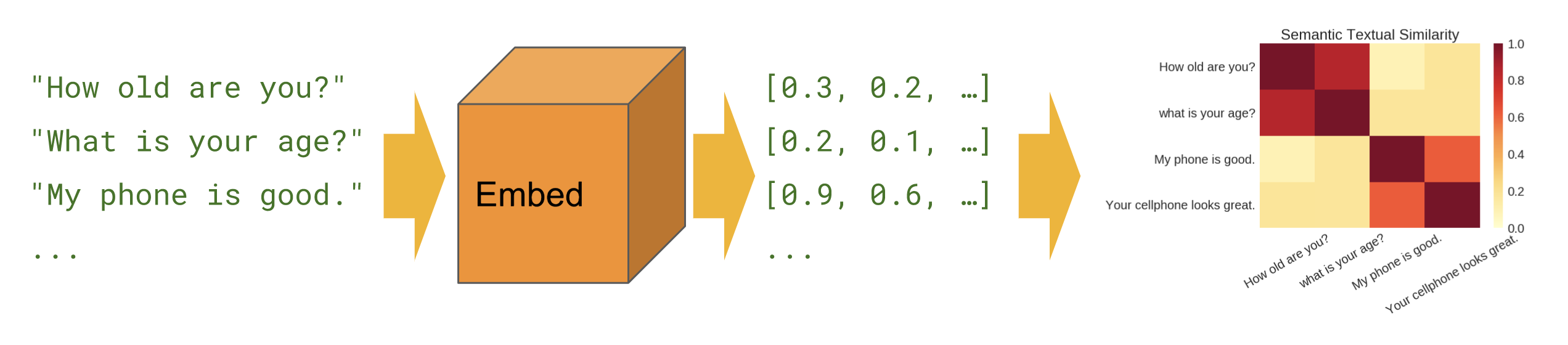

The Universal Sentence Encoder encodes text into high-dimensional vectors that can be used for text classification, semantic similarity, clustering and other natural language tasks.

|

| 16 |

+

|

| 17 |

+

The model is trained and optimized for greater-than-word length text, such as sentences, phrases or short paragraphs. It is trained on a variety of data sources and a variety of tasks with the aim of dynamically accommodating a wide variety of natural language understanding tasks. The input is variable length English text and the output is a 512 dimensional vector. We apply this model to the [STS benchmark](https://ixa2.si.ehu.es/stswiki/index.php/STSbenchmark) for semantic similarity, and the results can be seen in the [example notebook](https://colab.research.google.com/github/tensorflow/hub/blob/master/examples/colab/semantic_similarity_with_tf_hub_universal_encoder.ipynb) made available. The universal-sentence-encoder model is trained with a deep averaging network (DAN) encoder.

|

| 18 |

+

|

| 19 |

+

To learn more about text embeddings, refer to the [TensorFlow Embeddings](https://www.tensorflow.org/tutorials/text/word_embeddings) documentation. Our encoder differs from word level embedding models in that we train on a number of natural language prediction tasks that require modeling the meaning of word sequences rather than just individual words. Details are available in the paper "Universal Sentence Encoder" [1].

|

| 20 |

+

|

| 21 |

+

## Universal Sentence Encoder family

|

| 22 |

+

|

| 23 |

+

There are several versions of universal sentence encoder models trained with different goals including size/performance multilingual, and fine-grained question answer retrieval.

|

| 24 |

+

|

| 25 |

+

- [Universal Sentence Encoder family](https://tfhub.dev/google/collections/universal-sentence-encoder/1)

|

| 26 |

+

|

| 27 |

+

### Example use

|

| 28 |

+

|

| 29 |

+

|

| 30 |

+

### Using TF Hub and HF Hub

|

| 31 |

+

```

|

| 32 |

+

model_path = snapshot_download(repo_id="Dimitre/universal-sentence-encoder")

|

| 33 |

+

model = KerasLayer(handle=model_path)

|

| 34 |

+

embeddings = model([

|

| 35 |

+

"The quick brown fox jumps over the lazy dog.",

|

| 36 |

+

"I am a sentence for which I would like to get its embedding"])

|

| 37 |

+

|

| 38 |

+

print(embeddings)

|

| 39 |

+

|

| 40 |

+

# The following are example embedding output of 512 dimensions per sentence

|

| 41 |

+

# Embedding for: The quick brown fox jumps over the lazy dog.

|

| 42 |

+

# [-0.03133016 -0.06338634 -0.01607501, ...]

|

| 43 |

+

# Embedding for: I am a sentence for which I would like to get its embedding.

|

| 44 |

+

# [0.05080863 -0.0165243 0.01573782, ...]

|

| 45 |

+

```

|

| 46 |

+

|

| 47 |

+

### Using [TF Hub fork](https://github.com/dimitreOliveira/hub)

|

| 48 |

+

```

|

| 49 |

+

model = pull_from_hub(repo_id="Dimitre/universal-sentence-encoder")

|

| 50 |

+

embeddings = model([

|

| 51 |

+

"The quick brown fox jumps over the lazy dog.",

|

| 52 |

+

"I am a sentence for which I would like to get its embedding"])

|

| 53 |

+

|

| 54 |

+

print(embeddings)

|

| 55 |

+

|

| 56 |

+

# The following are example embedding output of 512 dimensions per sentence

|

| 57 |

+

# Embedding for: The quick brown fox jumps over the lazy dog.

|

| 58 |

+

# [-0.03133016 -0.06338634 -0.01607501, ...]

|

| 59 |

+

# Embedding for: I am a sentence for which I would like to get its embedding.

|

| 60 |

+

# [0.05080863 -0.0165243 0.01573782, ...]

|

| 61 |

+

```

|

| 62 |

+

|

| 63 |

+

This module is about 1GB. Depending on your network speed, it might take a while to load the first time you run inference with it. After that, loading the model should be faster as modules are cached by default ([learn more about caching](https://www.tensorflow.org/hub/tf2_saved_model)). Further, once a module is loaded to memory, inference time should be relatively fast.

|

| 64 |

+

|

| 65 |

+

### Preprocessing

|

| 66 |

+

|

| 67 |

+

The module does not require preprocessing the data before applying the module, it performs best effort text input preprocessing inside the graph.

|

| 68 |

+

|

| 69 |

+

# Semantic Similarity

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

Semantic similarity is a measure of the degree to which two pieces of text carry the same meaning. This is broadly useful in obtaining good coverage over the numerous ways that a thought can be expressed using language without needing to manually enumerate them.

|

| 74 |

+

|

| 75 |

+

Simple applications include improving the coverage of systems that trigger behaviors on certain keywords, phrases or utterances. [This section of the notebook](https://colab.research.google.com/github/tensorflow/hub/blob/master/examples/colab/semantic_similarity_with_tf_hub_universal_encoder.ipynb#scrollTo=BnvjATdy64eR) shows how to encode text and compare encoding distances as a proxy for semantic similarity.

|

| 76 |

+

|

| 77 |

+

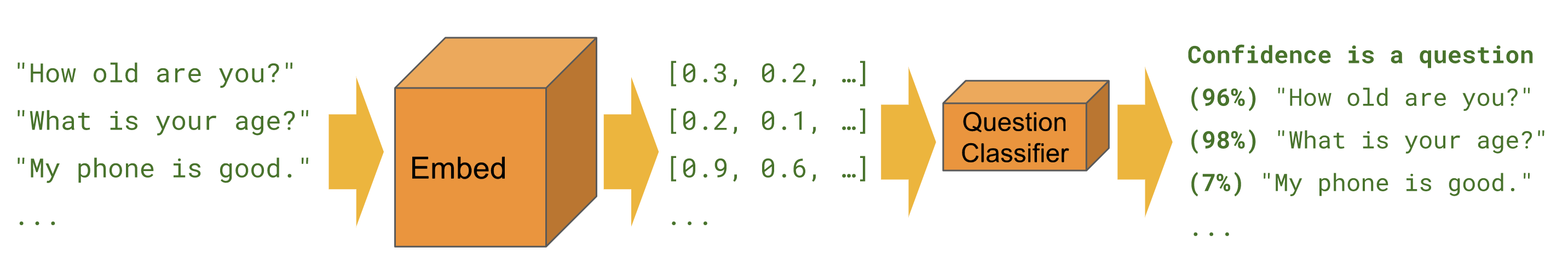

# Classification

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

[This notebook](https://colab.research.google.com/github/tensorflow/hub/blob/master/docs/tutorials/text_classification_with_tf_hub.ipynb) shows how to train a simple binary text classifier on top of any TF-Hub module that can embed sentences. The Universal Sentence Encoder was partially trained with custom text classification tasks in mind. These kinds of classifiers can be trained to perform a wide variety of classification tasks often with a very small amount of labeled examples.

|