Upload 24 files

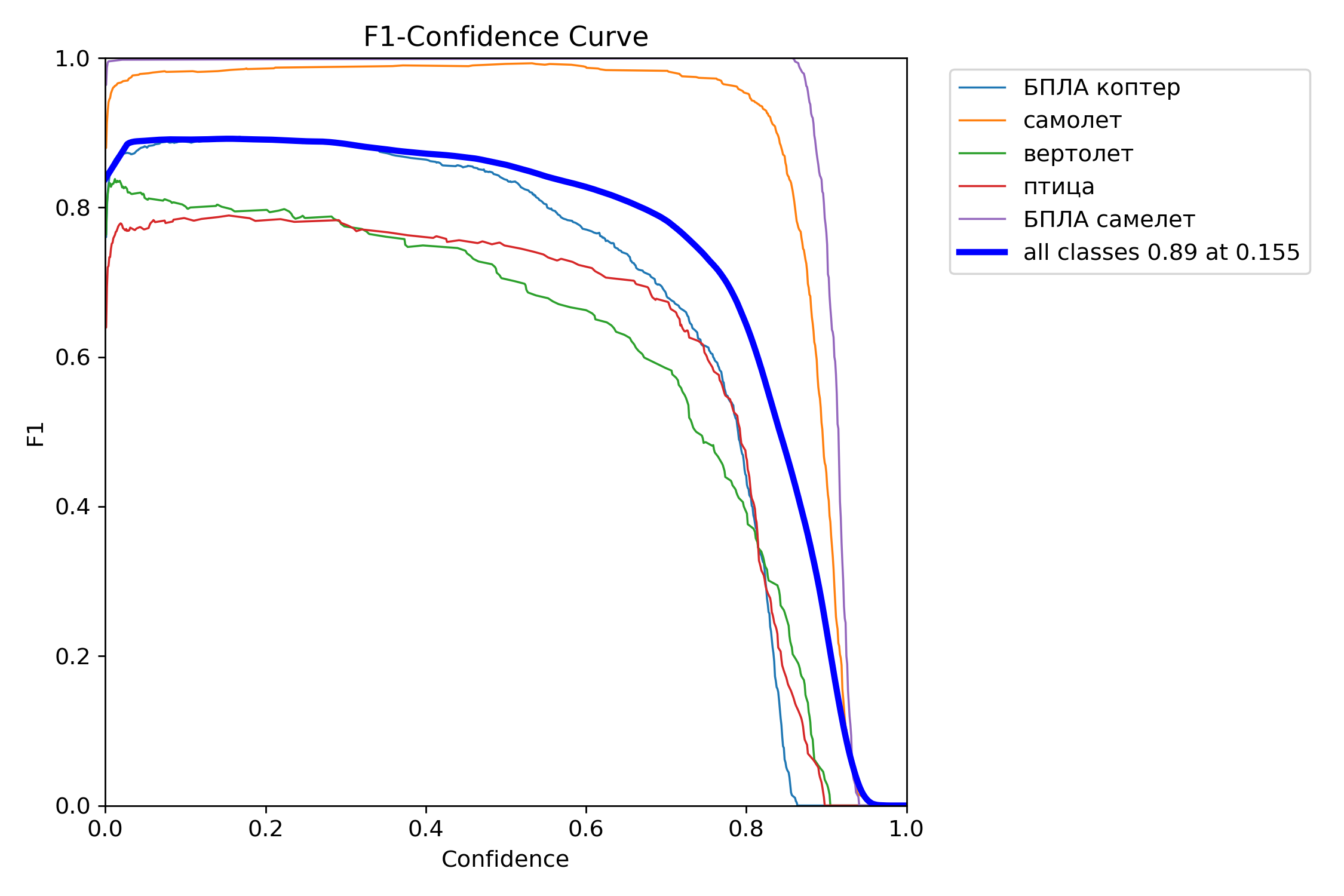

- F1_curve.png +0 -0

- PR_curve.png +0 -0

- P_curve.png +0 -0

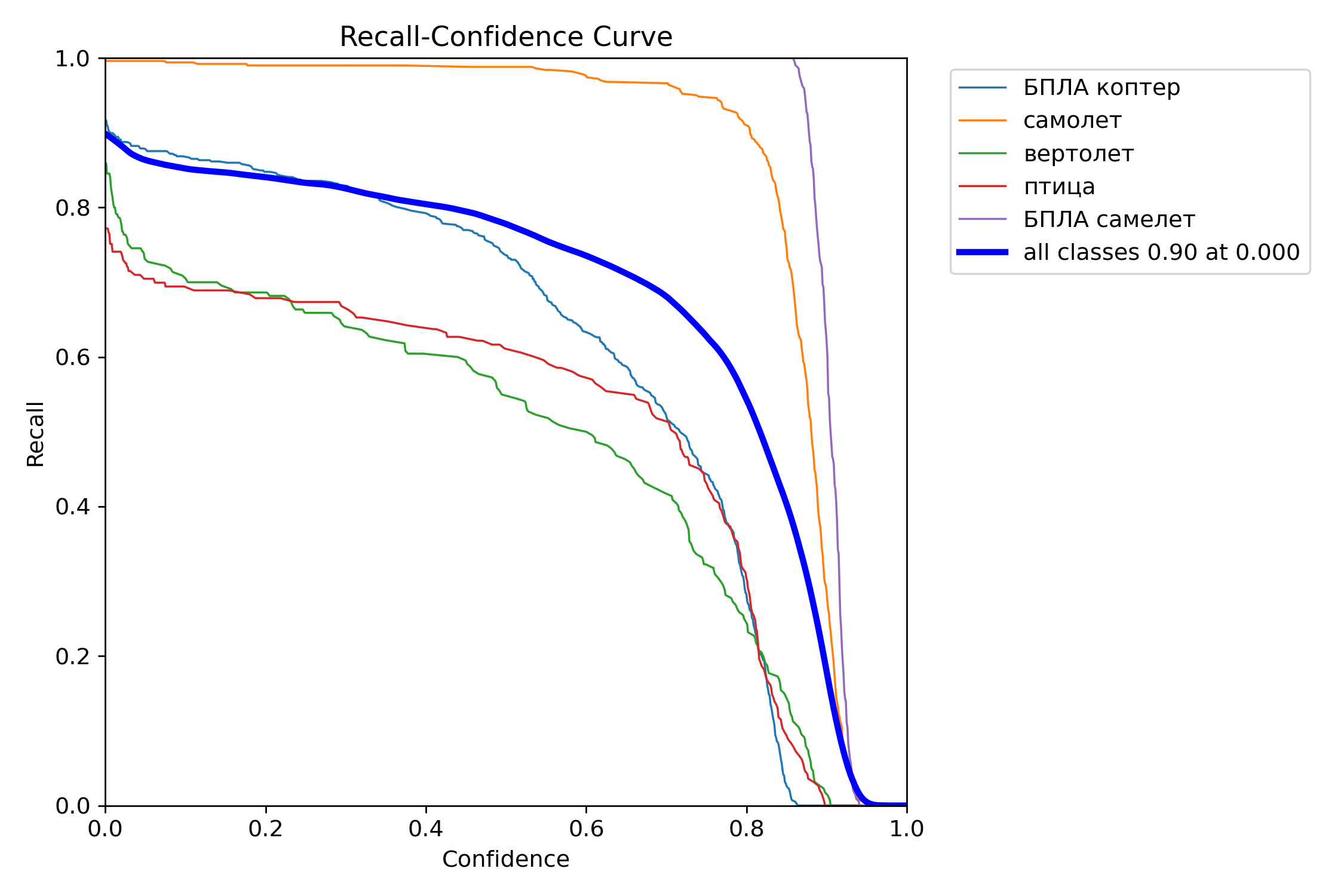

- R_curve.png +0 -0

- args.yaml +106 -0

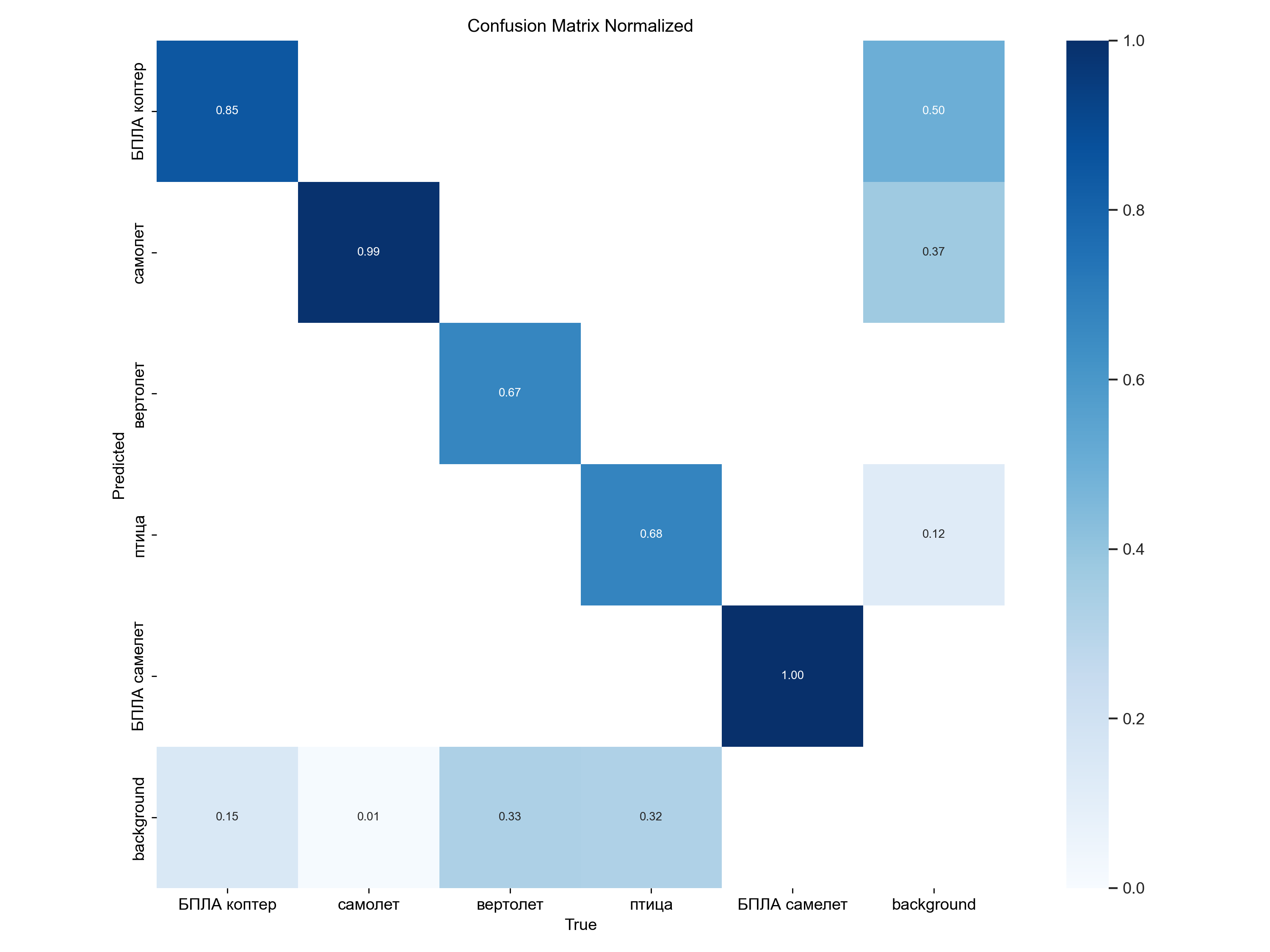

- confusion_matrix.png +0 -0

- confusion_matrix_normalized.png +0 -0

- labels.jpg +0 -0

- labels_correlogram.jpg +0 -0

- predictions.json +0 -0

- results.csv +51 -0

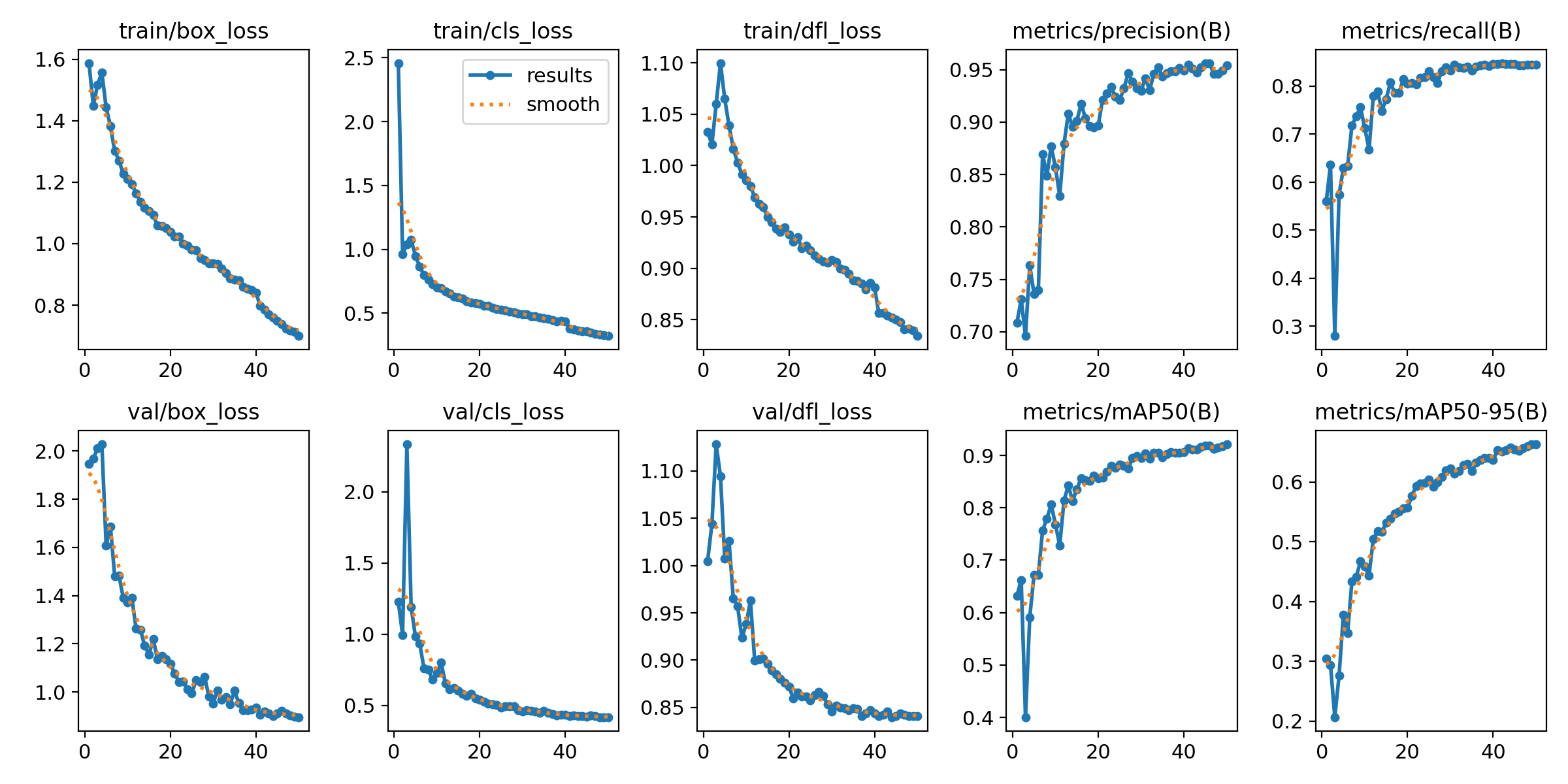

- results.png +0 -0

- train_batch0.jpg +0 -0

- train_batch1.jpg +0 -0

- train_batch17120.jpg +0 -0

- train_batch17121.jpg +0 -0

- train_batch17122.jpg +0 -0

- train_batch2.jpg +0 -0

- val_batch0_labels.jpg +0 -0

- val_batch0_pred.jpg +0 -0

- val_batch1_labels.jpg +0 -0

- val_batch1_pred.jpg +0 -0

- val_batch2_labels.jpg +0 -0

- val_batch2_pred.jpg +0 -0

F1_curve.png

ADDED

PR_curve.png

ADDED

P_curve.png

ADDED

R_curve.png

ADDED

args.yaml

ADDED

@@ -0,0 +1,106 @@

```yaml
task: detect
mode: train
model: D:\python_project\Yolo8\runs\detect\yolov8m_LTC15\weights\last.pt
data: custom_data.yaml
epochs: 50
time: null
patience: 100
batch: 32
imgsz: 640
save: true
save_period: 10
cache: false
device: 0
workers: 8
project: null
name: yolov8m_LTC15
exist_ok: false
pretrained: true
optimizer: auto
verbose: true
seed: 42
deterministic: true
single_cls: false
rect: false
cos_lr: false
close_mosaic: 10
resume: D:\python_project\Yolo8\runs\detect\yolov8m_LTC15\weights\last.pt
amp: true
fraction: 1
profile: false
freeze: null
multi_scale: false
overlap_mask: true
mask_ratio: 4
dropout: 0.0
val: true
split: val
save_json: true
save_hybrid: false
conf: null
iou: 0.7
max_det: 300
half: false
dnn: false
plots: true
source: null
vid_stride: 1
stream_buffer: false
visualize: false
augment: false
agnostic_nms: false
classes: null
retina_masks: false
embed: null
show: false
save_frames: false
save_txt: false
save_conf: true
save_crop: false
show_labels: true
show_conf: true
show_boxes: true
line_width: null
format: torchscript
keras: false
optimize: false
int8: false
dynamic: false
simplify: false
opset: null
workspace: 4
nms: false
lr0: 0.015
lrf: 0.005
momentum: 0.937
weight_decay: 0.0005
warmup_epochs: 3.0
warmup_momentum: 0.8
warmup_bias_lr: 0.0
box: 7.5
cls: 0.5
dfl: 1.5
pose: 12.0
kobj: 1.0
label_smoothing: 0.0
nbs: 64
hsv_h: 0.015
hsv_s: 0.7
hsv_v: 0.4
degrees: 20
translate: 0.1
scale: 0.5
shear: 0.0
perspective: 0.0
flipud: 0.0
fliplr: 0.5
bgr: 0.0
mosaic: 0.0
mixup: 0.0
copy_paste: 0.0
auto_augment: randaugment
erasing: 0.4
crop_fraction: 1.0
cfg: null
tracker: botsort.yaml
save_dir: runs\detect\yolov8m_LTC15
```
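The learning-rate columns in results.csv can be checked against this config. With `cos_lr: false`, Ultralytics decays the rate linearly from the base LR down to base LR × `lrf` over `epochs`. The sketch below reproduces the post-warmup `lr/pg0` values under one stated assumption: the effective base LR is 0.01, inferred from the logged values, not the `lr0: 0.015` recorded above. That gap is consistent with `optimizer: auto`, which, to our understanding of Ultralytics, selects its own optimizer, `lr0` and `momentum` and ignores the configured ones. The helper names are illustrative and not part of the Ultralytics API.

```python
# Sketch: reproduce the post-warmup learning rates logged in results.csv.
# Assumptions: linear schedule (cos_lr: false) and an effective base LR of 0.01,
# inferred from the logged values rather than taken from lr0 in args.yaml.

EPOCHS = 50       # epochs in args.yaml
LRF = 0.005       # lrf in args.yaml: final LR as a fraction of the base LR
BASE_LR = 0.01    # ASSUMED effective lr0 (see lead-in), not the configured 0.015


def linear_lr_factor(epoch_index: int, epochs: int = EPOCHS, lrf: float = LRF) -> float:
    """Multiplier applied to the base LR during 0-based epoch `epoch_index`."""
    return max(1 - epoch_index / epochs, 0) * (1.0 - lrf) + lrf


def expected_logged_lr(epoch: int, base_lr: float = BASE_LR) -> float:
    """LR expected in the results.csv row for 1-based `epoch`.

    Only meaningful once warmup (warmup_epochs: 3.0) has finished, i.e. epoch >= 4.
    """
    return base_lr * linear_lr_factor(epoch - 1)


# lr/pg0 values copied from results.csv
logged = {4: 0.009403, 25: 0.005224, 50: 0.000249}
for epoch, value in logged.items():
    predicted = expected_logged_lr(epoch)
    print(f"epoch {epoch:2d}: predicted {predicted:.6f}, logged {value:.6f}")

# For comparison, a true base LR of 0.015 would have ended near 0.00037 instead.
print(f"with lr0=0.015, epoch 50: {expected_logged_lr(50, base_lr=0.015):.6f}")
```

Rows 1 to 3 will not match this formula, because their logged rate is still on the warmup ramp.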

confusion_matrix.png

ADDED

confusion_matrix_normalized.png

ADDED

labels.jpg

ADDED

labels_correlogram.jpg

ADDED

predictions.json

ADDED

The diff for this file is too large to render.
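Because `save_json: true` is set, the validator writes predictions.json. As far as we know, Ultralytics writes it in the COCO results layout: a JSON list of records with `image_id`, `category_id`, `bbox` as `[x_min, y_min, width, height]` in pixels, and `score`. The sketch below assumes that layout and summarizes detections above a confidence threshold. The threshold, the function names and the records in the usage example are hypothetical. Whether `category_id` starts at 0 or 1 can differ between Ultralytics versions, so check it against the class list in custom_data.yaml before mapping ids to names.

```python
import json
from collections import Counter


def load_predictions(path="predictions.json"):
    """Load the list of prediction records written by the validator."""
    with open(path, encoding="utf-8") as f:
        return json.load(f)


def summarize_predictions(records, min_score=0.25):
    """Count kept detections per category_id and their mean box area.

    Assumes COCO-style records: {"image_id", "category_id", "bbox", "score"},
    with bbox given as [x_min, y_min, width, height].
    """
    counts = Counter()
    total_area = 0.0
    for rec in records:
        if rec["score"] < min_score:
            continue  # drop low-confidence detections
        counts[rec["category_id"]] += 1
        _, _, w, h = rec["bbox"]
        total_area += w * h
    kept = sum(counts.values())
    mean_area = total_area / kept if kept else 0.0
    return counts, mean_area


if __name__ == "__main__":
    # Hypothetical records for illustration; real ones come from load_predictions().
    sample = [
        {"image_id": "img_001", "category_id": 0, "bbox": [10.0, 20.0, 30.0, 40.0], "score": 0.91},
        {"image_id": "img_001", "category_id": 1, "bbox": [50.0, 60.0, 10.0, 10.0], "score": 0.12},
        {"image_id": "img_002", "category_id": 0, "bbox": [0.0, 0.0, 20.0, 20.0], "score": 0.55},
    ]
    counts, mean_area = summarize_predictions(sample)
    print(dict(counts), mean_area)
```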

results.csv

ADDED

@@ -0,0 +1,51 @@

```csv
epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
1, 1.5873, 2.4585, 1.0326, 0.70846, 0.55946, 0.63189, 0.30453, 1.946, 1.2285, 1.0048, 0.0033255, 0.0033255, 0.0033255
2, 1.4486, 0.96403, 1.0206, 0.73108, 0.63604, 0.66249, 0.29394, 1.9678, 0.99638, 1.044, 0.0065264, 0.0065264, 0.0065264
3, 1.5172, 1.0405, 1.0599, 0.69597, 0.27989, 0.4006, 0.2064, 2.0124, 2.334, 1.1281, 0.0095945, 0.0095945, 0.0095945
4, 1.5563, 1.0736, 1.0998, 0.7635, 0.57345, 0.59139, 0.27589, 2.0283, 1.1898, 1.0945, 0.009403, 0.009403, 0.009403
5, 1.4432, 0.9486, 1.0649, 0.73597, 0.62885, 0.67255, 0.37744, 1.6085, 0.98386, 1.0071, 0.009204, 0.009204, 0.009204
6, 1.382, 0.86505, 1.0388, 0.73958, 0.63374, 0.67208, 0.34715, 1.6872, 0.93489, 1.0259, 0.009005, 0.009005, 0.009005
7, 1.301, 0.79789, 1.0165, 0.86964, 0.71747, 0.7572, 0.43376, 1.4807, 0.76019, 0.96526, 0.008806, 0.008806, 0.008806
8, 1.2704, 0.7621, 1.003, 0.84873, 0.73673, 0.77927, 0.44083, 1.4835, 0.75299, 0.95721, 0.008607, 0.008607, 0.008607
9, 1.2268, 0.72808, 0.99157, 0.877, 0.75572, 0.80644, 0.46706, 1.3906, 0.68153, 0.92355, 0.008408, 0.008408, 0.008408
10, 1.2112, 0.70085, 0.98574, 0.85687, 0.71251, 0.76846, 0.45865, 1.372, 0.72622, 0.938, 0.008209, 0.008209, 0.008209
11, 1.1941, 0.69642, 0.97997, 0.82962, 0.66776, 0.72805, 0.44342, 1.3919, 0.80002, 0.96298, 0.00801, 0.00801, 0.00801
12, 1.1643, 0.66954, 0.96922, 0.87926, 0.77873, 0.81369, 0.50454, 1.2633, 0.65566, 0.89974, 0.007811, 0.007811, 0.007811
13, 1.1364, 0.65312, 0.96274, 0.9082, 0.78826, 0.84282, 0.51765, 1.2593, 0.61577, 0.90122, 0.007612, 0.007612, 0.007612
14, 1.1171, 0.62955, 0.95927, 0.89537, 0.74796, 0.81307, 0.51625, 1.1916, 0.62167, 0.90146, 0.007413, 0.007413, 0.007413
15, 1.1068, 0.62592, 0.95012, 0.90115, 0.77275, 0.83547, 0.5316, 1.1549, 0.60403, 0.89612, 0.007214, 0.007214, 0.007214
16, 1.0937, 0.61269, 0.94502, 0.91775, 0.80721, 0.85655, 0.53896, 1.2196, 0.58336, 0.88936, 0.007015, 0.007015, 0.007015
17, 1.0584, 0.59292, 0.93878, 0.90386, 0.78522, 0.85313, 0.54729, 1.1357, 0.56828, 0.88486, 0.006816, 0.006816, 0.006816
18, 1.0583, 0.5849, 0.93532, 0.89637, 0.78581, 0.85128, 0.55096, 1.1497, 0.58236, 0.88047, 0.006617, 0.006617, 0.006617
19, 1.0501, 0.58056, 0.94012, 0.89474, 0.81405, 0.86199, 0.556, 1.1354, 0.55043, 0.87615, 0.006418, 0.006418, 0.006418
20, 1.0378, 0.57237, 0.93315, 0.89671, 0.805, 0.85702, 0.55753, 1.1181, 0.54184, 0.8723, 0.006219, 0.006219, 0.006219
21, 1.0241, 0.55861, 0.92608, 0.92138, 0.80588, 0.85769, 0.57619, 1.0772, 0.52479, 0.85957, 0.00602, 0.00602, 0.00602
22, 1.0231, 0.5603, 0.93061, 0.92723, 0.80281, 0.86867, 0.59345, 1.042, 0.5126, 0.86613, 0.005821, 0.005821, 0.005821
23, 1.0002, 0.54112, 0.91974, 0.93363, 0.81724, 0.88043, 0.59791, 1.0433, 0.50852, 0.86186, 0.005622, 0.005622, 0.005622
24, 0.9941, 0.53265, 0.92189, 0.92443, 0.81808, 0.87686, 0.59901, 1.0122, 0.50224, 0.86147, 0.005423, 0.005423, 0.005423
25, 0.98121, 0.52903, 0.91754, 0.92108, 0.8302, 0.88232, 0.60394, 0.99371, 0.48711, 0.85757, 0.005224, 0.005224, 0.005224
26, 0.97954, 0.52205, 0.91232, 0.93222, 0.81833, 0.87986, 0.59219, 1.0479, 0.49569, 0.86292, 0.005025, 0.005025, 0.005025
27, 0.95345, 0.51328, 0.9091, 0.94663, 0.80672, 0.8752, 0.59978, 1.0412, 0.49653, 0.86651, 0.004826, 0.004826, 0.004826
28, 0.94624, 0.50555, 0.90668, 0.93866, 0.83121, 0.89461, 0.608, 1.0637, 0.49542, 0.86243, 0.004627, 0.004627, 0.004627
29, 0.9355, 0.49795, 0.90575, 0.93222, 0.83915, 0.89943, 0.61936, 0.98217, 0.46745, 0.85352, 0.004428, 0.004428, 0.004428
30, 0.93524, 0.49014, 0.90812, 0.9299, 0.83146, 0.89492, 0.62296, 0.95176, 0.45768, 0.84581, 0.004229, 0.004229, 0.004229
31, 0.93426, 0.49275, 0.90629, 0.94177, 0.8445, 0.90423, 0.61372, 1.0071, 0.46689, 0.85168, 0.00403, 0.00403, 0.00403
32, 0.91871, 0.47594, 0.89948, 0.93069, 0.83859, 0.89356, 0.61859, 0.96647, 0.46363, 0.84999, 0.003831, 0.003831, 0.003831
33, 0.90329, 0.47388, 0.89833, 0.94612, 0.83795, 0.90546, 0.62809, 0.97967, 0.45938, 0.84892, 0.003632, 0.003632, 0.003632
34, 0.88817, 0.4642, 0.89444, 0.95223, 0.84051, 0.90496, 0.63047, 0.94973, 0.45057, 0.84695, 0.003433, 0.003433, 0.003433
35, 0.88321, 0.46098, 0.88845, 0.94352, 0.83219, 0.89581, 0.61771, 1.0045, 0.46104, 0.84951, 0.003234, 0.003234, 0.003234
36, 0.88141, 0.45661, 0.88795, 0.94672, 0.8406, 0.90197, 0.63205, 0.95432, 0.4485, 0.84873, 0.003035, 0.003035, 0.003035
37, 0.8592, 0.44739, 0.8849, 0.94883, 0.84289, 0.90584, 0.63648, 0.92547, 0.4393, 0.84066, 0.002836, 0.002836, 0.002836
38, 0.85332, 0.43541, 0.87937, 0.94887, 0.84375, 0.90548, 0.64024, 0.92348, 0.4323, 0.8438, 0.002637, 0.002637, 0.002637
39, 0.84875, 0.43831, 0.88604, 0.95182, 0.84117, 0.9051, 0.64051, 0.92826, 0.43625, 0.84749, 0.002438, 0.002438, 0.002438
40, 0.84, 0.43253, 0.88122, 0.94952, 0.84495, 0.90603, 0.63663, 0.93639, 0.43381, 0.84356, 0.002239, 0.002239, 0.002239
41, 0.79814, 0.37959, 0.85645, 0.95466, 0.84619, 0.91339, 0.65306, 0.90524, 0.42344, 0.84097, 0.00204, 0.00204, 0.00204
42, 0.78485, 0.3714, 0.85635, 0.95135, 0.84751, 0.91136, 0.65072, 0.91793, 0.43035, 0.84226, 0.001841, 0.001841, 0.001841
43, 0.77144, 0.36579, 0.85391, 0.94764, 0.84521, 0.91104, 0.65279, 0.91176, 0.42632, 0.84586, 0.001642, 0.001642, 0.001642
44, 0.75875, 0.36084, 0.85189, 0.9526, 0.84546, 0.91569, 0.6575, 0.89942, 0.42348, 0.83979, 0.001443, 0.001443, 0.001443
45, 0.74944, 0.3561, 0.85001, 0.95601, 0.84497, 0.91817, 0.65474, 0.91055, 0.42331, 0.84095, 0.001244, 0.001244, 0.001244
46, 0.73747, 0.34758, 0.8478, 0.9564, 0.84309, 0.91901, 0.65179, 0.92297, 0.43147, 0.84384, 0.001045, 0.001045, 0.001045
47, 0.7246, 0.33918, 0.84076, 0.94592, 0.84217, 0.9128, 0.65616, 0.91046, 0.42518, 0.84255, 0.000846, 0.000846, 0.000846
48, 0.71687, 0.33174, 0.84134, 0.94621, 0.84357, 0.91487, 0.65932, 0.90268, 0.41803, 0.84084, 0.000647, 0.000647, 0.000647
49, 0.71337, 0.32973, 0.83963, 0.94936, 0.84424, 0.9181, 0.66262, 0.89847, 0.418, 0.84116, 0.000448, 0.000448, 0.000448
50, 0.6999, 0.32162, 0.83415, 0.95408, 0.84403, 0.92121, 0.663, 0.89543, 0.41845, 0.84129, 0.000249, 0.000249, 0.000249
```
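The table above can be ranked to find the strongest epoch. The sketch assumes the weighting Ultralytics uses for its fitness score when choosing `best.pt` (0.1 × mAP50 + 0.9 × mAP50-95, to the best of our knowledge). `skipinitialspace=True` absorbs the space after each comma in this file. `SAMPLE` holds four rows copied verbatim from the table; the helper names are hypothetical.

```python
import csv
import io

# ASSUMED fitness weights (mirrors Ultralytics' detection fitness as we understand it)
FITNESS_WEIGHTS = {"metrics/mAP50(B)": 0.1, "metrics/mAP50-95(B)": 0.9}


def read_results(text):
    """Parse results.csv text into a list of dicts with float values."""
    reader = csv.DictReader(io.StringIO(text), skipinitialspace=True)
    return [{k.strip(): float(v) for k, v in row.items()} for row in reader]


def fitness(row):
    """Weighted combination of the validation mAP columns."""
    return sum(w * row[col] for col, w in FITNESS_WEIGHTS.items())


def best_epoch(rows):
    """Return (epoch, fitness) of the highest-fitness row."""
    best = max(rows, key=fitness)
    return int(best["epoch"]), fitness(best)


# Rows 41, 48, 49 and 50 copied from results.csv
SAMPLE = """epoch, train/box_loss, train/cls_loss, train/dfl_loss, metrics/precision(B), metrics/recall(B), metrics/mAP50(B), metrics/mAP50-95(B), val/box_loss, val/cls_loss, val/dfl_loss, lr/pg0, lr/pg1, lr/pg2
41, 0.79814, 0.37959, 0.85645, 0.95466, 0.84619, 0.91339, 0.65306, 0.90524, 0.42344, 0.84097, 0.00204, 0.00204, 0.00204
48, 0.71687, 0.33174, 0.84134, 0.94621, 0.84357, 0.91487, 0.65932, 0.90268, 0.41803, 0.84084, 0.000647, 0.000647, 0.000647
49, 0.71337, 0.32973, 0.83963, 0.94936, 0.84424, 0.9181, 0.66262, 0.89847, 0.418, 0.84116, 0.000448, 0.000448, 0.000448
50, 0.6999, 0.32162, 0.83415, 0.95408, 0.84403, 0.92121, 0.663, 0.89543, 0.41845, 0.84129, 0.000249, 0.000249, 0.000249
"""

epoch, score = best_epoch(read_results(SAMPLE))
print(epoch, round(score, 6))  # prints: 50 0.688821
```

To rank all 50 epochs, open results.csv with `encoding="utf-8"` and pass its full text to `read_results` instead of `SAMPLE`.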

results.png

ADDED

|

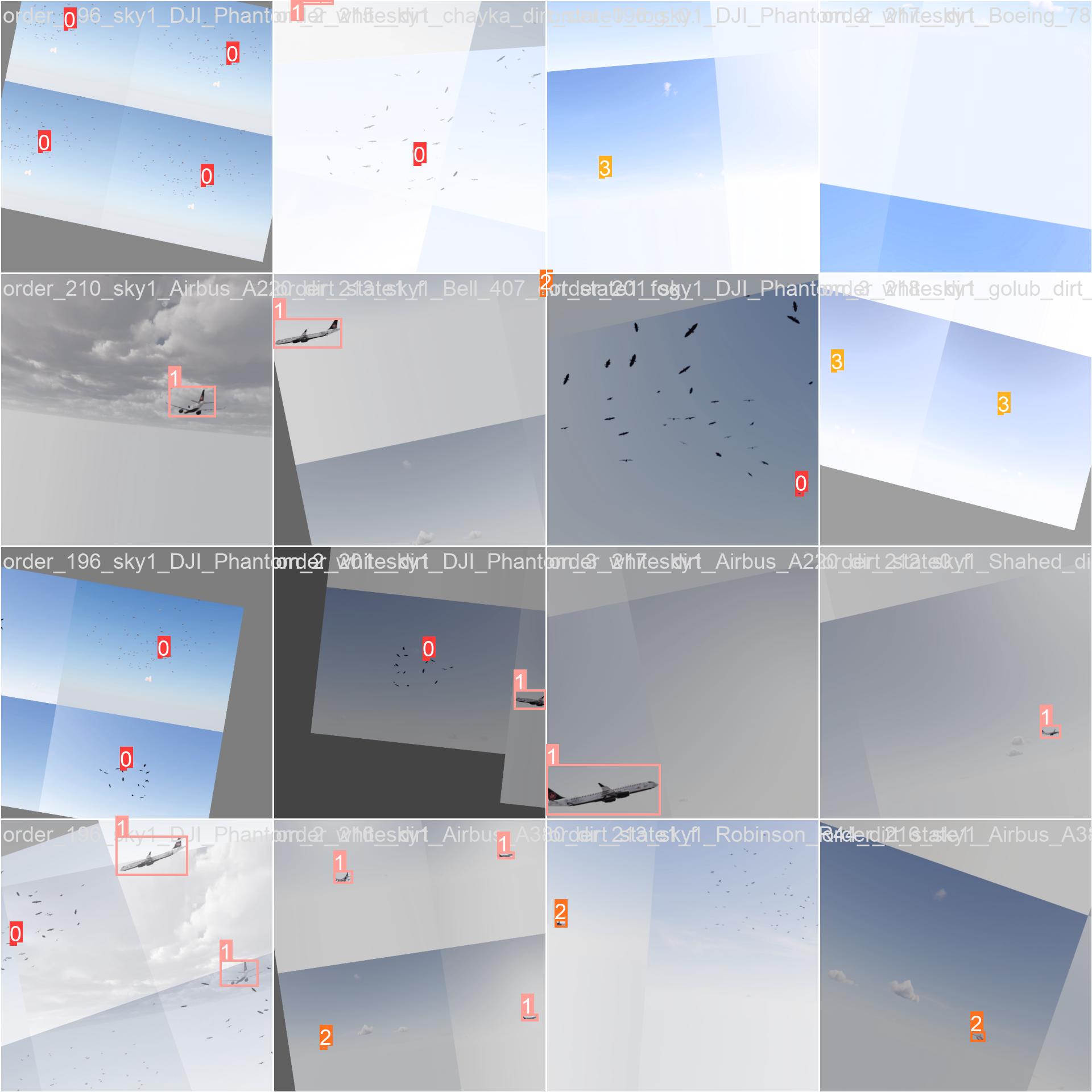

train_batch0.jpg

ADDED

|

train_batch1.jpg

ADDED

|

train_batch17120.jpg

ADDED

|

train_batch17121.jpg

ADDED

|

train_batch17122.jpg

ADDED

|

train_batch2.jpg

ADDED

|

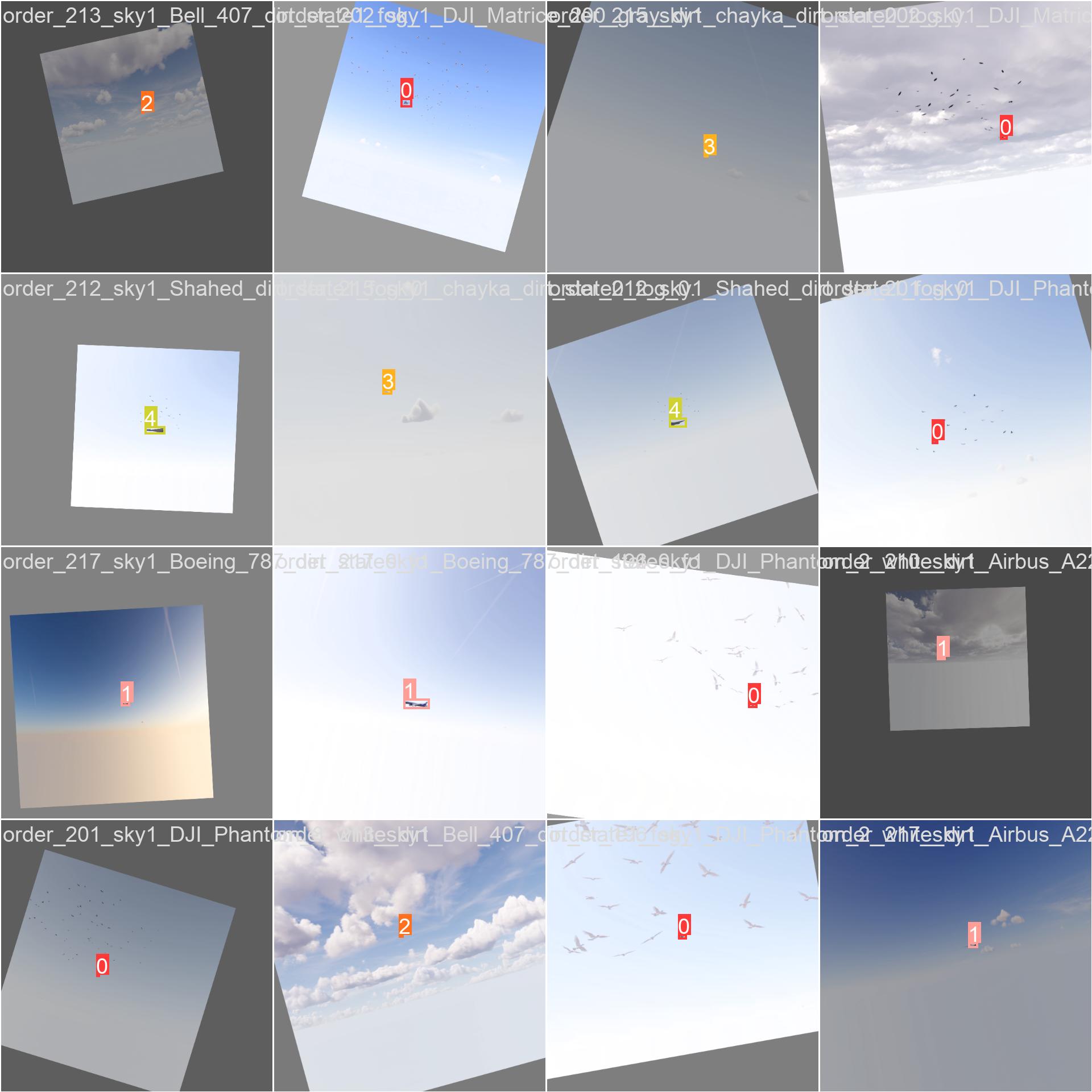

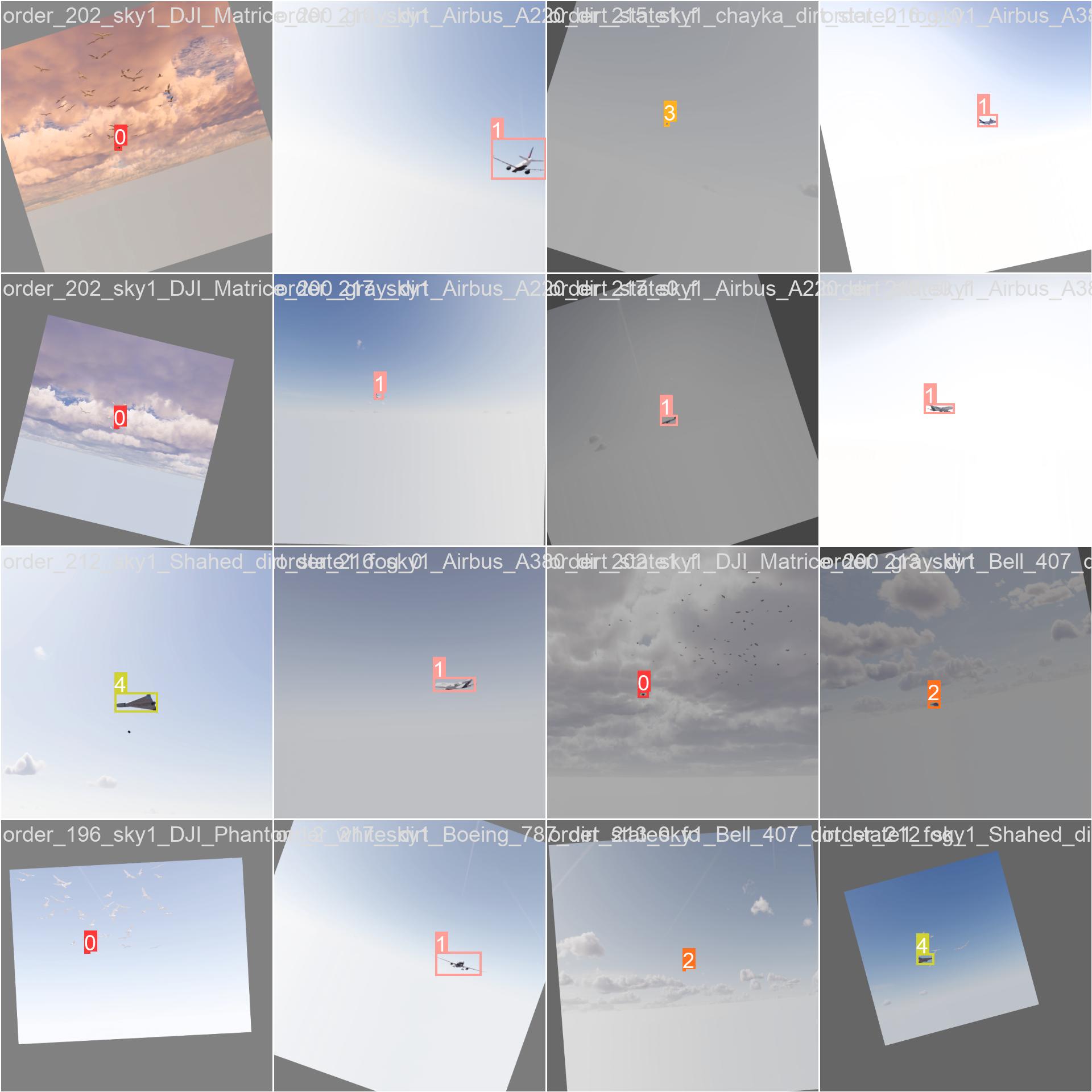

val_batch0_labels.jpg

ADDED

|

val_batch0_pred.jpg

ADDED

|

val_batch1_labels.jpg

ADDED

|

val_batch1_pred.jpg

ADDED

|

val_batch2_labels.jpg

ADDED

|

val_batch2_pred.jpg

ADDED

|