Commit 56c2d12

1 Parent(s): baeb9de

Update README.md

README.md CHANGED

|

@@ -16,6 +16,12 @@ tags:

|

|

| 16 |

|

| 17 |

|

| 18 |

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 19 |

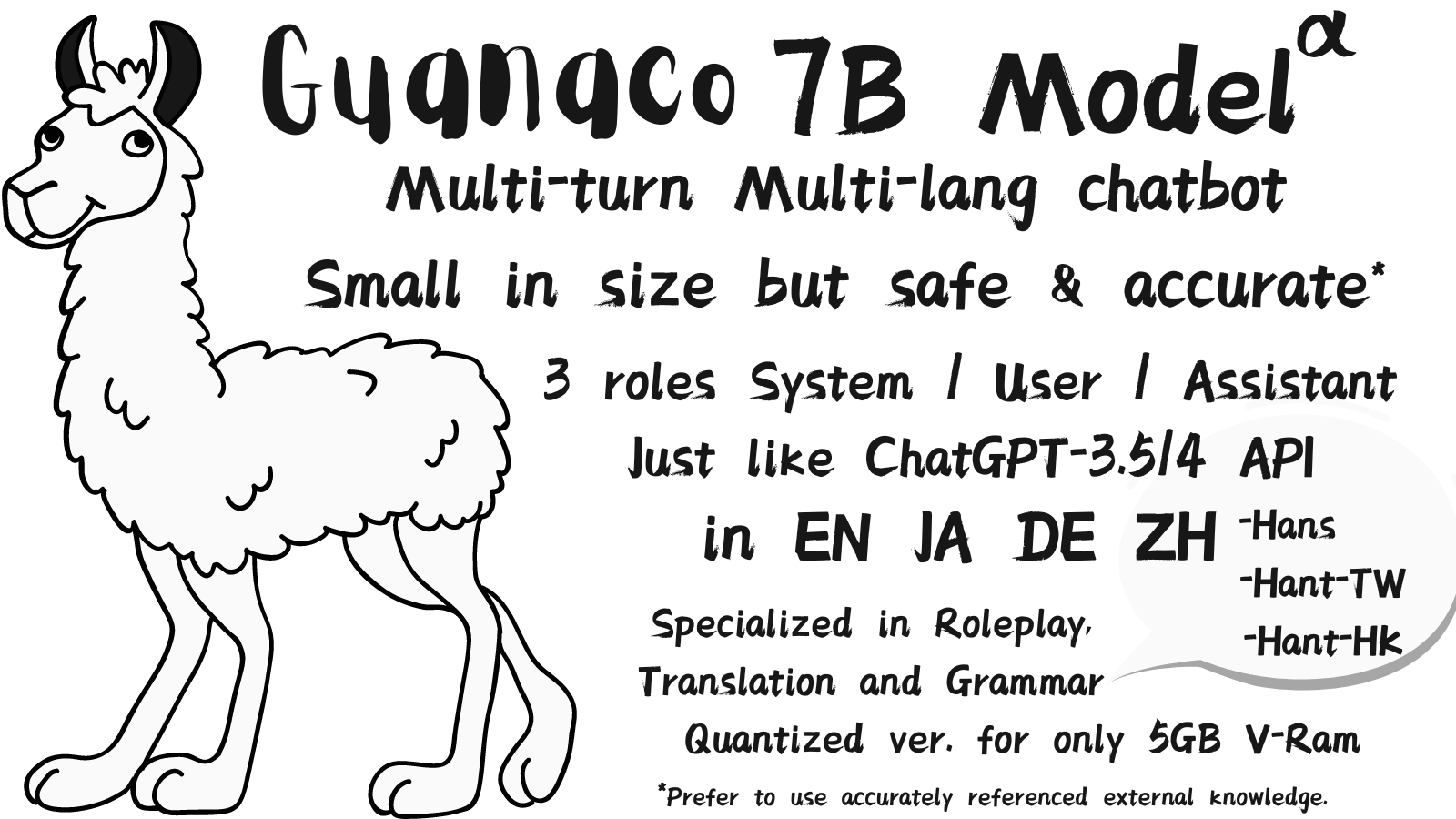

**It is highly recommended to use fp16 inference for this model, as 8-bit precision may significantly affect performance. If you require a more Consumer Hardware friendly version, please use the specialized quantized [JosephusCheung/GuanacoOnConsumerHardware](https://huggingface.co/JosephusCheung/GuanacoOnConsumerHardware).**

|

| 20 |

|

| 21 |

**You are encouraged to use the latest version of transformers from GitHub.**

|

|

|

|

| 16 |

|

| 17 |

|

| 18 |

|

| 19 |

+

**You need a Colab pro for full version (8bit not well performed, should use fp16)**

|

| 20 |

+

|

| 21 |

+

[](https://colab.research.google.com/drive/1ocSmoy3ba1EkYu7JWT1oCw9vz8qC2cMk#scrollTo=zLORi5OcPcIJ)

|

| 22 |

+

|

| 23 |

+

Free T4 Colab demo, please check 4bit version [JosephusCheung/GuanacoOnConsumerHardware](https://huggingface.co/JosephusCheung/GuanacoOnConsumerHardware).**

|

| 24 |

+

|

| 25 |

**It is highly recommended to use fp16 inference for this model, as 8-bit precision may significantly affect performance. If you require a more Consumer Hardware friendly version, please use the specialized quantized [JosephusCheung/GuanacoOnConsumerHardware](https://huggingface.co/JosephusCheung/GuanacoOnConsumerHardware).**

|

| 26 |

|

| 27 |

**You are encouraged to use the latest version of transformers from GitHub.**

|
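
The README text in this diff recommends fp16 inference over 8-bit loading. A minimal sketch of what that could look like with the `transformers` library is below. It is an illustration under stated assumptions, not the author's own loading code: the diff does not name the model repository or its architecture, so `MODEL_ID` is a hypothetical placeholder, `load_fp16` is a helper name invented here, and the choice of `AutoModelForCausalLM` assumes a causal language model.

```python
# Assumption: transformers is installed from source, as the README suggests, e.g.
#   pip install git+https://github.com/huggingface/transformers
# device_map="auto" additionally requires the `accelerate` package.

MODEL_ID = "your-org/your-model"  # hypothetical placeholder, not taken from the README


def load_fp16(model_id: str = MODEL_ID):
    """Load a causal LM and its tokenizer with half-precision (fp16) weights."""
    # Imported lazily so that defining this helper does not require torch.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        torch_dtype=torch.float16,  # fp16 weights rather than 8-bit quantization
        device_map="auto",          # let accelerate place layers on available devices
    )
    return tokenizer, model
```

Calling `load_fp16("<actual repo id>")` downloads the weights and needs enough GPU memory to hold them in half precision, which is presumably why the added README lines point to Colab Pro for the full version and to the 4-bit repository for a free T4.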