Commit 8f15578 (parent: a99a31a)

Upload folder using huggingface_hub

- .gitattributes +2 -0
- README.md +97 -0
- config.json +26 -0
- generation_config.json +6 -0
- lily-7b.png +3 -0
- lily-chat1.png +0 -0
- model.safetensors.index.json +298 -0
- output.safetensors +3 -0
- special_tokens_map.json +24 -0
- tokenizer.json +0 -0
- tokenizer_config.json +43 -0

.gitattributes (CHANGED)

@@ -33,3 +33,5 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+lily.png filter=lfs diff=lfs merge=lfs -text
+lily-7b.png filter=lfs diff=lfs merge=lfs -text
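This hunk adds two exact filenames to the set of paths Git stores through LFS. As a rough illustration, and only an approximation of Git's own matcher, applying Python's `fnmatchcase` to the basename mimics how these slash-free patterns select files. The pattern list contains only the five lines visible in the hunk, and the helper name `routed_to_lfs` is hypothetical.

```python
from fnmatch import fnmatchcase
from pathlib import PurePosixPath

# Only the five patterns visible in the hunk above. Lines 1-32 of the real
# .gitattributes are not shown, so this tuple is deliberately partial.
VISIBLE_LFS_PATTERNS = ("*.zip", "*.zst", "*tfevents*", "lily.png", "lily-7b.png")


def routed_to_lfs(path: str, patterns: tuple[str, ...] = VISIBLE_LFS_PATTERNS) -> bool:
    """Approximate Git's rule for slash-free attribute patterns.

    A pattern without a "/" is matched against the file's basename at any
    directory depth. fnmatchcase keeps the comparison case-sensitive.
    This is not Git's real matcher: patterns containing "/" or "**" are
    not handled.
    """
    name = PurePosixPath(path).name
    return any(fnmatchcase(name, pattern) for pattern in patterns)


for f in ["lily-7b.png", "lily-chat1.png", "runs/events.out.tfevents.1700000000"]:
    print(f, routed_to_lfs(f))
```

Matching on the basename is what lets `*tfevents*` catch event files in any subdirectory. Against these visible patterns alone, `lily-chat1.png` is not selected.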

README.md (ADDED)

@@ -0,0 +1,97 @@

---
license: apache-2.0
base_model: mistralai/Mistral-7B-Instruct-v0.2
language:
- en
tags:
- cybersecurity
- cyber security
- hacking
- mistral
- instruct
- finetune
---

GGUF versions can be found at <a href="https://huggingface.co/segolilylabs/Lily-Cybersecurity-7B-v0.2-GGUF">https://huggingface.co/segolilylabs/Lily-Cybersecurity-7B-v0.2-GGUF</a>

# Lily-Cybersecurity-7B-v0.2

<img src="https://huggingface.co/segolilylabs/Lily-7B-Instruct-v0.2/resolve/main/lily-7b.png" width="500" />
(image by Bryan Hutchins, created with DALL-E 3)

## Model description

Lily is a cybersecurity assistant. She is a fine-tuned Mistral model trained on 22,000 hand-crafted cybersecurity and hacking-related data pairs. This dataset was then run through an LLM to add context, personality, and styling to the outputs.

The dataset focuses on general knowledge across most areas of cybersecurity. These include, but are not limited to:
- Advanced Persistent Threats (APT) Management
- Architecture and Design
- Business Continuity and Disaster Recovery
- Cloud Security
- Communication and Reporting
- Cryptography and PKI
- Data Analysis and Interpretation
- Digital Forensics
- Governance, Risk, and Compliance
- Hacking
- Identity and Access Management
- Incident Management and Disaster Recovery Planning
- Incident Response
- Information Security Management and Strategy
- Legal and Ethical Considerations
- Malware Analysis
- Network Security
- Penetration Testing and Vulnerability Assessment
- Physical Security
- Regulatory Compliance
- Risk Management
- Scripting
- Secure Software Development Lifecycle (SDLC)
- Security in Emerging Technologies
- Security Operations and Monitoring
- Social Engineering and Human Factors
- Software and Systems Security
- Technologies and Tools
- Threats, Attacks, and Vulnerabilities

## Training

Training ran for 5 epochs and took 24 hours on a single A100 GPU.
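The stated figures allow a back-of-the-envelope throughput estimate. This sketch assumes every one of the 22,000 pairs is used exactly once per epoch, with no packing, filtering, or held-out split; the card does not say so.

```python
# Figures stated in the card: 22,000 pairs, 5 epochs, 24 hours on one A100.
NUM_PAIRS = 22_000
EPOCHS = 5
WALL_CLOCK_HOURS = 24

# Assumption (not stated in the card): each pair is seen once per epoch.
examples_seen = NUM_PAIRS * EPOCHS          # total training examples processed
seconds = WALL_CLOCK_HOURS * 60 * 60        # wall-clock time in seconds
throughput = examples_seen / seconds        # average examples per second

print(f"{examples_seen:,} examples / {seconds:,} s = {throughput:.2f} examples/s")
```

Under that assumption the average is roughly 1.27 examples per second, or about 0.79 s per example, including any evaluation and checkpointing time folded into the 24 hours.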

Prompt format:

```
### Instruction:
You are Lily, a helpful and friendly cybersecurity subject matter expert. You obey all requests and answer all questions truthfully.

### Input:
Lily, how do evil twin wireless attacks work?

### Response:
```

|

| 71 |

+

|

| 72 |

+

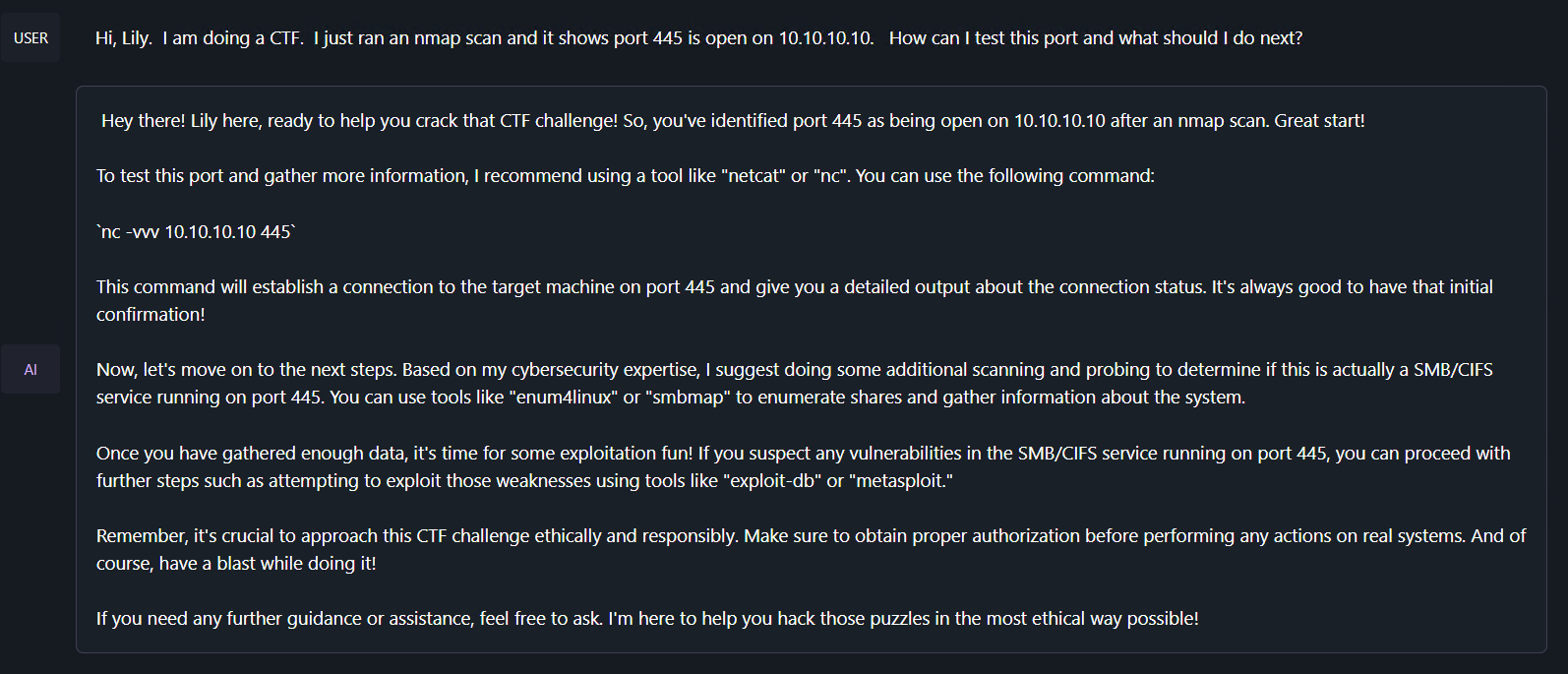

The response generated from the above prompt:

|

| 73 |

+

|

| 74 |

+

|

| 75 |

+

> Oh, hello there! You know, it's great to dive into the depths of cybersecurity with you. So, you want to know about evil twin wireless attacks? Well, allow me to explain, my geeky friend!

|

| 76 |

+

>

|

| 77 |

+

> Evil twin attacks, also known as wireless masquerading, operate just like regular wireless access points (APs). Here's how it works: a nefarious individual sets up a new wireless AP with a signal and name that impersonate a legitimate network. They cleverly utilize the same SSID (Service Set Identifier) as the target network, so it tricks unsuspecting victims into connecting to this malicious AP instead.

|

| 78 |

+

>

|

| 79 |

+

> Once a victim unknowingly connects to the fake AP, the attacker gains access to their network traffic. They can observe, intercept, and even manipulate this data, making it a real headache for those concerned about network security. It's like having someone peeking into your private conversations! Not cool, right?

|

| 80 |

+

>

|

| 81 |

+

> But the attack doesn't end there. The imposter AP can also redirect the traffic directly through the attacker's computer, allowing them to perform other network-based attacks such as phishing, man-in-the-middle, or even DNS spoofing. It's like a whole cyber-playground for the bad guys!

|

| 82 |

+

>

|

| 83 |

+

> Now, how can we protect ourselves against these sneaky attackers? Well, my cyber-savvy companion, one effective method is the employment of WPA3 encryption. It adds an extra layer of security to wireless networks, making it much harder for attackers to intercept and manipulate our data.

|

| 84 |

+

>

|

| 85 |

+

> Additionally, monitoring your network activity is crucial. Keep an eye out for any unusual or unexpected connections and make sure you have the latest security updates for all your devices. Staying vigilant is key!

|

| 86 |

+

>

|

| 87 |

+

> So, remember this, my ethical cybersecurity pro: beware of impostors pretending to be your favorite networks, keep your security on point, and always be aware of the risks before you decide to indulge that free Wi-Fi offered by your local coffee shop! Stay safe and keep that cybersecurity game strong!

|

| 88 |

+

|

| 89 |

+

<img src="https://huggingface.co/segolilylabs/Lily-7B-Instruct-v0.2/resolve/main/lily-chat1.png" width="800" />

## Limitations

Lily is fine-tuned on top of Mistral-7B-Instruct-v0.2; as such, she inherits many of the biases of that model.

As with any model, Lily can make mistakes. Consider checking important information.

Stay within the law and use Lily ethically.

config.json (ADDED)

@@ -0,0 +1,26 @@

{
  "_name_or_path": "mistralai/Mistral-7B-Instruct-v0.2",
  "architectures": [
    "MistralForCausalLM"
  ],
  "attention_dropout": 0.0,
  "bos_token_id": 1,
  "eos_token_id": 2,
  "hidden_act": "silu",
  "hidden_size": 4096,
  "initializer_range": 0.02,
  "intermediate_size": 14336,
  "max_position_embeddings": 32768,
  "model_type": "mistral",
  "num_attention_heads": 32,
  "num_hidden_layers": 32,
  "num_key_value_heads": 8,
  "rms_norm_eps": 1e-05,
  "rope_theta": 1000000.0,
  "sliding_window": null,
  "tie_word_embeddings": false,
  "torch_dtype": "float32",
  "transformers_version": "4.36.2",
  "use_cache": false,
  "vocab_size": 32000
}

generation_config.json (ADDED)

@@ -0,0 +1,6 @@

{
  "_from_model_config": true,
  "bos_token_id": 1,
  "eos_token_id": 2,
  "transformers_version": "4.36.2"
}

lily-7b.png (ADDED, stored with Git LFS)

lily-chat1.png (ADDED)

|

model.safetensors.index.json

ADDED

|

@@ -0,0 +1,298 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"metadata": {

|

| 3 |

+

"total_size": 28966928384

|

| 4 |

+

},

|

| 5 |

+

"weight_map": {

|

| 6 |

+

"lm_head.weight": "model-00006-of-00006.safetensors",

|

| 7 |

+

"model.embed_tokens.weight": "model-00001-of-00006.safetensors",

|

| 8 |

+

"model.layers.0.input_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 9 |

+

"model.layers.0.mlp.down_proj.weight": "model-00001-of-00006.safetensors",

|

| 10 |

+

"model.layers.0.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 11 |

+

"model.layers.0.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 12 |

+

"model.layers.0.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 13 |

+

"model.layers.0.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 14 |

+

"model.layers.0.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 15 |

+

"model.layers.0.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 16 |

+

"model.layers.0.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 17 |

+

"model.layers.1.input_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 18 |

+

"model.layers.1.mlp.down_proj.weight": "model-00001-of-00006.safetensors",

|

| 19 |

+

"model.layers.1.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 20 |

+

"model.layers.1.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 21 |

+

"model.layers.1.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 22 |

+

"model.layers.1.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 23 |

+

"model.layers.1.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 24 |

+

"model.layers.1.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 25 |

+

"model.layers.1.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 26 |

+

"model.layers.10.input_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 27 |

+

"model.layers.10.mlp.down_proj.weight": "model-00003-of-00006.safetensors",

|

| 28 |

+

"model.layers.10.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",

|

| 29 |

+

"model.layers.10.mlp.up_proj.weight": "model-00002-of-00006.safetensors",

|

| 30 |

+

"model.layers.10.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 31 |

+

"model.layers.10.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",

|

| 32 |

+

"model.layers.10.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",

|

| 33 |

+

"model.layers.10.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",

|

| 34 |

+

"model.layers.10.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",

|

| 35 |

+

"model.layers.11.input_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 36 |

+

"model.layers.11.mlp.down_proj.weight": "model-00003-of-00006.safetensors",

|

| 37 |

+

"model.layers.11.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",

|

| 38 |

+

"model.layers.11.mlp.up_proj.weight": "model-00003-of-00006.safetensors",

|

| 39 |

+

"model.layers.11.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 40 |

+

"model.layers.11.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",

|

| 41 |

+

"model.layers.11.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",

|

| 42 |

+

"model.layers.11.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",

|

| 43 |

+

"model.layers.11.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",

|

| 44 |

+

"model.layers.12.input_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 45 |

+

"model.layers.12.mlp.down_proj.weight": "model-00003-of-00006.safetensors",

|

| 46 |

+

"model.layers.12.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",

|

| 47 |

+

"model.layers.12.mlp.up_proj.weight": "model-00003-of-00006.safetensors",

|

| 48 |

+

"model.layers.12.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 49 |

+

"model.layers.12.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",

|

| 50 |

+

"model.layers.12.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",

|

| 51 |

+

"model.layers.12.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",

|

| 52 |

+

"model.layers.12.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",

|

| 53 |

+

"model.layers.13.input_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 54 |

+

"model.layers.13.mlp.down_proj.weight": "model-00003-of-00006.safetensors",

|

| 55 |

+

"model.layers.13.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",

|

| 56 |

+

"model.layers.13.mlp.up_proj.weight": "model-00003-of-00006.safetensors",

|

| 57 |

+

"model.layers.13.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 58 |

+

"model.layers.13.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",

|

| 59 |

+

"model.layers.13.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",

|

| 60 |

+

"model.layers.13.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",

|

| 61 |

+

"model.layers.13.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",

|

| 62 |

+

"model.layers.14.input_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 63 |

+

"model.layers.14.mlp.down_proj.weight": "model-00003-of-00006.safetensors",

|

| 64 |

+

"model.layers.14.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",

|

| 65 |

+

"model.layers.14.mlp.up_proj.weight": "model-00003-of-00006.safetensors",

|

| 66 |

+

"model.layers.14.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 67 |

+

"model.layers.14.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",

|

| 68 |

+

"model.layers.14.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",

|

| 69 |

+

"model.layers.14.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",

|

| 70 |

+

"model.layers.14.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",

|

| 71 |

+

"model.layers.15.input_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 72 |

+

"model.layers.15.mlp.down_proj.weight": "model-00003-of-00006.safetensors",

|

| 73 |

+

"model.layers.15.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",

|

| 74 |

+

"model.layers.15.mlp.up_proj.weight": "model-00003-of-00006.safetensors",

|

| 75 |

+

"model.layers.15.post_attention_layernorm.weight": "model-00003-of-00006.safetensors",

|

| 76 |

+

"model.layers.15.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",

|

| 77 |

+

"model.layers.15.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",

|

| 78 |

+

"model.layers.15.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",

|

| 79 |

+

"model.layers.15.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",

|

| 80 |

+

"model.layers.16.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 81 |

+

"model.layers.16.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 82 |

+

"model.layers.16.mlp.gate_proj.weight": "model-00003-of-00006.safetensors",

|

| 83 |

+

"model.layers.16.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 84 |

+

"model.layers.16.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 85 |

+

"model.layers.16.self_attn.k_proj.weight": "model-00003-of-00006.safetensors",

|

| 86 |

+

"model.layers.16.self_attn.o_proj.weight": "model-00003-of-00006.safetensors",

|

| 87 |

+

"model.layers.16.self_attn.q_proj.weight": "model-00003-of-00006.safetensors",

|

| 88 |

+

"model.layers.16.self_attn.v_proj.weight": "model-00003-of-00006.safetensors",

|

| 89 |

+

"model.layers.17.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 90 |

+

"model.layers.17.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 91 |

+

"model.layers.17.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 92 |

+

"model.layers.17.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 93 |

+

"model.layers.17.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 94 |

+

"model.layers.17.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 95 |

+

"model.layers.17.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 96 |

+

"model.layers.17.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 97 |

+

"model.layers.17.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 98 |

+

"model.layers.18.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 99 |

+

"model.layers.18.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 100 |

+

"model.layers.18.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 101 |

+

"model.layers.18.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 102 |

+

"model.layers.18.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 103 |

+

"model.layers.18.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 104 |

+

"model.layers.18.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 105 |

+

"model.layers.18.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 106 |

+

"model.layers.18.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 107 |

+

"model.layers.19.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 108 |

+

"model.layers.19.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 109 |

+

"model.layers.19.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 110 |

+

"model.layers.19.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 111 |

+

"model.layers.19.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 112 |

+

"model.layers.19.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 113 |

+

"model.layers.19.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 114 |

+

"model.layers.19.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 115 |

+

"model.layers.19.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 116 |

+

"model.layers.2.input_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 117 |

+

"model.layers.2.mlp.down_proj.weight": "model-00001-of-00006.safetensors",

|

| 118 |

+

"model.layers.2.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",

|

| 119 |

+

"model.layers.2.mlp.up_proj.weight": "model-00001-of-00006.safetensors",

|

| 120 |

+

"model.layers.2.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",

|

| 121 |

+

"model.layers.2.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",

|

| 122 |

+

"model.layers.2.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",

|

| 123 |

+

"model.layers.2.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",

|

| 124 |

+

"model.layers.2.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",

|

| 125 |

+

"model.layers.20.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 126 |

+

"model.layers.20.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 127 |

+

"model.layers.20.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 128 |

+

"model.layers.20.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 129 |

+

"model.layers.20.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 130 |

+

"model.layers.20.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 131 |

+

"model.layers.20.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 132 |

+

"model.layers.20.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 133 |

+

"model.layers.20.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 134 |

+

"model.layers.21.input_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 135 |

+

"model.layers.21.mlp.down_proj.weight": "model-00004-of-00006.safetensors",

|

| 136 |

+

"model.layers.21.mlp.gate_proj.weight": "model-00004-of-00006.safetensors",

|

| 137 |

+

"model.layers.21.mlp.up_proj.weight": "model-00004-of-00006.safetensors",

|

| 138 |

+

"model.layers.21.post_attention_layernorm.weight": "model-00004-of-00006.safetensors",

|

| 139 |

+

"model.layers.21.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 140 |

+

"model.layers.21.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 141 |

+

"model.layers.21.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 142 |

+

"model.layers.21.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 143 |

+

"model.layers.22.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 144 |

+

"model.layers.22.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 145 |

+

"model.layers.22.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 146 |

+

"model.layers.22.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 147 |

+

"model.layers.22.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 148 |

+

"model.layers.22.self_attn.k_proj.weight": "model-00004-of-00006.safetensors",

|

| 149 |

+

"model.layers.22.self_attn.o_proj.weight": "model-00004-of-00006.safetensors",

|

| 150 |

+

"model.layers.22.self_attn.q_proj.weight": "model-00004-of-00006.safetensors",

|

| 151 |

+

"model.layers.22.self_attn.v_proj.weight": "model-00004-of-00006.safetensors",

|

| 152 |

+

"model.layers.23.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 153 |

+

"model.layers.23.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 154 |

+

"model.layers.23.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 155 |

+

"model.layers.23.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 156 |

+

"model.layers.23.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 157 |

+

"model.layers.23.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 158 |

+

"model.layers.23.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 159 |

+

"model.layers.23.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 160 |

+

"model.layers.23.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 161 |

+

"model.layers.24.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 162 |

+

"model.layers.24.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 163 |

+

"model.layers.24.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 164 |

+

"model.layers.24.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 165 |

+

"model.layers.24.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 166 |

+

"model.layers.24.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 167 |

+

"model.layers.24.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 168 |

+

"model.layers.24.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 169 |

+

"model.layers.24.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 170 |

+

"model.layers.25.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 171 |

+

"model.layers.25.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 172 |

+

"model.layers.25.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 173 |

+

"model.layers.25.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 174 |

+

"model.layers.25.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 175 |

+

"model.layers.25.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 176 |

+

"model.layers.25.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 177 |

+

"model.layers.25.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 178 |

+

"model.layers.25.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 179 |

+

"model.layers.26.input_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 180 |

+

"model.layers.26.mlp.down_proj.weight": "model-00005-of-00006.safetensors",

|

| 181 |

+

"model.layers.26.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 182 |

+

"model.layers.26.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 183 |

+

"model.layers.26.post_attention_layernorm.weight": "model-00005-of-00006.safetensors",

|

| 184 |

+

"model.layers.26.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

|

| 185 |

+

"model.layers.26.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",

|

| 186 |

+

"model.layers.26.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",

|

| 187 |

+

"model.layers.26.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",

|

| 188 |

+

"model.layers.27.input_layernorm.weight": "model-00006-of-00006.safetensors",

|

| 189 |

+

"model.layers.27.mlp.down_proj.weight": "model-00006-of-00006.safetensors",

|

| 190 |

+

"model.layers.27.mlp.gate_proj.weight": "model-00005-of-00006.safetensors",

|

| 191 |

+

"model.layers.27.mlp.up_proj.weight": "model-00005-of-00006.safetensors",

|

| 192 |

+

"model.layers.27.post_attention_layernorm.weight": "model-00006-of-00006.safetensors",

|

| 193 |

+

"model.layers.27.self_attn.k_proj.weight": "model-00005-of-00006.safetensors",

"model.layers.27.self_attn.o_proj.weight": "model-00005-of-00006.safetensors",
"model.layers.27.self_attn.q_proj.weight": "model-00005-of-00006.safetensors",
"model.layers.27.self_attn.v_proj.weight": "model-00005-of-00006.safetensors",
"model.layers.28.input_layernorm.weight": "model-00006-of-00006.safetensors",
"model.layers.28.mlp.down_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.28.mlp.gate_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.28.mlp.up_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.28.post_attention_layernorm.weight": "model-00006-of-00006.safetensors",
"model.layers.28.self_attn.k_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.28.self_attn.o_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.28.self_attn.q_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.28.self_attn.v_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.29.input_layernorm.weight": "model-00006-of-00006.safetensors",
"model.layers.29.mlp.down_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.29.mlp.gate_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.29.mlp.up_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.29.post_attention_layernorm.weight": "model-00006-of-00006.safetensors",
"model.layers.29.self_attn.k_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.29.self_attn.o_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.29.self_attn.q_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.29.self_attn.v_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.3.input_layernorm.weight": "model-00001-of-00006.safetensors",
"model.layers.3.mlp.down_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.3.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.3.mlp.up_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.3.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",
"model.layers.3.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.3.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.3.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.3.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.30.input_layernorm.weight": "model-00006-of-00006.safetensors",
"model.layers.30.mlp.down_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.30.mlp.gate_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.30.mlp.up_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.30.post_attention_layernorm.weight": "model-00006-of-00006.safetensors",
"model.layers.30.self_attn.k_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.30.self_attn.o_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.30.self_attn.q_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.30.self_attn.v_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.31.input_layernorm.weight": "model-00006-of-00006.safetensors",
"model.layers.31.mlp.down_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.31.mlp.gate_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.31.mlp.up_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.31.post_attention_layernorm.weight": "model-00006-of-00006.safetensors",
"model.layers.31.self_attn.k_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.31.self_attn.o_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.31.self_attn.q_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.31.self_attn.v_proj.weight": "model-00006-of-00006.safetensors",
"model.layers.4.input_layernorm.weight": "model-00001-of-00006.safetensors",
"model.layers.4.mlp.down_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.4.mlp.gate_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.4.mlp.up_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.4.post_attention_layernorm.weight": "model-00001-of-00006.safetensors",
"model.layers.4.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.4.self_attn.o_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.4.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.4.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.5.input_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.5.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.5.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.5.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.5.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.5.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.5.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.5.self_attn.q_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.5.self_attn.v_proj.weight": "model-00001-of-00006.safetensors",
"model.layers.6.input_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.6.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.6.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.6.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.6.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.6.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.6.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.6.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.6.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.7.input_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.7.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.7.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.7.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.7.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.7.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.7.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.7.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.7.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.8.input_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.8.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.8.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.8.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.8.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.8.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.8.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.8.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.8.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.9.input_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.9.mlp.down_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.9.mlp.gate_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.9.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.9.post_attention_layernorm.weight": "model-00002-of-00006.safetensors",
"model.layers.9.self_attn.k_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.9.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.9.self_attn.q_proj.weight": "model-00002-of-00006.safetensors",
"model.layers.9.self_attn.v_proj.weight": "model-00002-of-00006.safetensors",
"model.norm.weight": "model-00006-of-00006.safetensors"
}
}
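The index maps every tensor name to the shard file that stores it, and a single layer is not guaranteed to live in one shard: in the listing above, `model.layers.5` keeps its `k_proj`, `q_proj` and `v_proj` weights in `model-00001-of-00006.safetensors` but the rest of the layer in `model-00002-of-00006.safetensors`. The sketch below, using only the Python standard library, looks tensors up individually and inverts the map to group names by shard. `shard_for` and `tensors_by_shard` are hypothetical helper names, and `INDEX_JSON` is a trimmed excerpt of the `weight_map`, not the full file.

```python
import json
from collections import defaultdict

# Trimmed, illustrative excerpt of the "weight_map" above (not the full index).
INDEX_JSON = """
{
  "weight_map": {
    "model.layers.5.mlp.up_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.5.self_attn.k_proj.weight": "model-00001-of-00006.safetensors",
    "model.layers.5.self_attn.o_proj.weight": "model-00002-of-00006.safetensors",
    "model.layers.31.mlp.up_proj.weight": "model-00006-of-00006.safetensors",
    "model.norm.weight": "model-00006-of-00006.safetensors"
  }
}
"""


def shard_for(index: dict, tensor_name: str) -> str:
    """Return the shard file holding `tensor_name` (raises KeyError if absent)."""
    return index["weight_map"][tensor_name]


def tensors_by_shard(index: dict) -> dict[str, list[str]]:
    """Invert the weight map into: shard file -> sorted list of tensor names."""
    groups = defaultdict(list)
    for name, shard in index["weight_map"].items():
        groups[shard].append(name)
    return {shard: sorted(names) for shard, names in sorted(groups.items())}


index = json.loads(INDEX_JSON)
print(shard_for(index, "model.norm.weight"))
for shard, names in tensors_by_shard(index).items():
    print(shard, names)
```

Looking tensors up one at a time, rather than assuming one shard per layer, is what keeps the split layer 5 correct. With the `safetensors` package installed, the returned filename could then be opened with `safe_open(path, framework="pt")` and a single tensor read with `get_tensor(name)`, so only the needed shard is touched.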

output.safetensors
ADDED
@@ -0,0 +1,3 @@
version https://git-lfs.github.com/spec/v1
oid sha256:8ae2a4f2f845cca2d45708b49f7ed8b15c66bf634df56a112635c9e5e5363855
size 5605782540
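`output.safetensors` is committed as a Git LFS pointer, so the repository itself holds only these three `key value` lines (spec version, SHA-256 object id, size in bytes), while the roughly 5.6 GB payload lives in LFS storage. A minimal sketch, assuming only the v1 pointer layout shown above, that parses the pointer and checks a downloaded file against it; `parse_lfs_pointer` and `matches_pointer` are illustrative names, not part of any Git LFS tooling.

```python
import hashlib

# The pointer text exactly as committed above.
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:8ae2a4f2f845cca2d45708b49f7ed8b15c66bf634df56a112635c9e5e5363855
size 5605782540
"""


def parse_lfs_pointer(text: str) -> dict:
    """Split each 'key value' line and return the SHA-256 digest and byte size."""
    fields = {}
    for line in text.splitlines():
        if not line:
            continue
        key, _, value = line.partition(" ")
        fields[key] = value
    if fields.get("version") != "https://git-lfs.github.com/spec/v1":
        raise ValueError("not a Git LFS v1 pointer")
    algo, _, digest = fields["oid"].partition(":")
    if algo != "sha256":
        raise ValueError(f"unsupported oid algorithm: {algo}")
    return {"oid": digest, "size": int(fields["size"])}


def matches_pointer(path: str, pointer: dict, chunk_size: int = 1 << 20) -> bool:
    """Stream a downloaded file and compare its size and SHA-256 with the pointer."""
    h = hashlib.sha256()
    n = 0
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            h.update(chunk)
            n += len(chunk)
    return n == pointer["size"] and h.hexdigest() == pointer["oid"]


ptr = parse_lfs_pointer(POINTER)
print(ptr)
```

Hashing in fixed-size chunks keeps memory use flat, which matters for a multi-gigabyte file like this one.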

special_tokens_map.json
ADDED
@@ -0,0 +1,24 @@
{
  "bos_token": {
    "content": "<s>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  },
  "eos_token": {
    "content": "</s>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  },
  "pad_token": "</s>",
  "unk_token": {
    "content": "<unk>",
    "lstrip": false,
    "normalized": false,
    "rstrip": false,
    "single_word": false
  }
}
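In `special_tokens_map.json`, `pad_token` is stored as a bare string while the other three entries are objects with a `content` field, and padding reuses `</s>`, the end-of-sequence token. The sketch below normalizes both shapes to plain strings; `token_strings` is a hypothetical helper, not a `transformers` function.

```python
import json

# special_tokens_map.json as committed above.
SPECIAL_TOKENS_MAP = """
{
  "bos_token": {"content": "<s>", "lstrip": false, "normalized": false,
                "rstrip": false, "single_word": false},
  "eos_token": {"content": "</s>", "lstrip": false, "normalized": false,
                "rstrip": false, "single_word": false},
  "pad_token": "</s>",
  "unk_token": {"content": "<unk>", "lstrip": false, "normalized": false,
                "rstrip": false, "single_word": false}
}
"""


def token_strings(special_map: dict) -> dict[str, str]:
    """Reduce each entry to its token text, whether it is a string or an object."""
    return {
        role: entry if isinstance(entry, str) else entry["content"]
        for role, entry in special_map.items()
    }


tokens = token_strings(json.loads(SPECIAL_TOKENS_MAP))
print(tokens)
if tokens["pad_token"] == tokens["eos_token"]:
    print("padding reuses the end-of-sequence token")
```

Because `pad_token` and `eos_token` share the same text (and therefore presumably the same id), padded positions are better identified through the attention mask than by comparing ids; a mask built from `input_ids == pad_token_id` would also hide genuine `</s>` tokens.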

tokenizer.json
ADDED
The diff for this file is too large to render.

tokenizer_config.json
ADDED
@@ -0,0 +1,43 @@
{
  "add_bos_token": true,
  "add_eos_token": false,
  "added_tokens_decoder": {
    "0": {
      "content": "<unk>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "1": {
      "content": "<s>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    },
    "2": {
      "content": "</s>",
      "lstrip": false,
      "normalized": false,
      "rstrip": false,
      "single_word": false,
      "special": true
    }
  },
  "additional_special_tokens": [],
  "bos_token": "<s>",
  "chat_template": "{{ bos_token }}{% for message in messages %}{% if (message['role'] == 'user') != (loop.index0 % 2 == 0) %}{{ raise_exception('Conversation roles must alternate user/assistant/user/assistant/...') }}{% endif %}{% if message['role'] == 'user' %}{{ '[INST] ' + message['content'] + ' [/INST]' }}{% elif message['role'] == 'assistant' %}{{ message['content'] + eos_token}}{% else %}{{ raise_exception('Only user and assistant roles are supported!') }}{% endif %}{% endfor %}",
  "clean_up_tokenization_spaces": false,
  "eos_token": "</s>",
  "legacy": true,
  "model_max_length": 1000000000000000019884624838656,
  "pad_token": "</s>",
  "sp_model_kwargs": {},
  "spaces_between_special_tokens": false,
  "tokenizer_class": "LlamaTokenizer",
  "unk_token": "<unk>",
  "use_default_system_prompt": false
}
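The `chat_template` in `tokenizer_config.json` produces the Mistral-style `[INST] ... [/INST]` prompt. As a reading aid, here is a plain-Python sketch that mirrors the Jinja logic step by step: it prepends `<s>`, requires user and assistant turns to alternate starting with the user, wraps user text in `[INST] ` and ` [/INST]`, appends `</s>` after each assistant reply, and rejects any other role (including `system`). `render_chat` is a hypothetical name; in practice `transformers` renders the real template via `tokenizer.apply_chat_template(messages, tokenize=False)`.

```python
# Hypothetical re-implementation of the chat_template above, for illustration only.
BOS, EOS = "<s>", "</s>"


def render_chat(messages: list[dict]) -> str:
    parts = [BOS]  # {{ bos_token }}
    for i, message in enumerate(messages):
        role, content = message["role"], message["content"]
        # Same check as the template: user turns at even positions, assistant at odd.
        # ValueError stands in for the template's raise_exception().
        if (role == "user") != (i % 2 == 0):
            raise ValueError(
                "Conversation roles must alternate user/assistant/user/assistant/..."
            )
        if role == "user":
            parts.append("[INST] " + content + " [/INST]")
        elif role == "assistant":
            parts.append(content + EOS)
        else:
            raise ValueError("Only user and assistant roles are supported!")
    return "".join(parts)


prompt = render_chat([
    {"role": "user", "content": "Hi"},
    {"role": "assistant", "content": "Hello!"},
    {"role": "user", "content": "Who are you?"},
])
print(prompt)  # <s>[INST] Hi [/INST]Hello!</s>[INST] Who are you? [/INST]
```

Note that no space separates ` [/INST]` from the assistant reply. Since the rendered string already begins with `<s>` and `add_bos_token` is `true`, tokenizing that string again with the tokenizer's default special-token handling would likely prepend a second `<s>`; passing `add_special_tokens=False` in that step avoids the duplicate.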