Commit

·

5ab77b0

1

Parent(s):

18f889e

model push

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitattributes +1 -0

- model/.msc +0 -0

- model/.mv +1 -0

- model/SenseVoiceSmall/README.md +219 -0

- model/SenseVoiceSmall/am.mvn +8 -0

- model/SenseVoiceSmall/chn_jpn_yue_eng_ko_spectok.bpe.model +3 -0

- model/SenseVoiceSmall/config.yaml +98 -0

- model/SenseVoiceSmall/configuration.json +14 -0

- model/SenseVoiceSmall/example/.DS_Store +0 -0

- model/SenseVoiceSmall/example/en.mp3 +0 -0

- model/SenseVoiceSmall/example/ja.mp3 +0 -0

- model/SenseVoiceSmall/example/ko.mp3 +0 -0

- model/SenseVoiceSmall/example/yue.mp3 +0 -0

- model/SenseVoiceSmall/example/zh.mp3 +0 -0

- model/SenseVoiceSmall/fig/aed_figure.png +0 -0

- model/SenseVoiceSmall/fig/asr_results.png +0 -0

- model/SenseVoiceSmall/fig/inference.png +0 -0

- model/SenseVoiceSmall/fig/sensevoice.png +0 -0

- model/SenseVoiceSmall/fig/ser_figure.png +0 -0

- model/SenseVoiceSmall/fig/ser_table.png +0 -0

- model/SenseVoiceSmall/model.pt +3 -0

- model/SenseVoiceSmall/model.py +895 -0

- model/SenseVoiceSmall/tokens.json +0 -0

- model/bert-base-chinese/.gitattributes +10 -0

- model/bert-base-chinese/README.md +75 -0

- model/bert-base-chinese/config.json +25 -0

- model/bert-base-chinese/flax_model.msgpack +3 -0

- model/bert-base-chinese/model.safetensors +3 -0

- model/bert-base-chinese/pytorch_model.bin +3 -0

- model/bert-base-chinese/tf_model.h5 +3 -0

- model/bert-base-chinese/tokenizer.json +0 -0

- model/bert-base-chinese/tokenizer_config.json +1 -0

- model/bert-base-chinese/vocab.txt +0 -0

- model/fsmn_vad/.msc +0 -0

- model/fsmn_vad/.mv +1 -0

- model/fsmn_vad/README.md +217 -0

- model/fsmn_vad/am.mvn +8 -0

- model/fsmn_vad/config.yaml +56 -0

- model/fsmn_vad/configuration.json +13 -0

- model/fsmn_vad/example/vad_example.wav +3 -0

- model/fsmn_vad/fig/struct.png +0 -0

- model/fsmn_vad/model.pt +3 -0

- model/gaudio/am.mvn +8 -0

- model/gaudio/audio_encoder.pt +3 -0

- model/gaudio/config.json +69 -0

- model/gaudio/preprocessor_config.json +82 -0

- model/gaudio/preprocessor_config.json.bak +25 -0

- model/gaudio/special_tokens_map.json +37 -0

- model/gaudio/text_encoder.pt +3 -0

- model/gaudio/tokenizer.json +0 -0

.gitattributes

CHANGED

|

@@ -38,3 +38,4 @@ results/check/speech_j.joblib filter=lfs diff=lfs merge=lfs -text

|

|

| 38 |

results/track_record/collator_print_first.joblib filter=lfs diff=lfs merge=lfs -text

|

| 39 |

results/track_record/outputs_loss.joblib filter=lfs diff=lfs merge=lfs -text

|

| 40 |

results/track_record/text_projection.joblib filter=lfs diff=lfs merge=lfs -text

|

|

|

|

|

|

| 38 |

results/track_record/collator_print_first.joblib filter=lfs diff=lfs merge=lfs -text

|

| 39 |

results/track_record/outputs_loss.joblib filter=lfs diff=lfs merge=lfs -text

|

| 40 |

results/track_record/text_projection.joblib filter=lfs diff=lfs merge=lfs -text

|

| 41 |

+

model/fsmn_vad/example/vad_example.wav filter=lfs diff=lfs merge=lfs -text

|

model/.msc

ADDED

|

Binary file (1.35 kB). View file

|

|

|

model/.mv

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

Revision:master,CreatedAt:1727321787

|

model/SenseVoiceSmall/README.md

ADDED

|

@@ -0,0 +1,219 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

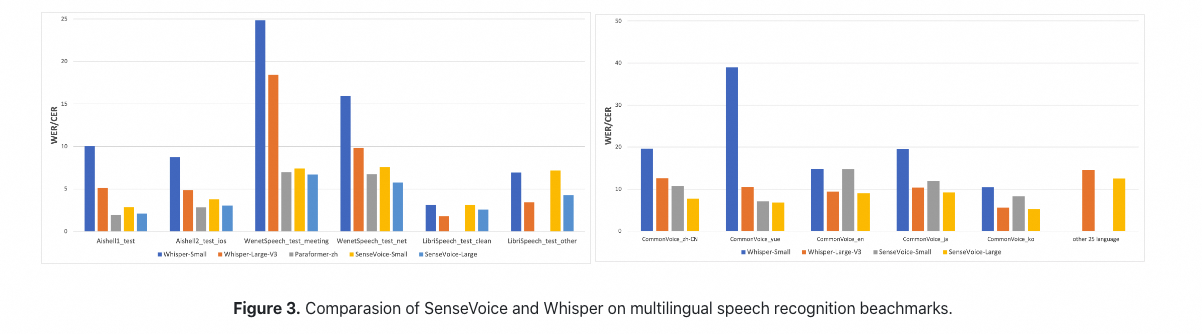

|

|

|

|

|

|

|

|

|

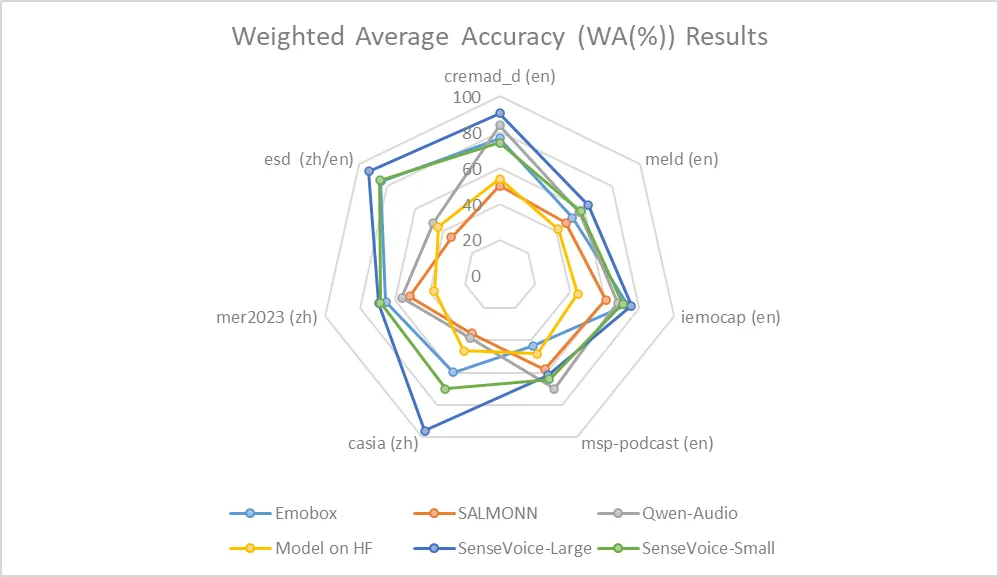

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

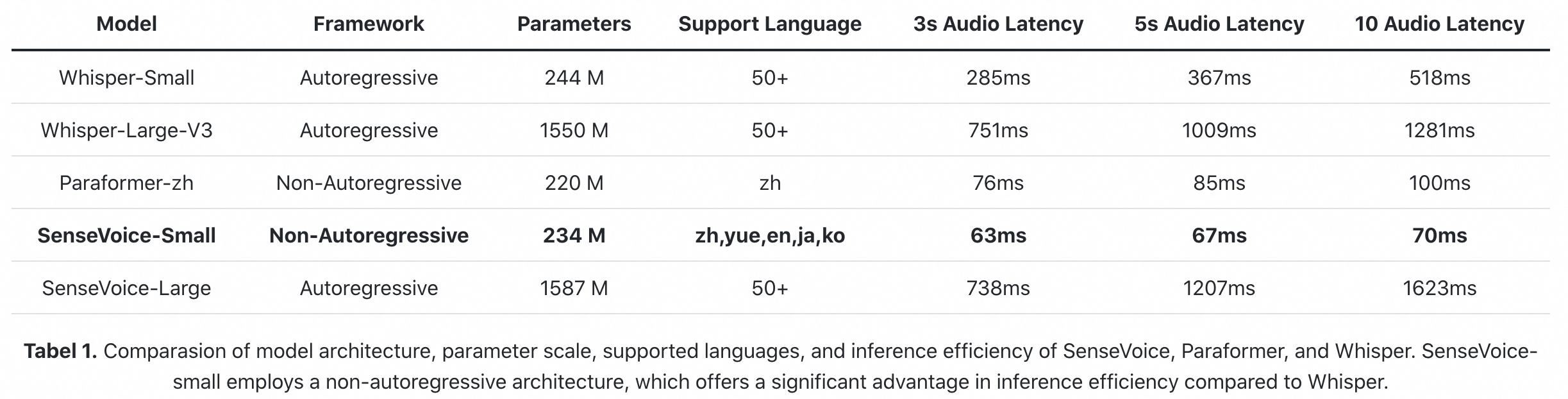

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

frameworks:

|

| 3 |

+

- Pytorch

|

| 4 |

+

license: Apache License 2.0

|

| 5 |

+

tasks:

|

| 6 |

+

- auto-speech-recognition

|

| 7 |

+

|

| 8 |

+

#model-type:

|

| 9 |

+

##如 gpt、phi、llama、chatglm、baichuan 等

|

| 10 |

+

#- gpt

|

| 11 |

+

|

| 12 |

+

#domain:

|

| 13 |

+

##如 nlp、cv、audio、multi-modal

|

| 14 |

+

#- nlp

|

| 15 |

+

|

| 16 |

+

#language:

|

| 17 |

+

##语言代码列表 https://help.aliyun.com/document_detail/215387.html?spm=a2c4g.11186623.0.0.9f8d7467kni6Aa

|

| 18 |

+

#- cn

|

| 19 |

+

|

| 20 |

+

#metrics:

|

| 21 |

+

##如 CIDEr、Blue、ROUGE 等

|

| 22 |

+

#- CIDEr

|

| 23 |

+

|

| 24 |

+

#tags:

|

| 25 |

+

##各种自定义,包括 pretrained、fine-tuned、instruction-tuned、RL-tuned 等训练方法和其他

|

| 26 |

+

#- pretrained

|

| 27 |

+

|

| 28 |

+

#tools:

|

| 29 |

+

##如 vllm、fastchat、llamacpp、AdaSeq 等

|

| 30 |

+

#- vllm

|

| 31 |

+

---

|

| 32 |

+

|

| 33 |

+

# Highlights

|

| 34 |

+

**SenseVoice**专注于高精度多语言语音识别、情感辨识和音频事件检测

|

| 35 |

+

- **多语言识别:** 采用超过40万小时数据训练,支持超过50种语言,识别效果上优于Whisper模型。

|

| 36 |

+

- **富文本识别:**

|

| 37 |

+

- 具备优秀的情感识别,能够在测试数据上达到和超过目前最佳情感识别模型的效果。

|

| 38 |

+

- 支持声音事件检测能力,支持音乐、掌声、笑声、哭声、咳嗽、喷嚏等多种常见人机交互事件进行检测。

|

| 39 |

+

- **高效推理:** SenseVoice-Small模型采用非自回归端到端框架,推理延迟极低,10s音频推理仅耗时70ms,15倍优于Whisper-Large。

|

| 40 |

+

- **微调定制:** 具备便捷的微调脚本与策略,方便用户根据业务场景修复长尾样本问题。

|

| 41 |

+

- **服务部署:** 具有完整的服务部署链路,支持多并发请求,支持客户端语言有,python、c++、html、java与c#等。

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

## <strong>[SenseVoice开源项目介绍](https://github.com/FunAudioLLM/SenseVoice)</strong>

|

| 45 |

+

<strong>[SenseVoice](https://github.com/FunAudioLLM/SenseVoice)</strong>开源模型是多语言音频理解模型,具有包括语音识别、语种识别、语音情感识别,声学事件检测能力。

|

| 46 |

+

|

| 47 |

+

[**github仓库**](https://github.com/FunAudioLLM/SenseVoice)

|

| 48 |

+

| [**最新动态**](https://github.com/FunAudioLLM/SenseVoice/blob/main/README_zh.md#%E6%9C%80%E6%96%B0%E5%8A%A8%E6%80%81)

|

| 49 |

+

| [**环境安装**](https://github.com/FunAudioLLM/SenseVoice/blob/main/README_zh.md#%E7%8E%AF%E5%A2%83%E5%AE%89%E8%A3%85)

|

| 50 |

+

|

| 51 |

+

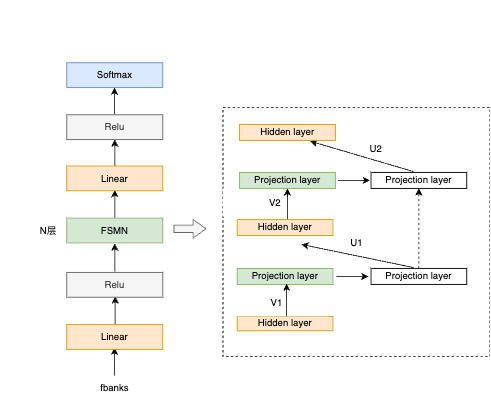

# 模型结构图

|

| 52 |

+

SenseVoice多语言音频理解模型,支持语音识别、语种识别、语音情感识别、声学事件检测、逆文本正则化等能力,采用工业级数十万小时的标注音频进行模型训练,保证了模型的通用识别效果。模型可以被应用于中文、粤语、英语、日语、韩语音频识别,并输出带有情感和事件的富文本转写结果。

|

| 53 |

+

|

| 54 |

+

<p align="center">

|

| 55 |

+

<img src="fig/sensevoice.png" alt="SenseVoice模型结构" width="1500" />

|

| 56 |

+

</p>

|

| 57 |

+

|

| 58 |

+

SenseVoice-Small是基于非自回归端到端框架模型,为了指定任务,我们在语音特征前添加四个嵌入作为输入传递给编码器:

|

| 59 |

+

- LID:用于预测音频语种标签。

|

| 60 |

+

- SER:用于预测音频情感标签。

|

| 61 |

+

- AED:用于预测音频包含的事件标签。

|

| 62 |

+

- ITN:用于指定识别输出文本是否进行逆文本正则化。

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

# 依赖环境

|

| 66 |

+

|

| 67 |

+

推理之前,请务必更新funasr与modelscope版本

|

| 68 |

+

|

| 69 |

+

```shell

|

| 70 |

+

pip install -U funasr modelscope

|

| 71 |

+

```

|

| 72 |

+

|

| 73 |

+

# 用法

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

## 推理

|

| 77 |

+

|

| 78 |

+

### modelscope pipeline推理

|

| 79 |

+

```python

|

| 80 |

+

from modelscope.pipelines import pipeline

|

| 81 |

+

from modelscope.utils.constant import Tasks

|

| 82 |

+

|

| 83 |

+

inference_pipeline = pipeline(

|

| 84 |

+

task=Tasks.auto_speech_recognition,

|

| 85 |

+

model='iic/SenseVoiceSmall',

|

| 86 |

+

model_revision="master",

|

| 87 |

+

device="cuda:0",)

|

| 88 |

+

|

| 89 |

+

rec_result = inference_pipeline('https://isv-data.oss-cn-hangzhou.aliyuncs.com/ics/MaaS/ASR/test_audio/asr_example_zh.wav')

|

| 90 |

+

print(rec_result)

|

| 91 |

+

```

|

| 92 |

+

|

| 93 |

+

### 使用funasr推理

|

| 94 |

+

|

| 95 |

+

支持任意格式音频输入,支持任意时长输入

|

| 96 |

+

|

| 97 |

+

```python

|

| 98 |

+

from funasr import AutoModel

|

| 99 |

+

from funasr.utils.postprocess_utils import rich_transcription_postprocess

|

| 100 |

+

|

| 101 |

+

model_dir = "iic/SenseVoiceSmall"

|

| 102 |

+

|

| 103 |

+

|

| 104 |

+

model = AutoModel(

|

| 105 |

+

model=model_dir,

|

| 106 |

+

trust_remote_code=True,

|

| 107 |

+

remote_code="./model.py",

|

| 108 |

+

vad_model="fsmn-vad",

|

| 109 |

+

vad_kwargs={"max_single_segment_time": 30000},

|

| 110 |

+

device="cuda:0",

|

| 111 |

+

)

|

| 112 |

+

|

| 113 |

+

# en

|

| 114 |

+

res = model.generate(

|

| 115 |

+

input=f"{model.model_path}/example/en.mp3",

|

| 116 |

+

cache={},

|

| 117 |

+

language="auto", # "zn", "en", "yue", "ja", "ko", "nospeech"

|

| 118 |

+

use_itn=True,

|

| 119 |

+

batch_size_s=60,

|

| 120 |

+

merge_vad=True, #

|

| 121 |

+

merge_length_s=15,

|

| 122 |

+

)

|

| 123 |

+

text = rich_transcription_postprocess(res[0]["text"])

|

| 124 |

+

print(text)

|

| 125 |

+

```

|

| 126 |

+

参数说明:

|

| 127 |

+

- `model_dir`:模型名称,或本地磁盘中的模型路径。

|

| 128 |

+

- `trust_remote_code`:

|

| 129 |

+

- `True`表示model代码实现从`remote_code`处加载,`remote_code`指定`model`具体代码的位置(例如,当前目录下的`model.py`),支持绝对路径与相对路径,以及网络url。

|

| 130 |

+

- `False`表示,model代码实现为 [FunASR](https://github.com/modelscope/FunASR) 内部集成版本,此时修改当前目录下的`model.py`不会生效,因为加载的是funasr内部版本,模型代码[点击查看](https://github.com/modelscope/FunASR/tree/main/funasr/models/sense_voice)。

|

| 131 |

+

- `vad_model`:表示开启VAD,VAD的作用是将长音频切割成短音频,此时推理耗时包括了VAD与SenseVoice总耗时,为链路耗时,如果需要单独测试SenseVoice模型耗时,可以关闭VAD模型。

|

| 132 |

+

- `vad_kwargs`:表示VAD模型配置,`max_single_segment_time`: 表示`vad_model`最大切割音频时长, 单位是毫秒ms。

|

| 133 |

+

- `use_itn`:输出结果中是否包含标点与逆文本正则化。

|

| 134 |

+

- `batch_size_s` 表示采用动态batch,batch中总音频时长,单位为秒s。

|

| 135 |

+

- `merge_vad`:是否将 vad 模型切割的短音频碎片合成,合并后长度为`merge_length_s`,单位为秒s。

|

| 136 |

+

- `ban_emo_unk`:禁用emo_unk标签,禁用后所有的句子都会被赋与情感标签。默认`False`

|

| 137 |

+

|

| 138 |

+

```python

|

| 139 |

+

model = AutoModel(model=model_dir, trust_remote_code=True, device="cuda:0")

|

| 140 |

+

|

| 141 |

+

res = model.generate(

|

| 142 |

+

input=f"{model.model_path}/example/en.mp3",

|

| 143 |

+

cache={},

|

| 144 |

+

language="auto", # "zn", "en", "yue", "ja", "ko", "nospeech"

|

| 145 |

+

use_itn=True,

|

| 146 |

+

batch_size=64,

|

| 147 |

+

)

|

| 148 |

+

```

|

| 149 |

+

|

| 150 |

+

更多详细用法,请参考 [文档](https://github.com/modelscope/FunASR/blob/main/docs/tutorial/README.md)

|

| 151 |

+

|

| 152 |

+

|

| 153 |

+

|

| 154 |

+

## 模型下载

|

| 155 |

+

上面代码会自动下载模型,如果您需要离线下载好模型,可以通过下面代码,手动下载,之后指定模型本地路径即可。

|

| 156 |

+

|

| 157 |

+

SDK下载

|

| 158 |

+

```bash

|

| 159 |

+

#安装ModelScope

|

| 160 |

+

pip install modelscope

|

| 161 |

+

```

|

| 162 |

+

```python

|

| 163 |

+

#SDK模型下载

|

| 164 |

+

from modelscope import snapshot_download

|

| 165 |

+

model_dir = snapshot_download('iic/SenseVoiceSmall')

|

| 166 |

+

```

|

| 167 |

+

Git下载

|

| 168 |

+

```

|

| 169 |

+

#Git模型下载

|

| 170 |

+

git clone https://www.modelscope.cn/iic/SenseVoiceSmall.git

|

| 171 |

+

```

|

| 172 |

+

|

| 173 |

+

## 服务部署

|

| 174 |

+

|

| 175 |

+

Undo

|

| 176 |

+

|

| 177 |

+

# Performance

|

| 178 |

+

|

| 179 |

+

## 语音识别效果

|

| 180 |

+

我们在开源基准数据集(包括 AISHELL-1、AISHELL-2、Wenetspeech、Librispeech和Common Voice)上比较了SenseVoice与Whisper的多语言语音识别性能和推理效率。在中文和粤语识别效果上,SenseVoice-Small模型具有明显的效果优势。

|

| 181 |

+

|

| 182 |

+

<p align="center">

|

| 183 |

+

<img src="fig/asr_results.png" alt="SenseVoice模型在开源测试集上的表现" width="2500" />

|

| 184 |

+

</p>

|

| 185 |

+

|

| 186 |

+

|

| 187 |

+

|

| 188 |

+

## 情感识别效果

|

| 189 |

+

由于目前缺乏被广泛使用的情感识别测试指标和方法,我们在多个测试集的多种指标进行测试,并与近年来Benchmark上的多个结果进行了全面的对比。所选取的测试集同时包含中文/英文两种语言以及表演、影视剧、自然对话等多种风格的数据,在不进行目标数据微调的前提下,SenseVoice能够在测试数据上达到和超过目前最佳情感识别模型的效果。

|

| 190 |

+

|

| 191 |

+

<p align="center">

|

| 192 |

+

<img src="fig/ser_table.png" alt="SenseVoice模型SER效果1" width="1500" />

|

| 193 |

+

</p>

|

| 194 |

+

|

| 195 |

+

同时,我们还在测试集上对多个开源情感识别模型进行对比,结果表明,SenseVoice-Large模型可以在几乎所有数据上都达到了最佳效果,而SenseVoice-Small模型同样可以在多数数据集上取得超越其他开源模型的效果。

|

| 196 |

+

|

| 197 |

+

<p align="center">

|

| 198 |

+

<img src="fig/ser_figure.png" alt="SenseVoice模型SER效果2" width="500" />

|

| 199 |

+

</p>

|

| 200 |

+

|

| 201 |

+

## 事件检测效果

|

| 202 |

+

|

| 203 |

+

尽管SenseVoice只在语音数据上进行训练,它仍然可以作为事件检测模型进行单独使用。我们在环境音分类ESC-50数据集上与目前业内广泛使用的BEATS与PANN模型的效果进行了对比。SenseVoice模型能够在这些任务上取得较好的效果,但受限于训练数据与训练方式,其事件分类效果专业的事件检测模型相比仍然有一定的差距。

|

| 204 |

+

|

| 205 |

+

<p align="center">

|

| 206 |

+

<img src="fig/aed_figure.png" alt="SenseVoice模型AED效果" width="500" />

|

| 207 |

+

</p>

|

| 208 |

+

|

| 209 |

+

|

| 210 |

+

|

| 211 |

+

## 推理效率

|

| 212 |

+

SenseVoice-Small模型采用非自回归端到端架构,推理延迟极低。在参数量与Whisper-Small模型相当的情况下,比Whisper-Small模型推理速度快7倍,比Whisper-Large模型快17倍。同时SenseVoice-small模型在音频时长增加的情况下,推理耗时也无明显增加。

|

| 213 |

+

|

| 214 |

+

|

| 215 |

+

<p align="center">

|

| 216 |

+

<img src="fig/inference.png" alt="SenseVoice模型的推理效率" width="1500" />

|

| 217 |

+

</p>

|

| 218 |

+

|

| 219 |

+

<p style="color: lightgrey;">如果您是本模型的贡献者,我们邀请您根据<a href="https://modelscope.cn/docs/ModelScope%E6%A8%A1%E5%9E%8B%E6%8E%A5%E5%85%A5%E6%B5%81%E7%A8%8B%E6%A6%82%E8%A7%88" style="color: lightgrey; text-decoration: underline;">模型贡献文档</a>,及时完善模型卡片内容。</p>

|

model/SenseVoiceSmall/am.mvn

ADDED

|

@@ -0,0 +1,8 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<Nnet>

|

| 2 |

+

<Splice> 560 560

|

| 3 |

+

[ 0 ]

|

| 4 |

+

<AddShift> 560 560

|

| 5 |

+

<LearnRateCoef> 0 [ -8.311879 -8.600912 -9.615928 -10.43595 -11.21292 -11.88333 -12.36243 -12.63706 -12.8818 -12.83066 -12.89103 -12.95666 -13.19763 -13.40598 -13.49113 -13.5546 -13.55639 -13.51915 -13.68284 -13.53289 -13.42107 -13.65519 -13.50713 -13.75251 -13.76715 -13.87408 -13.73109 -13.70412 -13.56073 -13.53488 -13.54895 -13.56228 -13.59408 -13.62047 -13.64198 -13.66109 -13.62669 -13.58297 -13.57387 -13.4739 -13.53063 -13.48348 -13.61047 -13.64716 -13.71546 -13.79184 -13.90614 -14.03098 -14.18205 -14.35881 -14.48419 -14.60172 -14.70591 -14.83362 -14.92122 -15.00622 -15.05122 -15.03119 -14.99028 -14.92302 -14.86927 -14.82691 -14.7972 -14.76909 -14.71356 -14.61277 -14.51696 -14.42252 -14.36405 -14.30451 -14.23161 -14.19851 -14.16633 -14.15649 -14.10504 -13.99518 -13.79562 -13.3996 -12.7767 -11.71208 -8.311879 -8.600912 -9.615928 -10.43595 -11.21292 -11.88333 -12.36243 -12.63706 -12.8818 -12.83066 -12.89103 -12.95666 -13.19763 -13.40598 -13.49113 -13.5546 -13.55639 -13.51915 -13.68284 -13.53289 -13.42107 -13.65519 -13.50713 -13.75251 -13.76715 -13.87408 -13.73109 -13.70412 -13.56073 -13.53488 -13.54895 -13.56228 -13.59408 -13.62047 -13.64198 -13.66109 -13.62669 -13.58297 -13.57387 -13.4739 -13.53063 -13.48348 -13.61047 -13.64716 -13.71546 -13.79184 -13.90614 -14.03098 -14.18205 -14.35881 -14.48419 -14.60172 -14.70591 -14.83362 -14.92122 -15.00622 -15.05122 -15.03119 -14.99028 -14.92302 -14.86927 -14.82691 -14.7972 -14.76909 -14.71356 -14.61277 -14.51696 -14.42252 -14.36405 -14.30451 -14.23161 -14.19851 -14.16633 -14.15649 -14.10504 -13.99518 -13.79562 -13.3996 -12.7767 -11.71208 -8.311879 -8.600912 -9.615928 -10.43595 -11.21292 -11.88333 -12.36243 -12.63706 -12.8818 -12.83066 -12.89103 -12.95666 -13.19763 -13.40598 -13.49113 -13.5546 -13.55639 -13.51915 -13.68284 -13.53289 -13.42107 -13.65519 -13.50713 -13.75251 -13.76715 -13.87408 -13.73109 -13.70412 -13.56073 -13.53488 -13.54895 -13.56228 -13.59408 -13.62047 -13.64198 -13.66109 -13.62669 -13.58297 -13.57387 -13.4739 -13.53063 -13.48348 -13.61047 -13.64716 -13.71546 -13.79184 -13.90614 -14.03098 -14.18205 -14.35881 -14.48419 -14.60172 -14.70591 -14.83362 -14.92122 -15.00622 -15.05122 -15.03119 -14.99028 -14.92302 -14.86927 -14.82691 -14.7972 -14.76909 -14.71356 -14.61277 -14.51696 -14.42252 -14.36405 -14.30451 -14.23161 -14.19851 -14.16633 -14.15649 -14.10504 -13.99518 -13.79562 -13.3996 -12.7767 -11.71208 -8.311879 -8.600912 -9.615928 -10.43595 -11.21292 -11.88333 -12.36243 -12.63706 -12.8818 -12.83066 -12.89103 -12.95666 -13.19763 -13.40598 -13.49113 -13.5546 -13.55639 -13.51915 -13.68284 -13.53289 -13.42107 -13.65519 -13.50713 -13.75251 -13.76715 -13.87408 -13.73109 -13.70412 -13.56073 -13.53488 -13.54895 -13.56228 -13.59408 -13.62047 -13.64198 -13.66109 -13.62669 -13.58297 -13.57387 -13.4739 -13.53063 -13.48348 -13.61047 -13.64716 -13.71546 -13.79184 -13.90614 -14.03098 -14.18205 -14.35881 -14.48419 -14.60172 -14.70591 -14.83362 -14.92122 -15.00622 -15.05122 -15.03119 -14.99028 -14.92302 -14.86927 -14.82691 -14.7972 -14.76909 -14.71356 -14.61277 -14.51696 -14.42252 -14.36405 -14.30451 -14.23161 -14.19851 -14.16633 -14.15649 -14.10504 -13.99518 -13.79562 -13.3996 -12.7767 -11.71208 -8.311879 -8.600912 -9.615928 -10.43595 -11.21292 -11.88333 -12.36243 -12.63706 -12.8818 -12.83066 -12.89103 -12.95666 -13.19763 -13.40598 -13.49113 -13.5546 -13.55639 -13.51915 -13.68284 -13.53289 -13.42107 -13.65519 -13.50713 -13.75251 -13.76715 -13.87408 -13.73109 -13.70412 -13.56073 -13.53488 -13.54895 -13.56228 -13.59408 -13.62047 -13.64198 -13.66109 -13.62669 -13.58297 -13.57387 -13.4739 -13.53063 -13.48348 -13.61047 -13.64716 -13.71546 -13.79184 -13.90614 -14.03098 -14.18205 -14.35881 -14.48419 -14.60172 -14.70591 -14.83362 -14.92122 -15.00622 -15.05122 -15.03119 -14.99028 -14.92302 -14.86927 -14.82691 -14.7972 -14.76909 -14.71356 -14.61277 -14.51696 -14.42252 -14.36405 -14.30451 -14.23161 -14.19851 -14.16633 -14.15649 -14.10504 -13.99518 -13.79562 -13.3996 -12.7767 -11.71208 -8.311879 -8.600912 -9.615928 -10.43595 -11.21292 -11.88333 -12.36243 -12.63706 -12.8818 -12.83066 -12.89103 -12.95666 -13.19763 -13.40598 -13.49113 -13.5546 -13.55639 -13.51915 -13.68284 -13.53289 -13.42107 -13.65519 -13.50713 -13.75251 -13.76715 -13.87408 -13.73109 -13.70412 -13.56073 -13.53488 -13.54895 -13.56228 -13.59408 -13.62047 -13.64198 -13.66109 -13.62669 -13.58297 -13.57387 -13.4739 -13.53063 -13.48348 -13.61047 -13.64716 -13.71546 -13.79184 -13.90614 -14.03098 -14.18205 -14.35881 -14.48419 -14.60172 -14.70591 -14.83362 -14.92122 -15.00622 -15.05122 -15.03119 -14.99028 -14.92302 -14.86927 -14.82691 -14.7972 -14.76909 -14.71356 -14.61277 -14.51696 -14.42252 -14.36405 -14.30451 -14.23161 -14.19851 -14.16633 -14.15649 -14.10504 -13.99518 -13.79562 -13.3996 -12.7767 -11.71208 -8.311879 -8.600912 -9.615928 -10.43595 -11.21292 -11.88333 -12.36243 -12.63706 -12.8818 -12.83066 -12.89103 -12.95666 -13.19763 -13.40598 -13.49113 -13.5546 -13.55639 -13.51915 -13.68284 -13.53289 -13.42107 -13.65519 -13.50713 -13.75251 -13.76715 -13.87408 -13.73109 -13.70412 -13.56073 -13.53488 -13.54895 -13.56228 -13.59408 -13.62047 -13.64198 -13.66109 -13.62669 -13.58297 -13.57387 -13.4739 -13.53063 -13.48348 -13.61047 -13.64716 -13.71546 -13.79184 -13.90614 -14.03098 -14.18205 -14.35881 -14.48419 -14.60172 -14.70591 -14.83362 -14.92122 -15.00622 -15.05122 -15.03119 -14.99028 -14.92302 -14.86927 -14.82691 -14.7972 -14.76909 -14.71356 -14.61277 -14.51696 -14.42252 -14.36405 -14.30451 -14.23161 -14.19851 -14.16633 -14.15649 -14.10504 -13.99518 -13.79562 -13.3996 -12.7767 -11.71208 ]

|

| 6 |

+

<Rescale> 560 560

|

| 7 |

+

<LearnRateCoef> 0 [ 0.155775 0.154484 0.1527379 0.1518718 0.1506028 0.1489256 0.147067 0.1447061 0.1436307 0.1443568 0.1451849 0.1455157 0.1452821 0.1445717 0.1439195 0.1435867 0.1436018 0.1438781 0.1442086 0.1448844 0.1454756 0.145663 0.146268 0.1467386 0.1472724 0.147664 0.1480913 0.1483739 0.1488841 0.1493636 0.1497088 0.1500379 0.1502916 0.1505389 0.1506787 0.1507102 0.1505992 0.1505445 0.1505938 0.1508133 0.1509569 0.1512396 0.1514625 0.1516195 0.1516156 0.1515561 0.1514966 0.1513976 0.1512612 0.151076 0.1510596 0.1510431 0.151077 0.1511168 0.1511917 0.151023 0.1508045 0.1505885 0.1503493 0.1502373 0.1501726 0.1500762 0.1500065 0.1499782 0.150057 0.1502658 0.150469 0.1505335 0.1505505 0.1505328 0.1504275 0.1502438 0.1499674 0.1497118 0.1494661 0.1493102 0.1493681 0.1495501 0.1499738 0.1509654 0.155775 0.154484 0.1527379 0.1518718 0.1506028 0.1489256 0.147067 0.1447061 0.1436307 0.1443568 0.1451849 0.1455157 0.1452821 0.1445717 0.1439195 0.1435867 0.1436018 0.1438781 0.1442086 0.1448844 0.1454756 0.145663 0.146268 0.1467386 0.1472724 0.147664 0.1480913 0.1483739 0.1488841 0.1493636 0.1497088 0.1500379 0.1502916 0.1505389 0.1506787 0.1507102 0.1505992 0.1505445 0.1505938 0.1508133 0.1509569 0.1512396 0.1514625 0.1516195 0.1516156 0.1515561 0.1514966 0.1513976 0.1512612 0.151076 0.1510596 0.1510431 0.151077 0.1511168 0.1511917 0.151023 0.1508045 0.1505885 0.1503493 0.1502373 0.1501726 0.1500762 0.1500065 0.1499782 0.150057 0.1502658 0.150469 0.1505335 0.1505505 0.1505328 0.1504275 0.1502438 0.1499674 0.1497118 0.1494661 0.1493102 0.1493681 0.1495501 0.1499738 0.1509654 0.155775 0.154484 0.1527379 0.1518718 0.1506028 0.1489256 0.147067 0.1447061 0.1436307 0.1443568 0.1451849 0.1455157 0.1452821 0.1445717 0.1439195 0.1435867 0.1436018 0.1438781 0.1442086 0.1448844 0.1454756 0.145663 0.146268 0.1467386 0.1472724 0.147664 0.1480913 0.1483739 0.1488841 0.1493636 0.1497088 0.1500379 0.1502916 0.1505389 0.1506787 0.1507102 0.1505992 0.1505445 0.1505938 0.1508133 0.1509569 0.1512396 0.1514625 0.1516195 0.1516156 0.1515561 0.1514966 0.1513976 0.1512612 0.151076 0.1510596 0.1510431 0.151077 0.1511168 0.1511917 0.151023 0.1508045 0.1505885 0.1503493 0.1502373 0.1501726 0.1500762 0.1500065 0.1499782 0.150057 0.1502658 0.150469 0.1505335 0.1505505 0.1505328 0.1504275 0.1502438 0.1499674 0.1497118 0.1494661 0.1493102 0.1493681 0.1495501 0.1499738 0.1509654 0.155775 0.154484 0.1527379 0.1518718 0.1506028 0.1489256 0.147067 0.1447061 0.1436307 0.1443568 0.1451849 0.1455157 0.1452821 0.1445717 0.1439195 0.1435867 0.1436018 0.1438781 0.1442086 0.1448844 0.1454756 0.145663 0.146268 0.1467386 0.1472724 0.147664 0.1480913 0.1483739 0.1488841 0.1493636 0.1497088 0.1500379 0.1502916 0.1505389 0.1506787 0.1507102 0.1505992 0.1505445 0.1505938 0.1508133 0.1509569 0.1512396 0.1514625 0.1516195 0.1516156 0.1515561 0.1514966 0.1513976 0.1512612 0.151076 0.1510596 0.1510431 0.151077 0.1511168 0.1511917 0.151023 0.1508045 0.1505885 0.1503493 0.1502373 0.1501726 0.1500762 0.1500065 0.1499782 0.150057 0.1502658 0.150469 0.1505335 0.1505505 0.1505328 0.1504275 0.1502438 0.1499674 0.1497118 0.1494661 0.1493102 0.1493681 0.1495501 0.1499738 0.1509654 0.155775 0.154484 0.1527379 0.1518718 0.1506028 0.1489256 0.147067 0.1447061 0.1436307 0.1443568 0.1451849 0.1455157 0.1452821 0.1445717 0.1439195 0.1435867 0.1436018 0.1438781 0.1442086 0.1448844 0.1454756 0.145663 0.146268 0.1467386 0.1472724 0.147664 0.1480913 0.1483739 0.1488841 0.1493636 0.1497088 0.1500379 0.1502916 0.1505389 0.1506787 0.1507102 0.1505992 0.1505445 0.1505938 0.1508133 0.1509569 0.1512396 0.1514625 0.1516195 0.1516156 0.1515561 0.1514966 0.1513976 0.1512612 0.151076 0.1510596 0.1510431 0.151077 0.1511168 0.1511917 0.151023 0.1508045 0.1505885 0.1503493 0.1502373 0.1501726 0.1500762 0.1500065 0.1499782 0.150057 0.1502658 0.150469 0.1505335 0.1505505 0.1505328 0.1504275 0.1502438 0.1499674 0.1497118 0.1494661 0.1493102 0.1493681 0.1495501 0.1499738 0.1509654 0.155775 0.154484 0.1527379 0.1518718 0.1506028 0.1489256 0.147067 0.1447061 0.1436307 0.1443568 0.1451849 0.1455157 0.1452821 0.1445717 0.1439195 0.1435867 0.1436018 0.1438781 0.1442086 0.1448844 0.1454756 0.145663 0.146268 0.1467386 0.1472724 0.147664 0.1480913 0.1483739 0.1488841 0.1493636 0.1497088 0.1500379 0.1502916 0.1505389 0.1506787 0.1507102 0.1505992 0.1505445 0.1505938 0.1508133 0.1509569 0.1512396 0.1514625 0.1516195 0.1516156 0.1515561 0.1514966 0.1513976 0.1512612 0.151076 0.1510596 0.1510431 0.151077 0.1511168 0.1511917 0.151023 0.1508045 0.1505885 0.1503493 0.1502373 0.1501726 0.1500762 0.1500065 0.1499782 0.150057 0.1502658 0.150469 0.1505335 0.1505505 0.1505328 0.1504275 0.1502438 0.1499674 0.1497118 0.1494661 0.1493102 0.1493681 0.1495501 0.1499738 0.1509654 0.155775 0.154484 0.1527379 0.1518718 0.1506028 0.1489256 0.147067 0.1447061 0.1436307 0.1443568 0.1451849 0.1455157 0.1452821 0.1445717 0.1439195 0.1435867 0.1436018 0.1438781 0.1442086 0.1448844 0.1454756 0.145663 0.146268 0.1467386 0.1472724 0.147664 0.1480913 0.1483739 0.1488841 0.1493636 0.1497088 0.1500379 0.1502916 0.1505389 0.1506787 0.1507102 0.1505992 0.1505445 0.1505938 0.1508133 0.1509569 0.1512396 0.1514625 0.1516195 0.1516156 0.1515561 0.1514966 0.1513976 0.1512612 0.151076 0.1510596 0.1510431 0.151077 0.1511168 0.1511917 0.151023 0.1508045 0.1505885 0.1503493 0.1502373 0.1501726 0.1500762 0.1500065 0.1499782 0.150057 0.1502658 0.150469 0.1505335 0.1505505 0.1505328 0.1504275 0.1502438 0.1499674 0.1497118 0.1494661 0.1493102 0.1493681 0.1495501 0.1499738 0.1509654 ]

|

| 8 |

+

</Nnet>

|

model/SenseVoiceSmall/chn_jpn_yue_eng_ko_spectok.bpe.model

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:aa87f86064c3730d799ddf7af3c04659151102cba548bce325cf06ba4da4e6a8

|

| 3 |

+

size 377341

|

model/SenseVoiceSmall/config.yaml

ADDED

|

@@ -0,0 +1,98 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

encoder: SenseVoiceEncoderSmall

|

| 2 |

+

encoder_conf:

|

| 3 |

+

output_size: 512

|

| 4 |

+

attention_heads: 4

|

| 5 |

+

linear_units: 2048

|

| 6 |

+

num_blocks: 50

|

| 7 |

+

tp_blocks: 20

|

| 8 |

+

dropout_rate: 0.1

|

| 9 |

+

positional_dropout_rate: 0.1

|

| 10 |

+

attention_dropout_rate: 0.1

|

| 11 |

+

input_layer: pe

|

| 12 |

+

pos_enc_class: SinusoidalPositionEncoder

|

| 13 |

+

normalize_before: true

|

| 14 |

+

kernel_size: 11

|

| 15 |

+

sanm_shfit: 0

|

| 16 |

+

selfattention_layer_type: sanm

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

model: SenseVoiceSmall

|

| 20 |

+

model_conf:

|

| 21 |

+

length_normalized_loss: true

|

| 22 |

+

sos: 1

|

| 23 |

+

eos: 2

|

| 24 |

+

ignore_id: -1

|

| 25 |

+

|

| 26 |

+

tokenizer: SentencepiecesTokenizer

|

| 27 |

+

tokenizer_conf:

|

| 28 |

+

bpemodel: null

|

| 29 |

+

unk_symbol: <unk>

|

| 30 |

+

split_with_space: true

|

| 31 |

+

|

| 32 |

+

frontend: WavFrontend

|

| 33 |

+

frontend_conf:

|

| 34 |

+

fs: 32000

|

| 35 |

+

window: hamming

|

| 36 |

+

n_mels: 80

|

| 37 |

+

frame_length: 25

|

| 38 |

+

frame_shift: 10

|

| 39 |

+

lfr_m: 7

|

| 40 |

+

lfr_n: 6

|

| 41 |

+

cmvn_file: null

|

| 42 |

+

dither: 0.0

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

dataset: SenseVoiceCTCDataset

|

| 46 |

+

dataset_conf:

|

| 47 |

+

index_ds: IndexDSJsonl

|

| 48 |

+

batch_sampler: EspnetStyleBatchSampler

|

| 49 |

+

data_split_num: 32

|

| 50 |

+

batch_type: token

|

| 51 |

+

batch_size: 14000

|

| 52 |

+

max_token_length: 2000

|

| 53 |

+

min_token_length: 60

|

| 54 |

+

max_source_length: 2000

|

| 55 |

+

min_source_length: 60

|

| 56 |

+

max_target_length: 200

|

| 57 |

+

min_target_length: 0

|

| 58 |

+

shuffle: true

|

| 59 |

+

num_workers: 4

|

| 60 |

+

sos: ${model_conf.sos}

|

| 61 |

+

eos: ${model_conf.eos}

|

| 62 |

+

IndexDSJsonl: IndexDSJsonl

|

| 63 |

+

retry: 20

|

| 64 |

+

|

| 65 |

+

train_conf:

|

| 66 |

+

accum_grad: 1

|

| 67 |

+

grad_clip: 5

|

| 68 |

+

max_epoch: 20

|

| 69 |

+

keep_nbest_models: 10

|

| 70 |

+

avg_nbest_model: 10

|

| 71 |

+

log_interval: 100

|

| 72 |

+

resume: true

|

| 73 |

+

validate_interval: 10000

|

| 74 |

+

save_checkpoint_interval: 10000

|

| 75 |

+

|

| 76 |

+

optim: adamw

|

| 77 |

+

optim_conf:

|

| 78 |

+

lr: 0.00002

|

| 79 |

+

scheduler: warmuplr

|

| 80 |

+

scheduler_conf:

|

| 81 |

+

warmup_steps: 25000

|

| 82 |

+

|

| 83 |

+

specaug: SpecAugLFR

|

| 84 |

+

specaug_conf:

|

| 85 |

+

apply_time_warp: false

|

| 86 |

+

time_warp_window: 5

|

| 87 |

+

time_warp_mode: bicubic

|

| 88 |

+

apply_freq_mask: true

|

| 89 |

+

freq_mask_width_range:

|

| 90 |

+

- 0

|

| 91 |

+

- 30

|

| 92 |

+

lfr_rate: 6

|

| 93 |

+

num_freq_mask: 1

|

| 94 |

+

apply_time_mask: true

|

| 95 |

+

time_mask_width_range:

|

| 96 |

+

- 0

|

| 97 |

+

- 12

|

| 98 |

+

num_time_mask: 1

|

model/SenseVoiceSmall/configuration.json

ADDED

|

@@ -0,0 +1,14 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"framework": "pytorch",

|

| 3 |

+

"task" : "auto-speech-recognition",

|

| 4 |

+

"model": {"type" : "funasr"},

|

| 5 |

+

"pipeline": {"type":"funasr-pipeline"},

|

| 6 |

+

"model_name_in_hub": {

|

| 7 |

+

"ms":"",

|

| 8 |

+

"hf":""},

|

| 9 |

+

"file_path_metas": {

|

| 10 |

+

"init_param":"model.pt",

|

| 11 |

+

"config":"config.yaml",

|

| 12 |

+

"tokenizer_conf": {"bpemodel": "chn_jpn_yue_eng_ko_spectok.bpe.model"},

|

| 13 |

+

"frontend_conf":{"cmvn_file": "am.mvn"}}

|

| 14 |

+

}

|

model/SenseVoiceSmall/example/.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

model/SenseVoiceSmall/example/en.mp3

ADDED

|

Binary file (57.4 kB). View file

|

|

|

model/SenseVoiceSmall/example/ja.mp3

ADDED

|

Binary file (57.8 kB). View file

|

|

|

model/SenseVoiceSmall/example/ko.mp3

ADDED

|

Binary file (27.9 kB). View file

|

|

|

model/SenseVoiceSmall/example/yue.mp3

ADDED

|

Binary file (31.2 kB). View file

|

|

|

model/SenseVoiceSmall/example/zh.mp3

ADDED

|

Binary file (45 kB). View file

|

|

|

model/SenseVoiceSmall/fig/aed_figure.png

ADDED

|

model/SenseVoiceSmall/fig/asr_results.png

ADDED

|

model/SenseVoiceSmall/fig/inference.png

ADDED

|

model/SenseVoiceSmall/fig/sensevoice.png

ADDED

|

model/SenseVoiceSmall/fig/ser_figure.png

ADDED

|

model/SenseVoiceSmall/fig/ser_table.png

ADDED

|

model/SenseVoiceSmall/model.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:833ca2dcfdf8ec91bd4f31cfac36d6124e0c459074d5e909aec9cabe6204a3ea

|

| 3 |

+

size 936291369

|

model/SenseVoiceSmall/model.py

ADDED

|

@@ -0,0 +1,895 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import time

|

| 2 |

+

import torch

|

| 3 |

+

from torch import nn

|

| 4 |

+

import torch.nn.functional as F

|

| 5 |

+

from typing import Iterable, Optional

|

| 6 |

+

|

| 7 |

+

from funasr.register import tables

|

| 8 |

+

from funasr.models.ctc.ctc import CTC

|

| 9 |

+

from funasr.utils.datadir_writer import DatadirWriter

|

| 10 |

+

from funasr.models.paraformer.search import Hypothesis

|

| 11 |

+

from funasr.train_utils.device_funcs import force_gatherable

|

| 12 |

+

from funasr.losses.label_smoothing_loss import LabelSmoothingLoss

|

| 13 |

+

from funasr.metrics.compute_acc import compute_accuracy, th_accuracy

|

| 14 |

+

from funasr.utils.load_utils import load_audio_text_image_video, extract_fbank

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

class SinusoidalPositionEncoder(torch.nn.Module):

|

| 18 |

+

""" """

|

| 19 |

+

|

| 20 |

+

def __int__(self, d_model=80, dropout_rate=0.1):

|

| 21 |

+

pass

|

| 22 |

+

|

| 23 |

+

def encode(

|

| 24 |

+

self, positions: torch.Tensor = None, depth: int = None, dtype: torch.dtype = torch.float32

|

| 25 |

+

):

|

| 26 |

+

batch_size = positions.size(0)

|

| 27 |

+

positions = positions.type(dtype)

|

| 28 |

+

device = positions.device

|

| 29 |

+

log_timescale_increment = torch.log(torch.tensor([10000], dtype=dtype, device=device)) / (

|

| 30 |

+

depth / 2 - 1

|

| 31 |

+

)

|

| 32 |

+

inv_timescales = torch.exp(

|

| 33 |

+

torch.arange(depth / 2, device=device).type(dtype) * (-log_timescale_increment)

|

| 34 |

+

)

|

| 35 |

+

inv_timescales = torch.reshape(inv_timescales, [batch_size, -1])

|

| 36 |

+

scaled_time = torch.reshape(positions, [1, -1, 1]) * torch.reshape(

|

| 37 |

+

inv_timescales, [1, 1, -1]

|

| 38 |

+

)

|

| 39 |

+

encoding = torch.cat([torch.sin(scaled_time), torch.cos(scaled_time)], dim=2)

|

| 40 |

+

return encoding.type(dtype)

|

| 41 |

+

|

| 42 |

+

def forward(self, x):

|

| 43 |

+

batch_size, timesteps, input_dim = x.size()

|

| 44 |

+

positions = torch.arange(1, timesteps + 1, device=x.device)[None, :]

|

| 45 |

+

position_encoding = self.encode(positions, input_dim, x.dtype).to(x.device)

|

| 46 |

+

|

| 47 |

+

return x + position_encoding

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

class PositionwiseFeedForward(torch.nn.Module):

|

| 51 |

+

"""Positionwise feed forward layer.

|

| 52 |

+

|

| 53 |

+

Args:

|

| 54 |

+

idim (int): Input dimenstion.

|

| 55 |

+

hidden_units (int): The number of hidden units.

|

| 56 |

+

dropout_rate (float): Dropout rate.

|

| 57 |

+

|

| 58 |

+

"""

|

| 59 |

+

|

| 60 |

+

def __init__(self, idim, hidden_units, dropout_rate, activation=torch.nn.ReLU()):

|

| 61 |

+

"""Construct an PositionwiseFeedForward object."""

|

| 62 |

+

super(PositionwiseFeedForward, self).__init__()

|

| 63 |

+

self.w_1 = torch.nn.Linear(idim, hidden_units)

|

| 64 |

+

self.w_2 = torch.nn.Linear(hidden_units, idim)

|

| 65 |

+

self.dropout = torch.nn.Dropout(dropout_rate)

|

| 66 |

+

self.activation = activation

|

| 67 |

+

|

| 68 |

+

def forward(self, x):

|

| 69 |

+

"""Forward function."""

|

| 70 |

+

return self.w_2(self.dropout(self.activation(self.w_1(x))))

|

| 71 |

+

|

| 72 |

+

|

| 73 |

+

class MultiHeadedAttentionSANM(nn.Module):

|

| 74 |

+

"""Multi-Head Attention layer.

|

| 75 |

+

|

| 76 |

+

Args:

|

| 77 |

+

n_head (int): The number of heads.

|

| 78 |

+

n_feat (int): The number of features.

|

| 79 |

+

dropout_rate (float): Dropout rate.

|

| 80 |

+

|

| 81 |

+

"""

|

| 82 |

+

|

| 83 |

+

def __init__(

|

| 84 |

+

self,

|

| 85 |

+

n_head,

|

| 86 |

+

in_feat,

|

| 87 |

+

n_feat,

|

| 88 |

+

dropout_rate,

|

| 89 |

+

kernel_size,

|

| 90 |

+

sanm_shfit=0,

|

| 91 |

+

lora_list=None,

|

| 92 |

+

lora_rank=8,

|

| 93 |

+

lora_alpha=16,

|

| 94 |

+

lora_dropout=0.1,

|

| 95 |

+

):

|

| 96 |

+

"""Construct an MultiHeadedAttention object."""

|

| 97 |

+

super().__init__()

|

| 98 |

+

assert n_feat % n_head == 0

|

| 99 |

+

# We assume d_v always equals d_k

|

| 100 |

+

self.d_k = n_feat // n_head

|

| 101 |

+

self.h = n_head

|

| 102 |

+

# self.linear_q = nn.Linear(n_feat, n_feat)

|

| 103 |

+

# self.linear_k = nn.Linear(n_feat, n_feat)

|

| 104 |

+

# self.linear_v = nn.Linear(n_feat, n_feat)

|

| 105 |

+

|

| 106 |

+

self.linear_out = nn.Linear(n_feat, n_feat)

|

| 107 |

+

self.linear_q_k_v = nn.Linear(in_feat, n_feat * 3)

|

| 108 |

+

self.attn = None

|

| 109 |

+

self.dropout = nn.Dropout(p=dropout_rate)

|

| 110 |

+

|

| 111 |

+

self.fsmn_block = nn.Conv1d(

|

| 112 |

+

n_feat, n_feat, kernel_size, stride=1, padding=0, groups=n_feat, bias=False

|

| 113 |

+

)

|

| 114 |

+

# padding

|

| 115 |

+

left_padding = (kernel_size - 1) // 2

|

| 116 |

+

if sanm_shfit > 0:

|

| 117 |

+

left_padding = left_padding + sanm_shfit

|

| 118 |

+

right_padding = kernel_size - 1 - left_padding

|

| 119 |

+

self.pad_fn = nn.ConstantPad1d((left_padding, right_padding), 0.0)

|

| 120 |

+

|

| 121 |

+

def forward_fsmn(self, inputs, mask, mask_shfit_chunk=None):

|

| 122 |

+

b, t, d = inputs.size()

|

| 123 |

+

if mask is not None:

|

| 124 |

+

mask = torch.reshape(mask, (b, -1, 1))

|

| 125 |

+

if mask_shfit_chunk is not None:

|

| 126 |

+

mask = mask * mask_shfit_chunk

|

| 127 |

+

inputs = inputs * mask

|

| 128 |

+

|

| 129 |

+

x = inputs.transpose(1, 2)

|

| 130 |

+

x = self.pad_fn(x)

|

| 131 |

+

x = self.fsmn_block(x)

|

| 132 |

+

x = x.transpose(1, 2)

|

| 133 |

+

x += inputs

|

| 134 |

+

x = self.dropout(x)

|

| 135 |

+

if mask is not None:

|

| 136 |

+

x = x * mask

|

| 137 |

+

return x

|

| 138 |

+

|

| 139 |

+

def forward_qkv(self, x):

|

| 140 |

+

"""Transform query, key and value.