Upload folder using huggingface_hub (#2)

- 111dccadfa825acf982c80bf38f0251d08f7a07aa435a03646004e74455a0fc8 (ac222ed7cb42cdfbaa87733f42e0199f70a16ed5)

- 8bbf2c609c4768deaaa7e385ebcdbc327105a3aee3e91075f965c1e05d8c9244 (9197534a3d0c619036f8d68d809cacf650207f40)

- config.json +1 -1

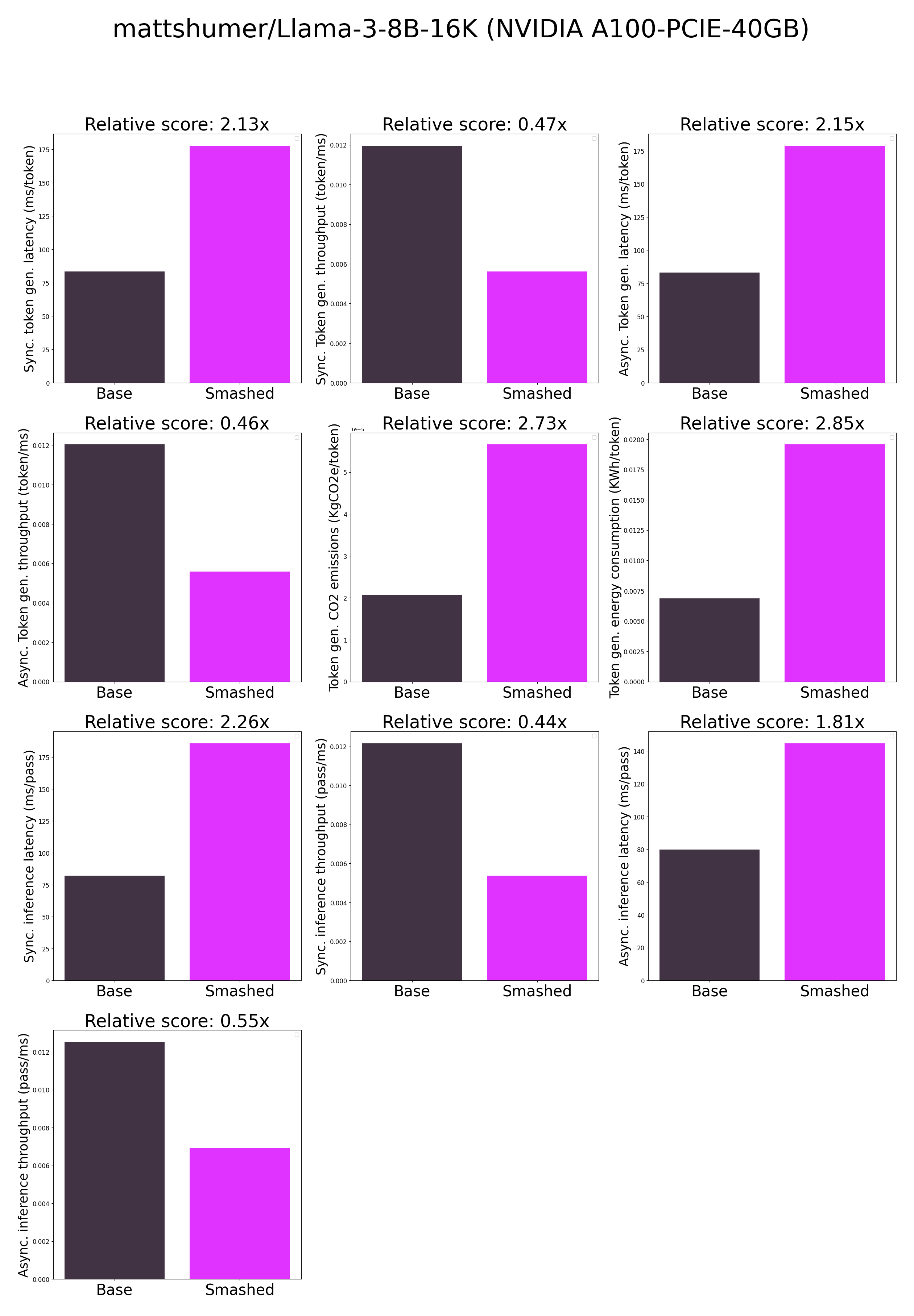

- plots.png +0 -0

- results.json +21 -1

- smash_config.json +1 -1

config.json

CHANGED

@@ -1,5 +1,5 @@
 {
-  "_name_or_path": "/tmp/
+  "_name_or_path": "/tmp/tmpsnmqz3b1",
   "architectures": [
     "LlamaForCausalLM"
   ],

plots.png

ADDED

results.json

CHANGED

@@ -1,6 +1,26 @@
 {
   "base_current_gpu_type": "NVIDIA A100-PCIE-40GB",
   "base_current_gpu_total_memory": 40339.3125,
+  "base_token_generation_latency_sync": 83.62196044921875,
+  "base_token_generation_latency_async": 83.07278789579868,
+  "base_token_generation_throughput_sync": 0.011958581150549224,
+  "base_token_generation_throughput_async": 0.012037636214332154,
+  "base_token_generation_CO2_emissions": 2.0762580172851258e-05,
+  "base_token_generation_energy_consumption": 0.0068736865279905605,
+  "base_inference_latency_sync": 82.20262451171875,
+  "base_inference_latency_async": 79.88781929016113,
+  "base_inference_throughput_sync": 0.012165061711106812,
+  "base_inference_throughput_async": 0.012517552849551352,
   "smashed_current_gpu_type": "NVIDIA A100-PCIE-40GB",
-  "smashed_current_gpu_total_memory": 40339.3125
+  "smashed_current_gpu_total_memory": 40339.3125,
+  "smashed_token_generation_latency_sync": 177.8312194824219,
+  "smashed_token_generation_latency_async": 178.8266021758318,
+  "smashed_token_generation_throughput_sync": 0.0056233095792206905,
+  "smashed_token_generation_throughput_async": 0.005592009174433382,
+  "smashed_token_generation_CO2_emissions": 5.6607409622391035e-05,
+  "smashed_token_generation_energy_consumption": 0.019573642151998485,
+  "smashed_inference_latency_sync": 185.82732849121095,
+  "smashed_inference_latency_async": 144.77639198303223,
+  "smashed_inference_throughput_sync": 0.0053813398067943325,
+  "smashed_inference_throughput_async": 0.0069072034901740045
 }
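The new `results.json` reports each metric twice, once under a `base_` prefix and once under a `smashed_` prefix, so the two runs can be compared key by key. The sketch below is only an illustration of that comparison, not part of the commit: the helper name `smashed_over_base` is hypothetical, the values are a subset copied from the diff, and the file does not state units, so only unit-free ratios are computed.

```python
import json

# Subset of the values from the new results.json in this commit.
# Units are not stated in the file, so only ratios are derived here.
RESULTS_JSON = """
{
  "base_token_generation_latency_sync": 83.62196044921875,
  "base_token_generation_throughput_sync": 0.011958581150549224,
  "base_token_generation_energy_consumption": 0.0068736865279905605,
  "smashed_token_generation_latency_sync": 177.8312194824219,
  "smashed_token_generation_throughput_sync": 0.0056233095792206905,
  "smashed_token_generation_energy_consumption": 0.019573642151998485
}
"""


def smashed_over_base(results):
    """Return {metric: smashed / base} for numeric metrics present under both prefixes.

    Hypothetical helper for illustration; it is not part of any library.
    """
    ratios = {}
    for key, base_value in results.items():
        if not key.startswith("base_"):
            continue
        metric = key[len("base_"):]
        smashed_value = results.get("smashed_" + metric)
        both_numeric = isinstance(base_value, (int, float)) and isinstance(
            smashed_value, (int, float)
        )
        # Skip string fields (e.g. GPU type) and avoid dividing by zero.
        if both_numeric and base_value != 0:
            ratios[metric] = smashed_value / base_value
    return ratios


ratios = smashed_over_base(json.loads(RESULTS_JSON))
for metric, ratio in sorted(ratios.items()):
    print(f"{metric}: smashed/base = {ratio:.3f}")
```

A latency or energy ratio above 1 means the smashed model measured higher than the base model in this particular run; a throughput ratio below 1 says the same thing from the other direction.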

smash_config.json

CHANGED

@@ -14,7 +14,7 @@
   "controlnet": "None",
   "unet_dim": 4,
   "device": "cuda",
-  "cache_dir": "/ceph/hdd/staff/charpent/.cache/
+  "cache_dir": "/ceph/hdd/staff/charpent/.cache/modelstj7ks5m6",
   "batch_size": 1,
   "model_name": "mattshumer/Llama-3-8B-16K",
   "task": "text_text_generation",