Update README.md


README.md

CHANGED

|

@@ -69,7 +69,7 @@ Same process applies. Usually, it is best to do a sliding window over the user a

|

|

| 69 |

## Evaluation Metrics

|

| 70 |

The model was evaluated using EleutherAI's [lm-evaluation-harness](https://github.com/EleutherAI/lm-evaluation-harness) test suite. It was evaluated on the following tasks:

|

| 71 |

|

| 72 |

-

|

| 73 |

| Task |Version| Metric |Value | |Stderr|

|

| 74 |

|-------------|------:|--------|-----:|---|-----:|

|

| 75 |

|anli_r1 | 0|acc |0.3310|± |0.0149|

|

|

@@ -95,7 +95,6 @@ The model was evaluated using EleutherAI's [lm-evaluation-harness](https://githu

|

|

| 95 |

|winogrande | 0|acc |0.5675|± |0.0139|

|

| 96 |

|wsc | 0|acc |0.3654|± |0.0474|

|

| 97 |

|

| 98 |

-

```

|

| 99 |

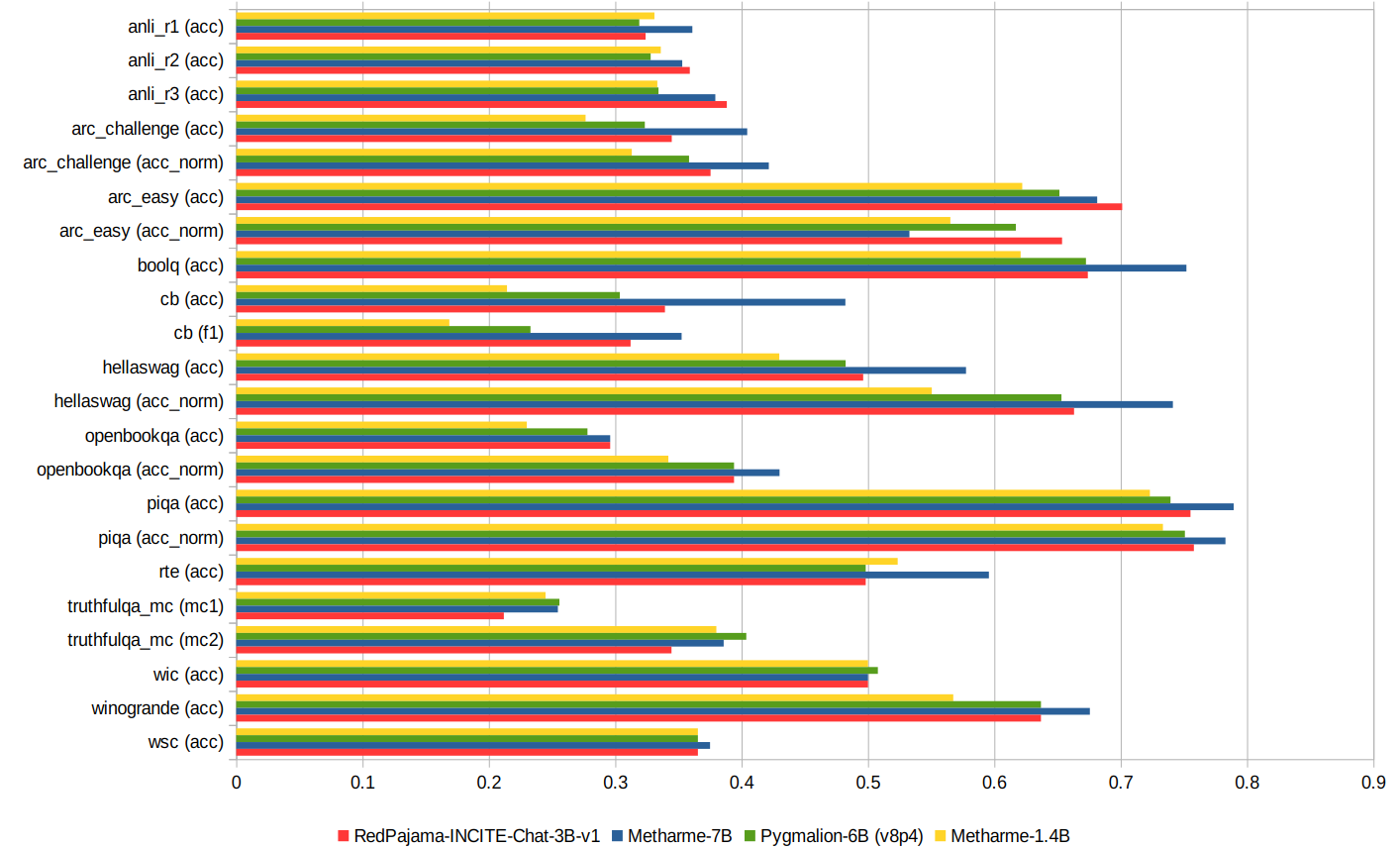

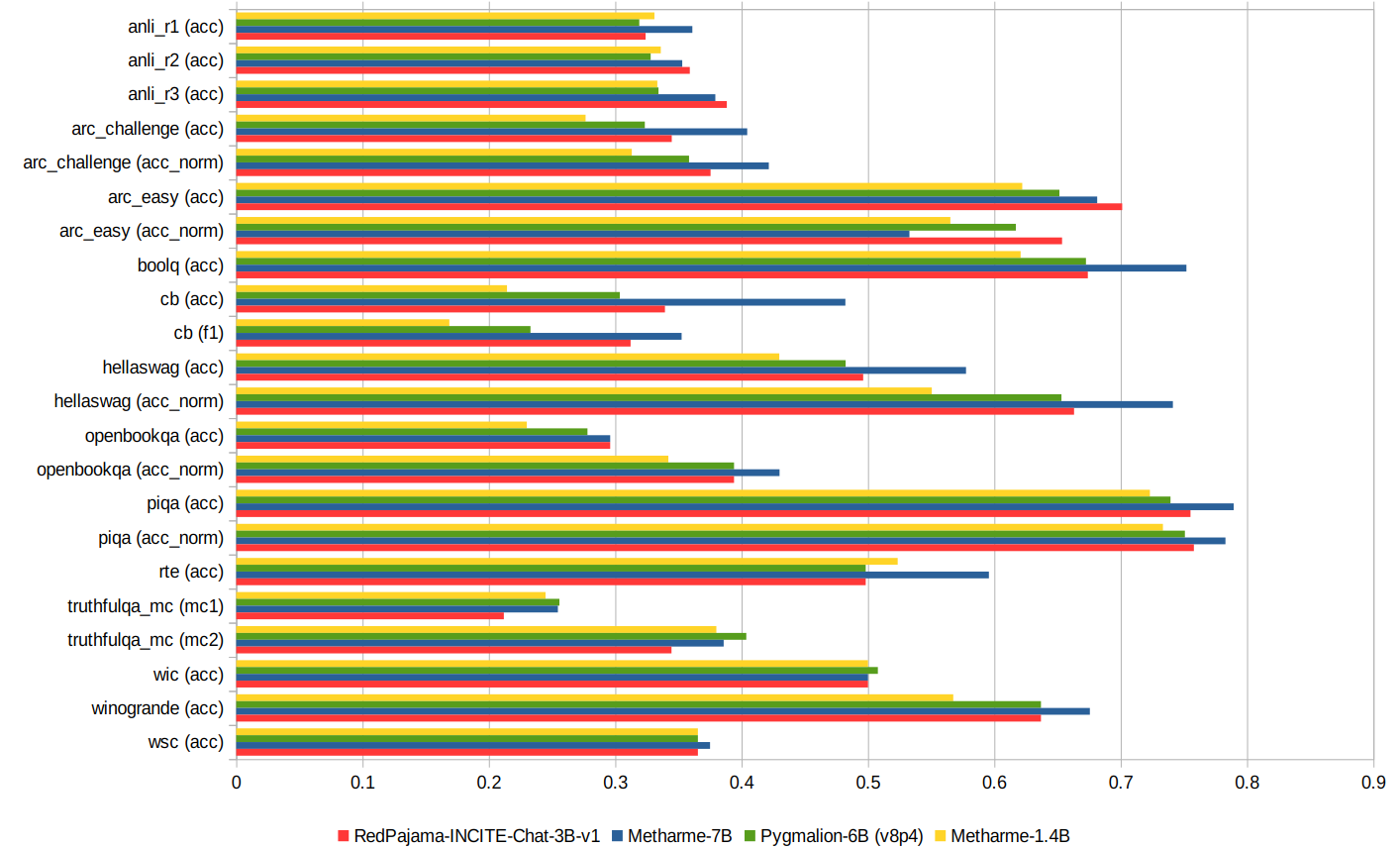

Illustrated comparison of Metharme-1.3B's performance on benchmarks to Pygmalion-6B, Metharme-7B, and [RedPajama-INCITE-Chat-3B-v1](https://huggingface.co/togethercomputer/RedPajama-INCITE-Chat-3B-v1):

|

| 100 |

|

| 101 |

|

|

|

|

## Evaluation Metrics

The model was evaluated with EleutherAI's [lm-evaluation-harness](https://github.com/EleutherAI/lm-evaluation-harness) test suite on the following tasks:

| Task        |Version| Metric |Value |   |Stderr|
|-------------|------:|--------|-----:|---|-----:|
|anli_r1      |      0|acc     |0.3310|±  |0.0149|
| …           |       |        |      |   |      |
|winogrande   |      0|acc     |0.5675|±  |0.0139|
|wsc          |      0|acc     |0.3654|±  |0.0474|
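Each `Value` is paired with a standard error in the `Stderr` column. Here is a minimal sketch, assuming a normal approximation (an assumption of this example, not something the table states). It parses the rows visible in this excerpt and converts each `Value ± Stderr` pair into an approximate 95% confidence interval. The `Result` type and the `parse_row` and `ci95` helpers are hypothetical names introduced for illustration only. They are not part of lm-evaluation-harness.

```python
from typing import NamedTuple

# Rows copied from the table above (only the rows visible in this excerpt).
ROWS = """\
|anli_r1 | 0|acc |0.3310|± |0.0149|
|winogrande | 0|acc |0.5675|± |0.0139|
|wsc | 0|acc |0.3654|± |0.0474|
"""

# Assumption: normal approximation, so the interval is value ± 1.96 * stderr.
Z_95 = 1.96  # two-sided 95% quantile of the standard normal distribution


class Result(NamedTuple):
    task: str
    metric: str
    value: float
    stderr: float


def parse_row(line: str) -> Result:
    """Parse one harness-style markdown table row (hypothetical helper)."""
    # Drop the outer pipes, split on the inner ones, and trim the padding.
    cells = [c.strip() for c in line.strip().strip("|").split("|")]
    task, _version, metric, value, _plus_minus, stderr = cells
    return Result(task, metric, float(value), float(stderr))


def ci95(r: Result) -> tuple[float, float]:
    """Approximate 95% interval under the normal-approximation assumption."""
    half_width = Z_95 * r.stderr
    return (r.value - half_width, r.value + half_width)


if __name__ == "__main__":
    for line in ROWS.splitlines():
        r = parse_row(line)
        lo, hi = ci95(r)
        print(f"{r.task:<10} {r.metric} {r.value:.4f}  95% CI [{lo:.4f}, {hi:.4f}]")
```

Running the script prints one line per task. A wider interval, as for `wsc`, simply reflects its larger `Stderr`.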

|

| 97 |

|

|

|

|

| 98 |

Illustrated comparison of Metharme-1.3B's performance on benchmarks to Pygmalion-6B, Metharme-7B, and [RedPajama-INCITE-Chat-3B-v1](https://huggingface.co/togethercomputer/RedPajama-INCITE-Chat-3B-v1):

|

| 99 |

|

| 100 |

|