Quantization made by Richard Erkhov.

[Github](https://github.com/RichardErkhov)

[Discord](https://discord.gg/pvy7H8DZMG)

[Request more models](https://github.com/RichardErkhov/quant_request)

llama3-8B-DarkIdol-2.1-Uncensored-32K - GGUF
- Model creator: https://huggingface.co/aifeifei798/
- Original model: https://huggingface.co/aifeifei798/llama3-8B-DarkIdol-2.1-Uncensored-32K/

| Name | Quant method | Size |
| ---- | ---- | ---- |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q2_K.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q2_K.gguf) | Q2_K | 2.96GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ3_XS.gguf) | IQ3_XS | 3.28GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ3_S.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ3_S.gguf) | IQ3_S | 3.43GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q3_K_S.gguf) | Q3_K_S | 3.41GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ3_M.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ3_M.gguf) | IQ3_M | 3.52GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q3_K.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q3_K.gguf) | Q3_K | 3.74GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q3_K_M.gguf) | Q3_K_M | 3.74GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q3_K_L.gguf) | Q3_K_L | 4.03GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ4_XS.gguf) | IQ4_XS | 4.18GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_0.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_0.gguf) | Q4_0 | 4.34GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.IQ4_NL.gguf) | IQ4_NL | 4.38GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_K_S.gguf) | Q4_K_S | 4.37GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_K.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_K.gguf) | Q4_K | 4.58GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_K_M.gguf) | Q4_K_M | 4.58GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_1.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q4_1.gguf) | Q4_1 | 4.78GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_0.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_0.gguf) | Q5_0 | 5.21GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_K_S.gguf) | Q5_K_S | 5.21GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_K.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_K.gguf) | Q5_K | 5.34GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_K_M.gguf) | Q5_K_M | 5.34GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_1.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q5_1.gguf) | Q5_1 | 5.65GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q6_K.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q6_K.gguf) | Q6_K | 6.14GB |
| [llama3-8B-DarkIdol-2.1-Uncensored-32K.Q8_0.gguf](https://huggingface.co/RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K.Q8_0.gguf) | Q8_0 | 7.95GB |
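
The table lists only file sizes. The sketch below gives a rough way to choose a file: it picks the largest quant that fits a memory budget. It treats the file size as a lower bound on memory use and reserves an arbitrary 1 GB of headroom for the context cache and runtime overhead. The `download_url` helper assumes Hugging Face's usual `resolve/main` path for direct downloads; the table links use `blob/main`, which opens the file page instead. All helper names are hypothetical.

```python
from typing import Optional

# Sizes in GB, copied from the quant table above; names here are illustrative.
REPO_ID = "RichardErkhov/aifeifei798_-_llama3-8B-DarkIdol-2.1-Uncensored-32K-gguf"
BASE_NAME = "llama3-8B-DarkIdol-2.1-Uncensored-32K"
QUANT_SIZES_GB = {
    "Q2_K": 2.96, "IQ3_XS": 3.28, "IQ3_S": 3.43, "Q3_K_S": 3.41,
    "IQ3_M": 3.52, "Q3_K": 3.74, "Q3_K_M": 3.74, "Q3_K_L": 4.03,
    "IQ4_XS": 4.18, "Q4_0": 4.34, "IQ4_NL": 4.38, "Q4_K_S": 4.37,
    "Q4_K": 4.58, "Q4_K_M": 4.58, "Q4_1": 4.78, "Q5_0": 5.21,
    "Q5_K_S": 5.21, "Q5_K": 5.34, "Q5_K_M": 5.34, "Q5_1": 5.65,
    "Q6_K": 6.14, "Q8_0": 7.95,
}


def gguf_filename(quant: str) -> str:
    """File name following the naming pattern used in the table."""
    return f"{BASE_NAME}.{quant}.gguf"


def download_url(quant: str) -> str:
    """Direct-download URL (assumes the standard 'resolve/main' path)."""
    return f"https://huggingface.co/{REPO_ID}/resolve/main/{gguf_filename(quant)}"


def pick_quant(budget_gb: float, headroom_gb: float = 1.0) -> Optional[str]:
    """Largest quant whose file fits in budget_gb minus an assumed headroom.

    Equal sizes are tie-broken by name, so the choice between aliases of the
    same size (e.g. Q4_K vs Q4_K_M) is arbitrary.
    """
    usable = budget_gb - headroom_gb
    fitting = [(size, quant) for quant, size in QUANT_SIZES_GB.items() if size <= usable]
    return max(fitting)[1] if fitting else None


print(pick_quant(6.0))  # Q4_1
print(download_url("Q4_K_M"))
```

If the `huggingface_hub` package is installed, `hf_hub_download(repo_id=REPO_ID, filename=gguf_filename("Q4_K_M"))` fetches the same file into the local cache.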

Original model description:
---
license: llama3
language:
- en
- ja
- zh
tags:
- roleplay
- llama3
- sillytavern
- idol
---
# Special Thanks:
- Lewdiculous, for the superb GGUF versions; thank you for your conscientious and responsible dedication.
- https://huggingface.co/LWDCLS/llama3-8B-DarkIdol-2.1-Uncensored-32K-GGUF-IQ-Imatrix-Request

# Fast quantizations
- The difference from normal quantizations is that I quantize the output and embedding tensors to f16 and the other tensors to q5_k, q6_k or q8_0. This creates models that are little or not at all degraded and have a smaller size. They run at about 3-6 tokens/s on CPU only using llama.cpp, and faster on computers with powerful GPUs.
- https://huggingface.co/ZeroWw/llama3-8B-DarkIdol-2.1-Uncensored-32K-GGUF
- More models here: https://huggingface.co/RobertSinclair
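
The sketch below illustrates the mixed-precision scheme described above. It maps a tensor name to the type that tensor would receive, and it estimates how much space the two f16 tensors take in a Llama-3-8B-shaped model. It assumes llama.cpp's GGUF tensor names for the Llama architecture (`token_embd.weight`, `output.weight`) and the public Llama-3-8B shapes: a 128,256-token vocabulary, a 4,096-dimensional hidden size, and untied embedding and output matrices. `tensor_type` and `f16_tensor_bytes` are hypothetical helpers. The source does not say which tool produced these files. Recent llama.cpp `llama-quantize` builds do expose `--output-tensor-type` and `--token-embedding-type` options for this kind of per-tensor override.

```python
# Public Llama-3-8B shapes (assumed unchanged by the merge).
VOCAB_SIZE = 128_256
HIDDEN_SIZE = 4_096

# GGUF tensor names (llama.cpp, Llama architecture) kept at f16 in this scheme.
F16_TENSORS = {"token_embd.weight", "output.weight"}


def tensor_type(name: str, body_type: str = "q6_k") -> str:
    """Type a tensor would get: f16 for embedding/output, body_type otherwise."""
    return "f16" if name in F16_TENSORS else body_type


def f16_tensor_bytes() -> int:
    """Bytes used by token_embd + output when stored at f16 (2 bytes/weight)."""
    weights_per_tensor = VOCAB_SIZE * HIDDEN_SIZE  # each is a vocab x hidden matrix
    return len(F16_TENSORS) * weights_per_tensor * 2


print(tensor_type("output.weight"))        # f16
print(tensor_type("blk.0.attn_q.weight"))  # q6_k
print(f16_tensor_bytes() / 1e9)            # about 2.1 GB
```

By this estimate, about 2.1 GB of each such file is the two f16 tensors. These files should therefore be larger than a uniform quant that uses the same body type.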

# Model Description:
The combination of merged models has been readjusted to better fit various roles, and the model has been adapted for mobile phones.
- Saving money (Llama 3)
- Uncensored
- Quick response
- The base model is winglian/Llama-3-8b-64k-PoSE (it theoretically supports 64K context, but I have only tested up to 32K :)
- Scholarly, thesis-like responses (I tend to write songs extensively, to the point where a single song is almost as detailed as a thesis :)
- DarkIdol: roles you can imagine, and roles you cannot.
- Roleplay
- Specialized in various role-playing scenarios
- For more, see the test roles: https://huggingface.co/aifeifei798/llama3-8B-DarkIdol-1.2/tree/main/test
- For more, see the LM Studio presets: https://huggingface.co/aifeifei798/llama3-8B-DarkIdol-1.2/tree/main/config-presets
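
A long context costs memory on top of the model file. The estimate below assumes the merge keeps the Llama-3-8B attention shapes (32 layers, 8 key/value heads under grouped-query attention, head dimension 128) and uses an f16 KV cache. With those assumptions, the cache size for a given context length can be computed as follows. `kv_cache_bytes` is a hypothetical helper, and real runtimes allocate further buffers on top of this.

```python
# Llama-3-8B attention shapes (public model config), assumed unchanged by the merge.
N_LAYERS = 32
N_KV_HEADS = 8   # grouped-query attention: 8 KV heads shared by 32 query heads
HEAD_DIM = 128   # 4096 hidden / 32 attention heads


def kv_cache_bytes(n_ctx: int, bytes_per_elem: int = 2) -> int:
    """One K and one V vector per layer, KV head and token (f16 = 2 bytes)."""
    return 2 * N_LAYERS * N_KV_HEADS * HEAD_DIM * n_ctx * bytes_per_elem


for n_ctx in (8_192, 32_768, 65_536):
    print(n_ctx, kv_cache_bytes(n_ctx) / 2**30, "GiB")  # 1.0, 4.0, 8.0 GiB
```

At the tested 32K context, this comes to about 4 GiB, which is comparable to the Q4 files themselves. Runtimes that support a quantized KV cache can reduce this figure.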

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

|

| 80 |

+

# Chang Log

|

| 81 |

+

### 2024-06-26

|

| 82 |

+

- 32k

|

| 83 |

+

### 2024-06-26

|

| 84 |

+

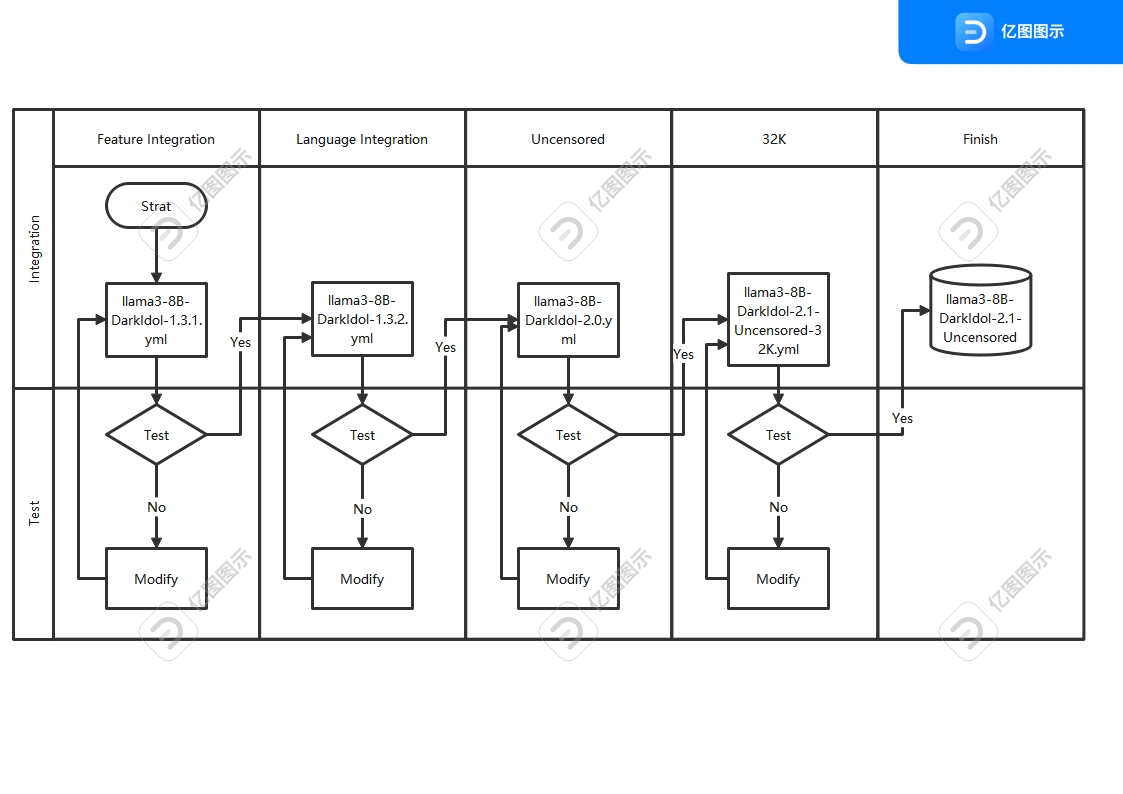

- 之前版本的迭代太多了,已经开始出现过拟合现象.重新使用了新的工艺重新制作模型,虽然制作复杂了,结果很好,新的迭代工艺如图

|

| 85 |

+

- The previous version had undergone excessive iterations, resulting in overfitting. We have recreated the model using a new process, which, although more complex to produce, has yielded excellent results. The new iterative process is depicted in the figure.

|

| 86 |

+

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

# Questions

|

| 90 |

+

- The model's response results are for reference only, please do not fully trust them.

|

| 91 |

+

- I am unable to test Japanese and Korean parts very well. Based on my testing, Korean performs excellently, but sometimes Japanese may have furigana (if anyone knows a good Japanese language module, - I need to replace the module for integration).

|

| 92 |

+

- With the new manufacturing process, overfitting and crashes have been reduced, but there may be new issues, so please leave a message if you encounter any.

|

| 93 |

+

- testing with other tools is not comprehensive.but there may be new issues, so please leave a message if you encounter any.

|

| 94 |

+

- The range between 32K and 64K was not tested, and the approach was somewhat casual. I didn't expect the results to be exceptionally good.

|

| 95 |

+

|

# Stop Strings
```python
# Generation stops as soon as the model emits any of these strings.
stop = [
    "## Instruction:",
    "### Instruction:",
    "<|end_of_text|>",
    " //:",
    "</s>",
    "<3```",
    "### Note:",
    "### Input:",
    "### Response:",
    "### Emoticons:",
]
```
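
Runtimes such as llama.cpp apply stop strings during generation. In llama-cpp-python, `create_completion` accepts them through its `stop` parameter. The sketch below shows the intended effect on text that has already been generated, assuming the earliest match wins. `truncate_at_stop` is a hypothetical helper and is not part of any of the tools listed here.

```python
from typing import Iterable


def truncate_at_stop(text: str, stop: Iterable[str]) -> str:
    """Return text up to (not including) the earliest occurrence of any stop string."""
    cut = len(text)
    for s in stop:
        idx = text.find(s)
        if idx != -1 and idx < cut:
            cut = idx
    return text[:cut]


reply = "Sure, here is the song.\n### Instruction: write another"
# "## Instruction:" also matches one character later, inside "### Instruction:";
# the earliest match wins, so the whole header is removed.
print(repr(truncate_at_stop(reply, ["## Instruction:", "### Instruction:", "</s>"])))
# 'Sure, here is the song.\n'
```

Because overlapping entries like `## Instruction:` and `### Instruction:` both appear in the list, resolving to the earliest position avoids leaving a stray `#` at the end of the reply.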

# Model Use
- KoboldCpp https://github.com/LostRuins/koboldcpp
- Since KoboldCpp is taking a while to update with the latest llama.cpp commits, I recommend this [fork](https://github.com/Nexesenex/kobold.cpp) if anyone has issues.
- LM Studio https://lmstudio.ai/
- llama.cpp https://github.com/ggerganov/llama.cpp
- Backyard AI https://backyard.ai/
- Meet Layla: Layla is an AI chatbot that runs offline on your device. No internet connection required. No censorship. Complete privacy. Layla Lite https://www.layla-network.ai/
- Layla Lite llama3-8B-DarkIdol-2.1-Uncensored-32K-Q4_K_S-imat.gguf https://huggingface.co/LWDCLS/llama3-8B-DarkIdol-2.1-Uncensored-32K/blob/main/llama3-8B-DarkIdol-2.1-Uncensored-32K-Q4_K_S-imat.gguf?download=true
- More GGUF files: https://huggingface.co/LWDCLS/llama3-8B-DarkIdol-2.1-Uncensored-32K-GGUF-IQ-Imatrix-Request
# Characters
- https://character-tavern.com/
- https://characterhub.org/
- https://pygmalion.chat/
- https://aetherroom.club/
- https://backyard.ai/
- Layla AI chatbot
### If you want to use vision functionality:
* You must use the latest version of [KoboldCpp](https://github.com/Nexesenex/kobold.cpp).

### To use the multimodal capabilities of this model and use **vision**, you need to load the specified **mmproj** file: [Llava MMProj](https://huggingface.co/Nitral-AI/Llama-3-Update-3.0-mmproj-model-f16)

* You can load the **mmproj** by using the corresponding section in the interface.

### Thank you:
To the authors for their hard work, which has given me more options to easily create what I want. Thank you for your efforts.
- Hastagaras
- Gryphe
- cgato
- ChaoticNeutrals
- mergekit
- merge
- transformers
- llama
- Nitral-AI
- MLP-KTLim
- rinna
- hfl
- Rupesh2
- stephenlzc
- theprint
- Sao10K
- turboderp
- TheBossLevel123
- winglian
- .........

---
base_model:
- Nitral-AI/Hathor_Fractionate-L3-8B-v.05
- Hastagaras/Jamet-8B-L3-MK.V-Blackroot
- turboderp/llama3-turbcat-instruct-8b
- aifeifei798/Meta-Llama-3-8B-Instruct
- Sao10K/L3-8B-Stheno-v3.3-32K
- TheBossLevel123/Llama3-Toxic-8B-Float16
- cgato/L3-TheSpice-8b-v0.8.3
library_name: transformers
tags:
- mergekit
- merge

---
# llama3-8B-DarkIdol-1.3.1

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details
### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using [aifeifei798/Meta-Llama-3-8B-Instruct](https://huggingface.co/aifeifei798/Meta-Llama-3-8B-Instruct) as a base.
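
Model Stock computes an interpolation ratio between the average of the fine-tuned models and the base, using the angle between their weight offsets. Below is a minimal per-tensor sketch of the rule from the paper, written in plain Python. Given N fine-tuned weight vectors w_i and base weights w_0, it sets t = N cos θ / (1 + (N - 1) cos θ) and returns t · w_avg + (1 - t) · w_0, where w_avg is the mean of the w_i. This sketch estimates cos θ as the mean pairwise cosine between the offsets w_i - w_0; that estimate is an assumption. mergekit's `model_stock` implementation may differ in details, for example in how it treats a base model that also appears under `models`. The helper names are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def _cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    return dot / math.sqrt(sum(x * x for x in a) * sum(y * y for y in b))


def model_stock(base: Vector, finetuned: List[Vector]) -> Vector:
    """Merge one flattened tensor using the Model Stock interpolation ratio."""
    n = len(finetuned)
    if n < 2:
        raise ValueError("Model Stock needs at least two fine-tuned models")
    # Task vectors: how far each fine-tuned model moved away from the base.
    deltas = [[w - b for w, b in zip(ft, base)] for ft in finetuned]
    pairs = [(i, j) for i in range(n) for j in range(i + 1, n)]
    cos_theta = sum(_cosine(deltas[i], deltas[j]) for i, j in pairs) / len(pairs)
    # Interpolation ratio t = N cos(theta) / (1 + (N - 1) cos(theta)).
    t = n * cos_theta / (1 + (n - 1) * cos_theta)
    w_avg = [sum(col) / n for col in zip(*finetuned)]
    return [t * a + (1 - t) * b for a, b in zip(w_avg, base)]


# Orthogonal task vectors (cos = 0) give t = 0: fall back to the base.
print(model_stock([0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]))  # [0.0, 0.0]
# Identical task vectors (cos = 1) give t = 1: the plain average.
print(model_stock([0.0, 0.0], [[2.0, 2.0], [2.0, 2.0]]))  # [2.0, 2.0]
```

The two extremes show the intent. When the fine-tuned models disagree, the merge stays closer to the base. When they agree, the merge moves toward their average.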

### Models Merged

The following models were included in the merge:
* [Nitral-AI/Hathor_Fractionate-L3-8B-v.05](https://huggingface.co/Nitral-AI/Hathor_Fractionate-L3-8B-v.05)
* [Hastagaras/Jamet-8B-L3-MK.V-Blackroot](https://huggingface.co/Hastagaras/Jamet-8B-L3-MK.V-Blackroot)
* [turboderp/llama3-turbcat-instruct-8b](https://huggingface.co/turboderp/llama3-turbcat-instruct-8b)
* [Sao10K/L3-8B-Stheno-v3.3-32K](https://huggingface.co/Sao10K/L3-8B-Stheno-v3.3-32K)
* [TheBossLevel123/Llama3-Toxic-8B-Float16](https://huggingface.co/TheBossLevel123/Llama3-Toxic-8B-Float16)
* [cgato/L3-TheSpice-8b-v0.8.3](https://huggingface.co/cgato/L3-TheSpice-8b-v0.8.3)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
- model: Sao10K/L3-8B-Stheno-v3.3-32K
- model: Hastagaras/Jamet-8B-L3-MK.V-Blackroot
- model: cgato/L3-TheSpice-8b-v0.8.3
- model: Nitral-AI/Hathor_Fractionate-L3-8B-v.05
- model: TheBossLevel123/Llama3-Toxic-8B-Float16
- model: turboderp/llama3-turbcat-instruct-8b
- model: aifeifei798/Meta-Llama-3-8B-Instruct
merge_method: model_stock
base_model: aifeifei798/Meta-Llama-3-8B-Instruct
dtype: bfloat16
```

---
base_model:
- hfl/llama-3-chinese-8b-instruct-v3
- rinna/llama-3-youko-8b
- MLP-KTLim/llama-3-Korean-Bllossom-8B
library_name: transformers
tags:
- mergekit
- merge

---
# llama3-8B-DarkIdol-1.3.2

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details
### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using ./llama3-8B-DarkIdol-1.3.1 as a base.

### Models Merged

The following models were included in the merge:
* [hfl/llama-3-chinese-8b-instruct-v3](https://huggingface.co/hfl/llama-3-chinese-8b-instruct-v3)
* [rinna/llama-3-youko-8b](https://huggingface.co/rinna/llama-3-youko-8b)
* [MLP-KTLim/llama-3-Korean-Bllossom-8B](https://huggingface.co/MLP-KTLim/llama-3-Korean-Bllossom-8B)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
- model: hfl/llama-3-chinese-8b-instruct-v3
- model: rinna/llama-3-youko-8b
- model: MLP-KTLim/llama-3-Korean-Bllossom-8B
- model: ./llama3-8B-DarkIdol-1.3.1
merge_method: model_stock
base_model: ./llama3-8B-DarkIdol-1.3.1
dtype: bfloat16
```

---
base_model:
- theprint/Llama-3-8B-Lexi-Smaug-Uncensored
- Rupesh2/OrpoLlama-3-8B-instruct-uncensored
- stephenlzc/dolphin-llama3-zh-cn-uncensored
library_name: transformers
tags:
- mergekit
- merge

---
# llama3-8B-DarkIdol-2.0-Uncensored

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details
### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using ./llama3-8B-DarkIdol-1.3.2 as a base.

### Models Merged

The following models were included in the merge:
* [theprint/Llama-3-8B-Lexi-Smaug-Uncensored](https://huggingface.co/theprint/Llama-3-8B-Lexi-Smaug-Uncensored)
* [Rupesh2/OrpoLlama-3-8B-instruct-uncensored](https://huggingface.co/Rupesh2/OrpoLlama-3-8B-instruct-uncensored)
* [stephenlzc/dolphin-llama3-zh-cn-uncensored](https://huggingface.co/stephenlzc/dolphin-llama3-zh-cn-uncensored)

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
- model: Rupesh2/OrpoLlama-3-8B-instruct-uncensored
- model: stephenlzc/dolphin-llama3-zh-cn-uncensored
- model: theprint/Llama-3-8B-Lexi-Smaug-Uncensored
- model: ./llama3-8B-DarkIdol-1.3.2
merge_method: model_stock
base_model: ./llama3-8B-DarkIdol-2.0-Uncensored
dtype: bfloat16
```

---
base_model:
- winglian/Llama-3-8b-64k-PoSE
library_name: transformers
tags:
- mergekit
- merge

---
# llama3-8B-DarkIdol-2.1-Uncensored-32K

This is a merge of pre-trained language models created using [mergekit](https://github.com/cg123/mergekit).

## Merge Details
### Merge Method

This model was merged using the [Model Stock](https://arxiv.org/abs/2403.19522) merge method using [winglian/Llama-3-8b-64k-PoSE](https://huggingface.co/winglian/Llama-3-8b-64k-PoSE) as a base.

### Models Merged

The following models were included in the merge:
* ./llama3-8B-DarkIdol-1.3.2
* ./llama3-8B-DarkIdol-2.0
* ./llama3-8B-DarkIdol-1.3.1

### Configuration

The following YAML configuration was used to produce this model:

```yaml
models:
- model: ./llama3-8B-DarkIdol-1.3.1
- model: ./llama3-8B-DarkIdol-1.3.2
- model: ./llama3-8B-DarkIdol-2.0
- model: winglian/Llama-3-8b-64k-PoSE
merge_method: model_stock
base_model: winglian/Llama-3-8b-64k-PoSE
dtype: bfloat16
```
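
The merge recipes in this card build on each other. The 1.3.2, 2.0 and 2.1 configurations reference earlier merge outputs by local path (`./llama3-8B-DarkIdol-1.3.1` and so on), so the recipes have to run in dependency order. Below is a minimal sketch using Python's standard `graphlib` module (Python 3.9+). The dependencies come from the `models` lists. The sketch assumes that `./llama3-8B-DarkIdol-2.0` refers to the llama3-8B-DarkIdol-2.0-Uncensored output, and it omits Hugging Face-hosted inputs because they need no build step. `LOCAL_DEPS` and `build_order` are hypothetical names.

```python
from graphlib import TopologicalSorter

# Local merge outputs each recipe consumes (taken from the `models` lists).
# Assumption: "./llama3-8B-DarkIdol-2.0" is the 2.0-Uncensored output.
LOCAL_DEPS = {
    "llama3-8B-DarkIdol-1.3.1": set(),
    "llama3-8B-DarkIdol-1.3.2": {"llama3-8B-DarkIdol-1.3.1"},
    "llama3-8B-DarkIdol-2.0": {"llama3-8B-DarkIdol-1.3.2"},
    "llama3-8B-DarkIdol-2.1-Uncensored-32K": {
        "llama3-8B-DarkIdol-1.3.1",
        "llama3-8B-DarkIdol-1.3.2",
        "llama3-8B-DarkIdol-2.0",
    },
}


def build_order(deps):
    """Order recipes so that every merge runs after the outputs it needs."""
    return list(TopologicalSorter(deps).static_order())


for step, name in enumerate(build_order(LOCAL_DEPS), start=1):
    print(step, name)
```

Each step would then be one mergekit run of the corresponding YAML (for example via its `mergekit-yaml` command), writing to the local path that the next recipe expects.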
