Commit 4a73a5b

Parent(s): 2aa6eae

uploaded readme

README.md ADDED

@@ -0,0 +1,111 @@

| 1 |

+

Quantization made by Richard Erkhov.

|

| 2 |

+

|

| 3 |

+

[Github](https://github.com/RichardErkhov)

|

| 4 |

+

|

| 5 |

+

[Discord](https://discord.gg/pvy7H8DZMG)

|

| 6 |

+

|

| 7 |

+

[Request more models](https://github.com/RichardErkhov/quant_request)

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

Yarn-Mistral-7b-128k-sharded - GGUF

|

| 11 |

+

- Model creator: https://huggingface.co/yanismiraoui/

|

| 12 |

+

- Original model: https://huggingface.co/yanismiraoui/Yarn-Mistral-7b-128k-sharded/

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

| Name | Quant method | Size |

|

| 16 |

+

| ---- | ---- | ---- |

|

| 17 |

+

| [Yarn-Mistral-7b-128k-sharded.Q2_K.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q2_K.gguf) | Q2_K | 2.53GB |

|

| 18 |

+

| [Yarn-Mistral-7b-128k-sharded.IQ3_XS.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.IQ3_XS.gguf) | IQ3_XS | 0.34GB |

|

| 19 |

+

| [Yarn-Mistral-7b-128k-sharded.IQ3_S.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.IQ3_S.gguf) | IQ3_S | 0.05GB |

|

| 20 |

+

| [Yarn-Mistral-7b-128k-sharded.Q3_K_S.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q3_K_S.gguf) | Q3_K_S | 0.03GB |

|

| 21 |

+

| [Yarn-Mistral-7b-128k-sharded.IQ3_M.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.IQ3_M.gguf) | IQ3_M | 0.0GB |

|

| 22 |

+

| [Yarn-Mistral-7b-128k-sharded.Q3_K.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q3_K.gguf) | Q3_K | 0.0GB |

|

| 23 |

+

| [Yarn-Mistral-7b-128k-sharded.Q3_K_M.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q3_K_M.gguf) | Q3_K_M | 0.0GB |

|

| 24 |

+

| [Yarn-Mistral-7b-128k-sharded.Q3_K_L.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q3_K_L.gguf) | Q3_K_L | 0.0GB |

|

| 25 |

+

| [Yarn-Mistral-7b-128k-sharded.IQ4_XS.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.IQ4_XS.gguf) | IQ4_XS | 0.0GB |

|

| 26 |

+

| [Yarn-Mistral-7b-128k-sharded.Q4_0.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q4_0.gguf) | Q4_0 | 0.0GB |

|

| 27 |

+

| [Yarn-Mistral-7b-128k-sharded.IQ4_NL.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.IQ4_NL.gguf) | IQ4_NL | 0.0GB |

|

| 28 |

+

| [Yarn-Mistral-7b-128k-sharded.Q4_K_S.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q4_K_S.gguf) | Q4_K_S | 0.0GB |

|

| 29 |

+

| [Yarn-Mistral-7b-128k-sharded.Q4_K.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q4_K.gguf) | Q4_K | 0.0GB |

|

| 30 |

+

| [Yarn-Mistral-7b-128k-sharded.Q4_K_M.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q4_K_M.gguf) | Q4_K_M | 0.0GB |

|

| 31 |

+

| [Yarn-Mistral-7b-128k-sharded.Q4_1.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q4_1.gguf) | Q4_1 | 0.0GB |

|

| 32 |

+

| [Yarn-Mistral-7b-128k-sharded.Q5_0.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q5_0.gguf) | Q5_0 | 0.0GB |

|

| 33 |

+

| [Yarn-Mistral-7b-128k-sharded.Q5_K_S.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q5_K_S.gguf) | Q5_K_S | 0.0GB |

|

| 34 |

+

| [Yarn-Mistral-7b-128k-sharded.Q5_K.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q5_K.gguf) | Q5_K | 0.0GB |

|

| 35 |

+

| [Yarn-Mistral-7b-128k-sharded.Q5_K_M.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q5_K_M.gguf) | Q5_K_M | 0.0GB |

|

| 36 |

+

| [Yarn-Mistral-7b-128k-sharded.Q5_1.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q5_1.gguf) | Q5_1 | 0.0GB |

|

| 37 |

+

| [Yarn-Mistral-7b-128k-sharded.Q6_K.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q6_K.gguf) | Q6_K | 0.0GB |

|

| 38 |

+

| [Yarn-Mistral-7b-128k-sharded.Q8_0.gguf](https://huggingface.co/RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf/blob/main/Yarn-Mistral-7b-128k-sharded.Q8_0.gguf) | Q8_0 | 0.0GB |
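
The file names in the table follow one pattern, so a single quantization can be fetched programmatically. The following is a minimal sketch, assuming the `huggingface_hub` package is installed; the helper names `gguf_filename` and `download_gguf` are hypothetical and only the repository id and file pattern come from the links above.

```python
# Repository id and base name are copied from the download links in the table.
REPO_ID = "RichardErkhov/yanismiraoui_-_Yarn-Mistral-7b-128k-sharded-gguf"
BASE_NAME = "Yarn-Mistral-7b-128k-sharded"


def gguf_filename(quant: str) -> str:
    """Build the file name for a quant method such as 'Q4_K_M' (hypothetical helper)."""
    return f"{BASE_NAME}.{quant}.gguf"


def download_gguf(quant: str) -> str:
    """Download one GGUF file and return its local cache path (hypothetical helper)."""
    # Imported lazily so the module can be imported without huggingface_hub installed.
    from huggingface_hub import hf_hub_download

    return hf_hub_download(repo_id=REPO_ID, filename=gguf_filename(quant))
```

Calling `download_gguf("Q4_K_M")` would place the file in the local Hugging Face cache. It is worth checking the downloaded file size against expectations before use.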

|

| 39 |

+

|

| 40 |

+

|

| 41 |

+

|

| 42 |

+

|

| 43 |

+

Original model description:

|

| 44 |

+

---

|

| 45 |

+

datasets:

|

| 46 |

+

- emozilla/yarn-train-tokenized-16k-mistral

|

| 47 |

+

metrics:

|

| 48 |

+

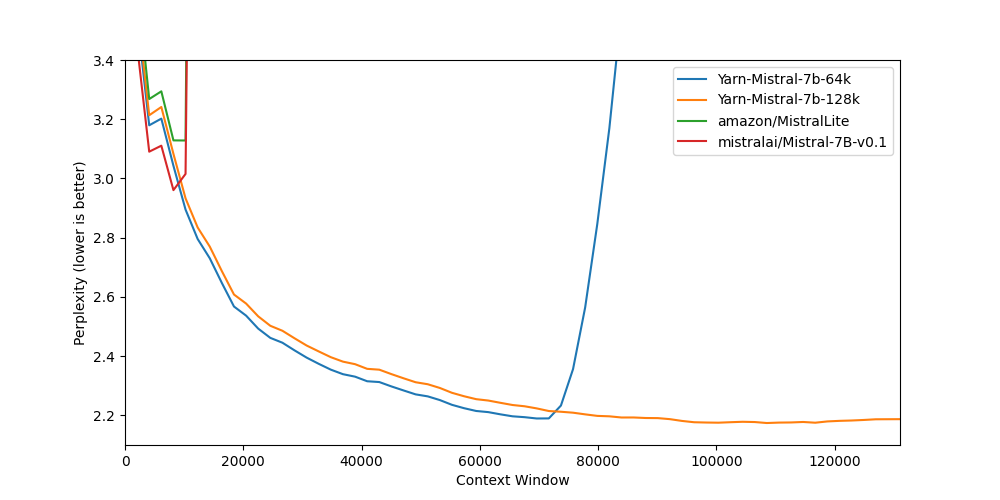

- perplexity

|

| 49 |

+

library_name: transformers

|

| 50 |

+

license: apache-2.0

|

| 51 |

+

language:

|

| 52 |

+

- en

|

| 53 |

+

---

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

## This repo contains a SHARDED version of: https://huggingface.co/NousResearch/Yarn-Mistral-7b-128k

|

| 57 |

+

|

| 58 |

+

### Huge thanks to the publishers for their amazing work, all credits go to them: https://huggingface.co/NousResearch

|

| 59 |

+

|

| 60 |

+

# Model Card: Nous-Yarn-Mistral-7b-128k

|

| 61 |

+

|

| 62 |

+

[Preprint (arXiv)](https://arxiv.org/abs/2309.00071)

|

| 63 |

+

[GitHub](https://github.com/jquesnelle/yarn)

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

## Model Description

|

| 67 |

+

|

| 68 |

+

Nous-Yarn-Mistral-7b-128k is a state-of-the-art language model for long context, further pretrained on long context data for 1500 steps using the YaRN extension method.

|

| 69 |

+

It is an extension of [Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) and supports a 128k token context window.

|

| 70 |

+

|

| 71 |

+

To use the model, pass `trust_remote_code=True` when loading it, for example:

|

| 72 |

+

|

| 73 |

+

```python

|

| 74 |

+

model = AutoModelForCausalLM.from_pretrained("NousResearch/Yarn-Mistral-7b-128k",

|

| 75 |

+

use_flash_attention_2=True,

|

| 76 |

+

torch_dtype=torch.bfloat16,

|

| 77 |

+

device_map="auto",

|

| 78 |

+

trust_remote_code=True)

|

| 79 |

+

```

|

| 80 |

+

|

| 81 |

+

In addition, you will need to use the latest version of `transformers` (until 4.35 comes out):

|

| 82 |

+

```sh

|

| 83 |

+

pip install git+https://github.com/huggingface/transformers

|

| 84 |

+

```
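
For completeness, the loading call can be combined with a tokenizer and a generation step. This is a minimal sketch, not the authors' reference script: the `from_pretrained` arguments are copied from the snippet in this README, while the function names, the prompt, and `max_new_tokens` are illustrative assumptions. It also assumes `torch`, `transformers`, and the `flash-attn` package are installed and that a compatible GPU is available.

```python
MODEL_ID = "NousResearch/Yarn-Mistral-7b-128k"


def load_model_and_tokenizer(model_id: str = MODEL_ID):
    """Load the model with the arguments shown in this README (hypothetical helper)."""
    # Heavy imports are kept local so importing this module stays cheap.
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id,
        use_flash_attention_2=True,   # as in the README; needs flash-attn
        torch_dtype=torch.bfloat16,
        device_map="auto",
        trust_remote_code=True,       # required by the model's custom code
    )
    return model, tokenizer


def generate(prompt: str, max_new_tokens: int = 64) -> str:
    """Generate a continuation for `prompt` (max_new_tokens is an assumed default)."""
    model, tokenizer = load_model_and_tokenizer()
    inputs = tokenizer(prompt, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    return tokenizer.decode(output_ids[0], skip_special_tokens=True)
```

A call such as `generate("The YaRN method extends context by")` downloads the weights on first use, so it is best run on a machine with enough disk space and GPU memory.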

|

| 85 |

+

|

| 86 |

+

## Benchmarks

|

| 87 |

+

|

| 88 |

+

Long context benchmarks:

|

| 89 |

+

| Model | Context Window | 8k PPL | 16k PPL | 32k PPL | 64k PPL | 128k PPL |

|

| 90 |

+

|-------|---------------:|------:|----------:|-----:|-----:|------------:|

|

| 91 |

+

| [Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) | 8k | 2.96 | - | - | - | - |

|

| 92 |

+

| [Yarn-Mistral-7b-64k](https://huggingface.co/NousResearch/Yarn-Mistral-7b-64k) | 64k | 3.04 | 2.65 | 2.44 | 2.20 | - |

|

| 93 |

+

| [Yarn-Mistral-7b-128k](https://huggingface.co/NousResearch/Yarn-Mistral-7b-128k) | 128k | 3.08 | 2.68 | 2.47 | 2.24 | 2.19 |
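
As a reminder of what the PPL columns measure, perplexity is the exponential of the mean negative log-likelihood per token, so lower is better. The sketch below shows that standard definition only; the exact evaluation protocol behind the table (dataset, windowing) is not stated here, and the function name is hypothetical.

```python
import math


def perplexity(token_log_probs):
    """Perplexity from per-token natural-log probabilities (hypothetical helper)."""
    if not token_log_probs:
        raise ValueError("need at least one token log-probability")
    # Mean negative log-likelihood in nats per token.
    mean_nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(mean_nll)


# A model that assigns probability 0.5 to every token has perplexity 2.
example = perplexity([math.log(0.5)] * 4)
```

Under this definition, the 128k PPL of 2.19 in the table corresponds to a mean loss of about 0.78 nats per token.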

|

| 94 |

+

|

| 95 |

+

Short context benchmarks showing that quality degradation is minimal:

|

| 96 |

+

| Model | Context Window | ARC-c | Hellaswag | MMLU | Truthful QA |

|

| 97 |

+

|-------|---------------:|------:|----------:|-----:|------------:|

|

| 98 |

+

| [Mistral-7B-v0.1](https://huggingface.co/mistralai/Mistral-7B-v0.1) | 8k | 59.98 | 83.31 | 64.16 | 42.15 |

|

| 99 |

+

| [Yarn-Mistral-7b-64k](https://huggingface.co/NousResearch/Yarn-Mistral-7b-64k) | 64k | 59.38 | 81.21 | 61.32 | 42.50 |

|

| 100 |

+

| [Yarn-Mistral-7b-128k](https://huggingface.co/NousResearch/Yarn-Mistral-7b-128k) | 128k | 58.87 | 80.58 | 60.64 | 42.46 |
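
To make the size of the degradation concrete, the differences against the Mistral-7B-v0.1 baseline can be computed directly from the table. This is a small sketch using only the scores listed above; the dictionary layout and function name are illustrative choices.

```python
# Scores copied from the short context benchmark table.
SCORES = {
    "Mistral-7B-v0.1": {"ARC-c": 59.98, "Hellaswag": 83.31, "MMLU": 64.16, "Truthful QA": 42.15},
    "Yarn-Mistral-7b-64k": {"ARC-c": 59.38, "Hellaswag": 81.21, "MMLU": 61.32, "Truthful QA": 42.50},
    "Yarn-Mistral-7b-128k": {"ARC-c": 58.87, "Hellaswag": 80.58, "MMLU": 60.64, "Truthful QA": 42.46},
}


def deltas_vs_baseline(model: str, baseline: str = "Mistral-7B-v0.1") -> dict:
    """Score difference (model minus baseline) per benchmark, rounded to 2 decimals."""
    return {
        task: round(SCORES[model][task] - SCORES[baseline][task], 2)
        for task in SCORES[baseline]
    }


deltas_128k = deltas_vs_baseline("Yarn-Mistral-7b-128k")
```

For the 128k model this gives -1.11 on ARC-c, -2.73 on Hellaswag, -3.52 on MMLU, and +0.31 on Truthful QA.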

|

| 101 |

+

|

| 102 |

+

## Collaborators

|

| 103 |

+

|

| 104 |

+

- [bloc97](https://github.com/bloc97): Methods, paper and evals

|

| 105 |

+

- [@theemozilla](https://twitter.com/theemozilla): Methods, paper, model training, and evals

|

| 106 |

+

- [@EnricoShippole](https://twitter.com/EnricoShippole): Model training

|

| 107 |

+

- [honglu2875](https://github.com/honglu2875): Paper and evals

|

| 108 |

+

|

| 109 |

+

The authors would like to thank LAION AI for their support of compute for this model.

|

| 110 |

+

It was trained on the [JUWELS](https://www.fz-juelich.de/en/ias/jsc/systems/supercomputers/juwels) supercomputer.

|

| 111 |

+

|