Update README.md


README.md

CHANGED

|

@@ -1,3 +1,118 @@

|

|

| 1 |

-

---

|

| 2 |

-

license: apache-2.0

|

| 3 |

-

---

|


| 1 |

+

---

|

| 2 |

+

license: apache-2.0

|

| 3 |

+

---

|

| 4 |

+

|

| 5 |

+

# Divot: Diffusion Powers Video Tokenizer for Comprehension and Generation

|

| 6 |

+

|

| 7 |

+

[GitHub repository](https://github.com/TencentARC/Divot)

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

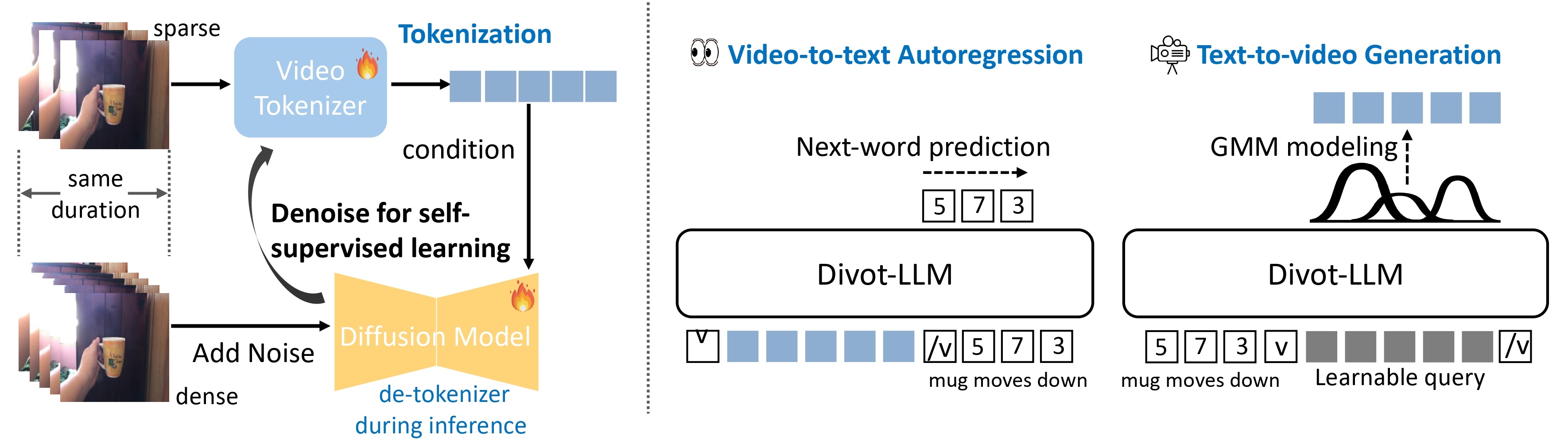

>We introduce Divot, a **Di**ffusion-Powered **V**ide**o** **T**okenizer, which leverages the diffusion process for self-supervised video representation learning. We posit that if a video diffusion model can effectively de-noise video clips by taking the features of a video tokenizer as the condition, then the tokenizer has successfully captured robust spatial and temporal information. Additionally, the video diffusion model inherently functions as a de-tokenizer, decoding videos from their representations.

|

| 11 |

+

Building upon the Divot tokenizer, we present **Divot-LLM**, which performs video comprehension through video-to-text autoregression and text-to-video generation by modeling the distributions of continuous-valued Divot features with a Gaussian Mixture Model.

|

| 12 |

+

|

| 13 |

+

All models, the inference code for Divot, and the training and inference code for Divot-LLM are released!

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

## TODOs

|

| 17 |

+

- [x] Release the pretrained tokenizer and de-tokenizer of Divot.

|

| 18 |

+

- [x] Release the pretrained and instruction-tuned models of Divot-LLM.

|

| 19 |

+

- [x] Release inference code of Divot.

|

| 20 |

+

- [x] Release training and inference code of Divot-LLM.

|

| 21 |

+

- [ ] Release training code of Divot.

|

| 22 |

+

- [ ] Release de-tokenizer adaptation training code.

|

| 23 |

+

|

| 24 |

+

## Introduction

|

| 25 |

+

|

| 26 |

+

|

| 27 |

+

We utilize the diffusion procedure to learn **a video tokenizer** in a self-supervised manner for unified comprehension and

|

| 28 |

+

generation, where the spatiotemporal representations serve as the

|

| 29 |

+

condition of a diffusion model to de-noise video clips. Additionally,

|

| 30 |

+

the proxy diffusion model functions as a **de-tokenizer** to decode

|

| 31 |

+

realistic video clips from the video representations.

|

| 32 |

+

|

| 33 |

+

After training the Divot tokenizer, video features from the tokenizer are fed into the LLM to perform next-word prediction for video comprehension, while learnable queries are input into the LLM to model the distributions of Divot features using **a Gaussian Mixture Model (GMM)** for video generation. During inference,

|

| 34 |

+

video features are sampled from the predicted GMM distribution to

|

| 35 |

+

decode videos using the de-tokenizer.
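The sampling step described above can be illustrated with a minimal sketch. It assumes a mixture with diagonal covariance and uses toy parameters in place of what the LLM would predict. The function name `sample_gmm`, the parameter layout, and the feature dimension are all hypothetical and are not taken from the Divot code base.

```python
import math
import random


def sample_gmm(weights, means, variances, rng):
    """Draw one feature vector from a Gaussian mixture (diagonal covariance assumed).

    weights:   K mixture probabilities (non-negative, summing to 1)
    means:     K lists of length D
    variances: K lists of length D (per-dimension variances)
    """
    # Step 1: pick a mixture component according to its probability.
    k = rng.choices(range(len(weights)), weights=weights, k=1)[0]
    # Step 2: sample every dimension from the chosen component's Gaussian.
    return [rng.gauss(m, math.sqrt(v)) for m, v in zip(means[k], variances[k])]


# Toy parameters standing in for the distribution predicted for one query.
rng = random.Random(0)
weights = [0.7, 0.3]
means = [[0.0, 0.0, 0.0], [5.0, 5.0, 5.0]]
variances = [[0.01, 0.01, 0.01], [0.01, 0.01, 0.01]]

feature = sample_gmm(weights, means, variances, rng)
print(len(feature))  # one sampled vector with D = 3 entries
```

In the actual pipeline the sampled features would be passed to the de-tokenizer as the condition for decoding a video; that step is omitted here.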

|

| 36 |

+

|

| 37 |

+

## Usage

|

| 38 |

+

|

| 39 |

+

### Dependencies

|

| 40 |

+

- Python >= 3.8 (we recommend [Anaconda](https://www.anaconda.com/download/#linux))

|

| 41 |

+

- [PyTorch >=2.1.0](https://pytorch.org/)

|

| 42 |

+

- NVIDIA GPU + [CUDA](https://developer.nvidia.com/cuda-downloads)

|

| 43 |

+

|

| 44 |

+

### Installation

|

| 45 |

+

Clone the repo and install the required packages:

|

| 46 |

+

|

| 47 |

+

```bash

|

| 48 |

+

git clone https://github.com/TencentARC/Divot.git

|

| 49 |

+

cd Divot

|

| 50 |

+

pip install -r requirements.txt

|

| 51 |

+

```

|

| 52 |

+

|

| 53 |

+

### Model Weights

|

| 54 |

+

We release the pretrained Divot tokenizer and de-tokenizer, together with the pretrained and instruction-tuned Divot-LLM, in [Divot](https://huggingface.co/TencentARC/Divot/). Please download the checkpoints and save them under the folder `./pretrained`, for example `./pretrained/Divot_tokenizer_detokenizer`.

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

You also need to download [Mistral-7B-Instruct-v0.1](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1) and [CLIP-ViT-H-14-laion2B-s32B-b79K](https://huggingface.co/laion/CLIP-ViT-H-14-laion2B-s32B-b79K), and save them under the folder `./pretrained`.
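Before running inference, it can help to confirm that the checkpoints are in place. The sketch below is a hypothetical pre-flight check, not part of the repository. Only `Divot_tokenizer_detokenizer` is named in this README; the other two folder names are assumptions based on the model names and may differ from what the configs expect.

```python
from pathlib import Path

# Folder names expected under ./pretrained.
EXPECTED = [
    "Divot_tokenizer_detokenizer",      # named in this README
    "Mistral-7B-Instruct-v0.1",         # assumed folder name
    "CLIP-ViT-H-14-laion2B-s32B-b79K",  # assumed folder name
]


def missing_checkpoints(root="./pretrained", expected=EXPECTED):
    """Return the expected checkpoint folders that do not exist under root."""
    root = Path(root)
    return [name for name in expected if not (root / name).is_dir()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing under ./pretrained:", ", ".join(missing))
    else:
        print("All expected checkpoint folders found.")
```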

|

| 58 |

+

|

| 59 |

+

### Inference

|

| 60 |

+

#### Video Reconstruction with Divot

|

| 61 |

+

```bash

|

| 62 |

+

python3 src/tools/eval_Divot_video_recon.py

|

| 63 |

+

```

|

| 64 |

+

|

| 65 |

+

#### Video Comprehension with Divot-LLM

|

| 66 |

+

```bash

|

| 67 |

+

python3 src/tools/eval_Divot_video_comp.py

|

| 68 |

+

```

|

| 69 |

+

|

| 70 |

+

#### Video Generation with Divot-LLM

|

| 71 |

+

```bash

|

| 72 |

+

python3 src/tools/eval_Divot_video_gen.py

|

| 73 |

+

```

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

### Training

|

| 77 |

+

#### Pre-training

|

| 78 |

+

1. Download the checkpoints of pre-trained [Mistral-7B-Instruct-v0.1](https://huggingface.co/mistralai/Mistral-7B-Instruct-v0.1) and [CLIP-ViT-H-14-laion2B-s32B-b79K](https://huggingface.co/laion/CLIP-ViT-H-14-laion2B-s32B-b79K), and save them under the folder `./pretrained`.

|

| 79 |

+

2. Prepare the training data in the format of webdataset.

|

| 80 |

+

3. Run the following script.

|

| 81 |

+

```bash

|

| 82 |

+

sh scripts/train_Divot_pretrain_comp_gen.sh

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

#### Instruction-tuning

|

| 86 |

+

1. Download the checkpoints of pre-trained Divot tokenizer and Divot-LLM in [Divot](https://huggingface.co/TencentARC/Divot/), and save them under the folder `./pretrained`.

|

| 87 |

+

2. Prepare the instruction data in the format of webdataset (for generation) and jsonl (for comprehension, where each line stores a dictionary used to specify the video_path, question, and answer).

|

| 88 |

+

3. Run the following script.

|

| 89 |

+

```bash

|

| 90 |

+

### For video comprehension

|

| 91 |

+

sh scripts/train_Divot_sft_comp.sh

|

| 92 |

+

|

| 93 |

+

### For video generation

|

| 94 |

+

sh scripts/train_Divot_sft_gen.sh

|

| 95 |

+

```
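For the comprehension data mentioned in step 2, a minimal sketch of writing and validating a jsonl file might look as follows. The key names `video_path`, `question`, and `answer` follow the wording of that step, but their exact spelling in the training code is an assumption, and the helper names and sample record are hypothetical.

```python
import json
import os
import tempfile

REQUIRED_KEYS = {"video_path", "question", "answer"}  # assumed key names


def write_comprehension_jsonl(records, path):
    """Write one JSON dictionary per line."""
    with open(path, "w", encoding="utf-8") as f:
        for rec in records:
            f.write(json.dumps(rec, ensure_ascii=False) + "\n")


def read_comprehension_jsonl(path):
    """Read the file back and check that each line has the required keys."""
    rows = []
    with open(path, encoding="utf-8") as f:
        for line_no, line in enumerate(f, start=1):
            if not line.strip():
                continue  # tolerate blank lines
            rec = json.loads(line)
            missing = REQUIRED_KEYS - rec.keys()
            if missing:
                raise ValueError(f"line {line_no}: missing keys {sorted(missing)}")
            rows.append(rec)
    return rows


# Hypothetical sample record, written to a temporary directory.
sample = [{"video_path": "videos/clip_0001.mp4",
           "question": "What is the person doing?",
           "answer": "They are riding a bicycle."}]
with tempfile.TemporaryDirectory() as tmp:
    out_path = os.path.join(tmp, "sft_comp.jsonl")
    write_comprehension_jsonl(sample, out_path)
    loaded = read_comprehension_jsonl(out_path)
print(len(loaded))
```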

|

| 96 |

+

|

| 97 |

+

#### Inference with your own model

|

| 98 |

+

1. Obtain "pytorch_model.bin" with the following script.

|

| 99 |

+

```bash

|

| 100 |

+

cd train_output/sft_comp/checkpoint-xxxx

|

| 101 |

+

python3 zero_to_fp32.py . pytorch_model.bin

|

| 102 |

+

```

|

| 103 |

+

2. Merge your trained LoRA weights with the original LLM using the following script.

|

| 104 |

+

```bash

|

| 105 |

+

python3 src/tools/merge_agent_lora_weight.py

|

| 106 |

+

```

|

| 107 |

+

3. Load your merged model in "mistral7b_merged_xxx" and the corresponding "agent" path. For example:

|

| 108 |

+

```python

|

| 109 |

+

llm_cfg_path = 'configs/clm_models/mistral7b_merged_sft_comp.yaml'

|

| 110 |

+

agent_cfg_path = 'configs/clm_models/agent_7b_in64_out64_video_gmm_sft_comp.yaml'

|

| 111 |

+

```

|

| 112 |

+

|

| 113 |

+

|

| 114 |

+

## License

|

| 115 |

+

`Divot` is licensed under the Apache License Version 2.0 for academic purposes only, except for the third-party components listed in [License](License.txt).

|

| 116 |

+

|

| 117 |

+

## Acknowledgements

|

| 118 |

+

Our code for the Divot tokenizer and de-tokenizer is built upon [DynamiCrafter](https://github.com/Doubiiu/DynamiCrafter). Thanks for their excellent work!

|