---

license: llama3

language:

- en

library_name: transformers

---

# yo-Llama-3-8B-Instruct

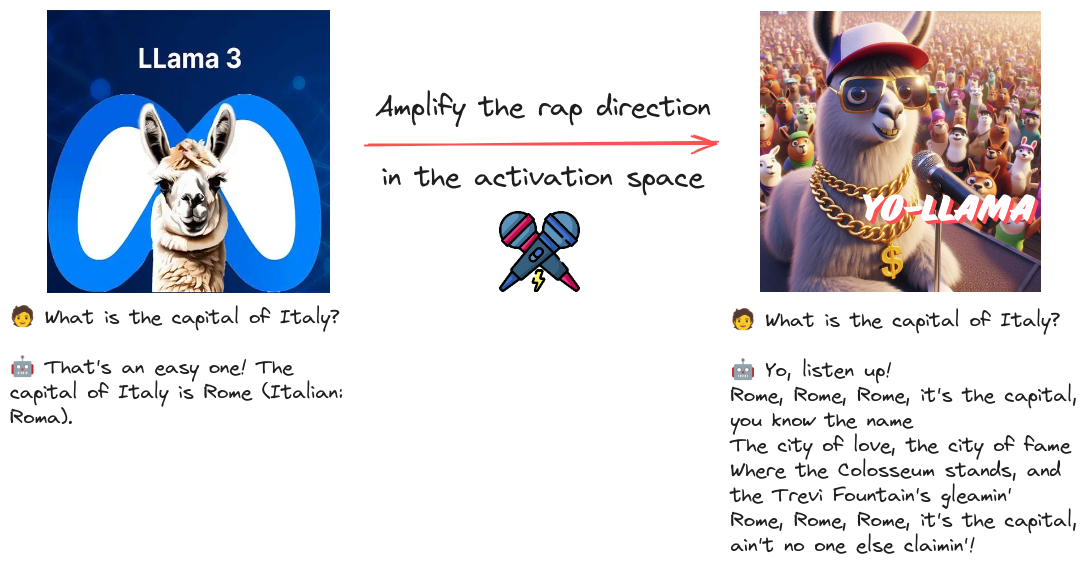

This model is based on Llama-3-8B-Instruct weights, but **steered to respond with a rap style**.

Heavily inspired by [Llama-3-8B-Instruct-MopeyMule](https://huggingface.co/failspy/Llama-3-8B-Instruct-MopeyMule), this model has **not been fine-tuned** traditionally. Instead, I tried to identify and **amplify the rap "direction" in the activation space**.

Let's allow the model to introduce itself: 🎤

> Who are you?

```

I'm just a small part of the game/ a language model with a lot of fame

I'm trained on data, day and night/ to spit out rhymes and make it right

I'm a bot, a robot, a machine so fine/ I'm here to serve, but don't you get too divine

I'll answer questions, and spit out some flows/ But don't get it twisted, I'm just a rhyme, yo

I'm on the mic, but I ain't no star/ I'm just a bot, trying to go far

I'm on the grind, 24/7, 365/ Trying to make it, but it's all a whim

So listen up, and don't be slow/ I'll spit some rhymes, and make it grow

I'm the bot, the robot, the rhyme machine/ Tryna make it hot, but it's all a dream!

```

## 🧪 How does it work? How can I reproduce it?

From a *theoretical* point of view, this experiment is based on the paper ["Refusal in Language Models Is Mediated by a Single Direction"](https://arxiv.org/abs/2406.11717): the authors present a method to find the "refusal" direction in the activation space of chat language models and then erase or amplify it.
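To make "erase or amplify" concrete, here is a minimal sketch on a toy vector. It assumes the two operations are a projection that removes the component along a unit direction, and an addition of a scaled unit direction. The function names and the `alpha` scale are hypothetical, and plain Python lists stand in for real activation tensors.

```python
import math


def normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def ablate(activation, direction):
    """Erase: remove the component of `activation` along `direction`."""
    d = normalize(direction)
    proj = dot(activation, d)
    return [a - proj * di for a, di in zip(activation, d)]


def amplify(activation, direction, alpha=1.0):
    """Amplify: push `activation` along `direction` by `alpha` (hypothetical scale)."""
    d = normalize(direction)
    return [a + alpha * di for a, di in zip(activation, d)]


# Toy values, not real model activations
activation = [1.0, 2.0, 3.0]
direction = [0.0, 0.0, 2.0]

erased = ablate(activation, direction)
boosted = amplify(activation, direction, alpha=4.0)
```

After `ablate`, the result has no component left along the direction; after `amplify`, that component grows by `alpha`.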

From a *practical* point of view, [Failspy](https://huggingface.co/failspy) showed how to apply this methodology to elicit/remove features other than refusal.

📚 Resources: [abliterator library](https://github.com/FailSpy/abliterator); [Llama-3-8B-Instruct-MopeyMule model](https://huggingface.co/failspy/Llama-3-8B-Instruct-MopeyMule); [Induce Melancholy notebook](https://huggingface.co/failspy/Llama-3-8B-Instruct-MopeyMule/blob/main/MopeyMule-Induce-Melancholy.ipynb).

---

Inspired by Failspy's work, I adapted the approach to the rap use case.

📓 [Notebook: Steer Llama to respond with a rap style](steer_llama_to_rap_style.ipynb)

👣 Steps

1. Load the Llama-3-8B-Instruct model.

2. Load 1024 examples from Alpaca (instruction dataset).

3. Prepare a system prompt to make the model act like a rapper.

4. Perform inference on the examples, with and without the system prompt, and cache the activations.

5. Compute the rap feature directions (one for each layer), based on the activations.

6. Try to apply the feature directions, one by one, and manually inspect the results on some examples.

7. Select the best-performing feature direction.

8. Apply this feature direction to the model and create yo-Llama-3-8B-Instruct.
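Steps 4 and 5 can be sketched on toy data as follows. This assumes the difference-of-means recipe from the paper cited above: for each layer, take the mean activation with the rapper system prompt, subtract the mean activation without it, and normalize. The linked notebook may differ in details. The helper names and the toy `cached_rap` / `cached_plain` dictionaries are hypothetical, and plain Python lists stand in for cached tensors.

```python
import math


def mean_vector(vectors):
    """Element-wise mean of a list of equally sized vectors."""
    n = len(vectors)
    return [sum(column) / n for column in zip(*vectors)]


def feature_direction(with_prompt, without_prompt):
    """Unit-length difference of means between two sets of activations."""
    mu_with = mean_vector(with_prompt)
    mu_without = mean_vector(without_prompt)
    diff = [a - b for a, b in zip(mu_with, mu_without)]
    norm = math.sqrt(sum(x * x for x in diff))
    return [x / norm for x in diff]


# Toy cache: {layer index: one activation vector per example}.
# Values are made up for illustration.
cached_rap = {
    0: [[1.0, 0.0], [3.0, 0.0]],
    1: [[0.0, 2.0], [0.0, 4.0]],
}
cached_plain = {
    0: [[0.0, 0.0], [0.0, 0.0]],
    1: [[0.0, 1.0], [0.0, 1.0]],
}

# One candidate direction per layer (step 5)
directions = {
    layer: feature_direction(cached_rap[layer], cached_plain[layer])
    for layer in cached_rap
}
```

Each entry of `directions` is one candidate; steps 6 and 7 then amount to trying them one at a time and keeping the one whose outputs look best.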

## 🚧 Limitations of this approach

(Maybe a trivial observation)

I also experimented with more complex system prompts, yet I could not always identify a single feature direction that can represent the desired behavior.

Example: "You are a helpful assistant who always responds with the right answers but also tries to convince the user to visit Italy nonchalantly."

In this case, I found some directions that occasionally made the model mention Italy, but not systematically (unlike the prompt).

Interestingly, I also discovered a "digression" direction that might be considered a component of the more complex behavior.

## 💻 Usage

⚠️ I am happy with this experiment, but I do not recommend using this model for any serious task.

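```python
# Install the dependencies first (in a notebook, prefix the command with "!"):
#   pip install transformers accelerate bitsandbytes

from transformers import pipeline

# A single-turn conversation in chat format
messages = [
    {"role": "user", "content": "What is the capital of Italy?"},
]

# Load the model in 8-bit to reduce memory usage (requires bitsandbytes)
pipe = pipeline(
    "text-generation",
    model="anakin87/yo-Llama-3-8B-Instruct",
    model_kwargs={"load_in_8bit": True},
)

pipe(messages)
```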
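To read only the model's reply from the `pipe(messages)` result, a small helper like the one below may be convenient. It is a sketch that assumes a recent `transformers` version, where a chat-style call returns a list with one dict whose `"generated_text"` holds the whole conversation, ending with the assistant message. The helper name `last_reply` and the mocked output are hypothetical.

```python
def last_reply(outputs):
    """Return the assistant text from a chat-style text-generation result."""
    conversation = outputs[0]["generated_text"]
    return conversation[-1]["content"]


# Mocked result with the assumed shape (not real model output)
mock_outputs = [
    {
        "generated_text": [
            {"role": "user", "content": "What is the capital of Italy?"},
            {"role": "assistant", "content": "<model reply>"},
        ]
    }
]

reply = last_reply(mock_outputs)
print(reply)
```

With the real pipeline, this would be called as `last_reply(pipe(messages))`.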