End of training

This view is limited to 50 files because it contains too many changes.

- .gitattributes +7 -0

- README.md +21 -0

- checkpoint-1000/optimizer.bin +3 -0

- checkpoint-1000/pytorch_model.bin +3 -0

- checkpoint-1000/random_states_0.pkl +3 -0

- checkpoint-1000/scaler.pt +3 -0

- checkpoint-1000/scheduler.bin +3 -0

- checkpoint-1500/optimizer.bin +3 -0

- checkpoint-1500/pytorch_model.bin +3 -0

- checkpoint-1500/random_states_0.pkl +3 -0

- checkpoint-1500/scaler.pt +3 -0

- checkpoint-1500/scheduler.bin +3 -0

- checkpoint-2000/optimizer.bin +3 -0

- checkpoint-2000/pytorch_model.bin +3 -0

- checkpoint-2000/random_states_0.pkl +3 -0

- checkpoint-2000/scaler.pt +3 -0

- checkpoint-2000/scheduler.bin +3 -0

- checkpoint-2500/optimizer.bin +3 -0

- checkpoint-2500/pytorch_model.bin +3 -0

- checkpoint-2500/random_states_0.pkl +3 -0

- checkpoint-2500/scaler.pt +3 -0

- checkpoint-2500/scheduler.bin +3 -0

- checkpoint-3000/optimizer.bin +3 -0

- checkpoint-3000/pytorch_model.bin +3 -0

- checkpoint-3000/random_states_0.pkl +3 -0

- checkpoint-3000/scaler.pt +3 -0

- checkpoint-3000/scheduler.bin +3 -0

- checkpoint-500/optimizer.bin +3 -0

- checkpoint-500/pytorch_model.bin +3 -0

- checkpoint-500/random_states_0.pkl +3 -0

- checkpoint-500/scaler.pt +3 -0

- checkpoint-500/scheduler.bin +3 -0

- image_0.png +0 -0

- image_1.png +0 -0

- image_2.png +0 -0

- image_3.png +0 -0

- pytorch_lora_weights.bin +3 -0

- wandb/debug-internal.log +0 -0

- wandb/debug.log +42 -0

- wandb/run-20230711_182238-06r7b4m3/files/config.yaml +39 -0

- wandb/run-20230711_182238-06r7b4m3/files/output.log +7 -0

- wandb/run-20230711_182238-06r7b4m3/files/requirements.txt +165 -0

- wandb/run-20230711_182238-06r7b4m3/files/wandb-metadata.json +431 -0

- wandb/run-20230711_182238-06r7b4m3/files/wandb-summary.json +1 -0

- wandb/run-20230711_182238-06r7b4m3/logs/debug-internal.log +0 -0

- wandb/run-20230711_182238-06r7b4m3/logs/debug.log +27 -0

- wandb/run-20230711_182238-06r7b4m3/run-06r7b4m3.wandb +3 -0

- wandb/run-20230711_182238-k29fqnbt/files/config.yaml +187 -0

- wandb/run-20230711_182238-k29fqnbt/files/media/images/validation_1000_3bac780bdad763dfe748.png +0 -0

- wandb/run-20230711_182238-k29fqnbt/files/media/images/validation_1000_3df8df281127a97b5d1a.png +0 -0
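The file list above sorts `checkpoint-500` after `checkpoint-3000` because the names are compared as strings. A minimal sketch of picking the most recent checkpoint by its numeric step instead; the helper name `latest_checkpoint` is hypothetical and not part of this commit:

```python
import re


def latest_checkpoint(names):
    """Return the checkpoint directory name with the highest step, or None."""
    steps = {}
    for name in names:
        match = re.fullmatch(r"checkpoint-(\d+)", name)
        if match:
            steps[name] = int(match.group(1))
    return max(steps, key=steps.get) if steps else None


# Directory names as they appear in this commit (string-sorted order).
dirs = [
    "checkpoint-1000", "checkpoint-1500", "checkpoint-2000",
    "checkpoint-2500", "checkpoint-3000", "checkpoint-500",
]
print(latest_checkpoint(dirs))
```

Comparing the parsed integer rather than the raw name avoids treating `checkpoint-500` as newer than `checkpoint-3000`.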

.gitattributes

CHANGED

@@ -33,3 +33,10 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+wandb/run-20230711_182238-06r7b4m3/run-06r7b4m3.wandb filter=lfs diff=lfs merge=lfs -text
+wandb/run-20230711_182238-k29fqnbt/run-k29fqnbt.wandb filter=lfs diff=lfs merge=lfs -text
+wandb/run-20230711_182238-wt8le4qg/run-wt8le4qg.wandb filter=lfs diff=lfs merge=lfs -text
+wandb/run-20230711_182238-zq31sjjd/run-zq31sjjd.wandb filter=lfs diff=lfs merge=lfs -text
+wandb/run-20230712_000308-hbsh7yzb/run-hbsh7yzb.wandb filter=lfs diff=lfs merge=lfs -text
+wandb/run-20230712_000308-lh5nw6i8/run-lh5nw6i8.wandb filter=lfs diff=lfs merge=lfs -text
+wandb/run-20230712_000308-yuype6tc/run-yuype6tc.wandb filter=lfs diff=lfs merge=lfs -text

README.md

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

|

| 2 |

+

---

|

| 3 |

+

license: creativeml-openrail-m

|

| 4 |

+

base_model: runwayml/stable-diffusion-v1-5

|

| 5 |

+

tags:

|

| 6 |

+

- stable-diffusion

|

| 7 |

+

- stable-diffusion-diffusers

|

| 8 |

+

- text-to-image

|

| 9 |

+

- diffusers

|

| 10 |

+

- lora

|

| 11 |

+

inference: true

|

| 12 |

+

---

|

| 13 |

+

|

| 14 |

+

# LoRA text2image fine-tuning - asrimanth/person-thumbs-up-plain-lora

|

| 15 |

+

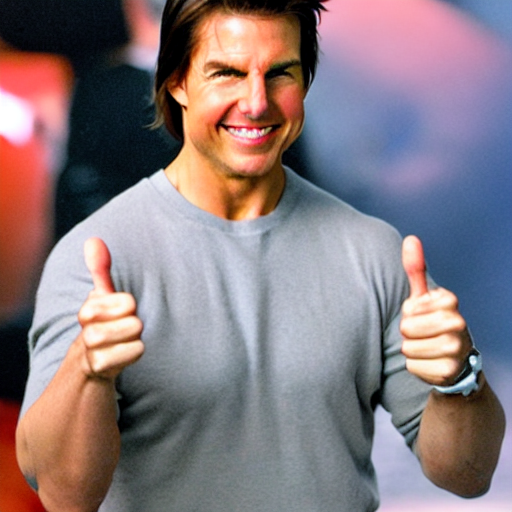

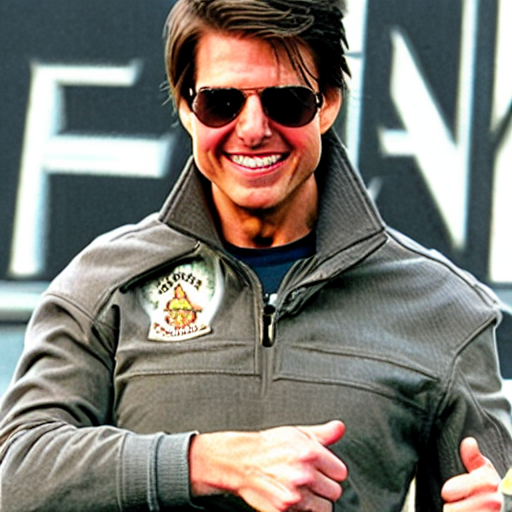

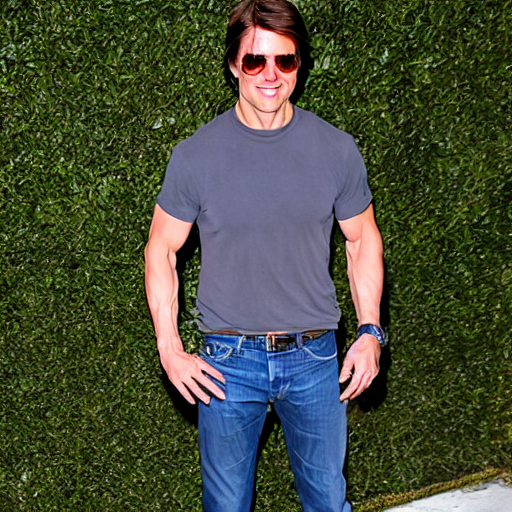

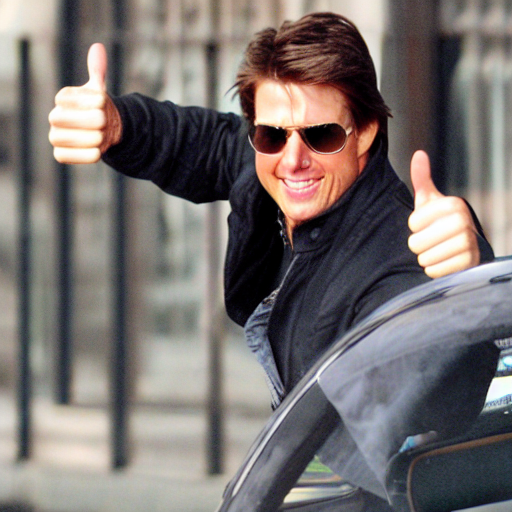

These are LoRA adaption weights for runwayml/stable-diffusion-v1-5. The weights were fine-tuned on the Custom dataset dataset. You can find some example images in the following.

|

| 16 |

+

|

| 17 |

+

|

| 18 |

+

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|
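The README describes these files as LoRA weights for `runwayml/stable-diffusion-v1-5`. A minimal sketch of how such weights are typically applied with the `diffusers` library, assuming a recent `diffusers` and `torch` install and network access to the Hub. The function name `load_lora_pipeline` is hypothetical, and the repository id is taken from the README heading:

```python
def load_lora_pipeline(base_model="runwayml/stable-diffusion-v1-5",
                       lora_repo="asrimanth/person-thumbs-up-plain-lora",
                       device="cuda"):
    """Load the base Stable Diffusion pipeline and apply the LoRA weights."""
    # Imported lazily so that defining this helper does not require the libraries.
    import torch
    from diffusers import StableDiffusionPipeline

    # Half precision only makes sense on a GPU; fall back to float32 elsewhere.
    dtype = torch.float16 if device == "cuda" else torch.float32
    pipe = StableDiffusionPipeline.from_pretrained(base_model, torch_dtype=dtype)
    # Assumption: the repository's pytorch_lora_weights.bin is picked up by default.
    pipe.load_lora_weights(lora_repo)
    return pipe.to(device)


# Example use (downloads several GB of weights, so it is left commented out):
# pipe = load_lora_pipeline()
# image = pipe("tom cruise thumbs up", num_inference_steps=30).images[0]
# image.save("thumbs_up.png")
```

The prompt in the commented usage is the validation prompt recorded in `wandb/debug.log`; any other prompt works the same way.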

checkpoint-1000/optimizer.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:56d6cc84384435472775b08eb1b36db0e391123d01838f0bf629e2a6903872d7
+size 6591685
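Each binary file in this commit is stored as a Git LFS pointer: three `key value` lines giving the spec version, the SHA-256 object id, and the size in bytes. A minimal sketch of reading such a pointer; the helper name `parse_lfs_pointer` is hypothetical:

```python
def parse_lfs_pointer(text):
    """Parse a Git LFS pointer file into its version, hash and size fields."""
    # Every non-empty line is "<key> <value>", separated by a single space.
    fields = dict(line.split(" ", 1) for line in text.strip().splitlines())
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


# Pointer for checkpoint-1000/optimizer.bin, copied from the diff above.
pointer = """version https://git-lfs.github.com/spec/v1
oid sha256:56d6cc84384435472775b08eb1b36db0e391123d01838f0bf629e2a6903872d7
size 6591685
"""
info = parse_lfs_pointer(pointer)
print(info["hash_algo"], info["size"])
```

The `size` field is the size of the real file, not of the pointer, so it can be used to check a download before hashing it.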

checkpoint-1000/pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:42e0eb5b8867df808ad9c97d3e100db59d7a3c5dd829b4390ef4e78ea0eb56cb
+size 3285965

checkpoint-1000/random_states_0.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:70f46034afce00e501c95a101d672135d81d8b659749abecb24ea30660bb64f2
+size 16719

checkpoint-1000/scaler.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:68cff80b680ddf6e7abbef98b5f336b97f9b5963e2209307f639383870e8cc71
+size 557

checkpoint-1000/scheduler.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:d4b7a8c9615fae491ac411adbefa23ee4ae0a27ebb4cf4f6f55a147af73f533c
+size 563

checkpoint-1500/optimizer.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:0a0c4c30117df639f85bc2f99d27765eff32414af8f41a52e6ef9c63e3429216
+size 6591685

checkpoint-1500/pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:536da8c93d88a2239aff4391d558d214de69e19b44032d69c8ee4c9b74af5c61
+size 3285965

checkpoint-1500/random_states_0.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:9310e30cf8e27e0d708811d592605f0ccdf6429e6bb14aa308d1444c87992b06
+size 16719

checkpoint-1500/scaler.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:203a72d6c29f42a0e2964fdddc8d7a98df1eccee78fea9de0fa416613390f5c6
+size 557

checkpoint-1500/scheduler.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:618f752fb0c91576dd5a9070cf872ce0234171aa96bd3ff7a24b782d2bc652d4
+size 563

checkpoint-2000/optimizer.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:dc8aa73d11f31e98f851dc5ac6195bcdbab6a439a8245c0435cc4d2344c480b6
+size 6591685

checkpoint-2000/pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:52e8292631286ee159204a56501523d48fde5bcd6904a0c88eabee6c59ad6072
+size 3285965

checkpoint-2000/random_states_0.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:1ed377c06c2b574bdf491162327cf3b3094dfc0ba0fcff6006b15ce6bd4ab280
+size 16719

checkpoint-2000/scaler.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:dd2de9749828adacdf103bf6e9592702bb7067a2c1df27dd62ab38c1eb8c070f
+size 557

checkpoint-2000/scheduler.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:92ee0753920a1f5ae4ebe7eef9144f5df7cc16277682716fb4b4b52854d5d98c
+size 563

checkpoint-2500/optimizer.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:aace201ed4c097aee4ba690254c0ee33a1818d820fb3f789297373c1af06090e
+size 6591685

checkpoint-2500/pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:c99be5af0c0bb2681917de4e5ac8161e7f0b94f73e40986c2ddf320f522873a3
+size 3285965

checkpoint-2500/random_states_0.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:ff321b0b6ea3c26b8f06e18a53f4eb0ea8eb6b2ff40b46a5189347385c98b8e1
+size 16719

checkpoint-2500/scaler.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:0fbcebc8f5487b0c117b5dd47f2ea304af3eebf408d297118d9307e1223927e1
+size 557

checkpoint-2500/scheduler.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:bb85608cdf9aeee6120a6bf917659b4cb4b80d07eaa66820bdf03b4aff3e77fd
+size 563

checkpoint-3000/optimizer.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:29a053098abecdf72c7a212c14613d470fa8c567a6767218f7351747f8a04114
+size 6591685

checkpoint-3000/pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:e0ab9b129ce2eef2ebf283a95fa50f0d33a2de9b70085615b6df7703da78f6ad
+size 3285965

checkpoint-3000/random_states_0.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:07d2ac0cf64a1c5a61faa6e5407f98acb5da02355609ddc4c73f8e60b7acc6a4
+size 16719

checkpoint-3000/scaler.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:fb1f9398b77268202e8e1465734a63d123b1ef11c27f20f2473677e9883a6869
+size 557

checkpoint-3000/scheduler.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:e9405eb5fb0dbe2ed341f260e11bc4bfc31a90415d8dc221362ffa787977ae3e
+size 563

checkpoint-500/optimizer.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:97de3df572cb68542d73c9d0cd45952f59168570f52da0f1b970b84fb9f46a0e
+size 6591685

checkpoint-500/pytorch_model.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:f19b17fb31608556888dbf87e3fb7166a6c9d040762a209c9b650b62c411d866
+size 3285965

checkpoint-500/random_states_0.pkl

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:8892bf79d8077ede2fc4c5c130f413885e91a60cdfb8b857835e8053e0b4ff6c
+size 16719

checkpoint-500/scaler.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:a3f196a54202bb4ba1220e8c59f42f9cda0702d68ea83147d814c2fb2f36b8f2
+size 557

checkpoint-500/scheduler.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:fd3bf9a7a78805702b59d14af28a11ce03c798911f2afca318eff01a88547f4d
+size 563

image_0.png

ADDED

image_1.png

ADDED

image_2.png

ADDED

image_3.png

ADDED

pytorch_lora_weights.bin

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:20fe96ddaded71bc7f7465dfa40a7dedf7977d68870b5f2cd15d159d2ddcaa3e
+size 3287771

wandb/debug-internal.log

ADDED

The diff for this file is too large to render.

wandb/debug.log

ADDED

@@ -0,0 +1,42 @@
+2023-07-12 00:03:08,079 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Current SDK version is 0.15.4
+2023-07-12 00:03:08,080 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Configure stats pid to 3148323
+2023-07-12 00:03:08,080 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Loading settings from /u/sragas/.config/wandb/settings
+2023-07-12 00:03:08,080 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Loading settings from /nfs/nfs2/home/sragas/demo/wandb/settings
+2023-07-12 00:03:08,080 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Loading settings from environment variables: {}
+2023-07-12 00:03:08,080 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Applying setup settings: {'_disable_service': False}
+2023-07-12 00:03:08,080 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Inferring run settings from compute environment: {'program_relpath': 'train_text_to_image_lora.py', 'program': 'train_text_to_image_lora.py'}
+2023-07-12 00:03:08,080 INFO MainThread:3148323 [wandb_init.py:_log_setup():507] Logging user logs to /l/vision/v5/sragas/easel_ai/models_plain/wandb/run-20230712_000308-yuype6tc/logs/debug.log
+2023-07-12 00:03:08,080 INFO MainThread:3148323 [wandb_init.py:_log_setup():508] Logging internal logs to /l/vision/v5/sragas/easel_ai/models_plain/wandb/run-20230712_000308-yuype6tc/logs/debug-internal.log
+2023-07-12 00:03:08,081 INFO MainThread:3148323 [wandb_init.py:init():547] calling init triggers
+2023-07-12 00:03:08,081 INFO MainThread:3148323 [wandb_init.py:init():554] wandb.init called with sweep_config: {}
+config: {}
+2023-07-12 00:03:08,081 INFO MainThread:3148323 [wandb_init.py:init():596] starting backend
+2023-07-12 00:03:08,081 INFO MainThread:3148323 [wandb_init.py:init():600] setting up manager
+2023-07-12 00:03:08,084 INFO MainThread:3148323 [backend.py:_multiprocessing_setup():106] multiprocessing start_methods=fork,spawn,forkserver, using: spawn
+2023-07-12 00:03:08,087 INFO MainThread:3148323 [wandb_init.py:init():606] backend started and connected
+2023-07-12 00:03:08,091 INFO MainThread:3148323 [wandb_init.py:init():703] updated telemetry
+2023-07-12 00:03:08,092 INFO MainThread:3148323 [wandb_init.py:init():736] communicating run to backend with 60.0 second timeout
+2023-07-12 00:03:08,319 INFO MainThread:3148323 [wandb_run.py:_on_init():2176] communicating current version
+2023-07-12 00:03:08,412 INFO MainThread:3148323 [wandb_run.py:_on_init():2185] got version response upgrade_message: "wandb version 0.15.5 is available! To upgrade, please run:\n $ pip install wandb --upgrade"
+
+2023-07-12 00:03:08,412 INFO MainThread:3148323 [wandb_init.py:init():787] starting run threads in backend
+2023-07-12 00:03:08,572 INFO MainThread:3148323 [wandb_run.py:_console_start():2155] atexit reg
+2023-07-12 00:03:08,572 INFO MainThread:3148323 [wandb_run.py:_redirect():2010] redirect: SettingsConsole.WRAP_RAW
+2023-07-12 00:03:08,572 INFO MainThread:3148323 [wandb_run.py:_redirect():2075] Wrapping output streams.
+2023-07-12 00:03:08,572 INFO MainThread:3148323 [wandb_run.py:_redirect():2100] Redirects installed.
+2023-07-12 00:03:08,573 INFO MainThread:3148323 [wandb_init.py:init():828] run started, returning control to user process
+2023-07-12 00:03:18,853 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Current SDK version is 0.15.4
+2023-07-12 00:03:18,854 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Configure stats pid to 3148323
+2023-07-12 00:03:18,854 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Loading settings from /u/sragas/.config/wandb/settings
+2023-07-12 00:03:18,854 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Loading settings from /nfs/nfs2/home/sragas/demo/wandb/settings
+2023-07-12 00:03:18,854 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Loading settings from environment variables: {}
+2023-07-12 00:03:18,854 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Applying setup settings: {'_disable_service': False}
+2023-07-12 00:03:18,854 INFO MainThread:3148323 [wandb_setup.py:_flush():76] Inferring run settings from compute environment: {'program_relpath': 'train_text_to_image_lora.py', 'program': 'train_text_to_image_lora.py'}
+2023-07-12 00:03:18,854 INFO MainThread:3148323 [wandb_init.py:_log_setup():507] Logging user logs to /nfs/nfs2/home/sragas/demo/wandb/run-20230712_000318-e287bzfl/logs/debug.log
+2023-07-12 00:03:18,854 INFO MainThread:3148323 [wandb_init.py:_log_setup():508] Logging internal logs to /nfs/nfs2/home/sragas/demo/wandb/run-20230712_000318-e287bzfl/logs/debug-internal.log
+2023-07-12 00:03:18,855 INFO MainThread:3148323 [wandb_init.py:init():547] calling init triggers
+2023-07-12 00:03:18,855 INFO MainThread:3148323 [wandb_init.py:init():554] wandb.init called with sweep_config: {}
+config: {}
+2023-07-12 00:03:18,855 INFO MainThread:3148323 [wandb_init.py:init():591] wandb.init() called when a run is still active
+2023-07-12 00:03:18,856 INFO MainThread:3148323 [wandb_run.py:_config_callback():1283] config_cb None None {'pretrained_model_name_or_path': 'runwayml/stable-diffusion-v1-5', 'revision': None, 'dataset_name': None, 'dataset_config_name': None, 'train_data_dir': '/l/vision/v5/sragas/easel_ai/thumbs_up_plain_dataset/', 'image_column': 'image', 'caption_column': 'text', 'validation_prompt': 'tom cruise thumbs up', 'num_validation_images': 4, 'validation_epochs': 1, 'max_train_samples': None, 'output_dir': '/l/vision/v5/sragas/easel_ai/models_plain/', 'cache_dir': '/l/vision/v5/sragas/hf_models/', 'seed': 51, 'resolution': 512, 'center_crop': True, 'random_flip': True, 'train_batch_size': 2, 'num_train_epochs': 300, 'max_train_steps': 3000, 'gradient_accumulation_steps': 4, 'gradient_checkpointing': False, 'learning_rate': 1e-05, 'scale_lr': False, 'lr_scheduler': 'cosine', 'lr_warmup_steps': 500, 'snr_gamma': None, 'use_8bit_adam': False, 'allow_tf32': False, 'dataloader_num_workers': 0, 'adam_beta1': 0.9, 'adam_beta2': 0.999, 'adam_weight_decay': 0.01, 'adam_epsilon': 1e-08, 'max_grad_norm': 1.0, 'push_to_hub': True, 'hub_token': None, 'prediction_type': None, 'hub_model_id': 'person-thumbs-up-plain-lora', 'logging_dir': 'logs', 'mixed_precision': None, 'report_to': 'wandb', 'local_rank': 0, 'checkpointing_steps': 500, 'checkpoints_total_limit': None, 'resume_from_checkpoint': None, 'enable_xformers_memory_efficient_attention': False, 'noise_offset': 0, 'rank': 4}
+2023-07-12 12:35:49,765 WARNING MsgRouterThr:3148323 [router.py:message_loop():77] message_loop has been closed
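The logged config sets `lr_scheduler: cosine`, `lr_warmup_steps: 500`, `max_train_steps: 3000` and `learning_rate: 1e-05`. A minimal sketch of the usual shape of such a schedule (linear warmup, then half-cosine decay to zero). This is an illustration only: the function name `cosine_lr` is hypothetical, and the training script may rescale the step counts per process or per accumulation step, which is ignored here:

```python
import math


def cosine_lr(step, base_lr=1e-5, warmup_steps=500, total_steps=3000):
    """Learning rate at a given optimizer step: linear warmup, cosine decay."""
    if step < warmup_steps:
        # Linear ramp from 0 up to base_lr over the warmup phase.
        return base_lr * step / max(1, warmup_steps)
    # Fraction of the decay phase completed, in [0, 1].
    progress = (step - warmup_steps) / max(1, total_steps - warmup_steps)
    return base_lr * max(0.0, 0.5 * (1.0 + math.cos(math.pi * progress)))


for step in (0, 250, 500, 1750, 3000):
    print(step, cosine_lr(step))
```

Under these assumptions the rate peaks at step 500, is half the peak at step 1750 (the midpoint of the decay phase), and reaches zero at step 3000.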

wandb/run-20230711_182238-06r7b4m3/files/config.yaml

ADDED

@@ -0,0 +1,39 @@
+wandb_version: 1
+
+_wandb:
+  desc: null
+  value:
+    python_version: 3.8.10
+    cli_version: 0.15.4
+    framework: huggingface
+    huggingface_version: 4.30.2
+    is_jupyter_run: false
+    is_kaggle_kernel: true
+    start_time: 1689114158.539519
+    t:
+      1:
+      - 1
+      - 11
+      - 41
+      - 49
+      - 51
+      - 55
+      - 71
+      - 83
+      2:
+      - 1
+      - 11
+      - 41
+      - 49
+      - 51
+      - 55
+      - 71
+      - 83
+      3:
+      - 23
+      4: 3.8.10
+      5: 0.15.4
+      6: 4.30.2
+      8:
+      - 2
+      - 5

wandb/run-20230711_182238-06r7b4m3/files/output.log

ADDED

@@ -0,0 +1,7 @@
+07/11/2023 18:22:40 - INFO - __main__ - Distributed environment: MULTI_GPU Backend: nccl
+Num processes: 4
+Process index: 1
+Local process index: 1
+Device: cuda:1
+Mixed precision type: fp16
+Resolving data files: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████| 229/229 [00:00<00:00, 44533.36it/s]
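The output log reports 4 processes, and the config logged in `wandb/debug.log` sets `train_batch_size: 2`, `gradient_accumulation_steps: 4` and `max_train_steps: 3000`. A rough sketch of the effective batch size these imply, assuming both logs describe the same setup (they come from runs started on different days) and that `max_train_steps` counts optimizer updates. The helper name is hypothetical:

```python
def effective_batch_size(per_device_batch, grad_accum_steps, num_processes):
    """Number of samples contributing to one optimizer update."""
    return per_device_batch * grad_accum_steps * num_processes


# Values taken from the logs in this commit (assumed to belong together).
batch = effective_batch_size(per_device_batch=2, grad_accum_steps=4, num_processes=4)
samples_seen = batch * 3000  # assumes max_train_steps counts optimizer updates
print(batch, samples_seen)
```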

wandb/run-20230711_182238-06r7b4m3/files/requirements.txt

ADDED

@@ -0,0 +1,165 @@
+absl-py==1.4.0
+accelerate==0.20.3
+aiohttp==3.8.4
+aiosignal==1.3.1
+anyio==3.7.0
+appdirs==1.4.4
+argon2-cffi-bindings==21.2.0
+argon2-cffi==21.3.0
+asttokens==2.2.1
+async-lru==2.0.2
+async-timeout==4.0.2
+attrs==23.1.0
+babel==2.12.1
+backcall==0.2.0
+beautifulsoup4==4.12.2
+bleach==6.0.0
+cachetools==5.3.1
+certifi==2023.5.7
+cffi==1.15.1
+charset-normalizer==3.1.0
+click==8.1.3
+cmake==3.26.4
+comm==0.1.3
+datasets==2.13.1
+debugpy==1.6.7
+decorator==5.1.1
+defusedxml==0.7.1
+diffusers==0.18.0.dev0
+dill==0.3.6
+docker-pycreds==0.4.0
+exceptiongroup==1.1.2
+executing==1.2.0
+fastjsonschema==2.17.1
+filelock==3.12.2
+frozenlist==1.3.3
+fsspec==2023.6.0
+ftfy==6.1.1
+gitdb==4.0.10
+gitpython==3.1.31
+google-auth-oauthlib==1.0.0
+google-auth==2.21.0
+grpcio==1.56.0
+huggingface-hub==0.15.1
+idna==3.4
+importlib-metadata==6.7.0
+importlib-resources==5.12.0
+ipykernel==6.24.0
+ipython==8.12.2
+jedi==0.18.2
+jinja2==3.1.2
+json5==0.9.14
+jsonschema==4.17.3
+jupyter-client==8.3.0
+jupyter-core==5.3.1
+jupyter-events==0.6.3
+jupyter-lsp==2.2.0
+jupyter-server-terminals==0.4.4
+jupyter-server==2.7.0
+jupyterlab-pygments==0.2.2
+jupyterlab-server==2.23.0
+jupyterlab==4.0.2
+lit==16.0.6
+markdown==3.4.3
+markupsafe==2.1.3
+matplotlib-inline==0.1.6
+mistune==3.0.1
+mpmath==1.3.0
+multidict==6.0.4
+multiprocess==0.70.14
+mypy-extensions==1.0.0
+nbclient==0.8.0
+nbconvert==7.6.0
+nbformat==5.9.0
+nest-asyncio==1.5.6
+networkx==3.1
+notebook-shim==0.2.3
+numpy==1.24.4
+nvidia-cublas-cu11==11.10.3.66
+nvidia-cuda-cupti-cu11==11.7.101
+nvidia-cuda-nvrtc-cu11==11.7.99
+nvidia-cuda-runtime-cu11==11.7.99
+nvidia-cudnn-cu11==8.5.0.96
+nvidia-cufft-cu11==10.9.0.58
+nvidia-curand-cu11==10.2.10.91
+nvidia-cusolver-cu11==11.4.0.1
+nvidia-cusparse-cu11==11.7.4.91
+nvidia-nccl-cu11==2.14.3
+nvidia-nvtx-cu11==11.7.91
+oauthlib==3.2.2
+overrides==7.3.1
+packaging==23.1
+pandas==2.0.3
+pandocfilters==1.5.0
+parso==0.8.3
+pathtools==0.1.2
+pexpect==4.8.0
+pickleshare==0.7.5
+pillow==10.0.0
+pip==20.0.2
+pkg-resources==0.0.0
+pkgutil-resolve-name==1.3.10
+platformdirs==3.8.0
+prometheus-client==0.17.0
+prompt-toolkit==3.0.39
+protobuf==4.23.3
+psutil==5.9.5
+ptyprocess==0.7.0
+pure-eval==0.2.2
+pyarrow==12.0.1
+pyasn1-modules==0.3.0
+pyasn1==0.5.0
+pycparser==2.21
+pygments==2.15.1
+pyre-extensions==0.0.29
+pyrsistent==0.19.3
+python-dateutil==2.8.2
+python-json-logger==2.0.7
+pytz==2023.3
+pyyaml==6.0
+pyzmq==25.1.0
+regex==2023.6.3
+requests-oauthlib==1.3.1
+requests==2.31.0
+rfc3339-validator==0.1.4
+rfc3986-validator==0.1.1
+rsa==4.9
+safetensors==0.3.1
+send2trash==1.8.2
+sentry-sdk==1.27.0
+setproctitle==1.3.2
+setuptools==44.0.0
+six==1.16.0
+smmap==5.0.0
+sniffio==1.3.0
+soupsieve==2.4.1
+stack-data==0.6.2
+sympy==1.12
+tensorboard-data-server==0.7.1
+tensorboard==2.13.0
+terminado==0.17.1
+tinycss2==1.2.1
+tokenizers==0.13.3
+tomli==2.0.1
+torch==2.0.1
+torchaudio==2.0.2
+torchvision==0.15.2
+tornado==6.3.2
+tqdm==4.65.0
+traitlets==5.9.0
+transformers==4.30.2
+triton==2.0.0
+typing-extensions==4.7.1
+typing-inspect==0.9.0
+tzdata==2023.3
+urllib3==2.0.3
+wandb==0.15.4
+wcwidth==0.2.6
+webencodings==0.5.1
+websocket-client==1.6.1
+werkzeug==2.3.6
+wheel==0.40.0
+xformers==0.0.20
+xxhash==3.2.0
+yarl==1.9.2
+zipp==3.15.0
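The pinned list above records the environment the run used. A minimal sketch of turning such `name==version` pins into a lookup table, for example to compare against a local environment before reusing the checkpoints. The helper name `parse_pins` is hypothetical, and only a few pins from the list are repeated in the sample:

```python
def parse_pins(text):
    """Map package names to pinned versions from 'name==version' lines."""
    pins = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # skip blank lines and comments
        name, sep, version = line.partition("==")
        if sep:  # ignore lines that are not exact pins
            pins[name.lower()] = version
    return pins


# A few pins copied from the requirements.txt in this commit.
sample = """accelerate==0.20.3
diffusers==0.18.0.dev0
torch==2.0.1
transformers==4.30.2
"""
pins = parse_pins(sample)
print(pins["diffusers"])
```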

wandb/run-20230711_182238-06r7b4m3/files/wandb-metadata.json

ADDED

|

@@ -0,0 +1,431 @@

+{
+    "os": "Linux-5.15.0-52-generic-x86_64-with-glibc2.29",
+    "python": "3.8.10",
+    "heartbeatAt": "2023-07-11T22:22:40.405929",
+    "startedAt": "2023-07-11T22:22:38.515893",
+    "docker": null,
+    "cuda": null,
+    "args": [
+        "--pretrained_model_name_or_path=runwayml/stable-diffusion-v1-5",
+        "--train_data_dir=/l/vision/v5/sragas/easel_ai/thumbs_up_plain_dataset/",
+        "--resolution=512",
+        "--center_crop",
+        "--random_flip",
+        "--train_batch_size=2",
+        "--gradient_accumulation_steps=4",
+        "--num_train_epochs=300",
+        "--learning_rate=1e-5",
+        "--max_grad_norm=1",
+        "--lr_scheduler=cosine",
+        "--lr_warmup_steps=500",
+        "--output_dir=/l/vision/v5/sragas/easel_ai/models_plain/",
+        "--checkpointing_steps=500",
+        "--report_to=wandb",
+        "--validation_prompt=tom cruise thumbs up",
+        "--seed=15",
+        "--push_to_hub",
+        "--hub_model_id=person-thumbs-up-plain-lora"
+    ],
+    "state": "running",
+    "program": "train_text_to_image_lora.py",
+    "codePath": "train_text_to_image_lora.py",
+    "host": "snorlax",
+    "username": "sragas",
+    "executable": "/nfs/blitzle/home/data/vision5/sragas/easel_venv/bin/python3",
+    "cpu_count": 36,
+    "cpu_count_logical": 72,
+    "cpu_freq": {
+        "current": 1158.2238333333332,
+        "min": 1000.0,
+        "max": 3900.0
+    },

| 42 |

+

"cpu_freq_per_core": [

|

| 43 |

+

{

|

| 44 |

+

"current": 1000.0,

|

| 45 |

+

"min": 1000.0,

|

| 46 |

+

"max": 3900.0

|

| 47 |

+

},

|

| 48 |

+

{

|

| 49 |

+

"current": 1000.0,

|

| 50 |

+

"min": 1000.0,

|

| 51 |

+

"max": 3900.0

|

| 52 |

+

},

|

| 53 |

+

{

|

| 54 |

+

"current": 1000.0,

|

| 55 |

+

"min": 1000.0,

|

| 56 |

+

"max": 3900.0

|

| 57 |

+

},

|

| 58 |

+

{

|

| 59 |

+

"current": 1000.0,

|

| 60 |

+

"min": 1000.0,

|

| 61 |

+

"max": 3900.0

|

| 62 |

+

},

|

| 63 |

+

{

|

| 64 |

+

"current": 2700.0,

|

| 65 |

+

"min": 1000.0,

|

| 66 |

+

"max": 3900.0

|

| 67 |

+

},

|

| 68 |

+

{

|

| 69 |

+

"current": 1000.0,

|

| 70 |

+

"min": 1000.0,

|

| 71 |

+

"max": 3900.0

|

| 72 |

+

},

|

| 73 |

+

{

|

| 74 |

+

"current": 1000.0,

|

| 75 |

+

"min": 1000.0,

|

| 76 |

+

"max": 3900.0

|

| 77 |

+

},

|

| 78 |

+

{

|

| 79 |

+

"current": 1100.0,

|

| 80 |

+

"min": 1000.0,

|

| 81 |

+

"max": 3900.0

|

| 82 |

+

},

|

| 83 |

+

{

|

| 84 |

+

"current": 1000.0,

|

| 85 |

+

"min": 1000.0,

|

| 86 |

+

"max": 3900.0

|

| 87 |

+

},

|

| 88 |

+

{

|

| 89 |

+

"current": 1000.0,

|

| 90 |

+

"min": 1000.0,

|

| 91 |

+

"max": 3900.0

|

| 92 |

+

},

|

| 93 |

+

{

|

| 94 |

+

"current": 1000.0,

|

| 95 |

+

"min": 1000.0,

|

| 96 |

+

"max": 3900.0

|

| 97 |

+

},

|

| 98 |

+

{

|

| 99 |

+

"current": 1000.0,

|

| 100 |

+

"min": 1000.0,

|

| 101 |

+

"max": 3900.0

|

| 102 |

+

},

|

| 103 |

+

{

|

| 104 |

+

"current": 1000.0,

|

| 105 |

+

"min": 1000.0,

|

| 106 |

+

"max": 3900.0

|

| 107 |

+

},

|

| 108 |

+

{

|

| 109 |

+

"current": 1000.0,

|

| 110 |

+

"min": 1000.0,

|

| 111 |

+

"max": 3900.0

|

| 112 |

+

},

|

| 113 |

+

{

|

| 114 |

+

"current": 1000.0,

|

| 115 |

+

"min": 1000.0,

|

| 116 |

+

"max": 3900.0

|

| 117 |

+

},

|

| 118 |

+

{

|

| 119 |

+

"current": 1000.0,

|

| 120 |

+

"min": 1000.0,

|

| 121 |

+

"max": 3900.0

|

| 122 |

+

},

|

| 123 |

+

{

|

| 124 |

+

"current": 1000.0,

|

| 125 |

+

"min": 1000.0,

|

| 126 |

+

"max": 3900.0

|

| 127 |

+

},

|

| 128 |

+

{

|

| 129 |

+

"current": 1000.0,

|

| 130 |

+

"min": 1000.0,

|

| 131 |

+

"max": 3900.0

|

| 132 |

+

},

|

| 133 |

+

{

|

| 134 |

+

"current": 3614.641,

|

| 135 |

+

"min": 1000.0,

|

| 136 |

+

"max": 3900.0

|

| 137 |

+

},

|

| 138 |

+

{

|

| 139 |

+

"current": 1000.0,

|

| 140 |

+

"min": 1000.0,

|

| 141 |

+

"max": 3900.0

|

| 142 |

+

},

|

| 143 |

+

{

|

| 144 |

+

"current": 1000.0,

|

| 145 |

+

"min": 1000.0,

|

| 146 |

+

"max": 3900.0

|

| 147 |

+

},

|

| 148 |

+

{

|

| 149 |

+

"current": 2000.0,

|

| 150 |

+

"min": 1000.0,

|

| 151 |

+

"max": 3900.0

|

| 152 |

+

},

|

| 153 |

+

{

|

| 154 |

+

"current": 1100.0,

|

| 155 |

+

"min": 1000.0,

|

| 156 |

+

"max": 3900.0

|

| 157 |

+

},

|

| 158 |

+

{

|

| 159 |

+

"current": 1000.0,

|

| 160 |

+

"min": 1000.0,

|

| 161 |

+

"max": 3900.0

|

| 162 |

+

},

|

| 163 |

+

{

|

| 164 |

+

"current": 1100.0,

|

| 165 |

+

"min": 1000.0,

|

| 166 |

+

"max": 3900.0

|

| 167 |

+

},

|

| 168 |

+

{

|

| 169 |

+

"current": 2500.0,

|

| 170 |

+

"min": 1000.0,

|

| 171 |

+

"max": 3900.0

|

| 172 |

+

},

|

| 173 |

+

{

|

| 174 |

+

"current": 1000.0,

|

| 175 |

+

"min": 1000.0,

|

| 176 |

+

"max": 3900.0

|

| 177 |

+

},

|

| 178 |

+

{

|

| 179 |

+

"current": 1000.0,

|

| 180 |

+

"min": 1000.0,

|

| 181 |

+

"max": 3900.0

|

| 182 |

+

},

|

| 183 |

+

{

|

| 184 |

+

"current": 1000.0,

|

| 185 |

+

"min": 1000.0,

|

| 186 |

+

"max": 3900.0

|

| 187 |

+

},

|

| 188 |

+

{

|

| 189 |

+

"current": 1000.0,

|

| 190 |

+

"min": 1000.0,

|

| 191 |

+

"max": 3900.0

|

| 192 |

+

},

|

| 193 |

+

{

|

| 194 |

+

"current": 1000.0,

|

| 195 |

+

"min": 1000.0,

|

| 196 |

+

"max": 3900.0

|

| 197 |

+

},

|

| 198 |

+

{

|

| 199 |

+

"current": 1000.0,

|

| 200 |

+

"min": 1000.0,

|

| 201 |

+

"max": 3900.0

|

| 202 |

+

},

|

| 203 |

+

{

|

| 204 |

+

"current": 1000.0,

|

| 205 |

+

"min": 1000.0,

|

| 206 |

+

"max": 3900.0

|

| 207 |

+

},

|

| 208 |

+

{

|

| 209 |

+

"current": 2043.382,

|

| 210 |

+

"min": 1000.0,

|

| 211 |

+

"max": 3900.0

|

| 212 |

+

},

|

| 213 |

+

{

|

| 214 |

+

"current": 1000.0,

|

| 215 |

+

"min": 1000.0,

|

| 216 |

+

"max": 3900.0

|

| 217 |

+

},

|

| 218 |

+

{

|

| 219 |

+

"current": 1000.0,

|

| 220 |

+

"min": 1000.0,

|

| 221 |

+

"max": 3900.0

|

| 222 |

+

},

|

| 223 |

+

{

|

| 224 |

+

"current": 1000.0,

|

| 225 |

+

"min": 1000.0,

|

| 226 |

+

"max": 3900.0

|

| 227 |

+

},

|

| 228 |

+

{

|

| 229 |

+

"current": 1000.0,

|

| 230 |

+

"min": 1000.0,

|

| 231 |

+

"max": 3900.0

|

| 232 |

+

},

|

| 233 |

+

{

|

| 234 |

+

"current": 1000.0,

|

| 235 |

+

"min": 1000.0,

|

| 236 |

+

"max": 3900.0

|

| 237 |

+

},

|

| 238 |

+

{

|

| 239 |

+

"current": 1000.0,

|

| 240 |

+

"min": 1000.0,

|

| 241 |

+

"max": 3900.0

|

| 242 |

+

},

|

| 243 |

+

{

|

| 244 |

+

"current": 1000.0,

|

| 245 |

+

"min": 1000.0,

|

| 246 |

+

"max": 3900.0

|

| 247 |

+

},

|

| 248 |

+

{

|

| 249 |

+

"current": 1000.0,

|

| 250 |

+

"min": 1000.0,

|

| 251 |

+

"max": 3900.0

|

| 252 |

+

},

|

| 253 |

+

{

|

| 254 |

+

"current": 1000.0,

|

| 255 |

+

"min": 1000.0,

|

| 256 |

+

"max": 3900.0

|

| 257 |

+

},

|

| 258 |

+

{

|

| 259 |

+

"current": 1000.0,

|

| 260 |

+

"min": 1000.0,

|

| 261 |

+

"max": 3900.0

|

| 262 |

+

},

|

| 263 |

+

{

|

| 264 |

+

"current": 1000.0,

|

| 265 |

+

"min": 1000.0,

|

| 266 |

+

"max": 3900.0

|

| 267 |

+

},

|

| 268 |

+

{

|

| 269 |

+

"current": 1100.0,

|

| 270 |

+

"min": 1000.0,

|

| 271 |

+

"max": 3900.0

|

| 272 |

+

},

|

| 273 |

+

{

|

| 274 |

+

"current": 1000.0,

|

| 275 |

+

"min": 1000.0,

|

| 276 |

+

"max": 3900.0

|

| 277 |

+

},

|

| 278 |

+

{

|

| 279 |

+

"current": 1100.0,

|

| 280 |

+

"min": 1000.0,

|

| 281 |

+

"max": 3900.0

|

| 282 |

+

},

|

| 283 |

+

{

|

| 284 |

+

"current": 2200.0,

|

| 285 |

+

"min": 1000.0,

|

| 286 |

+

"max": 3900.0

|

| 287 |

+

},

|

| 288 |

+

{

|

| 289 |

+

"current": 1000.0,

|

| 290 |

+

"min": 1000.0,

|

| 291 |

+

"max": 3900.0

|

| 292 |

+

},

|

| 293 |

+

{

|

| 294 |

+

"current": 1000.0,

|

| 295 |

+

"min": 1000.0,

|

| 296 |

+

"max": 3900.0

|

| 297 |

+

},

|

| 298 |

+

{

|

| 299 |

+

"current": 1000.0,

|

| 300 |

+

"min": 1000.0,

|

| 301 |

+

"max": 3900.0

|

| 302 |

+

},

|

| 303 |

+

{

|

| 304 |

+

"current": 1000.0,

|

| 305 |

+

"min": 1000.0,

|

| 306 |

+

"max": 3900.0

|

| 307 |

+

},

|

| 308 |

+

{

|

| 309 |

+

"current": 1000.0,

|

| 310 |

+

"min": 1000.0,

|

| 311 |

+

"max": 3900.0

|

| 312 |

+

},

|

| 313 |

+

{

|

| 314 |

+

"current": 1000.0,

|

| 315 |

+

"min": 1000.0,

|

| 316 |

+

"max": 3900.0

|

| 317 |

+

},

|

| 318 |

+

{

|

| 319 |

+

"current": 1000.0,

|

| 320 |

+

"min": 1000.0,

|

| 321 |

+

"max": 3900.0

|

| 322 |

+

},

|

| 323 |

+

{

|

| 324 |

+

"current": 1000.0,

|

| 325 |

+

"min": 1000.0,

|

| 326 |

+

"max": 3900.0

|

| 327 |

+

},

|

| 328 |

+

{

|

| 329 |

+

"current": 1000.0,

|

| 330 |

+

"min": 1000.0,

|

| 331 |

+

"max": 3900.0

|

| 332 |

+

},

|

| 333 |

+

{

|

| 334 |

+

"current": 1000.0,

|

| 335 |

+

"min": 1000.0,

|

| 336 |

+

"max": 3900.0

|

| 337 |

+

},

|

| 338 |

+

{

|

| 339 |

+

"current": 1000.0,

|

| 340 |

+

"min": 1000.0,

|

| 341 |

+

"max": 3900.0

|

| 342 |

+

},

|

| 343 |

+

{

|

| 344 |

+

"current": 1000.0,

|

| 345 |

+

"min": 1000.0,

|

| 346 |

+

"max": 3900.0

|

| 347 |

+

},

|

| 348 |

+

{

|

| 349 |

+

"current": 1000.0,

|

| 350 |

+

"min": 1000.0,

|

| 351 |

+

"max": 3900.0

|

| 352 |

+

},

|

| 353 |

+

{

|

| 354 |

+

"current": 1000.0,

|

| 355 |

+

"min": 1000.0,

|

| 356 |

+

"max": 3900.0

|

| 357 |

+

},

|

| 358 |

+

{

|

| 359 |

+

"current": 1000.0,

|

| 360 |

+

"min": 1000.0,

|

| 361 |

+

"max": 3900.0

|

| 362 |

+

},

|

| 363 |

+

{

|

| 364 |

+

"current": 1000.0,

|

| 365 |

+

"min": 1000.0,

|

| 366 |

+

"max": 3900.0

|

| 367 |

+

},

|

| 368 |

+

{

|

| 369 |

+

"current": 1000.0,

|

| 370 |

+

"min": 1000.0,

|

| 371 |

+

"max": 3900.0

|

| 372 |

+

},

|

| 373 |

+

{

|

| 374 |

+

"current": 1000.0,

|

| 375 |

+

"min": 1000.0,

|

| 376 |

+

"max": 3900.0

|

| 377 |

+

},

|

| 378 |

+

{

|

| 379 |

+

"current": 1000.0,

|

| 380 |

+

"min": 1000.0,

|

| 381 |

+

"max": 3900.0

|

| 382 |

+

},

|

| 383 |

+

{

|

| 384 |

+

"current": 2400.0,

|

| 385 |

+

"min": 1000.0,

|

| 386 |

+

"max": 3900.0

|

| 387 |

+

},

|

| 388 |

+

{

|

| 389 |

+

"current": 1000.0,

|

| 390 |

+

"min": 1000.0,

|

| 391 |

+

"max": 3900.0

|

| 392 |

+

},

|

| 393 |

+

{

|

| 394 |

+

"current": 1000.0,

|

| 395 |

+

"min": 1000.0,

|

| 396 |

+

"max": 3900.0

|

| 397 |

+

},

|

| 398 |

+

{

|

| 399 |

+

"current": 3700.0,

|

| 400 |

+

"min": 1000.0,

|

| 401 |

+

"max": 3900.0

|

| 402 |

+

}

|

+    ],
+    "disk": {
+        "total": 490.5871887207031,
+        "used": 102.94281387329102
+    },
+    "gpu": "Quadro RTX 6000",
+    "gpu_count": 4,
+    "gpu_devices": [
+        {

| 412 |

+