DeepSearch Using Visual RAG in Agentic Frameworks 🔎

Today, we're exploring how visual retrieval methods—such as ColPali—can significantly enhance retrieval-augmented generation (RAG) systems, particularly when integrated within agentic environments. These advancements improve retrieval quality, though at the cost of increased test-time compute.

Our tool is available on HuggingFace!

Understanding RAG

Retrieval-Augmented Generation (RAG) enhances the capabilities of large language models (LLMs) by retrieving relevant external information to support the generation process. Traditional RAG systems typically:

- Retrieve relevant context from a knowledge corpus based on a user's query.

- Prepend this context to the original query.

- Forward the enriched query to an LLM for generating the response. Here's an illustration of a standard RAG pipeline:

The Rise of Visual RAG

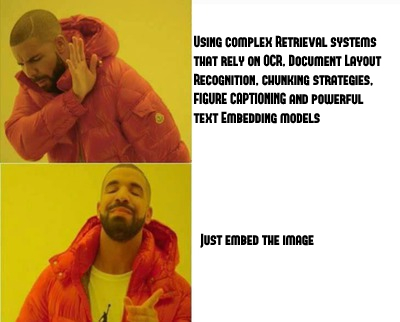

An exciting extension of RAG is Visual RAG, which is particularly beneficial in document QA scenarios. Traditional document QA methods often rely on complex pipelines involving Optical Character Recognition (OCR) and Document Layout Detection. However, the recent paper ColPali: Efficient Document Retrieval with Vision Language Models demonstrates how retrievers built on Vision-Language Models (VLMs), such as ColPali, can streamline this process by directly embedding screenshots of document pages, thus eliminating the need for text extraction pipelines. This leads to both enhanced computational efficiency and better overall retrieval performance.
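ColPali scores a page against a query with ColBERT-style late interaction: the query and the page are each represented by several vectors (one per query token, one per image patch), and the score is the sum, over query vectors, of the best dot product with any page vector. The sketch below illustrates that scoring rule in plain Python on made-up 2-dimensional vectors. The function name `maxsim_score` and the toy data are assumptions for illustration; the real model relies on its own batched tensor implementation.

```python
from typing import List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    """Plain dot product between two equally sized vectors."""
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vecs: List[Vector], page_vecs: List[Vector]) -> float:
    """Late-interaction score: for each query vector, keep its best match
    among the page vectors, then sum those best matches."""
    return sum(max(dot(q, p) for p in page_vecs) for q in query_vecs)


# Toy data (hypothetical): 2 query-token vectors, two pages with 3 patch vectors each.
query = [(1.0, 0.0), (0.0, 1.0)]
page_a = [(1.0, 0.0), (0.0, 1.0), (0.5, 0.5)]  # has a good match for both query vectors
page_b = [(1.0, 0.0), (0.5, 0.0), (0.0, 0.0)]  # only matches the first query vector

score_a = maxsim_score(query, page_a)
score_b = maxsim_score(query, page_b)
print(score_a, score_b)
```

Because each query vector looks for its own best patch, a page is rewarded for covering every part of the query, wherever on the page the matching content sits.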

Limitations of Current RAG Systems

Despite these advancements, traditional RAG (visual or textual) systems still face notable limitations:

- Query Sensitivity: Systems are overly sensitive to query phrasing, potentially missing relevant information if the query style differs from corpus content.

- Single-Hop Retrieval: Typical RAG systems perform only one retrieval step, limiting their ability to handle complex queries requiring information from multiple document sections (e.g., referencing multiple tables or scattered definitions).

Previous attempts at addressing these challenges have only provided marginal improvements.

Enter Agentic RAG

A promising solution to these limitations involves integrating retrievers within agentic frameworks.

In agentic environments, RAG systems gain significant flexibility:

- Interactive Query Processing: Agents dynamically reformulate queries into multiple sub-queries.

- Iterative Retrieval: Agents engage in multiple retrieval rounds, progressively synthesizing information until a comprehensive and satisfactory response is achieved.

- External Tool Use: Agents can use external tools such as web search, and can also generate and run code, enabling them to perform mathematical operations, produce plots, and more.

This iterative, agent-driven approach can yield richer, deeper, and more contextually accurate responses, significantly enhancing Visual RAG systems built on retrievers like ColPali.
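To make the first two points above concrete, here is a toy sketch of an agent-style retrieval loop. Everything in it is hypothetical: `toy_retrieve` is a keyword-overlap stand-in for a real retriever, `decompose` is a hard-coded stand-in for an LLM that reformulates queries, and the corpus is invented. It only illustrates the control flow, not how smolagents implements it.

```python
from typing import Dict, List

# Hypothetical mini-corpus: page id -> page text.
CORPUS: Dict[str, str] = {
    "p1": "revenue in 2023 was 12 million euros",
    "p2": "revenue in 2022 was 10 million euros",
    "p3": "the company was founded in 1999",
}


def toy_retrieve(query: str, k: int = 1) -> List[str]:
    """Rank pages by the number of words they share with the query."""
    words = set(query.lower().split())
    ranked = sorted(
        CORPUS,
        key=lambda pid: len(words & set(CORPUS[pid].split())),
        reverse=True,
    )
    return ranked[:k]


def decompose(query: str) -> List[str]:
    """Stand-in for an LLM call that splits a query into sub-queries."""
    if "growth" in query:
        return ["revenue in 2023", "revenue in 2022"]
    return [query]


def agentic_retrieve(query: str, max_rounds: int = 3) -> List[str]:
    """Run one retrieval round per sub-query, up to max_rounds rounds."""
    collected: List[str] = []
    for sub_query in decompose(query)[:max_rounds]:
        for page_id in toy_retrieve(sub_query):
            if page_id not in collected:
                collected.append(page_id)
    return collected


single_hop = toy_retrieve("revenue growth between 2022 and 2023")
multi_hop = agentic_retrieve("revenue growth between 2022 and 2023")
print(single_hop, multi_hop)
```

With a single retrieval step and k=1, only one of the two pages needed to compute the growth is returned; the loop over sub-queries collects both.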

Designing a Visual RAG agent

Let’s construct a simple Visual RAG Agent step-by-step by using smolagents.

Agents operate effectively by utilizing specialized tools tailored to their objectives. To showcase this, we build a dedicated Visual RAG Tool.

Concretely, we build our tool by creating a custom class inheriting from smolagents.Tool.

First we define the class and override the setup method, used to prepare the tool before use.

    from smolagents import Tool


    class VisualRAGTool(Tool):
        name = "visual_rag"
        description = """Performs a RAG query on your internal PDF documents and returns the generated text response."""
        inputs = {...}
        output_type = "string"

        def _init_models(self, model_name: str) -> None:
            import torch
            from colpali_engine.models import ColQwen2, ColQwen2Processor

            self.device = "cuda" if torch.cuda.is_available() else "cpu"  # or 'mps' for Apple silicon

            # Init the model and processor
            self.model = ColQwen2.from_pretrained(
                model_name,
                torch_dtype=torch.bfloat16,
                device_map="auto",
                attn_implementation="flash_attention_2"
            ).eval()
            self.processor = ColQwen2Processor.from_pretrained(model_name)

        def setup(self):
            """
            Overwrite this method here for any operation that is expensive and needs to be executed before you start using your tool. Such as loading a big model.
            """
            # Init the models
            self._init_models(self.model_name)

            # Initialize the DBs
            self.embds = []
            self.pages = []

            self.is_initialized = True

Next, we define our indexing method by creating a function, index, which populates the attributes self.pages and self.embds.

        def index(self, files: list, contextualize: bool = True, api_key: str = None) -> int:
            """Indexes the uploaded files."""
            if not self.is_initialized:
                self.setup()

            # Convert files to images and extract metadata
            pgs = self.preprocess(files, contextualize=contextualize, api_key=api_key or self.api_key)

            # Embed the images
            embds = self.compute_embeddings(pgs)

            # Extend the pages
            self.pages.extend(pgs)
            self.embds.extend(embds)

            return len(embds)

Here, preprocess converts the files to the type Page, which represents a document page image along with its metadata. The pages are then embedded, and both the pages and their embeddings are stored in the attributes.

⚠️ Here we use a very basic strategy. Indexing with a vector database instead of plain in-memory lists could improve the performance of the tool!
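For intuition, this basic strategy amounts to keeping pages and embeddings in two parallel lists and scanning all of them at query time. The sketch below shows such a linear scan with a hypothetical `top_k_pages` helper and toy single-vector embeddings. These are assumptions for illustration: the actual tool stores ColQwen2 multi-vector embeddings, and its `retrieve` method is not shown in this post.

```python
import heapq
from typing import List, Sequence, Tuple


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    """Plain dot product between two equally sized vectors."""
    return sum(x * y for x, y in zip(a, b))


def top_k_pages(
    query_emb: Sequence[float],
    pages: List[str],
    embds: List[Sequence[float]],
    k: int = 1,
) -> List[Tuple[float, str]]:
    """Score every stored embedding against the query and keep the k best."""
    scored = ((dot(query_emb, emb), page) for page, emb in zip(pages, embds))
    return heapq.nlargest(k, scored, key=lambda pair: pair[0])


# Toy index (hypothetical data): the two lists grow together, as in index().
pages = ["doc.pdf p.1", "doc.pdf p.2", "doc.pdf p.3"]
embds = [(0.9, 0.1), (0.2, 0.8), (0.6, 0.6)]

results = top_k_pages(query_emb=(0.0, 1.0), pages=pages, embds=embds, k=2)
print(results)
```

The scan computes one score per indexed page for every query, which is the cost that an approximate nearest-neighbour index in a vector database is designed to reduce on large corpora.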

Finally, we need to define the main function of the tool: forward.

In our case, forward is the function that executes the entire RAG pipeline and returns the LLM response.

        def forward(self, query: str, k: int = 1, api_key: str = None) -> str:
            assert isinstance(query, str), "Your search query must be a string"

            # Retrieve the top k documents and generate response. We return the second element of the tuple only (the RAG answer)
            return self.search(
                query=query,
                k=k,
                api_key=api_key
            )[1]

        def search(self, query: str, k: int = 1, api_key: str = None) -> tuple:
            """Searches for the most relevant pages based on the query."""
            # Retrieve the top k documents
            context = self.retrieve(query, k)

            # Generate response from GPT-4o-mini
            rag_answer = self.generate_answer(
                query=query,
                docs=context,
                api_key=api_key
            )

            return context, rag_answer.content

Note that we go through an intermediate function, search, which returns both the retrieved context and the answer. forward only returns the answer, because the Tool class allows a single output type!

Visual RAG Tool in Action

Let’s test our tool!

First, we index our entire document corpus using ColQwen2. Then, when the system receives a query at runtime, it uses ColQwen2 to fetch the top k most relevant document pages. These retrieved pages, along with some additional context, are then given to GPT-4o-mini, which generates a textual response directly answering the user's query. Special attention is given to making sure GPT-4o-mini accurately cites the exact pages and documents used in formulating each response.

Our tool is available on HuggingFace. Let's illustrate this with a practical example using a youth magazine published by the European Commission that outlines the science of climate change.

Here's how simple it is to use:

    from smolagents import load_tool

    # Load the visual RAG tool
    visual_rag_tool = load_tool(
        "vidore/visual-rag-tool",
        trust_remote_code=True,
        api_key="YOUR_OPENAI_KEY"
    )

    # Index the PDF document
    visual_rag_tool.index(["./report.pdf"])

    # Query the tool
    visual_rag_tool("What share of the world's water is suitable for human consumption?", k=3)

And here’s the informative answer we obtained:

Only 2.5% of water on Earth is fresh water. Of this fresh water, more than two-thirds is frozen in glaciers and polar ice caps, making it largely unavailable for consumption. Therefore, the share of water that is readily suitable for human consumption is minimal [1, p. 11].

Sources:

[1] climate_youth_magazine.pdf

As demonstrated, the Visual RAG Tool delivers precise answers and accurately cites its sources. Feel free to experiment further with different numbers of retrieved pages (k) for context.
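A citation such as `[1, p. 11]` requires the model to know which document and page each retrieved image comes from. The tool's `generate_answer` method is not shown in this post, so the following is only a hypothetical sketch of one way to prepare that information: number each distinct document, label every retrieved page with its document number and page number, and list the numbered sources at the end. The `RetrievedPage` class, the `build_citation_context` function, and the second document in the toy data are illustrative assumptions, not part of the tool.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class RetrievedPage:
    """Hypothetical metadata attached to a retrieved page image."""
    doc_name: str
    page_number: int


def build_citation_context(pages: List[RetrievedPage]) -> Tuple[List[str], str]:
    """Return one citation label per page, plus a numbered source list."""
    doc_ids: Dict[str, int] = {}
    labels: List[str] = []
    for page in pages:
        # Assign the next free number the first time a document is seen.
        doc_id = doc_ids.setdefault(page.doc_name, len(doc_ids) + 1)
        labels.append(f"[{doc_id}, p. {page.page_number}]")
    sources = "\n".join(f"[{i}] {name}" for name, i in doc_ids.items())
    return labels, sources


# Toy retrieval result (made-up pages for illustration).
retrieved = [
    RetrievedPage("climate_youth_magazine.pdf", 11),
    RetrievedPage("climate_youth_magazine.pdf", 4),
    RetrievedPage("other_report.pdf", 2),
]
labels, sources = build_citation_context(retrieved)
print(labels)
print(sources)
```

Each label can then be placed next to the corresponding page image in the prompt, so the model has an explicit identifier to quote.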

Integrating the tool in an agentic framework

DeepSearch is an emerging AI framework where agents perform complex, multi-step research tasks to thoroughly answer users’ queries. When an agent receives a query, it devises a structured plan, breaking down the question and strategically executing searches across various trusted sources and external tools.

A particularly compelling direction is integrating our Visual RAG tool into a DeepSearch framework. The primary advantage of pairing Visual RAG with orchestration agents that have more agency is that they can break down the user query and independently carry out every step needed to produce complex answers that respect all the stated constraints.

Here's how a DeepSearch setup enhanced with Visual RAG might look:

In this setup, the QA Agent acts as the orchestrator: it handles incoming queries and plans the best way to respond by interacting with our Visual RAG tool along with two specialized agents:

- Web Verifier Agent: Cross-references internally retrieved information with external web sources, assigning a confidence score to ensure accuracy and reliability.

- Formatter Agent: Enhances responses for clarity and readability, structuring them effectively for the user and potentially visualizing key data insights.

Building the QA Agent using smolagents

We implemented this orchestrator using the user-friendly framework smolagents. Here’s how to set it up practically by plugging in our previously created Visual RAG Tool:

    from smolagents import CodeAgent, DuckDuckGoSearchTool
    from smolagents import OpenAIServerModel

    gpt_4o_mini = OpenAIServerModel(
        model_id="gpt-4o-mini",
        api_base="https://api.openai.com/v1",
        api_key="YOUR_API_KEY",
    )

    # Define the verifier agent
    VERIFIER_AGENT = CodeAgent(
        tools=[
            DuckDuckGoSearchTool(),
        ],
        model=gpt_4o_mini,
        max_steps=6,
        verbosity_level=2,
        planning_interval=3,
        name="verifier",
        description=\
    """This agent takes the user query as an input, associated information and context found by the previous agent, and must output a response that confirms the veracity of the previous agent's response using web searches.
    The verifier should provide a confidence score (high, medium, low) and a textual explanation of the confidence score.
    If the verifier cannot find relevant information, it should state it as 'unverified'."""
    )

    # Define the formatter agent
    FORMATTER_AGENT = CodeAgent(
        tools=[
            DuckDuckGoSearchTool(),
        ],
        model=gpt_4o_mini,
        max_steps=3,
        verbosity_level=2,
        planning_interval=1,
        name="formatter",
        description=\
    """This agent takes the agent's response as an input and must output a formatted response that is easy to read and understand.
    The response should follow the user's specifications and be as clear as possible.
    The agent can ask for additional information if needed."""
    )

Using these, we can define our QA_AGENT where we use the visual_rag_tool created before.

    gpt_4o = OpenAIServerModel(
        model_id="gpt-4o",
        api_base="https://api.openai.com/v1",
        api_key="YOUR_API_KEY",
    )

    # Define the QA Agent
    QA_AGENT = CodeAgent(
        name="qa_agent",
        description=\
    """The agent takes a user query as input and is tasked with providing a detailed response to the query.
    It uses internal documents via the RAG Tool as its first source of information to answer the questions. It can use external sources (such as web searches) only as a fallback when no relevant information is found within the internal sources.
    Once the agent has gathered the information, it will assess the confidence level in the sources found using the `verifier` agent. This confidence score will be included in the final response to give the user an understanding of how reliable the provided information is.
    The final response should be detailed and cite the information sources. It should follow the format specified by the user using the `formatter` agent.""",
        tools=[
            visual_rag_tool,
            DuckDuckGoSearchTool(),
        ],
        managed_agents=[
            VERIFIER_AGENT,
            FORMATTER_AGENT
        ],
        model=gpt_4o,
        max_steps=10,
        verbosity_level=2,
        planning_interval=3,
        add_base_tools=True,
        additional_authorized_imports=["pandas", "seaborn", "numpy", "matplotlib", "PIL", "io"],
    )

Some comments here:

- `DuckDuckGoSearchTool` is the tool used to perform online searches. It is powered by the DuckDuckGo search engine.

- The `description` field of the `CodeAgent` class is crucial, as it describes the purpose of the agent in the ecosystem. It helps other agents when planning calls to it.

- `max_steps` limits the number of steps the agent can take, while `planning_interval` defines the interval at which the agent runs a planning step. Feel free to modify these to your needs.

- We select `gpt-4o` and `gpt-4o-mini` as our backbone LLMs for the agents, but feel free to use any model supported by smolagents.

We use the front-end predefined by smolagents to interact with the agent by simply running:

    from smolagents import GradioUI

    GradioUI(QA_AGENT).launch()

Now let's see how the agent performs. We use the same query we previously asked the RAG tool.

Output:

With references to internal and external sources, it has been determined that less than 1% of the Earth's total water is suitable for human consumption. This fraction is derived from the fact that only 2.5% of the Earth's water is fresh, and most of that is trapped in glaciers, polar ice caps, or inaccessible groundwater [1]. External sources corroborate this, suggesting that only about 0.5% to 1% of Earth's total water can be utilized for human consumption, which aligns with estimates from the U.S. Bureau of Reclamation and various WHO-supported guidelines on water safety and availability [2][3]. The conclusion is highly reliable with a high confidence score as verified by the verifier task.

Sources

[1] Climate Youth Magazine. "Water Availability: Facts and Impacts." PDF document, p. 11.

[2] U.S. Bureau of Reclamation. "Water Facts - Worldwide Water Supply." Accessed October 2023. https://www.usbr.gov/mp/arwec/water-facts-ww-water-sup.html

[3] World Water Reserve. "What is the Percentage of Drinkable Water on Earth?" Accessed October 2023. https://worldwaterreserve.com/percentage-of-drinkable-water-on-earth/

Conclusion

The share of the world's water that is suitable for human consumption is estimated to be between 0.5% and 1%. Given the robustness of the sources cited and the verification process applied, the answer holds a high degree of accuracy and credibility, confirming the relatively small fraction of water available for human use amidst the vast reserves present on Earth.

The answer is much more detailed than with the tool alone!

- The agent used a step to estimate the percentage of water suitable for consumption (<1%), given that 2.5% is fresh water, of which more than 2/3 is trapped in ice.

- The verifier provided external sources to validate the findings along with a confidence label.
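The estimation step can be reproduced by hand. Taking the two figures from the internal source (2.5% of water is fresh, and more than two-thirds of that is frozen), the unfrozen fresh water is at most one third of 2.5%. The variable names below are ours, and the result is an upper bound rather than an exact value:

```python
fresh_share = 0.025      # share of Earth's water that is fresh (from the source)
frozen_fraction = 2 / 3  # lower bound on the frozen part of fresh water

# Fresh water that is not frozen, as a share of all water on Earth.
unfrozen_fresh_share = fresh_share * (1 - frozen_fraction)
print(f"{unfrozen_fresh_share:.2%}")
```

This gives roughly 0.83%, which is consistent with the agent's "less than 1%" and with the 0.5% to 1% range reported by the external sources.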

Now let's take advantage of the agent's memory and its ability to create plots, and ask it to produce a plot based on the answer.

Here is the plot it generated:

The plot is accurate according to the internal source. However, it could be greatly improved with a more detailed and refined prompt. For example, the model found relevant data online but did not use it in the plot, focusing only on internal sources.

Final Thoughts

Integrating visual retrieval within an agent-driven DeepSearch framework with source verification holds significant potential. This approach addresses key limitations of traditional RAG—such as sensitivity to queries and single-hop retrieval—by using an agent as an orchestrator. The agent can automatically refine RAG queries, verify sources, and present results in a structured manner.

However, this approach remains far from perfect. Agentic systems tend to be slower, may get stuck in unnecessary loops, and can deviate from the original user intent. The orchestrator also appears to be highly sensitive to prompts: we observed that minor changes in the input can lead to significant variations in the responses. As underlying models improve, we expect these issues to be mitigated.

This blog post is a very quick introduction to visual agentic RAG, and it is only the beginning! We encourage you to test the tool yourself and create custom agents for your own use cases. Feel free to share your experiments with us. We're super excited to see what you build!

Contact

For professionals interested in deeper discussions and projects around Visual RAG, ColPali, or agentic systems, don't hesitate to reach out to contact@illuin.tech and get in touch with our team of experts at ILLUIN, who can help accelerate your AI efforts!