codefuse-admin

commited on

Commit

·

f9e02c9

1

Parent(s):

aa0340b

init model

Browse files- CodeFuse-VLM-14B-performance.png +0 -0

- CodeFuse-VLM-arch.png +0 -0

- README.md +57 -3

- config.json +41 -0

- configuration_qwen.py +69 -0

- generation_config.json +11 -0

- modeling_qwen.py +1417 -0

- pytorch_model-00001-of-00003.bin +3 -0

- pytorch_model-00002-of-00003.bin +3 -0

- pytorch_model-00003-of-00003.bin +3 -0

- pytorch_model.bin.index.json +330 -0

- qwen.tiktoken +0 -0

- qwen_generation_utils.py +420 -0

- special_tokens_map.json +3 -0

- tokenization_qwen.py +590 -0

- tokenizer_config.json +11 -0

- training_args.bin +3 -0

CodeFuse-VLM-14B-performance.png

ADDED

|

CodeFuse-VLM-arch.png

ADDED

|

README.md

CHANGED

|

@@ -1,3 +1,57 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

## CodeFuse-VLM

|

| 2 |

+

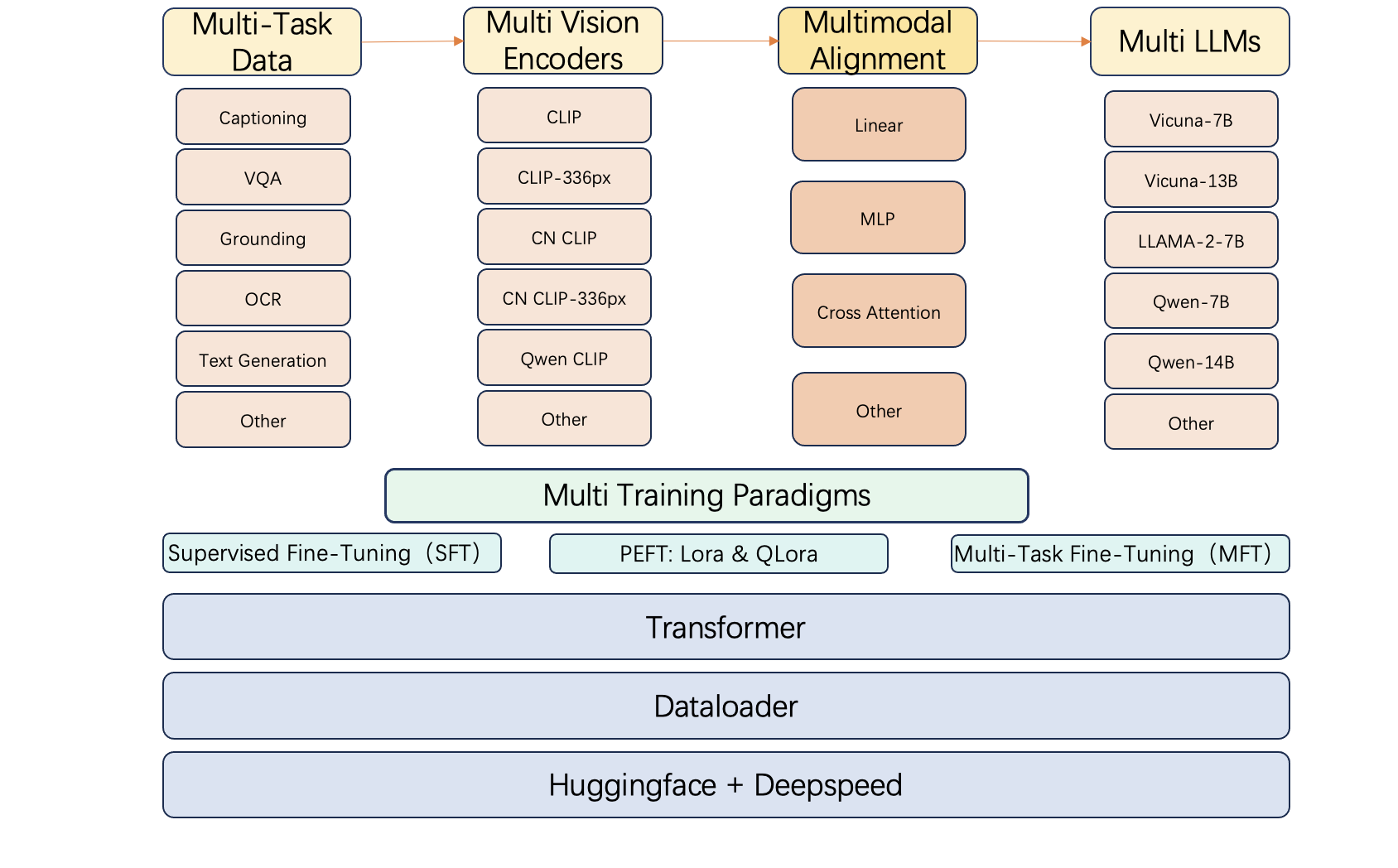

CodeFuse-VLM is a Multimodal LLM(MLLM) framework that provides users with multiple vision encoders, multimodal alignment adapters, and LLMs. Through CodeFuse-VLM framework, users are able to customize their own MLLM model to adapt their own tasks.

|

| 3 |

+

As more and more models are published on Huggingface community, there will be more open-source vision encoders and LLMs. Each of these models has their own specialties, e.g. Code-LLama is good at code-related tasks but has poor performance for Chinese tasks. Therefore, we built CodeFuse-VLM framework to support multiple vision encoders, multimodal alignment adapters, and LLMs to adapt different types of tasks.

|

| 4 |

+

<p align="center">

|

| 5 |

+

<img src="./CodeFuse-VLM-arch.png" width="50%" />

|

| 6 |

+

</p>

|

| 7 |

+

|

| 8 |

+

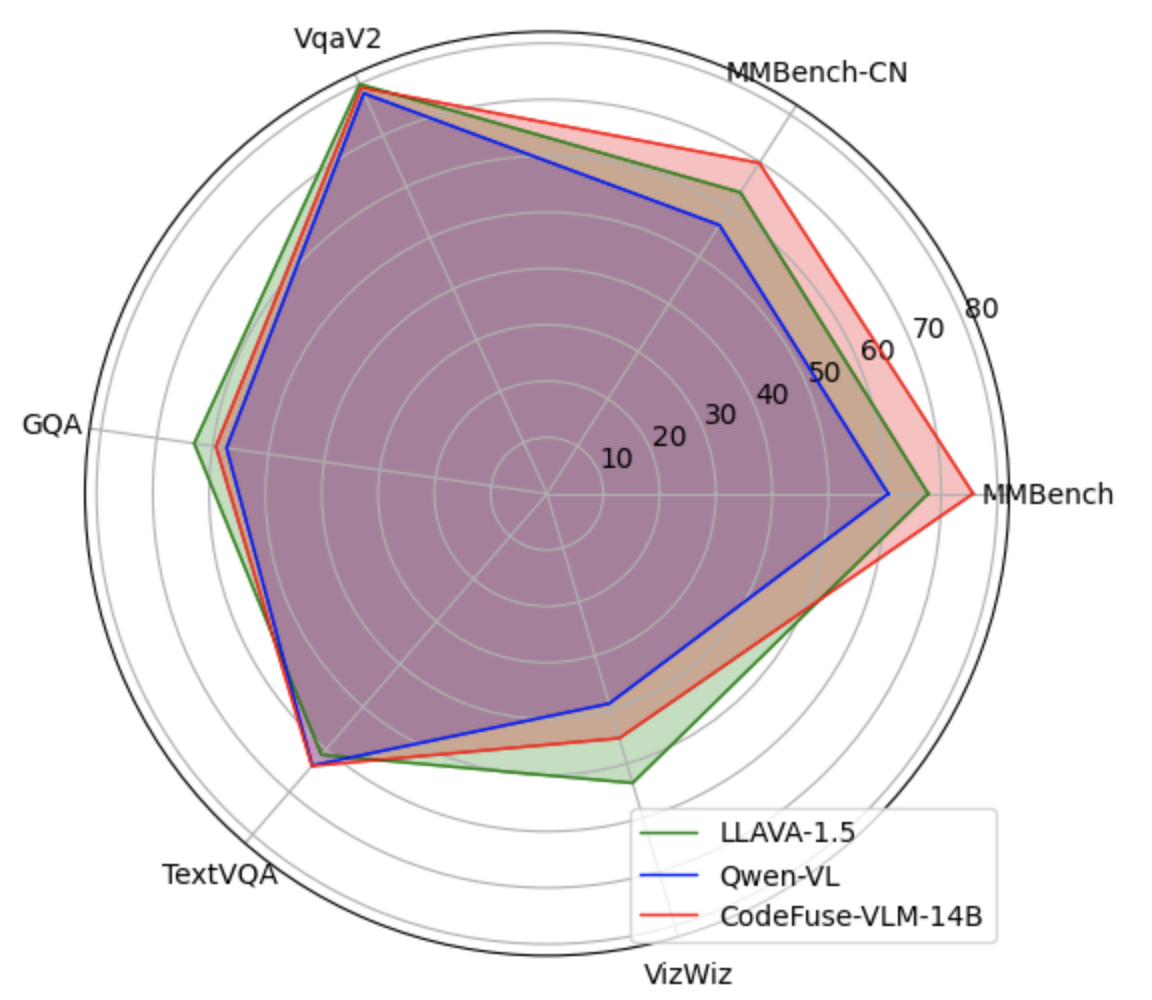

Under CodeFuse-VLM framework, we use cross attention multimodal adapter, Qwen-14B LLM, and Qwen-VL's vision encoder to train CodeFuse-VLM-14B model. On multiple benchmarks, our CodeFuse-VLM-14B shows superior performances over Qwen-VL and LLAVA-1.5.

|

| 9 |

+

|

| 10 |

+

<p align="center">

|

| 11 |

+

<img src="./CodeFuse-VLM-14B-performance.png" width="50%" />

|

| 12 |

+

</p>

|

| 13 |

+

|

| 14 |

+

Here is the table for different MLLM model's performance on benchmarks

|

| 15 |

+

Model | MMBench | MMBench-CN | VqaV2 | GQA | TextVQA | Vizwiz

|

| 16 |

+

| ------------- | ------------- | ------------- | ------------- | ------------- | ------------- | ------------- |

|

| 17 |

+

LLAVA-1.5 | 67.7 | 63.6 | 80.0 | 63.3 | 61.3 | 53.6

|

| 18 |

+

Qwen-VL | 60.6 | 56.7 | 78.2 | 57.5 | 63.8 | 38.9

|

| 19 |

+

CodeFuse-VLM-14B | 75.7 | 69.8 | 79.3 | 59.4 | 63.9 | 45.3

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

## Contents

|

| 23 |

+

- [Install](#Install)

|

| 24 |

+

- [Datasets](#Datasets)

|

| 25 |

+

- [Multimodal Alignment](#Multimodal-Alignment)

|

| 26 |

+

- [Visual Instruction Tuning](#Visual-Instruction-Tuning)

|

| 27 |

+

- [Evaluation](#Evaluation)

|

| 28 |

+

|

| 29 |

+

## Install

|

| 30 |

+

Please run sh init\_env.sh

|

| 31 |

+

|

| 32 |

+

## Datasets

|

| 33 |

+

Here's the table of datasets we used to train CodeFuse-VLM-14B:

|

| 34 |

+

|

| 35 |

+

Dataset | Task Type | Number of Samples

|

| 36 |

+

| ------------- | ------------- | ------------- |

|

| 37 |

+

synthdog-en | OCR | 800,000

|

| 38 |

+

synthdog-zh | OCR | 800,000

|

| 39 |

+

cc3m(downsampled)| Image Caption | 600,000

|

| 40 |

+

cc3m(downsampled)| Image Caption | 600,000

|

| 41 |

+

SBU | Image Caption | 850,000

|

| 42 |

+

Visual Genome VQA (Downsampled) | Visual Question Answer(VQA) | 500,000

|

| 43 |

+

Visual Genome Region descriptions (Downsampled) | Reference Grouding | 500,000

|

| 44 |

+

Visual Genome objects (Downsampled) | Grounded Caption | 500,000

|

| 45 |

+

OCR VQA (Downsampled) | OCR and VQA | 500,000

|

| 46 |

+

|

| 47 |

+

Please download these datasets on their own official websites.

|

| 48 |

+

|

| 49 |

+

## Multimodal Alignment

|

| 50 |

+

Please run sh scripts/pretrain.sh or sh scripts/pretrain\_multinode.sh

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

## Visual Instruction Tuning

|

| 54 |

+

Please run sh scripts/finetune.sh or sh scripts/finetune\_multinode.sh

|

| 55 |

+

|

| 56 |

+

## Evaluation

|

| 57 |

+

Please run python scrips in directory llava/eval/

|

config.json

ADDED

|

@@ -0,0 +1,41 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"_name_or_path": "/mnt/user/laiyan/salesgpt/model/Qwen-14B-Chat-VL/",

|

| 3 |

+

"architectures": [

|

| 4 |

+

"QWenLMHeadModel"

|

| 5 |

+

],

|

| 6 |

+

"attn_dropout_prob": 0.0,

|

| 7 |

+

"auto_map": {

|

| 8 |

+

"AutoConfig": "configuration_qwen.QWenConfig",

|

| 9 |

+

"AutoModelForCausalLM": "modeling_qwen.QWenLMHeadModel"

|

| 10 |

+

},

|

| 11 |

+

"bf16": true,

|

| 12 |

+

"emb_dropout_prob": 0.0,

|

| 13 |

+

"fp16": false,

|

| 14 |

+

"fp32": false,

|

| 15 |

+

"hidden_size": 5120,

|

| 16 |

+

"initializer_range": 0.02,

|

| 17 |

+

"intermediate_size": 27392,

|

| 18 |

+

"kv_channels": 128,

|

| 19 |

+

"layer_norm_epsilon": 1e-06,

|

| 20 |

+

"max_position_embeddings": 8192,

|

| 21 |

+

"model_type": "qwen",

|

| 22 |

+

"no_bias": true,

|

| 23 |

+

"num_attention_heads": 40,

|

| 24 |

+

"num_hidden_layers": 40,

|

| 25 |

+

"onnx_safe": null,

|

| 26 |

+

"rotary_emb_base": 10000,

|

| 27 |

+

"rotary_pct": 1.0,

|

| 28 |

+

"scale_attn_weights": true,

|

| 29 |

+

"seq_length": 2048,

|

| 30 |

+

"tie_word_embeddings": false,

|

| 31 |

+

"tokenizer_class": "QWenTokenizer",

|

| 32 |

+

"torch_dtype": "float16",

|

| 33 |

+

"transformers_version": "4.32.0",

|

| 34 |

+

"use_cache": true,

|

| 35 |

+

"use_cache_kernel": false,

|

| 36 |

+

"use_cache_quantization": false,

|

| 37 |

+

"use_dynamic_ntk": true,

|

| 38 |

+

"use_flash_attn": true,

|

| 39 |

+

"use_logn_attn": true,

|

| 40 |

+

"vocab_size": 152064

|

| 41 |

+

}

|

configuration_qwen.py

ADDED

|

@@ -0,0 +1,69 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright (c) Alibaba Cloud.

|

| 2 |

+

#

|

| 3 |

+

# This source code is licensed under the license found in the

|

| 4 |

+

# LICENSE file in the root directory of this source tree.

|

| 5 |

+

|

| 6 |

+

from transformers import PretrainedConfig

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

class QWenConfig(PretrainedConfig):

|

| 10 |

+

model_type = "qwen"

|

| 11 |

+

keys_to_ignore_at_inference = ["past_key_values"]

|

| 12 |

+

|

| 13 |

+

def __init__(

|

| 14 |

+

self,

|

| 15 |

+

vocab_size=151936,

|

| 16 |

+

hidden_size=4096,

|

| 17 |

+

num_hidden_layers=32,

|

| 18 |

+

num_attention_heads=32,

|

| 19 |

+

emb_dropout_prob=0.0,

|

| 20 |

+

attn_dropout_prob=0.0,

|

| 21 |

+

layer_norm_epsilon=1e-6,

|

| 22 |

+

initializer_range=0.02,

|

| 23 |

+

max_position_embeddings=8192,

|

| 24 |

+

scale_attn_weights=True,

|

| 25 |

+

use_cache=True,

|

| 26 |

+

bf16=False,

|

| 27 |

+

fp16=False,

|

| 28 |

+

fp32=False,

|

| 29 |

+

kv_channels=128,

|

| 30 |

+

rotary_pct=1.0,

|

| 31 |

+

rotary_emb_base=10000,

|

| 32 |

+

use_dynamic_ntk=True,

|

| 33 |

+

use_logn_attn=True,

|

| 34 |

+

use_flash_attn="auto",

|

| 35 |

+

intermediate_size=22016,

|

| 36 |

+

no_bias=True,

|

| 37 |

+

tie_word_embeddings=False,

|

| 38 |

+

use_cache_quantization=False,

|

| 39 |

+

use_cache_kernel=False,

|

| 40 |

+

**kwargs,

|

| 41 |

+

):

|

| 42 |

+

self.vocab_size = vocab_size

|

| 43 |

+

self.hidden_size = hidden_size

|

| 44 |

+

self.intermediate_size = intermediate_size

|

| 45 |

+

self.num_hidden_layers = num_hidden_layers

|

| 46 |

+

self.num_attention_heads = num_attention_heads

|

| 47 |

+

self.emb_dropout_prob = emb_dropout_prob

|

| 48 |

+

self.attn_dropout_prob = attn_dropout_prob

|

| 49 |

+

self.layer_norm_epsilon = layer_norm_epsilon

|

| 50 |

+

self.initializer_range = initializer_range

|

| 51 |

+

self.scale_attn_weights = scale_attn_weights

|

| 52 |

+

self.use_cache = use_cache

|

| 53 |

+

self.max_position_embeddings = max_position_embeddings

|

| 54 |

+

self.bf16 = bf16

|

| 55 |

+

self.fp16 = fp16

|

| 56 |

+

self.fp32 = fp32

|

| 57 |

+

self.kv_channels = kv_channels

|

| 58 |

+

self.rotary_pct = rotary_pct

|

| 59 |

+

self.rotary_emb_base = rotary_emb_base

|

| 60 |

+

self.use_dynamic_ntk = use_dynamic_ntk

|

| 61 |

+

self.use_logn_attn = use_logn_attn

|

| 62 |

+

self.use_flash_attn = use_flash_attn

|

| 63 |

+

self.no_bias = no_bias

|

| 64 |

+

self.use_cache_quantization=use_cache_quantization

|

| 65 |

+

self.use_cache_kernel=use_cache_kernel

|

| 66 |

+

super().__init__(

|

| 67 |

+

tie_word_embeddings=tie_word_embeddings,

|

| 68 |

+

**kwargs

|

| 69 |

+

)

|

generation_config.json

ADDED

|

@@ -0,0 +1,11 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"chat_format": "chatml",

|

| 3 |

+

"do_sample": true,

|

| 4 |

+

"eos_token_id": 151643,

|

| 5 |

+

"max_new_tokens": 512,

|

| 6 |

+

"max_window_size": 6144,

|

| 7 |

+

"pad_token_id": 151643,

|

| 8 |

+

"top_k": 0,

|

| 9 |

+

"top_p": 0.5,

|

| 10 |

+

"transformers_version": "4.32.0"

|

| 11 |

+

}

|

modeling_qwen.py

ADDED

|

@@ -0,0 +1,1417 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Copyright (c) Alibaba Cloud.

|

| 2 |

+

#

|

| 3 |

+

# This source code is licensed under the license found in the

|

| 4 |

+

# LICENSE file in the root directory of this source tree.

|

| 5 |

+

|

| 6 |

+

import importlib

|

| 7 |

+

import math

|

| 8 |

+

from typing import TYPE_CHECKING, Optional, Tuple, Union, Callable, List, Any, Generator

|

| 9 |

+

|

| 10 |

+

import torch

|

| 11 |

+

import torch.nn.functional as F

|

| 12 |

+

import torch.utils.checkpoint

|

| 13 |

+

from torch.cuda.amp import autocast

|

| 14 |

+

|

| 15 |

+

from torch.nn import CrossEntropyLoss

|

| 16 |

+

from transformers import PreTrainedTokenizer, GenerationConfig, StoppingCriteriaList

|

| 17 |

+

from transformers.generation.logits_process import LogitsProcessorList

|

| 18 |

+

|

| 19 |

+

if TYPE_CHECKING:

|

| 20 |

+

from transformers.generation.streamers import BaseStreamer

|

| 21 |

+

from transformers.generation.utils import GenerateOutput

|

| 22 |

+

from transformers.modeling_outputs import (

|

| 23 |

+

BaseModelOutputWithPast,

|

| 24 |

+

CausalLMOutputWithPast,

|

| 25 |

+

)

|

| 26 |

+

from transformers.modeling_utils import PreTrainedModel

|

| 27 |

+

from transformers.utils import logging

|

| 28 |

+

|

| 29 |

+

try:

|

| 30 |

+

from einops import rearrange

|

| 31 |

+

except ImportError:

|

| 32 |

+

rearrange = None

|

| 33 |

+

from torch import nn

|

| 34 |

+

|

| 35 |

+

try:

|

| 36 |

+

from kernels.cpp_kernels import cache_autogptq_cuda_256

|

| 37 |

+

except ImportError:

|

| 38 |

+

cache_autogptq_cuda_256 = None

|

| 39 |

+

|

| 40 |

+

SUPPORT_CUDA = torch.cuda.is_available()

|

| 41 |

+

SUPPORT_BF16 = SUPPORT_CUDA and torch.cuda.is_bf16_supported()

|

| 42 |

+

SUPPORT_FP16 = SUPPORT_CUDA and torch.cuda.get_device_capability(0)[0] >= 7

|

| 43 |

+

|

| 44 |

+

from .configuration_qwen import QWenConfig

|

| 45 |

+

from .qwen_generation_utils import (

|

| 46 |

+

HistoryType,

|

| 47 |

+

make_context,

|

| 48 |

+

decode_tokens,

|

| 49 |

+

get_stop_words_ids,

|

| 50 |

+

StopWordsLogitsProcessor,

|

| 51 |

+

)

|

| 52 |

+

|

| 53 |

+

|

| 54 |

+

logger = logging.get_logger(__name__)

|

| 55 |

+

|

| 56 |

+

_CHECKPOINT_FOR_DOC = "qwen"

|

| 57 |

+

_CONFIG_FOR_DOC = "QWenConfig"

|

| 58 |

+

|

| 59 |

+

QWen_PRETRAINED_MODEL_ARCHIVE_LIST = ["qwen-7b"]

|

| 60 |

+

|

| 61 |

+

_ERROR_BAD_CHAT_FORMAT = """\

|

| 62 |

+

We detect you are probably using the pretrained model (rather than chat model) for chatting, since the chat_format in generation_config is not "chatml".

|

| 63 |

+

If you are directly using the model downloaded from Huggingface, please make sure you are using our "Qwen/Qwen-7B-Chat" Huggingface model (rather than "Qwen/Qwen-7B") when you call model.chat().

|

| 64 |

+

我们检测到您可能在使用预训练模型(而非chat模型)进行多轮chat,因为您当前在generation_config指定的chat_format,并未设置为我们在对话中所支持的"chatml"格式。

|

| 65 |

+

如果您在直接使用我们从Huggingface提供的模型,请确保您在调用model.chat()时,使用的是"Qwen/Qwen-7B-Chat"模型(而非"Qwen/Qwen-7B"预训练模型)。

|

| 66 |

+

"""

|

| 67 |

+

|

| 68 |

+

_SENTINEL = object()

|

| 69 |

+

_ERROR_STREAM_IN_CHAT = """\

|

| 70 |

+

Pass argument `stream` to model.chat() is buggy, deprecated, and marked for removal. Please use model.chat_stream(...) instead of model.chat(..., stream=True).

|

| 71 |

+

向model.chat()传入参数stream的用法可能存在Bug,该用法已被废弃,将在未来被移除。请使用model.chat_stream(...)代替model.chat(..., stream=True)。

|

| 72 |

+

"""

|

| 73 |

+

|

| 74 |

+

_ERROR_INPUT_CPU_QUERY_WITH_FLASH_ATTN_ACTIVATED = """\

|

| 75 |

+

We detect you have activated flash attention support, but running model computation on CPU. Please make sure that your input data has been placed on GPU. If you actually want to run CPU computation, please following the readme and set device_map="cpu" to disable flash attention when loading the model (calling AutoModelForCausalLM.from_pretrained).

|

| 76 |

+

检测到您的模型已激活了flash attention支持,但正在执行CPU运算任务。如使用flash attention,请您确认模型输入已经传到GPU上。如果您确认要执行CPU运算,请您在载入模型(调用AutoModelForCausalLM.from_pretrained)时,按照readme说法,指定device_map="cpu"以禁用flash attention。

|

| 77 |

+

"""

|

| 78 |

+

|

| 79 |

+

apply_rotary_emb_func = None

|

| 80 |

+

rms_norm = None

|

| 81 |

+

flash_attn_unpadded_func = None

|

| 82 |

+

|

| 83 |

+

def _import_flash_attn():

|

| 84 |

+

global apply_rotary_emb_func, rms_norm, flash_attn_unpadded_func

|

| 85 |

+

try:

|

| 86 |

+

from flash_attn.layers.rotary import apply_rotary_emb_func as __apply_rotary_emb_func

|

| 87 |

+

apply_rotary_emb_func = __apply_rotary_emb_func

|

| 88 |

+

except ImportError:

|

| 89 |

+

logger.warn(

|

| 90 |

+

"Warning: import flash_attn rotary fail, please install FlashAttention rotary to get higher efficiency "

|

| 91 |

+

"https://github.com/Dao-AILab/flash-attention/tree/main/csrc/rotary"

|

| 92 |

+

)

|

| 93 |

+

|

| 94 |

+

try:

|

| 95 |

+

from flash_attn.ops.rms_norm import rms_norm as __rms_norm

|

| 96 |

+

rms_norm = __rms_norm

|

| 97 |

+

except ImportError:

|

| 98 |

+

logger.warn(

|

| 99 |

+

"Warning: import flash_attn rms_norm fail, please install FlashAttention layer_norm to get higher efficiency "

|

| 100 |

+

"https://github.com/Dao-AILab/flash-attention/tree/main/csrc/layer_norm"

|

| 101 |

+

)

|

| 102 |

+

|

| 103 |

+

try:

|

| 104 |

+

import flash_attn

|

| 105 |

+

if not hasattr(flash_attn, '__version__'):

|

| 106 |

+

from flash_attn.flash_attn_interface import flash_attn_unpadded_func as __flash_attn_unpadded_func

|

| 107 |

+

else:

|

| 108 |

+

if int(flash_attn.__version__.split(".")[0]) >= 2:

|

| 109 |

+

from flash_attn.flash_attn_interface import flash_attn_varlen_func as __flash_attn_unpadded_func

|

| 110 |

+

else:

|

| 111 |

+

from flash_attn.flash_attn_interface import flash_attn_unpadded_func as __flash_attn_unpadded_func

|

| 112 |

+

flash_attn_unpadded_func = __flash_attn_unpadded_func

|

| 113 |

+

except ImportError:

|

| 114 |

+

logger.warn(

|

| 115 |

+

"Warning: import flash_attn fail, please install FlashAttention to get higher efficiency "

|

| 116 |

+

"https://github.com/Dao-AILab/flash-attention"

|

| 117 |

+

)

|

| 118 |

+

|

| 119 |

+

def quantize_cache_v(fdata, bits, qmax, qmin):

|

| 120 |

+

# b, s, head, h-dim->b, head, s, h-dim

|

| 121 |

+

qtype = torch.uint8

|

| 122 |

+

device = fdata.device

|

| 123 |

+

shape = fdata.shape

|

| 124 |

+

|

| 125 |

+

fdata_cal = torch.flatten(fdata, 2)

|

| 126 |

+

fmax = torch.amax(fdata_cal, dim=-1, keepdim=True)

|

| 127 |

+

fmin = torch.amin(fdata_cal, dim=-1, keepdim=True)

|

| 128 |

+

# Compute params

|

| 129 |

+

if qmax.device != fmax.device:

|

| 130 |

+

qmax = qmax.to(device)

|

| 131 |

+

qmin = qmin.to(device)

|

| 132 |

+

scale = (fmax - fmin) / (qmax - qmin)

|

| 133 |

+

zero = qmin - fmin / scale

|

| 134 |

+

scale = scale.unsqueeze(-1).repeat(1,1,shape[2],1).contiguous()

|

| 135 |

+

zero = zero.unsqueeze(-1).repeat(1,1,shape[2],1).contiguous()

|

| 136 |

+

# Quantize

|

| 137 |

+

res_data = fdata / scale + zero

|

| 138 |

+

qdata = torch.clamp(res_data, qmin, qmax).to(qtype)

|

| 139 |

+

return qdata.contiguous(), scale, zero

|

| 140 |

+

|

| 141 |

+

def dequantize_cache_torch(qdata, scale, zero):

|

| 142 |

+

data = scale * (qdata - zero)

|

| 143 |

+

return data

|

| 144 |

+

|

| 145 |

+

class FlashSelfAttention(torch.nn.Module):

|

| 146 |

+

def __init__(

|

| 147 |

+

self,

|

| 148 |

+

causal=False,

|

| 149 |

+

softmax_scale=None,

|

| 150 |

+

attention_dropout=0.0,

|

| 151 |

+

):

|

| 152 |

+

super().__init__()

|

| 153 |

+

assert flash_attn_unpadded_func is not None, (

|

| 154 |

+

"Please install FlashAttention first, " "e.g., with pip install flash-attn"

|

| 155 |

+

)

|

| 156 |

+

assert (

|

| 157 |

+

rearrange is not None

|

| 158 |

+

), "Please install einops first, e.g., with pip install einops"

|

| 159 |

+

self.causal = causal

|

| 160 |

+

self.softmax_scale = softmax_scale

|

| 161 |

+

self.dropout_p = attention_dropout

|

| 162 |

+

|

| 163 |

+

def unpad_input(self, hidden_states, attention_mask):

|

| 164 |

+

valid_mask = attention_mask.squeeze(1).squeeze(1).eq(0)

|

| 165 |

+

seqlens_in_batch = valid_mask.sum(dim=-1, dtype=torch.int32)

|

| 166 |

+

indices = torch.nonzero(valid_mask.flatten(), as_tuple=False).flatten()

|

| 167 |

+

max_seqlen_in_batch = seqlens_in_batch.max().item()

|

| 168 |

+

cu_seqlens = F.pad(torch.cumsum(seqlens_in_batch, dim=0, dtype=torch.torch.int32), (1, 0))

|

| 169 |

+

hidden_states = hidden_states[indices]

|

| 170 |

+

return hidden_states, indices, cu_seqlens, max_seqlen_in_batch

|

| 171 |

+

|

| 172 |

+

def pad_input(self, hidden_states, indices, batch, seqlen):

|

| 173 |

+

output = torch.zeros(batch * seqlen, *hidden_states.shape[1:], device=hidden_states.device,

|

| 174 |

+

dtype=hidden_states.dtype)

|

| 175 |

+

output[indices] = hidden_states

|

| 176 |

+

return rearrange(output, '(b s) ... -> b s ...', b=batch)

|

| 177 |

+

|

| 178 |

+

def forward(self, q, k, v, attention_mask=None):

|

| 179 |

+

assert all((i.dtype in [torch.float16, torch.bfloat16] for i in (q, k, v)))

|

| 180 |

+

assert all((i.is_cuda for i in (q, k, v)))

|

| 181 |

+

batch_size, seqlen_q = q.shape[0], q.shape[1]

|

| 182 |

+

seqlen_k = k.shape[1]

|

| 183 |

+

|

| 184 |

+

q, k, v = [rearrange(x, "b s ... -> (b s) ...") for x in [q, k, v]]

|

| 185 |

+

cu_seqlens_q = torch.arange(

|

| 186 |

+

0,

|

| 187 |

+

(batch_size + 1) * seqlen_q,

|

| 188 |

+

step=seqlen_q,

|

| 189 |

+

dtype=torch.int32,

|

| 190 |

+

device=q.device,

|

| 191 |

+

)

|

| 192 |

+

|

| 193 |

+

if attention_mask is not None:

|

| 194 |

+

k, indices_k, cu_seqlens_k, seqlen_k = self.unpad_input(k, attention_mask)

|

| 195 |

+

v = v[indices_k]

|

| 196 |

+

if seqlen_q == seqlen_k:

|

| 197 |

+

q = q[indices_k]

|

| 198 |

+

cu_seqlens_q = cu_seqlens_k

|

| 199 |

+

else:

|

| 200 |

+

cu_seqlens_k = torch.arange(

|

| 201 |

+

0,

|

| 202 |

+

(batch_size + 1) * seqlen_k,

|

| 203 |

+

step=seqlen_k,

|

| 204 |

+

dtype=torch.int32,

|

| 205 |

+

device=q.device,

|

| 206 |

+

)

|

| 207 |

+

|

| 208 |

+

if self.training:

|

| 209 |

+

assert seqlen_k == seqlen_q

|

| 210 |

+

is_causal = self.causal

|

| 211 |

+

dropout_p = self.dropout_p

|

| 212 |

+

else:

|

| 213 |

+

is_causal = seqlen_q == seqlen_k

|

| 214 |

+

dropout_p = 0

|

| 215 |

+

|

| 216 |

+

output = flash_attn_unpadded_func(

|

| 217 |

+

q,

|

| 218 |

+

k,

|

| 219 |

+

v,

|

| 220 |

+

cu_seqlens_q,

|

| 221 |

+

cu_seqlens_k,

|

| 222 |

+

seqlen_q,

|

| 223 |

+

seqlen_k,

|

| 224 |

+

dropout_p,

|

| 225 |

+

softmax_scale=self.softmax_scale,

|

| 226 |

+

causal=is_causal,

|

| 227 |

+

)

|

| 228 |

+

if attention_mask is not None and seqlen_q == seqlen_k:

|

| 229 |

+

output = self.pad_input(output, indices_k, batch_size, seqlen_q)

|

| 230 |

+

else:

|

| 231 |

+

new_shape = (batch_size, output.shape[0] // batch_size) + output.shape[1:]

|

| 232 |

+

output = output.view(new_shape)

|

| 233 |

+

return output

|

| 234 |

+

|

| 235 |

+

|

| 236 |

+

class QWenAttention(nn.Module):

|

| 237 |

+

def __init__(self, config):

|

| 238 |

+

super().__init__()

|

| 239 |

+

|

| 240 |

+

self.register_buffer("masked_bias", torch.tensor(-1e4), persistent=False)

|

| 241 |

+

self.seq_length = config.seq_length

|

| 242 |

+

|

| 243 |

+

self.hidden_size = config.hidden_size

|

| 244 |

+

self.split_size = config.hidden_size

|

| 245 |

+

self.num_heads = config.num_attention_heads

|

| 246 |

+

self.head_dim = self.hidden_size // self.num_heads

|

| 247 |

+

|

| 248 |

+

self.use_flash_attn = config.use_flash_attn

|

| 249 |

+

self.scale_attn_weights = True

|

| 250 |

+

|

| 251 |

+

self.projection_size = config.kv_channels * config.num_attention_heads

|

| 252 |

+

|

| 253 |

+

assert self.projection_size % config.num_attention_heads == 0

|

| 254 |

+

self.hidden_size_per_attention_head = (

|

| 255 |

+

self.projection_size // config.num_attention_heads

|

| 256 |

+

)

|

| 257 |

+

|

| 258 |

+

self.c_attn = nn.Linear(config.hidden_size, 3 * self.projection_size)

|

| 259 |

+

|

| 260 |

+

self.c_proj = nn.Linear(

|

| 261 |

+

config.hidden_size, self.projection_size, bias=not config.no_bias

|

| 262 |

+

)

|

| 263 |

+

|

| 264 |

+

self.is_fp32 = not (config.bf16 or config.fp16)

|

| 265 |

+

if (

|

| 266 |

+

self.use_flash_attn

|

| 267 |

+

and flash_attn_unpadded_func is not None

|

| 268 |

+

and not self.is_fp32

|

| 269 |

+

):

|

| 270 |

+

self.core_attention_flash = FlashSelfAttention(

|

| 271 |

+

causal=True, attention_dropout=config.attn_dropout_prob

|

| 272 |

+

)

|

| 273 |

+

self.bf16 = config.bf16

|

| 274 |

+

|

| 275 |

+

self.use_dynamic_ntk = config.use_dynamic_ntk

|

| 276 |

+

self.use_logn_attn = config.use_logn_attn

|

| 277 |

+

|

| 278 |

+

logn_list = [

|

| 279 |

+

math.log(i, self.seq_length) if i > self.seq_length else 1

|

| 280 |

+

for i in range(1, 32768)

|

| 281 |

+

]

|

| 282 |

+

logn_tensor = torch.tensor(logn_list)[None, :, None, None]

|

| 283 |

+

self.register_buffer("logn_tensor", logn_tensor, persistent=False)

|

| 284 |

+

|

| 285 |

+

self.attn_dropout = nn.Dropout(config.attn_dropout_prob)

|

| 286 |

+

self.use_cache_quantization = config.use_cache_quantization if hasattr(config, 'use_cache_quantization') else False

|

| 287 |

+

self.use_cache_kernel = config.use_cache_kernel if hasattr(config,'use_cache_kernel') else False

|

| 288 |

+

cache_dtype = torch.float

|

| 289 |

+

if self.bf16:

|

| 290 |

+

cache_dtype=torch.bfloat16

|

| 291 |

+

elif config.fp16:

|

| 292 |

+

cache_dtype = torch.float16

|

| 293 |

+

self.cache_qmax = torch.tensor(torch.iinfo(torch.uint8).max, dtype=cache_dtype)

|

| 294 |

+

self.cache_qmin = torch.tensor(torch.iinfo(torch.uint8).min, dtype=cache_dtype)

|

| 295 |

+

|

| 296 |

+

def _attn(self, query, key, value, registered_causal_mask, attention_mask=None, head_mask=None):

|

| 297 |

+

device = query.device

|

| 298 |

+

if self.use_cache_quantization:

|

| 299 |

+

qk, qk_scale, qk_zero = key

|

| 300 |

+

if self.use_cache_kernel and cache_autogptq_cuda_256 is not None:

|

| 301 |

+

shape = query.shape[:-1] + (qk.shape[-2],)

|

| 302 |

+

attn_weights = torch.zeros(shape, dtype=torch.float16, device=device)

|

| 303 |

+

cache_autogptq_cuda_256.vecquant8matmul_batched_faster_old(

|

| 304 |

+

query.contiguous() if query.dtype == torch.float16 else query.to(torch.float16).contiguous(),

|

| 305 |

+

qk.transpose(-1, -2).contiguous(),

|

| 306 |

+

attn_weights,

|

| 307 |

+

qk_scale.contiguous() if qk_scale.dtype == torch.float16 else qk_scale.to(torch.float16).contiguous(),

|

| 308 |

+

qk_zero.contiguous()if qk_zero.dtype == torch.float16 else qk_zero.to(torch.float16).contiguous())

|

| 309 |

+

# attn_weights = attn_weights.to(query.dtype).contiguous()

|

| 310 |

+

else:

|

| 311 |

+

key = dequantize_cache_torch(qk, qk_scale, qk_zero)

|

| 312 |

+

attn_weights = torch.matmul(query, key.transpose(-1, -2))

|

| 313 |

+

else:

|

| 314 |

+

attn_weights = torch.matmul(query, key.transpose(-1, -2))

|

| 315 |

+

|

| 316 |

+

if self.scale_attn_weights:

|

| 317 |

+

if self.use_cache_quantization:

|

| 318 |

+

size_temp = value[0].size(-1)

|

| 319 |

+

else:

|

| 320 |

+

size_temp = value.size(-1)

|

| 321 |

+

attn_weights = attn_weights / torch.full(

|

| 322 |

+

[],

|

| 323 |

+

size_temp ** 0.5,

|

| 324 |

+

dtype=attn_weights.dtype,

|

| 325 |

+

device=attn_weights.device,

|

| 326 |

+

)

|

| 327 |

+

if self.use_cache_quantization:

|

| 328 |

+

query_length, key_length = query.size(-2), key[0].size(-2)

|

| 329 |

+

else:

|

| 330 |

+

query_length, key_length = query.size(-2), key.size(-2)

|

| 331 |

+

causal_mask = registered_causal_mask[

|

| 332 |

+

:, :, key_length - query_length : key_length, :key_length