Update README.md

README.md

|

@@ -28,6 +28,22 @@ configs:

|

|

| 28 |

- split: train

|

| 29 |

path: data/train-*

|

| 30 |

---

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 31 |

|

| 32 |

This code-related data from [Fineweb](https://huggingface.co/spaces/HuggingFaceFW/blogpost-fineweb-v1) was specifically used in [OpenCoder](https://huggingface.co/papers/2411.04905) pre-training.

|

| 33 |

We employ fastText in three iterative rounds to recall a final dataset of 55B code and math-related data.

|

|

@@ -35,12 +51,15 @@ You can find math-related data at [OpenCoder-LLM/fineweb-math-corpus](https://hu

|

|

| 35 |

|

| 36 |

*This work belongs to [INF](https://www.infly.cn/).*

|

| 37 |

|

| 38 |

-

|

|

|

|

|

|

|

|

|

|

| 39 |

```

|

| 40 |

@inproceedings{Huang2024OpenCoderTO,

|

| 41 |

-

title={OpenCoder: The Open Cookbook for Top-Tier Code Large Language Models},

|

| 42 |

-

author={Siming Huang and Tianhao Cheng and Jason Klein Liu and Jiaran Hao and Liuyihan Song and Yang Xu and J. Yang and J. H. Liu and Chenchen Zhang and Linzheng Chai and Ruifeng Yuan and Zhaoxiang Zhang and Jie Fu and Qian Liu and Ge Zhang and Zili Wang and Yuan Qi and Yinghui Xu and Wei Chu},

|

| 43 |

-

year={2024},

|

| 44 |

-

url={https://arxiv.org/pdf/2411.04905}

|

| 45 |

}

|

| 46 |

-

```

|

|

|

|

| 28 |

- split: train

|

| 29 |

path: data/train-*

|

| 30 |

---

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

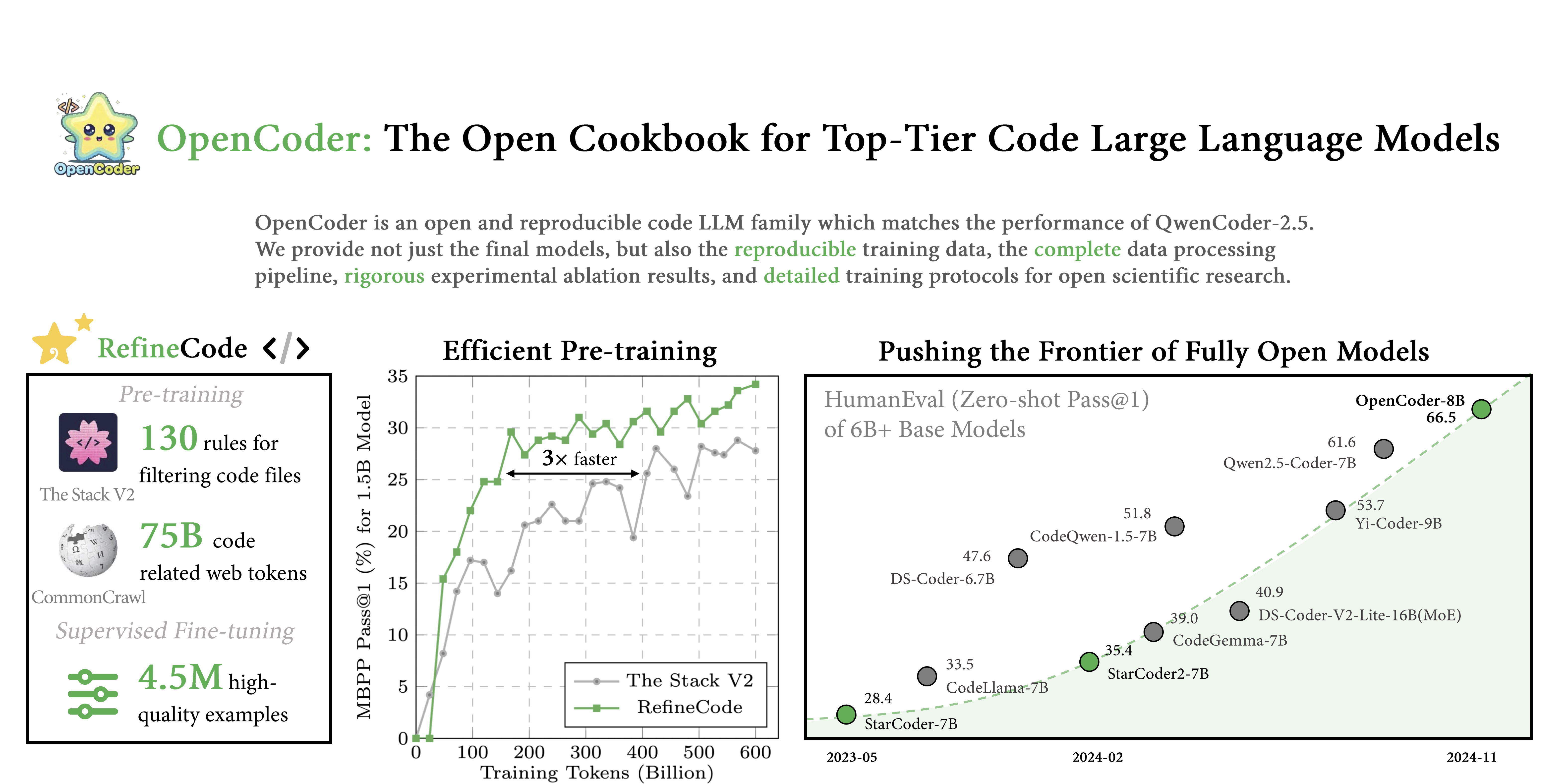

# OpenCoder Dataset

|

| 34 |

+

The OpenCoder dataset is composed of the following datasets:

|

| 35 |

+

|

| 36 |

+

* [opc-sft-stage1](https://huggingface.co/datasets/OpenCoder-LLM/opc-sft-stage1): the sft data used for opencoder sft-stage1

|

| 37 |

+

* [opc-sft-stage2](https://huggingface.co/datasets/OpenCoder-LLM/opc-sft-stage2): the sft data used for opencoder sft-stage2

|

| 38 |

+

* [opc-annealing-corpus](https://huggingface.co/datasets/OpenCoder-LLM/opc-annealing-corpus): the synthetic data & algorithmic corpus used for opencoder annealing

|

| 39 |

+

* [fineweb-code-corpus](https://huggingface.co/datasets/OpenCoder-LLM/fineweb-code-corpus): the code-related page recalled from fineweb **<-- you are here**

|

| 40 |

+

* [fineweb-math-corpus](https://huggingface.co/datasets/OpenCoder-LLM/fineweb-math-corpus): the math-related page recalled from fineweb

|

| 41 |

+

* [refineCode-code-corpus-meta](https://huggingface.co/datasets/OpenCoder-LLM/RefineCode-code-corpus-meta): the meta-data of RefineCode

|

| 42 |

+

|

| 43 |

+

Detailed information about the data can be found in our [paper](https://arxiv.org/abs/2411.04905).

|

| 44 |

+

|

| 45 |

+

|

| 46 |

+

## opc-fineweb-code-corpus

|

| 47 |

|

| 48 |

This code-related data from [Fineweb](https://huggingface.co/spaces/HuggingFaceFW/blogpost-fineweb-v1) was specifically used in [OpenCoder](https://huggingface.co/papers/2411.04905) pre-training.

|

| 49 |

We employ fastText in three iterative rounds to recall a final dataset of 55B code and math-related data.

|

|

|

|

| 51 |

|

| 52 |

*This work belongs to [INF](https://www.infly.cn/).*

|

| 53 |

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

## Citation Information

|

| 57 |

+

Please consider citing our [paper](https://arxiv.org/abs/2411.04905) if you find this dataset useful:

|

| 58 |

```

|

| 59 |

@inproceedings{Huang2024OpenCoderTO,

|

| 60 |

+

title = {OpenCoder: The Open Cookbook for Top-Tier Code Large Language Models},

|

| 61 |

+

author = {Siming Huang and Tianhao Cheng and Jason Klein Liu and Jiaran Hao and Liuyihan Song and Yang Xu and J. Yang and J. H. Liu and Chenchen Zhang and Linzheng Chai and Ruifeng Yuan and Zhaoxiang Zhang and Jie Fu and Qian Liu and Ge Zhang and Zili Wang and Yuan Qi and Yinghui Xu and Wei Chu},

|

| 62 |

+

year = {2024},

|

| 63 |

+

url = {https://arxiv.org/pdf/2411.04905}

|

| 64 |

}

|

| 65 |

+

```

|
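The README says only that fastText was run "in three iterative rounds to recall" the final data; it does not spell out the loop. As a rough, non-authoritative illustration, here is the general shape of iterative classifier-based recall. Each round trains on the current positives, scores a candidate pool, promotes pages above a threshold to positives, and retrains. To stay dependency-free, the sketch swaps fastText for a tiny naive Bayes scorer. The seed texts, negatives, pool, `threshold=0.0`, and the helper names (`tokenize_words`, `NaiveBayesScorer`, `iterative_recall`) are all hypothetical, not OpenCoder's actual configuration.

```python
from __future__ import annotations

import math
import re
from collections import Counter

# Illustrative stand-in for fastText: a multinomial naive Bayes scorer over
# lowercase word tokens. Seeds, negatives, threshold, and the scorer itself are
# assumptions for this sketch, not OpenCoder's actual pipeline.
WORD_RE = re.compile(r"[a-z_]+")


def tokenize_words(text: str) -> list[str]:
    """Lowercase the text and keep runs of letters/underscores as tokens."""
    return WORD_RE.findall(text.lower())


class NaiveBayesScorer:
    """Log-odds that a document is 'positive' (code-like), add-one smoothed."""

    def __init__(self, positives: list[str], negatives: list[str]) -> None:
        self.pos = Counter(t for doc in positives for t in tokenize_words(doc))
        self.neg = Counter(t for doc in negatives for t in tokenize_words(doc))
        self.pos_total = sum(self.pos.values())
        self.neg_total = sum(self.neg.values())
        # +1 reserves a smoothing slot for tokens unseen in training.
        self.vocab_size = len(set(self.pos) | set(self.neg)) + 1
        self.log_prior = math.log(len(positives) / len(negatives))

    def score(self, text: str) -> float:
        s = self.log_prior
        for tok in tokenize_words(text):
            s += math.log((self.pos[tok] + 1) / (self.pos_total + self.vocab_size))
            s -= math.log((self.neg[tok] + 1) / (self.neg_total + self.vocab_size))
        return s


def iterative_recall(seeds: list[str], negatives: list[str], pool: list[str],
                     rounds: int = 3, threshold: float = 0.0) -> list[int]:
    """Retrain on the growing positive set each round; return recalled pool indices."""
    positives = list(seeds)
    recalled: set[int] = set()
    for _ in range(rounds):
        scorer = NaiveBayesScorer(positives, negatives)
        new = [i for i, doc in enumerate(pool)
               if i not in recalled and scorer.score(doc) > threshold]
        if not new:  # converged: nothing new crosses the threshold
            break
        recalled.update(new)
        positives.extend(pool[i] for i in new)  # recalled pages become positives
    return sorted(recalled)


if __name__ == "__main__":
    seeds = ["def foo(): return bar", "import numpy as np", "class Foo: pass"]
    negatives = ["the weather today is sunny",
                 "recipe for chocolate cake with sugar",
                 "football match results and scores"]
    pool = ["def main(): import sys", "chocolate cake recipe",
            "class Bar: def run(self): pass", "sunny weather forecast"]
    print(iterative_recall(seeds, negatives, pool))  # → [0, 2]
```

Running it prints `[0, 2]`. Both code-like pages are recalled in the first round, the second round finds nothing new, and the loop stops early. Retraining on recalled pages lets later rounds reach pages the seeds alone would not score highly, which is the usual motivation for iterating. It also risks drift, so the threshold choice matters.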