anchor | positive | negative | anchor_status |

|---|---|---|---|

## Inspiration

In college I have odd 50-minute gaps between classes when it wouldn't make sense to walk all the way back to my dorm, but 50 minutes is still a long wait. I would love to meet up with friends who are also free during this time, but I don't want to text every single friend to ask if they are free.

## What it does

Users of Toggle can open up the website whenever they are free and click on the hour time slot they are in. Toggle then provides a list of suggestions for what to do. All the events entered for that time will show up on the right side of the screen.

Everyone is welcome to add events to Toggle, and each school could have its own version, so that we all make the most of our free time on campus by meeting new people and learning about communities we might not have run into otherwise.

## How I built it

## Challenges I ran into

## Accomplishments that I'm proud of

I learned and built in JavaScript in 36 hours!!

## What I learned

24 arrays was not the way to go - object arrays are a life-saver.
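The lesson above can be illustrated with a small sketch. It is written in Python rather than the project's JavaScript, and the field names (`hour`, `title`, `place`) and the sample events are assumptions made for illustration, not Toggle's actual data model. Instead of keeping one array per hour, every event is a single record, and the events for any hour come from one filter.

```python
# Hypothetical event records: one list of dicts instead of 24 per-hour lists.
events = [
    {"hour": 10, "title": "Pickup frisbee", "place": "Main quad"},
    {"hour": 10, "title": "Coffee chat", "place": "Library cafe"},
    {"hour": 14, "title": "Study group", "place": "Room 204"},
]


def events_at(hour, all_events):
    """Return every event scheduled for the given hour (0-23)."""
    return [event for event in all_events if event["hour"] == hour]


def add_event(all_events, hour, title, place):
    """Append a new event; no per-hour list has to be chosen."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be between 0 and 23")
    all_events.append({"hour": hour, "title": title, "place": place})


add_event(events, 14, "Board games", "Student lounge")
titles_at_two = [event["title"] for event in events_at(14, events)]
print(titles_at_two)
```

Adding a new field later (for example, a host name) only changes the record, not the number of arrays.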

## What's next for Toggle | # Inspiration

We came to Stanford expecting a vibrant college atmosphere. Yet walk past a volleyball or basketball court at Stanford mid-Winter quarter, and you’ll probably find it empty. As college students, our lives revolve around two pillars: productivity and play. In an ideal world, we spend intentional parts of our day fully productive–activities dedicated to our fulfillment–and some parts of our day fully immersed in play–activities dedicated solely to our joy. In reality, though, students might party, but how often do they play? Large chunks of their day are spent in their dorm room, caught between these two choices, doing essentially nothing. This doesn’t improve their mental health.

Imagine, or rather, remember, when you were last in that spot. Even if you were struck by inspiration to get out and do something fun, who with? You could text your friends, but you don’t know enough people to play 4-on-4 soccer, or if anyone’s interested in joining you for some baking between classes.

# A Solution

When encountering this problem, frolic can help. Users can:

* See existing events, sorted so that the events “containing” the most of their friends appear at the top

* Join an event, gaining access to the names of all members of the event (not just their friends)

* Save/bookmark an event for later (no notification is sent to others)

* Access full info on events they’ve joined or saved in the “My Events” tab

Additional, nice-to-have features include:

* A notification when their friend(s) have joined an event, in case they’d like to join as well
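The friend-based ordering of events described above could be sketched roughly as follows. This is a minimal Python illustration, not frolic's actual code; the `members` field, the event names, and the friend names are all hypothetical.

```python
def rank_events(events, friends):
    """Sort events so those with the most of the user's friends come first."""
    def friend_count(event):
        return len(friends & set(event["members"]))
    # sorted() is stable, so events with equal counts keep their original order.
    return sorted(events, key=friend_count, reverse=True)


# Hypothetical sample data.
events = [
    {"name": "Baking", "members": ["ana"]},
    {"name": "4-on-4 soccer", "members": ["ben", "cy", "dee"]},
    {"name": "Volleyball", "members": ["cy", "eli"]},
]
friends = {"ben", "cy", "dee"}
ranked = rank_events(events, friends)
print([event["name"] for event in ranked])
```

Counting the overlap with a set keeps each event's score independent of how long its member list is.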

# Challenges & An Important Lesson

Not only did none of us have iOS app development experience, but with less than 12 hours to go, we realized that with the original environment and language we were working in (Swift and Xcode), the learning curve to create the full app was far too steep. Thus, we essentially started anew. We realized the importance of reaching out early for guidance from more experienced people, whether in a hackathon, academic, or career setting.

/\* Deep down, we know how important times of play are–though, we often never seem to “have time” for it. In reality, this often is correlated with us being caught in a rift between the two poles we mentioned: not being totally productive, nor totally grasping the joy that we should ideally get from some everyday activities. \*/ | ## Inspiration

As University of Waterloo students who are constantly moving in and out of many locations, as well as constantly changing roommates, there are many times when we discovered friction or difficulty in communicating with each other to get stuff done around the house.

## What it does

Our platform allows roommates to quickly schedule and assign chores, and provides a message board for shared household matters.

## How we built it

Our solution is built on Ruby on Rails and is meant to be a quick, simple solution.

## Challenges we ran into

The time constraint made it hard to develop all the features we wanted, so we had to reduce scope on many sections and provide a limited feature-set.

## Accomplishments that we're proud of

We thought that we did a great job on the design, delivering a modern and clean look.

## What we learned

Prioritize features beforehand, and stick to features that would be useful to as many people as possible. So, instead of overloading features that may not be that useful, we should focus on delivering the core features and make them as easy as possible.

## What's next for LiveTogether

Finish the features we set out to accomplish, and finish theming the pages that we did not have time to concentrate on. We will be using LiveTogether with our roommates, and are hoping to get some real use out of it! | losing |

## Inspiration

A couple of weeks ago, a friend was hospitalized for taking Advil–she accidentally took 27 pills, which is nearly 5 times the maximum daily amount. When asked why, she said that's just what she had always done and how her parents had told her to take Advil. The maximum amount of Advil you are supposed to take is 6 pills per day; beyond that, it becomes a hazard to your stomach.

#### PillAR is your personal augmented reality pill/medicine tracker.

It can be difficult to remember when to take your medications, especially when each medicine comes with its own restrictions. For people who depend on their medication to live normally, knowing and remembering when it is okay to take it is a real challenge. Many drugs have very specific restrictions (e.g. no more than one pill every 8 hours, 3 max per day, take with food or water), which can be hard to keep track of. PillAR helps you track when you take your medicine and how much you take, keeping you safe from over- or under-dosing.
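A restriction such as "no more than one pill every 8 hours, 3 max per day" can be checked against a log of past doses. The sketch below is a hypothetical Python illustration, not PillAR's Swift code; the function name and default limits are assumptions taken from the example restrictions in the text, and nothing here is medical advice.

```python
from datetime import datetime, timedelta


def can_take_dose(history, now, min_gap_hours=8, max_per_day=3):
    """Check a dose against a minimum spacing and a rolling 24-hour cap.

    history is a list of datetimes of earlier doses. The default limits
    mirror the example restrictions in the text and are illustrative only.
    """
    last_day = [t for t in history if now - t < timedelta(hours=24)]
    if len(last_day) >= max_per_day:
        return False
    if history and now - max(history) < timedelta(hours=min_gap_hours):
        return False
    return True


morning = datetime(2024, 1, 1, 8, 0)
doses = [morning]
print(can_take_dose(doses, morning + timedelta(hours=3)))  # too soon after the last dose
print(can_take_dose(doses, morning + timedelta(hours=9)))  # spacing respected
```

A rolling 24-hour window is used here instead of a calendar day so that doses taken late at night still count against the next morning.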

We also saw a need for a medicine tracker due to the aging population and the number of people who need to take many different medications. According to health studies in the U.S., 23.1% of people take three or more medications in a 30-day period and 11.9% take five or more. That is over 75 million U.S. citizens who could use PillAR to keep track of their numerous medicines.

## How we built it

We created an iOS app in Swift using ARKit. We collect data on the pill bottles from the iPhone camera and pass it to the Google Vision API. From there we receive the name of the drug, which our app then forwards to a Python web scraping backend that we built. This web scraper collects usage and administration information for the medications we examine, since this information is not available in any accessible API or queryable database. We then use this information in the app to keep track of pill usage and power the core functionality of the app.

## Accomplishments that we're proud of

This is our first time creating an app using Apple's ARKit. We also did a lot of research to find a suitable website to scrape medication dosage information from and then had to process that information to make it easier to understand.

## What's next for PillAR

In the future, we hope to get more accurate medication information for each specific bottle (such as pill size). We would like to improve the bottle recognition capabilities, perhaps by writing our own classifiers or training on our own data set. We would also like to add features like notifications that remind you of good times to take pills to keep you even healthier. | **check out the project demo during the closing ceremony!**

<https://youtu.be/TnKxk-GelXg>

## Inspiration

On average, half of patients with chronic illnesses like heart disease or asthma don’t take their medication. Reports estimate that poor medication adherence could be costing the country $300 billion in increased medical costs.

So why is taking medication so tough? People get confused and people forget.

When the pharmacy hands over your medication, it usually comes with a stack of papers and stickers on the pill bottles, and on top of that the pharmacist tells you a bunch of mumbo jumbo that you won’t remember.

<http://www.nbcnews.com/id/20039597/ns/health-health_care/t/millions-skip-meds-dont-take-pills-correctly/#.XE3r2M9KjOQ>

## What it does

The solution:

How are we going to solve this? With a small scrap of paper.

NekoTap helps patients access important drug instructions quickly and when they need it.

On the pharmacist’s end, he only needs to go through 4 simple steps to relay the most important information to the patients.

1. Scan the product label to get the drug information.

2. Tap the cap to register the NFC tag. Now the product and pill bottle are connected.

3. Speak into the app to make an audio recording of the important dosage and usage instructions, as well as any other important notes.

4. Set a refill reminder for the patients. This will automatically alert the patient once they need refills, a service that most pharmacies don’t currently provide as it’s usually the patient’s responsibility.
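The refill reminder in step 4 comes down to simple date arithmetic. The following Python sketch shows one way it could be computed; the function name, the `lead_days` parameter, and the sample numbers are hypothetical and are not taken from NekoTap's Android code.

```python
from datetime import date, timedelta


def refill_reminder_date(fill_date, pill_count, pills_per_day, lead_days=3):
    """Estimate when to remind a patient to refill.

    The supply lasts pill_count // pills_per_day full days; the reminder
    fires lead_days before it runs out, but never before the fill date.
    """
    if pills_per_day <= 0:
        raise ValueError("pills_per_day must be positive")
    days_of_supply = pill_count // pills_per_day
    remind_after = max(days_of_supply - lead_days, 0)
    return fill_date + timedelta(days=remind_after)


reminder = refill_reminder_date(date(2024, 3, 1), pill_count=60, pills_per_day=2)
print(reminder.isoformat())
```

The lead time gives the patient a few days to reach the pharmacy before the bottle is empty.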

On the patient’s end, after they open the app, they will come across 3 simple screens.

1. First, they can listen to the audio recording containing important information from the pharmacist.

2. If they swipe, they can see a copy of the text transcription. Notice how there are easy to access zoom buttons to enlarge the text size.

3. Next, there’s a YouTube instructional video on how to use the drug in case the patient needs visuals.

Lastly, the menu options here allow the patient to call the pharmacy if he has any questions, and also set a reminder for himself to take medication.

## How I built it

* Android

* Microsoft Azure mobile services

* Lottie

## Challenges I ran into

* Getting the backend to communicate with the clinician and the patient mobile apps.

## Accomplishments that I'm proud of

Translations to make it accessible for everyone! Developing a great UI/UX.

## What I learned

* UI/UX design

* Android development | ## Inspiration

While I was talking to Mitt from the CVS booth, he opened my eyes to a problem that I was previously unaware of: counterfeits in the pharmaceutical industry. After a good amount of research, I learned that it was possible to build a solution during the hackathon. A friendly interface with a blockchain backend could track drugs immutably, and being able to track an item from the factory to the consumer means safer prescription drugs for everyone.

## What it does

Using our app, users can scan the item, and use the provided passcode to make sure that item they have is legit. Using just the QR scanner on our app, it is very easy to verify the goods you bought, as well as the location the drugs were manufactured.

## How we built it

We started off wanting to ensure immutability for our users; after all, our whole platform is made for users to trust the items they scan. What came to our minds was using blockchain technology, which would allow us to ensure each and every item would remain immutable and publicly verifiable by any party. This way, users would know that the data we present is always true and legitimate. After building the blockchain technology with Node.js, we started working on the actual mobile platform. To create both iOS and Android versions simultaneously, we used AngularJS to create a shared codebase so we could easily adapt the app for both platforms. Although we didn't have any UI/UX experience, we tried to make the app as simple and user-friendly as possible. We incorporated Google Maps API to track and plot the location of where items are scanned to add that to our metadata and added native packages like QR code scanning and generation to make things easier for users to use. Although we weren't able to publish to the app stores, we tested our app using emulators to ensure all functionality worked as intended.
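The immutability property described above rests on each record committing to the hash of the record before it. The sketch below shows that idea as a bare hash chain in Python; it is an illustration under stated assumptions, not NativeChain's Node.js implementation, and it omits everything else a real blockchain needs (consensus, networking, signatures). The block fields and sample data are hypothetical.

```python
import hashlib
import json


def block_hash(index, data, previous_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "previous_hash": previous_hash},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def add_block(chain, data):
    """Append a block that commits to the hash of the block before it."""
    previous_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "previous_hash": previous_hash,
        "hash": block_hash(index, data, previous_hash),
    })


def is_valid(chain):
    """Recompute every hash; any edited block breaks the chain."""
    expected_previous = "0" * 64
    for block in chain:
        if block["previous_hash"] != expected_previous:
            return False
        recomputed = block_hash(block["index"], block["data"], block["previous_hash"])
        if block["hash"] != recomputed:
            return False
        expected_previous = block["hash"]
    return True


ledger = []
add_block(ledger, {"item": "lot-001", "event": "manufactured"})
add_block(ledger, {"item": "lot-001", "event": "scanned by pharmacy"})
print(is_valid(ledger))
ledger[0]["data"]["event"] = "tampered"  # editing history invalidates the chain
print(is_valid(ledger))
```

Because every hash depends on the previous one, changing an old scan record would require recomputing every later block, which is what makes tampering detectable.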

## Challenges we ran into

Our first challenge was learning how to build a blockchain ecosystem within a mobile app. Since the technology was somewhat foreign to us, we had to learn the ins and outs of what "makes" a blockchain and how to ensure its immutability. After all, trust and security are our number one priorities, and without them our app would be meaningless. In the end, we found a way to create this ecosystem and ran numerous unit tests to ensure it was up to industry standards. Another challenge we faced was getting the app to work in both iOS and Android environments. Since each platform has its own set of "rules and standards", we had to make sure that our functions worked in both and that no errors arose from platform differences.

## What's next for NativeChain

We hope to expand our target audience to secondhand commodities and the food industry. In today's society, markets such as eBay and Alibaba are flooded with counterfeit luxury goods such as clothing and apparel. When customers buy these goods from secondhand retailers on eBay, there's currently no way they can know for certain whether an item is as legitimate as the seller claims; they rely solely on the seller's word. However, we hope to disrupt this and allow customers to immediately view where the item was manufactured and whether it truly is from Gucci, rather than a counterfeit market in China. Another industry we hope to expand to is food. People care about where the food they eat comes from, and whether it's kosher, organic, or non-GMO. Although the FDA regulates this to a certain extent, this data isn't easily accessible to customers. We want to provide a transparent and easy way for users to learn about the food they are eating by showing them data like where the honey was produced, where the cows were raised, and when their fruits were picked. Outbreaks such as the Chipotle E. coli incident could be pinpointed, since users could see where the incident started, and customers could be warned not to eat food coming from that area. | winning |

This project was developed with the RBC challenge in mind of developing the Help Desk of the future.

## What inspired us

We were inspired by our motivation to improve the world of work.

## Background

If we want technical support, we usually contact companies by phone, which is slow and painful for both users and technical support agents, especially when the questions are simple. Our solution is an online chat that responds to people immediately using our own bank of answers. This is a portable and scalable solution.

## Try it!

<http://www.rbcH.tech>

## What we learned

Using NLP, dealing with devilish CORS, implementing Docker successfully, struggling with Kubernetes.

## How we built it

* Node.js for our servers (one server for our webapp, one for BotFront)

* React for our front-end

* Rasa-based Botfront, which is the REST API we are calling for each user interaction

* We wrote our own Botfront database during the last day and night

## Our philosophy for delivery

Hack, hack, hack until it works. Léonard was our webapp and backend expert, François built the DevOps side of things and Han solidified the front end and Antoine wrote + tested the data for NLP.

## Challenges we faced

Learning brand new technologies is sometimes difficult! Kubernetes (and CORS) brought us some painful pain... and new skills and confidence.

## Our code

<https://github.com/ntnco/mchacks/>

## Our training data

<https://github.com/lool01/mchack-training-data> | # The Ultimate Water Heater

February 2018

## Authors

This is the TreeHacks 2018 project created by Amarinder Chahal and Matthew Chan.

## About

Drawing inspiration from a diverse set of real-world information, we designed a system with the goal of efficiently utilizing only electricity to heat and pre-heat water as a means to drastically save energy, eliminate the use of natural gases, enhance the standard of living, and preserve water as a vital natural resource.

Through the use of numerous APIs and the help of countless wonderful people, we successfully created a functional prototype of a more optimal water heater: a low-cost, easy-to-install device that works in many different situations. We also empower users to control their device and reap benefits from their otherwise annoying electricity bill. But most importantly, our water heater will prove essential to saving many regions of the world from unpredictable water and energy crises, pushing humanity to an inevitably greener future.

Some key features we have:

* 90% energy efficiency

* An average rate of roughly 10 kW/hr of energy consumption

* Analysis of real-time and predictive ISO data of California power grids for optimal energy expenditure

* Clean and easily understood UI for typical household users

* Incorporation of the Internet of Things for convenience of use and versatility of application

* Saving, on average, 5 gallons per shower, or over **100 million gallons of water daily**, in CA alone. \*\*\*

* Cheap cost of installation and immediate returns on investment

## Inspiration

By observing the RhoAI data dump of 2015 Californian home appliance uses through the use of R scripts, it becomes clear that water-heating is not only inefficient but also performed in an outdated manner. Analyzing several prominent trends drew important conclusions: many water heaters become large consumers of gasses and yet are frequently neglected, most likely due to the trouble in attaining successful installations and repairs.

So we set our eyes on a safe, cheap, and easily accessed water heater with the goal of efficiency and environmental friendliness. In examining the inductive heating process replacing old stovetops with modern ones, we found the answer. It accounted for every flaw the data decried regarding water-heaters, and would eventually prove to be even better.

## How It Works

Our project essentially operates in several core parts running simultaneously:

* Arduino (101)

* Heating Mechanism

* Mobile Device Bluetooth User Interface

* Servers connecting to the IoT (and servicing via Alexa)

Repeat all processes simultaneously

The Arduino 101 is the controller of the system. It relays information to and from the heating system and the mobile device over Bluetooth. It responds to fluctuations in the system. It guides the power to the heating system. It receives inputs via the Internet of Things and Alexa to handle voice commands (through the "shower" application). It acts as the peripheral in the Bluetooth connection with the mobile device. Note that neither the Bluetooth connection nor the online servers and webhooks are necessary for the heating system to operate at full capacity.

The heating mechanism consists of a device capable of heating an internal metal through electromagnetic waves. It is controlled by the current (which, in turn, is manipulated by the Arduino) directed through the breadboard and a series of resistors and capacitors. Designing the heating device involved heavy use of applied mathematics and a deeper understanding of the physics behind inductor interference and eddy currents. The calculations were quite messy but mandatorily accurate for performance reasons--Wolfram Mathematica provided inhumane assistance here. ;)

The mobile device grants the average consumer a means of making the most out of our water heater and allows the user to make informed decisions at an abstract level, taking away from the complexity of energy analysis and power grid supply and demand. It acts as the central connection for Bluetooth to the Arduino 101. The device harbors a vast range of information condensed in an effective and aesthetically pleasing UI. It also analyzes the current and future projections of energy consumption via the data provided by California ISO to most optimally time the heating process at the swipe of a finger.
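Timing the heating process against a grid forecast can be framed as finding the cheapest contiguous window in an hourly series. The Python sketch below illustrates that framing only; the forecast values are made up, the function name is hypothetical, and the real app's use of California ISO data is not reproduced here.

```python
def best_start_hour(hourly_cost, duration_hours):
    """Return the start index of the cheapest contiguous heating window."""
    if not 1 <= duration_hours <= len(hourly_cost):
        raise ValueError("duration must fit inside the forecast")
    window = sum(hourly_cost[:duration_hours])
    best_cost, best_start = window, 0
    for start in range(1, len(hourly_cost) - duration_hours + 1):
        # Slide the window: add the hour that entered, drop the hour that left.
        window += hourly_cost[start + duration_hours - 1] - hourly_cost[start - 1]
        if window < best_cost:
            best_cost, best_start = window, start
    return best_start


# Hypothetical demand or price forecast for the next eight hours.
forecast = [30, 28, 25, 22, 24, 35, 50, 60]
print(best_start_hour(forecast, 2))
```

The sliding window keeps the search linear in the length of the forecast, which matters little for 24 hourly values but keeps the logic simple enough to run on a phone.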

The Internet of Things provides even more versatility to the convenience of the application in Smart Homes and with other smart devices. The implementation of Alexa encourages the water heater as a front-leader in an evolutionary revolution for the modern age.

## Built With:

(In no particular order of importance...)

* RhoAI

* R

* Balsamiq

* C++ (Arduino 101)

* Node.js

* Tears

* HTML

* Alexa API

* Swift, Xcode

* BLE

* Buckets and Water

* Java

* RXTX (Serial Communication Library)

* Mathematica

* MATLAB (assistance)

* Red Bull, Soylent

* Tetrix (for support)

* Home Depot

* Electronics Express

* Breadboard, resistors, capacitors, jumper cables

* Arduino Digital Temperature Sensor (DS18B20)

* Electric Tape, Duct Tape

* Funnel, for testing

* Excel

* JavaScript

* jQuery

* Intense Sleep Deprivation

* The wonderful support of the people around us, and TreeHacks as a whole. Thank you all!

\*\*\* According to the Washington Post: <https://www.washingtonpost.com/news/energy-environment/wp/2015/03/04/your-shower-is-wasting-huge-amounts-of-energy-and-water-heres-what-to-do-about-it/?utm_term=.03b3f2a8b8a2>

Special thanks to our awesome friends Michelle and Darren for providing moral support in person! | ## Inspiration

When we were deciding what to build for our hack this time, we had plenty of great ideas. We zeroed in on something that people like us would want to use. The hardest problem faced by people like us is managing assignments, classes and the infamous LeetCode grind. It would have been most useful to design an app that finished our homework for us without plagiarising things off the internet, but since we could not come up with that solution (believe me, we tried), we did the next best thing. We tried our hand at making the LeetCode grind easier by using machine learning and data analytics. We are pretty sure every engineer has to go through this rite of passage. Since there is no way to circumvent this grind, our goal is to make it less painful and more focused.

## What it does

The goal of the project was clear from the outset: minimize the effort and maximize the learning, thereby making the grind less tedious. We achieved this by using data analytics and machine learning to find the gaps in the user's knowledge base and recommend questions that aim to fill them. We also help users understand their data better by letting them make simple queries through our chatbot, which uses NLP to understand and answer the queries. The overall business logic is hosted in the cloud on Google App Engine.

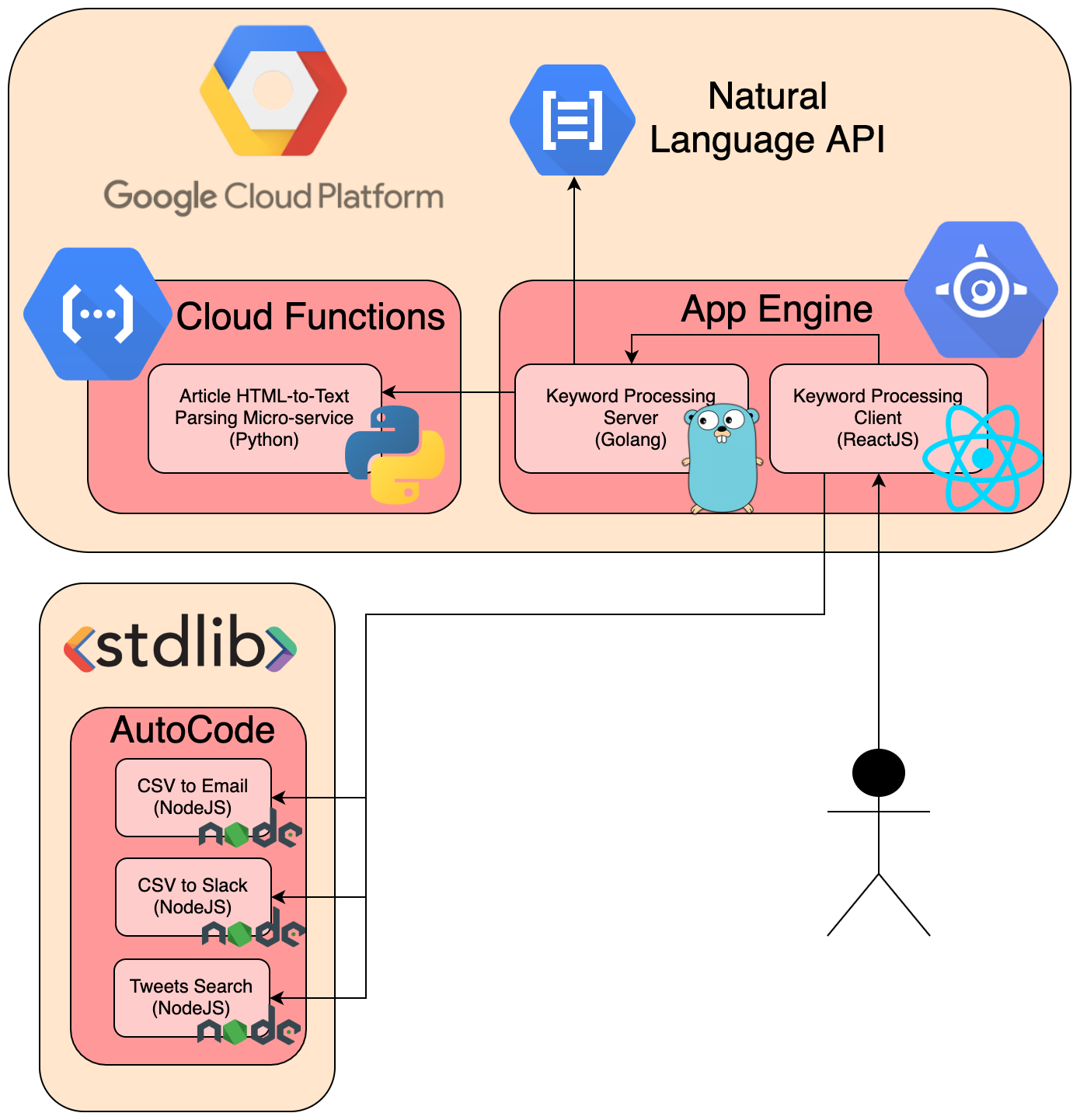

## How we built it

The project achieves its goals using 5 major components:

1. The web scraper to scrape the user data from websites like LeetCode.

2. Data analytics and machine learning to find areas of weakness and to process the question bank to find the next best question in an attempt to maximize learning.

3. Google App Engine to host the APIs, written in Java, which connect our front end with the business logic in the backend.

4. Google Dialogflow for the chatbot, where users can make simple queries to understand their statistics better.

5. Android app client where the user interacts with all these components, utilizing the synergy generated by the combination of the aforementioned components.
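The "find areas of weakness, then pick the next best question" step could look roughly like the sketch below. It is a deliberately simple Python stand-in (a per-topic solve rate instead of a trained model), and the field names, question ids, and topics are all hypothetical rather than taken from CodeLearnDo.

```python
from collections import defaultdict


def weakest_topic(attempts):
    """Return the topic with the lowest share of solved attempts."""
    solved = defaultdict(int)
    total = defaultdict(int)
    for attempt in attempts:
        total[attempt["topic"]] += 1
        solved[attempt["topic"]] += 1 if attempt["solved"] else 0
    return min(total, key=lambda topic: solved[topic] / total[topic])


def recommend(attempts, question_bank, limit=2):
    """Suggest unattempted questions from the user's weakest topic."""
    topic = weakest_topic(attempts)
    seen = {attempt["question"] for attempt in attempts}
    picks = [q["id"] for q in question_bank
             if q["topic"] == topic and q["id"] not in seen]
    return picks[:limit]


# Hypothetical scraped history and question bank.
history = [
    {"question": "two-sum", "topic": "arrays", "solved": True},
    {"question": "3sum", "topic": "arrays", "solved": True},
    {"question": "course-schedule", "topic": "graphs", "solved": False},
    {"question": "clone-graph", "topic": "graphs", "solved": True},
]
bank = [
    {"id": "clone-graph", "topic": "graphs"},
    {"id": "word-ladder", "topic": "graphs"},
    {"id": "rotate-array", "topic": "arrays"},
]
print(recommend(history, bank))
```

A real recommender would also weigh difficulty and recency, but the same two stages (score topics, then filter the bank) would still apply.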

## Challenges we ran into

There were a number of challenges that we ran into:

1. Procuring the data: We had to build our own web scraper to extract the question bank and the data from the interview prep websites. The security measures employed by the websites didn't make our job any easier.

2. Learning new technology: We wanted to incorporate a chatbot into our app. This was completely new to a few of us, and learning it in a short amount of time well enough to write production-quality code was an uphill battle.

3. Building the multiple components required to make our ambitious project work.

4. Lack of UI/UX expertise. It is a known fact that not many developers are good designers. We are proud of the UI that we were able to build, but we feel we could have done better with mockups etc.

## Accomplishments that we are proud of

1. Completing the project in the stipulated time. Finishing the app for the demo seemed like an insurmountable task on Saturday night after little to no sleep the previous night.

2. Production quality code: We tried to keep our code as clean as possible by using best programming practices whenever we could so that the code is easier to manage, debug, and understand.

## What we learned

1. Building APIs in Spring Boot

2. Using MongoDB with Spring Boot

3. Configuring MongoDB in Google Cloud Compute

4. Deploying Spring Boot APIs in Google App Engine & basics of GAE

5. Chatbots & building chatbots in DialogFlow

6. Building APIs in NodeJS & linking them with DialogFlow via Fulfillment

7. Scraping data using Selenium & the common challenges while scraping large volumes of data

8. Parsing scraped data & efficiently caching it

## What's next for CodeLearnDo

1. Incorporating leaderboards and a sense of community in the app to encourage learning. | winning |

## Inspiration

Greenhouses require increased disease control and need to closely monitor their plants to ensure they're healthy. In particular, the project aims to capitalize on the recent cannabis interest.

## What it Does

It's a sensor system composed of cameras, temperature and humidity sensors layered with smart analytics that allows the user to tell when plants in his/her greenhouse are diseased.

## How We built it

We used the Telus IoT Dev Kit to build the sensor platform along with Twilio to send emergency texts (pending installation of the IoT Edge runtime as of 8 am today).

Then we used Azure to do transfer learning on VGGNet to identify diseased plants and report them to the user. The model is deployed to be used with IoT Edge. Moreover, there is a web app that can be used to show that the

## Challenges We Ran Into

Datasets for greenhouse plants are in fairly short supply, so we had to use an existing network to help with saliency detection. Moreover, the low-light conditions in the dataset were in direct contrast (pun intended) to the PlantVillage dataset used to train for diseased plants. As a result, we had to implement a few image preprocessing methods, including something that's been used for plant health detection in the past: Eulerian magnification.

## Accomplishments that We're Proud of

Training a PyTorch model at a hackathon and sending sensor data from the STM Nucleo board to Azure IoT Hub and Twilio SMS.

## What We Learned

When your model doesn't do what you want it to, hyperparameter tuning shouldn't always be the go-to option. There might be (in this case, there was) some intrinsic aspect of the model that needs to be looked over.

## What's next for Intelligent Agriculture Analytics with IoT Edge | ## Inspiration

We wanted to create a proof-of-concept for a potentially useful device that could be used commercially and at a large scale. We ultimately decided to focus on the agricultural industry, as we feel that there's a lot of innovation possible in this space.

## What it does

The PowerPlant uses sensors to detect whether a plant is receiving enough water. If it's not, then it sends a signal to water the plant. While our proof of concept doesn't actually receive the signal to pour water (we quite like having working laptops), it would be extremely easy to enable this feature.

All data detected by the sensor is sent to a webserver, where users can view the current and historical data from the sensors. The user is also told whether the plant is currently being automatically watered.
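The watering decision described above is essentially a threshold check on soil-moisture readings. The Python sketch below shows one possible form of that check; the real logic runs on the Arduino, and the 0-1023 reading scale, the threshold, and the averaging window here are assumptions for illustration.

```python
def needs_water(moisture_readings, threshold=300, window=3):
    """Decide whether to water based on the average of recent readings.

    Averaging the last few samples keeps one noisy reading from
    triggering the pump. The 0-1023 scale and threshold are assumptions.
    """
    recent = moisture_readings[-window:]
    if not recent:
        return False
    return sum(recent) / len(recent) < threshold


print(needs_water([500, 280, 290, 270]))  # recent readings are dry
print(needs_water([250, 400, 420, 410]))  # soil has recovered
```

The same boolean could drive both the watering signal and the "currently being watered" indicator shown to the user.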

## How I built it

The hardware is built on an Arduino 101, with dampness detectors being used to detect the state of the soil. We run custom scripts on the Arduino to display basic info on an LCD screen. Data is sent to the web server via a program called Gobetwino, and our JavaScript frontend reads this data and displays it to the user.

## Challenges I ran into

After choosing our hardware, we discovered that MLH didn't have an adapter to connect it to a network. This meant we had to work around this issue by writing text files directly to the server using Gobetwino. This was an imperfect solution that caused some other problems, but it worked well enough to make a demoable product.

We also had quite a lot of problems with Chart.js. There are some undocumented quirks that we had to deal with - for example, data isn't plotted on the chart unless a label for it is set.

## Accomplishments that I'm proud of

For most of us, this was the first time we'd ever created a hardware hack (and competed in a hackathon in general), so managing to create something demoable is amazing. One of our team members even managed to learn the basics of web development from scratch.

## What I learned

As a team we learned a lot this weekend - everything from how to make hardware communicate with software to the basics of developing with Arduino and how to use the Chart.js library. Two of our team members' first language isn't English, so managing to achieve this is incredible.

## What's next for PowerPlant

We think that the technology used in this prototype could have great real world applications. It's almost certainly possible to build a more stable self-contained unit that could be used commercially. | # Easy-garden

Machine Learning model to take care of your plants easily.

## Inspiration

Most people like having plants in their home and office, because they are beautiful and can connect us with nature just a little bit. But most of the time we don't really take care of them, and they can get sick. With this in mind, a system that can monitor the health of plants and tell you if one of them has a disease could be helpful. The system needs to capture images in real time and then classify them as diseased or healthy; in case of a disease, it can notify you or even suggest a treatment for the plant.

## What it does

It is a machine learning model that takes an input image and classifies it as healthy or diseased, displaying the result on the screen.

## How I built it

I used datasets of healthy and diseased plants from PlantVillage and developed a machine learning model in TensorFlow using the Keras API, which outputs either healthy or diseased.

The dataset consisted of 1943 images in the diseased category and 1434 images in the healthy category. The images differ in size and dimensions. Most of the images are JPEGs, but the set also contains some PNG and GIF images.

To feed the machine learning model, each pixel of the RGB color images had to be converted to a value between 0 and 1, and all the images had to be resized to 170 x 170.

I used TensorFlow to feed the data to the neural network, and created 3 datasets with different shares of the data: training 75%, validation 15% and testing 10%.
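The pixel scaling and the 75/15/10 split described above can be sketched without any ML library. The Python below is a minimal illustration using only the standard library; the function names and the fixed seed are hypothetical, integer indices stand in for image files, and it does not reproduce the project's actual TensorFlow input pipeline.

```python
import random


def normalize_pixel(value):
    """Scale an 8-bit channel value (0-255) into the range 0.0-1.0."""
    return value / 255.0


def split_dataset(items, train=0.75, valid=0.15, seed=0):
    """Shuffle items and split them into train, validation, and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    train_end = int(len(shuffled) * train)
    valid_end = train_end + int(len(shuffled) * valid)
    return shuffled[:train_end], shuffled[train_end:valid_end], shuffled[valid_end:]


total_images = 1943 + 1434  # diseased + healthy, as reported above
train_set, valid_set, test_set = split_dataset(range(total_images))
print(len(train_set), len(valid_set), len(test_set))
```

Shuffling before splitting matters here because the files are grouped by class; without it, the test set could end up containing only one category.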

## Challenges I ran into

I tested a few different convolutional neural network architectures. The results varied widely, and adapting to each new architecture was a little difficult at first. I ended up with VGG16, pre-trained on ImageNet; with a small change to the architecture, it was possible to retrain the model briefly before the hackathon ended.

App building continues to be a challenge, but I have learned a lot about it. I had no experience with it, and trying to combine it with AR was very difficult.

## What I learned

I learned about many neural network models that I hadn't used before, as well as some very useful APIs for developing the idea, some shortcuts for displaying data, and a lot about plant diseases. I also learned some basics of the Azure and Kinvey platforms. | winning |

## Inspiration

* New ways of interacting with games (while VR is getting popular, there is not anything that you can play without a UI right now)

* Fully text-based game from the 80's

* Mental health application of choose your own adventure games

## What it does

* Natural language processing using Alexa

* Dynamic game-play based on choices that user makes

* Integrates data into

## How I built it

* Amazon Echo (Alexa)

* Node.js

* D3.js

## Challenges I ran into

* Visualizing the data from the game in a meaningful and interesting way as well as integrating that into the mental health theme

* Story-boarding (i.e. coming up with a short, sweet, and interesting plot that would get the message of our project across)

## Accomplishments that I'm proud of

* Being able to finish a demo-able project that we can further improve in the future; all within 36 hours

* Using new technologies like NLP and Alexa

* Working with a group of awesome developers and designers from all across the U.S. and the world

## What I learned

* I learned how to pick and choose the most appropriate APIs and libraries to accomplish the project at hand

* How to integrate the APIs into our project in a meaningful way to make UX interesting and innovative

* More experience with different JavaScript frameworks

## What's next for Sphinx

* Machine learning or AI integration in order to make a more versatile playing experience | ### Friday 7PM: Setting Things into Motion 🚶

>

> *Blast to the past - for everyone!*

>

>

>

ECHO enriches the lives of those with memory-related issues through reminiscence therapy. By recalling beloved memories from their past, those with dementia, Alzheimer’s and other cognitive conditions can restore their sense of continuity, rebuild neural pathways, and find fulfillment in the comfort of nostalgia. ECHO enables an AI-driven analytical approach to find insights into a patient’s emotions and recall, so that caregivers and family are better-equipped to provide.

### Friday 11PM: Making Strides 🏃♂️

>

> *The first step, our initial thoughts*

>

>

>

When it came to wrangling the frontend, we kept our users in mind and knew our highest priority was creating an application that was intuitive and easy to understand. We designed ECHO so that it could be seamlessly integrated into everyday life.

### Saturday 9AM: Tripping 🤺

>

> *Whoops! Challenges and pitfalls*

>

>

>

As with any journey, we faced our fair share of obstacles and roadblocks on the way. While there were no issues finding the right APIs and tools to accomplish what we wanted, we had to scour different forums and tutorials to figure out how we could integrate those features. We built ECHO with Next.js and deployed on Vercel (and in the process, spent quite a few credits spamming a button while the app was frozen..!).

Backend was fairly painless, but frontend was a different story. Our vision came to life on Figma and was implemented with HTML/CSS on the ol’ reliable, VSC. We were perhaps a little too ambitious with the mockup and so removed a couple of the bells and whistles.

### Saturday 4PM: Finding Our Way 💪

>

> *One foot in front of the other - learning new things*

>

>

>

From here on out, we were in entirely uncharted territory and had to read up on documentation. Our AI, the Speech Prosody model from Hume, allowed us to take video input from a user and analyze a user’s tone and face in real-time. We learned how to use websockets for streaming APIs for those quick insights, as opposed to a REST API which (while more familiar to us) would have been more of a handful due to our real-time analysis goals.

### Saturday 10PM: What Brand Running Shoes 👟

>

> *Our tech stack*

>

>

>

Nikes.

Apart from the tools mentioned above, we have to give kudos to the platforms that we used for the safe-keeping of assets. To handle videos, we linked things up to Cloudinary so that users can play back old memories and reminisce, and used Postgres for data storage.

### Sunday 7AM: The Final Stretch 🏁

>

> *The power of friendship*

>

>

>

As a team composed of two UWaterloo CFM majors and a WesternU Engineering major, we had a lot of great ideas between us. When we put our heads together, we combined powers and developed ECHO.

Plus, Ethan very graciously allowed us to marathon this project at his house! Thank you for the dumplings.

### Sunday Onward: After Sunrise 🌅

>

> *Next horizons*

>

>

>

With this journey concluded, ECHO’s next great adventure will come in the form of adding cognitive therapy activities to stimulate the memory in a different way, as well as AI transcript composition (along with word choice analysis) for our recorded videos. | ## Inspiration

People struggle to work effectively in a home environment, so we were looking for ways to make it more engaging. Our team came up with the idea for InspireAR because we wanted to design a web app that could motivate remote workers to be more organized in a fun and interesting way. Augmented reality seemed very fascinating to us, so we built InspireAR around it.

## What it does

InspireAR consists of the website, as well as a companion app. The website allows users to set daily goals at the start of the day. Upon completing all of their goals, the user is rewarded with a 3-D object that they can view immediately using their smartphone camera. The user can additionally combine their earned models within the companion app. The app allows the user to manipulate the objects they have earned within their home using AR technology. This means that as the user completes goals, they can build their dream office within their home using our app and AR functionality.

## How we built it

Our website is implemented using the Django web framework. The companion app is implemented using Unity and Xcode. The AR models come from echoAR. Languages used throughout the whole project consist of Python, HTML, CSS, C#, Swift and JavaScript.

## Challenges we ran into

Our team faced multiple challenges, as it was our first time ever building a website. Our team also lacked experience in the creation of back-end relational databases and in Unity. In particular, we struggled with orienting the AR models within our app. Additionally, we spent a lot of time brainstorming different possibilities for user authentication.

## Accomplishments that we're proud of

We are proud of our finished product, and the website is its strongest component. We were able to create an aesthetically pleasing, bug-free interface in a short period of time and without prior experience. We are also satisfied with our ability to integrate echoAR models into our project.

## What we learned

As a team, we learned a lot during this project. Not only did we learn the basics of Django, Unity, and databases, we also learned how to divide tasks efficiently and work together.

## What's next for InspireAR

The first step would be increasing the number and variety of models to give the user more freedom with the type of space they construct. We have also thought about expanding into the VR world using products such as Google Cardboard, and other accessories. This would give the user more freedom to explore more interesting locations other than just their living room. | losing |

## Inspiration:

We were inspired by the inconvenience faced by novice artists creating large murals, who struggle to use reference images to guide their work. It can also help young artists who need a confidence boost and are looking for a simple way to replicate references.

## What it does

An **AR** and **CV** based artist's aid that enables easy image tracing and color blocking guides (almost like "paint-by-numbers"!)

It achieves this by allowing the user to upload an image of their choosing, which is then processed into its traceable outlines and dominant colors. These images are then displayed in the real world on a surface of the artist's choosing, such as paper or a wall.

## How we built it

The base for the image processing functionality (edge detection and color blocking) was **Python, OpenCV, numpy** and the **K-means** grouping algorithm. The image processing module was hosted on **Firebase**.

The end-user experience was driven using **Unity**. The user uploads an image to the app. The image is ported to Firebase, which then returns the generated images. We used the Unity engine along with **ARCore** to implement surface detection and virtually position the images in the real world. The UI was also designed through packages from Unity.
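To illustrate the color-blocking idea, here is a minimal standard-library sketch of K-means grouping applied to RGB pixels. It is not the team's implementation (which used OpenCV and numpy); the function name `dominant_colors`, the cluster count, and the sample pixels are all assumptions made for the example.

```python
import random

def dominant_colors(pixels, k=3, iterations=10, seed=0):
    """Group RGB pixels into k clusters and return the cluster centres."""
    rng = random.Random(seed)
    centers = rng.sample(pixels, k)  # start from k randomly chosen pixels
    for _ in range(iterations):
        clusters = [[] for _ in range(k)]
        for p in pixels:
            # Assign each pixel to the nearest centre (squared Euclidean distance).
            nearest = min(
                range(k),
                key=lambda i: sum((a - b) ** 2 for a, b in zip(p, centers[i])),
            )
            clusters[nearest].append(p)
        for i, members in enumerate(clusters):
            if members:  # keep the old centre if a cluster ends up empty
                centers[i] = tuple(sum(ch) / len(members) for ch in zip(*members))
    return centers

# Hypothetical image: a handful of reddish and bluish pixels.
sample = [(250, 10, 10), (240, 20, 15), (255, 0, 5), (10, 10, 250), (5, 20, 240)]
print(dominant_colors(sample, k=2))
```

Each returned centre is an average color for one group of pixels, which is the kind of flat color a "paint-by-numbers" guide would fill a region with.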

## Challenges we ran into

Our biggest challenge was the experience level of our team with the tech stack we chose to use. Since we were all new to Unity, we faced several bugs along the way and had to slowly learn our way through the project.

## Accomplishments that we're proud of

We are very excited to have demonstrated the accumulation of our image processing knowledge and to make contributions to Git.

## What we learned

We learned that our aptitude lies lower level, in robust languages like C++, as opposed to using pre-built systems to assist development, such as Unity. In the future, we may find easier success building projects to refine our current tech stacks as opposed to expanding them.

## What's next for [AR]t

After Hack the North, we intend to continue the project using C++ as the base for AR, which is more familiar to our team and robust. | ## 💡 Our Mission

Create an intuitive but tough game that gets its players to challenge their speed & accuracy. We wanted to incorporate an active element into the game so that it can be played guilt free!

## 🧠 What it does

It shows a sequence of scenes before the game begins, including the menu and instructions. Once a player makes it past the initial screens, the game begins and a wall with a cutout starts moving towards the player. The player can see both the wall and themselves positioned in the environment; as the wall gets closer, the player must mimic the shape of the cutout to make it past the wall. The more walls you pass, the faster and tougher the walls get. The highest score with 3 lives wins!

## 🛠️ How we built it

We built the model to detect the person through their webcam using MoveNet and built a custom model using angle heuristics to estimate the similarity between the user's pose and the expected pose. We built the game using React for the front end, designed the scenes and assets, and built the backend using Python and Flask.
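One way an angle heuristic for pose matching might look is sketched below. It assumes each joint is described by three 2D keypoints (for example shoulder, elbow, wrist) and that a pose counts as matching when enough joint angles fall within a tolerance; the function names and the 30-degree tolerance are illustrative assumptions, not details from the project.

```python
import math

def joint_angle(a, b, c):
    """Angle in degrees at point b, formed by the segments b->a and b->c."""
    angle = math.degrees(
        math.atan2(c[1] - b[1], c[0] - b[0]) - math.atan2(a[1] - b[1], a[0] - b[0])
    )
    angle = abs(angle) % 360
    return 360 - angle if angle > 180 else angle

def pose_similarity(user_angles, target_angles, tolerance=30.0):
    """Fraction of joints whose angle is within `tolerance` degrees of the target."""
    matches = sum(
        1 for u, t in zip(user_angles, target_angles) if abs(u - t) <= tolerance
    )
    return matches / len(target_angles)

# Hypothetical keypoints (x, y) for one arm: shoulder, elbow, wrist.
elbow = joint_angle((0.0, 1.0), (0.0, 0.0), (1.0, 0.0))
print(elbow, pose_similarity([elbow, 170.0], [100.0, 90.0]))
```

Comparing angles rather than raw keypoint positions makes the check independent of where the player stands in the frame and how large they appear on camera.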

## 🚧 Challenges we ran into

We were excited about trying out Unity, so we spent around 10-12 hours trying to work with it. However, it was a lot more complex than we initially thought, and we decided to pivot to building the UI using React towards the end of the first day. Although we became a lot more familiar with Unity and the structure of 2D games, it proved to be more difficult than we anticipated, and we had to change our game plan to build a playable game.

## 🏆 Accomplishments that we're proud of

Considering that we completely changed our tech stack at around 1AM on the second day of hacking, we are proud that we built a working product in an extremely tight timeframe.

## 📚What we learned

This was the first time working with Unity for all of us. We got a surface level understanding of working with Unity and how game developers structure their games. We also explored graphic design to custom design the walls. Finally, working with an Angles Heuristics model was interesting too.

## ❓ What's next for Wall Guys

Next steps would be to improve the UI and add multiplayer! | ## Inspiration

One of our team members saw two foxes playing outside a small forest. Eagerly, he went closer to record them, but by the time he got there, the foxes were gone. Wishing he could have recorded them or at least gotten a recording from one of the locals, he imagined a digital system in nature. With the help of his teammates, this project grew into a real application and service which could change the landscape of the digital playground.

## What it does

It is a social media and educational application that stores recorded data in a digital geographic tag, which users of the app can access and play back. Unlike other social platforms, this application works only if you are at the geographic location where the picture was taken and the footprint was left. In the educational part, the application offers overlays of monuments, buildings or historical landscapes, so users can scroll through historical pictures of the exact location where they are standing. The images have captions that can be used for instruction and education, and the overlay function gives the user a realistic experience of the location at a different time.
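The "only at the location" rule can be illustrated with a great-circle distance check. The sketch below is an assumption about how such a check might be done, not the app's actual code: the tag structure, the function names, and the 50-metre radius are hypothetical.

```python
import math

EARTH_RADIUS_M = 6371000.0  # mean Earth radius in metres

def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))

def can_view(tag, user_lat, user_lon, radius_m=50.0):
    """True if the user is within radius_m of the place where the tag was recorded."""
    return haversine_m(tag["lat"], tag["lon"], user_lat, user_lon) <= radius_m

# Hypothetical tag and user positions.
tag = {"lat": 39.9522, "lon": -75.1932, "caption": "Two foxes playing"}
print(can_view(tag, 39.9522, -75.1932), can_view(tag, 40.9522, -75.1932))
```

A radius rather than an exact coordinate match is needed in practice because GPS readings drift by several metres even when the user is standing still.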

## How we built it

Lots of hours without sleep and thousands of GitHub pushes and pulls. We saw more red lines this weekend than in years put together. We used APIs and tons of trial and error, experimentation, and absurd humour and jokes to keep us alert.

## Challenges we ran into

The app did not want to behave, and the APIs would give us false results or, as in the case of Google Vision, inaccurate ones. Merging Firebase with Android Studio would rarely go down without a fight. The pictures we recorded would be saved and loaded horizontally, even if taken vertically. The GPS location and AR would cause issues with the server, and there were many more problems we just don't want to recall...

## Accomplishments that we're proud of

The application is fully functional and has all the basic features we planned it to have since the beginning. We got over a lot of bumps on the road and never gave up. We are proud to see this app demoed at Penn Apps XX.

## What we learned

Firebase, starting from very little experience; working with GPS services; recording the longitude and latitude of the pictures we took to the server; placing digital tags on a spatial digital map using Mapbox; and working with the painful Google Vision to analyze our images before they became available for service and were located on the map.

## What's next for Timelens

There are multiple features which we would love to have built at Penn Apps XX, but that was unrealistic due to time constraints. There are also new ideas for using the application in wider areas of daily life, not only in education and social networks, and for creating an interaction mode between AR and the user to have functionality in augmentation. | losing |

## Inspiration

There are many scary things in the world ranging from poisonous spiders to horrifying ghosts, but none of these things scare people more than the act of public speaking. Over 75% of humans suffer from a fear of public speaking but what if there was a way to tackle this problem? That's why we created Strive.

## What it does

Strive is a mobile application that leverages voice recognition and AI technologies to provide instant actionable feedback in analyzing the voice delivery of a person's presentation. Once you have recorded your speech Strive will calculate various performance variables such as: voice clarity, filler word usage, voice speed, and voice volume. Once the performance variables have been calculated, Strive will then render your performance variables in an easy-to-read statistic dashboard, while also providing the user with a customized feedback page containing tips to improve their presentation skills. In the settings page, users will have the option to add custom filler words that they would like to avoid saying during their presentation. Users can also personalize their speech coach for a more motivational experience. On top of the in-app given analysis, Strive will also send their feedback results via text-message to the user, allowing them to share/forward an analysis easily.
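Two of the performance variables mentioned above, filler word usage and voice speed, can be approximated from a transcript and a recording length. The sketch below is a simplified illustration, not Strive's implementation: the default filler list, the function name `speech_stats`, and the sample sentence are assumptions, and multi-word fillers such as "you know" are not handled.

```python
import re

DEFAULT_FILLERS = ("um", "uh", "like")  # users could extend this with custom words

def speech_stats(transcript, duration_seconds, fillers=DEFAULT_FILLERS):
    """Count words and filler words, and estimate speaking speed in words per minute."""
    words = re.findall(r"[a-z']+", transcript.lower())
    filler_count = sum(1 for word in words if word in fillers)
    wpm = len(words) * 60.0 / duration_seconds
    return {"words": len(words), "fillers": filler_count, "wpm": wpm}

# Hypothetical transcript of a six-second recording.
stats = speech_stats("Um, so I think, like, this is uh good", 6)
print(stats)
```

Passing the filler list as a parameter mirrors the settings page described above, where users add their own words to avoid.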

## How we built it

Using the collaboration tool Figma, we designed wireframes of our mobile app. We used tools such as Photoshop and GIMP to customize every page for an intuitive user experience. To create the front-end of our app we used the game engine Unity. Within Unity we sculpted each app page and connected components to backend C# functions and services. We leveraged IBM Watson's speech toolkit to calculate the performance variables and used stdlib's cloud function features for text messaging.

## Challenges we ran into

Given our skillsets from technical backgrounds, one challenge we ran into was developing out a simplistic yet intuitive user interface that helps users navigate the various features within our app. By leveraging collaborative tools such as Figma and seeking inspiration from platforms such as Dribbble, we were able to collectively develop a design framework that best suited the needs of our target user.

## Accomplishments that we're proud of

We created a fully functional mobile app while leveraging an unfamiliar technology stack, providing a simple application that people can use to start receiving actionable feedback on their public speaking. Anyone can use our app to improve their public speaking skills and conquer their fear of public speaking.

## What we learned

Over the course of the weekend one of the main things we learned was how to create an intuitive UI, and how important it is to understand the target user and their needs.

## What's next for Strive - Your Personal AI Speech Trainer

* Model voices of famous public speakers for a more realistic experience in giving personal feedback (using the Lyrebird API).

* Ability to calculate more performance variables for an even better analysis and more detailed feedback | ## Inspiration

Public speaking is greatly feared by many, yet it is a part of life that most of us have to go through. Despite this, the options for preparing for presentations effectively are *greatly limited*. Practicing with others is good, but that requires someone willing to listen to you for potentially hours. Talking in front of a mirror could work, but it does not live up to the real environment of a public speaker. As a result, public speaking is dreaded not only for the act itself, but also because it's *difficult to feel ready*. If there were an efficient way of ensuring you aced a presentation, the negative connotation associated with presentations would no longer exist. That is why we have created Speech Simulator, a VR web application for practicing public speaking. With it, we hope to alleviate the stress that comes with speaking in front of others.

## What it does

Speech Simulator is an easy-to-use VR web application. Simply log in with Discord, import your script into the site from any device, then put on your VR headset to enter a 3D classroom, a common location for public speaking. From there, you are able to practice speaking. Behind the user is a board containing your script, split into slides, emulating a real PowerPoint-style presentation. Once you have run through your script, you may exit VR, where you will find results based on the application's recording of your presentation. From your talking speed to how many filler words you said, Speech Simulator will provide you with stats based on your performance as well as a summary of what you did well and how you can improve. Presentations can be attempted again and are saved in our database. Additionally, any adjustments to the presentation templates can be made using our editing feature.

## How we built it

Our project was created primarily using the T3 stack. The stack uses **Next.js** as our full-stack React framework. The frontend uses **React** and **Tailwind CSS** for component state and styling. The backend uses **NextAuth.js** for login and user authentication and **Prisma** as our ORM. Type safety across the whole application was ensured using **tRPC**, **Zod**, and **TypeScript**. For the VR aspect of our project, we used **React Three Fiber** for rendering **Three.js** objects, **React XR**, and **React Speech Recognition** for transcribing speech to text. The server is hosted on Vercel and the database on **CockroachDB**.

## Challenges we ran into

Despite completing the project, there were numerous challenges that we ran into during the hackathon. The largest problem was the connection between the web app on the computer and the VR headset. As the two were separate web clients, it was very challenging to communicate our site's workflow between the two devices. For example, if a user finished their presentation in VR and wanted to view the results on their computer, how would this be accomplished without the user manually refreshing the page? After weighing WebSockets against polling, we went with polling plus a queuing system, which allowed each respective client to know what to display. We decided to use polling because it enables a serverless deploy, and we concluded that we did not have enough time to set up WebSockets. Another challenge we ran into was the 3D configuration of the application. As none of us had real experience with 3D web applications, it was a very daunting task to try to work with meshes and various geometry. However, after a lot of trial and error, we were able to manage a VR solution for our application.

## What we learned

This hackathon provided us with a great amount of experience and lessons. Although each of us learned a lot on the technological aspect of this hackathon, there were many other takeaways during this weekend. As this was most of our group's first 24 hour hackathon, we were able to learn to manage our time effectively in a day's span. With a small time limit and semi large project, this hackathon also improved our communication skills and overall coherence of our team. However, we did not just learn from our own experiences, but also from others. Viewing everyone's creations gave us insight on what makes a project meaningful, and we gained a lot from looking at other hacker's projects and their presentations. Overall, this event provided us with an invaluable set of new skills and perspective.

## What's next for VR Speech Simulator

There are a ton of ways that we believe we can improve Speech Simulator. The first and potentially most important change is the appearance of our VR setting. As this was our first project involving 3D rendering, we had difficulty adding colour to our classroom. This reduced the immersion that we originally hoped for, so improving our 3D environment would allow the user to practice more accurately. Furthermore, as public speaking implies speaking in front of others, large improvements can be made by adding human models into VR. On the other hand, we also believe that we can improve Speech Simulator by adding more functionality to the feedback it provides to the user. From hand gestures to tone of voice, there are so many ways of differentiating the quality of a presentation that could be added to our application. In the future, we hope to add these new features and further elevate Speech Simulator. | ## Inspiration

Public speaking is a critical skill in our lives. The ability to communicate effectively and efficiently is a very crucial, yet difficult skill to hone. For a few of us on the team, having grown up competing in public speaking competitions, we understand too well the challenges that individuals looking to improve their public speaking and presentation skills face. Building off of our experience of effective techniques and best practices and through analyzing the speech patterns of very well-known public speakers, we have designed a web app that will target weaker points in your speech and identify your strengths to make us all better and more effective communicators.

## What it does

By analyzing speaking data from many successful public speakers from a variety of industries and backgrounds, we have established relatively robust standards for optimal speed, energy levels and pausing frequency during a speech. Taking into consideration the overall tone of the speech, as selected by the user, we are able to tailor our analyses to the user's needs. This simple and easy-to-use web application will offer users insight into their overall accuracy, enunciation, WPM, pause frequency, energy levels throughout the speech, and error frequency per interval, and will summarize some helpful tips to improve their performance the next time around.

## How we built it

For the backend, we built a centralized RESTful Flask API to fetch all backend data from one endpoint. We used Google Cloud Storage to store files greater than 30 seconds as we found that locally saved audio files could only retain about 20-30 seconds of audio. We also used Google Cloud App Engine to deploy our Flask API as well as Google Cloud Speech To Text to transcribe the audio. Various python libraries were used for the analysis of voice data, and the resulting response returns within 5-10 seconds. The web application user interface was built using React, HTML and CSS and focused on displaying analyses in a clear and concise manner. We had two members of the team in charge of designing and developing the front end and two working on the back end functionality.

## Challenges we ran into

This hackathon, our team wanted to focus on creating a really good user interface to accompany the functionality. In our planning stages, we started looking into way more features than the time frame could accommodate, so a big challenge we faced was firstly, dealing with the time pressure and secondly, having to revisit our ideas many times and changing or removing functionality.

## Accomplishments that we're proud of

Our team is really proud of how well we worked together this hackathon, both in terms of team-wide discussions as well as efficient delegation of tasks for individual work. We leveraged many new technologies and learned so much in the process! Finally, we were able to create a good user interface to use as a platform to deliver our intended functionality.

## What we learned

Following the challenge that we faced during this hackathon, we were able to learn the importance of iteration within the design process and how helpful it is to revisit ideas and questions to see if they are still realistic and/or relevant. We also learned a lot about the great functionality that Google Cloud provides and how to leverage that in order to make our application better.

## What's next for Talko

In the future, we plan on continuing to develop the UI as well as add more functionality such as support for different languages. We are also considering creating a mobile app to make it more accessible to users on their phones. | winning |

## Motivation

Our motivation was a grand piano that has sat in our project lab at SFU for the past 2 years. The piano belonged to Richard Kwok's grandfather's friend and was being converted into a piano-scroll-playing piano. We had an excessive number of piano scrolls that were acting as door stops, and we wanted to hear these songs from the early 20th century. We decided to pursue a method to convert the piano scrolls into a digital copy of the song.

The system scrolls through the entire piano scroll and uses OpenCV to convert the scroll markings to individual notes. The array of notes is converted in near real time to a MIDI file that can be played once complete.

## Technology

Scrolling through the piano scroll was done by a DC motor, controlled by an Arduino via an H-bridge, that was wrapped around a Microsoft water bottle. The notes were recorded using OpenCV on a Raspberry Pi 3, programmed in Python. The result was a matrix representing each frame of notes from the Raspberry Pi camera. This array was exported to a MIDI file that could then be played.
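The step from a frame-by-frame matrix to playable notes can be sketched as follows. This is a hypothetical illustration of the idea, assuming each frame is a row of 0/1 values with one column per key; the function name `frames_to_events` and the starting note number are assumptions, and writing the actual MIDI file is left out.

```python
def frames_to_events(frames, lowest_note=21):
    """Turn per-frame key states into note-on/note-off events.

    frames: list of rows, each a list of 0/1 values (1 = hole detected for that key).
    Returns a list of (frame_index, "on" | "off", note_number) tuples.
    lowest_note=21 is MIDI A0, the lowest key on an 88-key piano (an assumption).
    """
    events = []
    if not frames:
        return events
    previous = [0] * len(frames[0])
    for t, row in enumerate(frames):
        for key, (was, now) in enumerate(zip(previous, row)):
            if now and not was:
                events.append((t, "on", lowest_note + key))
            elif was and not now:
                events.append((t, "off", lowest_note + key))
        previous = row
    # Release any notes still held when the scroll ends.
    for key, held in enumerate(previous):
        if held:
            events.append((len(frames), "off", lowest_note + key))
    return events

# Three hypothetical frames covering two keys.
print(frames_to_events([[1, 0], [1, 1], [0, 1]], lowest_note=60))
```

Detecting changes between consecutive frames, rather than emitting a note for every frame, is what turns a long hole in the scroll into a single sustained note.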

## Challenges we ran into

The openCV required a calibration method to assure accurate image recognition.

The external environment lighting conditions added extra complexity in the image recognition process.

The lack of musical background in the members and the necessity to decrypt the piano scroll for the appropriate note keys was an additional challenge.

The image recognition of the notes had to be dynamic for different orientations due to variable camera positions.

## Accomplishments that we're proud of

The device works and plays back the digitized music.

The design process was very fluid with minimal set backs.

The back-end processes were very well-designed with minimal fluids.

Richard won best use of a sponsor technology in a technical pickup line.

## What we learned

We learned how piano scrolls were designed and how they were written based on the musician's desired tempo.

Beginner musical knowledge relating to notes, keys and pitches. We learned about using OpenCV for image processing, and honed our Python skills while scripting the controller for our hack.

As we chose to do a hardware hack, we also learned about the applied use of circuit design, h-bridges (L293D chip), power management, autoCAD tools and rapid prototyping, friction reduction through bearings, and the importance of sheave alignment in belt-drive-like systems. We also were exposed to a variety of sensors for encoding, including laser emitters, infrared pickups, and light sensors, as well as PWM and GPIO control via an embedded system.

The environment allowed us to network with and get lots of feedback from sponsors - many were interested to hear about our piano project and wanted to weigh in with advice.

## What's next for Piano Men

Live playback of the system | ## Inspiration

In the theme of sustainability, we noticed that a lot of people don't know what's recyclable. Some people recycle what shouldn't be recycled, and many people recycle much less than they could. We wanted to find a way to improve recycling habits while also incentivizing people to recycle more. Cyke, pronounced "psych" (psyched about recycling), was the result.

## What it does

Cyke is a platform to get users in touch with local recycling facilities, to give recycling facilities more publicity, and to reward users for their good actions.

**For the user:** When a user creates an account for Cyke, their location is used to tell them what materials are able to be recycled and what materials aren't. Users are given a Cyke Card which has their rank. When a user recycles, the amount they recycled is measured and reported to Cyke, which stores that data in our CockroachDB database. Then, based on revenue share from recycling plants, users would be monetarily rewarded. The higher the person's rank, the more they receive for what they recycle. There are four ranks, ranging from "Learning" to "Superstar."

**For recycling companies:** For a recycling company to be listed on our website, they must agree to a revenue share corresponding to the amount of material recycled (can be discussed). This would be in return for guiding customers towards them and increasing traffic and recycling quality. Cyke provides companies with an overview of how well recycling is going: statistics over the past month or more, top individual contributors to their recycling plant, and an impact score relating to how much social good they've done by distributing money to users and charities. Individual staff members can also be invited to the Cyke page to view these statistics and other more detailed information.

## How we built it

Our site uses a **Node.JS** back-end, with **ejs** for the server-side rendering of pages. The backend connects to **CockroachDB** to store user and company information, recycling transactions, and a list of charities and how much has been donated to each.

## Challenges we ran into

We ran into challenges mostly with CockroachDB. One of us was able to successfully create a cluster and connect to it via the macOS terminal; however, when it came to connecting it to our front-end, there were a lot of issues with getting the right packages for the Linux CLI as well as with connecting via our connection string. We spent quite a few hours on this, as using CockroachDB Serverless was an essential part of hosting info about our recyclers, recycling companies, transactions, and charities.

## Accomplishments that we're proud of

We’re proud of getting CockroachDB to function properly. For two of the three members on the team this was our first time using a Node.js back-end, so it was difficult and rewarding to complete. On top of being proud of getting our SQL database off the ground, we’re proud of our design. We worked a lot on the colors. We are also proud of using the serverless form of CockroachDB, so our compute cluster is hosted on Google Cloud Platform (GCP).

## What we've learned

Some of our greatest challenges led to some of our greatest learning advances. By working through CockroachDB and SQL tables, which none of us had previous experience with, we learned a lot about environment variables and how to use Express and the pg driver to connect front-end and back-end elements.
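As an illustration of the environment-variable pattern mentioned above, here is a minimal Python sketch (our project itself used Node.js with Express and the pg driver, so this is an analogue, not our actual code). The variable name `DATABASE_URL`, the helper `load_db_config`, and the example URL are assumptions made for illustration only. 26257 is CockroachDB's default SQL port.

```python
import os
from urllib.parse import urlparse, parse_qs


def load_db_config(env=os.environ, var_name="DATABASE_URL"):
    """Read a PostgreSQL-style connection string from the environment.

    `var_name` is a hypothetical name chosen for this sketch.
    """
    url = env.get(var_name)
    if not url:
        raise RuntimeError(f"{var_name} is not set")

    parsed = urlparse(url)
    if parsed.scheme not in ("postgres", "postgresql"):
        raise ValueError(f"unexpected scheme: {parsed.scheme!r}")

    query = parse_qs(parsed.query)
    return {
        "host": parsed.hostname,
        # Fall back to CockroachDB's default SQL port if none is given.
        "port": parsed.port or 26257,
        "user": parsed.username,
        "database": parsed.path.lstrip("/"),
        # None when the URL does not specify an SSL mode.
        "sslmode": query.get("sslmode", [None])[0],
    }
```

Keeping the connection string in the environment rather than in source code means the same build can point at a local cluster or a hosted one without any code changes, and the password never lands in the repository.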

## What's next for Cyke

To scale our solution, the next steps involve increasing the personalization aspects of our application. For users, that means adding capabilities that highlight local charities to donate to, along with locale-based recycling information. On the company side, there are optimizations that can be made around the information that we provide them, for example improving the impact score to consider more factors like how consistent their users are. | ## Inspiration

We were inspired by our shared love of dance. We knew we wanted to do a hardware hack in the healthcare and accessibility spaces, but we weren't sure of the specifics. While we were talking, we mentioned how we enjoyed dance, and the campus DDR machine was brought up. We decided to incorporate that into our hardware hack with this handheld DDR mat!

## What it does

The device is oriented so that there are LEDs and buttons that are in specified directions (i.e. left, right, top, bottom) and the user plays a song they enjoy next to the sound sensor that activates the game. The LEDs are activated randomly to the beat of the song and the user must click the button next to the lit LED.

## How we built it

The team prototyped the device for the Arduino UNO with the initial intention of using a sound sensor as the focal point and slowly building around it, adding features where need be. The team was only able to add three features to the device due to the limited time span of the event. The first feature the team attempted to add was LEDs that reacted to the sound sensor, so it would activate LEDs to the beat of a song. The second feature the team attempted to add was a joystick. However, the team soon realized that the joystick was very sensitive and difficult to calibrate. It was then replaced by buttons, which operated much better and provided accessible feedback for the device. The last feature was an algorithm that added a factor of randomness to the LEDs to maximize the "game" aspect.
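The beat-triggered random LED idea can be sketched roughly as follows. This is a Python simulation for illustration, not the Arduino sketch that ran on the device. The threshold value, the function name `pick_leds`, and the rule of never lighting the same LED twice in a row are all assumptions added for this example.

```python
import random

# The four LED positions described in "What it does".
DIRECTIONS = ["left", "right", "top", "bottom"]


def pick_leds(sound_levels, threshold, rng):
    """Return one entry per sound sample: a direction on a beat, else None.

    A "beat" is assumed to be a rising edge: the level crosses the
    threshold after having been below it on the previous sample.
    """
    choices = []
    previous = None
    was_loud = False
    for level in sound_levels:
        is_loud = level >= threshold
        if is_loud and not was_loud:
            # Assumption: skip the previously lit LED so the game
            # never asks for the same button twice in a row.
            options = [d for d in DIRECTIONS if d != previous]
            previous = rng.choice(options)
            choices.append(previous)
        else:
            choices.append(None)
        was_loud = is_loud
    return choices
```

For example, `pick_leds([10, 80, 85, 20, 90], threshold=50, rng=random.Random())` lights an LED at the second and fifth samples only, since the third sample is a continuation of the same loud stretch.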

## Challenges we ran into

There was definitely no shortage of errors while working on this project. Working with the hardware on hand was difficult: the team was often unsure whether an issue stemmed from the hardware or from an error within the code.

## Accomplishments that we're proud of

The success of the aforementioned algorithm along with the sound sensor provided a very educational experience for the team. Calibrating the sound sensor and developing the functional prototype gave the team the opportunity to utilize prior knowledge and exercise skills.

## What we learned

The team learned how to work within a fast-paced environment and gained experience working with the Arduino IDE for the first time. A lot of research was dedicated to building the circuit and writing the code to make the device fully functional. Time was also lost on the joystick because the values it output did not align with those given in the datasheet. The team learned the importance of looking at recorded values instead of blindly following the datasheet.

## What's next for Happy Fingers

The next steps for the team are to develop the device further. With extra time, the joystick method could be developed and used as a viable component. The delay on the LEDs is another aspect to work on, with client research to determine the optimal timing for the game. To refine the game, the team is also thinking of adding a scoring system that lets players track their progress, with the device recording how many times they clicked the button for a lit LED at the correct time, as well as a buzzer to notify players that they clicked the incorrect button. Finally, in true arcade fashion, a display showing the high score and the player's current score could be added. | partial |

## Inspiration

As lane-keep assist and adaptive cruise control features become more available in commercial vehicles, we wanted to explore the potential of a dedicated collision avoidance system.

## What it does

We've created an adaptive, small-scale collision avoidance system that leverages Apple's AR technology to detect an oncoming vehicle in the system's field of view and respond appropriately by braking, slowing down, and/or turning.

## How we built it

Using Swift and ARKit, we built an image-detecting app and deployed it to an iOS device. The app was used to recognize a principal other vehicle (POV), get its position and velocity, and send data (corresponding to a certain driving mode) to an HTTP endpoint on Autocode. This data was then parsed and sent to an Arduino control board to actuate the motors of the automated vehicle.

## Challenges we ran into

One of the main challenges was transferring data from an iOS app/device to the Arduino. We were able to solve this by hosting a web server on Autocode and transferring data via HTTP requests. Although this allowed us to fetch the data and transmit it via Bluetooth to the Arduino, latency was still an issue and led us to adjust the danger zones in the automated vehicle's field of view accordingly.
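One way to think about adjusting danger zones for latency is to shrink the measured distance by however far the other vehicle can travel before a command arrives. The Python sketch below illustrates that idea only. The function name `driving_mode`, the mode names, and the zone sizes are hypothetical and are not the values or code used in our app.

```python
def driving_mode(distance_m, closing_speed_mps, latency_s,
                 brake_zone_m=0.5, slow_zone_m=1.0):
    """Pick a driving mode from distance, closing speed, and link latency.

    All thresholds are illustrative assumptions, not measured values.
    """
    # Distance the other vehicle covers while the command is in flight.
    # A vehicle moving away (negative closing speed) adds no margin.
    margin = max(closing_speed_mps, 0.0) * latency_s
    effective_distance = distance_m - margin

    if effective_distance <= brake_zone_m:
        return "brake"
    if effective_distance <= slow_zone_m:
        return "slow"
    return "cruise"
```

With these assumed thresholds, a vehicle 1.2 m away closing at 1 m/s yields "slow" at 0.5 s of latency but "brake" at 1.0 s, which is the sense in which higher latency effectively enlarges the danger zones.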

## Accomplishments that we're proud of

Our team was all-around unfamiliar with Swift and iOS development. Learning the Swift syntax and how to use ARKit's image detection feature in a day was definitely a proud moment. We used a variety of technologies in the project and finding a way to interface with all of them and have real-time data transfer between the mobile app and the car was another highlight!

## What we learned

We learned about Swift and, more generally, about what goes into developing an iOS app. Working with ARKit has inspired us to build more AR apps in the future.

## What's next for Anti-Bumper Car - A Collision Avoidance System

Specifically for this project, solving an issue related to file IO and reducing latency would be the next steps in providing a more reliable collision avoidance system. Hopefully one day this project can be expanded to a real-life system and help drivers stay safe on the road. | ## Inspiration

As OEMs (original equipment manufacturers) and consumers keep fitting brighter and brighter lights, oncoming traffic can be blinded. Combined with fatigue and the difficulty of judging distance, this makes it increasingly hard to drive safely at night. An extra set of eyes with night vision would help protect drivers, and that's where the NCAR comes into play. The Nighttime Collision Avoidance Response system provides those extra eyes via an infrared camera, uses machine learning to classify obstacles detected in the road, and projects light to indicate them, allowing safe driving regardless of the time of day.

## What it does

* NCAR provides users with an affordable wearable tech that ensures driver safety at night

* With its machine learning model, it can detect when humans are on the road when it is pitch black

* The NCAR alerts users of obstacles on the road by projecting a beam of light onto the windshield using the OLED Display

* If the user’s headlights fail, the infrared camera can act as a powerful backup light

## How we built it

* Machine Learning Model: TensorFlow API

* Python Libraries: OpenCV, PyGame

* Hardware: (Raspberry Pi 4B), 1 inch OLED display, Infrared Camera

## Challenges we ran into

* Training machine learning model with limited training data

* The infrared camera broke down, so we had to use old footage with the ML model

## Accomplishments that we're proud of

* Implementing a model that can detect human obstacles at 5-7 meters from the camera

* Building a portable design that can be implemented on any car

## What we learned

* Learned how to code different hardware sensors together

* Building a TensorFlow model on a Raspberry Pi

* Collaborating with people with different backgrounds, skills and experiences

## What's next for NCAR: Nighttime Collision Avoidance System

* Building a more custom training model that can detect and calculate the distances of the obstacles to the user

* A more sophisticated system of alerting users of obstacles on the path that is easy to maneuver

* Adjusting the OLED screen with a 3D printer to display light in a more noticeable way | ## Inspiration

We noticed one of the tracks involved creating a better environment for cities through the use of technology, also known as making our cities 'smarter.' We observed that in places like Boston & Cambridge, there are many intersections with unsafe areas for pedestrians and drivers. **Furthermore, 50% of all accidents occur at intersections, according to the Federal Highway Administration**. The risk is made worse by careless drivers, a lack of stop signs, confusing intersections, and more.

## What it does

This project uses a Raspberry Pi to predict potential dangerous driving situations. If we deduce that a potential collision can occur, our prototype will start creating a 'beeping' sound loud enough to gain the attention of those surrounding the scene. Ideally, our prototype will be attached onto traffic poles, similar to most traffic cameras.

## How we built it

We utilized a popular Computer Vision library known as OpenCV, in order to visualize our problem in Python. A demo of our prototype is shown in the GitHub repository, with a beeping sound occurring when the program finds a potential collision.

Our demonstration is built using a Raspberry Pi & a Logitech camera. Using artificial intelligence, we capture the current positions of cars and calculate their direction and velocity. Using this information, we predict potential close calls and accidents. In such a case, we make a beeping sound simulating an alarm to notify drivers and surrounding participants.

## Challenges we ran into

One challenge we ran into was detecting the car positions based on the frames in a reliable fashion.

A second challenge was calculating the speed and direction of vehicles based on the present frame & the previous frames.
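A minimal sketch of this calculation, assuming each detected car is reduced to a center point in pixel coordinates and that the frame rate is known. The function names are hypothetical, the units are pixels rather than real-world meters, and the constant-velocity extrapolation is a simplifying assumption made for this example.

```python
import math


def estimate_motion(prev_center, curr_center, fps):
    """Estimate speed (pixels/second) and heading (degrees) between frames.

    Heading uses image coordinates: 0 degrees points along +x (right),
    90 degrees along +y (down the image).
    """
    dx = curr_center[0] - prev_center[0]
    dy = curr_center[1] - prev_center[1]
    speed = math.hypot(dx, dy) * fps
    heading = math.degrees(math.atan2(dy, dx))
    return speed, heading


def predict_position(prev_center, curr_center, frames_ahead):
    """Extrapolate the center linearly, assuming constant velocity."""
    dx = curr_center[0] - prev_center[0]
    dy = curr_center[1] - prev_center[1]
    return (curr_center[0] + dx * frames_ahead,
            curr_center[1] + dy * frames_ahead)
```

In practice the per-frame displacement is noisy, so averaging it over several previous frames before extrapolating is a common way to stabilize the estimate.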

A third challenge included being able to determine if two lines are crossing based on their respective starting and ending coordinates. Solving this proved vital in order to make sure we alerted those in the vicinity in a quick and proper manner.
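A standard way to answer this question is the orientation (cross-product) test, sketched below in Python. This is the textbook technique and is not necessarily identical to the code in our repository. Each segment would run from a car's current position to its predicted position, and the function names are chosen for illustration.

```python
def orientation(p, q, r):
    """Return 1 or -1 for the turn direction of p -> q -> r, 0 if collinear."""
    cross = (q[0] - p[0]) * (r[1] - p[1]) - (q[1] - p[1]) * (r[0] - p[0])
    if cross > 0:
        return 1
    if cross < 0:
        return -1
    return 0


def on_segment(p, q, r):
    """Given r collinear with segment p-q, check that r lies within it."""
    return (min(p[0], q[0]) <= r[0] <= max(p[0], q[0])
            and min(p[1], q[1]) <= r[1] <= max(p[1], q[1]))


def segments_cross(a1, a2, b1, b2):
    """Return True if segment a1-a2 intersects segment b1-b2."""
    o1 = orientation(a1, a2, b1)
    o2 = orientation(a1, a2, b2)
    o3 = orientation(b1, b2, a1)
    o4 = orientation(b1, b2, a2)

    # General case: each segment's endpoints lie on opposite sides
    # of the other segment.
    if o1 != o2 and o3 != o4:
        return True

    # Collinear cases: an endpoint lies on the other segment.
    if o1 == 0 and on_segment(a1, a2, b1):
        return True
    if o2 == 0 and on_segment(a1, a2, b2):
        return True
    if o3 == 0 and on_segment(b1, b2, a1):
        return True
    if o4 == 0 and on_segment(b1, b2, a2):
        return True
    return False
```

Note that crossing paths alone do not imply a collision, since the two cars may reach the crossing point at different times. Comparing arrival times at that point would be a natural refinement.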

## Accomplishments that we're proud of

We are proud that we were able to adapt this project to multiple levels. Even putting the camera up to a screen showing a real collision video from YouTube resulted in the prototype alerting us of a potential crash **before the accident occurred**. We're also proud of the fact that we were able to abstract the hardware and make the layout of the final prototype aesthetically pleasing.

## What we learned

We learned about the potential of smart intersections and the safety benefits they can provide to an ever-advancing society. We hope our implementation can help reduce the 50% of collisions that occur at intersections by making those around the area more aware of potentially dangerous situations. We also learned a lot about working with OpenCV and computer vision. This was definitely a unique experience, and we were even able to walk around the surrounding Harvard campus, trying to get good footage to test our model on.

## What's next for Traffic Eye

We think we could make a better prediction model, as well as a weather-resilient model to account for varying types of weather throughout the year. We think a prototype like this can be scaled and placed on actual roads, given enough R&D. This definitely can help our cities advance with rising capabilities in Artificial Intelligence & Computer Vision! | winning |

## Inspiration

Bill - "Blindness is a major problem today and we hope to have a solution that takes a step in solving this"

George - "I like engineering"

We hope our tool makes a nonzero contribution to society.

## What it does

Generates a description of a scene and reads it aloud for visually impaired people. Leverages CLIP and other recent research advancements, along with our own contributions, to take a stab at the unsolved **generalized object detection** problem (i.e. object detection without training labels).

## How we built it

SenseSight consists of three modules: recorder, CLIP engine, and text2speech.

### Pipeline Overview