Update README.md

README.md

@@ -65,6 +65,17 @@ license: apache-2.0

Flan-UL2 is an encoder-decoder model based on the `T5` architecture. It uses the same configuration as the [`UL2 model`](https://huggingface.co/google/ul2) released last year. It was fine-tuned using the "Flan" prompt tuning and dataset collection.

@@ -73,22 +84,16 @@ According to the original [blog](https://www.yitay.net/blog/flan-ul2-20b) here a

- The Flan-UL2 checkpoint uses a receptive field of 2048 tokens, which makes it more usable for few-shot in-context learning.
- The original UL2 model also had mode switch tokens that were rather mandatory to get good performance. However, they were a little cumbersome, as they often require changes during inference or fine-tuning. In this update, we continued training UL2 20B for an additional 100k steps (with a small batch size) so that it forgets the “mode tokens” before applying Flan instruction tuning. This Flan-UL2 checkpoint no longer requires mode tokens.
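
One practical consequence of the 2048-token receptive field is that a few-shot prompt has to fit inside that budget. A minimal sketch, assuming the limit applies to the tokenized encoder input: the hypothetical helper `pack_few_shot` keeps exemplars in order until the next one would overflow, and the whitespace counter `whitespace_tokens` is only a stand-in for a real tokenizer.

```python
from typing import Callable, List, Sequence


def pack_few_shot(
    exemplars: Sequence[str],
    query: str,
    count_tokens: Callable[[str], int],
    budget: int = 2048,  # assumed to match the 2048-token receptive field
    sep: str = "\n\n",
) -> str:
    """Build a few-shot prompt that stays within `budget` tokens.

    Exemplars are added in order; packing stops at the first exemplar that
    would push the prompt over the budget. The query is always kept last.
    """
    if count_tokens(query) > budget:
        raise ValueError("the query alone exceeds the token budget")
    kept: List[str] = []
    for exemplar in exemplars:
        candidate = sep.join([*kept, exemplar, query])
        if count_tokens(candidate) > budget:
            break
        kept.append(exemplar)
    return sep.join([*kept, query])


def whitespace_tokens(text: str) -> int:
    """Crude stand-in tokenizer: counts whitespace-separated words."""
    return len(text.split())


prompt = pack_few_shot(["a b c", "d e f", "g h i"], "q r", whitespace_tokens, budget=8)
print(prompt)
```

With a real tokenizer, `count_tokens` could be something like `lambda s: len(tokenizer(s).input_ids)`. Stopping at the first exemplar that does not fit, rather than skipping ahead to shorter ones, keeps the exemplar order predictable.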

## Converting from T5X to Hugging Face

You can use the [`convert_t5x_checkpoint_to_pytorch.py`](https://github.com/huggingface/transformers/blob/main/src/transformers/models/t5/convert_t5x_checkpoint_to_pytorch.py) script and pass the argument `strict=False`. The final layer norm is missing from the original checkpoint dictionary, which is why `strict=False` is needed.

```bash
python convert_t5x_checkpoint_to_pytorch.py --t5x_checkpoint_path ~/code/ul2/flan-ul220b-v3/ --config_file config.json --pytorch_dump_path ~/code/ul2/flan-ul2
```
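
`strict` is the standard flag of PyTorch's `nn.Module.load_state_dict`: with the default `strict=True`, any missing or unexpected key raises an error, while `strict=False` loads the keys that match and only reports the rest. The sketch below illustrates just those PyTorch semantics; `ToyStack` is a hypothetical toy module (not T5 and not the conversion script) whose "converted" checkpoint lacks its final layer norm.

```python
import torch
from torch import nn


class ToyStack(nn.Module):
    """Hypothetical stand-in for a T5 stack: a projection plus a final layer norm."""

    def __init__(self, d_model: int = 4) -> None:
        super().__init__()
        self.proj = nn.Linear(d_model, d_model, bias=False)
        self.final_layer_norm = nn.LayerNorm(d_model)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return self.final_layer_norm(self.proj(x))


# A "converted" checkpoint that has the projection but no final layer norm.
checkpoint = {"proj.weight": torch.eye(4)}

# strict=True (the default) refuses to load an incomplete state dict.
try:
    ToyStack().load_state_dict(checkpoint)
    strict_load_failed = False
except RuntimeError:
    strict_load_failed = True

# strict=False loads the matching keys and reports the missing ones;
# the missing layer norm keeps its freshly initialised parameters.
model = ToyStack()
result = model.load_state_dict(checkpoint, strict=False)
print(result.missing_keys)     # ['final_layer_norm.weight', 'final_layer_norm.bias']
print(result.unexpected_keys)  # []
```

Because skipped keys silently keep their initial values, it is worth printing `missing_keys` after a non-strict load to confirm that only the expected parameters were left out.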

## Performance improvement

| Model | MMLU | BBH | MMLU-CoT | BBH-CoT | Avg |
| :--- | :---: | :---: | :---: | :---: | :---: |
| FLAN-PaLM 62B | 59.6 | 47.5 | 56.9 | 44.9 | 49.9 |
| FLAN-PaLM 540B | 73.5 | 57.9 | 70.9 | 66.3 | 67.2 |
| FLAN-T5-XXL 11B | 55.1 | 45.3 | 48.6 | 41.4 | 47.6 |
| FLAN-UL2 20B | 55.7 (+1.1%) | 45.9 (+1.3%) | 52.2 (+7.4%) | 42.7 (+3.1%) | 49.1 (+3.2%) |

# Using the model

For more efficient memory usage, we advise loading the model in 8-bit with the `load_in_8bit` flag, as follows:

@@ -126,9 +131,23 @@ print(tokenizer.decode(outputs[0]))

```python
# <pad> They have 23 - 20 = 3 apples left. They have 3 + 6 = 9 apples. Therefore, the answer is 9.</s>

```

# Introduction to UL2

|

| 131 |

|

|

|

|

|

|

|

| 132 |

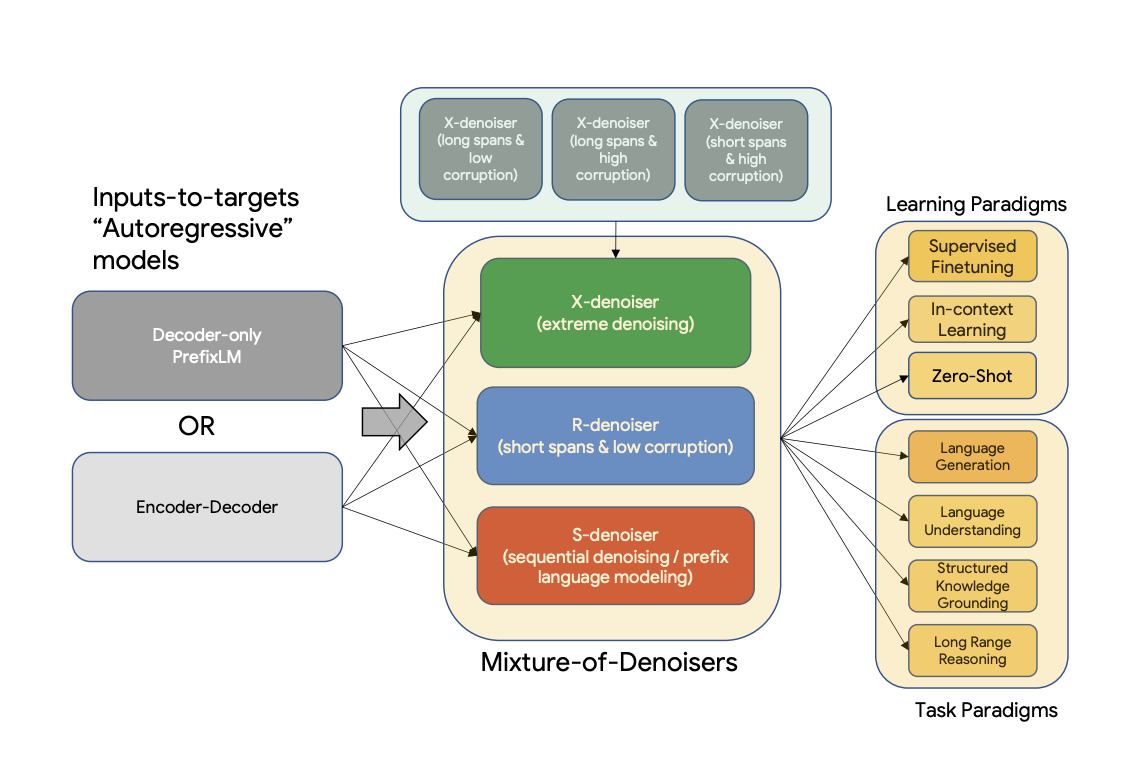

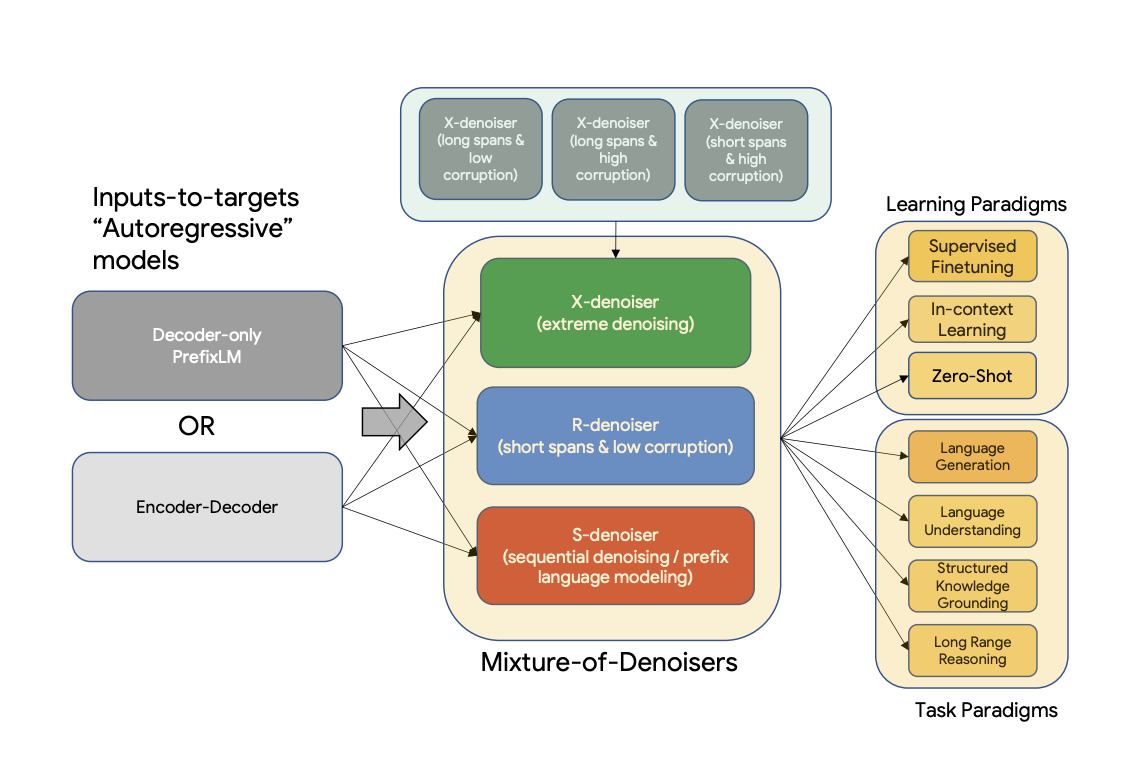

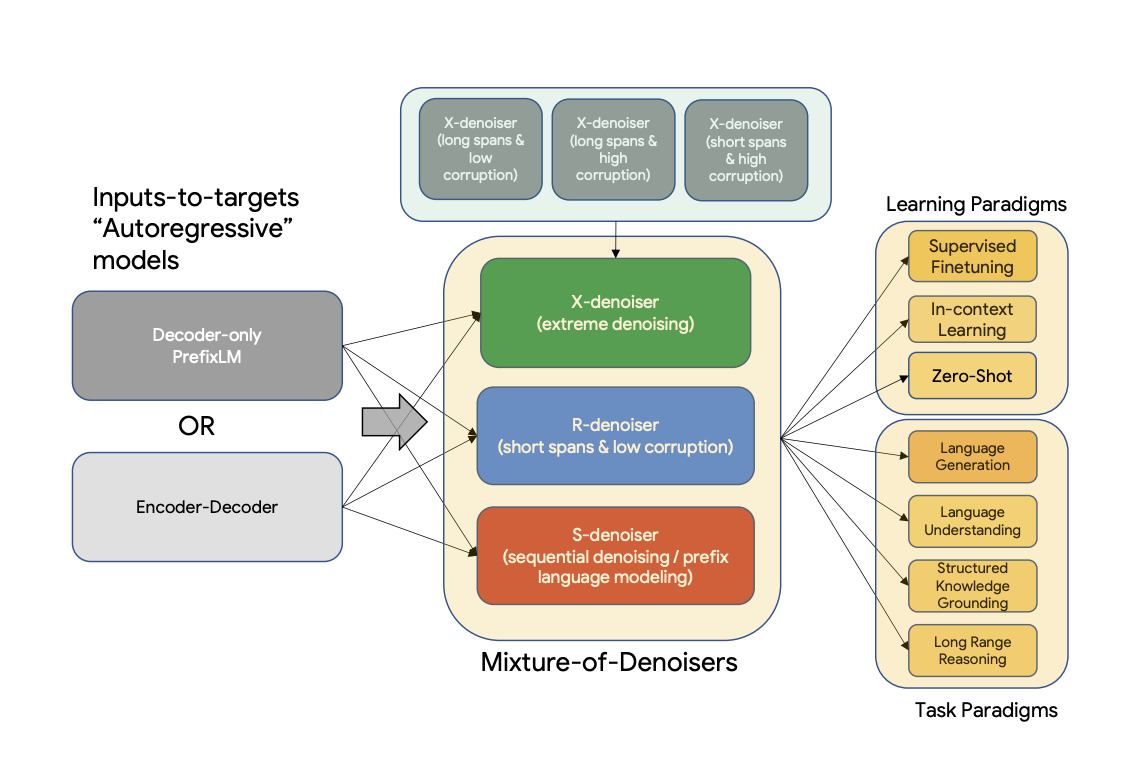

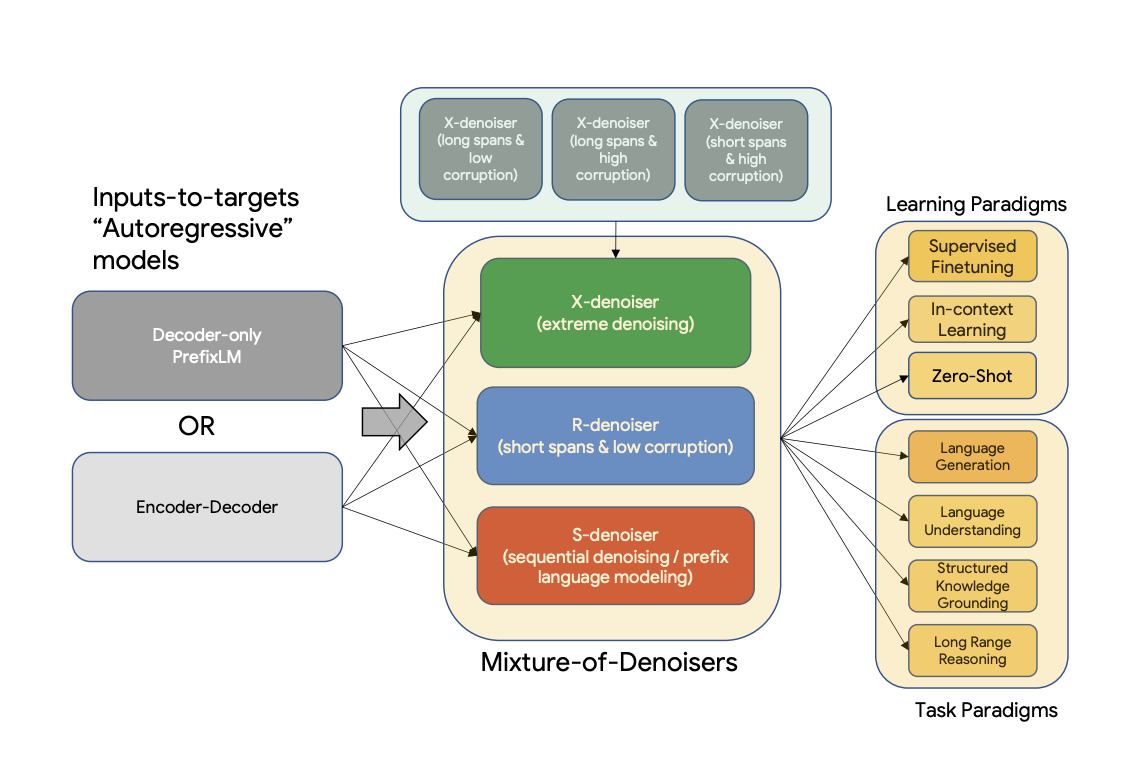

UL2 is a unified framework for pre-training models that are universally effective across datasets and setups. UL2 uses Mixture-of-Denoisers (MoD), a pre-training objective that combines diverse pre-training paradigms. UL2 introduces a notion of mode switching, wherein downstream fine-tuning is associated with specific pre-training schemes.
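
To make mode switching concrete: each training example is assigned one denoiser from the mixture, and that denoiser's mode token is prepended to the input (the `[NLU]`, `[S2S]` and `[NLG]` prefixes used in this card's R-, S- and X-Denoising examples). The sketch shows only this sampling-and-tagging step, not the corruption itself. The mixture weights in `MIXTURE` are made-up placeholders, not the ones used to train UL2, and the helper names are hypothetical.

```python
import random
from typing import Dict

# Mode token for each denoiser family, matching the prefixes used in this card.
MODE_TOKENS: Dict[str, str] = {"R": "[NLU]", "S": "[S2S]", "X": "[NLG]"}

# Placeholder mixture weights for illustration only (not UL2's actual mixture).
MIXTURE: Dict[str, float] = {"R": 0.4, "S": 0.2, "X": 0.4}


def sample_denoiser(rng: random.Random, mixture: Dict[str, float] = MIXTURE) -> str:
    """Draw one denoiser family in proportion to its mixture weight."""
    families = list(mixture)
    return rng.choices(families, weights=[mixture[f] for f in families], k=1)[0]


def add_mode_token(text: str, denoiser: str) -> str:
    """Prefix an input with the mode token of the chosen denoiser."""
    return f"{MODE_TOKENS[denoiser]} {text}"


rng = random.Random(0)  # seeded so the draw is reproducible
documents = ["first document", "second document", "third document"]
tagged = [add_mode_token(doc, sample_denoiser(rng)) for doc in documents]
for line in tagged:
    print(line)
```

At inference time the same tokens select a mode, which is what the prefixes in the denoising examples do; Flan-UL2 itself no longer requires them.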

@@ -143,9 +162,10 @@ Paper: [Unifying Language Learning Paradigms](https://arxiv.org/abs/2205.05131v1

Authors: *Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Xavier Garcia, Dara Bahri, Tal Schuster, Huaixiu Steven Zheng, Neil Houlsby, Donald Metzler*

# Training

##

The Flan-UL2 model was initialized from the `UL2` checkpoint and then further trained with Flan prompting. This means that the original training corpus is `C4`.

In “Scaling Instruction-Finetuned Language Models” (Chung et al.), sometimes also referred to as the Flan2 paper, the key idea is to train a large language model on a collection of datasets phrased as instructions, which enables generalization across diverse tasks. Flan has been primarily trained on academic tasks. In Flan2, we released a series of T5 models ranging from 200M to 11B parameters that have been instruction-tuned with Flan.

@@ -215,77 +235,10 @@ In total, the model was trained for 2.65 million steps.

**Important**: For more details, please see sections 5.2.1 and 5.2.2 of the [paper](https://arxiv.org/pdf/2205.05131v1.pdf).

This model was originally contributed by [Yi Tay](https://www.yitay.net/?author=636616684c5e64780328eece), and added to the Hugging Face ecosystem by [Younes Belkada](https://huggingface.co/ybelkada) & [Arthur Zucker](https://huggingface.co/ArthurZ).

The following shows how one can predict masked passages using the different denoising strategies.

Given the size of the model, the following examples need to be run on at least a 40 GB A100 GPU.

### S-Denoising

For *S-Denoising*, please make sure to prompt the text with the prefix `[S2S]` as shown below.

```python
from transformers import T5ForConditionalGeneration, AutoTokenizer
import torch

model = T5ForConditionalGeneration.from_pretrained("google/ul2", low_cpu_mem_usage=True, torch_dtype=torch.bfloat16).to("cuda")
tokenizer = AutoTokenizer.from_pretrained("google/ul2")

input_string = "[S2S] Mr. Dursley was the director of a firm called Grunnings, which made drills. He was a big, solid man with a bald head. Mrs. Dursley was thin and blonde and more than the usual amount of neck, which came in very useful as she spent so much of her time craning over garden fences, spying on the neighbours. The Dursleys had a small son called Dudley and in their opinion there was no finer boy anywhere <extra_id_0>"

inputs = tokenizer(input_string, return_tensors="pt").input_ids.to("cuda")

outputs = model.generate(inputs, max_length=200)

print(tokenizer.decode(outputs[0]))
# -> <pad>. Dudley was a very good boy, but he was also very stupid.</s>
```

### R-Denoising

For *R-Denoising*, please make sure to prompt the text with the prefix `[NLU]` as shown below.

```python
from transformers import T5ForConditionalGeneration, AutoTokenizer
import torch

model = T5ForConditionalGeneration.from_pretrained("google/ul2", low_cpu_mem_usage=True, torch_dtype=torch.bfloat16).to("cuda")
tokenizer = AutoTokenizer.from_pretrained("google/ul2")

input_string = "[NLU] Mr. Dursley was the director of a firm called <extra_id_0>, which made <extra_id_1>. He was a big, solid man with a bald head. Mrs. Dursley was thin and <extra_id_2> of neck, which came in very useful as she spent so much of her time <extra_id_3>. The Dursleys had a small son called Dudley and <extra_id_4>"

# Tokenize the prompt and move the input ids to the GPU
inputs = tokenizer(input_string, return_tensors="pt", add_special_tokens=False).input_ids.to("cuda")

outputs = model.generate(inputs, max_length=200)

print(tokenizer.decode(outputs[0]))
# -> "<pad><extra_id_0> Burrows<extra_id_1> brooms for witches and wizards<extra_id_2> had a lot<extra_id_3> scolding Dudley<extra_id_4> a daughter called Petunia. Dudley was a nasty, spoiled little boy who was always getting into trouble. He was very fond of his pet rat, Scabbers.<extra_id_5> Burrows<extra_id_3> screaming at him<extra_id_4> a daughter called Petunia</s>"
```
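
The `[NLU]` prompt above is a span-corruption input: masked spans are replaced by the sentinel tokens `<extra_id_0>`, `<extra_id_1>` and so on, and the model answers with each sentinel followed by its predicted span. A minimal sketch of both directions, assuming word-level spans given as hypothetical `(start, length)` pairs; actual UL2 pre-processing presumably works on SentencePiece tokens with randomly sampled spans. The helpers `mask_spans`, `extract_spans` and `fill_in` are illustrative, not part of `transformers`.

```python
import re
from typing import Dict, List, Tuple

SENTINEL = re.compile(r"<extra_id_(\d+)>")


def mask_spans(words: List[str], spans: List[Tuple[int, int]]) -> Tuple[str, str]:
    """Replace non-overlapping (start, length) word spans with sentinels.

    Returns the corrupted input and the target that lists each sentinel
    followed by the words it replaced.
    """
    inputs: List[str] = []
    targets: List[str] = []
    cursor = 0
    for i, (start, length) in enumerate(sorted(spans)):
        inputs.extend(words[cursor:start])
        inputs.append(f"<extra_id_{i}>")
        targets.append(f"<extra_id_{i}> " + " ".join(words[start:start + length]))
        cursor = start + length
    inputs.extend(words[cursor:])
    return " ".join(inputs), " ".join(targets)


def extract_spans(decoded: str) -> Dict[int, str]:
    """Map each sentinel id to the first span generated for it."""
    text = decoded.replace("<pad>", "").replace("</s>", "")
    parts = SENTINEL.split(text)  # [prefix, id0, span0, id1, span1, ...]
    spans: Dict[int, str] = {}
    for idx, span in zip(parts[1::2], parts[2::2]):
        spans.setdefault(int(idx), span.strip())
    return spans


def fill_in(masked_input: str, decoded: str) -> str:
    """Substitute the predicted spans back into the masked input."""
    spans = extract_spans(decoded)
    return SENTINEL.sub(lambda m: spans.get(int(m.group(1)), m.group(0)), masked_input)


words = "The Dursleys had a small son called Dudley".split()
masked, target = mask_spans(words, [(1, 1), (7, 1)])
print(masked)
print(target)
print(fill_in(masked, "<pad>" + target + "</s>"))
```

`extract_spans` keeps only the first span per sentinel via `setdefault`, since decoded outputs such as the one above can repeat sentinel ids; sentinels that do not occur in the masked input are simply ignored by `fill_in`.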

### X-Denoising

For *X-Denoising*, please make sure to prompt the text with the prefix `[NLG]` as shown below.

```python
from transformers import T5ForConditionalGeneration, AutoTokenizer
import torch

model = T5ForConditionalGeneration.from_pretrained("google/ul2", low_cpu_mem_usage=True, torch_dtype=torch.bfloat16).to("cuda")
tokenizer = AutoTokenizer.from_pretrained("google/ul2")

input_string = "[NLG] Mr. Dursley was the director of a firm called Grunnings, which made drills. He was a big, solid man with a bald head. Mrs. Dursley was thin and blonde and more than the usual amount of neck, which came in very useful as she spent so much of her time craning over garden fences, spying on the neighbours. The Dursleys had a small son called Dudley and in their opinion there was no finer boy anywhere. <extra_id_0>"

inputs = tokenizer(input_string, return_tensors="pt", add_special_tokens=False).input_ids.to("cuda")

outputs = model.generate(inputs, max_length=200)

print(tokenizer.decode(outputs[0]))
# -> "<pad><extra_id_0> Burrows<extra_id_1> a lot of money from the manufacture of a product called '' Burrows'''s ''<extra_id_2> had a lot<extra_id_3> looking down people's throats<extra_id_4> a daughter called Petunia. Dudley was a very stupid boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat, ugly boy who was always getting into trouble. He was a big, fat,"
```

# Table of Contents

0. [TL;DR](#tldr)
1. [Using the model](#using-the-model)
2. [Results](#results)
3. [Introduction to UL2](#introduction-to-ul2)
4. [Contribution](#contribution)
5. [Citation](#citation)

# TL;DR

Flan-UL2 is an encoder-decoder model based on the `T5` architecture. It uses the same configuration as the [`UL2 model`](https://huggingface.co/google/ul2) released last year. It was fine-tuned using the "Flan" prompt tuning and dataset collection.

- The Flan-UL2 checkpoint uses a receptive field of 2048 tokens, which makes it more usable for few-shot in-context learning.
- The original UL2 model also had mode switch tokens that were rather mandatory to get good performance. However, they were a little cumbersome, as they often require changes during inference or fine-tuning. In this update, we continued training UL2 20B for an additional 100k steps (with a small batch size) so that it forgets the “mode tokens” before applying Flan instruction tuning. This Flan-UL2 checkpoint no longer requires mode tokens.

# Using the model

## Converting from T5X to Hugging Face

You can use the [`convert_t5x_checkpoint_to_pytorch.py`](https://github.com/huggingface/transformers/blob/main/src/transformers/models/t5/convert_t5x_checkpoint_to_pytorch.py) script and pass the argument `strict=False`. The final layer norm is missing from the original checkpoint dictionary, which is why `strict=False` is needed.

```bash
python convert_t5x_checkpoint_to_pytorch.py --t5x_checkpoint_path ~/code/ul2/flan-ul220b-v3/ --config_file config.json --pytorch_dump_path ~/code/ul2/flan-ul2
```

## Running the model

For more efficient memory usage, we advise loading the model in 8-bit with the `load_in_8bit` flag, as follows:

```python
# <pad> They have 23 - 20 = 3 apples left. They have 3 + 6 = 9 apples. Therefore, the answer is 9.</s>

```
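
As a rough guide to why 8-bit loading helps: the weights of a 20B-parameter model take about 2 bytes per parameter in bfloat16 and about 1 byte in int8, ignoring activations and framework overhead. The sketch below computes that estimate and wraps the 8-bit load in a hypothetical helper, `load_flan_ul2_8bit`. The Hub id `google/flan-ul2` is an assumption here, and loading needs `transformers`, `accelerate`, `bitsandbytes` and a GPU; recent `transformers` releases also accept the same option as `quantization_config=BitsAndBytesConfig(load_in_8bit=True)`.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Rough size of the weights alone, in GB (no activations, no framework overhead)."""
    return num_params * bytes_per_param / 1e9


def load_flan_ul2_8bit(model_id: str = "google/flan-ul2"):
    """Load the model with 8-bit weights (assumed Hub id; needs a GPU and bitsandbytes)."""
    # Imported lazily so the estimate above can run without transformers installed.
    from transformers import AutoTokenizer, T5ForConditionalGeneration

    model = T5ForConditionalGeneration.from_pretrained(model_id, device_map="auto", load_in_8bit=True)
    tokenizer = AutoTokenizer.from_pretrained(model_id)
    return model, tokenizer


if __name__ == "__main__":
    for dtype, nbytes in [("float32", 4), ("bfloat16", 2), ("int8", 1)]:
        print(f"{dtype:>8}: ~{weight_memory_gb(20e9, nbytes):.0f} GB of weights")
```

For 20B parameters this gives roughly 80 GB in float32, 40 GB in bfloat16 and 20 GB in int8. On a suitable GPU, `model, tokenizer = load_flan_ul2_8bit()` can then be used with `tokenizer(...)` and `model.generate(...)` in the same way as in the denoising examples.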

# Results

## Performance improvement

The reported results are as follows (the percentages in parentheses are relative improvements over FLAN-T5-XXL 11B):

| Model | MMLU | BBH | MMLU-CoT | BBH-CoT | Avg |
| :--- | :---: | :---: | :---: | :---: | :---: |
| FLAN-PaLM 62B | 59.6 | 47.5 | 56.9 | 44.9 | 49.9 |
| FLAN-PaLM 540B | 73.5 | 57.9 | 70.9 | 66.3 | 67.2 |
| FLAN-T5-XXL 11B | 55.1 | 45.3 | 48.6 | 41.4 | 47.6 |
| FLAN-UL2 20B | 55.7 (+1.1%) | 45.9 (+1.3%) | 52.2 (+7.4%) | 42.7 (+3.1%) | 49.1 (+3.2%) |
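
The percentages in the FLAN-UL2 row can be reproduced as relative gains over FLAN-T5-XXL, i.e. (UL2 - T5) / T5 x 100, rounded to one decimal. A quick check using the scores from the table:

```python
# Scores copied from the results table.
FLAN_T5_XXL = {"MMLU": 55.1, "BBH": 45.3, "MMLU-CoT": 48.6, "BBH-CoT": 41.4, "Avg": 47.6}
FLAN_UL2 = {"MMLU": 55.7, "BBH": 45.9, "MMLU-CoT": 52.2, "BBH-CoT": 42.7, "Avg": 49.1}


def relative_gain(new: float, base: float) -> float:
    """Relative improvement of `new` over `base`, in percent, rounded to 0.1."""
    return round((new - base) / base * 100, 1)


gains = {name: relative_gain(FLAN_UL2[name], FLAN_T5_XXL[name]) for name in FLAN_UL2}
print(gains)
# {'MMLU': 1.1, 'BBH': 1.3, 'MMLU-CoT': 7.4, 'BBH-CoT': 3.1, 'Avg': 3.2}
```

The same arithmetic confirms the FLAN-UL2 average: (55.7 + 45.9 + 52.2 + 42.7) / 4 = 49.125, which rounds to 49.1.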

# Introduction to UL2

This entire section has been copied from the [`google/ul2`](https://huggingface.co/google/ul2) model card and may be subject to change with respect to `flan-ul2`.

UL2 is a unified framework for pre-training models that are universally effective across datasets and setups. UL2 uses Mixture-of-Denoisers (MoD), a pre-training objective that combines diverse pre-training paradigms. UL2 introduces a notion of mode switching, wherein downstream fine-tuning is associated with specific pre-training schemes.

Authors: *Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Xavier Garcia, Dara Bahri, Tal Schuster, Huaixiu Steven Zheng, Neil Houlsby, Donald Metzler*

## Training

### Flan UL2

The Flan-UL2 model was initialized from the `UL2` checkpoint and then further trained with Flan prompting. This means that the original training corpus is `C4`.

In “Scaling Instruction-Finetuned Language Models” (Chung et al.), sometimes also referred to as the Flan2 paper, the key idea is to train a large language model on a collection of datasets phrased as instructions, which enables generalization across diverse tasks. Flan has been primarily trained on academic tasks. In Flan2, we released a series of T5 models ranging from 200M to 11B parameters that have been instruction-tuned with Flan.

**Important**: For more details, please see sections 5.2.1 and 5.2.2 of the [paper](https://arxiv.org/pdf/2205.05131v1.pdf).

# Contribution

This model was originally contributed by [Yi Tay](https://www.yitay.net/?author=636616684c5e64780328eece), and added to the Hugging Face ecosystem by [Younes Belkada](https://huggingface.co/ybelkada) & [Arthur Zucker](https://huggingface.co/ArthurZ).

# Citation

If you want to cite this work, please consider citing the [blog post](https://www.yitay.net/blog/flan-ul2-20b) announcing the release of `Flan-UL2`.
