---

language:

- en

datasets:

- c4

license: apache-2.0

---

# Introduction

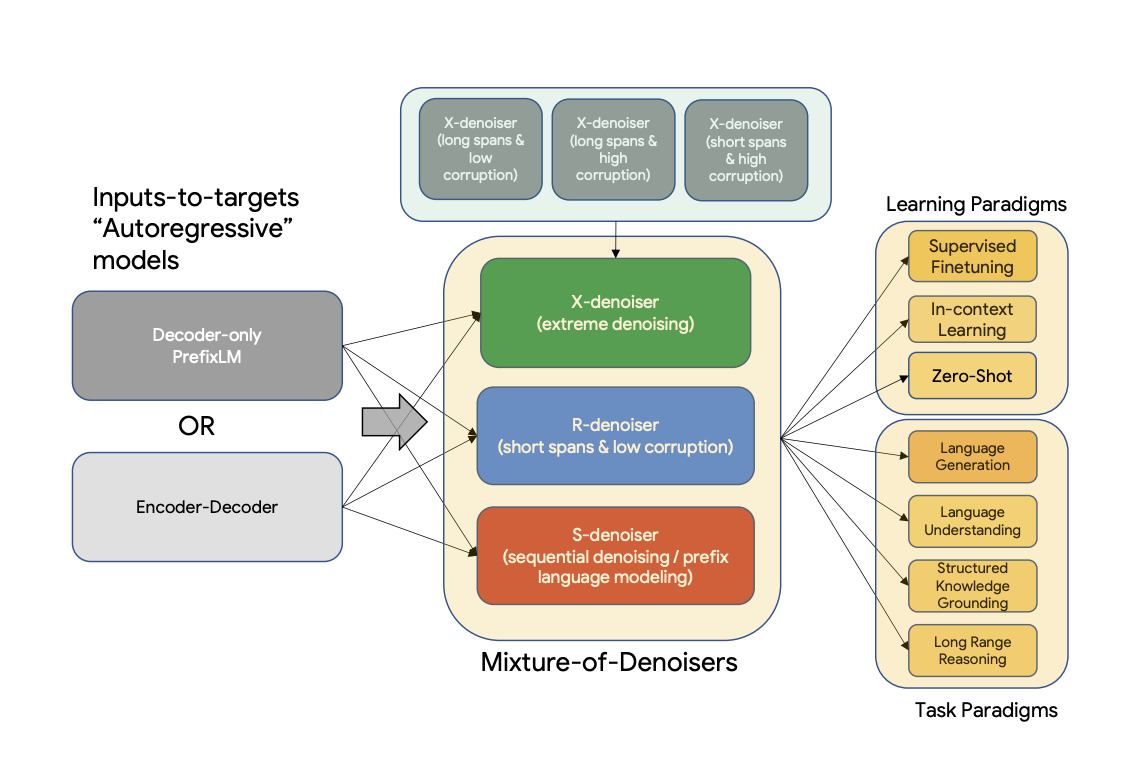

UL2 is a unified framework for pre-training models that are universally effective across datasets and setups. UL2 uses Mixture-of-Denoisers (MoD), a pre-training objective that combines diverse pre-training paradigms. UL2 also introduces a notion of mode switching, wherein downstream fine-tuning is associated with specific pre-training schemes.
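To make the idea of a denoiser concrete: in UL2 the R- and X-denoisers are variants of T5-style span corruption that differ in span length and corruption rate, while the S-denoiser is closer to prefix language modeling. The following is a minimal sketch of span corruption using the T5 sentinel tokens (`<extra_id_0>`, `<extra_id_1>`, ...) that also appear in the examples below. The function name is hypothetical, and the span positions are passed in explicitly, whereas the actual objective samples them; treat it as an illustration rather than the UL2 implementation.

```python
from typing import List, Tuple


def span_corrupt(tokens: List[str], spans: List[Tuple[int, int]]) -> Tuple[List[str], List[str]]:
    """Replace each (start, length) span with a sentinel and return (inputs, targets).

    Hypothetical helper: spans are given explicitly and assumed not to overlap.
    """
    inputs: List[str] = []
    targets: List[str] = []
    pos = 0
    for i, (start, length) in enumerate(sorted(spans)):
        sentinel = f"<extra_id_{i}>"
        inputs.extend(tokens[pos:start])  # keep the uncorrupted text before the span
        inputs.append(sentinel)           # the span itself is replaced by one sentinel
        targets.append(sentinel)          # the target repeats the sentinel ...
        targets.extend(tokens[start:start + length])  # ... followed by the removed tokens
        pos = start + length
    inputs.extend(tokens[pos:])           # trailing uncorrupted text
    return inputs, targets


inputs, targets = span_corrupt("the cat sat on the mat".split(), [(1, 1), (4, 2)])
print(" ".join(inputs))   # the <extra_id_0> sat on <extra_id_1>
print(" ".join(targets))  # <extra_id_0> cat <extra_id_1> the mat
```

Short spans at a low corruption rate resemble the R-denoiser setting; longer spans or a higher corruption rate move towards the X-denoisers.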

**Abstract**

Existing pre-trained models are generally geared towards a particular class of problems. To date, there seems to be still no consensus on what the right architecture and pre-training setup should be. This paper presents a unified framework for pre-training models that are universally effective across datasets and setups. We begin by disentangling architectural archetypes with pre-training objectives -- two concepts that are commonly conflated. Next, we present a generalized and unified perspective for self-supervision in NLP and show how different pre-training objectives can be cast as one another and how interpolating between different objectives can be effective. We then propose Mixture-of-Denoisers (MoD), a pre-training objective that combines diverse pre-training paradigms together. We furthermore introduce a notion of mode switching, wherein downstream fine-tuning is associated with specific pre-training schemes. We conduct extensive ablative experiments to compare multiple pre-training objectives and find that our method pushes the Pareto-frontier by outperforming T5 and/or GPT-like models across multiple diverse setups. Finally, by scaling our model up to 20B parameters, we achieve SOTA performance on 50 well-established supervised NLP tasks ranging from language generation (with automated and human evaluation), language understanding, text classification, question answering, commonsense reasoning, long text reasoning, structured knowledge grounding and information retrieval. Our model also achieves strong results at in-context learning, outperforming 175B GPT-3 on zero-shot SuperGLUE and tripling the performance of T5-XXL on one-shot summarization.

For more information, please take a look at the original paper.

Paper: [Unifying Language Learning Paradigms](https://arxiv.org/abs/2205.05131v1)

Authors: *Yi Tay, Mostafa Dehghani, Vinh Q. Tran, Xavier Garcia, Dara Bahri, Tal Schuster, Huaixiu Steven Zheng, Neil Houlsby, Donald Metzler*

# Pre-training

The model is pretrained on the C4 corpus. A batch size of 1024 is used for pretraining this model.

The model is trained on a total of 1 trillion tokens on C4 (2 million steps). The sequence length is set to 512/512 for inputs and targets.
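As a quick sanity check, the batch size, sequence length and step count stated above multiply out to roughly the quoted 1 trillion tokens. This sketch assumes that only the 512 input tokens of each sequence are counted and ignores the target side; that counting convention is an assumption, not something this card states.

```python
BATCH_SIZE = 1024     # sequences per step (stated above)
INPUT_LENGTH = 512    # input tokens per sequence (stated above)
STEPS = 2_000_000     # pre-training steps (stated above)

# Assumption: count only the input tokens of each sequence, not the targets.
tokens_seen = BATCH_SIZE * INPUT_LENGTH * STEPS
print(f"{tokens_seen:,} tokens, about {tokens_seen / 1e12:.2f} trillion")
```

This prints `1,048,576,000,000 tokens, about 1.05 trillion`.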

Dropout is set to 0 during pre-training. Pre-training took slightly more than one month for about 1 trillion tokens. We use the same mixture of denoisers as in the earlier sections of the paper.

The model has 32 encoder layers and 32 decoder layers, a `d_model` of 4096 and a `d_ff` of 16384. The dimension of each head is 256, for a total of 16 heads. Our model uses a model parallelism of 8. We retain the same SentencePiece tokenizer as T5, with a vocabulary size of 32k.

Hence, UL20B can be interpreted as a model that is quite similar to T5 but trained with a different objective and slightly different scaling knobs.

As with the earlier experiments in the paper, **UL20B** is trained with JAX and T5X infrastructure.
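The architecture hyperparameters above can be checked with a back-of-the-envelope parameter count; note that 16 heads of dimension 256 give exactly `d_model` = 4096. This sketch rests on assumptions that this card does not state: a T5 v1.1-style gated feed-forward block (three weight matrices per layer), bias-free projections, and a single 32,000 x 4096 embedding matrix, with layer norms and relative-position biases ignored. Under those assumptions the total is roughly 19.5B, consistent with the "20B" in the model name, whereas a non-gated feed-forward block would give about 15B.

```python
# Hyperparameters stated in this card; VOCAB = 32_000 is an assumed reading of "32k".
D_MODEL = 4096
D_FF = 16384
NUM_HEADS = 16
D_HEAD = 256
ENC_LAYERS = 32
DEC_LAYERS = 32
VOCAB = 32_000


def attention_params(d_model: int, num_heads: int, d_head: int) -> int:
    # Q, K, V and output projections, assumed bias-free
    inner = num_heads * d_head
    return 4 * d_model * inner


def ffn_params(d_model: int, d_ff: int, gated: bool = True) -> int:
    # Assumed gated FFN: two input projections plus one output projection
    n_matrices = 3 if gated else 2
    return n_matrices * d_model * d_ff


def estimate_params(gated: bool = True) -> int:
    attn = attention_params(D_MODEL, NUM_HEADS, D_HEAD)
    ffn = ffn_params(D_MODEL, D_FF, gated)
    encoder = ENC_LAYERS * (attn + ffn)        # self-attention + FFN per layer
    decoder = DEC_LAYERS * (2 * attn + ffn)    # self-attention + cross-attention + FFN
    embeddings = VOCAB * D_MODEL               # one embedding matrix only
    return encoder + decoder + embeddings


print(f"gated FFN:     {estimate_params(gated=True) / 1e9:.1f}B")
print(f"non-gated FFN: {estimate_params(gated=False) / 1e9:.1f}B")
```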

## Fine-tuning

The model was continuously fine-tuned after every N pre-training steps, where N is typically between 50k and 100k.

In other words, after every N steps of pre-training, we fine-tune on each downstream task and record its results. This is generally done manually.

While some tasks were fine-tuned on earlier checkpoints while the model was still pre-training, many were fine-tuned on the checkpoints nearer to convergence that we release.

As we continuously fine-tune, we stop fine-tuning on a task once it has reached state-of-the-art (SOTA) performance, to save compute.

In total, the model was trained for 2.65 million steps.

**Important**: For more details, please see sections 5.2.1 and 5.2.2 of the paper.
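The continuous fine-tuning procedure described above can be sketched as a simple loop. The callback `finetune_and_score`, the function name and the fixed `eval_every` interval are hypothetical simplifications: the card says the interval N varies between roughly 50k and 100k steps and that the process was largely manual. The sketch only illustrates the "stop fine-tuning a task once it reaches SOTA" logic.

```python
from typing import Callable, Dict, List


def continuous_finetuning(
    total_steps: int,
    eval_every: int,
    tasks: List[str],
    finetune_and_score: Callable[[str, int], float],  # hypothetical: fine-tune `task` from the checkpoint at `step`
    sota: Dict[str, float],
) -> Dict[str, int]:
    """Return, for each task, the pre-training step whose checkpoint first reached SOTA."""
    remaining = set(tasks)
    reached_at: Dict[str, int] = {}
    for step in range(eval_every, total_steps + 1, eval_every):
        for task in sorted(remaining):  # sorted() copies, so discarding below is safe
            if finetune_and_score(task, step) >= sota[task]:
                reached_at[task] = step
                remaining.discard(task)  # stop spending compute on this task
        if not remaining:
            break
    return reached_at


# Toy usage: a made-up score that grows with the number of pre-training steps.
result = continuous_finetuning(
    total_steps=300_000,
    eval_every=50_000,
    tasks=["a", "b"],
    finetune_and_score=lambda task, step: step / 1000,
    sota={"a": 100.0, "b": 250.0},
)
print(result)  # {'a': 100000, 'b': 250000}
```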

## Contribution

This model was contributed by [Daniel Hesslow](https://huggingface.co/Seledorn)

## Examples

Note that the model has been fine-tuned.

```python
import torch
from transformers import AutoTokenizer, T5ForConditionalGeneration

# Load the weights in bfloat16; low_cpu_mem_usage reduces peak CPU memory while loading
model = T5ForConditionalGeneration.from_pretrained("Seledorn/ul2", low_cpu_mem_usage=True, torch_dtype=torch.bfloat16)
tokenizer = AutoTokenizer.from_pretrained("Seledorn/ul2")
```

## Example usage

Use `[NLG]` for X-denoisers, `[NLU]` for R-denoisers and `[S2S]` for S-denoisers.

```python
import re

import torch

# Sentinel tokens such as <extra_id_0> mark the spans the model should fill in.
inps = ["""Mr. and Mrs. Dursley, of number four, Privet Drive, <extra_id_0> the last people you'd expect to be involved in anything strange or mysterious, because they just didn't hold with such nonsense.

Mr. Dursley was the director of a firm called Grunnings, which made drills. He was a big, <extra_id_1>. Mrs. Dursley was thin and blonde and had nearly twice the usual amount of neck, which came in very useful as she spent so much of her time craning over garden fences, spying on the neighbours. The Dursleys had a small son called Dudley and in their opinion there was no finer boy anywhere.

The Dursleys had everything they wanted, but they also had a secret, and their greatest fear was that somebody would discover it. They didn't think they could bear it if anyone found out about the Potters. Mrs. Potter was Mrs. Dursley's sister, but they hadn't met for several years; in fact, Mrs. Dursley pretended she didn't have a sister, because her sister and her good-for-nothing husband were as unDursleyish as it was possible to be. The Dursleys shuddered to think what the neighbours would say if the Potters arrived in the street. The Dursleys knew that the Potters had a small son, too, but they had never even seen him. This boy was another good reason for keeping the Potters away; they didn't want Dudley mixing with a child like that."""
]

# Matches T5 sentinel tokens: <extra_id_0>, <extra_id_1>, ...
pattern = r"<extra_id_\d+>"

model.cuda()
model.eval()

with torch.no_grad():
    for inp in inps:
        inputs = tokenizer(inp, return_tensors="pt").input_ids.cuda()
        # Sample four completions per prompt
        outputs = model.generate(
            inputs, max_length=200, do_sample=True, temperature=0.9, num_return_sequences=4
        )
        for output in outputs:
            # Keep the sentinels when decoding, but drop the pad and end-of-sequence tokens
            out = tokenizer.decode(output).replace("<pad>", "").replace("</s>", "")
            inp_parts = re.split(pattern, inp)
            # Generated span i follows the i-th sentinel in the output
            spans = re.split(pattern, out)[1:len(inp_parts)]
            spans += [""] * (len(inp_parts) - len(spans))  # pad so no prompt text is dropped
            # Interleave the prompt text with the generated spans, marking the spans in bold
            print("".join(x + (f"**{y.strip()}**" if y.strip() else "") for x, y in zip(inp_parts, spans)))
            print("-------------------------------")
```

Example output:

Mr. and Mrs. Dursley, of number four, Privet Drive, **were** the last people you'd expect to be involved in anything strange or mysterious, because they just didn't hold with such nonsense.

Mr. Dursley was the director of a firm called Grunnings, which made drills. He was a big, **solid man with a short, brown beard, an enormous head full of brains and a round, fat face. He had a voice, too, that was so deep that it was nearly always accompanied by a slight tremor..**. Mrs. Dursley was thin and blonde and had nearly twice the usual amount of neck, which came in very useful as she spent so much of her time craning over garden fences, spying on the neighbours. The Dursleys had a small son called Dudley and in their opinion there was no finer boy anywhere.

The Dursleys had everything they wanted, but they also had a secret, and their greatest fear was that somebody would discover it. They didn't think they could bear it if anyone found out about the Potters. Mrs. Potter was Mrs. Dursley's sister, but they hadn't met for several years; in fact, Mrs. Dursley pretended she didn't have a sister, because her sister and her good-for-nothing husband were as unDursleyish as it was possible to be. The Dursleys shuddered to think what the neighbours would say if the Potters arrived in the street. The Dursleys knew that the Potters had a small son, too, but they had never even seen him. This boy was another good reason for keeping the Potters away; they didn't want Dudley mixing with a child like that.

Text in bold is the model's completion.
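To switch modes as described above, the corresponding token can be prepended to a prompt before tokenization. A minimal sketch: the helper name and the single-space separator between token and prompt are assumptions for illustration, while the denoiser-to-token mapping is the one given in this card.

```python
# Mode tokens per denoiser, as listed in this card
MODE_TOKENS = {"R": "[NLU]", "X": "[NLG]", "S": "[S2S]"}


def with_mode_token(text: str, denoiser: str) -> str:
    """Prefix `text` with the mode token for the given denoiser ("R", "X" or "S").

    Hypothetical helper; the single space after the token is an assumption.
    """
    return f"{MODE_TOKENS[denoiser]} {text.strip()}"


print(with_mode_token("Mr. Dursley was a big, <extra_id_0>.", "X"))
# [NLG] Mr. Dursley was a big, <extra_id_0>.
```

The resulting string can then be passed to `tokenizer(...)` in place of the raw prompt.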
