Commit

•

8359bb1

1

Parent(s):

824dd1d

Upload 43 files

Browse files- Figures/Exp.png +0 -0

- Figures/SelectivePromptTuning-SPT.png +0 -0

- README.md +112 -0

- config/all_values.yml +39 -0

- config/convai2/llama2-7b-selective-linear-both-prompt-causal-convai2-adding-target-noise.yml +34 -0

- config/convai2/llama2-7b-selective-linear-both-prompt-causal-convai2.yml +33 -0

- config/convai2/opt-1.3b-selective-linear-both-prompt-causal-convai2.yml +33 -0

- config/convai2/opt-125m-selective-linear-both-prompt-causal-convai2.yml +33 -0

- config/convai2/opt-2.7b-selective-linear-both-prompt-causal-convai2.yml +33 -0

- config/default.yml +16 -0

- dataset/__pycache__/dataset.cpython-310.pyc +0 -0

- dataset/__pycache__/dataset_helper.cpython-310.pyc +0 -0

- dataset/dataset.py +189 -0

- dataset/dataset_helper.py +117 -0

- ds_config.json +28 -0

- env.yml +257 -0

- evaluate_runs_results.py +150 -0

- evaluation.py +92 -0

- interactive_test.py +205 -0

- models/__pycache__/llm_chat.cpython-310.pyc +0 -0

- models/__pycache__/selective_llm_chat.cpython-310.pyc +0 -0

- models/llm_chat.py +227 -0

- models/selective_llm_chat.py +390 -0

- test.py +204 -0

- train.py +129 -0

- trainer/__init__.py +1 -0

- trainer/__pycache__/__init__.cpython-310.pyc +0 -0

- trainer/__pycache__/peft_trainer.cpython-310.pyc +0 -0

- trainer/peft_trainer.py +187 -0

- utils/__pycache__/config.cpython-310.pyc +0 -0

- utils/__pycache__/configure_optimizers.cpython-310.pyc +0 -0

- utils/__pycache__/dist_helper.cpython-310.pyc +0 -0

- utils/__pycache__/format_inputs.cpython-310.pyc +0 -0

- utils/__pycache__/model_helpers.cpython-310.pyc +0 -0

- utils/__pycache__/parser_helper.cpython-310.pyc +0 -0

- utils/__pycache__/seed_everything.cpython-310.pyc +0 -0

- utils/config.py +50 -0

- utils/configure_optimizers.py +6 -0

- utils/dist_helper.py +5 -0

- utils/format_inputs.py +173 -0

- utils/model_helpers.py +31 -0

- utils/parser_helper.py +17 -0

- utils/seed_everything.py +44 -0

Figures/Exp.png

ADDED

|

Figures/SelectivePromptTuning-SPT.png

ADDED

|

README.md

ADDED

|

@@ -0,0 +1,112 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# SPT: Selective Prompting Tuning for Personalized Conversations with LLMs

|

| 2 |

+

Repo for `Selective Prompting Tuning for Personalized Conversations with LLMs`, the paper is available at: [Selective Prompting Tuning for Personalized Conversations with LLMs](https://openreview.net/pdf?id=Royo7My_EJ)

|

| 3 |

+

## Introduction

|

| 4 |

+

|

| 5 |

+

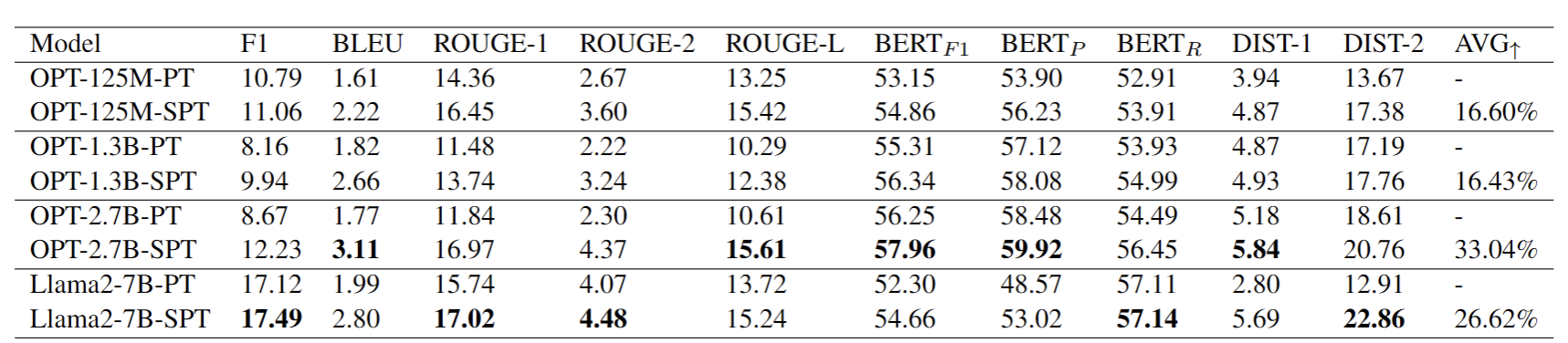

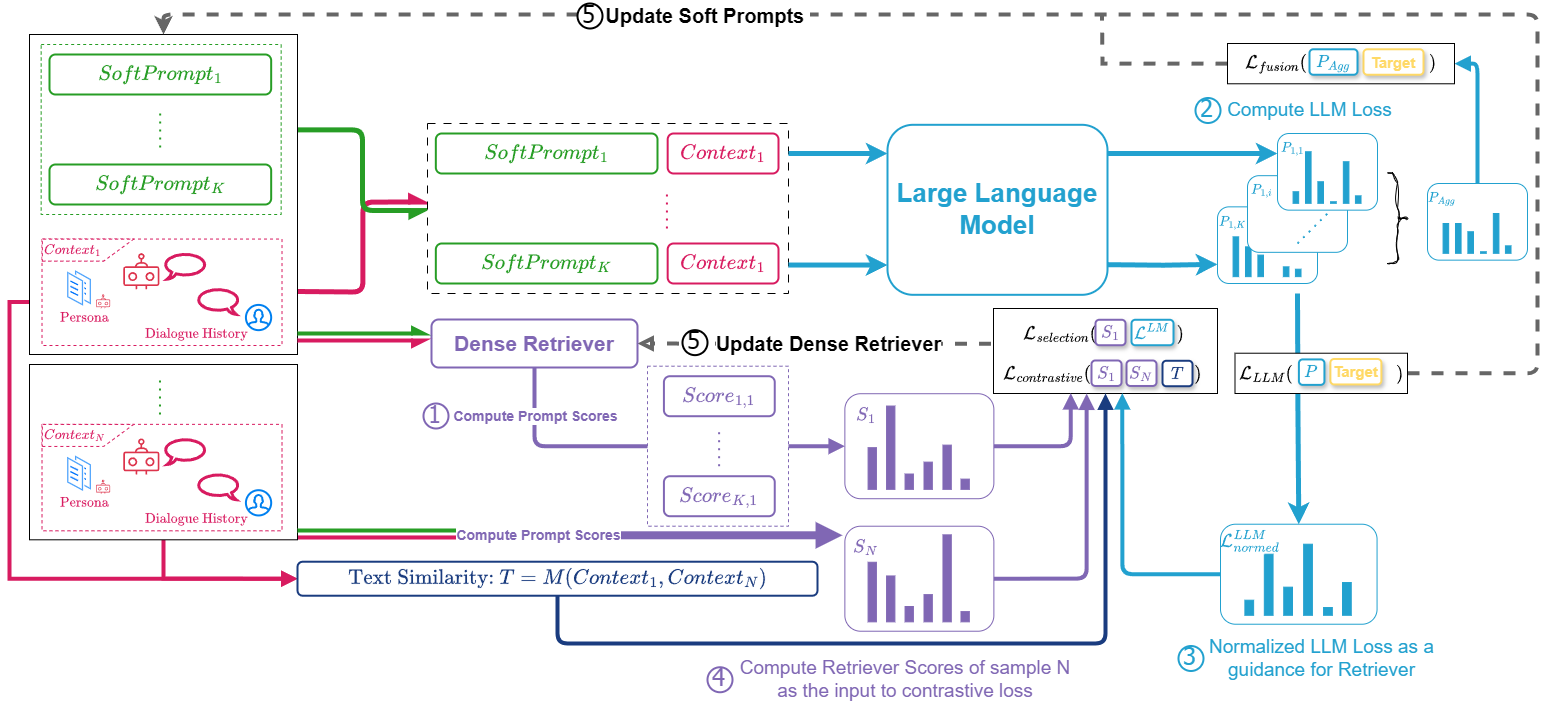

In conversational AI, personalizing dialogues with persona profiles and contextual understanding is essential. Despite large language models' (LLMs) improved response coherence, effective persona integration remains a challenge. In this work, we first study two common approaches for personalizing LLMs: textual prompting and direct fine-tuning. We observed that textual prompting often struggles to yield responses that are similar to the ground truths in datasets, while direct fine-tuning tends to produce repetitive or overly generic replies. To alleviate those issues, we propose **S**elective **P**rompt **T**uning (SPT), which softly prompts LLMs for personalized conversations in a selective way. Concretely, SPT initializes a set of soft prompts and uses a trainable dense retriever to adaptively select suitable soft prompts for LLMs according to different input contexts, where the prompt retriever is dynamically updated through feedback from the LLMs. Additionally, we propose context-prompt contrastive learning and prompt fusion learning to encourage the SPT to enhance the diversity of personalized conversations. Experiments on the CONVAI2 dataset demonstrate that SPT significantly enhances response diversity by up to 90\%, along with improvements in other critical performance indicators. Those results highlight the efficacy of SPT in fostering engaging and personalized dialogue generation. The SPT model code is publicly available for further exploration.

|

| 6 |

+

|

| 7 |

+

## Architecture

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

## Experimental Results

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

## Repo Details

|

| 14 |

+

### Basic Project Structure

|

| 15 |

+

- `config`: contains all the configuration yml file from OPT-125M to Llama2-13B

|

| 16 |

+

- `data_file`: contains CONVAI2 dataset files, dataset can be donwloaded in this [Huggingface Repo](https://huggingface.co/hqsiswiliam/SPT)

|

| 17 |

+

- `dataset`: contains dataloader class and the pre-process methods

|

| 18 |

+

- `models`: contains SPT model classes

|

| 19 |

+

- `trainer`: contains trainer classes, responsible for model training & updating

|

| 20 |

+

- `utils`: provides helper classes and functions

|

| 21 |

+

- `test.py`: the entrance script for model decoding

|

| 22 |

+

- `train.py`: the entrance script for model training

|

| 23 |

+

### Checkpoint downloading

|

| 24 |

+

- The trained checkpoint is located in `public_ckpt` from [Huggingface Repo](https://huggingface.co/hqsiswiliam/SPT)

|

| 25 |

+

|

| 26 |

+

### Environment Initialization

|

| 27 |

+

#### Modifying `env.yml`

|

| 28 |

+

Since Deepspeed requires the CuDNN and CUDA, and we integrated Nvidia related tools in Anancoda, so it is essential to modify `env.yml`'s instance variable in the last two lines as:

|

| 29 |

+

```yml

|

| 30 |

+

variables:

|

| 31 |

+

LD_LIBRARY_PATH: <CONDA_PATH>/envs/SPT/lib

|

| 32 |

+

LIBRARY_PATH: <CONDA_PATH>/envs/SPT/lib

|

| 33 |

+

```

|

| 34 |

+

Please replace `<CONDA_PATH>` to your own actual conda installation path before importing the `env.yml` to your environment.

|

| 35 |

+

#### Environment Creation

|

| 36 |

+

The SPT's environment can be built using Anaconda (which we recommend), we provide the env.yml for environment creation:

|

| 37 |

+

```bash

|

| 38 |

+

conda env create -f env.yml

|

| 39 |

+

```

|

| 40 |

+

|

| 41 |

+

```bash

|

| 42 |

+

conda activate SPT

|

| 43 |

+

```

|

| 44 |

+

## Model Training

|

| 45 |

+

Using following command to start training:

|

| 46 |

+

```bash

|

| 47 |

+

deepspeed --num_nodes=1 train.py \

|

| 48 |

+

--config=config/convai2/opt-125m-selective-linear-both-prompt-causal-convai2.yml \

|

| 49 |

+

--batch=2 \

|

| 50 |

+

--lr=0.0001 \

|

| 51 |

+

--epoch=1 \

|

| 52 |

+

--save_model=yes \

|

| 53 |

+

--num_workers=0 \

|

| 54 |

+

--training_ratio=1.0 \

|

| 55 |

+

--log_dir=runs_ds_dev \

|

| 56 |

+

--deepspeed \

|

| 57 |

+

--deepspeed_config ds_config.json

|

| 58 |

+

```

|

| 59 |

+

You can adjust `--num_nodes` if you have multiple GPUs in one node

|

| 60 |

+

### Main Arguments

|

| 61 |

+

- `config`: the training configuration file

|

| 62 |

+

- `batch`: the batch size per GPU

|

| 63 |

+

- `lr`: learning rate

|

| 64 |

+

- `epoch`: epoch number

|

| 65 |

+

- `save_model`: whether to save model

|

| 66 |

+

- `training_ratio`: the percentage of data used for training, 1.0 means 100%

|

| 67 |

+

- `log_dir`: the log and model save directory

|

| 68 |

+

- `deepspeed & --deepspeed_config`: the necessary arguments for initialize deepspeed

|

| 69 |

+

- `selective_loss_weight`: weight for selection loss

|

| 70 |

+

- `contrastive_weight`: weight for contrastive loss

|

| 71 |

+

## Model Inference

|

| 72 |

+

Model inference can be easily invoked by using the following command:

|

| 73 |

+

```bash

|

| 74 |

+

deepspeed test.py \

|

| 75 |

+

--model_path=public_ckpt/OPT-125M-SPT \

|

| 76 |

+

--batch_size=16 \

|

| 77 |

+

--skip_exists=no \

|

| 78 |

+

--deepspeed \

|

| 79 |

+

--deepspeed_config ds_config.json

|

| 80 |

+

```

|

| 81 |

+

### Main Arguments

|

| 82 |

+

- `model_path`: the path to the checkpoint, containing the `ds_ckpt` folder

|

| 83 |

+

- `skip_exists`: whether to skip decoding if `evaluation_result.txt` exists

|

| 84 |

+

|

| 85 |

+

## Computing Metrics for Generation Results

|

| 86 |

+

To compute the metric for the evaluation results, simply run:

|

| 87 |

+

|

| 88 |

+

`python evaluate_runs_results.py`

|

| 89 |

+

|

| 90 |

+

The input path can be changed in the script via:

|

| 91 |

+

```python

|

| 92 |

+

_main_path = 'public_ckpt'

|

| 93 |

+

```

|

| 94 |

+

|

| 95 |

+

## Interactive Testing

|

| 96 |

+

Also, we support interactive testing via:

|

| 97 |

+

```bash

|

| 98 |

+

deepspeed interactive_test.py \

|

| 99 |

+

--model_path=public_ckpt/Llama2-7B-SPT \

|

| 100 |

+

--batch_size=1 \

|

| 101 |

+

--deepspeed \

|

| 102 |

+

--deepspeed_config ds_config.json

|

| 103 |

+

```

|

| 104 |

+

So an interactive interface will be invoked as:

|

| 105 |

+

|

| 106 |

+

Some shortcut keys:

|

| 107 |

+

- `exit`: exiting the interactive shell

|

| 108 |

+

- `clear`: clear the current dialog history

|

| 109 |

+

- `r`: reload SPT's persona

|

| 110 |

+

|

| 111 |

+

## Citation

|

| 112 |

+

Will be available soon.

|

config/all_values.yml

ADDED

|

@@ -0,0 +1,39 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

model_type: 'selective_pt'

|

| 3 |

+

model_name: "facebook/opt-125m"

|

| 4 |

+

load_bit: 32

|

| 5 |

+

peft_type: "prompt_tuning"

|

| 6 |

+

K: 4

|

| 7 |

+

peft_config:

|

| 8 |

+

num_virtual_tokens: 8

|

| 9 |

+

normalizer: linear

|

| 10 |

+

normalizer_on: ['prompt', 'lm']

|

| 11 |

+

retriever:

|

| 12 |

+

retriever_on: ['extra', 'lm']

|

| 13 |

+

retriever_type: transformer_encoder

|

| 14 |

+

n_head: 4

|

| 15 |

+

num_layers: 2

|

| 16 |

+

|

| 17 |

+

training:

|

| 18 |

+

learning_rate: 1e-5

|

| 19 |

+

batch_size: 32

|

| 20 |

+

num_epochs: 1

|

| 21 |

+

mode: causal

|

| 22 |

+

only_longest: True

|

| 23 |

+

task_type: generate_response

|

| 24 |

+

log_dir: runs_prompt_selective_linear

|

| 25 |

+

contrastive: true

|

| 26 |

+

ensemble: true

|

| 27 |

+

selective_loss_weight: 0.4

|

| 28 |

+

contrastive_metric: bleu

|

| 29 |

+

contrastive_threshold: 20.0

|

| 30 |

+

contrastive_weight: 0.4

|

| 31 |

+

freeze_persona: yes

|

| 32 |

+

freeze_context: yes

|

| 33 |

+

|

| 34 |

+

|

| 35 |

+

dataset:

|

| 36 |

+

train: data_file/ConvAI2/train_self_original_no_cands.txt

|

| 37 |

+

valid: data_file/ConvAI2/valid_self_original_no_cands.txt

|

| 38 |

+

max_context_turns: -1

|

| 39 |

+

max_token_length: 512

|

config/convai2/llama2-7b-selective-linear-both-prompt-causal-convai2-adding-target-noise.yml

ADDED

|

@@ -0,0 +1,34 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

model_type: 'selective_pt'

|

| 3 |

+

model_name: "Llama-2-7b-chat-hf"

|

| 4 |

+

load_bit: 16

|

| 5 |

+

peft_type: "prompt_tuning"

|

| 6 |

+

K: 4

|

| 7 |

+

peft_config:

|

| 8 |

+

num_virtual_tokens: 1

|

| 9 |

+

normalizer: linear

|

| 10 |

+

normalizer_on: ['prompt', 'lm']

|

| 11 |

+

|

| 12 |

+

training:

|

| 13 |

+

learning_rate: 1e-5

|

| 14 |

+

batch_size: 32

|

| 15 |

+

num_epochs: 1

|

| 16 |

+

mode: causal

|

| 17 |

+

adding_noise: 0.1

|

| 18 |

+

only_longest: False

|

| 19 |

+

task_type: generate_response

|

| 20 |

+

log_dir: runs_prompt_convai2_selective_linear

|

| 21 |

+

contrastive: true

|

| 22 |

+

ensemble: true

|

| 23 |

+

selective_loss_weight: 1.0

|

| 24 |

+

contrastive_metric: bleu

|

| 25 |

+

contrastive_threshold: 20.0

|

| 26 |

+

contrastive_weight: 1.0

|

| 27 |

+

freeze_persona: yes

|

| 28 |

+

freeze_context: yes

|

| 29 |

+

|

| 30 |

+

dataset:

|

| 31 |

+

train: data_file/ConvAI2/train_self_original_no_cands.txt

|

| 32 |

+

valid: data_file/ConvAI2/valid_self_original_no_cands.txt

|

| 33 |

+

max_context_turns: -1

|

| 34 |

+

max_token_length: 512

|

config/convai2/llama2-7b-selective-linear-both-prompt-causal-convai2.yml

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

model_type: 'selective_pt'

|

| 3 |

+

model_name: "Llama-2-7b-chat-hf"

|

| 4 |

+

load_bit: 16

|

| 5 |

+

peft_type: "prompt_tuning"

|

| 6 |

+

K: 4

|

| 7 |

+

peft_config:

|

| 8 |

+

num_virtual_tokens: 1

|

| 9 |

+

normalizer: linear

|

| 10 |

+

normalizer_on: ['prompt', 'lm']

|

| 11 |

+

|

| 12 |

+

training:

|

| 13 |

+

learning_rate: 1e-5

|

| 14 |

+

batch_size: 32

|

| 15 |

+

num_epochs: 1

|

| 16 |

+

mode: causal

|

| 17 |

+

only_longest: False

|

| 18 |

+

task_type: generate_response

|

| 19 |

+

log_dir: runs_prompt_convai2_selective_linear

|

| 20 |

+

contrastive: true

|

| 21 |

+

ensemble: true

|

| 22 |

+

selective_loss_weight: 1.0

|

| 23 |

+

contrastive_metric: bleu

|

| 24 |

+

contrastive_threshold: 20.0

|

| 25 |

+

contrastive_weight: 1.0

|

| 26 |

+

freeze_persona: yes

|

| 27 |

+

freeze_context: yes

|

| 28 |

+

|

| 29 |

+

dataset:

|

| 30 |

+

train: data_file/ConvAI2/train_self_original_no_cands.txt

|

| 31 |

+

valid: data_file/ConvAI2/valid_self_original_no_cands.txt

|

| 32 |

+

max_context_turns: -1

|

| 33 |

+

max_token_length: 512

|

config/convai2/opt-1.3b-selective-linear-both-prompt-causal-convai2.yml

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

model_type: 'selective_pt'

|

| 3 |

+

model_name: "facebook/opt-1.3b"

|

| 4 |

+

load_bit: 32

|

| 5 |

+

peft_type: "prompt_tuning"

|

| 6 |

+

K: 4

|

| 7 |

+

peft_config:

|

| 8 |

+

num_virtual_tokens: 8

|

| 9 |

+

normalizer: linear

|

| 10 |

+

normalizer_on: ['prompt', 'lm']

|

| 11 |

+

|

| 12 |

+

training:

|

| 13 |

+

learning_rate: 1e-5

|

| 14 |

+

batch_size: 32

|

| 15 |

+

num_epochs: 1

|

| 16 |

+

mode: causal

|

| 17 |

+

only_longest: False

|

| 18 |

+

task_type: generate_response

|

| 19 |

+

log_dir: runs_prompt_convai2_selective_linear

|

| 20 |

+

contrastive: true

|

| 21 |

+

ensemble: true

|

| 22 |

+

selective_loss_weight: 0.4

|

| 23 |

+

contrastive_metric: bleu

|

| 24 |

+

contrastive_threshold: 20.0

|

| 25 |

+

contrastive_weight: 0.4

|

| 26 |

+

freeze_persona: yes

|

| 27 |

+

freeze_context: yes

|

| 28 |

+

|

| 29 |

+

dataset:

|

| 30 |

+

train: data_file/ConvAI2/train_self_original_no_cands.txt

|

| 31 |

+

valid: data_file/ConvAI2/valid_self_original_no_cands.txt

|

| 32 |

+

max_context_turns: -1

|

| 33 |

+

max_token_length: 512

|

config/convai2/opt-125m-selective-linear-both-prompt-causal-convai2.yml

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

model_type: 'selective_pt'

|

| 3 |

+

model_name: "facebook/opt-125m"

|

| 4 |

+

load_bit: 32

|

| 5 |

+

peft_type: "prompt_tuning"

|

| 6 |

+

K: 4

|

| 7 |

+

peft_config:

|

| 8 |

+

num_virtual_tokens: 8

|

| 9 |

+

normalizer: linear

|

| 10 |

+

normalizer_on: ['prompt', 'lm']

|

| 11 |

+

|

| 12 |

+

training:

|

| 13 |

+

learning_rate: 1e-5

|

| 14 |

+

batch_size: 32

|

| 15 |

+

num_epochs: 1

|

| 16 |

+

mode: causal

|

| 17 |

+

only_longest: False

|

| 18 |

+

task_type: generate_response

|

| 19 |

+

log_dir: runs_prompt_convai2_selective_linear

|

| 20 |

+

contrastive: true

|

| 21 |

+

ensemble: true

|

| 22 |

+

selective_loss_weight: 0.4

|

| 23 |

+

contrastive_metric: bleu

|

| 24 |

+

contrastive_threshold: 20.0

|

| 25 |

+

contrastive_weight: 0.4

|

| 26 |

+

freeze_persona: yes

|

| 27 |

+

freeze_context: yes

|

| 28 |

+

|

| 29 |

+

dataset:

|

| 30 |

+

train: data_file/ConvAI2/train_self_original_no_cands.txt

|

| 31 |

+

valid: data_file/ConvAI2/valid_self_original_no_cands.txt

|

| 32 |

+

max_context_turns: -1

|

| 33 |

+

max_token_length: 512

|

config/convai2/opt-2.7b-selective-linear-both-prompt-causal-convai2.yml

ADDED

|

@@ -0,0 +1,33 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

model:

|

| 2 |

+

model_type: 'selective_pt'

|

| 3 |

+

model_name: "facebook/opt-2.7b"

|

| 4 |

+

load_bit: 16

|

| 5 |

+

peft_type: "prompt_tuning"

|

| 6 |

+

K: 4

|

| 7 |

+

peft_config:

|

| 8 |

+

num_virtual_tokens: 8

|

| 9 |

+

normalizer: linear

|

| 10 |

+

normalizer_on: ['prompt', 'lm']

|

| 11 |

+

|

| 12 |

+

training:

|

| 13 |

+

learning_rate: 1e-5

|

| 14 |

+

batch_size: 32

|

| 15 |

+

num_epochs: 1

|

| 16 |

+

mode: causal

|

| 17 |

+

only_longest: False

|

| 18 |

+

task_type: generate_response

|

| 19 |

+

log_dir: runs_prompt_convai2_selective_linear

|

| 20 |

+

contrastive: true

|

| 21 |

+

ensemble: true

|

| 22 |

+

selective_loss_weight: 0.4

|

| 23 |

+

contrastive_metric: bleu

|

| 24 |

+

contrastive_threshold: 20.0

|

| 25 |

+

contrastive_weight: 0.4

|

| 26 |

+

freeze_persona: yes

|

| 27 |

+

freeze_context: yes

|

| 28 |

+

|

| 29 |

+

dataset:

|

| 30 |

+

train: data_file/ConvAI2/train_self_original_no_cands.txt

|

| 31 |

+

valid: data_file/ConvAI2/valid_self_original_no_cands.txt

|

| 32 |

+

max_context_turns: -1

|

| 33 |

+

max_token_length: 512

|

config/default.yml

ADDED

|

@@ -0,0 +1,16 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

dataset:

|

| 2 |

+

train: data_file/ConvAI2/train_self_original_no_cands.txt

|

| 3 |

+

valid: data_file/ConvAI2/valid_self_original_no_cands.txt

|

| 4 |

+

max_context_turns: -1

|

| 5 |

+

max_token_length: 512

|

| 6 |

+

|

| 7 |

+

model:

|

| 8 |

+

score_activation: 'softplus'

|

| 9 |

+

|

| 10 |

+

training:

|

| 11 |

+

mode: normal

|

| 12 |

+

only_longest: False

|

| 13 |

+

task_type: generate_response

|

| 14 |

+

ensemble: false

|

| 15 |

+

tau_gold: 1.0

|

| 16 |

+

tau_sim: 1.0

|

dataset/__pycache__/dataset.cpython-310.pyc

ADDED

|

Binary file (5.37 kB). View file

|

|

|

dataset/__pycache__/dataset_helper.cpython-310.pyc

ADDED

|

Binary file (3.46 kB). View file

|

|

|

dataset/dataset.py

ADDED

|

@@ -0,0 +1,189 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch

|

| 2 |

+

from pytorch_lightning import LightningDataModule

|

| 3 |

+

from torch.utils.data import DataLoader

|

| 4 |

+

|

| 5 |

+

from dataset.dataset_helper import read_personachat_split

|

| 6 |

+

from utils.format_inputs import TASK_TYPE

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

class PersonaChatDataset(torch.utils.data.Dataset):

|

| 10 |

+

# longest first for batch finder

|

| 11 |

+

def __init__(self, data_path, max_context_turns=-1,

|

| 12 |

+

add_role_indicator=True, only_longest=False, training_ratio=1.0,

|

| 13 |

+

task_type=TASK_TYPE.GENERATE_RESPONSE):

|

| 14 |

+

self.path = data_path

|

| 15 |

+

self.add_role_indicator = add_role_indicator

|

| 16 |

+

self.max_context_turns = max_context_turns

|

| 17 |

+

self.turns_data = read_personachat_split(data_path, only_longest=only_longest)

|

| 18 |

+

self.only_longest = only_longest

|

| 19 |

+

self.training_ratio = training_ratio

|

| 20 |

+

if training_ratio < 1.0:

|

| 21 |

+

self.turns_data = self.turns_data[:int(len(self.turns_data) * training_ratio)]

|

| 22 |

+

self.task_type = task_type

|

| 23 |

+

# # For debug only

|

| 24 |

+

# os.makedirs("data_logs", exist_ok=True)

|

| 25 |

+

# random_num = random.randint(0, 100000)

|

| 26 |

+

# self.file = open(f"data_logs/{random_num}_{data_path.split(os.sep)[-1]}", 'w')

|

| 27 |

+

# # add id to turns_data

|

| 28 |

+

# self.turns_data = [{'id': idx, **turn} for idx, turn in enumerate(self.turns_data)]

|

| 29 |

+

# self.file.write(f"total_turns: {len(self.turns_data)}\n")

|

| 30 |

+

|

| 31 |

+

def sort_longest_first(self):

|

| 32 |

+

self.turns_data = sorted(self.turns_data, key=lambda x: len(

|

| 33 |

+

(' '.join(x['persona']) + ' '.join(x['context']) + x['response']).split(' ')), reverse=True)

|

| 34 |

+

|

| 35 |

+

def __getitem__(self, idx):

|

| 36 |

+

# self.file.write(str(idx) + "\n")

|

| 37 |

+

# self.file.flush()

|

| 38 |

+

input_data = self.turns_data[idx]

|

| 39 |

+

persona_list = input_data['persona']

|

| 40 |

+

target = input_data['response']

|

| 41 |

+

context_input = input_data['context']

|

| 42 |

+

if self.add_role_indicator:

|

| 43 |

+

roled_context_input = [['Q: ', 'R: '][c_idx % 2] + context for c_idx, context in enumerate(context_input)]

|

| 44 |

+

context_input = roled_context_input

|

| 45 |

+

if self.max_context_turns != -1:

|

| 46 |

+

truncated_context = context_input[-(self.max_context_turns * 2 - 1):]

|

| 47 |

+

context_input = truncated_context

|

| 48 |

+

if self.only_longest:

|

| 49 |

+

context_input = context_input[:-1]

|

| 50 |

+

return {

|

| 51 |

+

'context_input': context_input,

|

| 52 |

+

'persona_list': persona_list,

|

| 53 |

+

'target': target

|

| 54 |

+

}

|

| 55 |

+

|

| 56 |

+

def __len__(self):

|

| 57 |

+

return len(self.turns_data)

|

| 58 |

+

|

| 59 |

+

|

| 60 |

+

# class HGPersonaChatDataset(PersonaChatDataset):

|

| 61 |

+

# def __init__(self, data_path, max_context_turns=-1,

|

| 62 |

+

# add_role_indicator=True, only_longest=False, tokenizer=None):

|

| 63 |

+

# super().__init__(data_path, max_context_turns, add_role_indicator, only_longest)

|

| 64 |

+

# self.tokenizer = tokenizer

|

| 65 |

+

#

|

| 66 |

+

# def __getitem__(self, idx):

|

| 67 |

+

# data = super().__getitem__(idx)

|

| 68 |

+

# input = "P: " + ' '.join(data['persona_list']) + " C: " + ' '.join(data['context_input']) + " R: " + data[

|

| 69 |

+

# 'target']

|

| 70 |

+

# tokenized = self.tokenizer(input)

|

| 71 |

+

# return {**data, **tokenized}

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

def collate_fn(sample_list):

|

| 75 |

+

dont_be_a_tensor = ['context_input', 'persona_list', 'target']

|

| 76 |

+

to_be_flattened = [*dont_be_a_tensor]

|

| 77 |

+

data = {}

|

| 78 |

+

for key in to_be_flattened:

|

| 79 |

+

if key not in sample_list[0].keys():

|

| 80 |

+

continue

|

| 81 |

+

if sample_list[0][key] is None:

|

| 82 |

+

continue

|

| 83 |

+

flatten_samples = [sample[key] for sample in sample_list]

|

| 84 |

+

if flatten_samples[-1].__class__ == str or key in dont_be_a_tensor:

|

| 85 |

+

data[key] = flatten_samples

|

| 86 |

+

else:

|

| 87 |

+

data[key] = torch.tensor(flatten_samples)

|

| 88 |

+

return data

|

| 89 |

+

|

| 90 |

+

|

| 91 |

+

def collate_fn_straight(sample_list):

|

| 92 |

+

sample_list = collate_fn(sample_list)

|

| 93 |

+

return sample_list

|

| 94 |

+

|

| 95 |

+

|

| 96 |

+

def collate_fn_straight_with_fn(fn):

|

| 97 |

+

def build_collate_fn(sample_list):

|

| 98 |

+

sample_list = collate_fn(sample_list)

|

| 99 |

+

sample_list_processed = fn(sample_list)

|

| 100 |

+

return {**sample_list, **sample_list_processed}

|

| 101 |

+

|

| 102 |

+

return build_collate_fn

|

| 103 |

+

|

| 104 |

+

|

| 105 |

+

def get_dataloader(dataset, batch_size, shuffle=False, num_workers=None, collate_fn=None, sampler=None):

|

| 106 |

+

if num_workers is None:

|

| 107 |

+

num_workers = batch_size // 4

|

| 108 |

+

# num_workers = min(num_workers, batch_size)

|

| 109 |

+

if collate_fn == None:

|

| 110 |

+

_collate_fn = collate_fn_straight

|

| 111 |

+

else:

|

| 112 |

+

_collate_fn = collate_fn_straight_with_fn(collate_fn)

|

| 113 |

+

return DataLoader(dataset, batch_size=batch_size,

|

| 114 |

+

collate_fn=_collate_fn,

|

| 115 |

+

shuffle=shuffle,

|

| 116 |

+

num_workers=num_workers,

|

| 117 |

+

sampler=sampler)

|

| 118 |

+

|

| 119 |

+

|

| 120 |

+

def get_lightening_dataloader(dataset, batch_size, shuffle=False, num_workers=None):

|

| 121 |

+

return LitDataModule(batch_size, dataset, shuffle, num_workers)

|

| 122 |

+

|

| 123 |

+

|

| 124 |

+

class LitDataModule(LightningDataModule):

|

| 125 |

+

def __init__(self, batch_size, dataset, shuffle, num_workers):

|

| 126 |

+

super().__init__()

|

| 127 |

+

self.save_hyperparameters(ignore=['dataset'])

|

| 128 |

+

# or

|

| 129 |

+

self.batch_size = batch_size

|

| 130 |

+

self.dataset = dataset

|

| 131 |

+

|

| 132 |

+

def train_dataloader(self):

|

| 133 |

+

return DataLoader(self.dataset, batch_size=self.batch_size,

|

| 134 |

+

collate_fn=collate_fn_straight,

|

| 135 |

+

shuffle=self.hparams.shuffle,

|

| 136 |

+

num_workers=self.hparams.num_workers)

|

| 137 |

+

|

| 138 |

+

if __name__ == '__main__':

|

| 139 |

+

import json

|

| 140 |

+

train_ds = PersonaChatDataset(data_path='data_file/ConvAI2/train_self_original_no_cands.txt',

|

| 141 |

+

)

|

| 142 |

+

from tqdm import tqdm

|

| 143 |

+

|

| 144 |

+

jsonfy_data = []

|

| 145 |

+

|

| 146 |

+

for data in tqdm(train_ds):

|

| 147 |

+

context_input = "\n".join(data['context_input'])

|

| 148 |

+

persona_input = '\n'.join(data['persona_list'])

|

| 149 |

+

jsonfy_data.append({

|

| 150 |

+

"instruction": f"""Given the dialog history between Q and R is:

|

| 151 |

+

{context_input}

|

| 152 |

+

|

| 153 |

+

Given the personality of the R as:

|

| 154 |

+

{persona_input}

|

| 155 |

+

|

| 156 |

+

Please response to Q according to both the dialog history and the R's personality.

|

| 157 |

+

Now, the R would say:""",

|

| 158 |

+

"input": "",

|

| 159 |

+

"output": data['target'],

|

| 160 |

+

"answer": "",

|

| 161 |

+

})

|

| 162 |

+

with open('data_file/train.json', 'w') as writer:

|

| 163 |

+

json.dump(jsonfy_data, writer)

|

| 164 |

+

jsonfy_data = []

|

| 165 |

+

del train_ds

|

| 166 |

+

|

| 167 |

+

train_ds = PersonaChatDataset(data_path='data_file/ConvAI2/valid_self_original_no_cands.txt',

|

| 168 |

+

)

|

| 169 |

+

|

| 170 |

+

for data in tqdm(train_ds):

|

| 171 |

+

context_input = "\n".join(data['context_input'])

|

| 172 |

+

persona_input = '\n'.join(data['persona_list'])

|

| 173 |

+

jsonfy_data.append({

|

| 174 |

+

"instruction": f"""Given the dialog history between Q and R is:

|

| 175 |

+

{context_input}

|

| 176 |

+

|

| 177 |

+

Given the personality of the R as:

|

| 178 |

+

{persona_input}

|

| 179 |

+

|

| 180 |

+

Please response to Q according to both the dialog history and the R's personality.

|

| 181 |

+

Now, the R would say:""",

|

| 182 |

+

"input": "",

|

| 183 |

+

"output": data['target'],

|

| 184 |

+

"answer": "",

|

| 185 |

+

})

|

| 186 |

+

with open('data_file/valid.json', 'w') as writer:

|

| 187 |

+

json.dump(jsonfy_data, writer)

|

| 188 |

+

with open('data_file/test.json', 'w') as writer:

|

| 189 |

+

json.dump(jsonfy_data, writer)

|

dataset/dataset_helper.py

ADDED

|

@@ -0,0 +1,117 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import re

|

| 2 |

+

|

| 3 |

+

from tqdm import tqdm

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

def read_personachat_split(split_dir, only_longest=False):

|

| 7 |

+

results = []

|

| 8 |

+

their_per_group = None

|

| 9 |

+

try:

|

| 10 |

+

file = open(split_dir, 'r')

|

| 11 |

+

lines = file.readlines()

|

| 12 |

+

persona = []

|

| 13 |

+

context = []

|

| 14 |

+

response = None

|

| 15 |

+

candidates = []

|

| 16 |

+

is_longest = False

|

| 17 |

+

for line in tqdm(lines[:], desc='loading {}'.format(split_dir)):

|

| 18 |

+

if line.startswith('1 your persona:'):

|

| 19 |

+

is_longest = True

|

| 20 |

+

if is_longest and only_longest:

|

| 21 |

+

if response is not None:

|

| 22 |

+

results.append({'persona': persona.copy(), 'context': context.copy(), 'response': response,

|

| 23 |

+

'candidates': candidates.copy()})

|

| 24 |

+

is_longest = False

|

| 25 |

+

persona = []

|

| 26 |

+

context = []

|

| 27 |

+

if 'persona:' in line:

|

| 28 |

+

persona.append(line.split(':')[1].strip())

|

| 29 |

+

if 'persona:' not in line:

|

| 30 |

+

context.append(re.sub(r"^\d+ ", "", line.split("\t")[0].strip()))

|

| 31 |

+

response = line.split("\t")[1].strip()

|

| 32 |

+

if len(line.split("\t\t"))==1:

|

| 33 |

+

candidates = []

|

| 34 |

+

else:

|

| 35 |

+

candidates = line.split("\t\t")[1].strip().split("|")

|

| 36 |

+

if not only_longest:

|

| 37 |

+

results.append({'persona': persona.copy(), 'context': context.copy(), 'response': response,

|

| 38 |

+

'candidates': candidates.copy()})

|

| 39 |

+

context.append(response)

|

| 40 |

+

except FileNotFoundError:

|

| 41 |

+

print(f"Sorry! The file {split_dir} can't be found.")

|

| 42 |

+

return results

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

def combine_persona_query_response(persona, query, response, candidates):

|

| 46 |

+

assert ((len(persona) == len(query)) and (len(query) == len(response))), \

|

| 47 |

+

'the length of persona, query, response must be equivalent'

|

| 48 |

+

data = {}

|

| 49 |

+

for index, psn in enumerate(persona):

|

| 50 |

+

split_persona = psn.strip().split("\t")

|

| 51 |

+

psn = psn.replace("\t", " ").strip()

|

| 52 |

+

if psn not in data.keys():

|

| 53 |

+

data[psn] = {'persona': psn, 'query': [], 'response': [], 'dialog': [], 'response_turns': 0,

|

| 54 |

+

'persona_list': split_persona, 'candidates': []}

|

| 55 |

+

data[psn]['query'].append(query[index])

|

| 56 |

+

data[psn]['response'].append(response[index])

|

| 57 |

+

data[psn]['dialog'].append(query[index])

|

| 58 |

+

data[psn]['dialog'].append(response[index])

|

| 59 |

+

data[psn]['candidates'].append(candidates[index])

|

| 60 |

+

data[psn]['response_turns'] += 1

|

| 61 |

+

return data

|

| 62 |

+

|

| 63 |

+

|

| 64 |

+

def preprocess_text(text):

|

| 65 |

+

punctuations = '.,?'

|

| 66 |

+

for punc in punctuations:

|

| 67 |

+

text = text.replace(punc, ' {} '.format(punc))

|

| 68 |

+

text = re.sub(' +', ' ', text).strip()

|

| 69 |

+

return text

|

| 70 |

+

|

| 71 |

+

|

| 72 |

+

def preprocess_texts(text_array):

|

| 73 |

+

return [preprocess_text(t) for t in text_array]

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

# "turns" means we need at least how many turns

|

| 77 |

+

# "max_context_turns" means how many history turns should be kept

|

| 78 |

+

def get_chat_by_turns(combined_data, turns=1,

|

| 79 |

+

sep_token='[SEP]', add_role_indicator=True,

|

| 80 |

+

add_persona_indicator=True, max_context_turns=-1):

|

| 81 |

+

assert turns > 0, 'turns must be large than 0'

|

| 82 |

+

all_persona = list(combined_data.keys())

|

| 83 |

+

filtered_persona = list(filter(lambda p: combined_data[p]['response_turns'] >= turns, all_persona))

|

| 84 |

+

data = []

|

| 85 |

+

|

| 86 |

+

for single_persona in filtered_persona:

|

| 87 |

+

single_persona_data = combined_data[single_persona]

|

| 88 |

+

persona_list = single_persona_data['persona_list']

|

| 89 |

+

context = []

|

| 90 |

+

for index, (query, response) in enumerate(

|

| 91 |

+

zip(single_persona_data['query'], single_persona_data['response'])

|

| 92 |

+

):

|

| 93 |

+

if max_context_turns != -1 and \

|

| 94 |

+

index + 1 < single_persona_data['response_turns'] - max_context_turns:

|

| 95 |

+

continue

|

| 96 |

+

if add_role_indicator:

|

| 97 |

+

query = "Q: {}".format(query)

|

| 98 |

+

if not index + 1 >= turns:

|

| 99 |

+

response = "R: {}".format(response)

|

| 100 |

+

context += [query, response]

|

| 101 |

+

if index + 1 >= turns:

|

| 102 |

+

break

|

| 103 |

+

|

| 104 |

+

response = context[-1]

|

| 105 |

+

context = context[:-1]

|

| 106 |

+

|

| 107 |

+

input_x_str = " {} ".format(sep_token).join(context)

|

| 108 |

+

input_x_str = re.sub(" +", " ", input_x_str)

|

| 109 |

+

if add_persona_indicator:

|

| 110 |

+

single_persona = "P: {}".format(single_persona)

|

| 111 |

+

data.append({'input': preprocess_texts(context),

|

| 112 |

+

'input_str': preprocess_text(input_x_str),

|

| 113 |

+

'target': preprocess_text(response),

|

| 114 |

+

'persona': preprocess_text(single_persona),

|

| 115 |

+

'persona_list': preprocess_texts(persona_list),

|

| 116 |

+

'candidates': preprocess_texts(single_persona_data['candidates'][-1])})

|

| 117 |

+

return data

|

ds_config.json

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"train_micro_batch_size_per_gpu ": 1,

|

| 3 |

+

"gradient_accumulation_steps": 1,

|

| 4 |

+

"optimizer": {

|

| 5 |

+

"type": "Adam",

|

| 6 |

+

"params": {

|

| 7 |

+

"lr": 0.00015

|

| 8 |

+

}

|

| 9 |

+

},

|

| 10 |

+

"bf16": {

|

| 11 |

+

"enabled": false

|

| 12 |

+

},

|

| 13 |

+

"float16": {

|

| 14 |

+

"enabled": false

|

| 15 |

+

},

|

| 16 |

+

|

| 17 |

+

"zero_optimization": {

|

| 18 |

+

"stage": 2,

|

| 19 |

+

"offload_param": {

|

| 20 |

+

"device": "cpu",

|

| 21 |

+

"pin_memory": true,

|

| 22 |

+

"buffer_count": 5,

|

| 23 |

+

"buffer_size": 1e8,

|

| 24 |

+

"max_in_cpu": 1e9

|

| 25 |

+

}

|

| 26 |

+

}

|

| 27 |

+

|

| 28 |

+

}

|

env.yml

ADDED

|

@@ -0,0 +1,257 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: SPT

|

| 2 |

+

channels:

|

| 3 |

+

- pytorch

|

| 4 |

+

- nvidia

|

| 5 |

+

- nvidia/label/cuda-11.8.0

|

| 6 |

+

- anaconda

|

| 7 |

+

- defaults

|

| 8 |

+

dependencies:

|

| 9 |

+

- _libgcc_mutex=0.1=main

|

| 10 |

+

- _openmp_mutex=5.1=1_gnu

|

| 11 |

+

- blas=1.0=mkl

|

| 12 |

+

- brotlipy=0.7.0=py310h7f8727e_1002

|

| 13 |

+

- bzip2=1.0.8=h7b6447c_0

|

| 14 |

+

- ca-certificates=2023.08.22=h06a4308_0

|

| 15 |

+

- certifi=2023.11.17=py310h06a4308_0

|

| 16 |

+

- cffi=1.15.1=py310h5eee18b_3

|

| 17 |

+

- charset-normalizer=2.0.4=pyhd3eb1b0_0

|

| 18 |

+

- cryptography=39.0.1=py310h9ce1e76_2

|

| 19 |

+

- cuda-cccl=11.8.89=0

|

| 20 |

+

- cuda-compiler=11.8.0=0

|

| 21 |

+

- cuda-cudart=11.8.89=0

|

| 22 |

+

- cuda-cudart-dev=11.8.89=0

|

| 23 |

+

- cuda-cuobjdump=11.8.86=0

|

| 24 |

+

- cuda-cupti=11.8.87=0

|

| 25 |

+

- cuda-cuxxfilt=11.8.86=0

|

| 26 |

+

- cuda-libraries=11.8.0=0

|

| 27 |

+

- cuda-nvcc=11.8.89=0

|

| 28 |

+

- cuda-nvprune=11.8.86=0

|

| 29 |

+

- cuda-nvrtc=11.8.89=0

|

| 30 |

+

- cuda-nvtx=11.8.86=0

|

| 31 |

+

- cuda-runtime=11.8.0=0

|

| 32 |

+

- cudatoolkit=11.8.0=h6a678d5_0

|

| 33 |

+

- ffmpeg=4.3=hf484d3e_0

|

| 34 |

+

- filelock=3.9.0=py310h06a4308_0

|

| 35 |

+

- freetype=2.12.1=h4a9f257_0

|

| 36 |

+

- giflib=5.2.1=h5eee18b_3

|

| 37 |

+

- gmp=6.2.1=h295c915_3

|

| 38 |

+

- gmpy2=2.1.2=py310heeb90bb_0

|

| 39 |

+

- gnutls=3.6.15=he1e5248_0

|

| 40 |

+

- idna=3.4=py310h06a4308_0

|

| 41 |

+

- intel-openmp=2023.1.0=hdb19cb5_46305

|

| 42 |

+

- jinja2=3.1.2=py310h06a4308_0

|

| 43 |

+

- jpeg=9e=h5eee18b_1

|

| 44 |

+

- lame=3.100=h7b6447c_0

|

| 45 |

+

- lcms2=2.12=h3be6417_0

|

| 46 |

+

- ld_impl_linux-64=2.38=h1181459_1

|

| 47 |

+

- lerc=3.0=h295c915_0

|

| 48 |

+

- libcublas=11.11.3.6=0

|

| 49 |

+

- libcublas-dev=11.11.3.6=0

|

| 50 |

+

- libcufft=10.9.0.58=0

|

| 51 |

+

- libcufile=1.6.1.9=0

|

| 52 |

+

- libcurand=10.3.2.106=0

|

| 53 |

+

- libcusolver=11.4.1.48=0

|

| 54 |

+

- libcusolver-dev=11.4.1.48=0

|

| 55 |

+

- libcusparse=11.7.5.86=0

|

| 56 |

+

- libcusparse-dev=11.7.5.86=0

|

| 57 |

+

- libdeflate=1.17=h5eee18b_0

|

| 58 |

+

- libffi=3.4.4=h6a678d5_0

|

| 59 |

+

- libgcc-ng=11.2.0=h1234567_1

|

| 60 |

+

- libgomp=11.2.0=h1234567_1

|

| 61 |

+

- libiconv=1.16=h7f8727e_2

|

| 62 |

+

- libidn2=2.3.4=h5eee18b_0

|

| 63 |

+

- libnpp=11.8.0.86=0

|

| 64 |

+

- libnvjpeg=11.9.0.86=0

|

| 65 |

+

- libpng=1.6.39=h5eee18b_0

|

| 66 |

+

- libstdcxx-ng=11.2.0=h1234567_1

|

| 67 |

+

- libtasn1=4.19.0=h5eee18b_0

|

| 68 |

+

- libtiff=4.5.0=h6a678d5_2

|

| 69 |

+

- libunistring=0.9.10=h27cfd23_0

|

| 70 |

+

- libuuid=1.41.5=h5eee18b_0

|

| 71 |

+

- libwebp=1.2.4=h11a3e52_1

|

| 72 |

+

- libwebp-base=1.2.4=h5eee18b_1

|

| 73 |

+

- lz4-c=1.9.4=h6a678d5_0

|

| 74 |

+

- markupsafe=2.1.1=py310h7f8727e_0

|

| 75 |

+

- mkl=2023.1.0=h6d00ec8_46342

|

| 76 |

+

- mkl-service=2.4.0=py310h5eee18b_1

|

| 77 |

+

- mkl_fft=1.3.6=py310h1128e8f_1

|

| 78 |

+

- mkl_random=1.2.2=py310h1128e8f_1

|

| 79 |

+

- mpc=1.1.0=h10f8cd9_1

|

| 80 |

+

- mpfr=4.0.2=hb69a4c5_1

|

| 81 |

+

- ncurses=6.4=h6a678d5_0

|

| 82 |

+

- nettle=3.7.3=hbbd107a_1

|

| 83 |

+

- networkx=2.8.4=py310h06a4308_1

|

| 84 |

+

- numpy=1.25.0=py310h5f9d8c6_0

|

| 85 |

+

- numpy-base=1.25.0=py310hb5e798b_0

|

| 86 |

+

- openh264=2.1.1=h4ff587b_0

|

| 87 |

+

- openssl=3.0.12=h7f8727e_0

|

| 88 |

+

- pillow=9.4.0=py310h6a678d5_0

|

| 89 |

+

- pip=23.1.2=py310h06a4308_0

|

| 90 |

+

- pycparser=2.21=pyhd3eb1b0_0

|

| 91 |

+

- pyopenssl=23.0.0=py310h06a4308_0

|

| 92 |

+

- pysocks=1.7.1=py310h06a4308_0

|

| 93 |

+

- python=3.10.11=h955ad1f_3

|

| 94 |

+

- pytorch-cuda=11.8=h7e8668a_5

|

| 95 |

+

- pytorch-mutex=1.0=cuda

|

| 96 |

+

- readline=8.2=h5eee18b_0

|

| 97 |

+

- requests=2.29.0=py310h06a4308_0

|

| 98 |

+

- setuptools=67.8.0=py310h06a4308_0

|

| 99 |