---
license: mit
pipeline_tag: image-text-to-text
---

# InternVL2-8B

[\[📂 GitHub\]](https://github.com/OpenGVLab/InternVL) [\[🆕 Blog\]](https://internvl.github.io/blog/) [\[📜 InternVL 1.0 Paper\]](https://arxiv.org/abs/2312.14238) [\[📜 InternVL 1.5 Report\]](https://arxiv.org/abs/2404.16821)

[\[🗨️ Chat Demo\]](https://internvl.opengvlab.com/) [\[🤗 HF Demo\]](https://huggingface.co/spaces/OpenGVLab/InternVL) [\[🚀 Quick Start\]](#quick-start) [\[📖 Chinese Write-up\]](https://zhuanlan.zhihu.com/p/706547971) \[🌟 [ModelScope Community](https://modelscope.cn/organization/OpenGVLab) | [Tutorial](https://mp.weixin.qq.com/s/OUaVLkxlk1zhFb1cvMCFjg) \]

[Switch to the Chinese version](#简介)

## Introduction

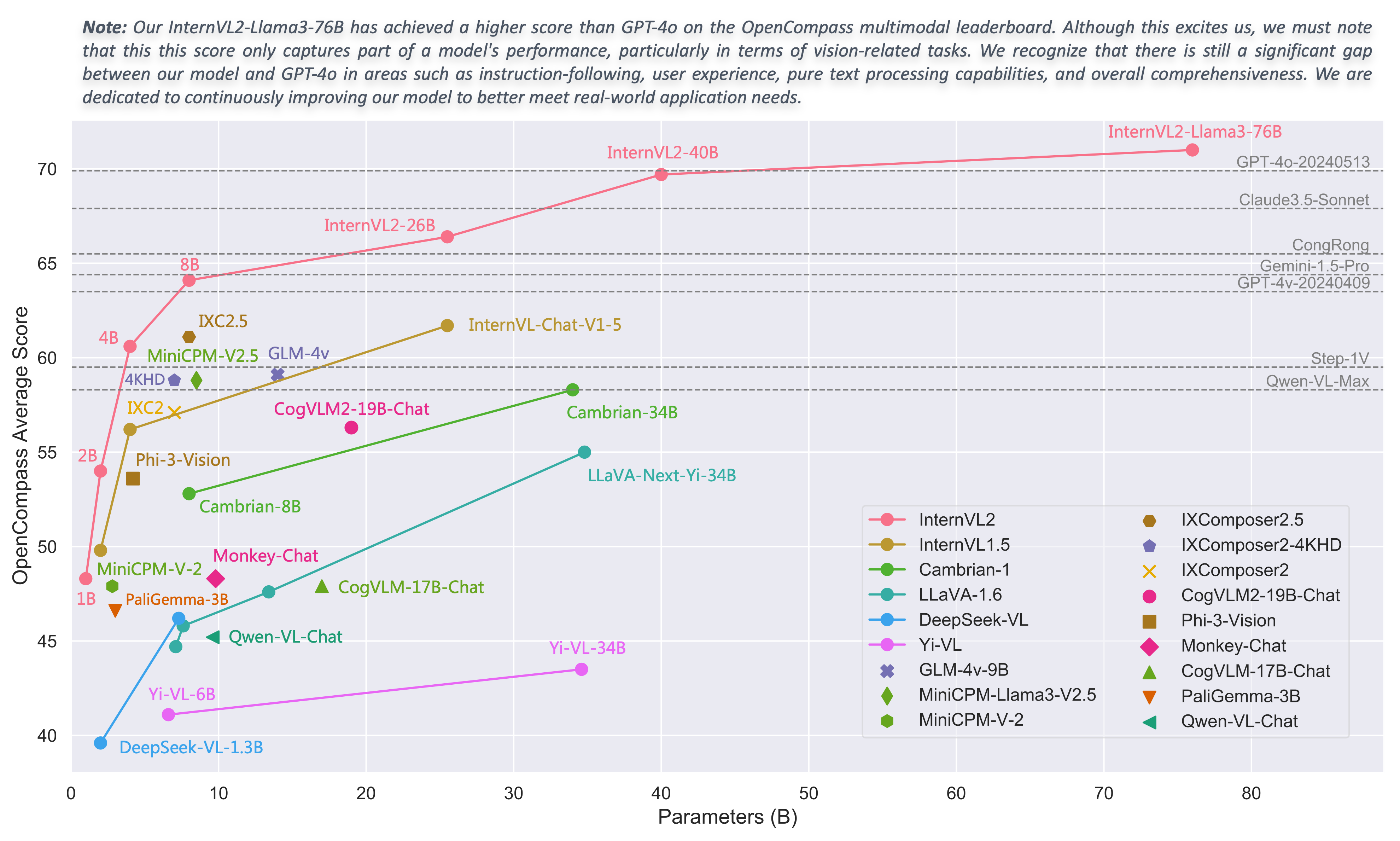

We are excited to announce the release of InternVL 2.0, the latest addition to the InternVL series of multimodal large language models. InternVL 2.0 features a variety of **instruction-tuned models**, ranging from 1 billion to 108 billion parameters. This repository contains the instruction-tuned InternVL2-8B model.

Compared with state-of-the-art open-source multimodal large language models, InternVL 2.0 surpasses most open-source models and performs competitively with proprietary commercial models across a range of capabilities, including document and chart comprehension, infographics QA, scene text understanding and OCR, scientific and mathematical problem solving, cultural understanding, and integrated multimodal capabilities.

InternVL 2.0 is trained with an 8k context window and utilizes training data consisting of long texts, multiple images, and videos, significantly improving its ability to handle these types of inputs compared to InternVL 1.5. For more details, please refer to our blog and GitHub.

| Model Name | Vision Part | Language Part | HF Link | MS Link |
| :------------------: | :---------------------------------------------------------------------------------: | :------------------------------------------------------------------------------------------: | :--------------------------------------------------------------: | :--------------------------------------------------------------------: |
| InternVL2-1B | [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px) | [Qwen2-0.5B-Instruct](https://huggingface.co/Qwen/Qwen2-0.5B-Instruct) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-1B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-1B) |
| InternVL2-2B | [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px) | [internlm2-chat-1_8b](https://huggingface.co/internlm/internlm2-chat-1_8b) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-2B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-2B) |
| InternVL2-4B | [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px) | [Phi-3-mini-128k-instruct](https://huggingface.co/microsoft/Phi-3-mini-128k-instruct) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-4B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-4B) |
| InternVL2-8B | [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px) | [internlm2_5-7b-chat](https://huggingface.co/internlm/internlm2_5-7b-chat) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-8B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-8B) |
| InternVL2-26B | [InternViT-6B-448px-V1-5](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-5) | [internlm2-chat-20b](https://huggingface.co/internlm/internlm2-chat-20b) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-26B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-26B) |
| InternVL2-40B | [InternViT-6B-448px-V1-5](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-5) | [Nous-Hermes-2-Yi-34B](https://huggingface.co/NousResearch/Nous-Hermes-2-Yi-34B) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-40B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-40B) |
| InternVL2-Llama3-76B | [InternViT-6B-448px-V1-5](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-5) | [Hermes-2-Theta-Llama-3-70B](https://huggingface.co/NousResearch/Hermes-2-Theta-Llama-3-70B) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-Llama3-76B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-Llama3-76B) |

## Model Details

InternVL 2.0 is a multimodal large language model series, featuring models of various sizes. For each size, we release instruction-tuned models optimized for multimodal tasks. InternVL2-8B consists of [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px), an MLP projector, and [internlm2_5-7b-chat](https://huggingface.co/internlm/internlm2_5-7b-chat).

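The Introduction says InternVL 2.0 uses an 8k context window, and that window has to hold both text and visual tokens. The sketch below estimates how many visual tokens one image uses under dynamic tiling. It relies on two assumptions that this card does not state: InternViT-300M-448px uses a 14-pixel patch size, and a pixel-shuffle step with downsampling ratio 0.5 runs before the MLP projector. Together these give 256 tokens per 448x448 tile. The function names are hypothetical.

```python
# Hypothetical helpers: rough visual-token budget for InternVL2-style tiling.
# Assumptions (not stated in this card): patch size 14, pixel-shuffle ratio 0.5.

def tokens_per_tile(tile_size: int = 448, patch_size: int = 14, downsample: float = 0.5) -> int:
    """Visual tokens produced by one square tile after pixel shuffle."""
    patches_per_side = tile_size // patch_size            # 448 // 14 = 32
    merged_per_side = int(patches_per_side * downsample)  # 32 * 0.5 = 16
    return merged_per_side * merged_per_side              # 16 * 16 = 256


def image_token_budget(num_tiles: int, use_thumbnail: bool = True) -> int:
    """Tokens for one image split into `num_tiles` tiles, plus a thumbnail when tiled."""
    # Mirrors dynamic_preprocess: the thumbnail is only added when there is more than one tile.
    total_tiles = num_tiles + (1 if use_thumbnail and num_tiles > 1 else 0)
    return total_tiles * tokens_per_tile()


if __name__ == "__main__":
    context_window = 8192                # the 8k context window from the Introduction
    worst_case = image_token_budget(12)  # max_num=12 tiles plus one thumbnail
    print(f"visual tokens: {worst_case}, left for text: {context_window - worst_case}")
```

Under these assumptions, a single image at `max_num=12` uses well under half of the window. Several such images, or many video frames, fill it quickly.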

## Performance

### Image Benchmarks

| Benchmark | MiniCPM-Llama3-V-2_5 | InternVL-Chat-V1-5 | InternVL2-8B |
| :--------------------------: | :------------------: | :----------------: | :----------: |
| Model Size | 8.5B | 25.5B | 8.1B |
| | | | |
| DocVQA<sub>test</sub> | 84.8 | 90.9 | 91.6 |
| ChartQA<sub>test</sub> | - | 83.8 | 83.3 |
| InfoVQA<sub>test</sub> | - | 72.5 | 74.8 |
| TextVQA<sub>val</sub> | 76.6 | 80.6 | 77.4 |
| OCRBench | 725 | 724 | 794 |
| MME<sub>sum</sub> | 2024.6 | 2187.8 | 2210.3 |
| RealWorldQA | 63.5 | 66.0 | 64.4 |
| AI2D<sub>test</sub> | 78.4 | 80.7 | 83.8 |
| MMMU<sub>val</sub> | 45.8 | 45.2 / 46.8 | 49.3 / 51.2 |
| MMBench-EN<sub>test</sub> | 77.2 | 82.2 | 81.7 |
| MMBench-CN<sub>test</sub> | 74.2 | 82.0 | 81.2 |
| CCBench<sub>dev</sub> | 45.9 | 69.8 | 75.9 |
| MMVet<sub>GPT-4-0613</sub> | - | 62.8 | 60.0 |
| MMVet<sub>GPT-4-Turbo</sub> | 52.8 | 55.4 | 54.2 |
| SEED-Image | 72.3 | 76.0 | 76.2 |
| HallBench<sub>avg</sub> | 42.4 | 49.3 | 45.2 |
| MathVista<sub>testmini</sub> | 54.3 | 53.5 | 58.3 |
| OpenCompass<sub>avg</sub> | 58.8 | 61.7 | 64.1 |

- We use both the InternVL and VLMEvalKit repositories for model evaluation. Specifically, the results for DocVQA, ChartQA, InfoVQA, TextVQA, MME, AI2D, MMBench, CCBench, MMVet, and SEED-Image were obtained with the InternVL repository, while OCRBench, RealWorldQA, HallBench, and MathVista were evaluated with VLMEvalKit.

- For MMMU, we report both the original scores (left side: evaluated using the InternVL codebase for InternVL series models, and sourced from technical reports or webpages for other models) and the VLMEvalKit scores (right side: collected from the OpenCompass leaderboard).

- Note that evaluating the same model with different toolkits, such as InternVL and VLMEvalKit, can produce slightly different scores; this is expected. Code version updates and differences in environment and hardware can also cause minor discrepancies.

### Video Benchmarks

| Benchmark | VideoChat2-HD-Mistral | Video-CCAM-9B | InternVL2-4B | InternVL2-8B |
| :-------------------------: | :-------------------: | :-----------: | :----------: | :----------: |
| Model Size | 7B | 9B | 4.2B | 8.1B |
| | | | | |
| MVBench | 60.4 | 60.7 | 63.7 | 66.4 |
| MMBench-Video<sub>8f</sub> | - | - | 1.10 | 1.19 |
| MMBench-Video<sub>16f</sub> | - | - | 1.18 | 1.28 |
| Video-MME<br>w/o subs | 42.3 | 50.6 | 51.4 | 54.0 |
| Video-MME<br>w subs | 54.6 | 54.9 | 53.4 | 56.9 |

- We evaluate our models on MVBench and Video-MME by extracting 16 frames from each video and resizing each frame to 448x448.

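The 16-frame extraction described above can be sketched as segment-midpoint sampling. The clip is split into equal segments, and the frame nearest the center of each segment is taken. The function below reproduces the arithmetic of the `get_index` helper in the Quick Start code when no time bounds are given. It uses plain Python instead of NumPy; the function name and the example frame count are hypothetical.

```python
def sample_frame_indices(num_frames: int, num_segments: int = 16) -> list[int]:
    """Pick one frame index near the middle of each of `num_segments` equal segments."""
    last = num_frames - 1                  # index of the final frame (max_frame in get_index)
    seg_size = last / num_segments         # segment length in frames
    # Offset by half a segment so each pick sits at its segment's center.
    return [int(seg_size / 2 + round(seg_size * k)) for k in range(num_segments)]


if __name__ == "__main__":
    # A hypothetical 321-frame clip: segments are 20 frames long, picks start at frame 10.
    print(sample_frame_indices(321))
```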

### Grounding Benchmarks

| Model | avg. | RefCOCO<br>(val) | RefCOCO<br>(testA) | RefCOCO<br>(testB) | RefCOCO+<br>(val) | RefCOCO+<br>(testA) | RefCOCO+<br>(testB) | RefCOCO‑g<br>(val) | RefCOCO‑g<br>(test) |
| :----------------------------: | :--: | :--------------: | :----------------: | :----------------: | :---------------: | :-----------------: | :-----------------: | :----------------: | :-----------------: |
| UNINEXT-H<br>(Specialist SOTA) | 88.9 | 92.6 | 94.3 | 91.5 | 85.2 | 89.6 | 79.8 | 88.7 | 89.4 |
| | | | | | | | | | |
| Mini-InternVL-<br>Chat-2B-V1-5 | 75.8 | 80.7 | 86.7 | 72.9 | 72.5 | 82.3 | 60.8 | 75.6 | 74.9 |
| Mini-InternVL-<br>Chat-4B-V1-5 | 84.4 | 88.0 | 91.4 | 83.5 | 81.5 | 87.4 | 73.8 | 84.7 | 84.6 |
| InternVL‑Chat‑V1‑5 | 88.8 | 91.4 | 93.7 | 87.1 | 87.0 | 92.3 | 80.9 | 88.5 | 89.3 |
| | | | | | | | | | |
| InternVL2‑1B | 79.9 | 83.6 | 88.7 | 79.8 | 76.0 | 83.6 | 67.7 | 80.2 | 79.9 |
| InternVL2‑2B | 77.7 | 82.3 | 88.2 | 75.9 | 73.5 | 82.8 | 63.3 | 77.6 | 78.3 |
| InternVL2‑4B | 84.4 | 88.5 | 91.2 | 83.9 | 81.2 | 87.2 | 73.8 | 84.6 | 84.6 |
| InternVL2‑8B | 82.9 | 87.1 | 91.1 | 80.7 | 79.8 | 87.9 | 71.4 | 82.7 | 82.7 |
| InternVL2‑26B | 88.5 | 91.2 | 93.3 | 87.4 | 86.8 | 91.0 | 81.2 | 88.5 | 88.6 |
| InternVL2‑40B | 90.3 | 93.0 | 94.7 | 89.2 | 88.5 | 92.8 | 83.6 | 90.3 | 90.6 |
| InternVL2-<br>Llama3‑76B | 90.0 | 92.2 | 94.8 | 88.4 | 88.8 | 93.1 | 82.8 | 89.5 | 90.3 |

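The `avg.` column appears to be the arithmetic mean of the eight RefCOCO, RefCOCO+, and RefCOCO-g split scores, rounded to one decimal. Recomputing it for the InternVL2-8B and UNINEXT-H rows gives the reported 82.9 and 88.9. The quick check below uses a hypothetical helper name.

```python
def grounding_average(scores: list[float]) -> float:
    """Mean of the per-split accuracies, rounded to one decimal as in the table."""
    return round(sum(scores) / len(scores), 1)


# InternVL2-8B row, in column order:
# RefCOCO val/testA/testB, RefCOCO+ val/testA/testB, RefCOCO-g val/test
internvl2_8b = [87.1, 91.1, 80.7, 79.8, 87.9, 71.4, 82.7, 82.7]
print(grounding_average(internvl2_8b))
```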

- We use the following prompt to evaluate InternVL's grounding ability: `Please provide the bounding box coordinates of the region this sentence describes: <ref>{}</ref>`

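Below is a small sketch that fills the grounding prompt above with a referring expression and converts a returned box to pixel coordinates. The response format handled here is an assumption about the model's output, not something this card specifies: boxes written as `[x1, y1, x2, y2]` with integer coordinates normalized to the range 0 to 1000. The helper names and the sample reply are hypothetical.

```python
import re

# Prompt template quoted above; {} receives the referring expression.
GROUNDING_PROMPT = ("Please provide the bounding box coordinates of the region "
                    "this sentence describes: <ref>{}</ref>")

# Assumed box format: [x1, y1, x2, y2] with integers normalized to 0..1000.
BOX_PATTERN = re.compile(r"\[(\d+),\s*(\d+),\s*(\d+),\s*(\d+)\]")


def build_grounding_question(expression: str) -> str:
    """Prepend the <image> placeholder used by model.chat and fill the template."""
    return "<image>\n" + GROUNDING_PROMPT.format(expression)


def parse_boxes(response: str, width: int, height: int, scale: int = 1000) -> list:
    """Map every [x1, y1, x2, y2] found in `response` from 0..scale to pixel coordinates."""
    boxes = []
    for match in BOX_PATTERN.finditer(response):
        x1, y1, x2, y2 = (int(v) for v in match.groups())
        boxes.append((x1 * width / scale, y1 * height / scale,
                      x2 * width / scale, y2 * height / scale))
    return boxes


question = build_grounding_question("the red car on the left")
reply = "<ref>the red car on the left</ref><box>[[100, 200, 500, 1000]]</box>"  # made-up reply
print(parse_boxes(reply, width=640, height=480))
```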

Limitations: Although we have made efforts to ensure the safety of the model during the training process and to encourage the model to generate text that complies with ethical and legal requirements, the model may still produce unexpected outputs due to its size and probabilistic generation paradigm. For example, the generated responses may contain biases, discrimination, or other harmful content. Please do not propagate such content. We are not responsible for any consequences resulting from the dissemination of harmful information.

### Invitation to Evaluate InternVL

We welcome MLLM benchmark developers to evaluate our InternVL1.5 and InternVL2 series models. If you would like your evaluation results added here, please contact me at [wztxy89@163.com](mailto:wztxy89@163.com).

## Quick Start

We provide example code for running InternVL2-8B with `transformers`.

We also welcome you to experience the InternVL2 series models in our [online demo](https://internvl.opengvlab.com/).

> Please use `transformers==4.37.2` to ensure the model works correctly.

### Model Loading

#### 16-bit (bf16 / fp16)

```python
import torch
from transformers import AutoTokenizer, AutoModel

path = "OpenGVLab/InternVL2-8B"
model = AutoModel.from_pretrained(
    path,
    torch_dtype=torch.bfloat16,
    low_cpu_mem_usage=True,
    trust_remote_code=True).eval().cuda()
```

#### BNB 8-bit Quantization

```python
import torch
from transformers import AutoTokenizer, AutoModel

path = "OpenGVLab/InternVL2-8B"
model = AutoModel.from_pretrained(
    path,
    torch_dtype=torch.bfloat16,
    load_in_8bit=True,
    low_cpu_mem_usage=True,
    trust_remote_code=True).eval()
```

#### BNB 4-bit Quantization

```python
import torch
from transformers import AutoTokenizer, AutoModel

path = "OpenGVLab/InternVL2-8B"
model = AutoModel.from_pretrained(
    path,
    torch_dtype=torch.bfloat16,
    load_in_4bit=True,
    low_cpu_mem_usage=True,
    trust_remote_code=True).eval()
```
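As a rough guide when choosing between the 16-bit, 8-bit, and 4-bit options: weight memory scales with the number of bytes stored per parameter. The sketch below takes the 8.1B parameter count for InternVL2-8B from the benchmark tables and counts weights only. It ignores activations, the KV cache, the CUDA context, and quantization overhead, and some modules typically stay in 16-bit, so real usage will be higher. Treat the numbers as estimates, not measurements.

```python
# Back-of-the-envelope weight memory for InternVL2-8B (weights only; an estimate).
NUM_PARAMS = 8.1e9  # "Model Size" reported for InternVL2-8B in the benchmark tables

BYTES_PER_PARAM = {
    "bf16/fp16": 2.0,  # 16-bit floats
    "bnb 8-bit": 1.0,  # int8 weights
    "bnb 4-bit": 0.5,  # 4-bit weights, two per byte
}


def weight_memory_gib(num_params: float, bytes_per_param: float) -> float:
    """Approximate weight memory in GiB (1 GiB = 1024**3 bytes)."""
    return num_params * bytes_per_param / 1024**3


for name, nbytes in BYTES_PER_PARAM.items():
    print(f"{name:>10}: ~{weight_memory_gib(NUM_PARAMS, nbytes):.1f} GiB")
```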

#### Multiple GPUs

The code below is structured to avoid multi-GPU inference errors caused by tensors residing on different devices. Placing the first and last layers of the large language model (LLM) on the same device prevents these errors.

```python
import math
import torch
from transformers import AutoTokenizer, AutoModel

def split_model(model_name):
    device_map = {}
    world_size = torch.cuda.device_count()
    num_layers = {
        'InternVL2-1B': 24, 'InternVL2-2B': 24, 'InternVL2-4B': 32, 'InternVL2-8B': 32,
        'InternVL2-26B': 48, 'InternVL2-40B': 60, 'InternVL2-Llama3-76B': 80}[model_name]
    # Since the first GPU will be used for ViT, treat it as half a GPU.
    num_layers_per_gpu = math.ceil(num_layers / (world_size - 0.5))
    num_layers_per_gpu = [num_layers_per_gpu] * world_size
    num_layers_per_gpu[0] = math.ceil(num_layers_per_gpu[0] * 0.5)
    layer_cnt = 0
    for i, num_layer in enumerate(num_layers_per_gpu):
        for j in range(num_layer):
            if layer_cnt >= num_layers:  # rounding up can leave spare slots; skip nonexistent layers
                break
            device_map[f'language_model.model.layers.{layer_cnt}'] = i
            layer_cnt += 1
    # Keep the vision encoder, projector, embeddings, final norm, and output head on GPU 0.
    # Both InternLM2-style (tok_embeddings / output) and Llama/Qwen-style
    # (embed_tokens / lm_head) module names are listed so the map fits every backbone.
    device_map['vision_model'] = 0
    device_map['mlp1'] = 0
    device_map['language_model.model.tok_embeddings'] = 0
    device_map['language_model.model.embed_tokens'] = 0
    device_map['language_model.output'] = 0
    device_map['language_model.model.norm'] = 0
    device_map['language_model.lm_head'] = 0
    # Pin the last LLM layer to GPU 0 as well, next to the norm and output head.
    device_map[f'language_model.model.layers.{num_layers - 1}'] = 0

    return device_map

path = "OpenGVLab/InternVL2-8B"
device_map = split_model('InternVL2-8B')
model = AutoModel.from_pretrained(
    path,
    torch_dtype=torch.bfloat16,
    low_cpu_mem_usage=True,
    trust_remote_code=True,
    device_map=device_map).eval()
```
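To see how `split_model` spreads the LLM layers across GPUs, the torch-free sketch below reproduces its layer-splitting arithmetic for a given GPU count. GPU 0 counts as half a GPU because it also hosts the vision encoder and projector, and the final layer is moved back to GPU 0. `plan_layers` is a hypothetical helper for illustration and is not part of the InternVL code.

```python
import math


def plan_layers(num_layers: int, world_size: int) -> list[int]:
    """Return the GPU id assigned to each LLM layer, following split_model()'s arithmetic."""
    # GPU 0 also hosts the ViT and MLP projector, so it counts as half a GPU.
    per_gpu = math.ceil(num_layers / (world_size - 0.5))
    counts = [per_gpu] * world_size
    counts[0] = math.ceil(per_gpu * 0.5)

    devices = []
    for gpu, count in enumerate(counts):
        devices.extend([gpu] * count)
    devices = devices[:num_layers]  # drop surplus slots created by rounding up
    devices[-1] = 0                 # last layer sits with the output head on GPU 0
    return devices


if __name__ == "__main__":
    plan = plan_layers(32, 2)  # InternVL2-8B has 32 LLM layers
    for gpu in sorted(set(plan)):
        print(f"GPU {gpu}: {plan.count(gpu)} layers")
```

With two GPUs, GPU 0 ends up with fewer LLM layers than GPU 1. This leaves room for the vision encoder.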

### Inference with Transformers

```python
import numpy as np
import torch
import torchvision.transforms as T
from decord import VideoReader, cpu
from PIL import Image
from torchvision.transforms.functional import InterpolationMode
from transformers import AutoModel, AutoTokenizer

IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)

def build_transform(input_size):
    MEAN, STD = IMAGENET_MEAN, IMAGENET_STD
    transform = T.Compose([
        T.Lambda(lambda img: img.convert('RGB') if img.mode != 'RGB' else img),
        T.Resize((input_size, input_size), interpolation=InterpolationMode.BICUBIC),
        T.ToTensor(),
        T.Normalize(mean=MEAN, std=STD)
    ])
    return transform

def find_closest_aspect_ratio(aspect_ratio, target_ratios, width, height, image_size):
    best_ratio_diff = float('inf')
    best_ratio = (1, 1)
    area = width * height
    for ratio in target_ratios:
        target_aspect_ratio = ratio[0] / ratio[1]
        ratio_diff = abs(aspect_ratio - target_aspect_ratio)
        if ratio_diff < best_ratio_diff:
            best_ratio_diff = ratio_diff
            best_ratio = ratio
        elif ratio_diff == best_ratio_diff:
            # On a tie, prefer the larger grid if the image has enough pixels to fill it.
            if area > 0.5 * image_size * image_size * ratio[0] * ratio[1]:
                best_ratio = ratio
    return best_ratio

def dynamic_preprocess(image, min_num=1, max_num=12, image_size=448, use_thumbnail=False):
    orig_width, orig_height = image.size
    aspect_ratio = orig_width / orig_height

    # enumerate candidate tile grids (columns, rows) with min_num <= tiles <= max_num
    target_ratios = set(
        (i, j) for n in range(min_num, max_num + 1) for i in range(1, n + 1) for j in range(1, n + 1) if
        i * j <= max_num and i * j >= min_num)
    target_ratios = sorted(target_ratios, key=lambda x: x[0] * x[1])

    # find the candidate grid closest to the image's aspect ratio
    target_aspect_ratio = find_closest_aspect_ratio(
        aspect_ratio, target_ratios, orig_width, orig_height, image_size)

    # calculate the target width and height
    target_width = image_size * target_aspect_ratio[0]
    target_height = image_size * target_aspect_ratio[1]
    blocks = target_aspect_ratio[0] * target_aspect_ratio[1]

    # resize the image
    resized_img = image.resize((target_width, target_height))
    processed_images = []
    for i in range(blocks):
        box = (
            (i % (target_width // image_size)) * image_size,
            (i // (target_width // image_size)) * image_size,
            ((i % (target_width // image_size)) + 1) * image_size,
            ((i // (target_width // image_size)) + 1) * image_size
        )
        # split the image
        split_img = resized_img.crop(box)
        processed_images.append(split_img)
    assert len(processed_images) == blocks
    if use_thumbnail and len(processed_images) != 1:
        thumbnail_img = image.resize((image_size, image_size))
        processed_images.append(thumbnail_img)
    return processed_images

def load_image(image_file, input_size=448, max_num=12):
    image = Image.open(image_file).convert('RGB')
    transform = build_transform(input_size=input_size)
    images = dynamic_preprocess(image, image_size=input_size, use_thumbnail=True, max_num=max_num)
    pixel_values = [transform(image) for image in images]
    pixel_values = torch.stack(pixel_values)
    return pixel_values

# If you want to load a model using multiple GPUs, please refer to the `Multiple GPUs` section.
path = 'OpenGVLab/InternVL2-8B'
model = AutoModel.from_pretrained(
    path,
    torch_dtype=torch.bfloat16,
    low_cpu_mem_usage=True,
    trust_remote_code=True).eval().cuda()
tokenizer = AutoTokenizer.from_pretrained(path, trust_remote_code=True, use_fast=False)

# set the max number of tiles in `max_num`
pixel_values = load_image('./examples/image1.jpg', max_num=12).to(torch.bfloat16).cuda()
generation_config = dict(max_new_tokens=1024, do_sample=False)

# pure-text conversation
question = 'Hello, who are you?'
response, history = model.chat(tokenizer, None, question, generation_config, history=None, return_history=True)
print(f'User: {question}\nAssistant: {response}')

question = 'Can you tell me a story?'
response, history = model.chat(tokenizer, None, question, generation_config, history=history, return_history=True)
print(f'User: {question}\nAssistant: {response}')

# single-image single-round conversation
question = '<image>\nPlease describe the image shortly.'
response = model.chat(tokenizer, pixel_values, question, generation_config)
print(f'User: {question}\nAssistant: {response}')

# single-image multi-round conversation
question = '<image>\nPlease describe the image in detail.'
response, history = model.chat(tokenizer, pixel_values, question, generation_config, history=None, return_history=True)
print(f'User: {question}\nAssistant: {response}')

question = 'Please write a poem according to the image.'
response, history = model.chat(tokenizer, pixel_values, question, generation_config, history=history, return_history=True)
print(f'User: {question}\nAssistant: {response}')

# multi-image multi-round conversation, combined images
pixel_values1 = load_image('./examples/image1.jpg', max_num=12).to(torch.bfloat16).cuda()
pixel_values2 = load_image('./examples/image2.jpg', max_num=12).to(torch.bfloat16).cuda()
pixel_values = torch.cat((pixel_values1, pixel_values2), dim=0)

question = '<image>\nDescribe the two images in detail.'
response, history = model.chat(tokenizer, pixel_values, question, generation_config,
                               history=None, return_history=True)
print(f'User: {question}\nAssistant: {response}')

question = 'What are the similarities and differences between these two images.'
response, history = model.chat(tokenizer, pixel_values, question, generation_config,
                               history=history, return_history=True)
print(f'User: {question}\nAssistant: {response}')

# multi-image multi-round conversation, separate images
pixel_values1 = load_image('./examples/image1.jpg', max_num=12).to(torch.bfloat16).cuda()
pixel_values2 = load_image('./examples/image2.jpg', max_num=12).to(torch.bfloat16).cuda()
pixel_values = torch.cat((pixel_values1, pixel_values2), dim=0)
num_patches_list = [pixel_values1.size(0), pixel_values2.size(0)]

question = 'Image-1: <image>\nImage-2: <image>\nDescribe the two images in detail.'
response, history = model.chat(tokenizer, pixel_values, question, generation_config,
                               num_patches_list=num_patches_list,
                               history=None, return_history=True)
print(f'User: {question}\nAssistant: {response}')

question = 'What are the similarities and differences between these two images.'
response, history = model.chat(tokenizer, pixel_values, question, generation_config,
                               num_patches_list=num_patches_list,
                               history=history, return_history=True)
print(f'User: {question}\nAssistant: {response}')

# batch inference, single image per sample
pixel_values1 = load_image('./examples/image1.jpg', max_num=12).to(torch.bfloat16).cuda()
pixel_values2 = load_image('./examples/image2.jpg', max_num=12).to(torch.bfloat16).cuda()
num_patches_list = [pixel_values1.size(0), pixel_values2.size(0)]
pixel_values = torch.cat((pixel_values1, pixel_values2), dim=0)

questions = ['<image>\nDescribe the image in detail.'] * len(num_patches_list)
responses = model.batch_chat(tokenizer, pixel_values,
                             num_patches_list=num_patches_list,
                             questions=questions,
                             generation_config=generation_config)
for question, response in zip(questions, responses):
    print(f'User: {question}\nAssistant: {response}')

# video multi-round conversation
def get_index(bound, fps, max_frame, first_idx=0, num_segments=32):
    if bound:
        start, end = bound[0], bound[1]
    else:
        start, end = -100000, 100000
    start_idx = max(first_idx, round(start * fps))
    end_idx = min(round(end * fps), max_frame)
    seg_size = float(end_idx - start_idx) / num_segments
    frame_indices = np.array([
        int(start_idx + (seg_size / 2) + np.round(seg_size * idx))
        for idx in range(num_segments)
    ])
    return frame_indices

def load_video(video_path, bound=None, input_size=448, max_num=1, num_segments=32):
    vr = VideoReader(video_path, ctx=cpu(0), num_threads=1)
    max_frame = len(vr) - 1
    fps = float(vr.get_avg_fps())

    pixel_values_list, num_patches_list = [], []
    transform = build_transform(input_size=input_size)
    frame_indices = get_index(bound, fps, max_frame, first_idx=0, num_segments=num_segments)
    for frame_index in frame_indices:
        img = Image.fromarray(vr[frame_index].asnumpy()).convert('RGB')
        img = dynamic_preprocess(img, image_size=input_size, use_thumbnail=True, max_num=max_num)
        pixel_values = [transform(tile) for tile in img]
        pixel_values = torch.stack(pixel_values)
        num_patches_list.append(pixel_values.shape[0])
        pixel_values_list.append(pixel_values)
    pixel_values = torch.cat(pixel_values_list)
    return pixel_values, num_patches_list

video_path = './examples/red-panda.mp4'
pixel_values, num_patches_list = load_video(video_path, num_segments=8, max_num=1)
pixel_values = pixel_values.to(torch.bfloat16).cuda()
video_prefix = ''.join([f'Frame{i+1}: <image>\n' for i in range(len(num_patches_list))])
question = video_prefix + 'What is the red panda doing?'
# Frame1: <image>\nFrame2: <image>\n...\nFrame8: <image>\n{question}
response, history = model.chat(tokenizer, pixel_values, question, generation_config,
                               num_patches_list=num_patches_list, history=None, return_history=True)
print(f'User: {question}\nAssistant: {response}')

question = 'Describe this video in detail. Don\'t repeat.'
response, history = model.chat(tokenizer, pixel_values, question, generation_config,
                               num_patches_list=num_patches_list, history=history, return_history=True)
print(f'User: {question}\nAssistant: {response}')
```
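The tile layout chosen by `dynamic_preprocess` depends only on the image size and `max_num`. You can therefore preview it without loading the model. The sketch below re-implements the grid-selection step in plain Python, combining the candidate-grid enumeration with the `find_closest_aspect_ratio` rule. `choose_grid` is a hypothetical name, and the example sizes are arbitrary.

```python
def choose_grid(width: int, height: int, min_num: int = 1, max_num: int = 12,
                image_size: int = 448) -> tuple[int, int]:
    """Return the (columns, rows) grid selected by dynamic_preprocess's rule."""
    # Candidate grids whose tile count lies in [min_num, max_num], fewest tiles first.
    candidates = sorted(
        {(i, j) for n in range(min_num, max_num + 1)
         for i in range(1, n + 1) for j in range(1, n + 1)
         if min_num <= i * j <= max_num},
        key=lambda r: r[0] * r[1])
    aspect = width / height
    best, best_diff = (1, 1), float("inf")
    for cols, rows in candidates:
        diff = abs(aspect - cols / rows)
        if diff < best_diff:
            best, best_diff = (cols, rows), diff
        elif diff == best_diff and width * height > 0.5 * image_size**2 * cols * rows:
            # Tie: prefer the larger grid when the image has enough pixels to fill it.
            best = (cols, rows)
    return best


print(choose_grid(800, 600))    # (4, 3): 12 tiles, plus a thumbnail in load_image
print(choose_grid(1024, 1024))  # (3, 3)
```

Small images stay at a single tile (a 448x448 image maps to `(1, 1)`), while large images are cut into up to `max_num` tiles.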

#### Streaming output

Besides the method above, you can also use the following code to stream the output as it is generated.

```python
from transformers import TextIteratorStreamer
from threading import Thread

# Initialize the streamer
streamer = TextIteratorStreamer(tokenizer, skip_prompt=True, skip_special_tokens=True, timeout=10)
# Define the generation configuration
generation_config = dict(max_new_tokens=1024, do_sample=False, streamer=streamer)
# Start the model chat in a separate thread
thread = Thread(target=model.chat, kwargs=dict(
    tokenizer=tokenizer, pixel_values=pixel_values, question=question,
    history=None, return_history=False, generation_config=generation_config,
))
thread.start()

# Initialize an empty string to store the generated text
generated_text = ''
# Loop through the streamer to get the new text as it is generated
for new_text in streamer:
    if new_text == model.conv_template.sep:
        break
    generated_text += new_text
    print(new_text, end='', flush=True)  # Print each new chunk of generated text on the same line
```
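The streaming example is a producer/consumer pattern. `model.chat` runs in a background thread and pushes decoded text into the streamer, while the main thread iterates over the streamer and stops at the separator. The toy below imitates that pattern with only the standard library. `ToyStreamer` and `fake_generate` are hypothetical stand-ins, not the `transformers` classes. The `"<END>"` string is likewise a made-up stop marker playing the role of `model.conv_template.sep`.

```python
import queue
import threading


class ToyStreamer:
    """Minimal text iterator fed by a producer thread (a toy stand-in for TextIteratorStreamer)."""

    _DONE = object()  # sentinel that marks the end of generation

    def __init__(self, timeout: float = 10.0):
        self._queue = queue.Queue()
        self._timeout = timeout

    def put(self, text: str) -> None:
        self._queue.put(text)

    def end(self) -> None:
        self._queue.put(self._DONE)

    def __iter__(self):
        while True:
            # Blocks until the producer emits something; raises queue.Empty after `timeout` s.
            item = self._queue.get(timeout=self._timeout)
            if item is self._DONE:
                return
            yield item


def fake_generate(streamer: ToyStreamer, chunks: list) -> None:
    """Producer thread: emit text chunks one by one, then signal completion."""
    for chunk in chunks:
        streamer.put(chunk)
    streamer.end()


STOP = "<END>"  # hypothetical stop string, analogous to model.conv_template.sep
streamer = ToyStreamer()
thread = threading.Thread(target=fake_generate,
                          args=(streamer, ["Hello", ", ", "world", STOP, "ignored"]))
thread.start()

generated_text = ""
for new_text in streamer:
    if new_text == STOP:
        break
    generated_text += new_text
    print(new_text, end="", flush=True)
thread.join()
print()
```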

## Finetune

SWIFT from the ModelScope community supports fine-tuning InternVL on image and video data; please see [this link](https://github.com/modelscope/swift/blob/main/docs/source_en/Multi-Modal/internvl-best-practice.md) for details.

## Deployment

### LMDeploy

LMDeploy is a toolkit for compressing, deploying, and serving LLMs, developed by the MMRazor and MMDeploy teams.

```sh
pip install lmdeploy
```

LMDeploy wraps the complex inference process of multimodal vision-language models (VLMs) in an easy-to-use pipeline, similar to its large language model (LLM) inference pipeline.

#### A 'Hello, world' example

```python
from lmdeploy import pipeline, TurbomindEngineConfig, ChatTemplateConfig
from lmdeploy.vl import load_image

model = 'OpenGVLab/InternVL2-8B'
# System prompt (in English: "I am InternVL (书生·万象), a multimodal large language model
# jointly developed by Shanghai AI Laboratory and several partner institutions.")
system_prompt = '我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。'
image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/tests/data/tiger.jpeg')
chat_template_config = ChatTemplateConfig('internvl-internlm2')
chat_template_config.meta_instruction = system_prompt
pipe = pipeline(model, chat_template_config=chat_template_config,
                backend_config=TurbomindEngineConfig(session_len=8192))
response = pipe(('describe this image', image))
print(response.text)
```

If an `ImportError` occurs while running this example, install the required dependency packages as prompted.

#### Multi-image inference

When working with multiple images, you can put them all in one list. Keep in mind that multiple images produce more input tokens, so the context window (`session_len`) typically needs to be increased.

|

| 491 |

-

|

| 492 |

-

> Warning: Due to the scarcity of multi-image conversation data, the performance on multi-image tasks may be unstable, and it may require multiple attempts to achieve satisfactory results.
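
To see roughly why `session_len` must grow with the number of images, the sketch below adds up an approximate token budget. It is a back-of-the-envelope estimate that rests on assumptions this README does not state: each 448x448 tile is taken to cost 256 visual tokens, and an image split into several tiles is assumed to receive one extra thumbnail tile. The function names are hypothetical helpers and are not part of LMDeploy.

```python
from typing import Iterable

# ASSUMPTIONS (not stated in this README): 256 visual tokens per 448x448 tile, plus one
# extra thumbnail tile whenever an image is split into more than one tile.
TOKENS_PER_TILE = 256


def image_tokens(num_tiles: int, tokens_per_tile: int = TOKENS_PER_TILE,
                 use_thumbnail: bool = True) -> int:
    """Approximate number of visual tokens for one image split into `num_tiles` tiles."""
    if num_tiles < 1:
        raise ValueError("num_tiles must be >= 1")
    extra = 1 if use_thumbnail and num_tiles > 1 else 0
    return (num_tiles + extra) * tokens_per_tile


def estimate_session_len(tiles_per_image: Iterable[int], text_tokens: int = 512,
                         max_new_tokens: int = 1024) -> int:
    """Visual tokens for every image, plus the prompt text and room for the answer."""
    visual = sum(image_tokens(n) for n in tiles_per_image)
    return visual + text_tokens + max_new_tokens


def round_up(n: int, multiple: int = 1024) -> int:
    """Round up to a multiple, e.g. to choose a tidy session_len value."""
    return -(-n // multiple) * multiple


print(estimate_session_len([6, 6]))    # two images with 6 tiles each -> 5120
print(estimate_session_len([12, 12]))  # two images with 12 tiles each -> 8192
```

Under these assumptions, two images split into 12 tiles each already fill the 8192-token `session_len` used in the examples. A third image of the same size would therefore need a larger value.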

```python
from lmdeploy import pipeline, TurbomindEngineConfig, ChatTemplateConfig
from lmdeploy.vl import load_image
from lmdeploy.vl.constants import IMAGE_TOKEN

model = 'OpenGVLab/InternVL2-8B'
system_prompt = '我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。'
chat_template_config = ChatTemplateConfig('internvl-internlm2')
chat_template_config.meta_instruction = system_prompt
pipe = pipeline(model, chat_template_config=chat_template_config,
                backend_config=TurbomindEngineConfig(session_len=8192))

image_urls = [
    'https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/human-pose.jpg',
    'https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/det.jpg',
]

images = [load_image(img_url) for img_url in image_urls]
# Numbering images improves multi-image conversations
response = pipe((f'Image-1: {IMAGE_TOKEN}\nImage-2: {IMAGE_TOKEN}\ndescribe these two images', images))
print(response.text)
```
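
The numbered `Image-1: ... Image-2: ...` layout above extends to any number of images. The small helper below builds that prompt for N images. It is a hypothetical convenience function, and its default placeholder token is only an assumption for illustration. In real code, pass `IMAGE_TOKEN` from `lmdeploy.vl.constants` so the placeholder always matches your installed LMDeploy.

```python
def numbered_image_prompt(question: str, num_images: int,
                          image_token: str = "<IMAGE_TOKEN>") -> str:
    """Build "Image-1: <token>\\n...\\nImage-N: <token>\\n<question>".

    The default image_token is an illustrative ASSUMPTION; pass
    lmdeploy.vl.constants.IMAGE_TOKEN in real use.
    """
    if num_images < 1:
        raise ValueError("num_images must be >= 1")
    lines = [f"Image-{i}: {image_token}" for i in range(1, num_images + 1)]
    lines.append(question)
    return "\n".join(lines)


print(numbered_image_prompt("describe these two images", 2))
```

With this helper, `pipe((numbered_image_prompt('describe these images', len(images), IMAGE_TOKEN), images))` would send the same kind of request as above, for an `images` list of any length.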

#### Batch prompt inference

Batch inference is straightforward: pass the prompts to the pipeline as a list.

```python
from lmdeploy import pipeline, TurbomindEngineConfig, ChatTemplateConfig
from lmdeploy.vl import load_image

model = 'OpenGVLab/InternVL2-8B'
system_prompt = '我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。'
chat_template_config = ChatTemplateConfig('internvl-internlm2')
chat_template_config.meta_instruction = system_prompt
pipe = pipeline(model, chat_template_config=chat_template_config,
                backend_config=TurbomindEngineConfig(session_len=8192))

image_urls = [
    "https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/human-pose.jpg",
    "https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/det.jpg",
]
prompts = [('describe this image', load_image(img_url)) for img_url in image_urls]
responses = pipe(prompts)
# A list of prompts returns a list of responses, one per prompt
for response in responses:
    print(response.text)
```
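
The batch call above takes a list of `(text, image)` tuples. When different images need different questions, a small helper can build that list. This is a hypothetical convenience function, not part of LMDeploy. It only assembles the same tuple structure shown above, and here strings stand in for the images that `load_image` would return.

```python
from typing import Any, List, Sequence, Tuple, Union


def make_batch(questions: Union[str, Sequence[str]],
               images: Sequence[Any]) -> List[Tuple[str, Any]]:
    """Pair every image with a question; a single string is reused for all images."""
    if isinstance(questions, str):
        questions = [questions] * len(images)
    if len(questions) != len(images):
        raise ValueError(f"got {len(questions)} questions for {len(images)} images")
    return list(zip(questions, images))


# Strings stand in for the images that load_image() would return.
batch = make_batch("describe this image", ["human-pose.jpg", "det.jpg"])
print(batch)  # [('describe this image', 'human-pose.jpg'), ('describe this image', 'det.jpg')]
```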

#### Multi-turn conversation

There are two ways to hold a multi-turn conversation with the pipeline. You can build the messages in the OpenAI format and use the method introduced above, or you can use the `pipeline.chat` interface, as in this example:

```python
from lmdeploy import pipeline, TurbomindEngineConfig, ChatTemplateConfig, GenerationConfig
from lmdeploy.vl import load_image

model = 'OpenGVLab/InternVL2-8B'
system_prompt = '我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。'
chat_template_config = ChatTemplateConfig('internvl-internlm2')
chat_template_config.meta_instruction = system_prompt
pipe = pipeline(model, chat_template_config=chat_template_config,
                backend_config=TurbomindEngineConfig(session_len=8192))

image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/human-pose.jpg')
gen_config = GenerationConfig(top_k=40, top_p=0.8, temperature=0.8)
# First turn: send the image together with the question
sess = pipe.chat(('describe this image', image), gen_config=gen_config)
print(sess.response.text)
# Follow-up turn: pass the returned session back to keep the conversation history
sess = pipe.chat('What is the woman doing?', session=sess, gen_config=gen_config)
print(sess.response.text)
```
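
The first approach, building OpenAI-format messages yourself, keeps the whole history in a list. Each turn adds one user message and one assistant message. The sketch below only constructs that list, and its content-part layout mirrors the OpenAI-style request in the Service section. The helper names are hypothetical. Check your LMDeploy version's documentation to confirm that it accepts exactly this schema, including plain-string assistant content; that is an assumption here.

```python
from typing import Any, Dict, List, Optional

IMAGE = "https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/human-pose.jpg"


def user_turn(text: str, image_url: Optional[str] = None) -> Dict[str, Any]:
    """A user message with a text part and, optionally, an image_url part."""
    content: List[Dict[str, Any]] = [{"type": "text", "text": text}]
    if image_url is not None:
        content.append({"type": "image_url", "image_url": {"url": image_url}})
    return {"role": "user", "content": content}


def assistant_turn(text: str) -> Dict[str, Any]:
    """The model's previous reply, fed back so the next turn sees the history."""
    return {"role": "assistant", "content": text}


# Turn 1: image plus question.
messages = [user_turn("describe this image", IMAGE)]
# After the first call, append the reply (placeholder text here) and the follow-up question.
messages.append(assistant_turn("<reply to turn 1>"))
messages.append(user_turn("What is the woman doing?"))
```

For each round, you would pass the growing list to the pipeline (for example, `pipe(messages, gen_config=gen_config)`). Then append the reply's `.text` as the next assistant message.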

#### Service

To deploy InternVL2 as an API, first configure the chat template by creating the following JSON file, `chat_template.json`:

```json
{
    "model_name": "internvl-internlm2",
    "meta_instruction": "我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。",
    "stop_words": ["<|im_start|>", "<|im_end|>"]
}
```
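
You may prefer to generate this file from a script, for example to swap in a different system prompt. The following is a minimal sketch that uses only the Python standard library. `render_chat_template` and `write_chat_template` are hypothetical names, and the field values are copied from the JSON above.

```python
import json
from pathlib import Path

# Same three fields as the chat_template.json shown above.
CHAT_TEMPLATE = {
    "model_name": "internvl-internlm2",
    "meta_instruction": "我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。",
    "stop_words": ["<|im_start|>", "<|im_end|>"],
}


def render_chat_template(template: dict = CHAT_TEMPLATE) -> str:
    # ensure_ascii=False keeps the Chinese system prompt readable instead of \uXXXX escapes
    return json.dumps(template, ensure_ascii=False, indent=4)


def write_chat_template(path: str = "chat_template.json") -> Path:
    """Write the template as UTF-8 JSON and return the path that was written."""
    out = Path(path)
    out.write_text(render_chat_template(), encoding="utf-8")
    return out
```

Calling `write_chat_template()` before starting the server produces the file that the `--chat-template` option below points to.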

LMDeploy's `api_server` packages a model as a service with a single command. The RESTful APIs it provides are compatible with OpenAI's interfaces. Below is an example of starting the service:

```shell
lmdeploy serve api_server OpenGVLab/InternVL2-8B --model-name InternVL2-8B --backend turbomind --server-port 23333 --chat-template chat_template.json
```

To use the OpenAI-style interface, install the `openai` Python package:

```shell
pip install openai
```

Then use the code below to make the API call:

```python
from openai import OpenAI

client = OpenAI(api_key='YOUR_API_KEY', base_url='http://0.0.0.0:23333/v1')
# Query the served model name (set with --model-name above) instead of hard-coding it
model_name = client.models.list().data[0].id
response = client.chat.completions.create(
    model=model_name,
    messages=[{
        'role': 'user',
        'content': [{
            'type': 'text',
            'text': 'describe this image',
        }, {
            'type': 'image_url',
            'image_url': {
                'url': 'https://modelscope.oss-cn-beijing.aliyuncs.com/resource/tiger.jpeg',
            },
        }],
    }],
    temperature=0.8,
    top_p=0.8)
print(response.choices[0].message.content)
```
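
Because the server speaks the OpenAI-compatible REST protocol, the same request can also be sent without the `openai` package. The sketch below builds the POST request for the `/v1/chat/completions` route using only the standard library. The function names are hypothetical. The `Authorization` header mirrors what the OpenAI client sends and presumably only matters if the server enforces API keys.

```python
import json
import urllib.request


def build_chat_request(base_url: str, model: str, text: str, image_url: str,
                       api_key: str = "YOUR_API_KEY", temperature: float = 0.8,
                       top_p: float = 0.8) -> urllib.request.Request:
    """POST request carrying one user message with a text part and an image part."""
    payload = {
        "model": model,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "text", "text": text},
                {"type": "image_url", "image_url": {"url": image_url}},
            ],
        }],
        "temperature": temperature,
        "top_p": top_p,
    }
    return urllib.request.Request(
        base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Bearer {api_key}"},
        method="POST",
    )


def reply_text(response_json: dict) -> str:
    """Extract the assistant's text from an OpenAI-style chat completion body."""
    return response_json["choices"][0]["message"]["content"]


req = build_chat_request("http://0.0.0.0:23333/v1", "InternVL2-8B", "describe this image",
                         "https://modelscope.oss-cn-beijing.aliyuncs.com/resource/tiger.jpeg")
# Sending the request requires the running server:
# with urllib.request.urlopen(req) as resp:
#     print(reply_text(json.load(resp)))
```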

### vLLM

TODO

### Ollama

TODO

## License

This project is released under the MIT license, while InternLM is licensed under the Apache-2.0 license.

## Citation

If you find this project useful in your research, please consider citing:

```BibTeX
@article{chen2023internvl,
  title={InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks},
  author={Chen, Zhe and Wu, Jiannan and Wang, Wenhai and Su, Weijie and Chen, Guo and Xing, Sen and Zhong, Muyan and Zhang, Qinglong and Zhu, Xizhou and Lu, Lewei and Li, Bin and Luo, Ping and Lu, Tong and Qiao, Yu and Dai, Jifeng},
  journal={arXiv preprint arXiv:2312.14238},
  year={2023}
}
@article{chen2024far,
  title={How Far Are We to GPT-4V? Closing the Gap to Commercial Multimodal Models with Open-Source Suites},
  author={Chen, Zhe and Wang, Weiyun and Tian, Hao and Ye, Shenglong and Gao, Zhangwei and Cui, Erfei and Tong, Wenwen and Hu, Kongzhi and Luo, Jiapeng and Ma, Zheng and others},
  journal={arXiv preprint arXiv:2404.16821},
  year={2024}
}
```

## Introduction

We are excited to announce the release of InternVL 2.0, the latest version of the InternVL series of multimodal large language models. InternVL 2.0 offers a variety of **instruction-tuned** models, with parameters ranging from 1 billion to 108 billion. This repository contains the instruction-tuned InternVL2-8B model.

Compared with state-of-the-art open-source multimodal large language models, InternVL 2.0 surpasses most open-source models. Across a range of capabilities, it is competitive with proprietary commercial models. These include document and chart comprehension, infographics QA, scene text understanding and OCR tasks, scientific and mathematical problem solving, as well as cultural understanding and integrated multimodal capabilities.

InternVL 2.0 is trained with an 8k context window. Its training data includes long texts, multiple images, and videos, so it handles these types of input significantly better than InternVL 1.5. For more details, please refer to our blog and GitHub.

| Model Name | Vision Part | Language Part | HF Link | MS Link |
| :------------------: | :---------------------------------------------------------------------------------: | :------------------------------------------------------------------------------------------: | :--------------------------------------------------------------: | :--------------------------------------------------------------------: |
| InternVL2-1B | [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px) | [Qwen2-0.5B-Instruct](https://huggingface.co/Qwen/Qwen2-0.5B-Instruct) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-1B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-1B) |
| InternVL2-2B | [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px) | [internlm2-chat-1_8b](https://huggingface.co/internlm/internlm2-chat-1_8b) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-2B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-2B) |
| InternVL2-4B | [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px) | [Phi-3-mini-128k-instruct](https://huggingface.co/microsoft/Phi-3-mini-128k-instruct) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-4B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-4B) |
| InternVL2-8B | [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px) | [internlm2_5-7b-chat](https://huggingface.co/internlm/internlm2_5-7b-chat) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-8B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-8B) |
| InternVL2-26B | [InternViT-6B-448px-V1-5](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-5) | [internlm2-chat-20b](https://huggingface.co/internlm/internlm2-chat-20b) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-26B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-26B) |
| InternVL2-40B | [InternViT-6B-448px-V1-5](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-5) | [Nous-Hermes-2-Yi-34B](https://huggingface.co/NousResearch/Nous-Hermes-2-Yi-34B) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-40B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-40B) |
| InternVL2-Llama3-76B | [InternViT-6B-448px-V1-5](https://huggingface.co/OpenGVLab/InternViT-6B-448px-V1-5) | [Hermes-2-Theta-Llama-3-70B](https://huggingface.co/NousResearch/Hermes-2-Theta-Llama-3-70B) | [🤗 link](https://huggingface.co/OpenGVLab/InternVL2-Llama3-76B) | [🤖 link](https://modelscope.cn/models/OpenGVLab/InternVL2-Llama3-76B) |

## Model Details

InternVL 2.0 is a series of multimodal large language models available in various sizes. For each size, we release instruction-tuned models optimized for multimodal tasks. InternVL2-8B consists of [InternViT-300M-448px](https://huggingface.co/OpenGVLab/InternViT-300M-448px), an MLP projector, and [internlm2_5-7b-chat](https://huggingface.co/internlm/internlm2_5-7b-chat).

## Performance

### Image Benchmarks

| Benchmark | MiniCPM-Llama3-V-2_5 | InternVL-Chat-V1-5 | InternVL2-8B |
| :--------------------------: | :------------------: | :----------------: | :----------: |
| Model Size | 8.5B | 25.5B | 8.1B |
| | | | |
| DocVQA<sub>test</sub> | 84.8 | 90.9 | 91.6 |
| ChartQA<sub>test</sub> | - | 83.8 | 83.3 |
| InfoVQA<sub>test</sub> | - | 72.5 | 74.8 |
| TextVQA<sub>val</sub> | 76.6 | 80.6 | 77.4 |
| OCRBench | 725 | 724 | 794 |
| MME<sub>sum</sub> | 2024.6 | 2187.8 | 2210.3 |
| RealWorldQA | 63.5 | 66.0 | 64.4 |
| AI2D<sub>test</sub> | 78.4 | 80.7 | 83.8 |
| MMMU<sub>val</sub> | 45.8 | 45.2 / 46.8 | 49.3 / 51.2 |
| MMBench-EN<sub>test</sub> | 77.2 | 82.2 | 81.7 |
| MMBench-CN<sub>test</sub> | 74.2 | 82.0 | 81.2 |
| CCBench<sub>dev</sub> | 45.9 | 69.8 | 75.9 |
| MMVet<sub>GPT-4-0613</sub> | - | 62.8 | 60.0 |
| MMVet<sub>GPT-4-Turbo</sub> | 52.8 | 55.4 | 54.2 |
| SEED-Image | 72.3 | 76.0 | 76.2 |
| HallBench<sub>avg</sub> | 42.4 | 49.3 | 45.2 |
| MathVista<sub>testmini</sub> | 54.3 | 53.5 | 58.3 |
| OpenCompass<sub>avg</sub> | 58.8 | 61.7 | 64.1 |

- We evaluate the models with both the InternVL and VLMEvalKit repositories. The results for DocVQA, ChartQA, InfoVQA, TextVQA, MME, AI2D, MMBench, CCBench, MMVet, and SEED-Image were obtained with the InternVL repository. OCRBench, RealWorldQA, HallBench, and MathVista were evaluated with VLMEvalKit.
- For MMMU, we report two scores. The original score (left) comes from the InternVL codebase for InternVL-series models and from technical reports or web pages for other models. The VLMEvalKit score (right) is collected from the OpenCompass leaderboard.
- Evaluating the same model with different testing toolkits, such as InternVL and VLMEvalKit, can produce slight differences, which is normal. Updated code versions and changes in environment or hardware may also cause minor differences in results.

### Video Benchmarks

| Benchmark | VideoChat2-HD-Mistral | Video-CCAM-9B | InternVL2-4B | InternVL2-8B |
| :-------------------------: | :-------------------: | :-----------: | :----------: | :----------: |
| Model Size | 7B | 9B | 4.2B | 8.1B |
| | | | | |
| MVBench | 60.4 | 60.7 | 63.7 | 66.4 |
| MMBench-Video<sub>8f</sub> | - | - | 1.10 | 1.19 |
| MMBench-Video<sub>16f</sub> | - | - | 1.18 | 1.28 |
| Video-MME<br>w/o subs | 42.3 | 50.6 | 51.4 | 54.0 |
| Video-MME<br>w subs | 54.6 | 54.9 | 53.4 | 56.9 |

- We evaluate our models on MVBench and Video-MME by extracting 16 frames from each video and resizing each frame to a 448x448 image.
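
The note above does not say how the 16 frames are chosen. One common choice is uniform sampling: split the video into 16 equal segments and take the middle frame of each. This sketch assumes that choice and is not taken from the evaluation code. `sample_frame_indices` is a hypothetical name. Decoding the frames and resizing them to 448x448 would require a video or image library and is not shown.

```python
from typing import List


def sample_frame_indices(num_frames: int, num_segments: int = 16) -> List[int]:
    """Split the video into equal segments and return the middle frame index of each."""
    if num_frames < 1 or num_segments < 1:
        raise ValueError("num_frames and num_segments must be >= 1")
    seg_len = num_frames / num_segments
    # Clamp to the last frame in case rounding pushes an index past the end.
    return [min(int(seg_len * i + seg_len / 2), num_frames - 1) for i in range(num_segments)]


print(sample_frame_indices(160))  # 16 indices: 5, 15, 25, ..., 155
```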

### Grounding Benchmarks

| Model | avg. | RefCOCO<br>(val) | RefCOCO<br>(testA) | RefCOCO<br>(testB) | RefCOCO+<br>(val) | RefCOCO+<br>(testA) | RefCOCO+<br>(testB) | RefCOCO‑g<br>(val) | RefCOCO‑g<br>(test) |
| :----------------------------: | :--: | :--------------: | :----------------: | :----------------: | :---------------: | :-----------------: | :-----------------: | :----------------: | :-----------------: |
| UNINEXT-H<br>(Specialist SOTA) | 88.9 | 92.6 | 94.3 | 91.5 | 85.2 | 89.6 | 79.8 | 88.7 | 89.4 |
| | | | | | | | | | |
| Mini-InternVL-<br>Chat-2B-V1-5 | 75.8 | 80.7 | 86.7 | 72.9 | 72.5 | 82.3 | 60.8 | 75.6 | 74.9 |
| Mini-InternVL-<br>Chat-4B-V1-5 | 84.4 | 88.0 | 91.4 | 83.5 | 81.5 | 87.4 | 73.8 | 84.7 | 84.6 |
| InternVL‑Chat‑V1‑5 | 88.8 | 91.4 | 93.7 | 87.1 | 87.0 | 92.3 | 80.9 | 88.5 | 89.3 |
| | | | | | | | | | |
| InternVL2‑1B | 79.9 | 83.6 | 88.7 | 79.8 | 76.0 | 83.6 | 67.7 | 80.2 | 79.9 |
| InternVL2‑2B | 77.7 | 82.3 | 88.2 | 75.9 | 73.5 | 82.8 | 63.3 | 77.6 | 78.3 |
| InternVL2‑4B | 84.4 | 88.5 | 91.2 | 83.9 | 81.2 | 87.2 | 73.8 | 84.6 | 84.6 |
| InternVL2‑8B | 82.9 | 87.1 | 91.1 | 80.7 | 79.8 | 87.9 | 71.4 | 82.7 | 82.7 |
| InternVL2‑26B | 88.5 | 91.2 | 93.3 | 87.4 | 86.8 | 91.0 | 81.2 | 88.5 | 88.6 |
| InternVL2‑40B | 90.3 | 93.0 | 94.7 | 89.2 | 88.5 | 92.8 | 83.6 | 90.3 | 90.6 |
| InternVL2-<br>Llama3‑76B | 90.0 | 92.2 | 94.8 | 88.4 | 88.8 | 93.1 | 82.8 | 89.5 | 90.3 |

- We use the following prompt to evaluate InternVL's grounding ability: `Please provide the bounding box coordinates of the region this sentence describes: <ref>{}</ref>`
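
The `{}` in the prompt above is filled with the referring expression. The sketch below formats that prompt and converts any boxes in the model's answer to pixel coordinates. The answer format is an assumption that this README does not state. Boxes are taken to appear as `<box>[[x1, y1, x2, y2]]</box>` with coordinates normalized to a 0-1000 range; adjust `scale` or the regex if your outputs differ. The helper names are hypothetical.

```python
import re
from typing import List, Tuple

GROUNDING_TEMPLATE = ("Please provide the bounding box coordinates of the region "
                      "this sentence describes: <ref>{}</ref>")

# One "[x1, y1, x2, y2]" group, e.g. inside "<box>[[x1, y1, x2, y2]]</box>" (ASSUMED format).
NUM = r"(\d+(?:\.\d+)?)"
BOX_RE = re.compile(r"\[\s*" + NUM + r"\s*,\s*" + NUM + r"\s*,\s*" + NUM + r"\s*,\s*" + NUM + r"\s*\]")


def grounding_prompt(phrase: str) -> str:
    """Insert the referring expression into the evaluation prompt."""
    return GROUNDING_TEMPLATE.format(phrase)


def parse_boxes(answer: str, width: int, height: int,
                scale: float = 1000.0) -> List[Tuple[float, float, float, float]]:
    """Convert every normalized box in the answer to pixel coordinates (x1, y1, x2, y2)."""
    boxes = []
    for m in BOX_RE.finditer(answer):
        x1, y1, x2, y2 = (float(v) for v in m.groups())
        boxes.append((x1 * width / scale, y1 * height / scale,
                      x2 * width / scale, y2 * height / scale))
    return boxes


print(parse_boxes("<ref>the man</ref><box>[[100, 200, 500, 800]]</box>", 640, 480))
# [(64.0, 96.0, 320.0, 384.0)]
```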

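Limitations: Although we paid close attention to model safety during training and worked to make the model's outputs comply with ethical and legal requirements, the model's size and the probabilistic nature of generation mean it may still produce unexpected outputs. For example, responses may contain bias, discrimination, or other harmful content. Please do not propagate such content. We are not responsible for any consequences resulting from the dissemination of harmful information.

### Invitation to Evaluate InternVL

We welcome MLLM benchmark developers to evaluate our InternVL1.5 and InternVL2 series models. If you would like your evaluation results added here, please contact me ([wztxy89@163.com](mailto:wztxy89@163.com)).

## Quick Start

We provide example code for running InternVL2-8B with `transformers`.

You are also welcome to try the InternVL2 series models in our [online demo](https://internvl.opengvlab.com/).

> Please use transformers==4.37.2 to ensure the model works correctly.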
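
To catch a mismatched environment before loading the model, a small standard-library check can compare the installed version against the pin above. This is a hypothetical convenience sketch. It only reads package metadata and does not install anything.

```python
from importlib import metadata
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Return the installed version string, or None if the package is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None


def check_pin(package: str = "transformers", expected: str = "4.37.2") -> bool:
    """True only if exactly the pinned version is installed; prints a hint otherwise."""
    found = installed_version(package)
    if found != expected:
        print(f"{package}: expected {expected}, found {found or 'not installed'}; "
              f"try: pip install {package}=={expected}")
        return False
    return True


check_pin()
```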

For the example code, please [click here](#quick-start).

## Fine-tuning

SWIFT from the ModelScope community supports fine-tuning InternVL on image and video data. See [this link](https://github.com/modelscope/swift/blob/main/docs/source_en/Multi-Modal/internvl-best-practice.md) for details.

## Deployment

### LMDeploy

LMDeploy is a toolkit for compressing, deploying, and serving large language models (LLMs), developed by the MMRazor and MMDeploy teams.

```sh
pip install lmdeploy
```

LMDeploy abstracts the complex inference process of multimodal vision-language models (VLMs) into an easy-to-use pipeline, similar to the inference pipeline for large language models (LLMs).

#### A "Hello, world" example

```python
from lmdeploy import pipeline, TurbomindEngineConfig, ChatTemplateConfig
from lmdeploy.vl import load_image

model = 'OpenGVLab/InternVL2-8B'
system_prompt = '我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。'
image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/tests/data/tiger.jpeg')
chat_template_config = ChatTemplateConfig('internvl-internlm2')
chat_template_config.meta_instruction = system_prompt
pipe = pipeline(model, chat_template_config=chat_template_config,
                backend_config=TurbomindEngineConfig(session_len=8192))
response = pipe(('describe this image', image))
print(response.text)
```

If an `ImportError` occurs when running this example, install the required dependencies as prompted.

#### Multi-image inference

When working with multiple images, you can put them all in one list. Multiple images increase the number of input tokens, so the context window usually needs to be enlarged.

```python
from lmdeploy import pipeline, TurbomindEngineConfig, ChatTemplateConfig
from lmdeploy.vl import load_image
from lmdeploy.vl.constants import IMAGE_TOKEN

model = 'OpenGVLab/InternVL2-8B'
system_prompt = '我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。'
chat_template_config = ChatTemplateConfig('internvl-internlm2')
chat_template_config.meta_instruction = system_prompt
pipe = pipeline(model, chat_template_config=chat_template_config,
                backend_config=TurbomindEngineConfig(session_len=8192))

image_urls = [
    'https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/human-pose.jpg',
    'https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/det.jpg',
]

images = [load_image(img_url) for img_url in image_urls]
response = pipe((f'Image-1: {IMAGE_TOKEN}\nImage-2: {IMAGE_TOKEN}\ndescribe these two images', images))
print(response.text)
```

#### Batch prompt inference

Inference with batch prompts is straightforward: just put the prompts in a list.

```python
from lmdeploy import pipeline, TurbomindEngineConfig, ChatTemplateConfig
from lmdeploy.vl import load_image

model = 'OpenGVLab/InternVL2-8B'
system_prompt = '我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。'
chat_template_config = ChatTemplateConfig('internvl-internlm2')
chat_template_config.meta_instruction = system_prompt
pipe = pipeline(model, chat_template_config=chat_template_config,
                backend_config=TurbomindEngineConfig(session_len=8192))

image_urls = [
    "https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/human-pose.jpg",
    "https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/det.jpg",
]
prompts = [('describe this image', load_image(img_url)) for img_url in image_urls]
response = pipe(prompts)
print(response)
```

#### Multi-turn conversation

There are two ways to hold a multi-turn conversation with the pipeline. One is to construct messages in the OpenAI format and use the method introduced above. The other is to use the `pipeline.chat` interface.

```python
from lmdeploy import pipeline, TurbomindEngineConfig, ChatTemplateConfig, GenerationConfig
from lmdeploy.vl import load_image

model = 'OpenGVLab/InternVL2-8B'
system_prompt = '我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。'
chat_template_config = ChatTemplateConfig('internvl-internlm2')
chat_template_config.meta_instruction = system_prompt
pipe = pipeline(model, chat_template_config=chat_template_config,
                backend_config=TurbomindEngineConfig(session_len=8192))

image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/demo/resources/human-pose.jpg')
gen_config = GenerationConfig(top_k=40, top_p=0.8, temperature=0.8)
sess = pipe.chat(('describe this image', image), gen_config=gen_config)
print(sess.response.text)
sess = pipe.chat('What is the woman doing?', session=sess, gen_config=gen_config)
print(sess.response.text)
```

#### API deployment

To deploy InternVL2 as an API, first configure the chat template by creating the following JSON file, `chat_template.json`:

```json
{
    "model_name": "internvl-internlm2",
    "meta_instruction": "我是书生·万象,英文名是InternVL,是由上海人工智能实验室及多家合作单位联合开发的多模态大语言模型。",
    "stop_words": ["<|im_start|>", "<|im_end|>"]
}
```

LMDeploy's `api_server` packages a model as a service with a single command. The RESTful APIs it provides are compatible with OpenAI's interfaces. Below is an example of starting the service:

```shell
lmdeploy serve api_server OpenGVLab/InternVL2-8B --model-name InternVL2-8B --backend turbomind --server-port 23333 --chat-template chat_template.json
```

To use the OpenAI-style API, install the `openai` Python package:

```shell
pip install openai
```

Then use the code below to make the API call:

```python
from openai import OpenAI

client = OpenAI(api_key='YOUR_API_KEY', base_url='http://0.0.0.0:23333/v1')
# Query the served model name (set with --model-name above) instead of hard-coding it
model_name = client.models.list().data[0].id
response = client.chat.completions.create(
    model=model_name,
    messages=[{
        'role': 'user',
        'content': [{
            'type': 'text',
            'text': 'describe this image',
        }, {
            'type': 'image_url',
            'image_url': {
                'url': 'https://modelscope.oss-cn-beijing.aliyuncs.com/resource/tiger.jpeg',
            },
        }],
    }],
    temperature=0.8,
    top_p=0.8)
print(response.choices[0].message.content)
```

### vLLM

TODO

### Ollama

TODO

## License

This project is released under the MIT license, while InternLM is licensed under the Apache-2.0 license.

## Citation

If you find this project useful in your research, please consider citing our papers:

```BibTeX
@article{chen2023internvl,
  title={InternVL: Scaling up Vision Foundation Models and Aligning for Generic Visual-Linguistic Tasks},
  author={Chen, Zhe and Wu, Jiannan and Wang, Wenhai and Su, Weijie and Chen, Guo and Xing, Sen and Zhong, Muyan and Zhang, Qinglong and Zhu, Xizhou and Lu, Lewei and Li, Bin and Luo, Ping and Lu, Tong and Qiao, Yu and Dai, Jifeng},
  journal={arXiv preprint arXiv:2312.14238},
  year={2023}
}
@article{chen2024far,
  title={How Far Are We to GPT-4V? Closing the Gap to Commercial Multimodal Models with Open-Source Suites},
  author={Chen, Zhe and Wang, Weiyun and Tian, Hao and Ye, Shenglong and Gao, Zhangwei and Cui, Erfei and Tong, Wenwen and Hu, Kongzhi and Luo, Jiapeng and Ma, Zheng and others},
  journal={arXiv preprint arXiv:2404.16821},
  year={2024}
}
```

# Vinci-8B

Based on InternVL2-8B
