upload everything

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- LICENSE +24 -0

- MedVersa/pytorch_model.bin +3 -0

- README.md +58 -0

- __pycache__/utils.cpython-39.pyc +0 -0

- demo.py +544 -0

- demo_ex/1de015eb-891f1b02-f90be378-d6af1e86-df3270c2.png +0 -0

- demo_ex/79eee504-b1b60ab8-5e8dd843-b6ed87aa-670747b1.png +0 -0

- demo_ex/Case_00840_0000.nii.gz +3 -0

- demo_ex/Case_01013_0000.nii.gz +3 -0

- demo_ex/ISIC_0032258.jpg +0 -0

- demo_ex/ISIC_0033730.jpg +0 -0

- demo_ex/bc25fa99-0d3766cc-7704edb7-5c7a4a63-dc65480a.png +0 -0

- demo_ex/c536f749-2326f755-6a65f28f-469affd2-26392ce9.png +0 -0

- demo_ex/f39b05b1-f544e51a-cfe317ca-b66a4aa6-1c1dc22d.png +0 -0

- demo_ex/f3fefc29-68544ac8-284b820d-858b5470-f579b982.png +0 -0

- environment.yml +479 -0

- inference.py +107 -0

- medomni/__init__.py +31 -0

- medomni/__pycache__/__init__.cpython-311.pyc +0 -0

- medomni/__pycache__/__init__.cpython-39.pyc +0 -0

- medomni/common/__init__.py +0 -0

- medomni/common/__pycache__/__init__.cpython-39.pyc +0 -0

- medomni/common/__pycache__/config.cpython-39.pyc +0 -0

- medomni/common/__pycache__/dist_utils.cpython-39.pyc +0 -0

- medomni/common/__pycache__/logger.cpython-39.pyc +0 -0

- medomni/common/__pycache__/optims.cpython-39.pyc +0 -0

- medomni/common/__pycache__/registry.cpython-39.pyc +0 -0

- medomni/common/__pycache__/utils.cpython-39.pyc +0 -0

- medomni/common/config.py +468 -0

- medomni/common/dist_utils.py +137 -0

- medomni/common/gradcam.py +24 -0

- medomni/common/logger.py +200 -0

- medomni/common/optims.py +119 -0

- medomni/common/registry.py +327 -0

- medomni/common/utils.py +424 -0

- medomni/configs/datasets/medinterp/align.yaml +5 -0

- medomni/configs/default.yaml +5 -0

- medomni/configs/models/medomni.yaml +12 -0

- medomni/conversation/__init__.py +0 -0

- medomni/conversation/__pycache__/__init__.cpython-39.pyc +0 -0

- medomni/conversation/__pycache__/conversation.cpython-39.pyc +0 -0

- medomni/conversation/conversation.py +222 -0

- medomni/datasets/__init__.py +0 -0

- medomni/datasets/__pycache__/__init__.cpython-39.pyc +0 -0

- medomni/datasets/__pycache__/data_utils.cpython-39.pyc +0 -0

- medomni/datasets/builders/__init__.py +71 -0

- medomni/datasets/builders/__pycache__/__init__.cpython-39.pyc +0 -0

- medomni/datasets/builders/__pycache__/base_dataset_builder.cpython-39.pyc +0 -0

- medomni/datasets/builders/__pycache__/image_text_pair_builder.cpython-39.pyc +0 -0

- medomni/datasets/builders/base_dataset_builder.py +234 -0

LICENSE

ADDED

|

@@ -0,0 +1,24 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

Copyright – President and Fellows of Harvard College, 2024. All Rights Reserved.

|

| 2 |

+

|

| 3 |

+

Redistribution and use in source and binary forms, with or without

|

| 4 |

+

modification, are permitted provided that the following conditions are met:

|

| 5 |

+

|

| 6 |

+

Redistributions of source code must retain the above copyright notice, this

|

| 7 |

+

list of conditions and the following disclaimer. Redistributions in binary

|

| 8 |

+

form must reproduce the above copyrightnotice, this list of conditions and the

|

| 9 |

+

following disclaimer in the documentation and/or other materials provided with

|

| 10 |

+

the distribution. Neither the name "Harvard" nor the names of its contributors

|

| 11 |

+

may be used to endorse or promote products derived from this software without

|

| 12 |

+

specific prior written permission.

|

| 13 |

+

|

| 14 |

+

THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS "AS IS"

|

| 15 |

+

AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOTLIMITED TO, THE

|

| 16 |

+

IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE

|

| 17 |

+

ARE DISCLAIMED. IN NO EVENT SHALLTHECOPYRIGHT HOLDER OR CONTRIBUTORS BE

|

| 18 |

+

LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL, SPECIAL, EXEMPLARY, OR

|

| 19 |

+

CONSEQUENTIAL DAMAGES(INCLUDING, BUT NOT LIMITED TO, PROCUREMENT OF

|

| 20 |

+

SUBSTITUTE GOODS OR SERVICES; LOSS OF USE, DATA, OR PROFITS; OR BUSINESS

|

| 21 |

+

INTERRUPTION)HOWEVER CAUSED AND ON ANY THEORY OF LIABILITY, WHETHER IN

|

| 22 |

+

CONTRACT, STRICT LIABILITY, OR TORT (INCLUDING NEGLIGENCE OR

|

| 23 |

+

OTHERWISE)ARISING IN ANY WAY OUT OF THE USE OF THIS SOFTWARE, EVEN IF ADVISED

|

| 24 |

+

OF THE POSSIBILITY OF SUCH DAMAGE.

|

MedVersa/pytorch_model.bin

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:b3ce596897168d79649e6d6df128a1b409a0cc878092f00667873be6f4b8c9d3

|

| 3 |

+

size 13993804625

|

README.md

ADDED

|

@@ -0,0 +1,58 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

---

|

| 2 |

+

title: hyzhouMedVersa

|

| 3 |

+

app_file: demo_inter.py

|

| 4 |

+

sdk: gradio

|

| 5 |

+

sdk_version: 4.24.0

|

| 6 |

+

---

|

| 7 |

+

# MedVersa: An orchestrated medical AI system

|

| 8 |

+

MedVersa is a compound medical AI system that can coordinate multimodal inputs, orchestrate models and tools for varying tasks, and generate multimodal outputs.

|

| 9 |

+

|

| 10 |

+

## Environment

|

| 11 |

+

MedVersa is written in [Python](https://www.python.org/). It is recommended to configure/manage your python environment using conda. To do this, you need to install the [miniconda](https://docs.anaconda.com/free/miniconda/index.html) or [anaconda](https://www.anaconda.com/) first.

|

| 12 |

+

|

| 13 |

+

After installing conda, you need to set up a new conda environment for MedVersa using the provided `environment.yml`:

|

| 14 |

+

``` shell

|

| 15 |

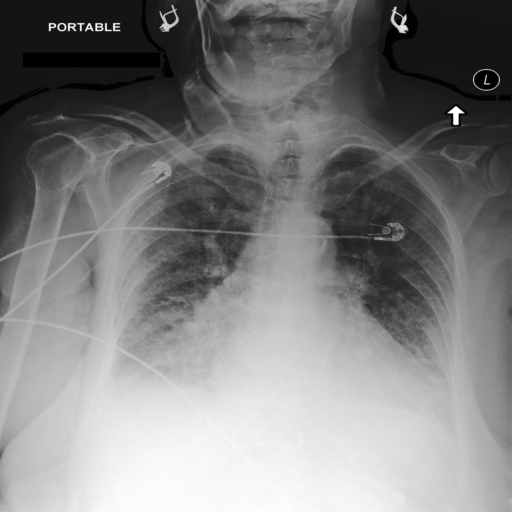

+

conda env create -f environment.yml

|

| 16 |

+

conda activate medversa

|

| 17 |

+

```

|

| 18 |

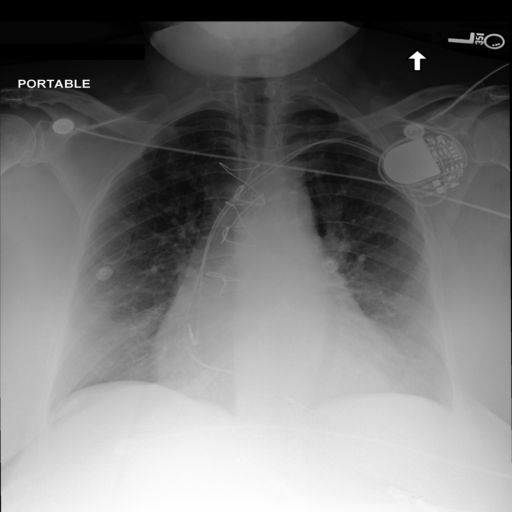

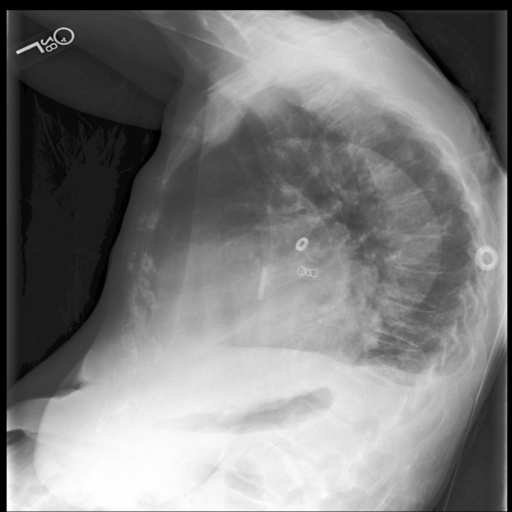

+

|

| 19 |

+

## Inference

|

| 20 |

+

``` python

|

| 21 |

+

from utils import *

|

| 22 |

+

|

| 23 |

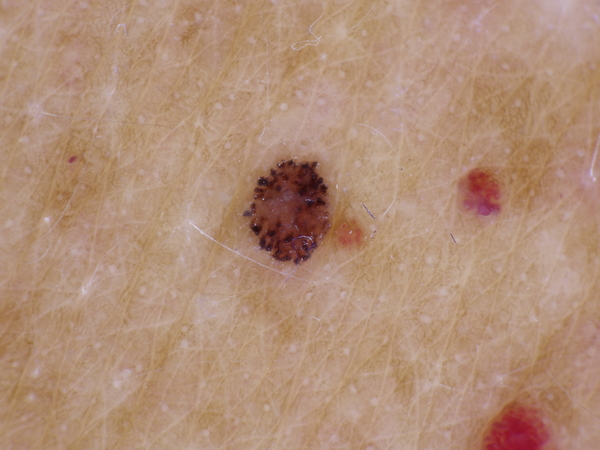

+

# --- Launch Model ---

|

| 24 |

+

device = 'cuda:0'

|

| 25 |

+

model_cls = registry.get_model_class('medomni') # medomni is the architecture name :)

|

| 26 |

+

model = model_cls.from_pretrained('hyzhou/MedVersa').to(device)

|

| 27 |

+

model.eval()

|

| 28 |

+

|

| 29 |

+

# --- Define examples ---

|

| 30 |

+

examples = [

|

| 31 |

+

[

|

| 32 |

+

["./demo_ex/c536f749-2326f755-6a65f28f-469affd2-26392ce9.png"],

|

| 33 |

+

"Age:30-40.\nGender:F.\nIndication: ___-year-old female with end-stage renal disease not on dialysis presents with dyspnea. PICC line placement.\nComparison: None.",

|

| 34 |

+

"How would you characterize the findings from <img0>?",

|

| 35 |

+

"cxr",

|

| 36 |

+

"report generation",

|

| 37 |

+

],

|

| 38 |

+

]

|

| 39 |

+

# --- Define hyperparams ---

|

| 40 |

+

num_beams = 1

|

| 41 |

+

do_sample = True

|

| 42 |

+

min_length = 1

|

| 43 |

+

top_p = 0.9

|

| 44 |

+

repetition_penalty = 1

|

| 45 |

+

length_penalty = 1

|

| 46 |

+

temperature = 0.1

|

| 47 |

+

|

| 48 |

+

# --- Generate a report for an chest X-ray image ---

|

| 49 |

+

index = 0

|

| 50 |

+

demo_ex = examples[index]

|

| 51 |

+

images, context, prompt, modality, task = demo_ex[0], demo_ex[1], demo_ex[2], demo_ex[3], demo_ex[4]

|

| 52 |

+

seg_mask_2d, seg_mask_3d, output_text = generate_predictions(model, images, context, prompt, modality, task, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature)

|

| 53 |

+

print(output_text)

|

| 54 |

+

```

|

| 55 |

+

For more details and examples, please refer to `inference.py`.

|

| 56 |

+

|

| 57 |

+

## Demo

|

| 58 |

+

`CUDA_VISIBLE_DEVICES=0 python demo.py --cfg-path medversa.yaml`

|

__pycache__/utils.cpython-39.pyc

ADDED

|

Binary file (11.2 kB). View file

|

|

|

demo.py

ADDED

|

@@ -0,0 +1,544 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

import argparse

|

| 3 |

+

import torch

|

| 4 |

+

import torch.nn.functional as F

|

| 5 |

+

import torchvision.transforms.functional as TF

|

| 6 |

+

from torchvision import transforms

|

| 7 |

+

from PIL import Image

|

| 8 |

+

import skimage.morphology, skimage.io

|

| 9 |

+

import cv2

|

| 10 |

+

import numpy as np

|

| 11 |

+

import random

|

| 12 |

+

from transformers import StoppingCriteria, StoppingCriteriaList

|

| 13 |

+

from copy import deepcopy

|

| 14 |

+

from medomni.common.config import Config

|

| 15 |

+

from medomni.common.dist_utils import get_rank

|

| 16 |

+

from medomni.common.registry import registry

|

| 17 |

+

import torchio as tio

|

| 18 |

+

import nibabel as nib

|

| 19 |

+

from scipy import ndimage, misc

|

| 20 |

+

import time

|

| 21 |

+

import ipdb

|

| 22 |

+

|

| 23 |

+

# Function to parse command line arguments

|

| 24 |

+

def parse_args():

|

| 25 |

+

parser = argparse.ArgumentParser(description="Demo")

|

| 26 |

+

parser.add_argument("--cfg-path", required=True, help="path to configuration file.")

|

| 27 |

+

parser.add_argument(

|

| 28 |

+

"--options",

|

| 29 |

+

nargs="+",

|

| 30 |

+

help="override some settings in the used config, the key-value pair in xxx=yyy format will be merged into config file (deprecate), change to --cfg-options instead.",

|

| 31 |

+

)

|

| 32 |

+

args = parser.parse_args()

|

| 33 |

+

return args

|

| 34 |

+

|

| 35 |

+

device = 'cuda:0'

|

| 36 |

+

# Launch model

|

| 37 |

+

args = parse_args()

|

| 38 |

+

cfg = Config(args)

|

| 39 |

+

|

| 40 |

+

model_config = cfg.model_cfg

|

| 41 |

+

model_cls = registry.get_model_class(model_config.arch)

|

| 42 |

+

model = model_cls.from_pretrained('hyzhou/MedVersa').to(device)

|

| 43 |

+

model.eval()

|

| 44 |

+

global global_images

|

| 45 |

+

global_images = None

|

| 46 |

+

|

| 47 |

+

def seg_2d_process(image_path, pred_mask, img_size=224):

|

| 48 |

+

image = cv2.imread(image_path[0])

|

| 49 |

+

if pred_mask.sum() != 0:

|

| 50 |

+

labels = skimage.morphology.label(pred_mask)

|

| 51 |

+

labelCount = np.bincount(labels.ravel())

|

| 52 |

+

largest_label = np.argmax(labelCount[1:]) + 1

|

| 53 |

+

pred_mask[labels != largest_label] = 0

|

| 54 |

+

pred_mask[labels == largest_label] = 255

|

| 55 |

+

pred_mask = pred_mask.astype(np.uint8)

|

| 56 |

+

contours, _ = cv2.findContours(pred_mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

|

| 57 |

+

if contours:

|

| 58 |

+

contours = np.vstack(contours)

|

| 59 |

+

binary_array = np.zeros((img_size, img_size))

|

| 60 |

+

binary_array = cv2.drawContours(binary_array, contours, -1, 255, thickness=cv2.FILLED)

|

| 61 |

+

binary_array = cv2.resize(binary_array, (image.shape[1], image.shape[0]), interpolation = cv2.INTER_NEAREST) / 255

|

| 62 |

+

image = [Image.fromarray(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))]

|

| 63 |

+

mask = [binary_array]

|

| 64 |

+

else:

|

| 65 |

+

image = [Image.fromarray(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))]

|

| 66 |

+

mask = [np.zeros((image.shape[1], image.shape[0]))]

|

| 67 |

+

else:

|

| 68 |

+

mask = [np.zeros((image.shape[1], image.shape[0]))]

|

| 69 |

+

image = [Image.fromarray(cv2.cvtColor(image, cv2.COLOR_BGR2RGB))]

|

| 70 |

+

# output_image = cv2.drawContours(binary_array, contours, -1, (110, 0, 255), 2)

|

| 71 |

+

# output_image_pil = Image.fromarray(cv2.cvtColor(output_image, cv2.COLOR_BGR2RGB))

|

| 72 |

+

return image, mask

|

| 73 |

+

|

| 74 |

+

def seg_3d_process(image_path, seg_mask):

|

| 75 |

+

img = nib.load(image_path[0]).get_fdata()

|

| 76 |

+

image = window_scan(img).transpose(2,0,1).astype(np.uint8)

|

| 77 |

+

if seg_mask.sum() != 0:

|

| 78 |

+

seg_mask = resize_back_volume_abd(seg_mask, image.shape).astype(np.uint8)

|

| 79 |

+

image_slices = []

|

| 80 |

+

contour_slices = []

|

| 81 |

+

for i in range(seg_mask.shape[0]):

|

| 82 |

+

slice_img = np.fliplr(np.rot90(image[i]))

|

| 83 |

+

slice_mask = np.fliplr(np.rot90(seg_mask[i]))

|

| 84 |

+

contours, _ = cv2.findContours(slice_mask, cv2.RETR_TREE, cv2.CHAIN_APPROX_NONE)

|

| 85 |

+

image_slices.append(Image.fromarray(slice_img))

|

| 86 |

+

if contours:

|

| 87 |

+

binary_array = np.zeros(seg_mask.shape[1:])

|

| 88 |

+

binary_array = cv2.drawContours(binary_array, contours, -1, 255, thickness=cv2.FILLED) / 255

|

| 89 |

+

binary_array = cv2.resize(binary_array, slice_img.shape, interpolation = cv2.INTER_NEAREST)

|

| 90 |

+

contour_slices.append(binary_array)

|

| 91 |

+

else:

|

| 92 |

+

contour_slices.append(np.zeros_like(slice_img))

|

| 93 |

+

else:

|

| 94 |

+

image_slices = []

|

| 95 |

+

contour_slices = []

|

| 96 |

+

slice_img = np.fliplr(np.rot90(image[i]))

|

| 97 |

+

image_slices.append(Image.fromarray(slice_img))

|

| 98 |

+

contour_slices.append(np.zeros_like(slice_img))

|

| 99 |

+

|

| 100 |

+

return image_slices, contour_slices

|

| 101 |

+

|

| 102 |

+

def det_2d_process(image_path, box):

|

| 103 |

+

image_slices = []

|

| 104 |

+

image = cv2.imread(image_path[0])

|

| 105 |

+

if box is not None:

|

| 106 |

+

hi,wd,_ = image.shape

|

| 107 |

+

color = tuple(np.random.random(size=3) * 256)

|

| 108 |

+

x1, y1, x2, y2 = int(box[0]*wd), int(box[1]*hi), int(box[2]*wd), int(box[3]*hi)

|

| 109 |

+

image = cv2.rectangle(image, (x1, y1), (x2, y2), color, 10)

|

| 110 |

+

image_slices.append(Image.fromarray(image))

|

| 111 |

+

return image_slices

|

| 112 |

+

|

| 113 |

+

def window_scan(scan, window_center=50, window_width=400):

|

| 114 |

+

"""

|

| 115 |

+

Apply windowing to a scan.

|

| 116 |

+

|

| 117 |

+

Parameters:

|

| 118 |

+

scan (numpy.ndarray): 3D numpy array of the CT scan

|

| 119 |

+

window_center (int): The center of the window

|

| 120 |

+

window_width (int): The width of the window

|

| 121 |

+

|

| 122 |

+

Returns:

|

| 123 |

+

numpy.ndarray: Windowed CT scan

|

| 124 |

+

"""

|

| 125 |

+

lower_bound = window_center - (window_width // 2)

|

| 126 |

+

upper_bound = window_center + (window_width // 2)

|

| 127 |

+

|

| 128 |

+

windowed_scan = np.clip(scan, lower_bound, upper_bound)

|

| 129 |

+

windowed_scan = (windowed_scan - lower_bound) / (upper_bound - lower_bound)

|

| 130 |

+

windowed_scan = (windowed_scan * 255).astype(np.uint8)

|

| 131 |

+

|

| 132 |

+

return windowed_scan

|

| 133 |

+

|

| 134 |

+

def task_seg_2d(model, preds, hidden_states, image):

|

| 135 |

+

token_mask = preds == model.seg_token_idx_2d

|

| 136 |

+

indices = torch.where(token_mask == True)[0].cpu().numpy()

|

| 137 |

+

feats = model.model_seg_2d.encoder(image.unsqueeze(0)[:, 0])

|

| 138 |

+

last_feats = feats[-1]

|

| 139 |

+

target_states = [hidden_states[ind][-1] for ind in indices]

|

| 140 |

+

if target_states:

|

| 141 |

+

target_states = torch.cat(target_states).squeeze()

|

| 142 |

+

seg_states = model.text2seg_2d(target_states).unsqueeze(0)

|

| 143 |

+

last_feats = last_feats + seg_states.unsqueeze(-1).unsqueeze(-1)

|

| 144 |

+

last_feats = model.text2seg_2d_gn(last_feats)

|

| 145 |

+

feats[-1] = last_feats

|

| 146 |

+

seg_feats = model.model_seg_2d.decoder(*feats)

|

| 147 |

+

seg_preds = model.model_seg_2d.segmentation_head(seg_feats)

|

| 148 |

+

seg_probs = F.sigmoid(seg_preds)

|

| 149 |

+

seg_mask = seg_probs.cpu().squeeze().numpy() >= 0.5

|

| 150 |

+

return seg_mask

|

| 151 |

+

else:

|

| 152 |

+

return None

|

| 153 |

+

|

| 154 |

+

def task_seg_3d(model, preds, hidden_states, img_embeds_list):

|

| 155 |

+

new_img_embeds_list = deepcopy(img_embeds_list)

|

| 156 |

+

token_mask = preds == model.seg_token_idx_3d

|

| 157 |

+

indices = torch.where(token_mask == True)[0].cpu().numpy()

|

| 158 |

+

target_states = [hidden_states[ind][-1] for ind in indices]

|

| 159 |

+

if target_states:

|

| 160 |

+

target_states = torch.cat(target_states).squeeze().unsqueeze(0)

|

| 161 |

+

seg_states = model.text2seg_3d(target_states)

|

| 162 |

+

last_feats = new_img_embeds_list[-1]

|

| 163 |

+

last_feats = last_feats + seg_states.unsqueeze(-1).unsqueeze(-1).unsqueeze(-1)

|

| 164 |

+

last_feats = model.text2seg_3d_gn(last_feats)

|

| 165 |

+

new_img_embeds_list[-1] = last_feats

|

| 166 |

+

seg_preds = model.visual_encoder_3d(encoder_only=False, x_=new_img_embeds_list)

|

| 167 |

+

seg_probs = F.sigmoid(seg_preds)

|

| 168 |

+

seg_mask = seg_probs.cpu().squeeze().numpy() >= 0.5

|

| 169 |

+

return seg_mask

|

| 170 |

+

|

| 171 |

+

def task_det_2d(model, preds, hidden_states):

|

| 172 |

+

token_mask = preds == model.det_token_idx

|

| 173 |

+

indices = torch.where(token_mask == True)[0].cpu().numpy()

|

| 174 |

+

target_states = [hidden_states[ind][-1] for ind in indices]

|

| 175 |

+

if target_states:

|

| 176 |

+

target_states = torch.cat(target_states).squeeze()

|

| 177 |

+

det_states = model.text_det(target_states).detach().cpu()

|

| 178 |

+

return det_states.numpy()

|

| 179 |

+

return torch.zeros_like(indices)

|

| 180 |

+

|

| 181 |

+

class StoppingCriteriaSub(StoppingCriteria):

|

| 182 |

+

def __init__(self, stops=[]):

|

| 183 |

+

super().__init__()

|

| 184 |

+

self.stops = stops

|

| 185 |

+

|

| 186 |

+

def __call__(self, input_ids: torch.LongTensor, scores: torch.FloatTensor):

|

| 187 |

+

for stop in self.stops:

|

| 188 |

+

if torch.all((stop == input_ids[0][-len(stop):])).item():

|

| 189 |

+

return True

|

| 190 |

+

return False

|

| 191 |

+

|

| 192 |

+

def resize_back_volume_abd(img, target_size):

|

| 193 |

+

desired_depth = target_size[0]

|

| 194 |

+

desired_width = target_size[1]

|

| 195 |

+

desired_height = target_size[2]

|

| 196 |

+

|

| 197 |

+

current_depth = img.shape[0] # [d, w, h]

|

| 198 |

+

current_width = img.shape[1]

|

| 199 |

+

current_height = img.shape[2]

|

| 200 |

+

|

| 201 |

+

depth = current_depth / desired_depth

|

| 202 |

+

width = current_width / desired_width

|

| 203 |

+

height = current_height / desired_height

|

| 204 |

+

|

| 205 |

+

depth_factor = 1 / depth

|

| 206 |

+

width_factor = 1 / width

|

| 207 |

+

height_factor = 1 / height

|

| 208 |

+

|

| 209 |

+

img = ndimage.zoom(img, (depth_factor, width_factor, height_factor), order=0)

|

| 210 |

+

return img

|

| 211 |

+

|

| 212 |

+

def resize_volume_abd(img):

|

| 213 |

+

img[img<=-200] = -200

|

| 214 |

+

img[img>=300] = 300

|

| 215 |

+

|

| 216 |

+

desired_depth = 64

|

| 217 |

+

desired_width = 192

|

| 218 |

+

desired_height = 192

|

| 219 |

+

|

| 220 |

+

current_width = img.shape[0] # [w, h, d]

|

| 221 |

+

current_height = img.shape[1]

|

| 222 |

+

current_depth = img.shape[2]

|

| 223 |

+

|

| 224 |

+

depth = current_depth / desired_depth

|

| 225 |

+

width = current_width / desired_width

|

| 226 |

+

height = current_height / desired_height

|

| 227 |

+

|

| 228 |

+

depth_factor = 1 / depth

|

| 229 |

+

width_factor = 1 / width

|

| 230 |

+

height_factor = 1 / height

|

| 231 |

+

|

| 232 |

+

img = ndimage.zoom(img, (width_factor, height_factor, depth_factor), order=0)

|

| 233 |

+

return img

|

| 234 |

+

|

| 235 |

+

def load_and_preprocess_image(image):

|

| 236 |

+

mean = (0.48145466, 0.4578275, 0.40821073)

|

| 237 |

+

std = (0.26862954, 0.26130258, 0.27577711)

|

| 238 |

+

transform = transforms.Compose([

|

| 239 |

+

transforms.Resize([224, 224]),

|

| 240 |

+

transforms.ToTensor(),

|

| 241 |

+

transforms.Normalize(mean, std)

|

| 242 |

+

])

|

| 243 |

+

image = transform(image).type(torch.bfloat16).cuda().unsqueeze(0)

|

| 244 |

+

return image

|

| 245 |

+

|

| 246 |

+

def load_and_preprocess_volume(image):

|

| 247 |

+

img = nib.load(image).get_fdata()

|

| 248 |

+

image = torch.from_numpy(resize_volume_abd(img)).permute(2,0,1)

|

| 249 |

+

transform = tio.Compose([

|

| 250 |

+

tio.ZNormalization(masking_method=tio.ZNormalization.mean),

|

| 251 |

+

])

|

| 252 |

+

image = transform(image.unsqueeze(0)).type(torch.bfloat16).cuda()

|

| 253 |

+

return image

|

| 254 |

+

|

| 255 |

+

def read_image(image_path):

|

| 256 |

+

if image_path.endswith(('.jpg', '.jpeg', '.png')):

|

| 257 |

+

return load_and_preprocess_image(Image.open(image_path).convert('RGB'))

|

| 258 |

+

elif image_path.endswith('.nii.gz'):

|

| 259 |

+

return load_and_preprocess_volume(image_path)

|

| 260 |

+

else:

|

| 261 |

+

raise ValueError("Unsupported file format")

|

| 262 |

+

|

| 263 |

+

def generate(image_path, image, context, modal, num_imgs, prompt, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature):

|

| 264 |

+

if (len(context) != 0 and ('report' in prompt or 'finding' in prompt or 'impression' in prompt)) or (len(context) != 0 and modal=='derm' and ('diagnosis' in prompt or 'issue' in prompt or 'problem' in prompt)):

|

| 265 |

+

prompt = '<context>' + context + '</context>' + prompt

|

| 266 |

+

if modal == 'ct' and 'segment' in prompt.lower():

|

| 267 |

+

if 'liver' in prompt:

|

| 268 |

+

prompt = 'Segment the liver.'

|

| 269 |

+

if 'spleen' in prompt:

|

| 270 |

+

prompt = 'Segment the spleen.'

|

| 271 |

+

if 'kidney' in prompt:

|

| 272 |

+

prompt = 'Segment the kidney.'

|

| 273 |

+

if 'pancrea' in prompt:

|

| 274 |

+

prompt = 'Segment the pancreas.'

|

| 275 |

+

img_embeds, atts_img, img_embeds_list = model.encode_img(image.unsqueeze(0), [modal])

|

| 276 |

+

placeholder = ['<ImageHere>'] * 9

|

| 277 |

+

prefix = '###Human:' + ''.join([f'<img{i}>' + ''.join(placeholder) + f'</img{i}>' for i in range(num_imgs)])

|

| 278 |

+

img_embeds, atts_img = model.prompt_wrap(img_embeds, atts_img, [prefix], [num_imgs])

|

| 279 |

+

prompt += '###Assistant:'

|

| 280 |

+

prompt_tokens = model.llama_tokenizer(prompt, return_tensors="pt", add_special_tokens=False).to(image.device)

|

| 281 |

+

new_img_embeds, new_atts_img = model.prompt_concat(img_embeds, atts_img, prompt_tokens)

|

| 282 |

+

|

| 283 |

+

outputs = model.llama_model.generate(

|

| 284 |

+

inputs_embeds=new_img_embeds,

|

| 285 |

+

max_new_tokens=450,

|

| 286 |

+

stopping_criteria=StoppingCriteriaList([StoppingCriteriaSub(stops=[

|

| 287 |

+

torch.tensor([835]).type(torch.bfloat16).to(image.device),

|

| 288 |

+

torch.tensor([2277, 29937]).type(torch.bfloat16).to(image.device)

|

| 289 |

+

])]),

|

| 290 |

+

num_beams=num_beams,

|

| 291 |

+

do_sample=do_sample,

|

| 292 |

+

min_length=min_length,

|

| 293 |

+

top_p=top_p,

|

| 294 |

+

repetition_penalty=repetition_penalty,

|

| 295 |

+

length_penalty=length_penalty,

|

| 296 |

+

temperature=temperature,

|

| 297 |

+

output_hidden_states=True,

|

| 298 |

+

return_dict_in_generate=True,

|

| 299 |

+

)

|

| 300 |

+

|

| 301 |

+

hidden_states = outputs.hidden_states

|

| 302 |

+

preds = outputs.sequences[0]

|

| 303 |

+

output_image = None

|

| 304 |

+

seg_mask_2d = None

|

| 305 |

+

seg_mask_3d = None

|

| 306 |

+

if sum(preds == model.seg_token_idx_2d):

|

| 307 |

+

seg_mask = task_seg_2d(model, preds, hidden_states, image)

|

| 308 |

+

output_image, seg_mask_2d = seg_2d_process(image_path, seg_mask)

|

| 309 |

+

if sum(preds == model.seg_token_idx_3d):

|

| 310 |

+

seg_mask = task_seg_3d(model, preds, hidden_states, img_embeds_list)

|

| 311 |

+

output_image, seg_mask_3d = seg_3d_process(image_path, seg_mask)

|

| 312 |

+

if sum(preds == model.det_token_idx):

|

| 313 |

+

det_box = task_det_2d(model, preds, hidden_states)

|

| 314 |

+

output_image = det_2d_process(image_path, det_box)

|

| 315 |

+

|

| 316 |

+

if preds[0] == 0: # Remove unknown token <unk> at the beginning

|

| 317 |

+

preds = preds[1:]

|

| 318 |

+

if preds[0] == 1: # Remove start token <s> at the beginning

|

| 319 |

+

preds = preds[1:]

|

| 320 |

+

|

| 321 |

+

output_text = model.llama_tokenizer.decode(preds, add_special_tokens=False)

|

| 322 |

+

output_text = output_text.split('###')[0].split('Assistant:')[-1].strip()

|

| 323 |

+

|

| 324 |

+

if 'mel' in output_text and modal == 'derm':

|

| 325 |

+

output_text = 'The main diagnosis is melanoma.'

|

| 326 |

+

return output_image, seg_mask_2d, seg_mask_3d, output_text

|

| 327 |

+

|

| 328 |

+

def generate_predictions(images, context, prompt, modality, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature):

|

| 329 |

+

num_imgs = len(images)

|

| 330 |

+

modal = modality.lower()

|

| 331 |

+

image_tensors = [read_image(img) for img in images]

|

| 332 |

+

if modality == 'ct':

|

| 333 |

+

time.sleep(2)

|

| 334 |

+

else:

|

| 335 |

+

time.sleep(1)

|

| 336 |

+

image_tensor = torch.cat(image_tensors)

|

| 337 |

+

|

| 338 |

+

with torch.autocast("cuda"):

|

| 339 |

+

with torch.no_grad():

|

| 340 |

+

generated_image, seg_mask_2d, seg_mask_3d, output_text = generate(images, image_tensor, context, modal, num_imgs, prompt, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature)

|

| 341 |

+

|

| 342 |

+

return generated_image, seg_mask_2d, seg_mask_3d, output_text

|

| 343 |

+

|

| 344 |

+

my_dict = {}

|

| 345 |

+

def gradio_interface(chatbot, images, context, prompt, modality, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature):

|

| 346 |

+

global global_images

|

| 347 |

+

if not images:

|

| 348 |

+

image = np.zeros((224, 224, 3), dtype=np.uint8)

|

| 349 |

+

blank_image = Image.fromarray(image)

|

| 350 |

+

snapshot = (blank_image, [])

|

| 351 |

+

global_images = 'none'

|

| 352 |

+

return [(prompt, "At least one image is required to proceed.")], snapshot, gr.update(maximum=0)

|

| 353 |

+

if not prompt or not modality:

|

| 354 |

+

image = np.zeros((224, 224, 3), dtype=np.uint8)

|

| 355 |

+

blank_image = Image.fromarray(image)

|

| 356 |

+

snapshot = (blank_image, [])

|

| 357 |

+

global_images = 'none'

|

| 358 |

+

return [(prompt, "Please provide prompt and modality to proceed.")], snapshot, gr.update(maximum=0)

|

| 359 |

+

|

| 360 |

+

generated_images, seg_mask_2d, seg_mask_3d, output_text = generate_predictions(images, context, prompt, modality, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature)

|

| 361 |

+

output_images = []

|

| 362 |

+

input_images = [np.asarray(Image.open(img.name).convert('RGB')).astype(np.uint8) if img.name.endswith(('.jpg', '.jpeg', '.png')) else f"{img.name} (3D Volume)" for img in images]

|

| 363 |

+

if generated_images is not None:

|

| 364 |

+

for generated_image in generated_images:

|

| 365 |

+

output_images.append(np.asarray(generated_image).astype(np.uint8))

|

| 366 |

+

snapshot = (output_images[0], [])

|

| 367 |

+

if seg_mask_2d is not None:

|

| 368 |

+

snapshot = (output_images[0], [(seg_mask_2d[0], "Mask")])

|

| 369 |

+

if seg_mask_3d is not None:

|

| 370 |

+

snapshot = (output_images[0], [(seg_mask_3d[0], "Mask")])

|

| 371 |

+

else:

|

| 372 |

+

output_images = input_images.copy()

|

| 373 |

+

snapshot = (output_images[0], [])

|

| 374 |

+

|

| 375 |

+

my_dict['image'] = output_images

|

| 376 |

+

my_dict['mask'] = None

|

| 377 |

+

if seg_mask_2d is not None:

|

| 378 |

+

my_dict['mask'] = seg_mask_2d

|

| 379 |

+

if seg_mask_3d is not None:

|

| 380 |

+

my_dict['mask'] = seg_mask_3d

|

| 381 |

+

|

| 382 |

+

if global_images != images and (global_images is not None):

|

| 383 |

+

chatbot = []

|

| 384 |

+

chatbot.append((prompt, output_text))

|

| 385 |

+

else:

|

| 386 |

+

chatbot.append((prompt, output_text))

|

| 387 |

+

global_images = images

|

| 388 |

+

|

| 389 |

+

return chatbot, snapshot, gr.update(maximum=len(output_images)-1)

|

| 390 |

+

|

| 391 |

+

# my_dict = {}

|

| 392 |

+

# def gradio_interface(images, task, context, prompt, modality, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature):

|

| 393 |

+

# if not images:

|

| 394 |

+

# return None, "Error: At least one image is required to proceed."

|

| 395 |

+

# if not prompt or not task or not modality:

|

| 396 |

+

# return None, "Error: Please provide prompt, select task and modality to proceed."

|

| 397 |

+

|

| 398 |

+

# generated_images, seg_mask_2d, seg_mask_3d, output_text = generate_predictions(images, task, context, prompt, modality, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature)

|

| 399 |

+

# output_images = []

|

| 400 |

+

|

| 401 |

+

# input_images = [np.asarray(Image.open(img.name).convert('RGB')).astype(np.uint8) if img.name.endswith(('.jpg', '.jpeg', '.png')) else f"{img.name} (3D Volume)" for img in images]

|

| 402 |

+

# if generated_images is not None:

|

| 403 |

+

# for generated_image in generated_images:

|

| 404 |

+

# output_images.append(np.asarray(generated_image).astype(np.uint8))

|

| 405 |

+

# snapshot = (output_images[0], [])

|

| 406 |

+

# if seg_mask_2d is not None:

|

| 407 |

+

# snapshot = (output_images[0], [(seg_mask_2d[0], "Mask")])

|

| 408 |

+

# if seg_mask_3d is not None:

|

| 409 |

+

# snapshot = (output_images[0], [(seg_mask_3d[0], "Mask")])

|

| 410 |

+

# else:

|

| 411 |

+

# output_images = input_images.copy()

|

| 412 |

+

# snapshot = (output_images[0], [])

|

| 413 |

+

|

| 414 |

+

# my_dict['image'] = output_images

|

| 415 |

+

# my_dict['mask'] = None

|

| 416 |

+

# if seg_mask_2d is not None:

|

| 417 |

+

# my_dict['mask'] = seg_mask_2d

|

| 418 |

+

# if seg_mask_3d is not None:

|

| 419 |

+

# my_dict['mask'] = seg_mask_3d

|

| 420 |

+

|

| 421 |

+

# return output_text, snapshot, gr.update(maximum=len(output_images)-1)

|

| 422 |

+

|

| 423 |

+

def render(x):

|

| 424 |

+

if x > len(my_dict['image'])-1:

|

| 425 |

+

x = len(my_dict['image'])-1

|

| 426 |

+

if x < 0:

|

| 427 |

+

x = 0

|

| 428 |

+

image = my_dict['image'][x]

|

| 429 |

+

if my_dict['mask'] is None:

|

| 430 |

+

return (image,[])

|

| 431 |

+

else:

|

| 432 |

+

mask = my_dict['mask'][x]

|

| 433 |

+

value = (image,[(mask, "Mask")])

|

| 434 |

+

return value

|

| 435 |

+

|

| 436 |

+

def update_context_visibility(task):

|

| 437 |

+

if task == "report generation" or task == 'classification':

|

| 438 |

+

return gr.update(visible=True)

|

| 439 |

+

else:

|

| 440 |

+

return gr.update(visible=False)

|

| 441 |

+

|

| 442 |

+

def reset_chatbot():

|

| 443 |

+

return []

|

| 444 |

+

|

| 445 |

+

with gr.Blocks(theme=gr.themes.Soft()) as demo:

|

| 446 |

+

# with gr.Row():

|

| 447 |

+

# gr.Markdown("<link href='https://fonts.googleapis.com/css2?family=Libre+Franklin:wght@400;700&display=swap' rel='stylesheet'>")

|

| 448 |

+

gr.Markdown("# MedVersa")

|

| 449 |

+

with gr.Row():

|

| 450 |

+

with gr.Column():

|

| 451 |

+

image_input = gr.File(label="Upload Images", file_count="multiple", file_types=["image", "numpy"])

|

| 452 |

+

# task_input = gr.Dropdown(choices=["report generation", "vqa", "localization", "classification"], label="Task")

|

| 453 |

+

context_input = gr.Textbox(label="Context", placeholder="Enter context here...", lines=3, visible=True)

|

| 454 |

+

modality_input = gr.Dropdown(choices=["cxr", "derm", "ct"], label="Modality")

|

| 455 |

+

prompt_input = gr.Textbox(label="Prompt", placeholder="Enter prompt here... (images should be referred as <img0>, <img1>, ...)", lines=3)

|

| 456 |

+

submit_button = gr.Button("Generate Predictions")

|

| 457 |

+

with gr.Accordion("Advanced Settings", open=False):

|

| 458 |

+

num_beams = gr.Slider(label="Number of Beams", minimum=1, maximum=10, step=1, value=1)

|

| 459 |

+

do_sample = gr.Checkbox(label="Do Sample", value=True)

|

| 460 |

+

min_length = gr.Slider(label="Minimum Length", minimum=1, maximum=100, step=1, value=1)

|

| 461 |

+

top_p = gr.Slider(label="Top P", minimum=0.1, maximum=1.0, step=0.1, value=0.9)

|

| 462 |

+

repetition_penalty = gr.Slider(label="Repetition Penalty", minimum=1.0, maximum=2.0, step=0.1, value=1.0)

|

| 463 |

+

length_penalty = gr.Slider(label="Length Penalty", minimum=1.0, maximum=2.0, step=0.1, value=1.0)

|

| 464 |

+

temperature = gr.Slider(label="Temperature", minimum=0.1, maximum=1.0, step=0.1, value=0.1)

|

| 465 |

+

|

| 466 |

+

with gr.Column():

|

| 467 |

+

# output_text = gr.Textbox(label="Generated Text", lines=10, elem_classes="output-textbox")

|

| 468 |

+

chatbot = gr.Chatbot(label="Chatbox")

|

| 469 |

+

slider = gr.Slider(minimum=0, maximum=64, value=1, step=1)

|

| 470 |

+

output_image = gr.AnnotatedImage(height=448, label="Images")

|

| 471 |

+

|

| 472 |

+

# task_input.change(

|

| 473 |

+

# fn=update_context_visibility,

|

| 474 |

+

# inputs=task_input,

|

| 475 |

+

# outputs=context_input

|

| 476 |

+

# )

|

| 477 |

+

|

| 478 |

+

submit_button.click(

|

| 479 |

+

fn=gradio_interface,

|

| 480 |

+

inputs=[chatbot, image_input, context_input, prompt_input, modality_input, num_beams, do_sample, min_length, top_p, repetition_penalty, length_penalty, temperature],

|

| 481 |

+

outputs=[chatbot, output_image, slider]

|

| 482 |

+

)

|

| 483 |

+

|

| 484 |

+

slider.change(

|

| 485 |

+

render,

|

| 486 |

+

inputs=[slider],

|

| 487 |

+

outputs=[output_image],

|

| 488 |

+

)

|

| 489 |

+

|

| 490 |

+

examples = [

|

| 491 |

+

[

|

| 492 |

+

["./demo_ex/c536f749-2326f755-6a65f28f-469affd2-26392ce9.png"],

|

| 493 |

+

"Age:30-40.\nGender:F.\nIndication: ___-year-old female with end-stage renal disease not on dialysis presents with dyspnea. PICC line placement.\nComparison: None.",

|

| 494 |

+

"How would you characterize the findings from <img0>?",

|

| 495 |

+

"cxr",

|

| 496 |

+

],

|

| 497 |

+

[

|

| 498 |

+

["./demo_ex/79eee504-b1b60ab8-5e8dd843-b6ed87aa-670747b1.png"],

|

| 499 |

+

"Age:70-80.\nGender:F.\nIndication: Respiratory distress.\nComparison: None.",

|

| 500 |

+

"How would you characterize the findings from <img0>?",

|

| 501 |

+

"cxr",

|

| 502 |

+

],

|

| 503 |

+

[

|

| 504 |

+

["./demo_ex/f39b05b1-f544e51a-cfe317ca-b66a4aa6-1c1dc22d.png", "./demo_ex/f3fefc29-68544ac8-284b820d-858b5470-f579b982.png"],

|

| 505 |

+

"Age:80-90.\nGender:F.\nIndication: ___-year-old female with history of chest pain.\nComparison: None.",

|

| 506 |

+

"How would you characterize the findings from <img0><img1>?",

|

| 507 |

+

"cxr",

|

| 508 |

+

],

|

| 509 |

+

[

|

| 510 |

+

["./demo_ex/1de015eb-891f1b02-f90be378-d6af1e86-df3270c2.png"],

|

| 511 |

+

"Age:40-50.\nGender:M.\nIndication: ___-year-old male with shortness of breath.\nComparison: None.",

|

| 512 |

+

"How would you characterize the findings from <img0>?",

|

| 513 |

+

"cxr",

|

| 514 |

+

],

|

| 515 |

+

[

|

| 516 |

+

["./demo_ex/bc25fa99-0d3766cc-7704edb7-5c7a4a63-dc65480a.png"],

|

| 517 |

+

"Age:40-50.\nGender:F.\nIndication: History: ___F with tachyacrdia cough doe // infilatrate\nComparison: None.",

|

| 518 |

+

"How would you characterize the findings from <img0>?",

|

| 519 |

+

"cxr",

|

| 520 |

+

],

|

| 521 |

+

[

|

| 522 |

+

["./demo_ex/ISIC_0032258.jpg"],

|

| 523 |

+

"Age:70.\nGender:female.\nLocation:back.",

|

| 524 |

+

"What is primary diagnosis?",

|

| 525 |

+

"derm",

|

| 526 |

+

],

|

| 527 |

+

[

|

| 528 |

+

["./demo_ex/Case_01013_0000.nii.gz"],

|

| 529 |

+

"",

|

| 530 |

+

"Segment the liver.",

|

| 531 |

+

"ct",

|

| 532 |

+

],

|

| 533 |

+

[

|

| 534 |

+

["./demo_ex/Case_00840_0000.nii.gz"],

|

| 535 |

+

"",

|

| 536 |

+

"Segment the liver.",

|

| 537 |

+

"ct",

|

| 538 |

+

],

|

| 539 |

+

]

|

| 540 |

+

|

| 541 |

+

gr.Examples(examples, inputs=[image_input, context_input, prompt_input, modality_input])

|

| 542 |

+

|

| 543 |

+

# Run Gradio app

|

| 544 |

+

demo.launch(share=True)

|

demo_ex/1de015eb-891f1b02-f90be378-d6af1e86-df3270c2.png

ADDED

|

demo_ex/79eee504-b1b60ab8-5e8dd843-b6ed87aa-670747b1.png

ADDED

|

demo_ex/Case_00840_0000.nii.gz

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:27d91a51f4f792740aab30da1416e2a200f637a53e9aa842cf47f2dd96519216

|

| 3 |

+

size 30618190

|

demo_ex/Case_01013_0000.nii.gz

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:63f597a81e594aa0b5d5b67551658f4a8be831ac6189f2f3f644b0a1098fbb09

|

| 3 |

+

size 30845920

|

demo_ex/ISIC_0032258.jpg

ADDED

|

demo_ex/ISIC_0033730.jpg

ADDED

|

demo_ex/bc25fa99-0d3766cc-7704edb7-5c7a4a63-dc65480a.png

ADDED

|

demo_ex/c536f749-2326f755-6a65f28f-469affd2-26392ce9.png

ADDED

|

demo_ex/f39b05b1-f544e51a-cfe317ca-b66a4aa6-1c1dc22d.png

ADDED

|

demo_ex/f3fefc29-68544ac8-284b820d-858b5470-f579b982.png

ADDED

|

environment.yml

ADDED

|

@@ -0,0 +1,479 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|