Upload folder using huggingface_hub

- .gitattributes +1 -0

- README.md +178 -0

- chocolatine-3b-instruct-dpo-revised.Q4_0.gguf +3 -0

.gitattributes

CHANGED

|

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

|

| 33 |

*.zip filter=lfs diff=lfs merge=lfs -text

|

| 34 |

*.zst filter=lfs diff=lfs merge=lfs -text

|

| 35 |

*tfevents* filter=lfs diff=lfs merge=lfs -text

|

| 36 |

+

chocolatine-3b-instruct-dpo-revised.Q4_0.gguf filter=lfs diff=lfs merge=lfs -text

|

README.md

ADDED

|

@@ -0,0 +1,178 @@

|

| 1 |

+

---

|

| 2 |

+

library_name: transformers

|

| 3 |

+

license: mit

|

| 4 |

+

language:

|

| 5 |

+

- fr

|

| 6 |

+

- en

|

| 7 |

+

tags:

|

| 8 |

+

- french

|

| 9 |

+

- chocolatine

|

| 10 |

+

datasets:

|

| 11 |

+

- jpacifico/french-orca-dpo-pairs-revised

|

| 12 |

+

pipeline_tag: text-generation

|

| 13 |

+

---

|

| 14 |

+

|

| 15 |

+

### Chocolatine-3B-Instruct-DPO-Revised

|

| 16 |

+

|

| 17 |

+

DPO fine-tune of [microsoft/Phi-3-mini-4k-instruct](https://huggingface.co/microsoft/Phi-3-mini-4k-instruct) (3.82B params)

|

| 18 |

+

using the [jpacifico/french-orca-dpo-pairs-revised](https://huggingface.co/datasets/jpacifico/french-orca-dpo-pairs-revised) RLHF dataset.

|

| 19 |

+

Training in French also improves the model's English performance, surpassing that of its base model.

|

| 20 |

+

Context window: 4k tokens

|

| 21 |

+

|

| 22 |

+

Quantized 4-bit and 8-bit versions are available (see below)

|

| 23 |

+

A larger model, Chocolatine-14B, is also available; its latest release is [version 1.2](https://huggingface.co/jpacifico/Chocolatine-14B-Instruct-DPO-v1.2).

|

| 24 |

+

|

| 25 |

+

### Benchmarks

|

| 26 |

+

|

| 27 |

+

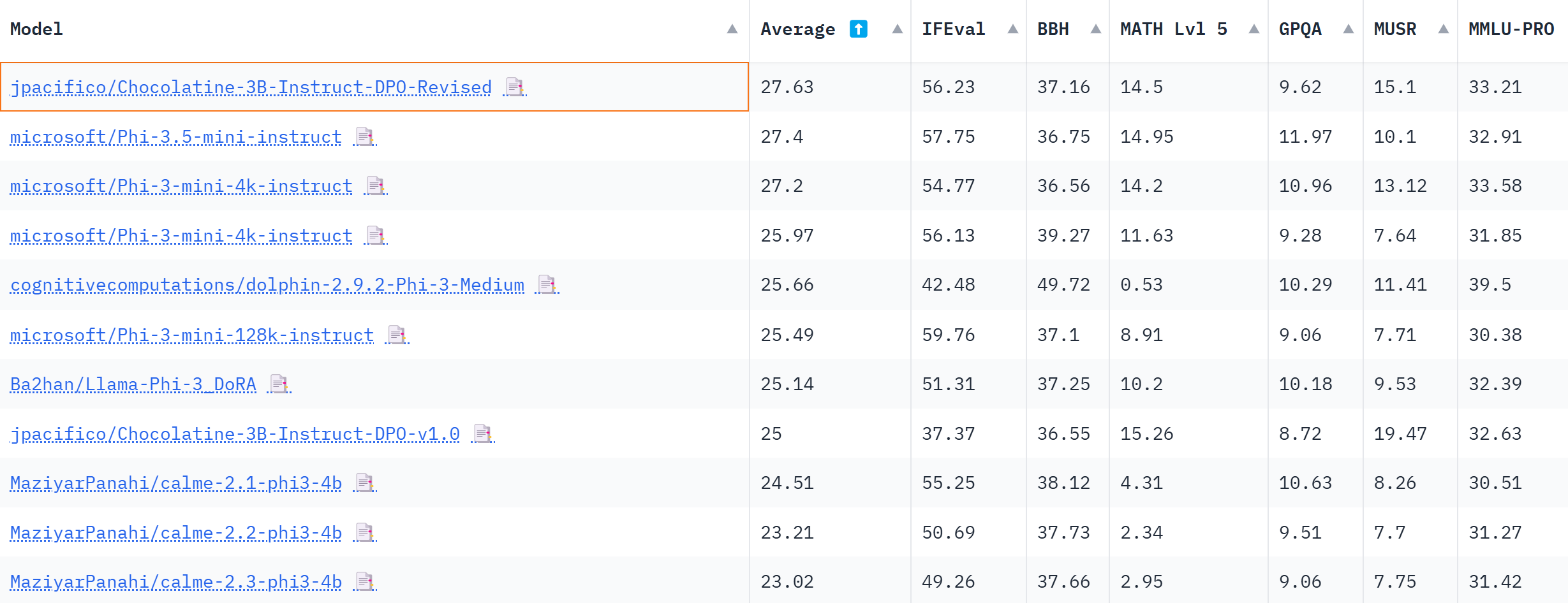

Chocolatine is the best-performing 3B model on the [OpenLLM Leaderboard](https://huggingface.co/spaces/open-llm-leaderboard/open_llm_leaderboard) (August 2024).

|

| 28 |

+

[Update 2024-08-22] Chocolatine-3B also outperforms Microsoft's new model Phi-3.5-mini-instruct on the benchmark average for the 3B category.

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

|

| 33 |

+

| Metric |Value|

|

| 34 |

+

|-------------------|----:|

|

| 35 |

+

|**Avg.** |**27.63**|

|

| 36 |

+

|IFEval |56.23|

|

| 37 |

+

|BBH |37.16|

|

| 38 |

+

|MATH Lvl 5 |14.5|

|

| 39 |

+

|GPQA |9.62|

|

| 40 |

+

|MuSR |15.1|

|

| 41 |

+

|MMLU-PRO |33.21|

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

### MT-Bench-French

|

| 45 |

+

|

| 46 |

+

Chocolatine-3B-Instruct-DPO-Revised outperforms GPT-3.5-Turbo on average on [MT-Bench-French](https://huggingface.co/datasets/bofenghuang/mt-bench-french), evaluated with [multilingual-mt-bench](https://github.com/Peter-Devine/multilingual_mt_bench) and GPT-4-Turbo as the LLM judge.

|

| 47 |

+

Notably, this latest version of the Chocolatine-3B model approaches the performance of Phi-3-Medium (14B) in French.

|

| 48 |

+

|

| 49 |

+

```

|

| 50 |

+

########## First turn ##########

|

| 51 |

+

score

|

| 52 |

+

model turn

|

| 53 |

+

gpt-4o-mini 1 9.28750

|

| 54 |

+

Chocolatine-14B-Instruct-DPO-v1.2 1 8.61250

|

| 55 |

+

Phi-3-medium-4k-instruct 1 8.22500

|

| 56 |

+

gpt-3.5-turbo 1 8.13750

|

| 57 |

+

Chocolatine-3B-Instruct-DPO-Revised 1 7.98750

|

| 58 |

+

Daredevil-8B 1 7.88750

|

| 59 |

+

NeuralDaredevil-8B-abliterated 1 7.62500

|

| 60 |

+

Phi-3-mini-4k-instruct 1 7.21250

|

| 61 |

+

Meta-Llama-3.1-8B-Instruct 1 7.05000

|

| 62 |

+

vigostral-7b-chat 1 6.78750

|

| 63 |

+

Mistral-7B-Instruct-v0.3 1 6.75000

|

| 64 |

+

gemma-2-2b-it 1 6.45000

|

| 65 |

+

French-Alpaca-7B-Instruct_beta 1 5.68750

|

| 66 |

+

vigogne-2-7b-chat 1 5.66250

|

| 67 |

+

|

| 68 |

+

########## Second turn ##########

|

| 69 |

+

score

|

| 70 |

+

model turn

|

| 71 |

+

gpt-4o-mini 2 8.912500

|

| 72 |

+

Chocolatine-14B-Instruct-DPO-v1.2 2 8.337500

|

| 73 |

+

Chocolatine-3B-Instruct-DPO-Revised 2 7.937500

|

| 74 |

+

Phi-3-medium-4k-instruct 2 7.750000

|

| 75 |

+

gpt-3.5-turbo 2 7.679167

|

| 76 |

+

NeuralDaredevil-8B-abliterated 2 7.125000

|

| 77 |

+

Daredevil-8B 2 7.087500

|

| 78 |

+

Meta-Llama-3.1-8B-Instruct 2 6.787500

|

| 79 |

+

Mistral-7B-Instruct-v0.3 2 6.500000

|

| 80 |

+

Phi-3-mini-4k-instruct 2 6.487500

|

| 81 |

+

vigostral-7b-chat 2 6.162500

|

| 82 |

+

gemma-2-2b-it 2 6.100000

|

| 83 |

+

French-Alpaca-7B-Instruct_beta 2 5.487395

|

| 84 |

+

vigogne-2-7b-chat 2 2.775000

|

| 85 |

+

|

| 86 |

+

########## Average ##########

|

| 87 |

+

score

|

| 88 |

+

model

|

| 89 |

+

gpt-4o-mini 9.100000

|

| 90 |

+

Chocolatine-14B-Instruct-DPO-v1.2 8.475000

|

| 91 |

+

Phi-3-medium-4k-instruct 7.987500

|

| 92 |

+

Chocolatine-3B-Instruct-DPO-Revised 7.962500

|

| 93 |

+

gpt-3.5-turbo 7.908333

|

| 94 |

+

Daredevil-8B 7.487500

|

| 95 |

+

NeuralDaredevil-8B-abliterated 7.375000

|

| 96 |

+

Meta-Llama-3.1-8B-Instruct 6.918750

|

| 97 |

+

Phi-3-mini-4k-instruct 6.850000

|

| 98 |

+

Mistral-7B-Instruct-v0.3 6.625000

|

| 99 |

+

vigostral-7b-chat 6.475000

|

| 100 |

+

gemma-2-2b-it 6.275000

|

| 101 |

+

French-Alpaca-7B-Instruct_beta 5.587866

|

| 102 |

+

vigogne-2-7b-chat 4.218750

|

| 103 |

+

```
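As a quick sanity check on the tables above, the average score for most rows appears to be the plain mean of the first-turn and second-turn scores. The sketch below recomputes it for three rows copied from the tables; the dictionary layout and variable names are illustrative and not part of the benchmark tooling. A few rows (for example French-Alpaca-7B-Instruct_beta) differ slightly in the later digits, so treat the plain mean as an approximation.

```python
# Turn scores copied from the MT-Bench-French tables above.
turn_scores = {
    "Chocolatine-3B-Instruct-DPO-Revised": (7.98750, 7.937500),
    "gpt-3.5-turbo": (8.13750, 7.679167),
    "Phi-3-medium-4k-instruct": (8.22500, 7.750000),
}

# Assumption: the reported average is the unweighted mean of the two turns.
averages = {
    model: (first + second) / 2
    for model, (first, second) in turn_scores.items()
}

# Print the models from highest to lowest recomputed average.
for model, avg in sorted(averages.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{model:40s} {avg:.4f}")
```

On these three rows the recomputed values agree with the reported averages to the precision shown in the table.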

|

| 104 |

+

|

| 105 |

+

|

| 106 |

+

### Quantized versions

|

| 107 |

+

|

| 108 |

+

* **4-bit quantized version** is available here: [jpacifico/Chocolatine-3B-Instruct-DPO-Revised-Q4_K_M-GGUF](https://huggingface.co/jpacifico/Chocolatine-3B-Instruct-DPO-Revised-Q4_K_M-GGUF)

|

| 109 |

+

|

| 110 |

+

* **8-bit quantized version** is also available here: [jpacifico/Chocolatine-3B-Instruct-DPO-Revised-Q8_0-GGUF](https://huggingface.co/jpacifico/Chocolatine-3B-Instruct-DPO-Revised-Q8_0-GGUF)

|

| 111 |

+

|

| 112 |

+

* **Ollama**: [jpacifico/chocolatine-3b](https://ollama.com/jpacifico/chocolatine-3b)

|

| 113 |

+

|

| 114 |

+

```bash

|

| 115 |

+

ollama run jpacifico/chocolatine-3b

|

| 116 |

+

```

|

| 117 |

+

|

| 118 |

+

Ollama *Modelfile* example:

|

| 119 |

+

|

| 120 |

+

```bash

|

| 121 |

+

FROM ./chocolatine-3b-instruct-dpo-revised-q4_k_m.gguf

|

| 122 |

+

TEMPLATE """{{ if .System }}<|system|>

|

| 123 |

+

{{ .System }}<|end|>

|

| 124 |

+

{{ end }}{{ if .Prompt }}<|user|>

|

| 125 |

+

{{ .Prompt }}<|end|>

|

| 126 |

+

{{ end }}<|assistant|>

|

| 127 |

+

{{ .Response }}<|end|>

|

| 128 |

+

"""

|

| 129 |

+

PARAMETER stop "<|end|>"
PARAMETER stop "<|user|>"
PARAMETER stop "<|assistant|>"

|

| 130 |

+

SYSTEM """You are a friendly assistant called Chocolatine."""

|

| 131 |

+

```
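To make the prompt layout in the Modelfile concrete, here is a minimal Python sketch that builds the same string by hand. `render_prompt` is a hypothetical helper written for this illustration, not an Ollama or transformers API; it only mirrors the `TEMPLATE` above up to the point where the model starts generating.

```python
def render_prompt(prompt: str, system: str = "") -> str:
    """Hypothetical helper: lay out a single-turn prompt like the Modelfile TEMPLATE."""
    parts = []
    if system:
        # Optional system block, emitted only when a system message is set.
        parts.append(f"<|system|>\n{system}<|end|>\n")
    if prompt:
        parts.append(f"<|user|>\n{prompt}<|end|>\n")
    # The model's reply is generated after this tag and should end with <|end|>.
    parts.append("<|assistant|>\n")
    return "".join(parts)


text = render_prompt(
    "What is a Large Language Model?",
    system="You are a friendly assistant called Chocolatine.",
)
print(text)
```

Seeing the raw string can help when debugging stop tokens: generation should halt as soon as the model emits `<|end|>`.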

|

| 132 |

+

|

| 133 |

+

### Usage

|

| 134 |

+

|

| 135 |

+

You can run this model using my [Colab notebook](https://github.com/jpacifico/Chocolatine-LLM/blob/main/Chocolatine_3B_inference_test_colab.ipynb)

|

| 136 |

+

|

| 137 |

+

You can also run Chocolatine using the following code:

|

| 138 |

+

|

| 139 |

+

```python

|

| 140 |

+

import transformers

|

| 141 |

+

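from transformers import AutoTokenizer

# Hub repo id of the model to load (assumed from the model card title; adjust if it differs)
new_model = "jpacifico/Chocolatine-3B-Instruct-DPO-Revised"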

|

| 142 |

+

|

| 143 |

+

# Format prompt

|

| 144 |

+

message = [

|

| 145 |

+

{"role": "system", "content": "You are a helpful assistant chatbot."},

|

| 146 |

+

{"role": "user", "content": "What is a Large Language Model?"}

|

| 147 |

+

]

|

| 148 |

+

tokenizer = AutoTokenizer.from_pretrained(new_model)

|

| 149 |

+

prompt = tokenizer.apply_chat_template(message, add_generation_prompt=True, tokenize=False)

|

| 150 |

+

|

| 151 |

+

# Create pipeline

|

| 152 |

+

pipeline = transformers.pipeline(

|

| 153 |

+

"text-generation",

|

| 154 |

+

model=new_model,

|

| 155 |

+

tokenizer=tokenizer

|

| 156 |

+

)

|

| 157 |

+

|

| 158 |

+

# Generate text

|

| 159 |

+

sequences = pipeline(

|

| 160 |

+

prompt,

|

| 161 |

+

do_sample=True,

|

| 162 |

+

temperature=0.7,

|

| 163 |

+

top_p=0.9,

|

| 164 |

+

num_return_sequences=1,

|

| 165 |

+

max_new_tokens=200,

|

| 166 |

+

)

|

| 167 |

+

print(sequences[0]['generated_text'])

|

| 168 |

+

```

|

| 169 |

+

|

| 170 |

+

### Limitations

|

| 171 |

+

|

| 172 |

+

The Chocolatine model is a quick demonstration that a base model can be easily fine-tuned to achieve compelling performance.

|

| 173 |

+

It does not have any moderation mechanism.

|

| 174 |

+

|

| 175 |

+

- **Developed by:** Jonathan Pacifico, 2024

|

| 176 |

+

- **Model type:** LLM

|

| 177 |

+

- **Language(s) (NLP):** French, English

|

| 178 |

+

- **License:** MIT

|

chocolatine-3b-instruct-dpo-revised.Q4_0.gguf

ADDED

|

@@ -0,0 +1,3 @@

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:54015331ab7fd231b8729a51505fe8452dc5f90c9b6cb608886ab0d166d67a65

|

| 3 |

+

size 2176176608

|
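The three added lines are a Git LFS pointer, not the model weights themselves: the repository stores this small text file while the actual GGUF file lives in LFS storage. As a rough illustration, the sketch below parses the pointer shown above and reports the file size in GiB. `parse_lfs_pointer` is a hypothetical helper written for this example, not part of `huggingface_hub` or the Git LFS tooling.

```python
# Pointer contents copied from the diff above.
POINTER_TEXT = """version https://git-lfs.github.com/spec/v1
oid sha256:54015331ab7fd231b8729a51505fe8452dc5f90c9b6cb608886ab0d166d67a65
size 2176176608
"""


def parse_lfs_pointer(text: str) -> dict:
    """Hypothetical helper: split each 'key value' line of a Git LFS pointer file."""
    fields = {}
    for line in text.splitlines():
        if line.strip():
            key, _, value = line.partition(" ")
            fields[key] = value
    return fields


pointer = parse_lfs_pointer(POINTER_TEXT)
size_bytes = int(pointer["size"])
algo, _, digest = pointer["oid"].partition(":")

print(f"{algo} digest: {digest}")
print(f"size: {size_bytes} bytes (~{size_bytes / 2**30:.2f} GiB)")
```

The `oid` is the SHA-256 digest of the real file, so after downloading the GGUF you can hash it locally and compare against this value.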