Upload 304 files

This view is limited to 50 files because it contains too many changes.

- big_vision_repo/.gitignore +1 -0

- big_vision_repo/CONTRIBUTING.md +26 -0

- big_vision_repo/LICENSE +201 -0

- big_vision_repo/README.md +498 -0

- big_vision_repo/big_vision/__init__.py +0 -0

- big_vision_repo/big_vision/configs/__init__.py +0 -0

- big_vision_repo/big_vision/configs/bit_i1k.py +102 -0

- big_vision_repo/big_vision/configs/bit_i21k.py +85 -0

- big_vision_repo/big_vision/configs/common.py +188 -0

- big_vision_repo/big_vision/configs/common_fewshot.py +56 -0

- big_vision_repo/big_vision/configs/load_and_eval.py +143 -0

- big_vision_repo/big_vision/configs/mlp_mixer_i1k.py +120 -0

- big_vision_repo/big_vision/configs/proj/cappa/README.md +37 -0

- big_vision_repo/big_vision/configs/proj/cappa/cappa_architecture.png +0 -0

- big_vision_repo/big_vision/configs/proj/cappa/pretrain.py +140 -0

- big_vision_repo/big_vision/configs/proj/clippo/README.md +85 -0

- big_vision_repo/big_vision/configs/proj/clippo/clippo_colab.ipynb +0 -0

- big_vision_repo/big_vision/configs/proj/clippo/train_clippo.py +199 -0

- big_vision_repo/big_vision/configs/proj/distill/README.md +43 -0

- big_vision_repo/big_vision/configs/proj/distill/bigsweep_flowers_pet.py +164 -0

- big_vision_repo/big_vision/configs/proj/distill/bigsweep_food_sun.py +213 -0

- big_vision_repo/big_vision/configs/proj/distill/bit_i1k.py +167 -0

- big_vision_repo/big_vision/configs/proj/distill/common.py +27 -0

- big_vision_repo/big_vision/configs/proj/flexivit/README.md +64 -0

- big_vision_repo/big_vision/configs/proj/flexivit/i1k_deit3_distill.py +187 -0

- big_vision_repo/big_vision/configs/proj/flexivit/i21k_distill.py +216 -0

- big_vision_repo/big_vision/configs/proj/flexivit/i21k_sup.py +144 -0

- big_vision_repo/big_vision/configs/proj/flexivit/timing.py +53 -0

- big_vision_repo/big_vision/configs/proj/givt/README.md +111 -0

- big_vision_repo/big_vision/configs/proj/givt/givt_coco_panoptic.py +186 -0

- big_vision_repo/big_vision/configs/proj/givt/givt_demo_colab.ipynb +309 -0

- big_vision_repo/big_vision/configs/proj/givt/givt_imagenet2012.py +222 -0

- big_vision_repo/big_vision/configs/proj/givt/givt_nyu_depth.py +198 -0

- big_vision_repo/big_vision/configs/proj/givt/givt_overview.png +0 -0

- big_vision_repo/big_vision/configs/proj/givt/vae_coco_panoptic.py +136 -0

- big_vision_repo/big_vision/configs/proj/givt/vae_nyu_depth.py +158 -0

- big_vision_repo/big_vision/configs/proj/gsam/vit_i1k_gsam_no_aug.py +134 -0

- big_vision_repo/big_vision/configs/proj/image_text/README.md +65 -0

- big_vision_repo/big_vision/configs/proj/image_text/SigLIP_demo.ipynb +0 -0

- big_vision_repo/big_vision/configs/proj/image_text/common.py +127 -0

- big_vision_repo/big_vision/configs/proj/image_text/lit.ipynb +0 -0

- big_vision_repo/big_vision/configs/proj/image_text/siglip_lit_coco.py +115 -0

- big_vision_repo/big_vision/configs/proj/paligemma/README.md +270 -0

- big_vision_repo/big_vision/configs/proj/paligemma/finetune_paligemma.ipynb +0 -0

- big_vision_repo/big_vision/configs/proj/paligemma/paligemma.png +0 -0

- big_vision_repo/big_vision/configs/proj/paligemma/transfers/activitynet_cap.py +209 -0

- big_vision_repo/big_vision/configs/proj/paligemma/transfers/activitynet_qa.py +213 -0

- big_vision_repo/big_vision/configs/proj/paligemma/transfers/ai2d.py +170 -0

- big_vision_repo/big_vision/configs/proj/paligemma/transfers/aokvqa_da.py +161 -0

- big_vision_repo/big_vision/configs/proj/paligemma/transfers/aokvqa_mc.py +169 -0

big_vision_repo/.gitignore

ADDED

@@ -0,0 +1 @@

+__pycache__

big_vision_repo/CONTRIBUTING.md

ADDED

@@ -0,0 +1,26 @@

+# How to Contribute
+
+At this time we do not plan to accept non-trivial contributions. The main
+purpose of this codebase is to allow the community to reproduce results from our
+publications.
+
+You are however free to start a fork of the project for your purposes as
+permitted by the license.
+
+## Contributor License Agreement
+
+Contributions to this project must be accompanied by a Contributor License
+Agreement (CLA). You (or your employer) retain the copyright to your
+contribution; this simply gives us permission to use and redistribute your
+contributions as part of the project. Head over to
+<https://cla.developers.google.com/> to see your current agreements on file or
+to sign a new one.
+
+You generally only need to submit a CLA once, so if you've already submitted one
+(even if it was for a different project), you probably don't need to do it
+again.
+
+## Community Guidelines
+
+This project follows
+[Google's Open Source Community Guidelines](https://opensource.google/conduct/).

big_vision_repo/LICENSE

ADDED

@@ -0,0 +1,201 @@

+Apache License
+Version 2.0, January 2004
+http://www.apache.org/licenses/
+
+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+1. Definitions.
+
+"License" shall mean the terms and conditions for use, reproduction,
+and distribution as defined by Sections 1 through 9 of this document.
+
+"Licensor" shall mean the copyright owner or entity authorized by
+the copyright owner that is granting the License.
+
+"Legal Entity" shall mean the union of the acting entity and all
+other entities that control, are controlled by, or are under common
+control with that entity. For the purposes of this definition,
+"control" means (i) the power, direct or indirect, to cause the
+direction or management of such entity, whether by contract or
+otherwise, or (ii) ownership of fifty percent (50%) or more of the
+outstanding shares, or (iii) beneficial ownership of such entity.
+
+"You" (or "Your") shall mean an individual or Legal Entity
+exercising permissions granted by this License.
+
+"Source" form shall mean the preferred form for making modifications,
+including but not limited to software source code, documentation
+source, and configuration files.
+
+"Object" form shall mean any form resulting from mechanical
+transformation or translation of a Source form, including but
+not limited to compiled object code, generated documentation,
+and conversions to other media types.
+
+"Work" shall mean the work of authorship, whether in Source or
+Object form, made available under the License, as indicated by a
+copyright notice that is included in or attached to the work
+(an example is provided in the Appendix below).
+
+"Derivative Works" shall mean any work, whether in Source or Object
+form, that is based on (or derived from) the Work and for which the
+editorial revisions, annotations, elaborations, or other modifications
+represent, as a whole, an original work of authorship. For the purposes
+of this License, Derivative Works shall not include works that remain
+separable from, or merely link (or bind by name) to the interfaces of,
+the Work and Derivative Works thereof.
+
+"Contribution" shall mean any work of authorship, including
+the original version of the Work and any modifications or additions
+to that Work or Derivative Works thereof, that is intentionally
+submitted to Licensor for inclusion in the Work by the copyright owner
+or by an individual or Legal Entity authorized to submit on behalf of
+the copyright owner. For the purposes of this definition, "submitted"
+means any form of electronic, verbal, or written communication sent
+to the Licensor or its representatives, including but not limited to
+communication on electronic mailing lists, source code control systems,
+and issue tracking systems that are managed by, or on behalf of, the
+Licensor for the purpose of discussing and improving the Work, but
+excluding communication that is conspicuously marked or otherwise
+designated in writing by the copyright owner as "Not a Contribution."
+
+"Contributor" shall mean Licensor and any individual or Legal Entity
+on behalf of whom a Contribution has been received by Licensor and
+subsequently incorporated within the Work.
+
+2. Grant of Copyright License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+copyright license to reproduce, prepare Derivative Works of,
+publicly display, publicly perform, sublicense, and distribute the
+Work and such Derivative Works in Source or Object form.
+
+3. Grant of Patent License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+(except as stated in this section) patent license to make, have made,
+use, offer to sell, sell, import, and otherwise transfer the Work,
+where such license applies only to those patent claims licensable
+by such Contributor that are necessarily infringed by their
+Contribution(s) alone or by combination of their Contribution(s)
+with the Work to which such Contribution(s) was submitted. If You
+institute patent litigation against any entity (including a
+cross-claim or counterclaim in a lawsuit) alleging that the Work
+or a Contribution incorporated within the Work constitutes direct
+or contributory patent infringement, then any patent licenses
+granted to You under this License for that Work shall terminate
+as of the date such litigation is filed.
+
+4. Redistribution. You may reproduce and distribute copies of the
+Work or Derivative Works thereof in any medium, with or without
+modifications, and in Source or Object form, provided that You
+meet the following conditions:
+
+(a) You must give any other recipients of the Work or
+Derivative Works a copy of this License; and
+
+(b) You must cause any modified files to carry prominent notices
+stating that You changed the files; and
+
+(c) You must retain, in the Source form of any Derivative Works
+that You distribute, all copyright, patent, trademark, and
+attribution notices from the Source form of the Work,
+excluding those notices that do not pertain to any part of
+the Derivative Works; and
+
+(d) If the Work includes a "NOTICE" text file as part of its
+distribution, then any Derivative Works that You distribute must
+include a readable copy of the attribution notices contained
+within such NOTICE file, excluding those notices that do not
+pertain to any part of the Derivative Works, in at least one
+of the following places: within a NOTICE text file distributed
+as part of the Derivative Works; within the Source form or
+documentation, if provided along with the Derivative Works; or,
+within a display generated by the Derivative Works, if and
+wherever such third-party notices normally appear. The contents
+of the NOTICE file are for informational purposes only and
+do not modify the License. You may add Your own attribution
+notices within Derivative Works that You distribute, alongside
+or as an addendum to the NOTICE text from the Work, provided
+that such additional attribution notices cannot be construed
+as modifying the License.
+
+You may add Your own copyright statement to Your modifications and
+may provide additional or different license terms and conditions
+for use, reproduction, or distribution of Your modifications, or
+for any such Derivative Works as a whole, provided Your use,
+reproduction, and distribution of the Work otherwise complies with
+the conditions stated in this License.
+
+5. Submission of Contributions. Unless You explicitly state otherwise,
+any Contribution intentionally submitted for inclusion in the Work
+by You to the Licensor shall be under the terms and conditions of
+this License, without any additional terms or conditions.
+Notwithstanding the above, nothing herein shall supersede or modify
+the terms of any separate license agreement you may have executed
+with Licensor regarding such Contributions.
+
+6. Trademarks. This License does not grant permission to use the trade
+names, trademarks, service marks, or product names of the Licensor,
+except as required for reasonable and customary use in describing the
+origin of the Work and reproducing the content of the NOTICE file.
+
+7. Disclaimer of Warranty. Unless required by applicable law or
+agreed to in writing, Licensor provides the Work (and each
+Contributor provides its Contributions) on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+implied, including, without limitation, any warranties or conditions
+of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+PARTICULAR PURPOSE. You are solely responsible for determining the
+appropriateness of using or redistributing the Work and assume any
+risks associated with Your exercise of permissions under this License.
+
+8. Limitation of Liability. In no event and under no legal theory,
+whether in tort (including negligence), contract, or otherwise,
+unless required by applicable law (such as deliberate and grossly
+negligent acts) or agreed to in writing, shall any Contributor be
+liable to You for damages, including any direct, indirect, special,
+incidental, or consequential damages of any character arising as a
+result of this License or out of the use or inability to use the
+Work (including but not limited to damages for loss of goodwill,
+work stoppage, computer failure or malfunction, or any and all
+other commercial damages or losses), even if such Contributor
+has been advised of the possibility of such damages.
+
+9. Accepting Warranty or Additional Liability. While redistributing
+the Work or Derivative Works thereof, You may choose to offer,
+and charge a fee for, acceptance of support, warranty, indemnity,
+or other liability obligations and/or rights consistent with this
+License. However, in accepting such obligations, You may act only
+on Your own behalf and on Your sole responsibility, not on behalf
+of any other Contributor, and only if You agree to indemnify,
+defend, and hold each Contributor harmless for any liability
+incurred by, or claims asserted against, such Contributor by reason
+of your accepting any such warranty or additional liability.
+
+END OF TERMS AND CONDITIONS
+
+APPENDIX: How to apply the Apache License to your work.
+
+To apply the Apache License to your work, attach the following
+boilerplate notice, with the fields enclosed by brackets "[]"
+replaced with your own identifying information. (Don't include
+the brackets!) The text should be enclosed in the appropriate
+comment syntax for the file format. We also recommend that a
+file or class name and description of purpose be included on the
+same "printed page" as the copyright notice for easier
+identification within third-party archives.
+
+Copyright [yyyy] [name of copyright owner]
+
+Licensed under the Apache License, Version 2.0 (the "License");
+you may not use this file except in compliance with the License.
+You may obtain a copy of the License at
+
+http://www.apache.org/licenses/LICENSE-2.0
+
+Unless required by applicable law or agreed to in writing, software
+distributed under the License is distributed on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+See the License for the specific language governing permissions and
+limitations under the License.

big_vision_repo/README.md

ADDED

@@ -0,0 +1,498 @@

| 1 |

+

# Big Vision

|

| 2 |

+

|

| 3 |

+

This codebase is designed for training large-scale vision models using

|

| 4 |

+

[Cloud TPU VMs](https://cloud.google.com/blog/products/compute/introducing-cloud-tpu-vms)

|

| 5 |

+

or GPU machines. It is based on [Jax](https://github.com/google/jax)/[Flax](https://github.com/google/flax)

|

| 6 |

+

libraries, and uses [tf.data](https://www.tensorflow.org/guide/data) and

|

| 7 |

+

[TensorFlow Datasets](https://www.tensorflow.org/datasets) for scalable and

|

| 8 |

+

reproducible input pipelines.

|

| 9 |

+

|

| 10 |

+

The open-sourcing of this codebase has two main purposes:

|

| 11 |

+

1. Publishing the code of research projects developed in this codebase (see a

|

| 12 |

+

list below).

|

| 13 |

+

2. Providing a strong starting point for running large-scale vision experiments

|

| 14 |

+

on GPU machines and Google Cloud TPUs, which should scale seamlessly and

|

| 15 |

+

out-of-the box from a single TPU core to a distributed setup with up to 2048

|

| 16 |

+

TPU cores.

|

| 17 |

+

|

| 18 |

+

`big_vision` aims to support research projects at Google. We are unlikely to

|

| 19 |

+

work on feature requests or accept external contributions, unless they were

|

| 20 |

+

pre-approved (ask in an issue first). For a well-supported transfer-only

|

| 21 |

+

codebase, see also [vision_transformer](https://github.com/google-research/vision_transformer).

|

| 22 |

+

|

| 23 |

+

Note that `big_vision` is quite dynamic codebase and, while we intend to keep

|

| 24 |

+

the core code fully-functional at all times, we can not guarantee timely updates

|

| 25 |

+

of the project-specific code that lives in the `.../proj/...` subfolders.

|

| 26 |

+

However, we provide a [table](#project-specific-commits) with last known

|

| 27 |

+

commits where specific projects were known to work.

|

| 28 |

+

|

| 29 |

+

The following research projects were originally conducted in the `big_vision`

|

| 30 |

+

codebase:

|

| 31 |

+

|

| 32 |

+

### Architecture research

|

| 33 |

+

|

| 34 |

+

- [An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale](https://arxiv.org/abs/2010.11929), by

|

| 35 |

+

Alexey Dosovitskiy*, Lucas Beyer*, Alexander Kolesnikov*, Dirk Weissenborn*,

|

| 36 |

+

Xiaohua Zhai*, Thomas Unterthiner, Mostafa Dehghani, Matthias Minderer,

|

| 37 |

+

Georg Heigold, Sylvain Gelly, Jakob Uszkoreit, and Neil Houlsby*

|

| 38 |

+

- [Scaling Vision Transformers](https://arxiv.org/abs/2106.04560), by

|

| 39 |

+

Xiaohua Zhai*, Alexander Kolesnikov*, Neil Houlsby, and Lucas Beyer*\

|

| 40 |

+

Resources: [config](big_vision/configs/proj/scaling_laws/train_vit_g.py).

|

| 41 |

+

- [How to train your ViT? Data, Augmentation, and Regularization in Vision Transformers](https://arxiv.org/abs/2106.10270), by

|

| 42 |

+

Andreas Steiner*, Alexander Kolesnikov*, Xiaohua Zhai*, Ross Wightman,

|

| 43 |

+

Jakob Uszkoreit, and Lucas Beyer*

|

| 44 |

+

- [MLP-Mixer: An all-MLP Architecture for Vision](https://arxiv.org/abs/2105.01601), by

|

| 45 |

+

Ilya Tolstikhin*, Neil Houlsby*, Alexander Kolesnikov*, Lucas Beyer*,

|

| 46 |

+

Xiaohua Zhai, Thomas Unterthiner, Jessica Yung, Andreas Steiner,

|

| 47 |

+

Daniel Keysers, Jakob Uszkoreit, Mario Lucic, Alexey Dosovitskiy\

|

| 48 |

+

Resources: [config](big_vision/configs/mlp_mixer_i1k.py).

|

| 49 |

+

- [Better plain ViT baselines for ImageNet-1k](https://arxiv.org/abs/2205.01580), by

|

| 50 |

+

Lucas Beyer, Xiaohua Zhai, Alexander Kolesnikov\

|

| 51 |

+

Resources: [config](big_vision/configs/vit_s16_i1k.py)

|

| 52 |

+

- [UViM: A Unified Modeling Approach for Vision with Learned Guiding Codes](https://arxiv.org/abs/2205.10337), by

|

| 53 |

+

Alexander Kolesnikov^*, André Susano Pinto^*, Lucas Beyer*, Xiaohua Zhai*, Jeremiah Harmsen*, Neil Houlsby*\

|

| 54 |

+

Resources: [readme](big_vision/configs/proj/uvim/README.md), [configs](big_vision/configs/proj/uvim), [colabs](big_vision/configs/proj/uvim).

|

| 55 |

+

- [FlexiViT: One Model for All Patch Sizes](https://arxiv.org/abs/2212.08013), by

|

| 56 |

+

Lucas Beyer*, Pavel Izmailov*, Alexander Kolesnikov*, Mathilde Caron*, Simon

|

| 57 |

+

Kornblith*, Xiaohua Zhai*, Matthias Minderer*, Michael Tschannen*, Ibrahim

|

| 58 |

+

Alabdulmohsin*, Filip Pavetic*\

|

| 59 |

+

Resources: [readme](big_vision/configs/proj/flexivit/README.md), [configs](big_vision/configs/proj/flexivit).

|

| 60 |

+

- [Dual PatchNorm](https://arxiv.org/abs/2302.01327), by Manoj Kumar, Mostafa Dehghani, Neil Houlsby.

|

| 61 |

+

- [Getting ViT in Shape: Scaling Laws for Compute-Optimal Model Design](https://arxiv.org/abs/2305.13035), by

|

| 62 |

+

Ibrahim Alabdulmohsin*, Xiaohua Zhai*, Alexander Kolesnikov, Lucas Beyer*.

|

| 63 |

+

- (partial) [Scaling Vision Transformers to 22 Billion Parameters](https://arxiv.org/abs/2302.05442), by

|

| 64 |

+

Mostafa Dehghani*, Josip Djolonga*, Basil Mustafa*, Piotr Padlewski*, Jonathan Heek*, *wow many middle authors*, Neil Houlsby*.

|

| 65 |

+

- (partial) [Finite Scalar Quantization: VQ-VAE Made Simple](https://arxiv.org/abs/2309.15505), by

|

| 66 |

+

Fabian Mentzer, David Minnen, Eirikur Agustsson, Michael Tschannen.

|

| 67 |

+

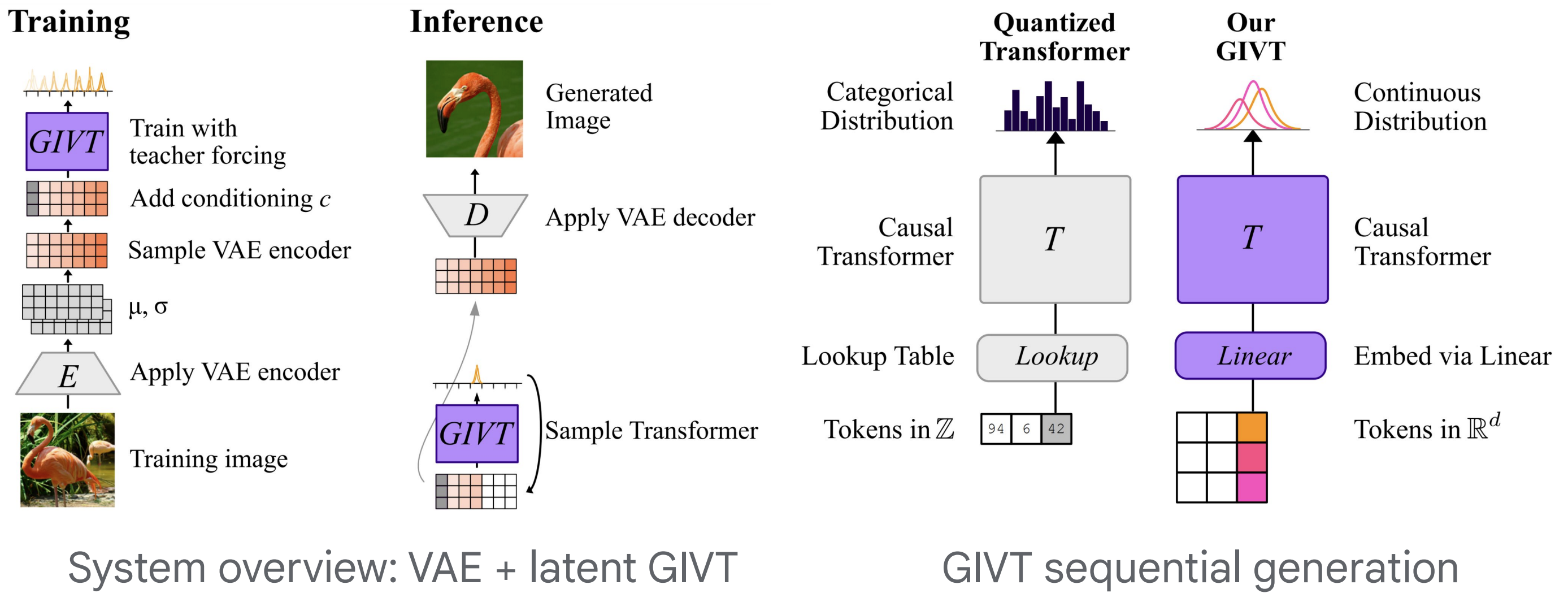

- [GIVT: Generative Infinite-Vocabulary Transformers](https://arxiv.org/abs/2312.02116), by

|

| 68 |

+

Michael Tschannen, Cian Eastwood, Fabian Mentzer.\

|

| 69 |

+

Resources: [readme](big_vision/configs/proj/givt/README.md), [config](big_vision/configs/proj/givt/givt_imagenet2012.py), [colab](https://colab.research.google.com/github/google-research/big_vision/blob/main/big_vision/configs/proj/givt/givt_demo_colab.ipynb).

|

| 70 |

+

- [Unified Auto-Encoding with Masked Diffusion](https://arxiv.org/abs/2406.17688), by

|

| 71 |

+

Philippe Hansen-Estruch, Sriram Vishwanath, Amy Zhang, Manan Tomar.

|

| 72 |

+

|

| 73 |

+

|

| 74 |

+

### Multimodal research

|

| 75 |

+

|

| 76 |

+

- [LiT: Zero-Shot Transfer with Locked-image Text Tuning](https://arxiv.org/abs/2111.07991), by

|

| 77 |

+

Xiaohua Zhai*, Xiao Wang*, Basil Mustafa*, Andreas Steiner*, Daniel Keysers,

|

| 78 |

+

Alexander Kolesnikov, and Lucas Beyer*\

|

| 79 |

+

Resources: [trainer](big_vision/trainers/proj/image_text/contrastive.py), [config](big_vision/configs/proj/image_text/lit_coco.py), [colab](https://colab.research.google.com/github/google-research/big_vision/blob/main/big_vision/configs/proj/image_text/lit.ipynb).

|

| 80 |

+

- [Image-and-Language Understanding from Pixels Only](https://arxiv.org/abs/2212.08045), by

|

| 81 |

+

Michael Tschannen, Basil Mustafa, Neil Houlsby\

|

| 82 |

+

Resources: [readme](big_vision/configs/proj/clippo/README.md), [config](big_vision/configs/proj/clippo/train_clippo.py), [colab](https://colab.research.google.com/github/google-research/big_vision/blob/main/big_vision/configs/proj/clippo/clippo_colab.ipynb).

|

| 83 |

+

- [Sigmoid Loss for Language Image Pre-Training](https://arxiv.org/abs/2303.15343), by

|

| 84 |

+

Xiaohua Zhai*, Basil Mustafa, Alexander Kolesnikov, Lucas Beyer*\

|

| 85 |

+

Resources: [colab and models](https://colab.research.google.com/github/google-research/big_vision/blob/main/big_vision/configs/proj/image_text/SigLIP_demo.ipynb), code TODO.

|

| 86 |

+

- [A Study of Autoregressive Decoders for Multi-Tasking in Computer Vision](https://arxiv.org/abs/2303.17376), by

|

| 87 |

+

Lucas Beyer*, Bo Wan*, Gagan Madan*, Filip Pavetic*, Andreas Steiner*, Alexander Kolesnikov, André Susano Pinto, Emanuele Bugliarello, Xiao Wang, Qihang Yu, Liang-Chieh Chen, Xiaohua Zhai*.

|

| 88 |

+

- [Image Captioners Are Scalable Vision Learners Too](https://arxiv.org/abs/2306.07915), by

|

| 89 |

+

Michael Tschannen*, Manoj Kumar*, Andreas Steiner*, Xiaohua Zhai, Neil Houlsby, Lucas Beyer*.\

|

| 90 |

+

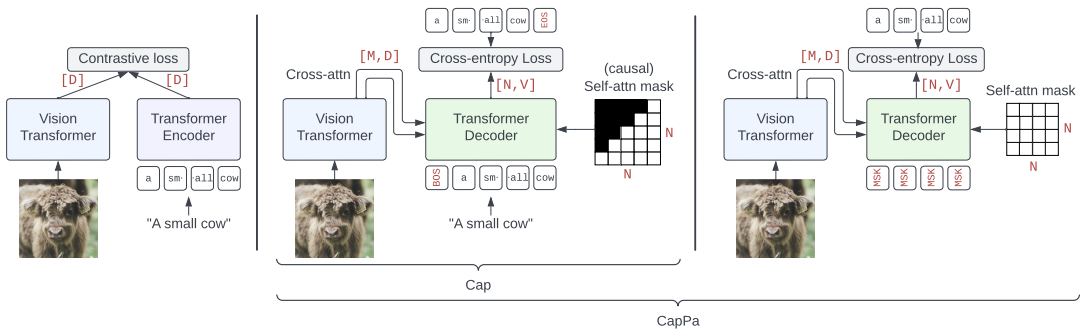

Resources: [readme](big_vision/configs/proj/cappa/README.md), [config](big_vision/configs/proj/cappa/pretrain.py), [model](big_vision/models/proj/cappa/cappa.py).

|

| 91 |

+

- [Three Towers: Flexible Contrastive Learning with Pretrained Image Models](https://arxiv.org/abs/2305.16999), by Jannik Kossen, Mark Collier, Basil Mustafa, Xiao Wang, Xiaohua Zhai, Lucas Beyer, Andreas Steiner, Jesse Berent, Rodolphe Jenatton, Efi Kokiopoulou.

|

| 92 |

+

- (partial) [PaLI: A Jointly-Scaled Multilingual Language-Image Model](https://arxiv.org/abs/2209.06794), by Xi Chen, Xiao Wang, Soravit Changpinyo, *wow so many middle authors*, Anelia Angelova, Xiaohua Zhai, Neil Houlsby, Radu Soricut.

|

| 93 |

+

- (partial) [PaLI-3 Vision Language Models: Smaller, Faster, Stronger](https://arxiv.org/abs/2310.09199), by Xi Chen, Xiao Wang, Lucas Beyer, Alexander Kolesnikov, Jialin Wu, Paul Voigtlaender, Basil Mustafa, Sebastian Goodman, Ibrahim Alabdulmohsin, Piotr Padlewski, Daniel Salz, Xi Xiong, Daniel Vlasic, Filip Pavetic, Keran Rong, Tianli Yu, Daniel Keysers, Xiaohua Zhai, Radu Soricut.

|

| 94 |

+

- [LocCa](https://arxiv.org/abs/2403.19596), by

|

| 95 |

+

Bo Wan, Michael Tschannen, Yongqin Xian, Filip Pavetic, Ibrahim Alabdulmohsin, Xiao Wang, André Susano Pinto, Andreas Steiner, Lucas Beyer, Xiaohua Zhai.

|

| 96 |

+

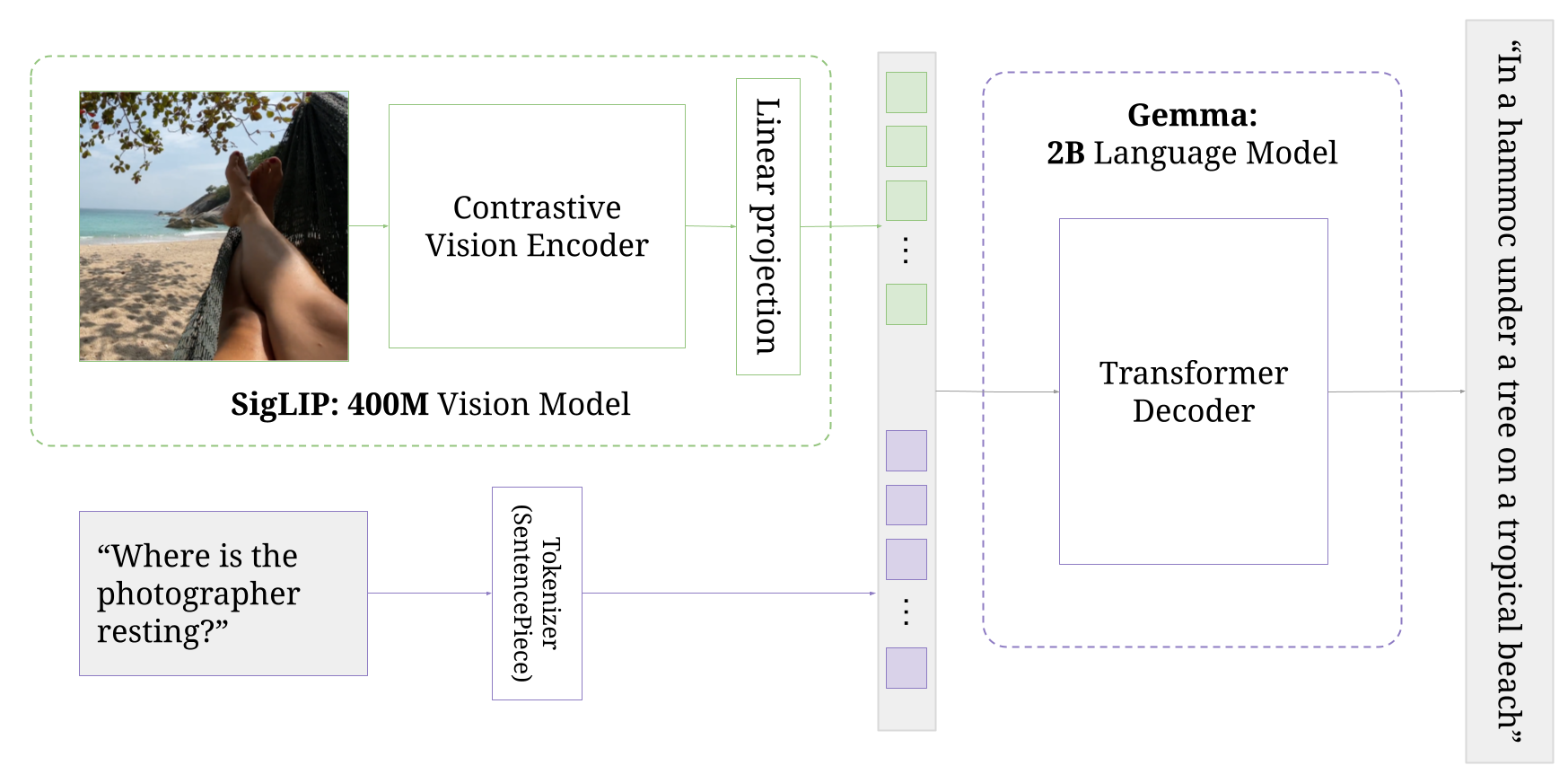

- [PaliGemma](https://arxiv.org/abs/2407.07726), by *wow many authors*.\

|

| 97 |

+

Resources: [readme](big_vision/configs/proj/paligemma/README.md),

|

| 98 |

+

[model](big_vision/models/proj/paligemma/paligemma.py),

|

| 99 |

+

[transfer configs](big_vision/configs/proj/paligemma/transfers),

|

| 100 |

+

[datasets](big_vision/datasets),

|

| 101 |

+

[CountBenchQA](big_vision/datasets/countbenchqa/data/countbench_paired_questions.json).

|

| 102 |

+

|

| 103 |

+

### Training

|

| 104 |

+

|

| 105 |

+

- [Knowledge distillation: A good teacher is patient and consistent](https://arxiv.org/abs/2106.05237), by

|

| 106 |

+

Lucas Beyer*, Xiaohua Zhai*, Amélie Royer*, Larisa Markeeva*, Rohan Anil,

|

| 107 |

+

and Alexander Kolesnikov*\

|

| 108 |

+

Resources: [README](big_vision/configs/proj/distill/README.md), [trainer](big_vision/trainers/proj/distill/distill.py), [colab](https://colab.research.google.com/drive/1nMykzUzsfQ_uAxfj3k35DYsATnG_knPl?usp=sharing).

|

| 109 |

+

- [Sharpness-Aware Minimization for Efficiently Improving Generalization](https://arxiv.org/abs/2010.01412), by

|

| 110 |

+

Pierre Foret, Ariel Kleiner, Hossein Mobahi, Behnam Neyshabur

|

| 111 |

+

- [Surrogate Gap Minimization Improves Sharpness-Aware Training](https://arxiv.org/abs/2203.08065), by Juntang Zhuang, Boqing Gong, Liangzhe Yuan, Yin Cui, Hartwig Adam, Nicha Dvornek, Sekhar Tatikonda, James Duncan and Ting Liu \

|

| 112 |

+

Resources: [trainer](big_vision/trainers/proj/gsam/gsam.py), [config](big_vision/configs/proj/gsam/vit_i1k_gsam_no_aug.py), [reproduced results](https://github.com/google-research/big_vision/pull/8#pullrequestreview-1078557411).

|

| 113 |

+

- [Tuning computer vision models with task rewards](https://arxiv.org/abs/2302.08242), by

|

| 114 |

+

André Susano Pinto*, Alexander Kolesnikov*, Yuge Shi, Lucas Beyer, Xiaohua Zhai.

|

| 115 |

+

- (partial) [VeLO: Training Versatile Learned Optimizers by Scaling Up](https://arxiv.org/abs/2211.09760) by

|

| 116 |

+

Luke Metz, James Harrison, C. Daniel Freeman, Amil Merchant, Lucas Beyer, James Bradbury, Naman Agrawal, Ben Poole, Igor Mordatch, Adam Roberts, Jascha Sohl-Dickstein.

|

| 117 |

+

|

| 118 |

+

### Misc

|

| 119 |

+

|

| 120 |

+

- [Are we done with ImageNet?](https://arxiv.org/abs/2006.07159), by

|

| 121 |

+

Lucas Beyer*, Olivier J. Hénaff*, Alexander Kolesnikov*, Xiaohua Zhai*, Aäron van den Oord*.

|

| 122 |

+

- [No Filter: Cultural and Socioeconomic Diversity in Contrastive Vision-Language Models](https://arxiv.org/abs/2405.13777), by

|

| 123 |

+

Angéline Pouget, Lucas Beyer, Emanuele Bugliarello, Xiao Wang, Andreas Peter Steiner, Xiaohua Zhai, Ibrahim Alabdulmohsin.

|

| 124 |

+

|

| 125 |

+

# Codebase high-level organization and principles in a nutshell

|

| 126 |

+

|

| 127 |

+

The main entry point is a trainer module, which typically does all the

|

| 128 |

+

boilerplate related to creating a model and an optimizer, loading the data,

|

| 129 |

+

checkpointing and training/evaluating the model inside a loop. We provide the

|

| 130 |

+

canonical trainer `train.py` in the root folder. Normally, individual projects

|

| 131 |

+

within `big_vision` fork and customize this trainer.

|

| 132 |

+

|

| 133 |

+

All models, evaluators and preprocessing operations live in the corresponding

|

| 134 |

+

subdirectories and can often be reused between different projects. We encourage

|

| 135 |

+

compatible APIs within these directories to facilitate reusability, but it is

|

| 136 |

+

not strictly enforced, as individual projects may need to introduce their custom

|

| 137 |

+

APIs.

|

| 138 |

+

|

| 139 |

+

We have a powerful configuration system, with the configs living in the

|

| 140 |

+

`configs/` directory. Custom trainers and modules can directly extend/modify

|

| 141 |

+

the configuration options.

|

| 142 |

+

|

| 143 |

+

Project-specific code resides in the `.../proj/...` namespace. It is not always

|

| 144 |

+

possible to keep project-specific code in sync with the core `big_vision` libraries.

|

| 145 |

+

Below we provide the [last known commit](#project-specific-commits)

|

| 146 |

+

for each project where the project code is expected to work.

|

| 147 |

+

|

| 148 |

+

Training jobs are robust to interruptions and will resume seamlessly from the

|

| 149 |

+

last saved checkpoint (assuming a user provides the correct `--workdir` path).

|

| 150 |

+

|

| 151 |

+

Each configuration file contains a comment at the top with a `COMMAND` snippet

|

| 152 |

+

to run it, and some hint of expected runtime and results. See below for more

|

| 153 |

+

details, but generally speaking, running on a GPU machine involves calling

|

| 154 |

+

`python -m COMMAND` while running on TPUs, including multi-host, involves

|

| 155 |

+

|

| 156 |

+

```

|

| 157 |

+

gcloud compute tpus tpu-vm ssh $NAME --zone=$ZONE --worker=all \

|

| 158 |

+

--command "bash big_vision/run_tpu.sh COMMAND"

|

| 159 |

+

```
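As a rough illustration of how the TPU invocation wraps a config's `COMMAND`, here is a minimal Python sketch that assembles the argument list. The helper name `tpu_ssh_argv` is hypothetical and not part of `big_vision`; it only uses the `gcloud` flags shown above, and it builds the command without running it.

```python
import shlex


def tpu_ssh_argv(name, zone, command, worker="all"):
    """Build the argv list that runs `command` via run_tpu.sh on TPU VM workers.

    Hypothetical helper for illustration; it only assembles the arguments.
    """
    remote = "bash big_vision/run_tpu.sh " + command
    return [
        "gcloud", "compute", "tpus", "tpu-vm", "ssh", name,
        f"--zone={zone}", f"--worker={worker}",
        "--command", remote,
    ]


argv = tpu_ssh_argv(
    "my-tpu-machine",
    "europe-west4-a",
    "big_vision.train --config big_vision/configs/bit_i1k.py",
)
# shlex.join quotes the remote command so the line can be pasted into a shell.
print(shlex.join(argv))
```

Passing the list to `subprocess.run(argv, check=True)` would execute it, assuming `gcloud` is installed and authenticated.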

|

| 160 |

+

|

| 161 |

+

See instructions below for more details on how to run `big_vision` code on a

|

| 162 |

+

GPU machine or Google Cloud TPU.

|

| 163 |

+

|

| 164 |

+

By default we write checkpoints and logfiles. The logfiles are a list of JSON

|

| 165 |

+

objects, and we provide a short and straightforward [example colab to read

|

| 166 |

+

and display the logs and checkpoints](https://colab.research.google.com/drive/1R_lvV542WUp8Q2y8sbyooZOGCplkn7KI?usp=sharing).
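For readers who prefer a local script to the colab, the following is a minimal sketch of reading such a logfile. It assumes one JSON object per line and that each record carries a `step` field; the file name in the usage comment is also an assumption and should be checked against your own workdir.

```python
import json


def read_metrics(path):
    """Return one dict per non-empty line of a JSON-lines logfile."""
    records = []
    with open(path) as f:
        for line in f:
            line = line.strip()
            if line:
                records.append(json.loads(line))
    return records


def series(records, key):
    """Extract (step, value) pairs for `key`, skipping records that lack it."""
    return [(r["step"], r[key]) for r in records if key in r and "step" in r]


# Usage (the path and metric name are assumptions, adjust to your workdir):
# records = read_metrics("workdirs/my_run/big_vision_metrics.txt")
# loss_curve = series(records, "training_loss")
```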

|

| 167 |

+

|

| 168 |

+

# Current and future contents

|

| 169 |

+

|

| 170 |

+

The first release contains the core part of pre-training, transferring, and

|

| 171 |

+

evaluating classification models at scale on Cloud TPU VMs.

|

| 172 |

+

|

| 173 |

+

We have since added the following key features and projects:

|

| 174 |

+

- Contrastive Image-Text model training and evaluation as in LiT and CLIP.

|

| 175 |

+

- Patient and consistent distillation.

|

| 176 |

+

- Scaling ViT.

|

| 177 |

+

- MLP-Mixer.

|

| 178 |

+

- UViM.

|

| 179 |

+

|

| 180 |

+

Features and projects we plan to release in the near future, in no particular

|

| 181 |

+

order:

|

| 182 |

+

- ImageNet-21k in TFDS.

|

| 183 |

+

- Loading misc public models used in our publications (NFNet, MoCov3, DINO).

|

| 184 |

+

- Memory-efficient Polyak-averaging implementation.

|

| 185 |

+

- Advanced JAX compute and memory profiling. We are using internal tools for

|

| 186 |

+

this, but may eventually add support for the publicly available ones.

|

| 187 |

+

|

| 188 |

+

We will continue releasing code of our future publications developed within

|

| 189 |

+

`big_vision` here.

|

| 190 |

+

|

| 191 |

+

### Non-content

|

| 192 |

+

|

| 193 |

+

The following exist in the internal variant of this codebase, and there is no

|

| 194 |

+

plan for their release:

|

| 195 |

+

- Regular regression tests for both quality and speed. They rely heavily on

|

| 196 |

+

internal infrastructure.

|

| 197 |

+

- Advanced logging, monitoring, and plotting of experiments. This also relies

|

| 198 |

+

heavily on internal infrastructure. However, we are open to ideas on this

|

| 199 |

+

and may add some in the future, especially if implemented in a

|

| 200 |

+

self-contained manner.

|

| 201 |

+

- Not yet published, ongoing research projects.

|

| 202 |

+

|

| 203 |

+

|

| 204 |

+

# GPU Setup

|

| 205 |

+

|

| 206 |

+

We first discuss how to set up and run `big_vision` on a (local) GPU machine,

|

| 207 |

+

and then discuss the setup for Cloud TPUs. Note that the data preparation step for

|

| 208 |

+

the (local) GPU setup can be largely reused for the Cloud TPU setup. While the

|

| 209 |

+

instructions skip this for brevity, we highly recommend using a

|

| 210 |

+

[virtual environment](https://docs.python.org/3/library/venv.html) when

|

| 211 |

+

installing python dependencies.

|

| 212 |

+

|

| 213 |

+

## Setting up python packages

|

| 214 |

+

|

| 215 |

+

The first step is to check out `big_vision` and install the relevant Python

|

| 216 |

+

dependencies:

|

| 217 |

+

|

| 218 |

+

```

|

| 219 |

+

git clone https://github.com/google-research/big_vision

|

| 220 |

+

cd big_vision/

|

| 221 |

+

pip3 install --upgrade pip

|

| 222 |

+

pip3 install -r big_vision/requirements.txt

|

| 223 |

+

```

|

| 224 |

+

|

| 225 |

+

The latest version of the `jax` library can be installed with

|

| 226 |

+

|

| 227 |

+

```

|

| 228 |

+

pip3 install --upgrade "jax[cuda]" -f https://storage.googleapis.com/jax-releases/jax_cuda_releases.html

|

| 229 |

+

```

|

| 230 |

+

|

| 231 |

+

You may need a different `jax` package, depending on CUDA and cuDNN libraries

|

| 232 |

+

installed on your machine. Please consult

|

| 233 |

+

[official jax documentation](https://github.com/google/jax#pip-installation-gpu-cuda)

|

| 234 |

+

for more information.

|

| 235 |

+

|

| 236 |

+

## Preparing tfds data

|

| 237 |

+

|

| 238 |

+

For unified and reproducible access to standard datasets we opted to use the

|

| 239 |

+

`tensorflow_datasets` (`tfds`) library. It requires each dataset to be

|

| 240 |

+

downloaded, preprocessed and then to be stored on a hard drive (or, if you use

|

| 241 |

+

"Google Cloud", preferably stored in a "GCP bucket").

|

| 242 |

+

|

| 243 |

+

Many datasets can be downloaded and preprocessed automatically when used

|

| 244 |

+

for the first time. Nevertheless, we intentionally disable this feature and

|

| 245 |

+

recommend doing the dataset preparation step separately, ahead of the first run. It

|

| 246 |

+

will make debugging easier if problems arise and some datasets, like

|

| 247 |

+

`imagenet2012`, require manually downloaded data.

|

| 248 |

+

|

| 249 |

+

Most of the datasets, e.g. `cifar100`, `oxford_iiit_pet` or `imagenet_v2`

|

| 250 |

+

can be fully automatically downloaded and prepared by running

|

| 251 |

+

|

| 252 |

+

```

|

| 253 |

+

cd big_vision/

|

| 254 |

+

python3 -m big_vision.tools.download_tfds_datasets cifar100 oxford_iiit_pet imagenet_v2

|

| 255 |

+

```

|

| 256 |

+

|

| 257 |

+

A full list of datasets is available at [this link](https://www.tensorflow.org/datasets/catalog/overview#all_datasets).

|

| 258 |

+

|

| 259 |

+

Some datasets, like `imagenet2012` or `imagenet2012_real`, require the data to

|

| 260 |

+

be downloaded manually and placed into `$TFDS_DATA_DIR/downloads/manual/`,

|

| 261 |

+

which defaults to `~/tensorflow_datasets/downloads/manual/`. For example, for

|

| 262 |

+

`imagenet2012` and `imagenet2012_real` one needs to place the official

|

| 263 |

+

`ILSVRC2012_img_train.tar` and `ILSVRC2012_img_val.tar` files in that directory

|

| 264 |

+

and then run

|

| 265 |

+

`python3 -m big_vision.tools.download_tfds_datasets imagenet2012 imagenet2012_real`

|

| 266 |

+

(which may take ~1 hour).
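Before starting the roughly hour-long preparation, it can help to confirm the archives are where `tfds` expects them. The sketch below is a hypothetical helper, not part of `big_vision`; it follows the directory layout and file names described above and falls back to `~/tensorflow_datasets` when `TFDS_DATA_DIR` is unset.

```python
import os

# Archive names taken from the paragraph above.
REQUIRED = ("ILSVRC2012_img_train.tar", "ILSVRC2012_img_val.tar")


def missing_manual_files(data_dir=None, required=REQUIRED):
    """Return the required archives that are absent from downloads/manual/."""
    data_dir = data_dir or os.environ.get("TFDS_DATA_DIR", "~/tensorflow_datasets")
    manual_dir = os.path.join(os.path.expanduser(data_dir), "downloads", "manual")
    return [
        name for name in required
        if not os.path.isfile(os.path.join(manual_dir, name))
    ]


missing = missing_manual_files()
if missing:
    print("Missing manual downloads:", ", ".join(missing))
```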

|

| 267 |

+

|

| 268 |

+

If you use `Google Cloud`, and TPUs in particular, you can then upload

|

| 269 |

+

the preprocessed data (stored in `$TFDS_DATA_DIR`) to

|

| 270 |

+

a "Google Cloud Bucket" and use the bucket on any of your (TPU) virtual

|

| 271 |

+

machines to access the data.

|

| 272 |

+

|

| 273 |

+

## Running on a GPU machine

|

| 274 |

+

|

| 275 |

+

Finally, after installing all python dependencies and preparing `tfds` data,

|

| 276 |

+

the user can run the job using the config of their choice, e.g. to train the `ViT-S/16`

|

| 277 |

+

model on ImageNet data, one should run the following command:

|

| 278 |

+

|

| 279 |

+

```

|

| 280 |

+

python3 -m big_vision.train --config big_vision/configs/vit_s16_i1k.py --workdir workdirs/`date '+%m-%d_%H%M'`

|

| 281 |

+

```

|

| 282 |

+

|

| 283 |

+

or to train MLP-Mixer-B/16, run (note the `gpu8` config param that reduces the default batch size and epoch count):

|

| 284 |

+

|

| 285 |

+

```

|

| 286 |

+

python3 -m big_vision.train --config big_vision/configs/mlp_mixer_i1k.py:gpu8 --workdir workdirs/`date '+%m-%d_%H%M'`

|

| 287 |

+

```
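The text after the colon in `--config file.py:gpu8` is a free-form string that the config file receives as the argument to its `get_config` function. The sketch below illustrates one plausible way such a string could be turned into options. It is a simplified stand-in written for this README, not the parser `big_vision` actually uses, and the function name `parse_config_arg` is hypothetical. It assumes comma-separated `key=value` pairs, with a bare key such as `gpu8` meaning `True`.

```python
def parse_config_arg(arg, **defaults):
    """Parse 'k1=v1,k2,k3=v3' into a dict, casting values to the default's type.

    Illustrative only: keys without '=' become True, and keys without a
    default keep their raw string value.
    """
    parsed = dict(defaults)
    if not arg:
        return parsed
    for item in arg.split(","):
        key, sep, raw = item.partition("=")
        if not sep:
            parsed[key] = True  # bare flag, e.g. "gpu8"
            continue
        default = defaults.get(key)
        if isinstance(default, bool):
            # Check bool before other types: bool("False") would be True.
            parsed[key] = raw.lower() in ("1", "true", "yes")
        elif default is not None:
            parsed[key] = type(default)(raw)
        else:
            parsed[key] = raw
    return parsed


print(parse_config_arg("gpu8", gpu8=False, batch_size=4096))
print(parse_config_arg("model=vit-i21k-augreg-b/32,dataset=cifar10", fsdp=False))
```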

|

| 288 |

+

|

| 289 |

+

# Cloud TPU VM setup

|

| 290 |

+

|

| 291 |

+

## Create TPU VMs

|

| 292 |

+

|

| 293 |

+

To create a single machine with 8 TPU cores, follow this Cloud TPU JAX

|

| 294 |

+

document:

|

| 295 |

+

https://cloud.google.com/tpu/docs/run-calculation-jax

|

| 296 |

+

|

| 297 |

+

To support large-scale vision research, more cores with multiple hosts are

|

| 298 |

+

recommended. Below we provide instructions on how to do it.

|

| 299 |

+

|

| 300 |

+

First, create some useful variables, which will be reused:

|

| 301 |

+

|

| 302 |

+

```

|

| 303 |

+

export NAME=<a name of the TPU deployment, e.g. my-tpu-machine>

|

| 304 |

+

export ZONE=<GCP geographical zone, e.g. europe-west4-a>

|

| 305 |

+

export GS_BUCKET_NAME=<Name of the storage bucket, e.g. my_bucket>

|

| 306 |

+

```

|

| 307 |

+

|

| 308 |

+

The following command line will create TPU VMs with 32 cores,

|

| 309 |

+

4 hosts.

|

| 310 |

+

|

| 311 |

+

```

|

| 312 |

+

gcloud compute tpus tpu-vm create $NAME --zone $ZONE --accelerator-type v3-32 --version tpu-ubuntu2204-base

|

| 313 |

+

```
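The relation between the accelerator type and the host count in this example is simple arithmetic: `v3-32` means 32 cores, and at 8 cores per host that gives 4 hosts. The hypothetical helper below sketches it. It assumes the `<generation>-<cores>` naming and 8 cores per host that match the `v3` examples in this README; other TPU generations may count differently, so treat the result as a sanity check only.

```python
def tpu_topology(accelerator_type, cores_per_host=8):
    """Split e.g. 'v3-32' into generation, core count, and host count.

    Assumes the numeric suffix counts cores and every host has
    `cores_per_host` cores, as in the v3 examples of this README.
    """
    generation, cores_text = accelerator_type.rsplit("-", 1)
    cores = int(cores_text)
    if cores % cores_per_host:
        raise ValueError(f"{cores} cores is not a multiple of {cores_per_host}")
    return {
        "generation": generation,
        "cores": cores,
        "hosts": cores // cores_per_host,
    }


print(tpu_topology("v3-32"))
```

The host count matters in practice because `--worker=all` runs the command once per host.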

|

| 314 |

+

|

| 315 |

+

## Install `big_vision` on TPU VMs

|

| 316 |

+

|

| 317 |

+

Fetch the `big_vision` repository, copy it to all TPU VM hosts, and install

|

| 318 |

+

dependencies.

|

| 319 |

+

|

| 320 |

+

```

|

| 321 |

+

git clone https://github.com/google-research/big_vision

|

| 322 |

+

gcloud compute tpus tpu-vm scp --recurse big_vision/big_vision $NAME: --zone=$ZONE --worker=all

|

| 323 |

+

gcloud compute tpus tpu-vm ssh $NAME --zone=$ZONE --worker=all --command "bash big_vision/run_tpu.sh"

|

| 324 |

+

```

|

| 325 |

+

|

| 326 |

+

## Download and prepare TFDS datasets

|

| 327 |

+

|

| 328 |

+

We recommend preparing `tfds` data locally as described above and then uploading

|

| 329 |

+

the data to a `Google Cloud` bucket. However, if you prefer, the datasets which

|

| 330 |

+

do not require manual downloads can be prepared automatically using a TPU

|

| 331 |

+

machine as described below. Note that TPU machines have only 100 GB of disk

|

| 332 |

+

space, and multihost TPU slices do not allow for external disks to be attached

|

| 333 |

+

in a write mode, so the instructions below may not work for preparing large

|

| 334 |

+

datasets. As yet another alternative, we provide instructions

|

| 335 |

+

[on how to prepare `tfds` data on a CPU-only GCP machine](#preparing-tfds-data-on-a-standalone-gcp-cpu-machine).

|

| 336 |

+

|

| 337 |

+

Specifically, the seven TFDS datasets used during evaluations will be generated

|

| 338 |

+

under `~/tensorflow_datasets` on the TPU machine with this command:

|

| 339 |

+

|

| 340 |

+

```

|

| 341 |

+

gcloud compute tpus tpu-vm ssh $NAME --zone=$ZONE --worker=0 --command "TFDS_DATA_DIR=~/tensorflow_datasets bash big_vision/run_tpu.sh big_vision.tools.download_tfds_datasets cifar10 cifar100 oxford_iiit_pet oxford_flowers102 cars196 dtd uc_merced"

|

| 342 |

+

```

|

| 343 |

+

|

| 344 |

+

You can then copy the datasets to a GS bucket to make them accessible to all TPU workers.

|

| 345 |

+

|

| 346 |

+

```

|

| 347 |

+

gcloud compute tpus tpu-vm ssh $NAME --zone=$ZONE --worker=0 --command "rm -r ~/tensorflow_datasets/downloads && gsutil cp -r ~/tensorflow_datasets gs://$GS_BUCKET_NAME"

|

| 348 |

+

```

|

| 349 |

+

|

| 350 |

+

If you want to integrate other public or custom datasets, e.g. `imagenet2012`,

|

| 351 |

+

please follow [the official guideline](https://www.tensorflow.org/datasets/catalog/overview).

|

| 352 |

+

|

| 353 |

+

## Pre-trained models

|

| 354 |

+

|

| 355 |

+

For the full list of pre-trained models check out the `load` function defined in

|

| 356 |

+

the same module as the model code. For an example config showing how to use these

|

| 357 |

+

models, see `configs/transfer.py`.

|

| 358 |

+

|

| 359 |

+

## Run the transfer script on TPU VMs

|

| 360 |

+

|

| 361 |

+

The following command line fine-tunes a pre-trained `vit-i21k-augreg-b/32` model

|

| 362 |

+

on the `cifar10` dataset.

|

| 363 |

+

|

| 364 |

+

```

|

| 365 |

+

gcloud compute tpus tpu-vm ssh $NAME --zone=$ZONE --worker=all --command "TFDS_DATA_DIR=gs://$GS_BUCKET_NAME/tensorflow_datasets bash big_vision/run_tpu.sh big_vision.train --config big_vision/configs/transfer.py:model=vit-i21k-augreg-b/32,dataset=cifar10,crop=resmall_crop --workdir gs://$GS_BUCKET_NAME/big_vision/workdir/`date '+%m-%d_%H%M'` --config.lr=0.03"

|

| 366 |

+

```

|

| 367 |

+

|

| 368 |

+

## Run the train script on TPU VMs

|

| 369 |

+

|

| 370 |

+

To train your own big_vision models on a large dataset,

|

| 371 |

+

e.g. `imagenet2012` ([prepare the TFDS dataset](https://www.tensorflow.org/datasets/catalog/imagenet2012)),

|

| 372 |

+

run the following command line.

|

| 373 |

+

|

| 374 |

+

```

|

| 375 |

+

gcloud compute tpus tpu-vm ssh $NAME --zone=$ZONE --worker=all --command "TFDS_DATA_DIR=gs://$GS_BUCKET_NAME/tensorflow_datasets bash big_vision/run_tpu.sh big_vision.train --config big_vision/configs/bit_i1k.py --workdir gs://$GS_BUCKET_NAME/big_vision/workdir/`date '+%m-%d_%H%M'`"

|

| 376 |

+

```

|

| 377 |

+

|

| 378 |

+

## FSDP training.

|

| 379 |

+

|

| 380 |

+

`big_vision` supports flexible parameter and model sharding strategies.

|

| 381 |

+

Currently, we support a popular FSDP sharding via a simple config change, see [this config example](big_vision/configs/transfer.py).

|

| 382 |

+

For example, to run FSDP finetuning of a pretrained ViT-L model, run the following command (possibly adjusting the batch size depending on your hardware):

|

| 383 |

+

|

| 384 |

+

```

|

| 385 |

+

gcloud compute tpus tpu-vm ssh $NAME --zone=$ZONE --worker=all --command "TFDS_DATA_DIR=gs://$GS_BUCKET_NAME/tensorflow_datasets bash big_vision/run_tpu.sh big_vision.train --config big_vision/configs/transfer.py:model=vit-i21k-augreg-l/16,dataset=oxford_iiit_pet,crop=resmall_crop,fsdp=True,batch_size=256 --workdir gs://$GS_BUCKET_NAME/big_vision/workdir/`date '+%m-%d_%H%M'` --config.lr=0.03"

|

| 386 |

+

```

|

| 387 |

+

|

| 388 |

+

## Image-text training with SigLIP.

|

| 389 |

+

|

| 390 |

+

A minimal example that uses public `coco` captions data:

|

| 391 |

+

|

| 392 |

+

```

|

| 393 |

+