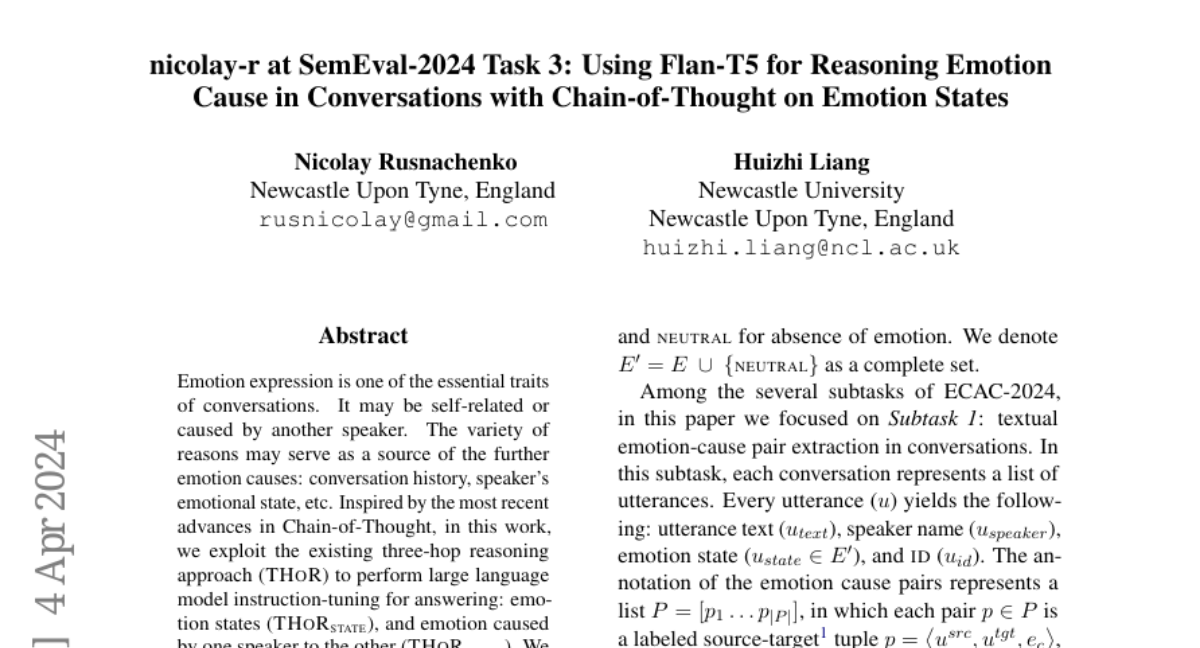

📢 For those interested in quickly extracting emotion causes in dialogues, below is a notebook that uses the pre-trained Flan-T5 model on the FRIENDS dataset, powered by the bulk-chain framework:

https://gist.github.com/nicolay-r/c8cfe7df1bef0c14f77760fa78ae5b5c

Why might it be interesting to check? The provided notebook supports batching mode for quick inference. In the case of Flan-T5-base, that would be the quickest LLM-based option.
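To give a rough idea of what batched inference looks like, here is a minimal sketch that calls the model through plain Hugging Face `transformers` rather than bulk-chain, so it is not the notebook's actual code. The helper names `chunked` and `infer_batched`, the batch size, and the prompt wording are assumptions made for illustration; the prompt format the model really expects is described in the model card.

```python
from typing import Iterator, List


def chunked(items: List[str], batch_size: int) -> Iterator[List[str]]:
    """Yield consecutive slices of `items`, each with at most `batch_size` elements."""
    if batch_size < 1:
        raise ValueError("batch_size must be a positive integer")
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def infer_batched(
    prompts: List[str],
    model_name: str = "nicolay-r/flan-t5-emotion-cause-thor-base",
    batch_size: int = 4,          # assumed value, tune for your hardware
    max_new_tokens: int = 32,     # assumed value
) -> List[str]:
    """Run the seq2seq model over `prompts` one batch at a time (hypothetical helper)."""
    # Imported lazily so the batching helper above can be used without these packages.
    import torch
    from transformers import AutoModelForSeq2SeqLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_name)
    model = AutoModelForSeq2SeqLM.from_pretrained(model_name)
    model.eval()

    answers: List[str] = []
    for batch in chunked(prompts, batch_size):
        # Pad to the longest prompt in the batch so the inputs form one tensor.
        inputs = tokenizer(batch, return_tensors="pt", padding=True, truncation=True)
        with torch.no_grad():
            output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
        answers.extend(tokenizer.batch_decode(output_ids, skip_special_tokens=True))
    return answers
```

Calling `infer_batched(list_of_prompts)` downloads the model on first use and returns one decoded string per prompt, in input order. Grouping prompts this way is what lets a single `generate` call serve several dialogue utterances at once.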

📊 Evaluation results are available in the model card:

nicolay-r/flan-t5-emotion-cause-thor-base