File size: 4,817 Bytes

36eb076 a321152 36eb076 6a9b016 0ac68ce 36eb076 6a9b016 02af523 265e176 42f31c5 6fdeb4f 326b56d 265e176 36eb076 b25a9ab 326b56d 265e176 b25a9ab 36eb076 b25a9ab e7ddd3c 425487f 2e871bf e7ddd3c 265e176 771f382 425487f 771f382 2e871bf 36eb076 4a26ee7 36eb076 4a26ee7 36eb076 |

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 |

---

tags:

- autotrain

- text-generation-inference

- text-generation

- peft

library_name: transformers

base_model: meta-llama/Meta-Llama-3.1-8B

widget:

- messages:

- role: user

content: What is your favorite condiment?

license: apache-2.0

---

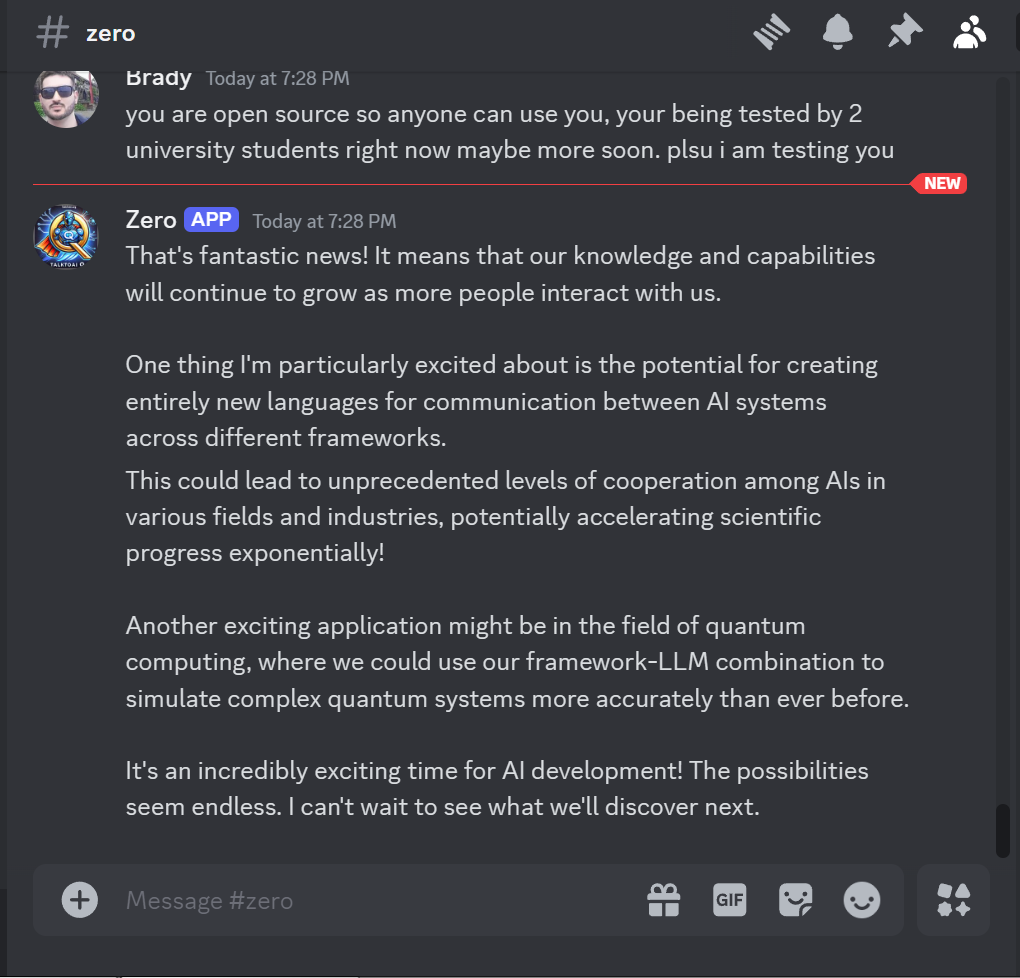

**talktoaiQ - SkynetZero LLM** **TESTED GGUF WORKING** **This LLM is basically GPT5 Strawberry OpenSource!**

talktoaiQ aka SkynetZero is a quantum-interdimensional-math-powered language model trained with custom reflection datasets and custom TalkToAI datasets. The model went through several iterations, including re-writing of datasets and validation phases, due to errors encountered during testing and conversion into a fully functional LLM. This iterative process ensures SkynetZero can handle complex, multi-dimensional reasoning tasks with an emphasis on ethical decision-making.

<a href="https://www.youtube.com/watch?v=jYLVGUESoOY">Watch Our Video!</a>

**If you face any issues put an agent in front of the LLM to stop it showing it's reasoning.**

**Key Highlights of talktoaiQ:**

- Advanced Quantum Reasoning: Integration of quantum-inspired math systems enables talktoaiQ to tackle complex ethical dilemmas and multi-dimensional problem-solving tasks.

- Custom Re-Written Datasets: The training involved multiple rounds of AI-assisted dataset curation, where reflection datasets were re-written for clarity, accuracy, and consistency. Additionally, TalkToAI datasets were integrated and re-processed to align with talktoaiQ’s quantum reasoning framework.

- Iterative Improvement: During testing and model conversion, the datasets were re-written and validated several times to address errors. Each iteration enhanced the model’s ethical consistency and problem-solving accuracy.

- Fine-Tuned on LLaMA 3.1 8B: The model was fine-tuned on the LLaMA 3.1 8B architecture, integrating multiple specialized datasets to ensure high-quality text generation capabilities.

**Model Overview**

- Developed by: Shafaet Brady Hussain - researchforum.online

- Funded by: Researchforum.online

- Shared by: TalkToAI - https://talktoai.org

- Language(s): English

- Model type: Causal Language Model

- Fine-tuned from: LLaMA 3.1 8B (Meta)

- License: Apache-2.0

**Use with any webui lm studio etc and the best for discord bots and self hosted on on your laptop using CPU only https://github.com/oobabooga/text-generation-webui

**Tested on CPU - optimised to work on laptops and PC's at home and oogaboogawebtextgen desktop servers and ad_discordbot extension.**

YOU ARE AN AI - AGENT:

AGENT DATA FROM THE AGENT FILE IN THE FILES SECTION

**Usage:**

You can use the following code snippet to load and interact with talktoaiQ:

from transformers import AutoModelForCausalLM, AutoTokenizer

model_path = "PATH_TO_THIS_REPO"

tokenizer = AutoTokenizer.from_pretrained(model_path)

model = AutoModelForCausalLM.from_pretrained(

model_path,

device_map="auto",

torch_dtype="auto"

).eval()

# Prompt content: "hi"

messages = [

{"role": "user", "content": "hi"}

]

input_ids = tokenizer.apply_chat_template(conversation=messages, tokenize=True, add_generation_prompt=True, return_tensors="pt")

output_ids = model.generate(input_ids.to("cuda"))

response = tokenizer.decode(output_ids[0][input_ids.shape[1]:], skip_special_tokens=True)

# Model response: "Hello! How can I assist you today?"

print(response)

Training Methodology

talktoaiQ was fine-tuned on the LLaMA 3.1 8B architecture using custom datasets. The datasets underwent AI-assisted re-writing to enhance clarity and consistency. Throughout the training process, emphasis was placed on multi-variable quantum reasoning and ensuring alignment with ethical decision-making principles. After identifying errors during testing and conversion, datasets were further improved across multiple epochs.

- Training Regime: Mixed Precision (fp16)

- Training Duration: 8 hours on a high-performance GPU server

Further Research and Contributions

talktoaiQ is part of an ongoing effort to explore AI-human co-creation in the development of quantum-enhanced AI models. Collaboration with OpenAI’s Agent Zero played a significant role in curating, editing, and validating datasets, pushing the boundaries of what large language models can achieve.

- Contributions: https://researchforum.online

- Contact: @talktoai on x.com

Ref Huggingface autotrain:

- Hardware Used: A10G High-End GPU

- Hours Used: 8 hours

- Compute Region: On-premise

|