Upload 10 files

- PPE_Safety_Y5.pt +3 -0

- README.md +5 -5

- app.py +70 -0

- class1_150_jpg.rf.5995dce34d38deb9eb0b6e36cae78f17.jpg +0 -0

- image_0.jpg +0 -0

- image_1.jpg +0 -0

- image_2.jpg +0 -0

- image_53_jpg.rf.3446e366b5d4d905a32e1aedc8fe87de.jpg +0 -0

- image_55_jpg.rf.27ae4341a9b9647d73a8929ff7a22369.jpg +0 -0

- requirements.txt +5 -0

PPE_Safety_Y5.pt

ADDED

@@ -0,0 +1,3 @@
+version https://git-lfs.github.com/spec/v1
+oid sha256:f70aaac433dc779c82f31ad9796b2fdf2df7ff415d5005e8d0b66cc366664e67
+size 42282601
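The three added lines are a Git LFS pointer, not the model weights themselves: the pointer records a spec version, a SHA-256 object ID, and the byte size of the real file. As a minimal sketch of how such a pointer can be read, the snippet below parses the pointer text shown in this diff. The helper name `parse_lfs_pointer` and the returned dictionary keys are hypothetical and chosen only for illustration.

```python
def parse_lfs_pointer(text: str) -> dict:
    """Parse the space-separated key/value lines of a Git LFS pointer file."""
    fields = {}
    for line in text.strip().splitlines():
        # Each pointer line is "<key> <value>"
        key, _, value = line.partition(" ")
        fields[key] = value
    # The oid field has the form "<hash algorithm>:<hex digest>"
    algo, _, digest = fields["oid"].partition(":")
    return {
        "version": fields["version"],
        "hash_algo": algo,
        "digest": digest,
        "size": int(fields["size"]),
    }


# Pointer content copied from the PPE_Safety_Y5.pt diff
POINTER = """version https://git-lfs.github.com/spec/v1
oid sha256:f70aaac433dc779c82f31ad9796b2fdf2df7ff415d5005e8d0b66cc366664e67
size 42282601
"""

info = parse_lfs_pointer(POINTER)
print(info["hash_algo"], info["size"])
```

The `size` field is in bytes, so the tracked weights file is roughly 42 MB.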

README.md

CHANGED

@@ -1,10 +1,10 @@
 ---
-title:
-emoji:
+title: Personal Protective Equipment Violation
+emoji: 🚀
 colorFrom: yellow
-colorTo:
-sdk:
-sdk_version:
+colorTo: green
+sdk: gradio
+sdk_version: 3.18.0
 app_file: app.py
 pinned: false
 ---

app.py

ADDED

|

@@ -0,0 +1,70 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

from gradio.outputs import Label

|

| 3 |

+

import cv2

|

| 4 |

+

import requests

|

| 5 |

+

import os

|

| 6 |

+

import numpy as np

|

| 7 |

+

|

| 8 |

+

from ultralytics import YOLO

|

| 9 |

+

import yolov5

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

# Function for inference

|

| 13 |

+

def yolov5_inference(

|

| 14 |

+

image: gr.inputs.Image = None,

|

| 15 |

+

model_path: gr.inputs.Dropdown = None,

|

| 16 |

+

image_size: gr.inputs.Slider = 640,

|

| 17 |

+

conf_threshold: gr.inputs.Slider = 0.25,

|

| 18 |

+

iou_threshold: gr.inputs.Slider = 0.45 ):

|

| 19 |

+

|

| 20 |

+

# Loading Yolo V5 model

|

| 21 |

+

model = yolov5.load(model_path, device="cpu")

|

| 22 |

+

|

| 23 |

+

# Setting model configuration

|

| 24 |

+

model.conf = conf_threshold

|

| 25 |

+

model.iou = iou_threshold

|

| 26 |

+

|

| 27 |

+

# Inference

|

| 28 |

+

results = model([image], size=image_size)

|

| 29 |

+

|

| 30 |

+

# Cropping the predictions

|

| 31 |

+

crops = results.crop(save=False)

|

| 32 |

+

img_crops = []

|

| 33 |

+

for i in range(len(crops)):

|

| 34 |

+

img_crops.append(crops[i]["im"][..., ::-1])

|

| 35 |

+

return results.render()[0] #, img_crops

|

| 36 |

+

|

| 37 |

+

|

| 38 |

+

# gradio Input

|

| 39 |

+

inputs = [

|

| 40 |

+

gr.inputs.Image(type="pil", label="Input Image"),

|

| 41 |

+

gr.inputs.Dropdown(["PPE_Safety_Y5.pt"], label="Model", default = 'PPE_Safety_Y5.pt'),

|

| 42 |

+

gr.inputs.Slider(minimum=320, maximum=1280, default=640, step=32, label="Image Size"),

|

| 43 |

+

gr.inputs.Slider(minimum=0.0, maximum=1.0, default=0.25, step=0.05, label="Confidence Threshold"),

|

| 44 |

+

gr.inputs.Slider(minimum=0.0, maximum=1.0, default=0.45, step=0.05, label="IOU Threshold"),

|

| 45 |

+

]

|

| 46 |

+

|

| 47 |

+

# gradio Output

|

| 48 |

+

outputs = gr.outputs.Image(type="filepath", label="Output Image")

|

| 49 |

+

# outputs_crops = gr.Gallery(label="Object crop")

|

| 50 |

+

|

| 51 |

+

title = "Identify violations of Personal Protective Equipment (PPE) protocols for improved safety"

|

| 52 |

+

|

| 53 |

+

# gradio examples: "Image", "Model", "Image Size", "Confidence Threshold", "IOU Threshold"

|

| 54 |

+

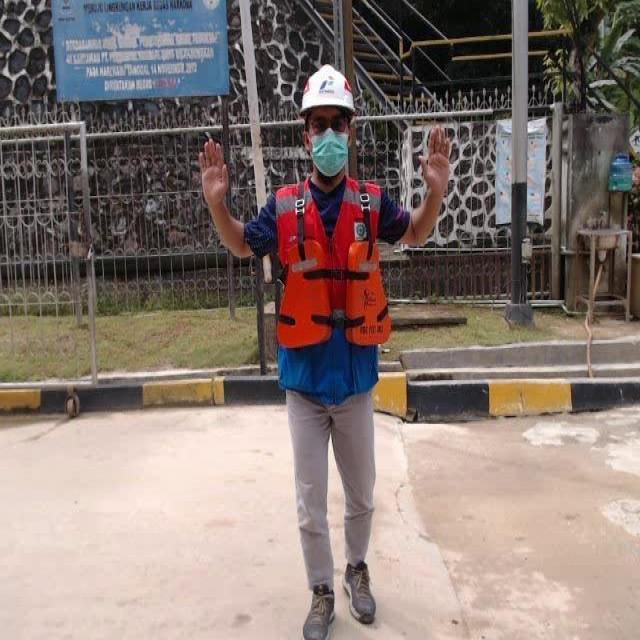

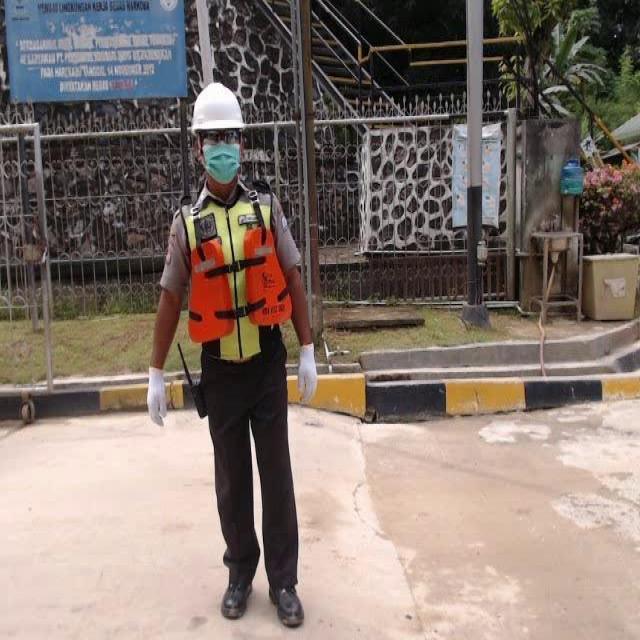

examples = [['image_1.jpg', 'PPE_Safety_Y5.pt', 640, 0.35, 0.45]

|

| 55 |

+

,['image_0.jpg', 'PPE_Safety_Y5.pt', 640, 0.35, 0.45]

|

| 56 |

+

,['image_2.jpg', 'PPE_Safety_Y5.pt', 640, 0.35, 0.45],

|

| 57 |

+

]

|

| 58 |

+

|

| 59 |

+

# gradio app launch

|

| 60 |

+

demo_app = gr.Interface(

|

| 61 |

+

fn=yolov5_inference,

|

| 62 |

+

inputs=inputs,

|

| 63 |

+

outputs=outputs, #[outputs,outputs_crops],

|

| 64 |

+

title=title,

|

| 65 |

+

examples=examples,

|

| 66 |

+

cache_examples=True,

|

| 67 |

+

live=True,

|

| 68 |

+

theme='huggingface',

|

| 69 |

+

)

|

| 70 |

+

demo_app.launch(debug=True, enable_queue=True, width=50, height=50)

|

class1_150_jpg.rf.5995dce34d38deb9eb0b6e36cae78f17.jpg

ADDED

image_0.jpg

ADDED

image_1.jpg

ADDED

image_2.jpg

ADDED

image_53_jpg.rf.3446e366b5d4d905a32e1aedc8fe87de.jpg

ADDED

image_55_jpg.rf.27ae4341a9b9647d73a8929ff7a22369.jpg

ADDED

requirements.txt

ADDED

@@ -0,0 +1,5 @@
+gradio==3.4.0
+opencv-python
+numpy<1.24
+ultralytics
+yolov5