Spaces:

Sleeping

Sleeping

Commit

•

957e2dc

1

Parent(s):

7ca4ec1

Voiceblock demo: Attempt 8

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .DS_Store +0 -0

- __pycache__/gradio.cpython-310.pyc +0 -0

- __pycache__/model.cpython-310.pyc +0 -0

- __pycache__/model.cpython-39.pyc +0 -0

- app.py +67 -0

- example.wav +0 -0

- requirements.txt +28 -0

- voicebox/.DS_Store +0 -0

- voicebox/LICENSE +0 -0

- voicebox/README.md +136 -0

- voicebox/cache/.gitkeep +0 -0

- voicebox/data/.gitkeep +0 -0

- voicebox/figures/demo_thumbnail.png +0 -0

- voicebox/figures/use_diagram_embeddings.png +0 -0

- voicebox/figures/vb_color_logo.png +0 -0

- voicebox/figures/voicebox_untargeted_conditioning_draft.png +0 -0

- voicebox/pretrained/denoiser/demucs/dns_48.pt +3 -0

- voicebox/pretrained/phoneme/causal_ppg_128_hidden_128_hop.pt +3 -0

- voicebox/pretrained/phoneme/causal_ppg_256_hidden.pt +3 -0

- voicebox/pretrained/phoneme/causal_ppg_256_hidden_256_hop.pt +3 -0

- voicebox/pretrained/phoneme/causal_ppg_256_hidden_512_hop.pt +3 -0

- voicebox/pretrained/phoneme/ppg_causal_small.pt +3 -0

- voicebox/pretrained/speaker/resemblyzer/resemblyzer.pt +3 -0

- voicebox/pretrained/speaker/resnetse34v2/resnetse34v2.pt +3 -0

- voicebox/pretrained/speaker/yvector/yvector.pt +3 -0

- voicebox/pretrained/universal/universal_final.pt +3 -0

- voicebox/pretrained/voicebox/voicebox_final.pt +3 -0

- voicebox/pretrained/voicebox/voicebox_final.yaml +20 -0

- voicebox/requirements.txt +28 -0

- voicebox/scripts/downloads/download_librispeech_eval.sh +25 -0

- voicebox/scripts/downloads/download_librispeech_train.sh +54 -0

- voicebox/scripts/downloads/download_rir_noise.sh +73 -0

- voicebox/scripts/downloads/download_voxceleb.py +189 -0

- voicebox/scripts/downloads/ff_rir.txt +132 -0

- voicebox/scripts/downloads/voxceleb1_file_parts.txt +5 -0

- voicebox/scripts/downloads/voxceleb1_files.txt +1 -0

- voicebox/scripts/downloads/voxceleb2_file_parts.txt +9 -0

- voicebox/scripts/downloads/voxceleb2_files.txt +1 -0

- voicebox/scripts/experiments/evaluate.py +915 -0

- voicebox/scripts/experiments/train.py +282 -0

- voicebox/scripts/experiments/train_phoneme_predictor.py +205 -0

- voicebox/scripts/streamer/benchmark_streamer.py +97 -0

- voicebox/scripts/streamer/enroll.py +105 -0

- voicebox/scripts/streamer/stream.py +135 -0

- voicebox/setup.py +20 -0

- voicebox/src.egg-info/PKG-INFO +148 -0

- voicebox/src.egg-info/SOURCES.txt +9 -0

- voicebox/src.egg-info/dependency_links.txt +1 -0

- voicebox/src.egg-info/top_level.txt +1 -0

- voicebox/src/__init__.py +0 -0

.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

__pycache__/gradio.cpython-310.pyc

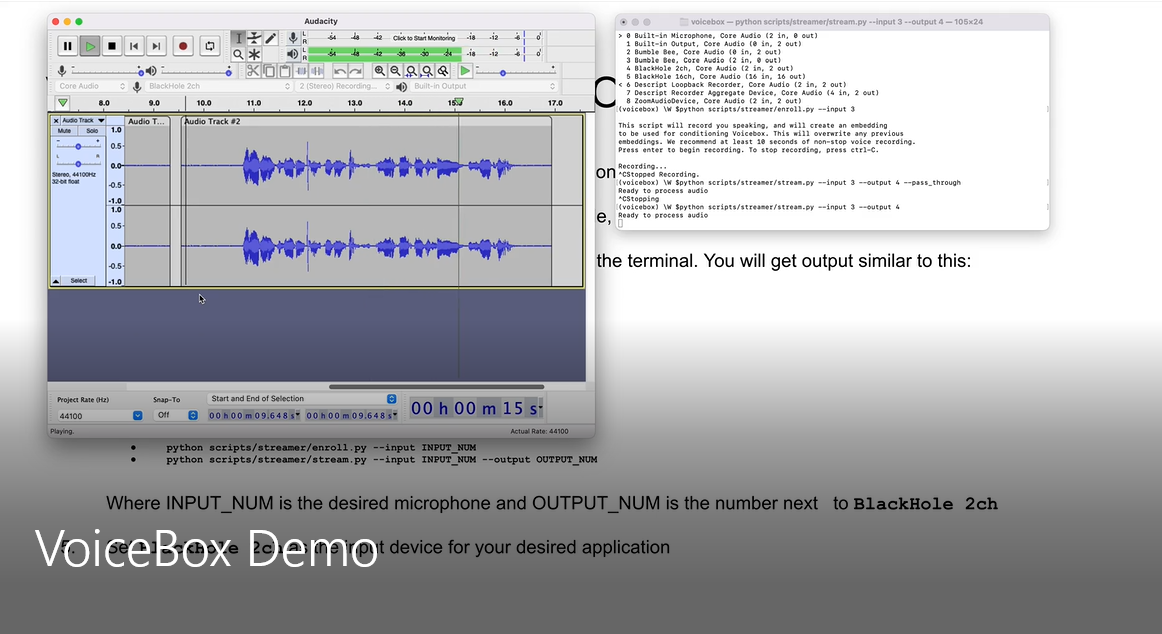

ADDED

|

Binary file (1.04 kB). View file

|

|

|

__pycache__/model.cpython-310.pyc

ADDED

|

Binary file (1.43 kB). View file

|

|

|

__pycache__/model.cpython-39.pyc

ADDED

|

Binary file (1.42 kB). View file

|

|

|

app.py

ADDED

|

@@ -0,0 +1,67 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import torch

|

| 2 |

+

import torchaudio

|

| 3 |

+

import voicebox.src.attacks.offline.perturbation.voicebox.voicebox as vb #To access VoiceBox class

|

| 4 |

+

#import voicebox.src.attacks.online.voicebox_streamer as streamer #To access VoiceBoxStreamer class

|

| 5 |

+

import numpy as np

|

| 6 |

+

from voicebox.src.constants import PPG_PRETRAINED_PATH

|

| 7 |

+

|

| 8 |

+

#Set voicebox default parameters

|

| 9 |

+

LOOKAHEAD = 5

|

| 10 |

+

voicebox_kwargs={'win_length': 256,

|

| 11 |

+

'ppg_encoder_hidden_size': 256,

|

| 12 |

+

'use_phoneme_encoder': True,

|

| 13 |

+

'use_pitch_encoder': True,

|

| 14 |

+

'use_loudness_encoder': True,

|

| 15 |

+

'spec_encoder_lookahead_frames': 0,

|

| 16 |

+

'spec_encoder_type': 'mel',

|

| 17 |

+

'spec_encoder_mlp_depth': 2,

|

| 18 |

+

'bottleneck_lookahead_frames': LOOKAHEAD,

|

| 19 |

+

'ppg_encoder_path': PPG_PRETRAINED_PATH,

|

| 20 |

+

'n_bands': 128,

|

| 21 |

+

'spec_encoder_hidden_size': 512,

|

| 22 |

+

'bottleneck_skip': True,

|

| 23 |

+

'bottleneck_hidden_size': 512,

|

| 24 |

+

'bottleneck_feedforward_size': 512,

|

| 25 |

+

'bottleneck_type': 'lstm',

|

| 26 |

+

'bottleneck_depth': 2,

|

| 27 |

+

'control_eps': 0.5,

|

| 28 |

+

'projection_norm': float('inf'),

|

| 29 |

+

'conditioning_dim': 512}

|

| 30 |

+

|

| 31 |

+

#Load pretrained model:

|

| 32 |

+

model = vb.VoiceBox(**voicebox_kwargs)

|

| 33 |

+

model.load_state_dict(torch.load('voicebox/pretrained/voicebox/voicebox_final.pt', map_location=torch.device('cpu')), strict=True)

|

| 34 |

+

model.eval()

|

| 35 |

+

|

| 36 |

+

#Define function to convert final audio format:

|

| 37 |

+

def float32_to_int16(waveform):

|

| 38 |

+

waveform = waveform / np.abs(waveform).max()

|

| 39 |

+

waveform = waveform * 32767

|

| 40 |

+

waveform = waveform.astype(np.int16)

|

| 41 |

+

waveform = waveform.ravel()

|

| 42 |

+

return waveform

|

| 43 |

+

|

| 44 |

+

#Define predict function:

|

| 45 |

+

def predict(inp):

|

| 46 |

+

#How to transform audio from string to tensor

|

| 47 |

+

waveform, sample_rate = torchaudio.load(inp)

|

| 48 |

+

|

| 49 |

+

#Run model without changing weights

|

| 50 |

+

with torch.no_grad():

|

| 51 |

+

waveform = model(waveform)

|

| 52 |

+

|

| 53 |

+

#Transform output audio into gradio-readable format

|

| 54 |

+

waveform = waveform.numpy()

|

| 55 |

+

waveform = float32_to_int16(waveform)

|

| 56 |

+

return sample_rate, waveform

|

| 57 |

+

|

| 58 |

+

#Set up gradio interface

|

| 59 |

+

import gradio as gr

|

| 60 |

+

|

| 61 |

+

interface = gr.Interface(

|

| 62 |

+

fn=predict,

|

| 63 |

+

inputs=gr.Audio(type="filepath"),

|

| 64 |

+

outputs=gr.Audio()

|

| 65 |

+

)

|

| 66 |

+

|

| 67 |

+

interface.launch()

|

example.wav

ADDED

|

Binary file (218 kB). View file

|

|

|

requirements.txt

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

torch==1.10.0

|

| 2 |

+

torchaudio==0.10.0

|

| 3 |

+

torchvision

|

| 4 |

+

torchcrepe

|

| 5 |

+

tensorboard

|

| 6 |

+

textgrid

|

| 7 |

+

Pillow

|

| 8 |

+

numpy

|

| 9 |

+

tqdm

|

| 10 |

+

jiwer

|

| 11 |

+

librosa

|

| 12 |

+

pandas

|

| 13 |

+

protobuf==3.20.0

|

| 14 |

+

git+https://github.com/ludlows/python-pesq#egg=pesq

|

| 15 |

+

psutil

|

| 16 |

+

pystoi

|

| 17 |

+

pytest

|

| 18 |

+

pyworld

|

| 19 |

+

pyyaml

|

| 20 |

+

matplotlib

|

| 21 |

+

seaborn

|

| 22 |

+

ipython

|

| 23 |

+

scipy

|

| 24 |

+

scikit-learn

|

| 25 |

+

ipywebrtc

|

| 26 |

+

argbind

|

| 27 |

+

sounddevice

|

| 28 |

+

keyboard

|

voicebox/.DS_Store

ADDED

|

Binary file (6.15 kB). View file

|

|

|

voicebox/LICENSE

ADDED

|

File without changes

|

voicebox/README.md

ADDED

|

@@ -0,0 +1,136 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

<h1 align="center">VoiceBlock</h1>

|

| 2 |

+

<h4 align="center"> Privacy through Real-Time Adversarial Attacks with Audio-to-Audio Models</h4>

|

| 3 |

+

<div align="center">

|

| 4 |

+

|

| 5 |

+

[](https://colab.research.google.com/github/???/???.ipynb)

|

| 6 |

+

[](https://master.d3hvhbnf7qxjtf.amplifyapp.com/)

|

| 7 |

+

[](/LICENSE)

|

| 8 |

+

|

| 9 |

+

</div>

|

| 10 |

+

<p align="center"><img src="./figures/vb_color_logo.png" width="200"/></p>

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

## Contents

|

| 14 |

+

|

| 15 |

+

* <a href="#install">Installation</a>

|

| 16 |

+

* <a href="#reproduce">Reproducing Results</a>

|

| 17 |

+

* <a href="#streamer">Streaming Implementation</a>

|

| 18 |

+

* <a href="#citation">Citation</a>

|

| 19 |

+

|

| 20 |

+

<h2 id="install">Installation</h2>

|

| 21 |

+

|

| 22 |

+

1. Clone the repository:

|

| 23 |

+

|

| 24 |

+

git clone https://github.com/voiceboxneurips/voicebox.git

|

| 25 |

+

|

| 26 |

+

2. We recommend working from a clean environment, e.g. using `conda`:

|

| 27 |

+

|

| 28 |

+

conda create --name voicebox python=3.9

|

| 29 |

+

source activate voicebox

|

| 30 |

+

|

| 31 |

+

3. Install dependencies:

|

| 32 |

+

|

| 33 |

+

cd voicebox

|

| 34 |

+

pip install -r requirements.txt

|

| 35 |

+

pip install -e .

|

| 36 |

+

|

| 37 |

+

4. Grant permissions:

|

| 38 |

+

|

| 39 |

+

chmod -R u+x scripts/

|

| 40 |

+

|

| 41 |

+

<h2 id="reproduce">Reproducing Results</h2>

|

| 42 |

+

|

| 43 |

+

To reproduce our results, first download the corresponding data. Note that to download the [VoxCeleb1 dataset](https://www.robots.ox.ac.uk/~vgg/data/voxceleb/vox1.html), you must register and obtain a username and password.

|

| 44 |

+

|

| 45 |

+

| Task | Dataset (Size) | Command |

|

| 46 |

+

|---|---|---|

|

| 47 |

+

| Objective evaluation | VoxCeleb1 (39G) | `python scripts/downloads/download_voxceleb.py --subset=1 --username=<VGG_USERNAME> --password=<VGG_PASSWORD>` |

|

| 48 |

+

| WER / supplemental evaluations | LibriSpeech `train-clean-360` (23G) | `./scripts/downloads/download_librispeech_eval.sh` |

|

| 49 |

+

| Train attacks | LibriSpeech `train-clean-100` (11G) | `./scripts/downloads/download_librispeech_train.sh` |

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

We provide scripts to reproduce our experiments and save results, including generated audio, to named and time-stamped subdirectories within `runs/`. To reproduce our objective evaluation experiments using pre-trained attacks, run:

|

| 53 |

+

|

| 54 |

+

```

|

| 55 |

+

python scripts/experiments/evaluate.py

|

| 56 |

+

```

|

| 57 |

+

|

| 58 |

+

To reproduce our training, run:

|

| 59 |

+

|

| 60 |

+

```

|

| 61 |

+

python scripts/experiments/train.py

|

| 62 |

+

```

|

| 63 |

+

|

| 64 |

+

<h2 id="streamer">Streaming Implementation</h2>

|

| 65 |

+

|

| 66 |

+

As a proof of concept, we provide a streaming implementation of VoiceBox capable of modifying user audio in real-time. Here, we provide installation instructions for MacOS and Ubuntu 20.04.

|

| 67 |

+

|

| 68 |

+

<h3 id="streamer-mac">MacOS</h3>

|

| 69 |

+

|

| 70 |

+

See video below:

|

| 71 |

+

|

| 72 |

+

<a href="https://youtu.be/LcNjO5E7F3E">

|

| 73 |

+

<p align="center"><img src="./figures/demo_thumbnail.png" width="500"/></p>

|

| 74 |

+

</a>

|

| 75 |

+

|

| 76 |

+

<h3 id="streamer-ubuntu">Ubuntu 20.04</h3>

|

| 77 |

+

|

| 78 |

+

|

| 79 |

+

1. Open a terminal and follow the [installation instructions](#install) above. Change directory to the root of this repository.

|

| 80 |

+

|

| 81 |

+

2. Run the following command:

|

| 82 |

+

|

| 83 |

+

pacmd load-module module-null-sink sink_name=voicebox sink_properties=device.description=voicebox

|

| 84 |

+

|

| 85 |

+

If you are using PipeWire instead of PulseAudio:

|

| 86 |

+

|

| 87 |

+

pactl load-module module-null-sink media.class=Audio/Sink sink_name=voicebox sink_properties=device.description=voicebox

|

| 88 |

+

|

| 89 |

+

PulseAudio is the default on Ubuntu. If you haven't changed your system defaults, you are probably using PulseAudio. This will add "voicebox" as an output device. Select it as the input to your chosen audio software.

|

| 90 |

+

|

| 91 |

+

3. Find which audio device to read and write from. In your conda environment, run:

|

| 92 |

+

|

| 93 |

+

python -m sounddevice

|

| 94 |

+

|

| 95 |

+

You will get output similar to this:

|

| 96 |

+

|

| 97 |

+

0 HDA Intel HDMI: 0 (hw:0,3), ALSA (0 in, 8 out)

|

| 98 |

+

1 HDA Intel HDMI: 1 (hw:0,7), ALSA (0 in, 8 out)

|

| 99 |

+

2 HDA Intel HDMI: 2 (hw:0,8), ALSA (0 in, 8 out)

|

| 100 |

+

3 HDA Intel HDMI: 3 (hw:0,9), ALSA (0 in, 8 out)

|

| 101 |

+

4 HDA Intel HDMI: 4 (hw:0,10), ALSA (0 in, 8 out)

|

| 102 |

+

5 hdmi, ALSA (0 in, 8 out)

|

| 103 |

+

6 jack, ALSA (2 in, 2 out)

|

| 104 |

+

7 pipewire, ALSA (64 in, 64 out)

|

| 105 |

+

8 pulse, ALSA (32 in, 32 out)

|

| 106 |

+

* 9 default, ALSA (32 in, 32 out)

|

| 107 |

+

|

| 108 |

+

In this example, we are going to route the audio through PipeWire (channel 7). This will be our INPUT_NUM and OUTPUT_NUM

|

| 109 |

+

|

| 110 |

+

4. First, we need to create a conditioning embedding. To do this, run the enrollment script and follow its on-screen instructions:

|

| 111 |

+

|

| 112 |

+

python scripts/streamer/enroll.py --input INPUT_NUM

|

| 113 |

+

|

| 114 |

+

5. We can now use the streamer. Run:

|

| 115 |

+

|

| 116 |

+

python scripts/stream.py --input INPUT_NUM --output OUTPUT_NUM

|

| 117 |

+

|

| 118 |

+

6. Once the streamer is running, open `pavucontrol`.

|

| 119 |

+

|

| 120 |

+

a. In `pavucontrol`, go to the "Playback" tab and find "ALSA pug-in [python3.9]: ALSA Playback on". Set the output to "voicebox".

|

| 121 |

+

|

| 122 |

+

b. Then, go to "Recording" and find "ALSA pug-in [python3.9]: ALSA Playback from", and set the input to your desired microphone device.

|

| 123 |

+

|

| 124 |

+

<h2 id="citation">Citation</h2>

|

| 125 |

+

|

| 126 |

+

If you use this your academic research, please cite the following:

|

| 127 |

+

|

| 128 |

+

```

|

| 129 |

+

@inproceedings{authors2022voicelock,

|

| 130 |

+

title={VoiceBlock: Privacy through Real-Time Adversarial Attacks with Audio-to-Audio Models},

|

| 131 |

+

author={Patrick O'Reilly, Andreas Bugler, Keshav Bhandari, Max Morrison, Bryan Pardo},

|

| 132 |

+

booktitle={Neural Information Processing Systems},

|

| 133 |

+

month={November},

|

| 134 |

+

year={2022}

|

| 135 |

+

}

|

| 136 |

+

```

|

voicebox/cache/.gitkeep

ADDED

|

File without changes

|

voicebox/data/.gitkeep

ADDED

|

File without changes

|

voicebox/figures/demo_thumbnail.png

ADDED

|

voicebox/figures/use_diagram_embeddings.png

ADDED

|

voicebox/figures/vb_color_logo.png

ADDED

|

voicebox/figures/voicebox_untargeted_conditioning_draft.png

ADDED

|

voicebox/pretrained/denoiser/demucs/dns_48.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:4cfd4151600ed611d4af05083f4633d4fc31b53761cff8a185293346df745988

|

| 3 |

+

size 75486933

|

voicebox/pretrained/phoneme/causal_ppg_128_hidden_128_hop.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:be4c7a60c9af77e50af86924df8b73eb0c861a46f461e3bfe825c523a0a1a969

|

| 3 |

+

size 1175695

|

voicebox/pretrained/phoneme/causal_ppg_256_hidden.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:4e8f20e4973a6b91002c97605f993cf6e16a24ca9d0d39e183438a8c16d85c87

|

| 3 |

+

size 4556495

|

voicebox/pretrained/phoneme/causal_ppg_256_hidden_256_hop.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:e0836df2f8465b53d4e0b5b14f1d1ef954b3570d6f95f1af22c3ac19b3e10099

|

| 3 |

+

size 4573903

|

voicebox/pretrained/phoneme/causal_ppg_256_hidden_512_hop.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a860d6f01058dc14b984845d27e681b5fe7c3bfffe41350e2e6e0f92e72778ad

|

| 3 |

+

size 4608719

|

voicebox/pretrained/phoneme/ppg_causal_small.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:4627bc2b63798df3391fe5c9ccbd72b929dc146b84f0fe61d1aa22848d107973

|

| 3 |

+

size 18002639

|

voicebox/pretrained/speaker/resemblyzer/resemblyzer.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:afb2230a894f5a8f91263ff0b4811bde1ea5981bedda45a579c225e5a602ada3

|

| 3 |

+

size 5697307

|

voicebox/pretrained/speaker/resnetse34v2/resnetse34v2.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:d96a4dad0118e9945bc7e676d8e5ff34d493ca2209fe188b3f982005132369bc

|

| 3 |

+

size 32311667

|

voicebox/pretrained/speaker/yvector/yvector.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f2b4228cc772e689f800f1f9dc91d4ef4ee289e7e62f2822805edfc5b7faf399

|

| 3 |

+

size 57703939

|

voicebox/pretrained/universal/universal_final.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:f435535934f6c8c24fda42c251e65f41627b0660d3420ba1c694e25a82be033e

|

| 3 |

+

size 128811

|

voicebox/pretrained/voicebox/voicebox_final.pt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:eb26234cc493182545dbfcc74501f6df7e90347ca3e2a94a7966978325a34ccd

|

| 3 |

+

size 30232012

|

voicebox/pretrained/voicebox/voicebox_final.yaml

ADDED

|

@@ -0,0 +1,20 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

win_length: 256

|

| 2 |

+

ppg_encoder_hidden_size: 256

|

| 3 |

+

use_phoneme_encoder: True

|

| 4 |

+

use_pitch_encoder: True

|

| 5 |

+

use_loudness_encoder: True

|

| 6 |

+

spec_encoder_lookahead_frames: 0

|

| 7 |

+

spec_encoder_type: 'mel'

|

| 8 |

+

spec_encoder_mlp_depth: 2

|

| 9 |

+

bottleneck_lookahead_frames: 5

|

| 10 |

+

ppg_encoder_path: 'pretrained/phoneme/causal_ppg_256_hidden.pt'

|

| 11 |

+

n_bands: 128

|

| 12 |

+

spec_encoder_hidden_size: 512

|

| 13 |

+

bottleneck_skip: True

|

| 14 |

+

bottleneck_hidden_size: 512

|

| 15 |

+

bottleneck_feedforward_size: 512

|

| 16 |

+

bottleneck_type: 'lstm'

|

| 17 |

+

bottleneck_depth: 2

|

| 18 |

+

control_eps: 0.5

|

| 19 |

+

projection_norm: 'inf'

|

| 20 |

+

conditioning_dim: 512

|

voicebox/requirements.txt

ADDED

|

@@ -0,0 +1,28 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

torch==1.10.0

|

| 2 |

+

torchaudio==0.10.0

|

| 3 |

+

torchvision

|

| 4 |

+

torchcrepe

|

| 5 |

+

tensorboard

|

| 6 |

+

textgrid

|

| 7 |

+

Pillow

|

| 8 |

+

numpy

|

| 9 |

+

tqdm

|

| 10 |

+

jiwer

|

| 11 |

+

librosa

|

| 12 |

+

pandas

|

| 13 |

+

protobuf==3.20.0

|

| 14 |

+

git+https://github.com/ludlows/python-pesq#egg=pesq

|

| 15 |

+

psutil

|

| 16 |

+

pystoi

|

| 17 |

+

pytest

|

| 18 |

+

pyworld

|

| 19 |

+

pyyaml

|

| 20 |

+

matplotlib

|

| 21 |

+

seaborn

|

| 22 |

+

ipython

|

| 23 |

+

scipy

|

| 24 |

+

scikit-learn

|

| 25 |

+

ipywebrtc

|

| 26 |

+

argbind

|

| 27 |

+

sounddevice

|

| 28 |

+

keyboard

|

voicebox/scripts/downloads/download_librispeech_eval.sh

ADDED

|

@@ -0,0 +1,25 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

set -e

|

| 4 |

+

|

| 5 |

+

DOWNLOADS_SCRIPTS_DIR=$(eval dirname "$(readlink -f "$0")")

|

| 6 |

+

SCRIPTS_DIR="$(dirname "$DOWNLOADS_SCRIPTS_DIR")"

|

| 7 |

+

PROJECT_DIR="$(dirname "$SCRIPTS_DIR")"

|

| 8 |

+

|

| 9 |

+

DATA_DIR="${PROJECT_DIR}/data/"

|

| 10 |

+

CACHE_DIR="${PROJECT_DIR}/cache/"

|

| 11 |

+

|

| 12 |

+

mkdir -p "${DATA_DIR}"

|

| 13 |

+

mkdir -p "${CACHE_DIR}"

|

| 14 |

+

|

| 15 |

+

# download train-clean-360 subset

|

| 16 |

+

echo "downloading LibriSpeech train-clean-360..."

|

| 17 |

+

wget http://www.openslr.org/resources/12/train-clean-360.tar.gz

|

| 18 |

+

|

| 19 |

+

# extract train-clean-360 subset

|

| 20 |

+

echo "extracting LibriSpeech train-clean-360..."

|

| 21 |

+

tar -xf train-clean-360.tar.gz \

|

| 22 |

+

-C "${DATA_DIR}"

|

| 23 |

+

|

| 24 |

+

# delete archive

|

| 25 |

+

rm -f "train-clean-360.tar.gz"

|

voicebox/scripts/downloads/download_librispeech_train.sh

ADDED

|

@@ -0,0 +1,54 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

set -e

|

| 4 |

+

|

| 5 |

+

DOWNLOADS_SCRIPTS_DIR=$(eval dirname "$(readlink -f "$0")")

|

| 6 |

+

SCRIPTS_DIR="$(dirname "$DOWNLOADS_SCRIPTS_DIR")"

|

| 7 |

+

PROJECT_DIR="$(dirname "$SCRIPTS_DIR")"

|

| 8 |

+

|

| 9 |

+

DATA_DIR="${PROJECT_DIR}/data/"

|

| 10 |

+

CACHE_DIR="${PROJECT_DIR}/cache/"

|

| 11 |

+

|

| 12 |

+

mkdir -p "${DATA_DIR}"

|

| 13 |

+

mkdir -p "${CACHE_DIR}"

|

| 14 |

+

|

| 15 |

+

# download test-clean subset

|

| 16 |

+

echo "downloading LibriSpeech test-clean..."

|

| 17 |

+

wget http://www.openslr.org/resources/12/test-clean.tar.gz

|

| 18 |

+

|

| 19 |

+

# extract test-clean subset

|

| 20 |

+

echo "extracting LibriSpeech test-clean..."

|

| 21 |

+

tar -xf test-clean.tar.gz \

|

| 22 |

+

-C "${DATA_DIR}"

|

| 23 |

+

|

| 24 |

+

# delete archive

|

| 25 |

+

rm -f "test-clean.tar.gz"

|

| 26 |

+

|

| 27 |

+

# download test-other subset

|

| 28 |

+

echo "downloading LibriSpeech test-other..."

|

| 29 |

+

wget http://www.openslr.org/resources/12/test-other.tar.gz

|

| 30 |

+

|

| 31 |

+

# extract test-other subset

|

| 32 |

+

echo "extracting LibriSpeech test-other..."

|

| 33 |

+

tar -xf test-other.tar.gz \

|

| 34 |

+

-C "${DATA_DIR}"

|

| 35 |

+

|

| 36 |

+

# delete archive

|

| 37 |

+

rm -f "test-other.tar.gz"

|

| 38 |

+

|

| 39 |

+

# download train-clean-100 subset

|

| 40 |

+

echo "downloading LibriSpeech train-clean-100..."

|

| 41 |

+

wget http://www.openslr.org/resources/12/train-clean-100.tar.gz

|

| 42 |

+

|

| 43 |

+

# extract train-clean-100 subset

|

| 44 |

+

echo "extracting LibriSpeech train-clean-100..."

|

| 45 |

+

tar -xf train-clean-100.tar.gz \

|

| 46 |

+

-C "${DATA_DIR}"

|

| 47 |

+

|

| 48 |

+

# delete archive

|

| 49 |

+

rm -f "train-clean-100.tar.gz"

|

| 50 |

+

|

| 51 |

+

# download LibriSpeech alignments dataset

|

| 52 |

+

wget -O alignments.zip https://zenodo.org/record/2619474/files/librispeech_alignments.zip?download=1

|

| 53 |

+

unzip -d "${DATA_DIR}/LibriSpeech/" alignments.zip

|

| 54 |

+

rm -f alignments.zip

|

voicebox/scripts/downloads/download_rir_noise.sh

ADDED

|

@@ -0,0 +1,73 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

#!/bin/bash

|

| 2 |

+

|

| 3 |

+

set -e

|

| 4 |

+

|

| 5 |

+

DOWNLOADS_SCRIPTS_DIR=$(eval dirname "$(readlink -f "$0")")

|

| 6 |

+

SCRIPTS_DIR="$(dirname "$DOWNLOADS_SCRIPTS_DIR")"

|

| 7 |

+

PROJECT_DIR="$(dirname "$SCRIPTS_DIR")"

|

| 8 |

+

|

| 9 |

+

DATA_DIR="${PROJECT_DIR}/data/"

|

| 10 |

+

CACHE_DIR="${PROJECT_DIR}/cache/"

|

| 11 |

+

|

| 12 |

+

REAL_RIR_DIR="${DATA_DIR}/rir/real/"

|

| 13 |

+

SYNTHETIC_RIR_DIR="${DATA_DIR}/rir/synthetic/"

|

| 14 |

+

ROOM_NOISE_DIR="${DATA_DIR}/noise/room/"

|

| 15 |

+

PS_NOISE_DIR="${DATA_DIR}/noise/pointsource/"

|

| 16 |

+

|

| 17 |

+

mkdir -p "${REAL_RIR_DIR}"

|

| 18 |

+

mkdir -p "${SYNTHETIC_RIR_DIR}"

|

| 19 |

+

mkdir -p "${ROOM_NOISE_DIR}"

|

| 20 |

+

mkdir -p "${PS_NOISE_DIR}"

|

| 21 |

+

|

| 22 |

+

# download RIR/noise composite dataset

|

| 23 |

+

echo "downloading RIR/noise dataset..."

|

| 24 |

+

wget -O "${DATA_DIR}/rirs_noises.zip" https://www.openslr.org/resources/28/rirs_noises.zip

|

| 25 |

+

|

| 26 |

+

# extract RIR/noise composite dataset

|

| 27 |

+

echo "unzipping RIR/noise dataset..."

|

| 28 |

+

unzip "${DATA_DIR}/rirs_noises.zip" -d "${DATA_DIR}/"

|

| 29 |

+

|

| 30 |

+

# delete archive

|

| 31 |

+

rm -f "${DATA_DIR}/rirs_noises.zip"

|

| 32 |

+

|

| 33 |

+

# organize pointsource noise data

|

| 34 |

+

echo "extracting point-source noise data"

|

| 35 |

+

cp -a "${DATA_DIR}/RIRS_NOISES/pointsource_noises"/. "${PS_NOISE_DIR}"

|

| 36 |

+

|

| 37 |

+

# organize room noise data

|

| 38 |

+

echo "extracting room noise data"

|

| 39 |

+

room_noises=($(find "${DATA_DIR}/RIRS_NOISES/real_rirs_isotropic_noises/" -maxdepth 1 -name '*noise*' -type f))

|

| 40 |

+

cp -- "${room_noises[@]}" "${ROOM_NOISE_DIR}"

|

| 41 |

+

|

| 42 |

+

# organize real RIR data

|

| 43 |

+

echo "extracting recorded RIR data"

|

| 44 |

+

rirs=($(find "${DATA_DIR}/RIRS_NOISES/real_rirs_isotropic_noises/" ! -name '*noise*' ))

|

| 45 |

+

cp -- "${rirs[@]}" "${REAL_RIR_DIR}"

|

| 46 |

+

|

| 47 |

+

# organize synthetic RIR data

|

| 48 |

+

echo "extracting synthetic RIR data"

|

| 49 |

+

cp -a "${DATA_DIR}/RIRS_NOISES/simulated_rirs"/. "${SYNTHETIC_RIR_DIR}"

|

| 50 |

+

|

| 51 |

+

# delete redundant data

|

| 52 |

+

rm -rf "${DATA_DIR}/RIRS_NOISES/"

|

| 53 |

+

|

| 54 |

+

# separate near-field and far-field RIRs

|

| 55 |

+

NEARFIELD_RIR_DIR="${REAL_RIR_DIR}/nearfield/"

|

| 56 |

+

FARFIELD_RIR_DIR="${REAL_RIR_DIR}/farfield/"

|

| 57 |

+

|

| 58 |

+

mkdir -p "${NEARFIELD_RIR_DIR}"

|

| 59 |

+

mkdir -p "${FARFIELD_RIR_DIR}"

|

| 60 |

+

|

| 61 |

+

# read list of far-field RIRs

|

| 62 |

+

readarray -t FF_RIR_LIST < "${DOWNLOADS_SCRIPTS_DIR}/ff_rir.txt"

|

| 63 |

+

|

| 64 |

+

# move far-field RIRs

|

| 65 |

+

for name in "${FF_RIR_LIST[@]}"; do

|

| 66 |

+

mv "$name" "${FARFIELD_RIR_DIR}/$(basename "$name")"

|

| 67 |

+

done

|

| 68 |

+

|

| 69 |

+

# move remaining near-field RIRs

|

| 70 |

+

for name in "${REAL_RIR_DIR}"/*.wav; do

|

| 71 |

+

mv "$name" "${NEARFIELD_RIR_DIR}/$(basename "$name")"

|

| 72 |

+

done

|

| 73 |

+

|

voicebox/scripts/downloads/download_voxceleb.py

ADDED

|

@@ -0,0 +1,189 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import argparse

|

| 2 |

+

from pathlib import Path

|

| 3 |

+

import subprocess

|

| 4 |

+

import hashlib

|

| 5 |

+

import tarfile

|

| 6 |

+

from zipfile import ZipFile

|

| 7 |

+

|

| 8 |

+

from src.constants import VOXCELEB1_DATA_DIR, VOXCELEB2_DATA_DIR

|

| 9 |

+

from src.utils import ensure_dir

|

| 10 |

+

|

| 11 |

+

################################################################################

|

| 12 |

+

# Download VoxCeleb1 dataset using valid credentials

|

| 13 |

+

################################################################################

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

def parse_args():

|

| 17 |

+

|

| 18 |

+

"""Parse command-line arguments"""

|

| 19 |

+

parser = argparse.ArgumentParser(add_help=False)

|

| 20 |

+

|

| 21 |

+

parser.add_argument(

|

| 22 |

+

'--subset',

|

| 23 |

+

type=int,

|

| 24 |

+

default=1,

|

| 25 |

+

help='Specify which VoxCeleb subset to download: 1 or 2'

|

| 26 |

+

)

|

| 27 |

+

|

| 28 |

+

parser.add_argument(

|

| 29 |

+

'--username',

|

| 30 |

+

type=str,

|

| 31 |

+

default=None,

|

| 32 |

+

help='User name provided by VGG to access VoxCeleb dataset'

|

| 33 |

+

)

|

| 34 |

+

|

| 35 |

+

parser.add_argument(

|

| 36 |

+

'--password',

|

| 37 |

+

type=str,

|

| 38 |

+

default=None,

|

| 39 |

+

help='Password provided by VGG to access VoxCeleb dataset'

|

| 40 |

+

)

|

| 41 |

+

|

| 42 |

+

return parser.parse_args()

|

| 43 |

+

|

| 44 |

+

|

| 45 |

+

def md5(f: str):

|

| 46 |

+

"""

|

| 47 |

+

Return MD5 checksum for file. Code adapted from voxceleb_trainer repository:

|

| 48 |

+

https://github.com/clovaai/voxceleb_trainer/blob/master/dataprep.py

|

| 49 |

+

"""

|

| 50 |

+

|

| 51 |

+

hash_md5 = hashlib.md5()

|

| 52 |

+

with open(f, "rb") as f:

|

| 53 |

+

for chunk in iter(lambda: f.read(4096), b""):

|

| 54 |

+

hash_md5.update(chunk)

|

| 55 |

+

return hash_md5.hexdigest()

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

def download(username: str,

|

| 59 |

+

password: str,

|

| 60 |

+

save_path: str,

|

| 61 |

+

lines: list):

|

| 62 |

+

"""

|

| 63 |

+

Given a list of dataset shards formatted as <URL, MD5>, download

|

| 64 |

+

each using `wget` and verify checksums. Code adapted from voxceleb_trainer

|

| 65 |

+

repository:

|

| 66 |

+

https://github.com/clovaai/voxceleb_trainer/blob/master/dataprep.py

|

| 67 |

+

"""

|

| 68 |

+

|

| 69 |

+

for line in lines:

|

| 70 |

+

url = line.split()[0]

|

| 71 |

+

md5gt = line.split()[1]

|

| 72 |

+

outfile = url.split('/')[-1]

|

| 73 |

+

|

| 74 |

+

# download files

|

| 75 |

+

out = subprocess.call(

|

| 76 |

+

f'wget {url} --user {username} --password {password} -O {save_path}'

|

| 77 |

+

f'/{outfile}', shell=True)

|

| 78 |

+

if out != 0:

|

| 79 |

+

raise ValueError(f'Download failed for {url}')

|

| 80 |

+

|

| 81 |

+

# verify checksum

|

| 82 |

+

md5ck = md5(f'{save_path}/{outfile}')

|

| 83 |

+

if md5ck == md5gt:

|

| 84 |

+

print(f'Checksum successful for {outfile}')

|

| 85 |

+

else:

|

| 86 |

+

raise Warning(f'Checksum failed for {outfile}')

|

| 87 |

+

|

| 88 |

+

|

| 89 |

+

def concatenate(save_path: str, lines: list):

|

| 90 |

+

"""

|

| 91 |

+

Given a specification in the format <FMT, FILENAME, MD5>, concatenate all

|

| 92 |

+

downloaded data shards matching FMT into the file FILENAME and verify

|

| 93 |

+

checksums. Code adapted from voxceleb_trainer repository:

|

| 94 |

+

https://github.com/clovaai/voxceleb_trainer/blob/master/dataprep.py

|

| 95 |

+

"""

|

| 96 |

+

|

| 97 |

+

for line in lines:

|

| 98 |

+

infile = line.split()[0]

|

| 99 |

+

outfile = line.split()[1]

|

| 100 |

+

md5gt = line.split()[2]

|

| 101 |

+

|

| 102 |

+

# concatenate shards

|

| 103 |

+

out = subprocess.call(

|

| 104 |

+

f'cat {save_path}/{infile} > {save_path}/{outfile}', shell=True)

|

| 105 |

+

|

| 106 |

+

# verify checksum

|

| 107 |

+

md5ck = md5(f'{save_path}/{outfile}')

|

| 108 |

+

if md5ck == md5gt:

|

| 109 |

+

print(f'Checksum successful for {outfile}')

|

| 110 |

+

else:

|

| 111 |

+

raise Warning(f'Checksum failed for {outfile}')

|

| 112 |

+

|

| 113 |

+

# delete shards

|

| 114 |

+

out = subprocess.call(

|

| 115 |

+

f'rm {save_path}/{infile}', shell=True)

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

def full_extract(save_path: str, f: str):

|

| 119 |

+

"""

|

| 120 |

+

Extract contents of compressed archive to data directory

|

| 121 |

+

"""

|

| 122 |

+

|

| 123 |

+

save_path = str(save_path)

|

| 124 |

+

f = str(f)

|

| 125 |

+

|

| 126 |

+

print(f'Extracting {f}')

|

| 127 |

+

|

| 128 |

+

if f.endswith(".tar.gz"):

|

| 129 |

+

with tarfile.open(f, "r:gz") as tar:

|

| 130 |

+

tar.extractall(save_path)

|

| 131 |

+

|

| 132 |

+

elif f.endswith(".zip"):

|

| 133 |

+

with ZipFile(f, 'r') as zf:

|

| 134 |

+

zf.extractall(save_path)

|

| 135 |

+

|

| 136 |

+

|

| 137 |

+

def main():

|

| 138 |

+

|

| 139 |

+

args = parse_args()

|

| 140 |

+

|

| 141 |

+

# prepare to load dataset file paths

|

| 142 |

+

downloads_dir = Path(__file__).parent

|

| 143 |

+

|

| 144 |

+

if args.subset == 1:

|

| 145 |

+

data_dir = VOXCELEB1_DATA_DIR

|

| 146 |

+

elif args.subset == 2:

|

| 147 |

+

data_dir = VOXCELEB2_DATA_DIR

|

| 148 |

+

else:

|

| 149 |

+

raise ValueError(f'Invalid VoxCeleb subset {args.subset}')

|

| 150 |

+

|

| 151 |

+

ensure_dir(data_dir)

|

| 152 |

+

|

| 153 |

+

# load dataset file paths

|

| 154 |

+

with open(downloads_dir / f'voxceleb{args.subset}_file_parts.txt', 'r') as f:

|

| 155 |

+

file_parts_list = f.readlines()

|

| 156 |

+

|

| 157 |

+

# load output file paths

|

| 158 |

+

with open(downloads_dir / f'voxceleb{args.subset}_files.txt', 'r') as f:

|

| 159 |

+

files_list = f.readlines()

|

| 160 |

+

|

| 161 |

+

# download subset

|

| 162 |

+

download(

|

| 163 |

+

username=args.username,

|

| 164 |

+

password=args.password,

|

| 165 |

+

save_path=data_dir,

|

| 166 |

+

lines=file_parts_list

|

| 167 |

+

)

|

| 168 |

+

|

| 169 |

+

# merge shards

|

| 170 |

+

concatenate(save_path=data_dir, lines=files_list)

|

| 171 |

+

|

| 172 |

+

# account for test data

|

| 173 |

+

archives = [file.split()[1] for file in files_list]

|

| 174 |

+

test = f"vox{args.subset}_test_{'wav' if args.subset == 1 else 'aac'}.zip"

|

| 175 |

+

archives.append(test)

|

| 176 |

+

|

| 177 |

+

# extract all compressed data

|

| 178 |

+

for file in archives:

|

| 179 |

+

full_extract(data_dir, data_dir / file)

|

| 180 |

+

|

| 181 |

+

# organize extracted data

|

| 182 |

+

out = subprocess.call(f'mv {data_dir}/dev/aac/* {data_dir}/aac/ && rm -r '

|

| 183 |

+

f'{data_dir}/dev', shell=True)

|

| 184 |

+

out = subprocess.call(f'mv -v {data_dir}/{"wav" if args.subset == 1 else "aac"}/*'

|

| 185 |

+

f' {data_dir}/voxceleb{args.subset}', shell=True)

|

| 186 |

+

|

| 187 |

+

|

| 188 |

+

if __name__ == "__main__":

|

| 189 |

+

main()

|

voicebox/scripts/downloads/ff_rir.txt

ADDED

|

@@ -0,0 +1,132 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

data/rir/real/air_type1_air_binaural_lecture_0_1.wav

|

| 2 |

+

data/rir/real/RWCP_type3_rir_cirline_ofc_imp_rev.wav

|

| 3 |

+

data/rir/real/RWCP_type1_rir_cirline_jr2_imp110.wav

|

| 4 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_4_90_3.wav

|

| 5 |

+

data/rir/real/RVB2014_type1_rir_largeroom2_far_anglb.wav

|

| 6 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_60.wav

|

| 7 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_3_90_3.wav

|

| 8 |

+

data/rir/real/air_type1_air_binaural_lecture_0_5.wav

|

| 9 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_30.wav

|

| 10 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_30.wav

|

| 11 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_15.wav

|

| 12 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_165.wav

|

| 13 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_75.wav

|

| 14 |

+

data/rir/real/air_type1_air_binaural_lecture_0_3.wav

|

| 15 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_0.wav

|

| 16 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_0.wav

|

| 17 |

+

data/rir/real/RWCP_type2_rir_cirline_jr1_imp110.wav

|

| 18 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_5_90_3.wav

|

| 19 |

+

data/rir/real/RVB2014_type1_rir_largeroom1_far_anglb.wav

|

| 20 |

+

data/rir/real/air_type1_air_binaural_lecture_1_1.wav

|

| 21 |

+

data/rir/real/RVB2014_type1_rir_largeroom1_far_angla.wav

|

| 22 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_7_90_3.wav

|

| 23 |

+

data/rir/real/RWCP_type2_rir_cirline_ofc_imp070.wav

|

| 24 |

+

data/rir/real/RWCP_type1_rir_cirline_jr1_imp070.wav

|

| 25 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_150.wav

|

| 26 |

+

data/rir/real/air_type1_air_binaural_lecture_1_5.wav

|

| 27 |

+

data/rir/real/RWCP_type1_rir_cirline_jr1_imp100.wav

|

| 28 |

+

data/rir/real/RWCP_type1_rir_cirline_jr2_imp100.wav

|

| 29 |

+

data/rir/real/RWCP_type1_rir_cirline_e2b_imp130.wav

|

| 30 |

+

data/rir/real/air_type1_air_phone_corridor_hfrp.wav

|

| 31 |

+

data/rir/real/RWCP_type1_rir_cirline_jr1_imp130.wav

|

| 32 |

+

data/rir/real/RVB2014_type1_rir_largeroom1_near_angla.wav

|

| 33 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_75.wav

|

| 34 |

+

data/rir/real/RWCP_type1_rir_cirline_e2b_imp150.wav

|

| 35 |

+

data/rir/real/air_type1_air_phone_lecture_hhp.wav

|

| 36 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_105.wav

|

| 37 |

+

data/rir/real/air_type1_air_phone_stairway_hfrp.wav

|

| 38 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_105.wav

|

| 39 |

+

data/rir/real/RWCP_type2_rir_cirline_jr1_imp090.wav

|

| 40 |

+

data/rir/real/RWCP_type1_rir_cirline_e2b_imp050.wav

|

| 41 |

+

data/rir/real/air_type1_air_phone_stairway2_hfrp.wav

|

| 42 |

+

data/rir/real/air_type1_air_phone_stairway2_hhp.wav

|

| 43 |

+

data/rir/real/RWCP_type1_rir_cirline_jr2_imp060.wav

|

| 44 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_90.wav

|

| 45 |

+

data/rir/real/RWCP_type2_rir_cirline_jr1_imp130.wav

|

| 46 |

+

data/rir/real/RWCP_type1_rir_cirline_e2b_imp030.wav

|

| 47 |

+

data/rir/real/RVB2014_type1_rir_largeroom2_near_angla.wav

|

| 48 |

+

data/rir/real/air_type1_air_binaural_lecture_0_6.wav

|

| 49 |

+

data/rir/real/RWCP_type1_rir_cirline_e2b_imp070.wav

|

| 50 |

+

data/rir/real/air_type1_air_phone_stairway1_hhp.wav

|

| 51 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_45.wav

|

| 52 |

+

data/rir/real/RWCP_type1_rir_cirline_ofc_imp090.wav

|

| 53 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_135.wav

|

| 54 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_180.wav

|

| 55 |

+

data/rir/real/RWCP_type1_rir_cirline_ofc_imp100.wav

|

| 56 |

+

data/rir/real/RWCP_type1_rir_cirline_ofc_imp080.wav

|

| 57 |

+

data/rir/real/RWCP_type2_rir_cirline_ofc_imp090.wav

|

| 58 |

+

data/rir/real/RWCP_type1_rir_cirline_jr2_imp080.wav

|

| 59 |

+

data/rir/real/air_type1_air_binaural_lecture_1_2.wav

|

| 60 |

+

data/rir/real/RWCP_type1_rir_cirline_ofc_imp070.wav

|

| 61 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_150.wav

|

| 62 |

+

data/rir/real/air_type1_air_binaural_lecture_1_4.wav

|

| 63 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_3_0_3.wav

|

| 64 |

+

data/rir/real/RVB2014_type1_rir_largeroom1_near_anglb.wav

|

| 65 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_15.wav

|

| 66 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_120.wav

|

| 67 |

+

data/rir/real/RWCP_type1_rir_cirline_ofc_imp050.wav

|

| 68 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_1_90_3.wav

|

| 69 |

+

data/rir/real/air_type1_air_phone_stairway_hhp.wav

|

| 70 |

+

data/rir/real/RWCP_type1_rir_cirline_jr2_imp120.wav

|

| 71 |

+

data/rir/real/RWCP_type2_rir_cirline_e2b_imp110.wav

|

| 72 |

+

data/rir/real/RWCP_type1_rir_cirline_e2b_imp010.wav

|

| 73 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_15.wav

|

| 74 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_135.wav

|

| 75 |

+

data/rir/real/air_type1_air_phone_bt_stairway_hhp.wav

|

| 76 |

+

data/rir/real/RWCP_type2_rir_cirline_e2b_imp070.wav

|

| 77 |

+

data/rir/real/RWCP_type1_rir_cirline_ofc_imp120.wav

|

| 78 |

+

data/rir/real/RWCP_type1_rir_cirline_ofc_imp110.wav

|

| 79 |

+

data/rir/real/air_type1_air_binaural_lecture_0_4.wav

|

| 80 |

+

data/rir/real/RWCP_type2_rir_cirline_ofc_imp050.wav

|

| 81 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_90.wav

|

| 82 |

+

data/rir/real/RWCP_type1_rir_cirline_jr2_imp090.wav

|

| 83 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_0.wav

|

| 84 |

+

data/rir/real/air_type1_air_phone_stairway1_hfrp.wav

|

| 85 |

+

data/rir/real/air_type1_air_binaural_lecture_1_3.wav

|

| 86 |

+

data/rir/real/RWCP_type1_rir_cirline_jr1_imp050.wav

|

| 87 |

+

data/rir/real/RWCP_type1_rir_cirline_jr1_imp080.wav

|

| 88 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_165.wav

|

| 89 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_45.wav

|

| 90 |

+

data/rir/real/air_type1_air_phone_bt_corridor_hhp.wav

|

| 91 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_2_90_3.wav

|

| 92 |

+

data/rir/real/RWCP_type2_rir_cirline_ofc_imp110.wav

|

| 93 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_120.wav

|

| 94 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_3_180_3.wav

|

| 95 |

+

data/rir/real/RWCP_type1_rir_cirline_e2b_imp110.wav

|

| 96 |

+

data/rir/real/RWCP_type1_rir_cirline_jr1_imp060.wav

|

| 97 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_45.wav

|

| 98 |

+

data/rir/real/RVB2014_type1_rir_largeroom2_far_angla.wav

|

| 99 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_60.wav

|

| 100 |

+

data/rir/real/RWCP_type2_rir_cirline_jr1_imp070.wav

|

| 101 |

+

data/rir/real/RWCP_type1_rir_cirline_ofc_imp130.wav

|

| 102 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_3_135_3.wav

|

| 103 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_75.wav

|

| 104 |

+

data/rir/real/air_type1_air_binaural_stairway_1_1_180.wav

|

| 105 |

+

data/rir/real/RWCP_type1_rir_cirline_jr1_imp120.wav

|

| 106 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_60.wav

|

| 107 |

+

data/rir/real/air_type1_air_binaural_stairway_1_2_105.wav

|

| 108 |

+

data/rir/real/air_type1_air_binaural_stairway_1_3_135.wav

|

| 109 |

+

data/rir/real/air_type1_air_binaural_aula_carolina_1_3_45_3.wav

|

| 110 |

+

data/rir/real/air_type1_air_binaural_lecture_1_6.wav

|

| 111 |

+

data/rir/real/RWCP_type2_rir_cirline_e2b_imp090.wav

|