Runtime error

Upload 37 files

- .gitattributes +5 -0

- ComplutenseTFGBanner.png +0 -0

- configVars.py +3 -0

- data.py +45 -0

- datasets/pred.csv +2 -0

- datasets/pred.hdf5 +0 -0

- datasets/pred.npy +3 -0

- ecg.png +0 -0

- error.jpg +0 -0

- interface.py +20 -0

- libs.py +31 -0

- models/CPSC-2018/best.ptl +3 -0

- models/CPSC-2018/bestInt.ptl +3 -0

- models/Chapman/best.ptl +3 -0

- models/antonior92/model.hdf5 +3 -0

- models/antonior92/model_6.hdf5 +3 -0

- nets/__pycache__/backbones.cpython-311.pyc +0 -0

- nets/__pycache__/backbones.cpython-37.pyc +0 -0

- nets/__pycache__/bblocks.cpython-311.pyc +0 -0

- nets/__pycache__/bblocks.cpython-37.pyc +0 -0

- nets/__pycache__/layers.cpython-311.pyc +0 -0

- nets/__pycache__/layers.cpython-37.pyc +0 -0

- nets/__pycache__/modules.cpython-311.pyc +0 -0

- nets/__pycache__/modules.cpython-37.pyc +0 -0

- nets/__pycache__/nets.cpython-311.pyc +0 -0

- nets/__pycache__/nets.cpython-37.pyc +0 -0

- nets/backbones.py +57 -0

- nets/bblocks.py +55 -0

- nets/layers.py +29 -0

- nets/modules.py +33 -0

- nets/nets.py +73 -0

- predicts.py +118 -0

- thresholds/CPSC-2018/optimal_thresholds_best.csv +10 -0

- thresholds/antonior92/optimal_thresholds_best.csv +7 -0

- tools/__pycache__/tools.cpython-311.pyc +0 -0

- tools/__pycache__/tools.cpython-37.pyc +0 -0

- tools/tools.py +124 -0

.gitattributes

CHANGED

```diff
@@ -32,3 +32,8 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text
 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+models/antonior92/model_6.hdf5 filter=lfs diff=lfs merge=lfs -text
+models/antonior92/model.hdf5 filter=lfs diff=lfs merge=lfs -text
+models/Chapman/best.ptl filter=lfs diff=lfs merge=lfs -text
+models/CPSC-2018/best.ptl filter=lfs diff=lfs merge=lfs -text
+models/CPSC-2018/bestInt.ptl filter=lfs diff=lfs merge=lfs -text
```

ComplutenseTFGBanner.png

ADDED

configVars.py

ADDED

@@ -0,0 +1,3 @@

```python
pathCasos = "./datasets/"
pathModel = "./models/"
pathThresholds = './thresholds/'
```

data.py

ADDED

@@ -0,0 +1,45 @@

```python
import os, sys
from libs import *

class ECGDataset(torch.utils.data.Dataset):
    def __init__(self,
        df_path, data_path,
        config,
        augment = False,
    ):
        self.df_path, self.data_path, = df_path, data_path,
        self.df = pandas.read_csv(self.df_path)

        self.config = config
        self.augment = augment

    def __len__(self,
    ):
        return len(self.df)

    def __getitem__(self,
        index,
    ):
        row = self.df.iloc[index]

        # save np.load
        np_load_old = np.load

        # modify the default parameters of np.load
        np.load = lambda *a,**k: np_load_old(*a, allow_pickle=True, **k)

        # call load_data with allow_pickle implicitly set to true
        ecg = np.load("{}/{}.npy".format(self.data_path, row["id"]))[self.config["ecg_leads"], :]

        # restore np.load for future normal usage
        np.load = np_load_old

        ecg = pad_sequences(ecg, self.config["ecg_length"], "float64",
            "post", "post",
        )
        if self.augment:
            ecg = self.drop_lead(ecg)
        ecg = torch.tensor(ecg).float()

        return ecg
```
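`ECGDataset.__getitem__` temporarily reassigns the global `np.load` so that pickled arrays can be read. It then calls Keras `pad_sequences` with `"post"` padding and `"post"` truncation, which brings every selected lead to `config["ecg_length"]` samples. The sketch below reproduces those two steps using NumPy only. It assumes the `.npy` file holds a plain `(n_leads, n_samples)` array, as written by `prepare_data`. `load_leads` and `fix_length_post` are hypothetical names. Passing `allow_pickle=True` directly to `np.load` has the same effect and leaves module state untouched.

```python
from typing import Sequence

import numpy as np


def fix_length_post(ecg: np.ndarray, length: int) -> np.ndarray:
    """Truncate or zero-pad every lead (row) at its end to exactly `length` samples.

    Intended to mirror pad_sequences(..., padding="post", truncating="post") with value 0.0.
    """
    n_leads, n_samples = ecg.shape
    out = np.zeros((n_leads, length), dtype=np.float64)
    keep = min(n_samples, length)
    out[:, :keep] = ecg[:, :keep]  # keep the first samples; the tail is dropped or zero-filled
    return out


def load_leads(path: str, lead_indices: Sequence[int], length: int) -> np.ndarray:
    """Load an array saved with np.save, select the given leads, and fix its length."""
    # allow_pickle is passed per call instead of monkeypatching np.load globally
    ecg = np.load(path, allow_pickle=True)[list(lead_indices), :]
    return fix_length_post(ecg, length)
```

For example, `load_leads("./datasets/pred.npy", [0, 1, 6], 5000)` would return the three leads that the CPSC-2018 and Chapman configurations in `predicts.py` select.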

datasets/pred.csv

ADDED

@@ -0,0 +1,2 @@

```csv
id
pred
```

datasets/pred.hdf5

ADDED

Binary file (399 kB).

datasets/pred.npy

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:f4ab872234216a14c7a700dfe7c871f9429eeb43a08eba464770d4dc9ce5cf2f
size 508928
```

ecg.png

ADDED

error.jpg

ADDED

interface.py

ADDED

@@ -0,0 +1,20 @@

```python
from libs import *
from predicts import procesar_archivo
import gradio as gr

with gr.Blocks() as interface:
    gr.Image(value='./ComplutenseTFGBanner.png',show_label=False)
    with gr.Column():
        format = gr.inputs.Dropdown(["XMLsierra","CSV"],default="XMLsierra",label= "Formato del archivo")
        with gr.Row():
            number = gr.inputs.Slider(label="Valor",default=200,minimum=1,maximum=999)
            unit = gr.inputs.Dropdown(["V","miliV","microV","nanoV"], label="Unidad",default="miliV")
        with gr.Column():
            frec = gr.inputs.Number(label= "Frecuencia (Hz)",default=500)
            file = gr.inputs.File(label="Selecciona un archivo.")
    button = gr.Button(value='Analizar')
    out = gr.DataFrame(label="Diagnostico automático.",type="pandas",headers = ['Red','Predicción'])
    img = gr.outputs.Image(label="Imagen",type='filepath')
    button.click(fn=procesar_archivo,inputs=[format,number,unit,frec,file] ,outputs=[out,img])

interface.launch(share = True)
```

libs.py

ADDED

@@ -0,0 +1,31 @@

```python
import os, sys
import warnings; warnings.filterwarnings("ignore")


import pandas, numpy as np
import pandas as pd
import gradio as gr
#import argparse
#import random
#import neurokit2 as nk
import torch
import torch.nn as nn, torch.optim as optim
import torch.nn.functional as F
import torch.nn.utils.prune as prune
#import captum.attr as attr
#import matplotlib.pyplot as pyplot
#from sklearn.metrics import f1_score
from tensorflow.keras.models import load_model
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.preprocessing.sequence import pad_sequences
import h5py
import scipy.signal as sgn
from sierraecg import read_file
import ecg_plot


#!pip install pandas
#!pip install torch
#!pip install gradio
#!pip install tensorflow
#!pip install sierraecg
```

models/CPSC-2018/best.ptl

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:41ce4ff6c2b17ec41b03f22eb8c303b14f965b0db2462cba57abf5de5cedca1a
size 21740080
```

models/CPSC-2018/bestInt.ptl

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:41ce4ff6c2b17ec41b03f22eb8c303b14f965b0db2462cba57abf5de5cedca1a
size 21740080
```

models/Chapman/best.ptl

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:0b5b27cf250cad4b1c872722ffdb75896b6efbb0c22f57abd6283d8a33bb8172
size 21729840
```

models/antonior92/model.hdf5

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:93232c0dcecf2ac62cfa17758b86beb6bbf1fff36a4fb228f383b03af181a661
size 25826560
```

models/antonior92/model_6.hdf5

ADDED

@@ -0,0 +1,3 @@

```text
version https://git-lfs.github.com/spec/v1
oid sha256:93232c0dcecf2ac62cfa17758b86beb6bbf1fff36a4fb228f383b03af181a661
size 25826560
```
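Each model file above is committed as a Git LFS pointer. A pointer is a short text file of `key value` lines (`version`, `oid`, `size`), and the weights themselves are stored separately. Comparing pointers shows that `models/CPSC-2018/bestInt.ptl` and `models/CPSC-2018/best.ptl` share the same `oid` and `size`, and so do `model.hdf5` and `model_6.hdf5`. Each pair therefore refers to the same stored content. The sketch below is a minimal parser for that comparison. `parse_lfs_pointer` and `same_object` are hypothetical helpers, not part of Git LFS.

```python
from typing import Dict


def parse_lfs_pointer(text: str) -> Dict[str, str]:
    """Parse a Git LFS pointer file into a {key: value} dict.

    Each non-empty line is "<key> <value>"; the value is everything after the first space.
    """
    fields: Dict[str, str] = {}
    for line in text.strip().splitlines():
        key, _, value = line.partition(" ")
        fields[key] = value
    return fields


def same_object(pointer_a: str, pointer_b: str) -> bool:
    """True when two pointers reference the same stored object (same oid and size)."""
    a, b = parse_lfs_pointer(pointer_a), parse_lfs_pointer(pointer_b)
    return a["oid"] == b["oid"] and a["size"] == b["size"]
```

In this commit, `same_object` would be true for the pointer texts of `best.ptl` and `bestInt.ptl`, and false for `best.ptl` and `models/Chapman/best.ptl`.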

nets/__pycache__/backbones.cpython-311.pyc

ADDED

Binary file (3.09 kB).

nets/__pycache__/backbones.cpython-37.pyc

ADDED

Binary file (1.36 kB).

nets/__pycache__/bblocks.cpython-311.pyc

ADDED

Binary file (2.71 kB).

nets/__pycache__/bblocks.cpython-37.pyc

ADDED

Binary file (1.27 kB).

nets/__pycache__/layers.cpython-311.pyc

ADDED

Binary file (1.5 kB).

nets/__pycache__/layers.cpython-37.pyc

ADDED

Binary file (918 Bytes).

nets/__pycache__/modules.cpython-311.pyc

ADDED

Binary file (1.85 kB).

nets/__pycache__/modules.cpython-37.pyc

ADDED

Binary file (1.01 kB).

nets/__pycache__/nets.cpython-311.pyc

ADDED

Binary file (3.61 kB).

nets/__pycache__/nets.cpython-37.pyc

ADDED

Binary file (1.59 kB).

nets/backbones.py

ADDED

@@ -0,0 +1,57 @@

```python
import os, sys
from libs import *
from .layers import *
from .modules import *
from .bblocks import *

class LightSEResNet18(nn.Module):
    def __init__(self,
        base_channels = 64,
    ):
        super(LightSEResNet18, self).__init__()
        self.bblock = LightSEResBlock
        self.stem = nn.Sequential(
            nn.Conv1d(
                1, base_channels,
                kernel_size = 15, padding = 7, stride = 2,
            ),
            nn.BatchNorm1d(base_channels),
            nn.ReLU(),
            nn.MaxPool1d(
                kernel_size = 3, padding = 1, stride = 2,
            ),
        )
        self.stage_0 = nn.Sequential(
            self.bblock(base_channels),
            self.bblock(base_channels),
        )

        self.stage_1 = nn.Sequential(
            self.bblock(base_channels*1, downsample = True),
            self.bblock(base_channels*2),
        )
        self.stage_2 = nn.Sequential(
            self.bblock(base_channels*2, downsample = True),
            self.bblock(base_channels*4),
        )
        self.stage_3 = nn.Sequential(
            self.bblock(base_channels*4, downsample = True),
            self.bblock(base_channels*8),
        )

        self.pool = nn.AdaptiveAvgPool1d(1)

    def forward(self,
        input,
    ):
        output = self.stem(input)
        output = self.stage_0(output)

        output = self.stage_1(output)
        output = self.stage_2(output)
        output = self.stage_3(output)

        output = self.pool(output)

        return output
```
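The time length after each layer of `LightSEResNet18` follows the standard 1-D convolution and pooling formula `L_out = floor((L_in + 2*padding - kernel) / stride) + 1`. This holds with dilation 1, which is the case for every layer here. The sketch below traces one lead through the stem and stages using the kernel, padding, and stride values in `backbones.py` and `bblocks.py`. It also checks that the main branch and the identity branch of each downsampling block agree in length, which the residual addition requires. It only does the arithmetic and does not build the PyTorch model. The function names are hypothetical.

```python
def conv_out_len(length: int, kernel: int, padding: int, stride: int) -> int:
    """Output length of nn.Conv1d / nn.MaxPool1d with dilation 1 (floor rounding)."""
    return (length + 2 * padding - kernel) // stride + 1


def trace_light_se_resnet18(length: int, base_channels: int = 64):
    """List (stage, channels, length) after each part of LightSEResNet18 for one lead."""
    trace = []
    # Stem: Conv1d(k=15, p=7, s=2) followed by MaxPool1d(k=3, p=1, s=2)
    length = conv_out_len(length, kernel=15, padding=7, stride=2)
    length = conv_out_len(length, kernel=3, padding=1, stride=2)
    channels = base_channels
    trace.append(("stem", channels, length))
    # stage_0: blocks without downsampling use k=7, p=3, s=1, so the shape is unchanged
    length = conv_out_len(length, kernel=7, padding=3, stride=1)
    trace.append(("stage_0", channels, length))
    for name in ("stage_1", "stage_2", "stage_3"):
        # First block downsamples: conv_1 uses k=7, p=3, s=2; the identity branch k=1, p=0, s=2
        main = conv_out_len(length, kernel=7, padding=3, stride=2)
        shortcut = conv_out_len(length, kernel=1, padding=0, stride=2)
        if main != shortcut:
            raise ValueError(f"{name}: residual branches disagree ({main} vs {shortcut})")
        length, channels = main, channels * 2  # out_channels = in_channels * 2
        trace.append((name, channels, length))
    return trace


if __name__ == "__main__":
    for stage, channels, length in trace_light_se_resnet18(5000):
        print(f"{stage:8s} channels={channels:4d} length={length}")
```

For a 5000-sample lead (the `ecg_length` used in `predicts.py`), this gives 1250 samples after the stem and 157 samples with 512 channels after `stage_3`. `AdaptiveAvgPool1d(1)` then reduces that to one 512-value vector per lead. This is consistent with `nets.py` sizing its lead-wise attention input as `base_channels*24` (three leads of 512 values each).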

nets/bblocks.py

ADDED

@@ -0,0 +1,55 @@

```python
import os, sys
from libs import *
from .layers import *
from .modules import *

class LightSEResBlock(nn.Module):
    def __init__(self,
        in_channels,
        downsample = False,
    ):
        super(LightSEResBlock, self).__init__()
        if downsample:
            self.out_channels = in_channels*2
            self.conv_1 = DSConv1d(
                in_channels, self.out_channels,
                kernel_size = 7, padding = 3, stride = 2,
            )
            self.identity = nn.Sequential(
                DSConv1d(
                    in_channels, self.out_channels,
                    kernel_size = 1, padding = 0, stride = 2,
                ),
                nn.BatchNorm1d(self.out_channels),
            )
        else:
            self.out_channels = in_channels
            self.conv_1 = DSConv1d(
                in_channels, self.out_channels,
                kernel_size = 7, padding = 3, stride = 1,
            )
            self.identity = nn.Identity()
        self.conv_2 = DSConv1d(
            self.out_channels, self.out_channels,
            kernel_size = 7, padding = 3, stride = 1,
        )

        self.convs = nn.Sequential(
            self.conv_1,
            nn.BatchNorm1d(self.out_channels),
            nn.ReLU(),
            nn.Dropout(0.3),
            self.conv_2,
            nn.BatchNorm1d(self.out_channels),
            LightSEModule(self.out_channels),
        )
        self.act_fn = nn.ReLU()

    def forward(self,
        input,
    ):
        output = self.convs(input) + self.identity(input)
        output = self.act_fn(output)

        return output
```

nets/layers.py

ADDED

@@ -0,0 +1,29 @@

```python
import os, sys
from libs import *

class DSConv1d(nn.Module):
    def __init__(self,
        in_channels, out_channels,
        kernel_size, padding = 0, stride = 1,
    ):
        super(DSConv1d, self).__init__()
        self.dw_conv = nn.Conv1d(
            in_channels, in_channels,
            kernel_size = kernel_size, padding = padding, stride = stride,
            groups = in_channels,
            bias = False,
        )
        self.pw_conv = nn.Conv1d(
            in_channels, out_channels,
            kernel_size = 1,
            bias = False,
        )

    def forward(self,
        input,
    ):
        output = self.dw_conv(input)
        output = self.pw_conv(output)

        return output
```
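`DSConv1d` splits a convolution into two steps. The depthwise step (`groups = in_channels`) applies one length-`kernel_size` filter per input channel, and the pointwise 1x1 step mixes channels. Both are created with `bias = False`. Counting weights shows how much smaller this is than a plain `nn.Conv1d` with the same shape. The helpers below are hypothetical and only do the arithmetic.

```python
def conv1d_weights(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Weights of a standard nn.Conv1d without bias."""
    return in_channels * out_channels * kernel_size


def dsconv1d_weights(in_channels: int, out_channels: int, kernel_size: int) -> int:
    """Weights of DSConv1d: depthwise (groups=in_channels) plus pointwise (kernel 1), no bias."""
    depthwise = in_channels * kernel_size   # one filter of length kernel_size per input channel
    pointwise = in_channels * out_channels  # 1x1 convolution that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    for c_in, c_out in [(64, 128), (512, 512)]:
        std = conv1d_weights(c_in, c_out, 7)
        ds = dsconv1d_weights(c_in, c_out, 7)
        print(f"{c_in}->{c_out}, k=7: standard {std}, depthwise-separable {ds}, ratio {std / ds:.1f}x")
```

For the first downsampling convolution in `stage_1` (64 to 128 channels, kernel 7), this gives 57,344 weights for a standard convolution and 8,640 for `DSConv1d`, roughly 6.6 times fewer.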

nets/modules.py

ADDED

@@ -0,0 +1,33 @@

```python
import os, sys
from libs import *
from .layers import *

class LightSEModule(nn.Module):
    def __init__(self,
        in_channels,
        reduction = 16,
    ):
        super(LightSEModule, self).__init__()
        self.pool = nn.AdaptiveAvgPool1d(1)

        self.s_conv = DSConv1d(
            in_channels, in_channels//reduction,
            kernel_size = 1,
        )
        self.act_fn = nn.ReLU()
        self.e_conv = DSConv1d(
            in_channels//reduction, in_channels,
            kernel_size = 1,
        )

    def forward(self,
        input,
    ):
        attention_scores = self.pool(input)

        attention_scores = self.s_conv(attention_scores)
        attention_scores = self.act_fn(attention_scores)
        attention_scores = self.e_conv(attention_scores)

        return input*torch.sigmoid(attention_scores)
```
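`LightSEModule` works as a squeeze-and-excitation gate in four steps:

1. It averages each channel over time.
2. It passes that summary through a bottleneck of `in_channels // reduction` channels and back out.
3. It applies a sigmoid to get one weight per channel.
4. It multiplies the input by those weights.

With `kernel_size = 1` on a length-1 input, each `DSConv1d` reduces to a per-channel scale (depthwise) followed by a channel-mixing matrix (pointwise). The NumPy sketch below follows that reading with random weights. `light_se`, `dsconv_k1`, and `random_params` are hypothetical names, and nothing here loads the trained checkpoints.

```python
import numpy as np


def dsconv_k1(x, depthwise, pointwise):
    """DSConv1d with kernel_size=1 and no bias on x of shape (batch, channels, length).

    depthwise: (channels,) per-channel scale; pointwise: (out_channels, channels).
    """
    x = x * depthwise[None, :, None]                 # depthwise conv with kernel 1 = per-channel scale
    return np.einsum("oc,bcl->bol", pointwise, x)    # pointwise 1x1 conv = channel mixing


def light_se(x, params):
    """Squeeze-and-excitation gating following LightSEModule.forward (NumPy sketch)."""
    s = x.mean(axis=-1, keepdims=True)                  # AdaptiveAvgPool1d(1): (B, C, 1)
    s = dsconv_k1(s, params["s_dw"], params["s_pw"])    # squeeze to C // reduction channels
    s = np.maximum(s, 0.0)                              # ReLU
    s = dsconv_k1(s, params["e_dw"], params["e_pw"])    # expand back to C channels
    gate = 1.0 / (1.0 + np.exp(-s))                     # sigmoid: one weight in (0, 1) per channel
    return x * gate                                     # broadcast the gate over the time axis


def random_params(channels, reduction=16, seed=0):
    """Random stand-in weights with the shapes LightSEModule would use."""
    rng = np.random.default_rng(seed)
    hidden = channels // reduction
    return {
        "s_dw": rng.normal(size=channels),
        "s_pw": rng.normal(size=(hidden, channels)),
        "e_dw": rng.normal(size=hidden),
        "e_pw": rng.normal(size=(channels, hidden)),
    }
```

The gate depends only on the time-averaged channel summary, so every time step of a channel is scaled by the same factor. The output keeps the input's shape, which is what lets `LightSEResBlock` add it to the identity branch.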

nets/nets.py

ADDED

@@ -0,0 +1,73 @@

```python
import os, sys
from libs import *
from .layers import *
from .modules import *
from .bblocks import *
from .backbones import *

class LightX3ECG(nn.Module):
    def __init__(self,
        base_channels = 64,
        num_classes = 1,
    ):
        super(LightX3ECG, self).__init__()
        self.backbone_0 = LightSEResNet18(base_channels)
        self.backbone_1 = LightSEResNet18(base_channels)
        self.backbone_2 = LightSEResNet18(base_channels)
        self.lw_attention = nn.Sequential(
            nn.Linear(
                base_channels*24, base_channels*8,
            ),
            nn.BatchNorm1d(base_channels*8),
            nn.ReLU(),
            nn.Dropout(0.3),
            nn.Linear(
                base_channels*8, 3,
            ),
        )

        self.classifier = nn.Sequential(
            nn.Dropout(0.2),
            nn.Linear(
                base_channels*8, num_classes,
            ),
        )

    def forward(self,
        input,
        return_attention_scores = False,
    ):
        features_0 = self.backbone_0(input[:, 0, :].unsqueeze(1)).squeeze(2)
        features_1 = self.backbone_1(input[:, 1, :].unsqueeze(1)).squeeze(2)
        features_2 = self.backbone_2(input[:, 2, :].unsqueeze(1)).squeeze(2)
        attention_scores = torch.sigmoid(
            self.lw_attention(
                torch.cat(
                    [
                        features_0,
                        features_1,
                        features_2,
                    ],
                    dim = 1,
                )
            )
        )
        merged_features = torch.sum(
            torch.stack(
                [
                    features_0,
                    features_1,
                    features_2,
                ],
                dim = 1,
            )*attention_scores.unsqueeze(-1),
            dim = 1,
        )

        output = self.classifier(merged_features)

        if not return_attention_scores:
            return output
        else:
            return output, attention_scores
```
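`LightX3ECG.forward` runs each of the three selected leads through its own backbone. It then concatenates the three feature vectors to compute one attention score per lead, and returns the per-lead features weighted by those scores and summed over the lead axis. The NumPy sketch below shows that fusion step. A single linear layer stands in for `self.lw_attention`, whose real hidden layer, `BatchNorm1d`, `ReLU`, and `Dropout` are omitted. `lead_attention_logits` and `fuse_leads` are hypothetical names.

```python
import numpy as np


def lead_attention_logits(features, weight, bias):
    """Simplified stand-in for self.lw_attention: one linear layer on the concatenated features.

    features: list of 3 arrays of shape (batch, C); weight: (3 * C, 3); bias: (3,).
    """
    concat = np.concatenate(features, axis=1)  # (batch, 3 * C), like torch.cat(..., dim=1)
    return concat @ weight + bias              # (batch, 3): one raw score per lead


def fuse_leads(features, attention_logits):
    """Attention-weighted sum of per-lead features, following LightX3ECG.forward."""
    scores = 1.0 / (1.0 + np.exp(-attention_logits))      # torch.sigmoid
    stacked = np.stack(features, axis=1)                  # (batch, 3, C), like torch.stack(dim=1)
    merged = np.sum(stacked * scores[..., None], axis=1)  # scale each lead, then sum over leads
    return merged, scores
```

The scores come from a sigmoid rather than a softmax, so each lead is weighted independently in (0, 1) and the three weights need not sum to 1. The merged vector has `base_channels*8` values, matching the input size of `self.classifier`.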

predicts.py

ADDED

@@ -0,0 +1,118 @@

```python
from libs import *
import configVars
from tools import tools
from data import ECGDataset

def procesar_archivo(format,number,unit,frec,file):
    try:
        prepare_data(format,number,unit,frec,file)
        antonior92 = predict_antonior92()
        CPSC = predict_CPSC_2018()
        Chapman = predict_Chapman()
        result = pd.DataFrame(data = [['Antonior92',antonior92],['CPSC-2018',CPSC],['Chapman',Chapman]],columns=['Red','Predicción'])
        tools.ecgPlot("./datasets/pred.npy",500)
        return result, "ecg.png"
    except:
        return pd.DataFrame(data = ["Se ha producido un error inesperado.","Compruebe que los datos de entrada sean correctos"],columns = ["ERROR."]), "error.jpg"


def predict_CPSC_2018():
    config = {
        "ecg_leads":[
            0, 1,
            6,
        ],
        "ecg_length":5000,
        "is_multilabel":True,
    }

    train_loaders = {
        "pred":torch.utils.data.DataLoader(
            ECGDataset(
                df_path = f"{configVars.pathCasos}pred.csv", data_path = f"{configVars.pathCasos}",
                config = config,
                augment = False,
            ),
            timeout=0
        )
    }
    save_ckp_dir = f"{configVars.pathModel}CPSC-2018"

    pred = tools.LightX3ECG(
        train_loaders,
        config,
        save_ckp_dir,
    )
    return pred if len(pred) != 0 else ['El archivo introducido no satisface ninguno de los criterios de clasificación']

def predict_Chapman():
    config = {
        "ecg_leads":[
            0, 1,
            6,
        ],
        "ecg_length":5000,
        "is_multilabel":False,
    }

    train_loaders = {
        "pred":torch.utils.data.DataLoader(
            ECGDataset(
                df_path = f"{configVars.pathCasos}pred.csv", data_path = f"{configVars.pathCasos}",
                config = config,
                augment = False,
            ),
            timeout=0
        )
    }
    save_ckp_dir = f"{configVars.pathModel}Chapman"

    pred = tools.LightX3ECG(
        train_loaders,
        config,
        save_ckp_dir,
    )
    return pred

def predict_antonior92():
    f = h5py.File(f"{configVars.pathCasos}pred.hdf5", 'r')
    model = load_model(f"{configVars.pathModel}/antonior92/model.hdf5", compile=False)
    model.compile(loss='binary_crossentropy', optimizer=Adam())
    pred = model.predict(f['tracings'], verbose=0)
    optimal_thresholds = pd.read_csv(f"{configVars.pathThresholds}antonior92/optimal_thresholds_best.csv")
    result = optimal_thresholds[optimal_thresholds["Threshold"]<=pred[0]]
    result = result['Pred'].values.tolist()
    f.close()

    return result if len(result) != 0 else ['Normal']

def prepare_data(format,number,unit,frec,file):
    units = {
        'V':0.001,
        'miliV':1,
        'microV':1000,
        'nanoV':1000000
    }
    if(format == 'XMLsierra'):
        f = read_file(file.name)
        df = pd.DataFrame()
        for lead in f.leads:
            df[lead.label]=lead.samples
        data = df
    elif(format == 'CSV'):
        data = pd.read_csv(file.name,header = None)

    data = data[:-200]
    data = data.T
    leads = len(data)
    frec = frec if frec>0 else 1
    scale = 1/(number*units[unit])
    ecg_preprocessed = tools.preprocess_ecg(data, frec, leads,
        scale=scale,######### modify so that the data can be converted according to the unit entered
        use_all_leads=True,
        remove_baseline=True)
    tools.generateH5(ecg_preprocessed,
        "pred.hdf5",new_freq=400,new_len=4096,
        scale=2,sample_rate = frec)

    np.save(f"{configVars.pathCasos}pred.npy",ecg_preprocessed )
```
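In `prepare_data`, the slider value `number` and the dropdown `unit` are combined into `scale = 1/(number*units[unit])`, and `preprocess_ecg` multiplies the raw samples by that scale. Each factor in the `units` table is one millivolt expressed in that unit (for example, 1 mV = 0.001 V and 1 mV = 1000 microV). Read that way, `number` raw counts correspond to one `unit`, and the scaled samples come out in millivolts. That reading is an inference from the code, not something the commit states. The sketch below only reproduces the arithmetic. `amplitude_scale` and `counts_to_millivolts` are hypothetical names, and the filtering and resampling steps are left out.

```python
from typing import List, Sequence

# Copied from prepare_data: one millivolt expressed in each selectable unit
UNITS = {"V": 0.001, "miliV": 1, "microV": 1000, "nanoV": 1000000}


def amplitude_scale(number: float, unit: str) -> float:
    """scale = 1 / (number * UNITS[unit]), as computed in prepare_data."""
    return 1 / (number * UNITS[unit])


def counts_to_millivolts(samples: Sequence[float], number: float, unit: str) -> List[float]:
    """Multiply raw samples by the scale (the "# Rescale" step of preprocess_ecg only)."""
    scale = amplitude_scale(number, unit)
    return [s * scale for s in samples]
```

With the interface defaults (`number = 200`, `unit = "miliV"`), the scale is 0.005, so a raw value of 200 becomes 1.0.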

thresholds/CPSC-2018/optimal_thresholds_best.csv

ADDED

@@ -0,0 +1,10 @@

```csv
Pred,Threshold
Normal,0.6
AF,0.8
I-AVB,0.5
LBBB,0.15
RBBB,0.5
PAC,0.4
PVC,0.5
STD,0.55
STE,0.2
```

thresholds/antonior92/optimal_thresholds_best.csv

ADDED

@@ -0,0 +1,7 @@

```csv
Pred,Threshold
I-AVB,0.124
RBBB,0.07
LBBB,0.05
SB,0.278
AF,0.390
ST,0.174
```
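Both multilabel prediction paths, `predict_antonior92` and the `is_multilabel` branch of `tools.LightX3ECG`, use the same rule: `optimal_thresholds[optimal_thresholds["Threshold"] <= pred[0]]`. A pandas Series compared with a NumPy array of the same length is compared element by element, by position. Each threshold is therefore checked against the probability at the same index, so the CSV rows must follow the model's output order. The sketch below expresses this rule in plain Python using the CPSC-2018 thresholds above. `select_labels` is a hypothetical name, and the probabilities in the test are made up for illustration.

```python
from typing import List, Sequence

CPSC_LABELS = ["Normal", "AF", "I-AVB", "LBBB", "RBBB", "PAC", "PVC", "STD", "STE"]
CPSC_THRESHOLDS = [0.6, 0.8, 0.5, 0.15, 0.5, 0.4, 0.5, 0.55, 0.2]


def select_labels(labels: Sequence[str], thresholds: Sequence[float],
                  probabilities: Sequence[float]) -> List[str]:
    """Return the labels whose probability is at least their per-class threshold.

    Positional, like comparing the "Threshold" column with pred[0]: the i-th threshold
    is checked against the i-th model output.
    """
    if not (len(labels) == len(thresholds) == len(probabilities)):
        raise ValueError("one threshold and one probability per label are required")
    return [label for label, thr, p in zip(labels, thresholds, probabilities) if thr <= p]
```

If no class passes, the two paths differ: `predict_antonior92` falls back to `['Normal']`, while `predict_CPSC_2018` returns a message instead of a label.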

tools/__pycache__/tools.cpython-311.pyc

ADDED

Binary file (7.25 kB).

tools/__pycache__/tools.cpython-37.pyc

ADDED

Binary file (4.25 kB).

tools/tools.py

ADDED

@@ -0,0 +1,124 @@

```python
from libs import *
import configVars
import ecg_plot
def remove_baseline_filter(sample_rate):
    fc = 0.8 # [Hz], cutoff frequency
    fst = 0.2 # [Hz], rejection band
    rp = 0.5 # [dB], ripple in passband
    rs = 40 # [dB], attenuation in rejection band
    wn = fc / (sample_rate / 2)
    wst = fst / (sample_rate / 2)

    filterorder, aux = sgn.ellipord(wn, wst, rp, rs)
    sos = sgn.iirfilter(filterorder, wn, rp, rs, btype='high', ftype='ellip', output='sos')

    return sos

reduced_leads = ['DI', 'DII', 'V1', 'V2', 'V3', 'V4', 'V5', 'V6']
all_leads = ['DI', 'DII', 'DIII', 'AVR', 'AVL', 'AVF', 'V1', 'V2', 'V3', 'V4', 'V5', 'V6']

def preprocess_ecg(ecg, sample_rate, leads, scale=1,
                   use_all_leads=True, remove_baseline=False):
    # Remove baseline
    if remove_baseline:
        sos = remove_baseline_filter(sample_rate)
        ecg_nobaseline = sgn.sosfiltfilt(sos, ecg, padtype='constant', axis=-1)
    else:
        ecg_nobaseline = ecg

    # Rescale
    ecg_rescaled = scale * ecg_nobaseline

    # Resample
    if sample_rate != 500:
        ecg_resampled = sgn.resample_poly(ecg_rescaled, up=500, down=sample_rate, axis=-1)
    else:
        ecg_resampled = ecg_rescaled
    length = len(ecg_resampled[0])

    # Add leads if needed
    target_leads = all_leads if use_all_leads else reduced_leads
    n_leads_target = len(target_leads)
    l2p = dict(zip(target_leads, range(n_leads_target)))
    ecg_targetleads = np.zeros([n_leads_target, length])
    ecg_targetleads = ecg_rescaled
    if n_leads_target >= leads and use_all_leads:
        ecg_targetleads[l2p['DIII'], :] = ecg_targetleads[l2p['DII'], :] - ecg_targetleads[l2p['DI'], :]
        ecg_targetleads[l2p['AVR'], :] = -(ecg_targetleads[l2p['DI'], :] + ecg_targetleads[l2p['DII'], :]) / 2
        ecg_targetleads[l2p['AVL'], :] = (ecg_targetleads[l2p['DI'], :] - ecg_targetleads[l2p['DIII'], :]) / 2
        ecg_targetleads[l2p['AVF'], :] = (ecg_targetleads[l2p['DII'], :] + ecg_targetleads[l2p['DIII'], :]) / 2

    return ecg_targetleads
```
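The "Add leads if needed" step of `preprocess_ecg` fills DIII, aVR, aVL, and aVF from leads I and II. It uses Einthoven's relation (III = II - I) and the Goldberger augmented-lead formulas. As committed, `ecg_targetleads` is rebound to `ecg_rescaled`, which has three consequences: the zero array is discarded, the derived leads overwrite rows 2 to 5 of the rescaled input, and `ecg_resampled` is used only to compute `length`. The NumPy sketch below instead builds a new 12-lead array from an 8-lead input ordered like `reduced_leads`. `derive_limb_leads` and `expand_to_12_leads` are hypothetical names, not functions in `tools.py`.

```python
import numpy as np

REDUCED_LEADS = ['DI', 'DII', 'V1', 'V2', 'V3', 'V4', 'V5', 'V6']
ALL_LEADS = ['DI', 'DII', 'DIII', 'AVR', 'AVL', 'AVF', 'V1', 'V2', 'V3', 'V4', 'V5', 'V6']


def derive_limb_leads(lead_i, lead_ii):
    """Return DIII, aVR, aVL and aVF computed from leads I and II."""
    lead_iii = lead_ii - lead_i               # Einthoven: III = II - I
    return {
        'DIII': lead_iii,
        'AVR': -(lead_i + lead_ii) / 2,       # same formulas as preprocess_ecg
        'AVL': (lead_i - lead_iii) / 2,
        'AVF': (lead_ii + lead_iii) / 2,
    }


def expand_to_12_leads(ecg_8):
    """Turn an (8, n) array ordered like REDUCED_LEADS into a new (12, n) array ordered like ALL_LEADS."""
    ecg_8 = np.asarray(ecg_8, dtype=float)
    if ecg_8.shape[0] != len(REDUCED_LEADS):
        raise ValueError("expected 8 leads ordered as REDUCED_LEADS")
    leads = dict(zip(REDUCED_LEADS, ecg_8))   # iterating a 2-D array yields its rows
    leads.update(derive_limb_leads(leads['DI'], leads['DII']))
    return np.stack([leads[name] for name in ALL_LEADS])
```

For example, with lead I = 1.0 and lead II = 2.0 at every sample, the derived leads are III = 1.0, aVR = -1.5, aVL = 0.0, and aVF = 1.5.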

```python
def generateH5(input_file,out_file,new_freq=None,new_len=None,scale=1,sample_rate=None):
    n = len(input_file) # Get length
    try:
        h5f = h5py.File(f"{configVars.pathCasos}{out_file}", 'r+')
        h5f.clear()
    except:
        h5f = h5py.File(f"{configVars.pathCasos}{out_file}", 'w')

    # Resample
    if new_freq is not None:
        ecg_resampled = sgn.resample_poly(input_file, up=new_freq, down=sample_rate, axis=-1)
    else:
        ecg_resampled = input_file
        new_freq = sample_rate
    n_leads, length = ecg_resampled.shape

    # Rescale
    ecg_rescaled = scale * ecg_resampled

    # Reshape
    if new_len is None or new_len == length:
        ecg_reshaped = ecg_rescaled
    elif new_len > length:
        ecg_reshaped = np.zeros([n_leads, new_len])
        pad = (new_len - length) // 2
        ecg_reshaped[..., pad:length+pad] = ecg_rescaled
    else:
        extra = (length - new_len) // 2
        ecg_reshaped = ecg_rescaled[:, extra:new_len + extra]

    n_leads, n_samples = ecg_reshaped.shape
    x = h5f.create_dataset('tracings', (1, n_samples, n_leads), dtype='f8')
    x[0, :, :] = ecg_reshaped.T
    h5f.close()
```
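After resampling to `new_freq` and multiplying by `scale`, `generateH5` centers the signal in a window of `new_len` samples. When the signal is shorter, zeros are added at both ends. When it is longer, samples are cut from both ends. If the difference is odd, the end gets one more padded or removed sample than the start. The array is then stored transposed as `(1, n_samples, n_leads)` under `tracings`, the dataset that `predict_antonior92` passes to `model.predict`. The NumPy sketch below covers the reshape and layout steps and leaves out resampling. `center_fit` and `to_tracings` are hypothetical names.

```python
import numpy as np


def center_fit(ecg: np.ndarray, new_len: int) -> np.ndarray:
    """Zero-pad or crop an (n_leads, length) array around its center to new_len samples."""
    n_leads, length = ecg.shape
    if new_len == length:
        return ecg
    if new_len > length:
        out = np.zeros((n_leads, new_len), dtype=ecg.dtype)
        pad = (new_len - length) // 2          # an odd leftover zero ends up at the end
        out[:, pad:pad + length] = ecg
        return out
    extra = (length - new_len) // 2            # an odd leftover sample is removed from the end
    return ecg[:, extra:extra + new_len]


def to_tracings(ecg: np.ndarray) -> np.ndarray:
    """Reorder (n_leads, n_samples) to the (1, n_samples, n_leads) layout of 'tracings'."""
    return ecg.T[np.newaxis, :, :]
```

For example, a 12-lead tracing of 4000 samples (10 s at 400 Hz) fitted to `new_len = 4096` gets 48 zeros at each end.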

| 89 |

+

def LightX3ECG(

|

| 90 |

+

train_loaders,

|

| 91 |

+

config,

|

| 92 |

+

save_ckp_dir,

|

| 93 |

+

):

|

| 94 |

+

model = torch.load(f"{save_ckp_dir}/best.ptl", map_location='cpu')

|

| 95 |

+

#model = torch.load(f"{save_ckp_dir}/best.ptl", map_location = "cuda")

|

| 96 |

+

model.to(torch.device('cpu'))

|

| 97 |

+

with torch.no_grad():

|

| 98 |

+

model.eval()

|

| 99 |

+

running_preds = []

|

| 100 |

+

|

| 101 |

+

for ecgs in train_loaders["pred"]:

|

| 102 |

+

ecgs = ecgs.cpu()

|

| 103 |

+

logits = model(ecgs)

|

| 104 |

+

preds = list(torch.max(logits, 1)[1].detach().cpu().numpy()) if not config["is_multilabel"] else list(torch.sigmoid(logits).detach().cpu().numpy())

|

| 105 |

+

running_preds.extend(preds)

|

| 106 |

+

|

| 107 |

+

if config["is_multilabel"]:

|

| 108 |

+

running_preds = np.array(running_preds)

|

| 109 |

+

optimal_thresholds = pd.read_csv(f"{configVars.pathThresholds}CPSC-2018/optimal_thresholds_best.csv")

|

| 110 |

+

preds = optimal_thresholds[optimal_thresholds["Threshold"]<=running_preds[0]]

|

| 111 |

+

preds = preds['Pred'].values.tolist()

|

| 112 |

+

else:

|

| 113 |

+

enfermedades = ['AFIB','GSVT','SB','SR']

|

| 114 |

+

running_preds = np.array(running_preds)

|

| 115 |

+

#running_preds=np.reshape(running_preds, (len(running_preds),-1))

|

| 116 |

+

preds = enfermedades[running_preds[0]]

|

| 117 |

+

return preds

|

| 118 |

+

|

| 119 |

+

def ecgPlot(source,sample):

|

| 120 |

+

data = np.load(source)

|

| 121 |

+

#print(data)

|

| 122 |

+

xml_leads = ['DI', 'DII', 'DIII', 'AVR', 'AVL', 'AVF', 'V1', 'V2', 'V3', 'V4', 'V5', 'V6']

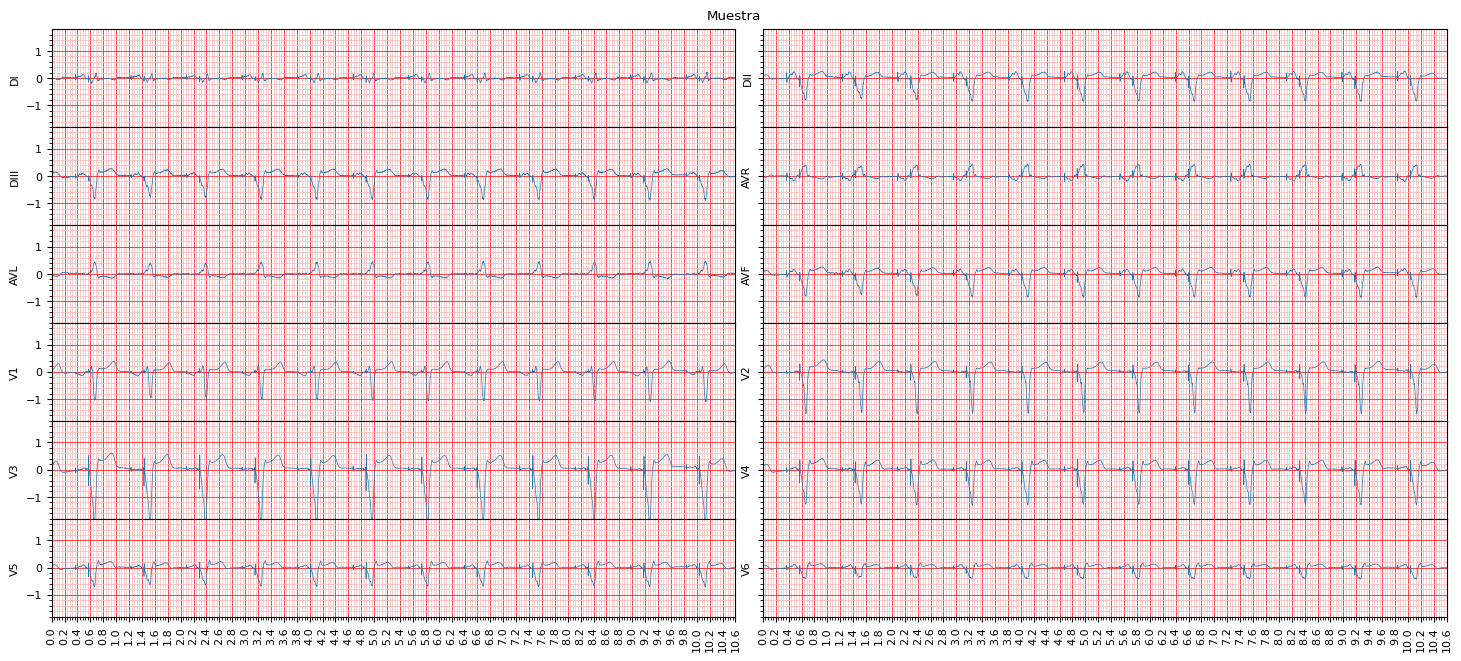

|

| 123 |

+

ecg_plot.plot_12(data, sample_rate= sample,lead_index=xml_leads, title="Muestra")

|

| 124 |

+

ecg_plot.save_as_png("ecg")

|
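For the Chapman model (`is_multilabel = False`), `tools.LightX3ECG` takes `torch.max(logits, 1)[1]`, the index of the largest logit in each row, and looks that index up in `enfermedades`. Softmax is monotonic, so the largest logit also marks the most probable class, and no normalization is needed before the lookup. Because `pred.csv` lists a single `id`, `running_preds[0]` is the only record. The plain-Python sketch below handles one output row. `decode_multiclass` is a hypothetical name, and the logits in the test are illustrative.

```python
from typing import Sequence

ENFERMEDADES = ['AFIB', 'GSVT', 'SB', 'SR']  # class order copied from tools.LightX3ECG


def argmax(values: Sequence[float]) -> int:
    """Index of the largest value; the first such index if several are equal."""
    return max(range(len(values)), key=lambda i: values[i])


def decode_multiclass(logits_row: Sequence[float]) -> str:
    """Map one row of logits to a rhythm label, as the non-multilabel branch does."""
    if len(logits_row) != len(ENFERMEDADES):
        raise ValueError("expected one logit per class")
    return ENFERMEDADES[argmax(logits_row)]
```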