
Commit 5dd3935
Parent(s): 4a6f2fb

clean

Files changed:

- biomap/app.py +29 -22
- biomap/helper.py +57 -110
- biomap/inference.py +13 -211
- biomap/label.png +0 -0
- biomap/output/label.png +0 -0
- biomap/output/labeled_img.png +0 -0
- biomap/plot_functions.py +7 -283
- biomap/utils.py +229 -49
- biomap/utils_gee.py +15 -6
- poetry.lock +0 -0
- pyproject.toml +2 -0

biomap/app.py

CHANGED

Before:

```diff
@@ -9,14 +9,13 @@ from plot_functions import segment_region
 from functools import partial
 import gradio as gr
 import logging
 
 import geopandas as gpd
 mapbox_access_token = "pk.eyJ1IjoiamVyZW15LWVraW1ldHJpY3MiLCJhIjoiY2xrNjBwNGU2MDRhMjNqbWw0YTJrbnpvNCJ9.poVyIzhJuJmD6ffrL9lm2w"
 geo_df = gpd.read_file(gpd.datasets.get_path('naturalearth_cities'))
 
 def get_geomap(long, lat ):
-
-
     fig = go.Figure(go.Scattermapbox(
         lat=geo_df.geometry.y,
         lon=geo_df.geometry.x,
@@ -53,17 +52,19 @@ def get_geomap(long, lat ):
 
 
 if __name__ == "__main__":
-
-
-
     # Initialize hydra with configs
-
     cfg = hydra.compose(config_name="my_train_config.yml")
     logging.info(f"config : {cfg}")
-    # Load the model
 
     nbclasses = cfg.dir_dataset_n_classes
     model = LitUnsupervisedSegmenter(nbclasses, cfg)
     logging.info(f"Model Initialiazed")
 
     model_path = "biomap/checkpoint/model/model.pt"
@@ -71,15 +72,19 @@ if __name__ == "__main__":
     logging.info(f"Model weights Loaded")
     model.load_state_dict(saved_state_dict)
     logging.info(f"Model Loaded")
-
-
-        gr.Markdown("Estimate Biodiversity in the world
         with gr.Tab("Single Image"):
             with gr.Row():
-                input_map = gr.Plot()
                 with gr.Column():
-
-
                     input_date = gr.Textbox(label="start_date", value="2020-03-20")
 
                     single_button = gr.Button("Predict")
@@ -90,15 +95,17 @@ if __name__ == "__main__":
 
         with gr.Tab("TimeLapse"):
             with gr.Row():
-                input_map_2 = gr.Plot()
-
-
-
-
-
-
                     timelapse_button = gr.Button(value="Predict")
-            map = gr.Plot()
 
         demo.load(get_geomap, [input_latitude, input_longitude], input_map)
         single_button.click(get_geomap, [input_latitude, input_longitude], input_map)
@@ -106,5 +113,5 @@ if __name__ == "__main__":
 
         demo.load(get_geomap, [timelapse_input_latitude, timelapse_input_longitude], input_map_2)
         timelapse_button.click(get_geomap, [timelapse_input_latitude, timelapse_input_longitude], input_map_2)
-        timelapse_button.click(
     demo.launch()
```

After:

```diff
@@ -9,14 +9,13 @@ from plot_functions import segment_region
 from functools import partial
 import gradio as gr
 import logging
+import sys
 
 import geopandas as gpd
 mapbox_access_token = "pk.eyJ1IjoiamVyZW15LWVraW1ldHJpY3MiLCJhIjoiY2xrNjBwNGU2MDRhMjNqbWw0YTJrbnpvNCJ9.poVyIzhJuJmD6ffrL9lm2w"
 geo_df = gpd.read_file(gpd.datasets.get_path('naturalearth_cities'))
 
 def get_geomap(long, lat ):
     fig = go.Figure(go.Scattermapbox(
         lat=geo_df.geometry.y,
         lon=geo_df.geometry.x,
@@ -53,17 +52,19 @@ def get_geomap(long, lat ):
 
 
 if __name__ == "__main__":
+    file_handler = logging.FileHandler(filename='biomap.log')
+    stdout_handler = logging.StreamHandler(stream=sys.stdout)
+    handlers = [file_handler, stdout_handler]
+
+    logging.basicConfig(handlers=handlers, encoding='utf-8', level=logging.INFO, format="%(asctime)s - %(name)s - %(levelname)s - %(message)s")
     # Initialize hydra with configs
+    hydra.initialize(config_path="configs", job_name="corine")
     cfg = hydra.compose(config_name="my_train_config.yml")
     logging.info(f"config : {cfg}")
 
     nbclasses = cfg.dir_dataset_n_classes
     model = LitUnsupervisedSegmenter(nbclasses, cfg)
+    model = model.cpu()
     logging.info(f"Model Initialiazed")
 
     model_path = "biomap/checkpoint/model/model.pt"
```
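The new `__main__` block in `app.py` sends log records both to `biomap.log` and to standard output by passing two handlers to `logging.basicConfig`. Below is a minimal standalone sketch of the same pattern. The log path and the `biomap` logger name are illustrative. According to the standard-library docs, `basicConfig`'s own `encoding` argument only takes effect when `basicConfig` builds a `FileHandler` from `filename`. When handlers are passed explicitly, it is ignored. For that reason, this sketch sets the encoding on the `FileHandler` directly.

```python
import logging
import sys
import tempfile
from pathlib import Path


def configure_logging(log_path: Path) -> logging.Logger:
    """Send INFO+ records to both a UTF-8 log file and stdout (sketch)."""
    # Encoding goes on the FileHandler itself: basicConfig only uses its own
    # `encoding` argument when it creates a FileHandler from `filename`.
    file_handler = logging.FileHandler(filename=log_path, encoding="utf-8")
    stdout_handler = logging.StreamHandler(stream=sys.stdout)
    logging.basicConfig(
        handlers=[file_handler, stdout_handler],
        level=logging.INFO,
        format="%(asctime)s - %(name)s - %(levelname)s - %(message)s",
        force=True,  # drop and close handlers from any earlier call (Python 3.8+)
    )
    return logging.getLogger("biomap")


if __name__ == "__main__":
    log_file = Path(tempfile.mkdtemp()) / "biomap.log"
    logger = configure_logging(log_file)
    logger.info("Model Loaded")
    # StreamHandler-based handlers flush after every record, so the file is readable now.
    print(log_file.read_text(encoding="utf-8"))
```

Passing `force=True` matters in notebooks or reloaded apps. Without it, a second `basicConfig` call silently does nothing once the root logger already has handlers.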

After (continued):

```diff
@@ -71,15 +72,19 @@ if __name__ == "__main__":
     logging.info(f"Model weights Loaded")
     model.load_state_dict(saved_state_dict)
     logging.info(f"Model Loaded")
+    with gr.Blocks(title="Biomap by Ekimetrics") as demo:
+        gr.Markdown("<h1><center>🐢 Biomap by Ekimetrics 🐢</center></h1>")
+        gr.Markdown("<h4><center>Estimate Biodiversity score in the world by using segmentation of land.</center></h4>")
+        gr.Markdown("Land use is divided into 6 differents classes :Each class is assigned a GBS score from 0 to 1")
+        gr.Markdown("Buildings : 0.1 | Infrastructure : 0.1 | Cultivation : 0.4 | Wetland : 0.9 | Water : 0.9 | Natural green : 1 ")
+        gr.Markdown("The score is then average on the full image.")
         with gr.Tab("Single Image"):
             with gr.Row():
+                input_map = gr.Plot()
                 with gr.Column():
+                    with gr.Row():
+                        input_latitude = gr.Number(label="lattitude", value=2.98)
+                        input_longitude = gr.Number(label="longitude", value=48.81)
                     input_date = gr.Textbox(label="start_date", value="2020-03-20")
 
                     single_button = gr.Button("Predict")
```
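The Markdown text added to `app.py` describes the scoring rule. Each of the six land-use classes carries a fixed GBS weight, and the image score is the average of those weights over all pixels. Below is a minimal sketch of that pixel-weighted average. The weights are copied from the Markdown. This diff does not show how class names map to the model's integer labels, so the function takes per-class pixel counts keyed by name. `gbs_score` is a hypothetical helper, not the project's `compute_biodiv_score`.

```python
from __future__ import annotations

# GBS weight per land-use class, as listed in the app's Markdown.
GBS_WEIGHTS = {
    "Buildings": 0.1,
    "Infrastructure": 0.1,
    "Cultivation": 0.4,
    "Wetland": 0.9,
    "Water": 0.9,
    "Natural green": 1.0,
}


def gbs_score(pixel_counts: dict[str, int]) -> float:
    """Pixel-weighted mean GBS weight (hypothetical helper, not compute_biodiv_score).

    pixel_counts maps a class name to the number of pixels predicted as that class.
    """
    total = sum(pixel_counts.values())
    if total == 0:
        raise ValueError("no labelled pixels")
    weighted = sum(GBS_WEIGHTS[name] * count for name, count in pixel_counts.items())
    return weighted / total


if __name__ == "__main__":
    # Half natural green, a quarter cultivation, a quarter buildings:
    # 0.5 * 1.0 + 0.25 * 0.4 + 0.25 * 0.1 = 0.625
    print(gbs_score({"Natural green": 50, "Cultivation": 25, "Buildings": 25}))
```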

After (continued):

```diff
@@ -90,15 +95,17 @@ if __name__ == "__main__":
 
         with gr.Tab("TimeLapse"):
             with gr.Row():
+                input_map_2 = gr.Plot()
+                with gr.Column():
+                    with gr.Row():
+                        timelapse_input_latitude = gr.Number(value=2.98, label="Latitude")
+                        timelapse_input_longitude = gr.Number(value=48.81, label="Longitude")
+                    with gr.Row():
+                        timelapse_start_date = gr.Dropdown(choices=[2017,2018,2019,2020,2021,2022,2023], value=2020, label="Start Date")
+                        timelapse_end_date = gr.Dropdown(choices=[2017,2018,2019,2020,2021,2022,2023], value=2021, label="End Date")
+                    segmentation = gr.Dropdown(choices=['month', 'year', '2months'], value='year', label="Interval of time between two segmentation")
                     timelapse_button = gr.Button(value="Predict")
+            map = gr.Plot()
 
         demo.load(get_geomap, [input_latitude, input_longitude], input_map)
         single_button.click(get_geomap, [input_latitude, input_longitude], input_map)
@@ -106,5 +113,5 @@ if __name__ == "__main__":
 
         demo.load(get_geomap, [timelapse_input_latitude, timelapse_input_longitude], input_map_2)
         timelapse_button.click(get_geomap, [timelapse_input_latitude, timelapse_input_longitude], input_map_2)
+        timelapse_button.click(partial(inference_on_location, model), inputs=[timelapse_input_latitude, timelapse_input_longitude, timelapse_start_date, timelapse_end_date,segmentation], outputs=[map])
     demo.launch()
```
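The new TimeLapse handler binds the loaded model with `functools.partial(inference_on_location, model)`. Gradio then only has to supply the five UI values, in the order of the `inputs` list, as the remaining positional arguments. The sketch below reproduces that argument flow without Gradio. `fake_inference_on_location` is a hypothetical stand-in that just echoes what it receives. Its signature mirrors `inference_on_location` in `biomap/helper.py`.

```python
from functools import partial


def fake_inference_on_location(model, latitude=2.98, longitude=48.81,
                               start_date=2020, end_date=2022, how="year"):
    """Hypothetical stand-in with the same signature as inference_on_location."""
    return {"model": model, "latitude": latitude, "longitude": longitude,
            "start_date": start_date, "end_date": end_date, "how": how}


# Bind the model once, as app.py does; the remaining parameters stay open.
handler = partial(fake_inference_on_location, "loaded-model")

# Gradio calls the handler with one positional value per component in `inputs`,
# in list order: latitude, longitude, start date, end date, interval.
ui_values = [2.98, 48.81, 2020, 2021, "year"]
result = handler(*ui_values)
print(result)
```

Because the values are positional, the order of the `inputs` list must match the parameter order after `model`. Reordering the components in the UI would silently swap arguments.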

biomap/helper.py

CHANGED

Before:

```diff
@@ -1,29 +1,16 @@
 import torch.multiprocessing
 import torchvision.transforms as T
 import numpy as np
-from utils import transform_to_pil,
-from utils_gee import
 from dateutil.relativedelta import relativedelta
 import datetime
-
-import cv2
-
 from joblib import Parallel, cpu_count, delayed
 
-def
-    print(f"getting image for {d1} to {d2}")
-    try:
-        img = extract_img(location, d1, d2)
-        img_test = transform_ee_img(
-            img, max=0.3
-        )
-        return img_test
-    except Exception as err:
-        print(err)
-        return
-
-
-def inference_on_location(model, latitude = 2.98, longitude = 48.81, start_date=2020, end_date=2022):
     """Performe an inference on the latitude and longitude between the start date and the end date
 
     Args:
@@ -36,64 +23,44 @@ def inference_on_location(model, latitude = 2.98, longitude = 48.81, start_date=
     Returns:
         img, labeled_img,biodiv_score: the original landscape, the labeled landscape and the biodiversity score and the landscape
     """
-
     location = [float(latitude), float(longitude)]
 
     # Extract img numpy from earth engine and transform it to PIL img
     dates = [datetime.datetime(start_date, 1, 1, 0, 0, 0)]
-    while dates[-1] < datetime.datetime(end_date, 1, 1, 0, 0, 0):
-        dates.append(dates[-1] + relativedelta(months=
 
     dates = [d.strftime("%Y-%m-%d") for d in dates]
 
     all_image = Parallel(n_jobs=cpu_count(), prefer="threads")(delayed(get_image)(location, d1,d2) for d1, d2 in zip(dates[:-1],dates[1:]))
-
 
 
-
-
-
-
-
-
-            T.Resize((320, 320)),
-            # T.CenterCrop(224),
-            T.ToTensor(),
-            T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
-        ]
-    )
-
-    # Preprocess opened img
-    x = torch.stack([preprocess(imag) for imag in all_image]).cpu()
-
-    # launch inference on cpu
-    # x = torch.unsqueeze(x, dim=0).cpu()
-    model = model.cpu()
-
-    with torch.no_grad():
-        feats, code = model.net(x)
-        linear_pred = model.linear_probe(x, code)
-        linear_pred = linear_pred.argmax(1)
-        outputs = [{
-            "img": torch.unsqueeze(img, dim=0).detach().cpu(),
-            "linear_preds": torch.unsqueeze(linear_pred, dim=0).detach().cpu(),
-        } for img, linear_pred in zip(x, linear_pred)]
-    all_img = []
-    all_label = []
-    all_labeled_img = []
-    for output in outputs:
-        img, label, labeled_img = transform_to_pil(output)
-        all_img.append(img)
-        all_label.append(label)
-        all_labeled_img.append(labeled_img)
-
-    all_labeled_img = [np.array(pil_image)[:, :, ::-1] for pil_image in all_labeled_img]
-    create_video(all_labeled_img, output_path='output/output.mp4')
-
-    # all_labeled_img = [np.array(pil_image)[:, :, ::-1] for pil_image in all_img]
-    # create_video(all_labeled_img, output_path='raw.mp4')
-
-    return 'output.mp4'
 
 def inference_on_location_and_month(model, latitude = 2.98, longitude = 48.81, start_date = '2020-03-20'):
     """Performe an inference on the latitude and longitude between the start date and the end date
@@ -108,59 +75,36 @@ def inference_on_location_and_month(model, latitude = 2.98, longitude = 48.81, s
     Returns:
         img, labeled_img,biodiv_score: the original landscape, the labeled landscape and the biodiversity score and the landscape
     """
     location = [float(latitude), float(longitude)]
 
     # Extract img numpy from earth engine and transform it to PIL img
     end_date = datetime.datetime.strptime(start_date, "%Y-%m-%d") + relativedelta(months=1)
     end_date = datetime.datetime.strftime(end_date, "%Y-%m-%d")
-
-
-
-
-
-
-
-
-
-
-
-            T.ToTensor(),
-            T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),
-        ]
-    )
-
-    # Preprocess opened img
-    x = preprocess(img_test)
-
-    # launch inference on cpu
-    x = torch.unsqueeze(x, dim=0).cpu()
-    model = model.cpu()
-
-    with torch.no_grad():
-        feats, code = model.net(x)
-        linear_pred = model.linear_probe(x, code)
-        linear_pred = linear_pred.argmax(1)
-        output = {
-            "img": x[: model.cfg.n_images].detach().cpu(),
-            "linear_preds": linear_pred[: model.cfg.n_images].detach().cpu(),
-        }
-    nb_values = []
-    for i in range(7):
-        nb_values.append(np.count_nonzero(output['linear_preds'][0] == i+1))
-    scores_init = [2,3,4,3,1,4,0]
-    score = sum(x * y for x, y in zip(scores_init, nb_values)) / sum(nb_values) / max(scores_init)
-
-    img, label, labeled_img = transform_to_pil(output)
-    return img, labeled_img,score
 
 
 if __name__ == "__main__":
     import logging
     import hydra
-
-
     from model import LitUnsupervisedSegmenter
-    logging.
     # Initialize hydra with configs
     hydra.initialize(config_path="configs", job_name="corine")
     cfg = hydra.compose(config_name="my_train_config.yml")
@@ -171,9 +115,12 @@ if __name__ == "__main__":
     model = LitUnsupervisedSegmenter(nbclasses, cfg)
     logging.info(f"Model Initialiazed")
 
-    model_path = "checkpoint/model/model.pt"
     saved_state_dict = torch.load(model_path, map_location=torch.device("cpu"))
     logging.info(f"Model weights Loaded")
     model.load_state_dict(saved_state_dict)
     logging.info(f"Model Loaded")
     inference_on_location(model)
```
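The removed body of `inference_on_location_and_month` computed its score inline. It counted the pixels predicted as labels 1 to 7 and weighted each count by `scores_init = [2,3,4,3,1,4,0]`. It then took the weighted mean and divided by the largest weight, so the result falls in [0, 1]. Written out, with $n_k$ the pixel count for label $k$ and $w_k$ the $k$-th entry of `scores_init`:

$$
S = \frac{1}{\max_k w_k}\,\frac{\sum_{k=1}^{7} w_k\, n_k}{\sum_{k=1}^{7} n_k},
\qquad w = (2, 3, 4, 3, 1, 4, 0),\quad \max_k w_k = 4.
$$

Under this formula, an image predicted entirely as label 3 (weight 4) scores 1, and one predicted entirely as label 7 (weight 0) scores 0. Pixels with label 0 are left out of both sums. The new code imports `compute_biodiv_score` from `utils` instead of computing this inline.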

After:

```diff
@@ -1,29 +1,16 @@
 import torch.multiprocessing
 import torchvision.transforms as T
 import numpy as np
+from utils import transform_to_pil, compute_biodiv_score, plot_imgs_labels
+from utils_gee import get_image
 from dateutil.relativedelta import relativedelta
 import datetime
+import matplotlib as mpl
 from joblib import Parallel, cpu_count, delayed
+import logging
+from inference import inference
 
+def inference_on_location(model, latitude=2.98, longitude=48.81, start_date=2020, end_date=2022, how="year"):
     """Performe an inference on the latitude and longitude between the start date and the end date
 
     Args:
@@ -36,64 +23,44 @@ def inference_on_location(model, latitude = 2.98, longitude = 48.81, start_date=
     Returns:
         img, labeled_img,biodiv_score: the original landscape, the labeled landscape and the biodiversity score and the landscape
     """
+    logging.info("Running Inference on location")
+    logging.info(f"latitude : {latitude} & longitude : {longitude}")
+    logging.info(f"start date : {start_date} & end_date : {end_date}")
+    logging.info(f"Prediction on intervale : {how}")
+    if how == "month":
+        delta_month = 1
+    elif how == "2months":
+        delta_month = 2
+    elif how == "year":
+        delta_month = 11
+    else:
+        raise ValueError("Wrong interval")
+
+    assert int(end_date) > int(start_date), "end date must be stricly higher than start date"
     location = [float(latitude), float(longitude)]
 
     # Extract img numpy from earth engine and transform it to PIL img
     dates = [datetime.datetime(start_date, 1, 1, 0, 0, 0)]
+    while dates[-1] < datetime.datetime(int(end_date), 1, 1, 0, 0, 0):
+        dates.append(dates[-1] + relativedelta(months=delta_month))
 
     dates = [d.strftime("%Y-%m-%d") for d in dates]
 
     all_image = Parallel(n_jobs=cpu_count(), prefer="threads")(delayed(get_image)(location, d1,d2) for d1, d2 in zip(dates[:-1],dates[1:]))
+    outputs = inference(np.array(all_image), model)
 
+    logging.info("Calculating Biodiversity Scores...")
+    scores, scores_details = map(list, zip(*[compute_biodiv_score(output["linear_preds"].detach().numpy()) for output in outputs]))
+    logging.info(f"Calculated Biodiversity Score : {scores}")
+
+    imgs, labels, labeled_imgs = map(list, zip(*[transform_to_pil(output) for output in outputs]))
 
+    images = [np.asarray(img) for img in imgs]
+    labeled_imgs = [np.asarray(img) for img in labeled_imgs]
+    fig = plot_imgs_labels(dates, images, labeled_imgs, scores_details, scores)
+    # fig.save("test.png")
+    return fig
+
 
 def inference_on_location_and_month(model, latitude = 2.98, longitude = 48.81, start_date = '2020-03-20'):
     """Performe an inference on the latitude and longitude between the start date and the end date
```
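The new `inference_on_location` first turns the `how` dropdown value into a month step. It then builds a list of dates starting on 1 January of `start_date`, and keeps stepping while the last date is before 1 January of `end_date`. Finally, it fetches one image per consecutive pair of dates with joblib's thread backend.

The sketch below mirrors that logic using only the standard library, with these substitutions:
- `add_months` stands in for `dateutil.relativedelta`.
- `concurrent.futures.ThreadPoolExecutor` stands in for `joblib.Parallel(prefer="threads")`.
- `fetch_image` is a hypothetical placeholder for `get_image`, whose body is not part of this diff.

The step sizes, including 11 months for `"year"`, are copied from the diff as-is. Both years are cast with `int()` up front; the diff casts only `end_date`.

```python
import datetime
from concurrent.futures import ThreadPoolExecutor

# Month step per interval, copied from the diff ("year" maps to 11 there).
DELTA_MONTHS = {"month": 1, "2months": 2, "year": 11}


def add_months(d: datetime.datetime, months: int) -> datetime.datetime:
    """Stdlib stand-in for `d + relativedelta(months=months)`.

    Only safe when d.day == 1, which is all this sketch ever produces.
    """
    total = d.year * 12 + (d.month - 1) + months
    return d.replace(year=total // 12, month=total % 12 + 1)


def date_windows(start_year, end_year, how="year"):
    """Return (d1, d2) string pairs between consecutive dates, as the helper does."""
    if how not in DELTA_MONTHS:
        raise ValueError("Wrong interval")
    start_year, end_year = int(start_year), int(end_year)  # UI may pass ints or strings
    if end_year <= start_year:
        raise ValueError("end date must be strictly higher than start date")
    dates = [datetime.datetime(start_year, 1, 1)]
    while dates[-1] < datetime.datetime(end_year, 1, 1):
        dates.append(add_months(dates[-1], DELTA_MONTHS[how]))
    labels = [d.strftime("%Y-%m-%d") for d in dates]
    return list(zip(labels[:-1], labels[1:]))


def fetch_image(location, d1, d2):
    """Hypothetical placeholder for utils_gee.get_image; returns a tag, not pixels."""
    return f"{location}:{d1}->{d2}"


def fetch_all(location, windows, max_workers=4):
    """Fetch every window on a thread pool; executor.map keeps input order."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda w: fetch_image(location, *w), windows))


if __name__ == "__main__":
    windows = date_windows(2020, 2021, how="year")
    print(windows)
    print(fetch_all([2.98, 48.81], windows))
```

With the copied 11-month step, a 2020 to 2021 request yields two windows: 2020-01-01 to 2020-12-01 and 2020-12-01 to 2021-11-01. A 12-month step would yield only one.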

After (continued):

```diff
@@ -108,59 +75,36 @@ def inference_on_location_and_month(model, latitude = 2.98, longitude = 48.81, s
     Returns:
         img, labeled_img,biodiv_score: the original landscape, the labeled landscape and the biodiversity score and the landscape
     """
+    logging.info("Running Inference on location and month")
+    logging.info(f"latitude : {latitude} & longitude : {longitude}")
     location = [float(latitude), float(longitude)]
 
     # Extract img numpy from earth engine and transform it to PIL img
     end_date = datetime.datetime.strptime(start_date, "%Y-%m-%d") + relativedelta(months=1)
     end_date = datetime.datetime.strftime(end_date, "%Y-%m-%d")
+
+    logging.info("Getting Image...")
+    img_test = get_image(location, start_date, end_date)
+    outputs = inference(np.array([img_test]), model)
+
+    logging.info("Calculating Biodiversity Score...")
+    score, score_details = compute_biodiv_score(outputs[0]["linear_preds"].detach().numpy())
+    logging.info(f"Calculated Biodiversity Score : {score}")
+    img, label, labeled_img = transform_to_pil(outputs[0])
+
+    return img, labeled_img, score
 
 
 if __name__ == "__main__":
     import logging
     import hydra
+    import sys
     from model import LitUnsupervisedSegmenter
+    file_handler = logging.FileHandler(filename='biomap.log')
+    stdout_handler = logging.StreamHandler(stream=sys.stdout)
+    handlers = [file_handler, stdout_handler]
+
+    logging.basicConfig(handlers=handlers, encoding='utf-8', level=logging.INFO, format="%(asctime)s - %(name)s - %(levelname)s - %(message)s")
     # Initialize hydra with configs
     hydra.initialize(config_path="configs", job_name="corine")
     cfg = hydra.compose(config_name="my_train_config.yml")
@@ -171,9 +115,12 @@ if __name__ == "__main__":
     model = LitUnsupervisedSegmenter(nbclasses, cfg)
     logging.info(f"Model Initialiazed")
 
+    model_path = "biomap/checkpoint/model/model.pt"
     saved_state_dict = torch.load(model_path, map_location=torch.device("cpu"))
     logging.info(f"Model weights Loaded")
     model.load_state_dict(saved_state_dict)
+
     logging.info(f"Model Loaded")
+    # inference_on_location_and_month(model)
     inference_on_location(model)
+
```

biomap/inference.py

CHANGED

|

@@ -2,9 +2,7 @@ import torch.multiprocessing

|

|

| 2 |

import torchvision.transforms as T

|

| 3 |

from utils import transform_to_pil

|

| 4 |

|

| 5 |

-

|

| 6 |

-

# tensorize & normalize img

|

| 7 |

-

preprocess = T.Compose(

|

| 8 |

[

|

| 9 |

T.ToPILImage(),

|

| 10 |

T.Resize((320, 320)),

|

|

@@ -14,24 +12,18 @@ def inference(image, model):

|

|

| 14 |

]

|

| 15 |

)

|

| 16 |

|

| 17 |

-

|

| 18 |

-

x = preprocess(image)

|

| 19 |

-

|

| 20 |

-

# launch inference on cpu

|

| 21 |

-

x = torch.unsqueeze(x, dim=0).cpu()

|

| 22 |

-

model = model.cpu()

|

| 23 |

|

| 24 |

with torch.no_grad():

|

| 25 |

-

|

| 26 |

linear_pred = model.linear_probe(x, code)

|

| 27 |

linear_pred = linear_pred.argmax(1)

|

| 28 |

-

|

| 29 |

-

"img": x[

|

| 30 |

-

"linear_preds": linear_pred[

|

| 31 |

-

}

|

| 32 |

-

|

| 33 |

-

img, label, labeled_img = transform_to_pil(output)

|

| 34 |

-

return img, labeled_img, label

|

| 35 |

|

| 36 |

|

| 37 |

if __name__ == "__main__":

|

|

@@ -55,7 +47,7 @@ if __name__ == "__main__":

|

|

| 55 |

cfg = hydra.compose(config_name="my_train_config.yml")

|

| 56 |

|

| 57 |

# Load the model

|

| 58 |

-

model_path = "checkpoint/model/model.pt"

|

| 59 |

saved_state_dict = torch.load(model_path, map_location=torch.device("cpu"))

|

| 60 |

|

| 61 |

nbclasses = cfg.dir_dataset_n_classes

|

|

@@ -65,197 +57,7 @@ if __name__ == "__main__":

|

|

| 65 |

model.load_state_dict(saved_state_dict)

|

| 66 |

print("model loaded")

|

| 67 |

# img.save("output/image.png")

|

| 68 |

-

|

| 69 |

-

img.save("output/img.png")

|

| 70 |

-

label.save("output/label.png")

|

| 71 |

-

labeled_img.save("output/labeled_img.png")

|

| 72 |

-

|

| 73 |

-

|

| 74 |

-

|

| 75 |

-

|

| 76 |

-

# def get_list_date(start_date, end_date):

|

| 77 |

-

# """Get all the date between the start date and the end date

|

| 78 |

-

|

| 79 |

-

# Args:

|

| 80 |

-

# start_date (str): start date at the format '%Y-%m-%d'

|

| 81 |

-

# end_date (str): end date at the format '%Y-%m-%d'

|

| 82 |

-

|

| 83 |

-

# Returns:

|

| 84 |

-

# list[str]: all the date between the start date and the end date

|

| 85 |

-

# """

|

| 86 |

-

# start_date = datetime.datetime.strptime(start_date, "%Y-%m-%d").date()

|

| 87 |

-

# end_date = datetime.datetime.strptime(end_date, "%Y-%m-%d").date()

|

| 88 |

-

# list_date = [start_date]

|

| 89 |

-

# date = start_date

|

| 90 |

-

# while date < end_date:

|

| 91 |

-

# date = date + datetime.timedelta(days=1)

|

| 92 |

-

# list_date.append(date)

|

| 93 |

-

# list_date.append(end_date)

|

| 94 |

-

# list_date2 = [x.strftime("%Y-%m-%d") for x in list_date]

|

| 95 |

-

# return list_date2

|

| 96 |

-

|

| 97 |

-

|

| 98 |

-

# def get_length_interval(start_date, end_date):

|

| 99 |

-

# """Return how many days there is between the start date and the end date

|

| 100 |

-

|

| 101 |

-

# Args:

|

| 102 |

-

# start_date (str): start date at the format '%Y-%m-%d'

|

| 103 |

-

# end_date (str): end date at the format '%Y-%m-%d'

|

| 104 |

-

|

| 105 |

-

# Returns:

|

| 106 |

-

# int : number of days between start date and the end date

|

| 107 |

-

# """

|

| 108 |

-

# try:

|

| 109 |

-

# return len(get_list_date(start_date, end_date))

|

| 110 |

-

# except ValueError:

|

| 111 |

-

# return 0

|

| 112 |

-

|

| 113 |

-

|

| 114 |

-

# def infer_unique_date(latitude, longitude, date, model=model):

|

| 115 |

-

# """Perform an inference on a latitude and a longitude at a specific date

|

| 116 |

-

|

| 117 |

-

# Args:

|

| 118 |

-

# latitude (float): the latitude of the landscape

|

| 119 |

-

# longitude (float): the longitude of the landscape

|

| 120 |

-

# date (str): date for the inference at the format '%Y-%m-%d'

|

| 121 |

-

# model (_type_, optional): _description_. Defaults to model.

|

| 122 |

-

|

| 123 |

-

# Returns:

|

| 124 |

-

# img, labeled_img,biodiv_score: the original landscape, the labeled landscape and the biodiversity score and the landscape

|

| 125 |

-

# """

|

| 126 |

-

# start_date = date

|

| 127 |

-

# end_date = date

|

| 128 |

-

# location = [float(latitude), float(longitude)]

|

| 129 |

-

# # Extract img numpy from earth engine and transform it to PIL img

|

| 130 |

-

# img = extract_img(location, start_date, end_date)

|

| 131 |

-

# img_test = transform_ee_img(

|

| 132 |

-

# img, max=0.3

|

| 133 |

-

# ) # max value is the value from numpy file that will be equal to 255

|

| 134 |

-

|

| 135 |

-

# # tensorize & normalize img

|

| 136 |

-

# preprocess = T.Compose(

|

| 137 |

-

# [

|

| 138 |

-

# T.ToPILImage(),

|

| 139 |

-

# T.Resize((320, 320)),

|

| 140 |

-

# # T.CenterCrop(224),

|

| 141 |

-

# T.ToTensor(),

|

| 142 |

-

# T.Normalize(mean=[0.485, 0.456, 0.406], std=[0.229, 0.224, 0.225]),

|

| 143 |

-

# ]

|

| 144 |

-

# )

|

| 145 |

-

|

| 146 |

-

# # Preprocess opened img

|

| 147 |

-

# x = preprocess(img_test)

|

| 148 |

-

|

| 149 |

-

# # launch inference on cpu

|

| 150 |

-

# x = torch.unsqueeze(x, dim=0).cpu()

|

| 151 |

-

# model = model.cpu()

|

| 152 |

-

|

| 153 |

-

# with torch.no_grad():

|

| 154 |

-

# feats, code = model.net(x)

|

| 155 |

-

# linear_pred = model.linear_probe(x, code)

|

| 156 |

-

# linear_pred = linear_pred.argmax(1)

|

| 157 |

-

# output = {

|

| 158 |

-

# "img": x[: model.cfg.n_images].detach().cpu(),

|

| 159 |

-

# "linear_preds": linear_pred[: model.cfg.n_images].detach().cpu(),

|

| 160 |

-

# }

|

| 161 |

-

|

| 162 |

-

# img, label, labeled_img = transform_to_pil(output)

|

| 163 |

-

# biodiv_score = compute_biodiv_score(labeled_img)

|

| 164 |

-

# return img, labeled_img, biodiv_score

|

| 165 |

-

|

| 166 |

-

|

| 167 |

-

# def get_img_array(start_date, end_date, latitude, longitude, model=model):

|

| 168 |

-

# list_date = get_list_date(start_date, end_date)

|

| 169 |

-

# list_img = []

|

| 170 |

-

# for date in list_date:

|

| 171 |

-

# list_img.append(img)

|

| 172 |

-

# return list_img

|

| 173 |

-

|

| 174 |

-

|

| 175 |

-

# def variable_outputs(start_date, end_date, latitude, longitude, day, model=model):

|

| 176 |

-

# """Perform an inference on the day number day starting from the start at the latitude and longitude selected

|

| 177 |

-

|

| 178 |

-

# Args:

|

| 179 |

-

# latitude (float): the latitude of the landscape

|

| 180 |

-

# longitude (float): the longitude of the landscape

|

| 181 |

-

# start_date (str): the start date for our inference

|

| 182 |

-

# end_date (str): the end date for our inference

|

| 183 |

-

# model (_type_, optional): _description_. Defaults to model.

|

| 184 |

-

|

| 185 |

-

# Returns:

|

| 186 |

-

# img,labeled_img,biodiv_score: the original landscape, the labeled landscape and the biodiversity score and the landscape at the selected, longitude, latitude and date

|

| 187 |

-

# """

|

| 188 |

-

# list_date = get_list_date(start_date, end_date)

|

| 189 |

-

# k = int(day)

|

| 190 |

-

# date = list_date[k]

|

| 191 |

-

# img, labeled_img, biodiv_score = infer_unique_date(

|

| 192 |

-

# latitude, longitude, date, model=model

|

| 193 |

-

# )

|

| 194 |

-

# return img, labeled_img, biodiv_score

|

| 195 |

-

|

| 196 |

-

|

| 197 |

-

# def variable_outputs2(

|

| 198 |

-

# start_date, end_date, latitude, longitude, day_number, model=model

|

| 199 |

-

# ):

|

| 200 |

-

# """Perform an inference on the day number day starting from the start at the latitude and longitude selected

|

| 201 |

-

|

| 202 |

-

# Args:

|

| 203 |

-

# latitude (float): the latitude of the landscape

|

| 204 |

-

# longitude (float): the longitude of the landscape

|

| 205 |

-

# start_date (str): the start date for our inference

|

| 206 |

-

# end_date (str): the end date for our inference

|

| 207 |

-

# model (_type_, optional): _description_. Defaults to model.

|

| 208 |

-

|

| 209 |

-

# Returns:

|

| 210 |

-

# list[img,labeled_img,biodiv_score]: the original landscape, the labeled landscape and the biodiversity score and the landscape at the selected, longitude, latitude and date

|

| 211 |

-

# """

|

| 212 |

-

# list_date = get_list_date(start_date, end_date)

|

| 213 |

-

# k = int(day_number)

|

| 214 |

-

# date = list_date[k]

|

| 215 |

-

# img, labeled_img, biodiv_score = infer_unique_date(

|

| 216 |

-

# latitude, longitude, date, model=model

|

| 217 |

-

# )

|

| 218 |

-

# return [img, labeled_img, biodiv_score]

|

| 219 |

-

|

| 220 |

-

|

| 221 |

-

# def gif_maker(img_array):

|

| 222 |

-

# output_file = "test2.mkv"

|

| 223 |

-

# image_test = img_array[0]

|

| 224 |

-

# size = (320, 320)

|

| 225 |

-

# print(size)

|

| 226 |

-

# out = cv2.VideoWriter(

|

| 227 |

-

# output_file, cv2.VideoWriter_fourcc(*"avc1"), 15, frameSize=size

|

| 228 |

-

# )

|

| 229 |

-

# for i in range(len(img_array)):

|

| 230 |

-

# image = img_array[i]

|

| 231 |

-

# pix = np.array(image.getdata())

|

| 232 |

-

# out.write(pix)

|

| 233 |

-

# out.release()

|

| 234 |

-

# return output_file

|

| 235 |

-

|

| 236 |

-

|

| 237 |

-

# def infer_multiple_date(start_date, end_date, latitude, longitude, model=model):

|

| 238 |

-

# """Perform an inference on all the dates between the start date and the end date at the latitude and longitude

|

| 239 |

-

|

| 240 |

-

# Args:

|

| 241 |

-

# latitude (float): the latitude of the landscape

|

| 242 |

-

# longitude (float): the longitude of the landscape

|

| 243 |

-

# start_date (str): the start date for our inference

|

| 244 |

-

# end_date (str): the end date for our inference

|

| 245 |

-

# model (_type_, optional): _description_. Defaults to model.

|

| 246 |

|

| 247 |

-

|

| 248 |

-

|

| 249 |

-

# """

|

| 250 |

-

# list_date = get_list_date(start_date, end_date)

|

| 251 |

-

# list_img = []

|

| 252 |

-

# list_labeled_img = []

|

| 253 |

-

# list_biodiv_score = []

|

| 254 |

-

# for date in list_date:

|

| 255 |

-

# img, labeled_img, biodiv_score = infer_unique_date(

|

| 256 |

-

# latitude, longitude, date, model=model

|

| 257 |

-

# )

|

| 258 |

-

# list_img.append(img)

|

| 259 |

-

# list_labeled_img.append(labeled_img)

|

| 260 |

-

# list_biodiv_score.append(biodiv_score)

|

| 261 |

-

# return gif_maker(list_img), gif_maker(list_labeled_img), list_biodiv_score[0]

|


| 2 |

import torchvision.transforms as T

|

| 3 |

from utils import transform_to_pil

|

| 4 |

|

| 5 |

+

preprocess = T.Compose(

|


| 6 |

[

|

| 7 |

T.ToPILImage(),

|

| 8 |

T.Resize((320, 320)),

|


| 12 |

]

|

| 13 |

)

|

| 14 |

|

| 15 |

+

def inference(images, model):

|

| 16 |

+

x = torch.stack([preprocess(image) for image in images]).cpu()

|


| 17 |

|

| 18 |

with torch.no_grad():

|

| 19 |

+

_, code = model.net(x)

|

| 20 |

linear_pred = model.linear_probe(x, code)

|

| 21 |

linear_pred = linear_pred.argmax(1)

|

| 22 |

+

outputs = [{

|

| 23 |

+

"img": x[i].detach().cpu(),

|

| 24 |

+

"linear_preds": linear_pred[i].detach().cpu(),

|

| 25 |

+

} for i in range(x.shape[0])]

|

| 26 |

+

return outputs

|
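In `inference`, `linear_pred.argmax(1)` collapses the class dimension of the `model.linear_probe` output, so each pixel gets the index of its highest-scoring class. The following is a minimal pure-Python sketch of that step. It assumes the logits are nested as `[batch][class][row][col]`, which is presumably the layout the `argmax(1)` call operates on. `argmax_over_classes` is a hypothetical helper for illustration and is not part of the repository.

```python
from typing import List

Grid = List[List[float]]


def argmax_over_classes(logits: List[List[Grid]]) -> List[List[List[int]]]:
    """Pure-Python equivalent of tensor.argmax(1) for a [batch][class][row][col] nest."""
    labels = []
    for sample in logits:  # one entry per image in the batch
        n_classes = len(sample)
        rows, cols = len(sample[0]), len(sample[0][0])
        label_map = [
            [
                # index of the class with the largest score at pixel (r, c);
                # ties resolve to the lowest class index
                max(range(n_classes), key=lambda k: sample[k][r][c])
                for c in range(cols)
            ]
            for r in range(rows)
        ]
        labels.append(label_map)
    return labels


# One image, two classes, a 1x2 pixel grid.
batch = [[[[0.1, 0.9]], [[0.7, 0.2]]]]
print(argmax_over_classes(batch))  # [[[1, 0]]]
```

The batch dimension is kept, so the result has one label map per input image. This matches how `inference` returns one `"linear_preds"` entry per element of `images`.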


| 27 |

|

| 28 |

|

| 29 |

if __name__ == "__main__":

|


| 47 |

cfg = hydra.compose(config_name="my_train_config.yml")

|

| 48 |

|

| 49 |

# Load the model

|

| 50 |

+

model_path = "biomap/checkpoint/model/model.pt"

|

| 51 |

saved_state_dict = torch.load(model_path, map_location=torch.device("cpu"))

|

| 52 |

|

| 53 |

nbclasses = cfg.dir_dataset_n_classes

|


| 57 |

model.load_state_dict(saved_state_dict)

|

| 58 |

print("model loaded")

|

| 59 |

# img.save("output/image.png")

|

| 60 |

+

inference([image], model)

|


| 61 |

|

| 62 |

+

inference([image,image], model)

|

| 63 |

+

|


biomap/label.png

DELETED

|

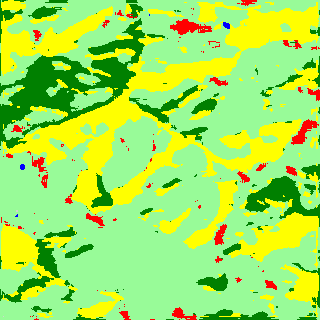

Binary file (4.46 kB)

|

|

|

biomap/output/label.png

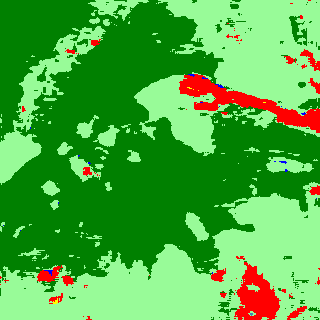

CHANGED

|

|

biomap/output/labeled_img.png

CHANGED

|

|

biomap/plot_functions.py

CHANGED

|

@@ -1,17 +1,10 @@

|

|

| 1 |

from PIL import Image

|

| 2 |

|

| 3 |

-

import hydra

|

| 4 |

import matplotlib as mpl

|

| 5 |

from utils import prep_for_plot

|

| 6 |

|

| 7 |

import torch.multiprocessing

|

| 8 |

import torchvision.transforms as T

|

| 9 |

-

# import matplotlib.pyplot as plt

|

| 10 |

-

from model import LitUnsupervisedSegmenter

|

| 11 |

-

colors = ('red', 'palegreen', 'green', 'steelblue', 'blue', 'yellow', 'lightgrey')

|

| 12 |

-

class_names = ('Buildings', 'Cultivation', 'Natural green', 'Wetland', 'Water', 'Infrastructure', 'Background')

|

| 13 |

-

cmap = mpl.colors.ListedColormap(colors)

|

| 14 |

-

#from train_segmentation import LitUnsupervisedSegmenter, cmap

|

| 15 |

|

| 16 |

from utils_gee import extract_img, transform_ee_img

|

| 17 |

|

|

@@ -20,85 +13,20 @@ import plotly.express as px

|

|

| 20 |

import numpy as np

|

| 21 |

from plotly.subplots import make_subplots

|

| 22 |

|

| 23 |

-

import os

|

| 24 |

os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

|

| 25 |

|

| 26 |

-

|

| 27 |

colors = ('red', 'palegreen', 'green', 'steelblue', 'blue', 'yellow', 'lightgrey')

|

| 28 |

class_names = ('Buildings', 'Cultivation', 'Natural green', 'Wetland', 'Water', 'Infrastructure', 'Background')

|

| 29 |

-

|

| 30 |

-

|

| 31 |

-

# Import model configs

|

| 32 |

-

hydra.initialize(config_path="configs", job_name="corine")

|

| 33 |

-

cfg = hydra.compose(config_name="my_train_config.yml")

|

| 34 |

-

|

| 35 |

-

nbclasses = cfg.dir_dataset_n_classes

|

| 36 |

-

|

| 37 |

-

# Load Model

|

| 38 |

-

model_path = "biomap/checkpoint/model/model.pt"

|

| 39 |

-

saved_state_dict = torch.load(model_path,map_location=torch.device('cpu'))

|

| 40 |

-

|

| 41 |

-

model = LitUnsupervisedSegmenter(nbclasses, cfg)

|

| 42 |

-

model.load_state_dict(saved_state_dict)

|

| 43 |

-

|

| 44 |

-

from PIL import Image

|

| 45 |

-

|

| 46 |

-

import hydra

|

| 47 |

-

|

| 48 |

-

from utils import prep_for_plot

|

| 49 |

-

|

| 50 |

-

import torch.multiprocessing

|

| 51 |

-

import torchvision.transforms as T

|

| 52 |

-

# import matplotlib.pyplot as plt

|

| 53 |

-

|

| 54 |

-

from model import LitUnsupervisedSegmenter

|

| 55 |

-

|

| 56 |

-

from utils_gee import extract_img, transform_ee_img

|

| 57 |

-

|

| 58 |

-

import plotly.graph_objects as go

|

| 59 |

-

import plotly.express as px

|

| 60 |

-

import numpy as np

|

| 61 |

-

from plotly.subplots import make_subplots

|

| 62 |

-

|

| 63 |

-

import os

|

| 64 |

-

os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

|

| 65 |

-

|

| 66 |

|

| 67 |

colors = ('red', 'palegreen', 'green', 'steelblue', 'blue', 'yellow', 'lightgrey')

|

| 68 |

-

cmap = mpl.colors.ListedColormap(colors)

|

| 69 |

class_names = ('Buildings', 'Cultivation', 'Natural green', 'Wetland', 'Water', 'Infrastructure', 'Background')

|

| 70 |

-

scores_init = [2,3,4,

|

| 71 |

-

|

| 72 |

-

# Import model configs

|

| 73 |

-

#hydra.initialize(config_path="configs", job_name="corine")

|

| 74 |

-

cfg = hydra.compose(config_name="my_train_config.yml")

|

| 75 |

-

|

| 76 |

-

nbclasses = cfg.dir_dataset_n_classes

|

| 77 |

-

|

| 78 |

-

# Load Model

|

| 79 |

-

model_path = "biomap/checkpoint/model/model.pt"

|

| 80 |

-

saved_state_dict = torch.load(model_path,map_location=torch.device('cpu'))

|

| 81 |

-

|

| 82 |

-

model = LitUnsupervisedSegmenter(nbclasses, cfg)

|

| 83 |

-

model.load_state_dict(saved_state_dict)

|

| 84 |

-

|

| 85 |

-

|

| 86 |

-

#normalize img

|

| 87 |

-

preprocess = T.Compose([

|

| 88 |

-

T.ToPILImage(),

|

| 89 |

-

T.Resize((320,320)),

|

| 90 |

-

# T.CenterCrop(224),

|

| 91 |

-

T.ToTensor(),

|

| 92 |

-

T.Normalize(

|

| 93 |

-

mean=[0.485, 0.456, 0.406],

|

| 94 |

-

std=[0.229, 0.224, 0.225]

|

| 95 |

-

)

|

| 96 |

-

])

|
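The `preprocess` pipeline removed here resizes to 320x320 and then applies `T.ToTensor()`, which scales 8-bit values to [0, 1], followed by `T.Normalize` with the ImageNet mean and std. Normalization computes `(x - mean) / std` per channel. Below is a small pure-Python sketch of what those last two steps do to one RGB pixel. `normalize_pixel` is a hypothetical name, and the sketch ignores the resize.

```python
from typing import Sequence, Tuple

# ImageNet channel statistics, as passed to T.Normalize in the pipeline above.
MEAN = (0.485, 0.456, 0.406)
STD = (0.229, 0.224, 0.225)


def normalize_pixel(rgb: Sequence[int]) -> Tuple[float, ...]:
    """Apply ToTensor-style scaling and then Normalize to a single 8-bit RGB pixel."""
    scaled = [value / 255.0 for value in rgb]  # ToTensor: uint8 -> [0, 1]
    return tuple((x - m) / s for x, m, s in zip(scaled, MEAN, STD))  # Normalize


print(normalize_pixel((0, 0, 0)))
print(normalize_pixel((255, 255, 255)))
```

After this step, a black pixel maps to roughly -2 in every channel and a white pixel to roughly +2. The exact values depend on each channel's mean and std.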

| 97 |

|

| 98 |

# Function that look for img on EE and segment it

|

| 99 |

# -- 3 ways possible to avoid cloudy environment -- monthly / bi-monthly / yearly meaned img

|

| 100 |

-

|

| 101 |

-

def segment_loc(location, month, year, how = "month", month_end = '12', year_end = None) :

|

| 102 |

if how == 'month':

|

| 103 |

img = extract_img(location, year +'-'+ month +'-01', year +'-'+ month +'-28')

|

| 104 |

elif how == 'year' :

|

|

@@ -106,7 +34,6 @@ def segment_loc(location, month, year, how = "month", month_end = '12', year_end

|

|

| 106 |

img = extract_img(location, year +'-'+ month +'-01', year +'-'+ month_end +'-28', width = 0.04 , len = 0.04)

|

| 107 |

else :

|

| 108 |

img = extract_img(location, year +'-'+ month +'-01', year_end +'-'+ month_end +'-28', width = 0.04 , len = 0.04)

|

| 109 |

-

|

| 110 |

|

| 111 |

img_test= transform_ee_img(img, max = 0.25)

|

| 112 |

|

|
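`segment_loc` builds its Earth Engine date window by string concatenation. In `'month'` mode, the window runs from day `01` to day `28` of a single month. The final `else` branch runs from `year-month-01` to `year_end-month_end-28`. Day 28 exists in every month, including February, so the end date is always valid. The trade-off is that the last few days of longer months are skipped. The body of the `'year'` branch is only partly visible in this diff, so the sketch below does not reconstruct it. `date_window` is a hypothetical helper that only reproduces the date strings, not the `extract_img` call.

```python
from typing import Optional, Tuple


def date_window(month: str, year: str, how: str = "month",
                month_end: str = "12", year_end: Optional[str] = None) -> Tuple[str, str]:
    """Return the (start, end) strings that segment_loc would hand to extract_img.

    Hypothetical helper mirroring the 'month' and final 'else' branches shown
    in the diff; month and year are zero-padded strings such as "03", "2021".
    """
    start = f"{year}-{month}-01"
    if how == "month":
        # Day 28 exists in every month, so the end date is always valid.
        return start, f"{year}-{month}-28"
    if how == "year":
        # This branch is only partially visible in the diff; not guessed here.
        raise NotImplementedError("the 'year' branch is not reconstructed")
    if year_end is None:
        # segment_loc concatenates year_end directly, which raises TypeError on None.
        raise ValueError("year_end is required for multi-year windows")
    return start, f"{year_end}-{month_end}-28"


print(date_window("03", "2021"))
print(date_window("01", "2020", how="range", month_end="06", year_end="2021"))
```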

@@ -177,38 +104,8 @@ def segment_group(location, start_date, end_date, how = 'month') :

|

|

| 177 |

|

| 178 |

return outputs

|

| 179 |

|

| 180 |

-

|

| 181 |

-

# Function that transforms an output to PIL images

|

| 182 |

-

|

| 183 |

-

def transform_to_pil(outputs,alpha=0.3):

|

| 184 |

-

# Transform img with torch

|

| 185 |

-

img = torch.moveaxis(prep_for_plot(outputs['img'][0]),-1,0)

|

| 186 |

-

img=T.ToPILImage()(img)

|

| 187 |

-

|

| 188 |

-

# Transform label by saving it then open it

|

| 189 |

-

# label = outputs['linear_preds'][0]

|

| 190 |

-

# plt.imsave('label.png',label,cmap=cmap)

|

| 191 |

-

# label = Image.open('label.png')

|

| 192 |

-

|

| 193 |

-

cmaplist = np.array([np.array(cmap(i)) for i in range(cmap.N)])

|

| 194 |

-

labels = np.array(outputs['linear_preds'][0])-1

|

| 195 |

-

label = T.ToPILImage()((cmaplist[labels]*255).astype(np.uint8))

|

| 196 |

-

|

| 197 |

-

|

| 198 |

-

# Overlay labels with img wit alpha

|

| 199 |

-

background = img.convert("RGBA")

|

| 200 |

-

overlay = label.convert("RGBA")

|

| 201 |

-

|

| 202 |

-

labeled_img = Image.blend(background, overlay, alpha)

|

| 203 |

-

|

| 204 |

-

return img, label, labeled_img

|
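The deleted `transform_to_pil` colors the prediction map by indexing a colormap lookup table with `labels - 1`. It then overlays the result on the image with `Image.blend`, which Pillow documents as `image1 * (1.0 - alpha) + image2 * alpha`. Because of the `- 1`, a prediction of `0` becomes index `-1`. NumPy resolves that index to the last colormap entry (`'lightgrey'`, the color listed for `'Background'`), and prediction `k >= 1` gets color `k - 1`. Below is a pure-Python sketch of both steps on RGB tuples. `colorize`, `blend` and the three-color palette are hypothetical stand-ins for the matplotlib colormap and the PIL calls.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[int, int, int]


def colorize(labels: List[List[int]], palette: Sequence[RGB]) -> List[List[RGB]]:
    """Look up one color per pixel, shifting labels by -1 as transform_to_pil does.

    Label 0 becomes index -1, which Python (like NumPy) resolves to the last
    palette entry.
    """
    return [[palette[label - 1] for label in row] for row in labels]


def blend(background: RGB, overlay: RGB, alpha: float) -> RGB:
    """Per-channel linear blend, following the formula documented for Image.blend."""
    return tuple(
        int(round(b * (1.0 - alpha) + o * alpha)) for b, o in zip(background, overlay)
    )


# Hypothetical three-color palette; the repository uses seven named matplotlib colors.
palette = [(255, 0, 0), (0, 128, 0), (211, 211, 211)]
label_map = [[0, 1], [2, 3]]
print(colorize(label_map, palette))  # [[(211, 211, 211), (255, 0, 0)], [(0, 128, 0), (211, 211, 211)]]
print(blend((0, 0, 0), (200, 100, 50), alpha=0.5))  # (100, 50, 25)
```

With `alpha=0.3`, the default in `transform_to_pil`, the satellite image contributes 70% of each output pixel and the label color contributes 30%.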

| 205 |

-

|

| 206 |

-

|

| 207 |

-

|

| 208 |

-

# Function that extract labeled_img(PIL) and nb_values(number of pixels for each class) and the score for each observation

|

| 209 |

-

|

| 210 |

def values_from_output(output):

|

| 211 |

-

imgs = transform_to_pil(output,alpha = 0.3)

|

| 212 |

|

| 213 |

img = imgs[0]

|

| 214 |

img = np.array(img.convert('RGB'))

|

|
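The comment above `values_from_output` says it extracts `nb_values`, the number of pixels for each class. Its body is truncated in this diff. The following is a hedged sketch of such a per-class tally, assuming the label map holds integer class indices `0..n_classes-1`. `count_pixels_per_class` is a hypothetical helper, not the repository's implementation.

```python
from collections import Counter
from typing import List


def count_pixels_per_class(label_map: List[List[int]], n_classes: int) -> List[int]:
    """Return a list whose k-th entry is how many pixels carry class index k."""
    counts = Counter(label for row in label_map for label in row)
    # Classes absent from the image still get an explicit zero.
    return [counts.get(k, 0) for k in range(n_classes)]


label_map = [[0, 1, 1], [4, 4, 4]]
print(count_pixels_per_class(label_map, n_classes=7))  # [1, 2, 0, 0, 3, 0, 0]
```

Returning a fixed-length list keeps every entry aligned with `class_names` and `colors`. The pie chart relies on that alignment.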

@@ -436,7 +333,7 @@ preprocess = T.Compose([

|

|

| 436 |

# Function that look for img on EE and segment it

|

| 437 |

# -- 3 ways possible to avoid cloudy environment -- monthly / bi-monthly / yearly meaned img

|

| 438 |

|

| 439 |

-

def segment_loc(location, month, year, how = "month", month_end = '12', year_end = None) :

|

| 440 |

if how == 'month':

|

| 441 |

img = extract_img(location, year +'-'+ month +'-01', year +'-'+ month +'-28')

|

| 442 |

elif how == 'year' :

|

|

@@ -583,180 +480,6 @@ def values_from_outputs(outputs) :

|

|

| 583 |

|

| 584 |

|

| 585 |

|

| 586 |

-

def plot_imgs_labels(months, imgs, imgs_label, nb_values, scores) :

|

| 587 |

-

|

| 588 |

-

fig2 = px.imshow(np.array(imgs), animation_frame=0, binary_string=True)

|

| 589 |

-

fig3 = px.imshow(np.array(imgs_label), animation_frame=0, binary_string=True)

|

| 590 |

-

|

| 591 |

-

# Scores

|

| 592 |

-

scatters = []

|

| 593 |

-

temp = []

|

| 594 |

-

for score in scores :

|

| 595 |

-

temp_score = []

|

| 596 |

-

temp_date = []

|

| 597 |

-

#score = scores[i]

|

| 598 |

-

temp.append(score)

|

| 599 |

-

n = len(temp)

|

| 600 |

-

text_temp = ["" for i in temp]

|

| 601 |

-

text_temp[-1] = str(round(score,2))

|

| 602 |

-

scatters.append(go.Scatter(x=[0,1], y=temp, mode="lines+markers+text", marker_color="black", text = text_temp, textposition="top center"))

|

| 603 |

-

print(text_temp)

|

| 604 |

-

|

| 605 |

-

# Scores

|

| 606 |

-

fig = make_subplots(

|

| 607 |

-

rows=1, cols=4,

|

| 608 |

-

specs=[[{"type": "image"},{"type": "image"}, {"type": "pie"}, {"type": "scatter"}]],

|

| 609 |

-

subplot_titles=("Localisation visualization", "Labeled visualisation", "Segments repartition", "Biodiversity scores")

|

| 610 |

-

)

|

| 611 |

-

|

| 612 |

-

fig.add_trace(fig2["frames"][0]["data"][0], row=1, col=1)

|

| 613 |

-

fig.add_trace(fig3["frames"][0]["data"][0], row=1, col=2)

|

| 614 |

-

|

| 615 |

-

fig.add_trace(go.Pie(labels = class_names,

|

| 616 |

-

values = nb_values[0],

|

| 617 |

-

marker_colors = colors,

|

| 618 |

-

name="Segment repartition",

|

| 619 |

-

textposition='inside',

|

| 620 |

-

texttemplate = "%{percent:.0%}",

|

| 621 |

-

textfont_size=14

|

| 622 |

-

),

|

| 623 |

-

row=1, col=3)

|

| 624 |

-

|

| 625 |

-

|

| 626 |

-

fig.add_trace(scatters[0], row=1, col=4)

|

| 627 |

-

fig.update_traces(showlegend=False, selector=dict(type='scatter'))

|

| 628 |

-

#fig.update_traces(, selector=dict(type='scatter'))

|

| 629 |

-

# fig.add_annotation(text='score:' + str(scores[0]),

|

| 630 |

-

# showarrow=False,

|

| 631 |

-

# row=2, col=2)

|

| 632 |

-

|

| 633 |

-

|

| 634 |

-

number_frames = len(imgs)

|

| 635 |

-

frames = [dict(

|

| 636 |

-

name = k,

|

| 637 |

-

data = [ fig2["frames"][k]["data"][0],

|

| 638 |

-

fig3["frames"][k]["data"][0],

|

| 639 |

-

go.Pie(labels = class_names,

|

| 640 |

-

values = nb_values[k],

|

| 641 |

-

marker_colors = colors,

|

| 642 |

-

name="Segment repartition",

|

| 643 |

-

textposition='inside',

|

| 644 |

-

texttemplate = "%{percent:.0%}",

|

| 645 |

-

textfont_size=14

|

| 646 |

-

),

|

| 647 |

-

scatters[k]

|

| 648 |

-

],

|

| 649 |

-

traces=[0, 1,2,3] # the elements of the list [0,1,2] give info on the traces in fig.data

|

| 650 |

-

# that are updated by the above three go.Scatter instances

|

| 651 |

-

) for k in range(number_frames)]

|

| 652 |

-

|

| 653 |

-

updatemenus = [dict(type='buttons',

|

| 654 |

-

buttons=[dict(label='Play',

|

| 655 |

-

method='animate',

|

| 656 |

-

args=[[f'{k}' for k in range(number_frames)],

|

| 657 |

-

dict(frame=dict(duration=500, redraw=False),

|

| 658 |

-

transition=dict(duration=0),

|

| 659 |

-

easing='linear',

|

| 660 |

-

fromcurrent=True,

|

| 661 |

-

mode='immediate'

|

| 662 |

-

)])],

|

| 663 |

-

direction= 'left',

|

| 664 |

-

pad=dict(r= 10, t=85),

|

| 665 |

-

showactive =True, x= 0.1, y= 0.13, xanchor= 'right', yanchor= 'top')

|

| 666 |

-

]

|

| 667 |

-

|

| 668 |

-

sliders = [{'yanchor': 'top',

|

| 669 |

-

'xanchor': 'left',

|

| 670 |

-

'currentvalue': {'font': {'size': 16}, 'prefix': 'Frame: ', 'visible': False, 'xanchor': 'right'},

|

| 671 |

-

'transition': {'duration': 500.0, 'easing': 'linear'},

|

| 672 |

-

'pad': {'b': 10, 't': 50},

|

| 673 |

-

'len': 0.9, 'x': 0.1, 'y': 0,

|

| 674 |

-

'steps': [{'args': [[k], {'frame': {'duration': 500.0, 'easing': 'linear', 'redraw': False},

|

| 675 |

-

'transition': {'duration': 0, 'easing': 'linear'}}],

|

| 676 |

-

'label': months[k], 'method': 'animate'} for k in range(number_frames)

|

| 677 |

-

]}]

|

| 678 |

-

|

| 679 |

-

|

| 680 |

-

fig.update(frames=frames)

|

| 681 |

-

|

| 682 |

-

for i,fr in enumerate(fig["frames"]):

|

| 683 |

-

fr.update(

|

| 684 |

-

layout={

|

| 685 |

-

"xaxis": {

|

| 686 |

-

"range": [0,imgs[0].shape[1]+i/100000]

|

| 687 |

-

},

|

| 688 |

-

"yaxis": {

|

| 689 |

-

"range": [imgs[0].shape[0]+i/100000,0]

|

| 690 |

-

},

|

| 691 |

-

})

|

| 692 |

-

|

| 693 |

-

fr.update(layout_title_text= months[i])

|

| 694 |

-

|

| 695 |

-

|

| 696 |

-

fig.update(layout_title_text= months[0])

|

| 697 |

-

fig.update(

|

| 698 |

-

layout={

|

| 699 |

-

"xaxis": {

|

| 700 |

-

"range": [0,imgs[0].shape[1]+i/100000],

|

| 701 |

-

'showgrid': False, # thin lines in the background

|

| 702 |

-

'zeroline': False, # thick line at x=0

|

| 703 |

-

'visible': False, # numbers below

|

| 704 |

-

},

|

| 705 |

-

|

| 706 |

-

"yaxis": {

|

| 707 |

-

"range": [imgs[0].shape[0]+i/100000,0],

|

| 708 |

-

'showgrid': False, # thin lines in the background

|

| 709 |

-

'zeroline': False, # thick line at y=0

|

| 710 |

-

'visible': False,},

|

| 711 |

-

|

| 712 |

-

"xaxis2": {

|

| 713 |

-

"range": [0,imgs[0].shape[1]+i/100000],

|

| 714 |

-

'showgrid': False, # thin lines in the background

|

| 715 |

-

'zeroline': False, # thick line at x=0

|

| 716 |

-

'visible': False, # numbers below

|

| 717 |

-

},

|

| 718 |

-

|

| 719 |

-

"yaxis2": {

|

| 720 |

-

"range": [imgs[0].shape[0]+i/100000,0],

|

| 721 |

-

'showgrid': False, # thin lines in the background

|

| 722 |

-

'zeroline': False, # thick line at y=0

|

| 723 |

-

'visible': False,},

|

| 724 |

-

|

| 725 |

-

|

| 726 |

-

"xaxis3": {

|

| 727 |

-

"range": [0,len(scores)+1],

|

| 728 |

-

'autorange': False, # thin lines in the background

|

| 729 |

-

'showgrid': False, # thin lines in the background

|

| 730 |

-

'zeroline': False, # thick line at y=0

|

| 731 |

-

'visible': False

|

| 732 |

-

},

|

| 733 |

-

|

| 734 |

-

"yaxis3": {

|

| 735 |

-

"range": [0,1.5],

|

| 736 |

-

'autorange': False,

|

| 737 |

-

'showgrid': False, # thin lines in the background

|

| 738 |

-

'zeroline': False, # thick line at y=0

|

| 739 |

-

'visible': False # thin lines in the background

|

| 740 |

-

}

|

| 741 |

-

}

|

| 742 |

-

)

|

| 743 |

-

|

| 744 |

-

|

| 745 |

-

fig.update_layout(updatemenus=updatemenus,

|

| 746 |

-

sliders=sliders,

|

| 747 |

-

legend=dict(

|

| 748 |

-

yanchor= 'top',

|

| 749 |

-

xanchor= 'left',

|

| 750 |

-

orientation="h")

|

| 751 |

-

)

|

| 752 |

-

|

| 753 |

-

|

| 754 |

-

fig.update_layout(margin=dict(b=0, r=0))

|

| 755 |

-

|

| 756 |

-

# fig.show() #in jupyter notebook

|

| 757 |

-

|

| 758 |

-

return fig

|
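In `plot_imgs_labels`, the pie trace receives raw pixel counts (`nb_values[k]`) and labels each slice with `texttemplate = "%{percent:.0%}"`. Plotly computes a slice's percent as its value divided by the sum of all values. The sketch below performs the same arithmetic in plain Python and formats it with Python's own `.0%` specifier. That rounding may differ from Plotly's in edge cases. `class_shares` and the sample counts are hypothetical.

```python
from typing import List, Sequence


def class_shares(counts: Sequence[int]) -> List[float]:
    """Fraction of all pixels held by each class (what a pie slice's percent represents)."""
    total = sum(counts)
    if total == 0:
        # Avoid dividing by zero for an empty label map.
        return [0.0 for _ in counts]
    return [c / total for c in counts]


class_names = ('Buildings', 'Cultivation', 'Natural green', 'Wetland', 'Water', 'Infrastructure', 'Background')
counts = [0, 25, 50, 0, 25, 0, 0]
for name, share in zip(class_names, class_shares(counts)):
    print(f"{name}: {share:.0%}")
```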

| 759 |

-

|

| 760 |

|

| 761 |

|

| 762 |

# Last function (global one)

|

|

@@ -770,6 +493,7 @@ def segment_region(latitude, longitude, start_date, end_date, how = 'month'):

|

|

| 770 |

|

| 771 |

#extract the intersting values from image

|

| 772 |

months, imgs, imgs_label, nb_values, scores = values_from_outputs(outputs)

|


| 773 |

|

| 774 |

|

| 775 |

#Create the figure

|


| 1 |

from PIL import Image

|

| 2 |

|


| 3 |

import matplotlib as mpl

|

| 4 |

from utils import prep_for_plot

|

| 5 |

|

| 6 |

import torch.multiprocessing

|

| 7 |

import torchvision.transforms as T

|


| 8 |

|

| 9 |

from utils_gee import extract_img, transform_ee_img

|

| 10 |

|


| 13 |

import numpy as np

|

| 14 |

from plotly.subplots import make_subplots

|

| 15 |

|

| 16 |

+

import os

|

| 17 |

os.environ['KMP_DUPLICATE_LIB_OK'] = 'True'

|

| 18 |

|


| 19 |

colors = ('red', 'palegreen', 'green', 'steelblue', 'blue', 'yellow', 'lightgrey')

|

| 20 |

class_names = ('Buildings', 'Cultivation', 'Natural green', 'Wetland', 'Water', 'Infrastructure', 'Background')

|

| 21 |

+

cmap = mpl.colors.ListedColormap(colors)

|


| 22 |

|

| 23 |

colors = ('red', 'palegreen', 'green', 'steelblue', 'blue', 'yellow', 'lightgrey')

|


| 24 |

class_names = ('Buildings', 'Cultivation', 'Natural green', 'Wetland', 'Water', 'Infrastructure', 'Background')

|

| 25 |

+

scores_init = [1,2,4,3,4,1,0]

|
