Spaces:

Runtime error

Runtime error

Add application file

Browse files- README.md +125 -13

- app.py +39 -0

- get_data.py +852 -0

- img/mid.png +0 -0

- img/onet.png +0 -0

- img/pnet.png +0 -0

- img/result.png +0 -0

- img/rnet.png +0 -0

- model_store/onet_epoch_20.pt +3 -0

- model_store/pnet_epoch_20.pt +3 -0

- model_store/rnet_epoch_20.pt +3 -0

- requirements.txt +10 -0

- test.py +84 -0

- test.sh +4 -0

- train.out +0 -0

- train.py +351 -0

- train.sh +7 -0

- utils/config.py +42 -0

- utils/dataloader.py +347 -0

- utils/detect.py +758 -0

- utils/models.py +207 -0

- utils/tool.py +117 -0

- utils/vision.py +58 -0

README.md

CHANGED

|

@@ -1,13 +1,125 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

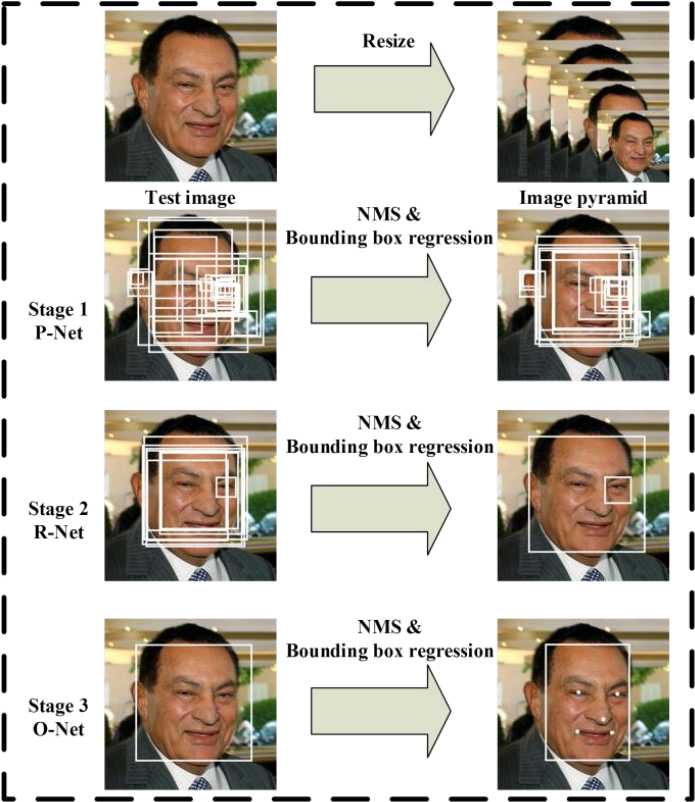

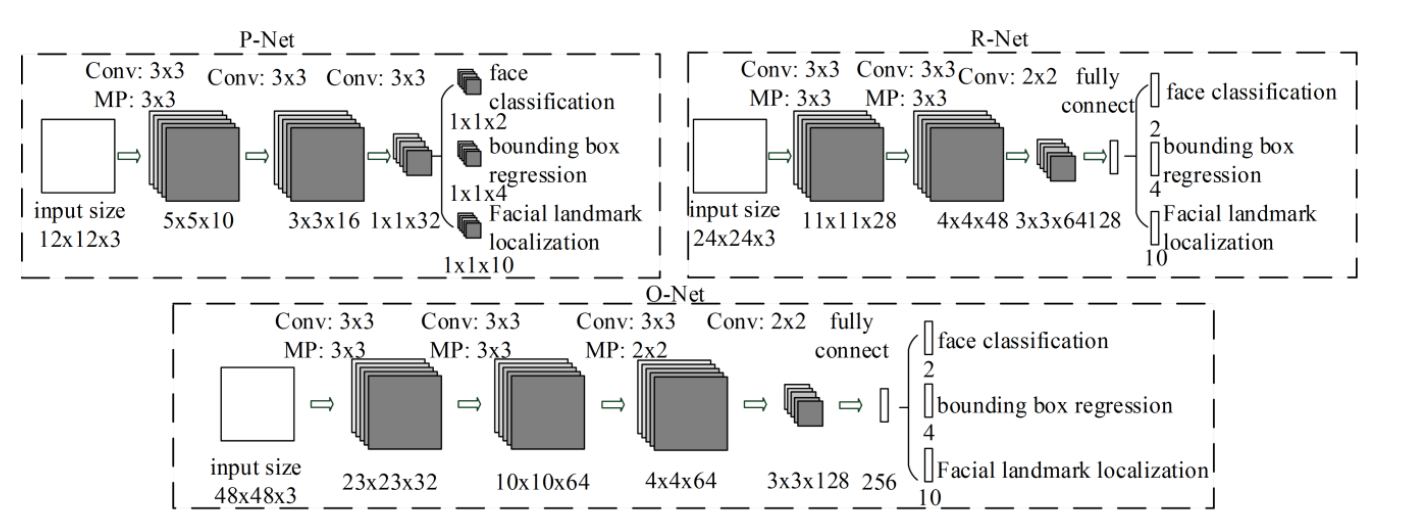

# Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks

|

| 2 |

+

|

| 3 |

+

This repo contains the code, data and trained models for the paper [Joint Face Detection and Alignment using Multi-task Cascaded Convolutional Networks](https://arxiv.org/ftp/arxiv/papers/1604/1604.02878.pdf).

|

| 4 |

+

|

| 5 |

+

## Overview

|

| 6 |

+

|

| 7 |

+

MTCNN is a popular algorithm for face detection that uses multiple neural networks to detect faces in images. It is capable of detecting faces under various lighting and pose conditions and can detect multiple faces in an image.

|

| 8 |

+

|

| 9 |

+

We have implemented MTCNN using the pytorch framework. Pytorch is a popular deep learning framework that provides tools for building and training neural networks.

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

|

| 14 |

+

## Description of file

|

| 15 |

+

```shell

|

| 16 |

+

├── README.md # explanatory document

|

| 17 |

+

├── get_data.py # Generate corresponding training data depending on the input “--net”

|

| 18 |

+

├── img # mid.png is used for testing visualization effects,other images are the corresponding results.

|

| 19 |

+

│ ├── mid.png

|

| 20 |

+

│ ├── onet.png

|

| 21 |

+

│ ├── pnet.png

|

| 22 |

+

│ ├── rnet.png

|

| 23 |

+

│ ├── result.png

|

| 24 |

+

│ └── result.jpg

|

| 25 |

+

├── model_store # Our pre-trained model

|

| 26 |

+

│ ├── onet_epoch_20.pt

|

| 27 |

+

│ ├── pnet_epoch_20.pt

|

| 28 |

+

│ └── rnet_epoch_20.pt

|

| 29 |

+

├── requirements.txt # Environmental version requirements

|

| 30 |

+

├── test.py # Specify different "--net" to get the corresponding visualization results

|

| 31 |

+

├── test.sh # Used to test mid.png, which will test the output visualization of three networks

|

| 32 |

+

├── train.out # Our complete training log for this experiment

|

| 33 |

+

├── train.py # Specify different "--net" for the training of the corresponding network

|

| 34 |

+

├── train.sh # Generate data from start to finish and train

|

| 35 |

+

└── utils # Some common tool functions and modules

|

| 36 |

+

├── config.py

|

| 37 |

+

├── dataloader.py

|

| 38 |

+

├── detect.py

|

| 39 |

+

├── models.py

|

| 40 |

+

├── tool.py

|

| 41 |

+

└── vision.py

|

| 42 |

+

```

|

| 43 |

+

## Requirements

|

| 44 |

+

|

| 45 |

+

* numpy==1.21.4

|

| 46 |

+

* matplotlib==3.5.0

|

| 47 |

+

* opencv-python==4.4.0.42

|

| 48 |

+

* torch==1.13.0+cu116

|

| 49 |

+

|

| 50 |

+

## How to Install

|

| 51 |

+

|

| 52 |

+

- ```shell

|

| 53 |

+

conda create -n env python=3.8 -y

|

| 54 |

+

conda activate env

|

| 55 |

+

```

|

| 56 |

+

- ```shell

|

| 57 |

+

pip install -r requirements.txt

|

| 58 |

+

```

|

| 59 |

+

|

| 60 |

+

## Preprocessing

|

| 61 |

+

|

| 62 |

+

- download [WIDER_FACE](http://shuoyang1213.me/WIDERFACE/) face detection data then store it into ./data_set/face_detection

|

| 63 |

+

- download [CNN_FacePoint](http://mmlab.ie.cuhk.edu.hk/archive/CNN_FacePoint.htm) face detection and landmark data then store it into ./data_set/face_landmark

|

| 64 |

+

|

| 65 |

+

### Preprocessed Data

|

| 66 |

+

|

| 67 |

+

```shell

|

| 68 |

+

# Before training Pnet

|

| 69 |

+

python get_data.py --net=pnet

|

| 70 |

+

# Before training Rnet, please use your trained model path

|

| 71 |

+

python get_data.py --net=rnet --pnet_path=./model_store/pnet_epoch_20.pt

|

| 72 |

+

# Before training Onet, please use your trained model path

|

| 73 |

+

python get_data.py --net=onet --pnet_path=./model_store/pnet_epoch_20.pt --rnet_path=./model_store/rnet_epoch_20.pt

|

| 74 |

+

```

|

| 75 |

+

|

| 76 |

+

## How to Run

|

| 77 |

+

|

| 78 |

+

### Train

|

| 79 |

+

|

| 80 |

+

```shell

|

| 81 |

+

python train.py --net=pnet/rnet/onet #Specify the corresponding network to start training

|

| 82 |

+

bash train.sh #Alternatively, use the sh file to train in order

|

| 83 |

+

```

|

| 84 |

+

|

| 85 |

+

The checkpoints will be saved in a subfolder of `./model_store/*`.

|

| 86 |

+

|

| 87 |

+

#### Finetuning from an existing checkpoint

|

| 88 |

+

|

| 89 |

+

```shell

|

| 90 |

+

python train.py --net=pnet/rnet/onet --load=[model path]

|

| 91 |

+

```

|

| 92 |

+

|

| 93 |

+

model path should be a subdirectory in the `./model_store/` directory, e.g. `--load=./model_store/pnet_epoch_20.pt`

|

| 94 |

+

|

| 95 |

+

### Evaluate

|

| 96 |

+

|

| 97 |

+

#### Use the sh file to test in order

|

| 98 |

+

|

| 99 |

+

```shell

|

| 100 |

+

bash test.sh

|

| 101 |

+

```

|

| 102 |

+

|

| 103 |

+

#### To detect a single image

|

| 104 |

+

|

| 105 |

+

```shell

|

| 106 |

+

python test.py --net=pnet/rnet/onet --path=test.jpg

|

| 107 |

+

```

|

| 108 |

+

|

| 109 |

+

#### To detect a video stream from a camera

|

| 110 |

+

|

| 111 |

+

```shell

|

| 112 |

+

python test.py --input_mode=0

|

| 113 |

+

```

|

| 114 |

+

|

| 115 |

+

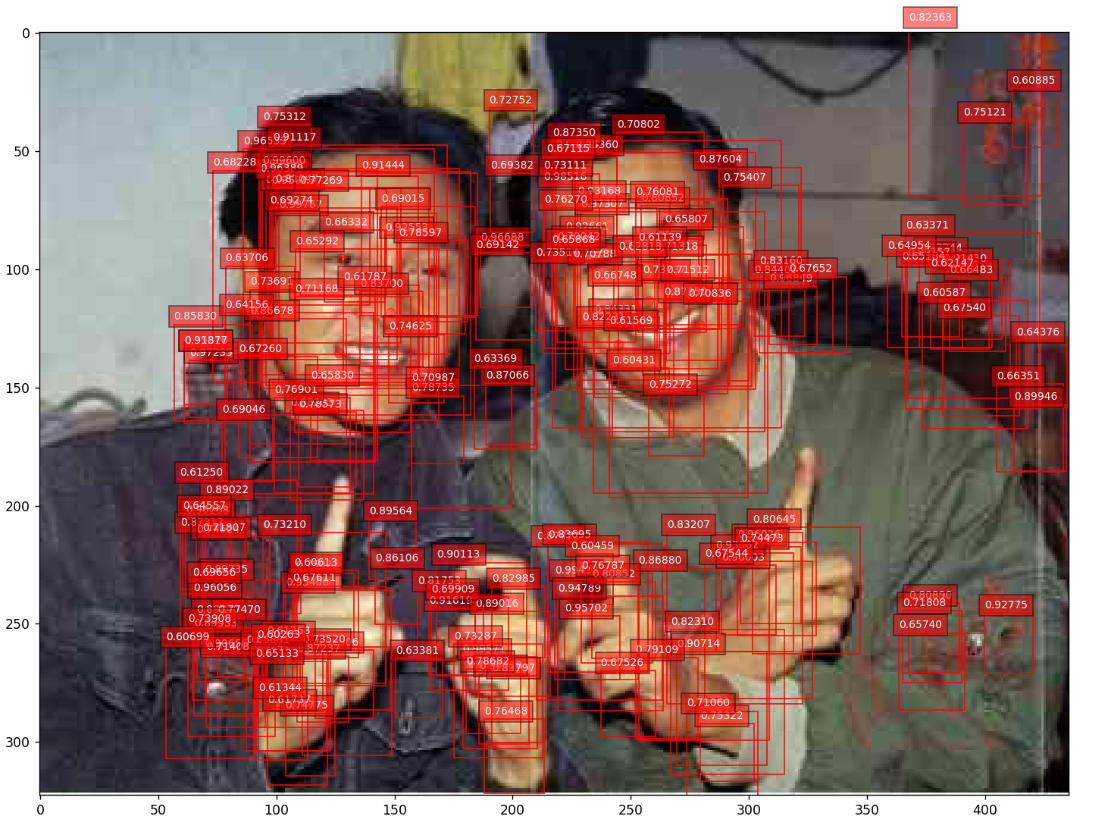

#### The result of "--net=pnet"

|

| 116 |

+

|

| 117 |

+

|

| 118 |

+

|

| 119 |

+

#### The result of "--net=rnet"

|

| 120 |

+

|

| 121 |

+

|

| 122 |

+

|

| 123 |

+

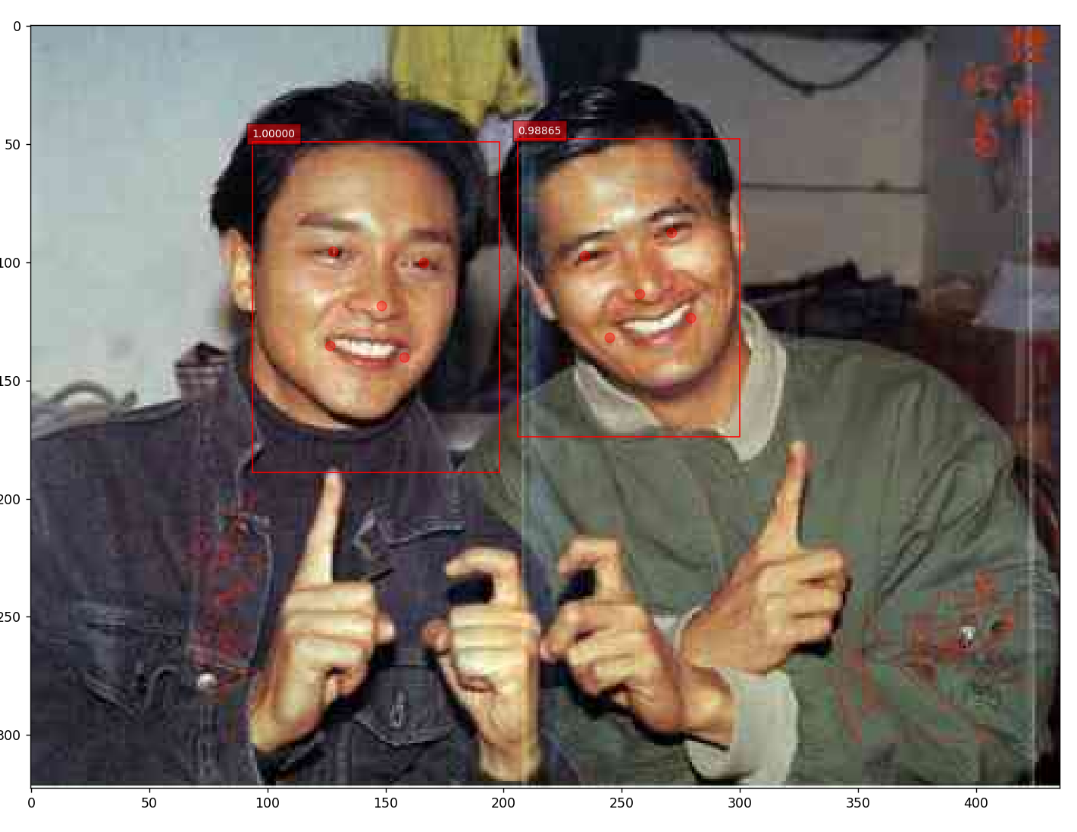

#### The result of "--net=onet"

|

| 124 |

+

|

| 125 |

+

|

app.py

ADDED

|

@@ -0,0 +1,39 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

import cv2

|

| 3 |

+

from utils.detect import create_mtcnn_net, MtcnnDetector

|

| 4 |

+

from utils.vision import vis_face

|

| 5 |

+

import argparse

|

| 6 |

+

|

| 7 |

+

|

| 8 |

+

MIN_FACE_SIZE = 3

|

| 9 |

+

|

| 10 |

+

def parse_args():

|

| 11 |

+

parser = argparse.ArgumentParser(description='Test MTCNN',

|

| 12 |

+

formatter_class=argparse.ArgumentDefaultsHelpFormatter)

|

| 13 |

+

|

| 14 |

+

parser.add_argument('--net', default='onet', help='which net to show', type=str)

|

| 15 |

+

parser.add_argument('--pnet_path', default="./model_store/pnet_epoch_20.pt",help='path to pnet model', type=str)

|

| 16 |

+

parser.add_argument('--rnet_path', default="./model_store/rnet_epoch_20.pt",help='path to rnet model', type=str)

|

| 17 |

+

parser.add_argument('--onet_path', default="./model_store/onet_epoch_20.pt",help='path to onet model', type=str)

|

| 18 |

+

parser.add_argument('--path', default="./img/mid.png",help='path to image', type=str)

|

| 19 |

+

parser.add_argument('--min_face_size', default=MIN_FACE_SIZE,help='min face size', type=int)

|

| 20 |

+

parser.add_argument('--use_cuda', default=False,help='use cuda', type=bool)

|

| 21 |

+

parser.add_argument('--thresh', default='[0.1, 0.1, 0.1]',help='thresh', type=str)

|

| 22 |

+

parser.add_argument('--save_name', default="result.jpg",help='save name', type=str)

|

| 23 |

+

parser.add_argument('--input_mode', default=1,help='image or video', type=int)

|

| 24 |

+

args = parser.parse_args()

|

| 25 |

+

return args

|

| 26 |

+

def greet(name):

|

| 27 |

+

args = parse_args()

|

| 28 |

+

thresh = [float(i) for i in (args.thresh).split('[')[1].split(']')[0].split(',')]

|

| 29 |

+

pnet, rnet, onet = create_mtcnn_net(p_model_path=args.pnet_path, r_model_path=args.rnet_path,o_model_path=args.onet_path, use_cuda=args.use_cuda)

|

| 30 |

+

mtcnn_detector = MtcnnDetector(pnet=pnet, rnet=rnet, onet=onet, min_face_size=args.min_face_size,threshold=thresh)

|

| 31 |

+

img = cv2.imread(name)

|

| 32 |

+

img_bg = cv2.cvtColor(img, cv2.COLOR_BGR2RGB)

|

| 33 |

+

p_bboxs, r_bboxs, bboxs, landmarks = mtcnn_detector.detect_face(img)

|

| 34 |

+

save_name = args.save_name

|

| 35 |

+

return vis_face(img_bg, bboxs, landmarks, MIN_FACE_SIZE, save_name)

|

| 36 |

+

iface = gr.Interface(fn=greet,

|

| 37 |

+

inputs=gr.Image(type="filepath"),

|

| 38 |

+

outputs="image")

|

| 39 |

+

iface.launch()

|

get_data.py

ADDED

|

@@ -0,0 +1,852 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import sys

|

| 2 |

+

import numpy as np

|

| 3 |

+

import cv2

|

| 4 |

+

import os

|

| 5 |

+

from utils.tool import IoU,convert_to_square

|

| 6 |

+

import numpy.random as npr

|

| 7 |

+

import argparse

|

| 8 |

+

from utils.detect import MtcnnDetector, create_mtcnn_net

|

| 9 |

+

from utils.dataloader import ImageDB,TestImageLoader

|

| 10 |

+

import time

|

| 11 |

+

from six.moves import cPickle

|

| 12 |

+

import utils.config as config

|

| 13 |

+

import utils.vision as vision

|

| 14 |

+

sys.path.append(os.getcwd())

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

txt_from_path = './data_set/wider_face_train_bbx_gt.txt'

|

| 18 |

+

anno_file = os.path.join(config.ANNO_STORE_DIR, 'anno_train.txt')

|

| 19 |

+

# anno_file = './anno_store/anno_train.txt'

|

| 20 |

+

|

| 21 |

+

prefix = ''

|

| 22 |

+

use_cuda = True

|

| 23 |

+

im_dir = "./data_set/face_detection/WIDER_train/images/"

|

| 24 |

+

traindata_store = './data_set/train/'

|

| 25 |

+

prefix_path = "./data_set/face_detection/WIDER_train/images/"

|

| 26 |

+

annotation_file = './anno_store/anno_train.txt'

|

| 27 |

+

prefix_path_lm = ''

|

| 28 |

+

annotation_file_lm = "./data_set/face_landmark/CNN_FacePoint/train/trainImageList.txt"

|

| 29 |

+

# ----------------------------------------------------other----------------------------------------------

|

| 30 |

+

pos_save_dir = "./data_set/train/12/positive"

|

| 31 |

+

part_save_dir = "./data_set/train/12/part"

|

| 32 |

+

neg_save_dir = './data_set/train/12/negative'

|

| 33 |

+

pnet_postive_file = os.path.join(config.ANNO_STORE_DIR, 'pos_12.txt')

|

| 34 |

+

pnet_part_file = os.path.join(config.ANNO_STORE_DIR, 'part_12.txt')

|

| 35 |

+

pnet_neg_file = os.path.join(config.ANNO_STORE_DIR, 'neg_12.txt')

|

| 36 |

+

imglist_filename_pnet = os.path.join(config.ANNO_STORE_DIR, 'imglist_anno_12.txt')

|

| 37 |

+

# ----------------------------------------------------PNet----------------------------------------------

|

| 38 |

+

rnet_postive_file = os.path.join(config.ANNO_STORE_DIR, 'pos_24.txt')

|

| 39 |

+

rnet_part_file = os.path.join(config.ANNO_STORE_DIR, 'part_24.txt')

|

| 40 |

+

rnet_neg_file = os.path.join(config.ANNO_STORE_DIR, 'neg_24.txt')

|

| 41 |

+

rnet_landmark_file = os.path.join(config.ANNO_STORE_DIR, 'landmark_24.txt')

|

| 42 |

+

imglist_filename_rnet = os.path.join(config.ANNO_STORE_DIR, 'imglist_anno_24.txt')

|

| 43 |

+

# ----------------------------------------------------RNet----------------------------------------------

|

| 44 |

+

onet_postive_file = os.path.join(config.ANNO_STORE_DIR, 'pos_48.txt')

|

| 45 |

+

onet_part_file = os.path.join(config.ANNO_STORE_DIR, 'part_48.txt')

|

| 46 |

+

onet_neg_file = os.path.join(config.ANNO_STORE_DIR, 'neg_48.txt')

|

| 47 |

+

onet_landmark_file = os.path.join(config.ANNO_STORE_DIR, 'landmark_48.txt')

|

| 48 |

+

imglist_filename_onet = os.path.join(config.ANNO_STORE_DIR, 'imglist_anno_48.txt')

|

| 49 |

+

# ----------------------------------------------------ONet----------------------------------------------

|

| 50 |

+

|

| 51 |

+

|

| 52 |

+

|

| 53 |

+

def assemble_data(output_file, anno_file_list=[]):

|

| 54 |

+

|

| 55 |

+

#assemble the pos, neg, part annotations to one file

|

| 56 |

+

size = 12

|

| 57 |

+

|

| 58 |

+

if len(anno_file_list)==0:

|

| 59 |

+

return 0

|

| 60 |

+

|

| 61 |

+

if os.path.exists(output_file):

|

| 62 |

+

os.remove(output_file)

|

| 63 |

+

|

| 64 |

+

for anno_file in anno_file_list:

|

| 65 |

+

with open(anno_file, 'r') as f:

|

| 66 |

+

print(anno_file)

|

| 67 |

+

anno_lines = f.readlines()

|

| 68 |

+

|

| 69 |

+

base_num = 250000

|

| 70 |

+

|

| 71 |

+

if len(anno_lines) > base_num * 3:

|

| 72 |

+

idx_keep = npr.choice(len(anno_lines), size=base_num * 3, replace=True)

|

| 73 |

+

elif len(anno_lines) > 100000:

|

| 74 |

+

idx_keep = npr.choice(len(anno_lines), size=len(anno_lines), replace=True)

|

| 75 |

+

else:

|

| 76 |

+

idx_keep = np.arange(len(anno_lines))

|

| 77 |

+

np.random.shuffle(idx_keep)

|

| 78 |

+

chose_count = 0

|

| 79 |

+

with open(output_file, 'a+') as f:

|

| 80 |

+

for idx in idx_keep:

|

| 81 |

+

# write lables of pos, neg, part images

|

| 82 |

+

f.write(anno_lines[idx])

|

| 83 |

+

chose_count+=1

|

| 84 |

+

|

| 85 |

+

return chose_count

|

| 86 |

+

def wider_face(txt_from_path, txt_to_path):

|

| 87 |

+

line_from_count = 0

|

| 88 |

+

with open(txt_from_path, 'r') as f:

|

| 89 |

+

annotations = f.readlines()

|

| 90 |

+

with open(txt_to_path, 'w+') as f:

|

| 91 |

+

while line_from_count < len(annotations):

|

| 92 |

+

if annotations[line_from_count][2]=='-':

|

| 93 |

+

img_name = annotations[line_from_count][:-1]

|

| 94 |

+

line_from_count += 1 # change line to read the number

|

| 95 |

+

bbox_count = int(annotations[line_from_count]) # num of bboxes

|

| 96 |

+

line_from_count += 1 # change line to read the posession

|

| 97 |

+

for _ in range(bbox_count):

|

| 98 |

+

bbox = list(map(int,annotations[line_from_count].split()[:4])) # give a loop to append all the boxes

|

| 99 |

+

bbox = [bbox[0], bbox[1], bbox[0]+bbox[2], bbox[1]+bbox[3]] # make x1, y1, w, h --> x1, y1, x2, y2

|

| 100 |

+

bbox = list(map(str,bbox))

|

| 101 |

+

img_name += (' '+' '.join(bbox))

|

| 102 |

+

line_from_count+=1

|

| 103 |

+

f.write(img_name +'\n')

|

| 104 |

+

else: # dectect the file name

|

| 105 |

+

line_from_count+=1

|

| 106 |

+

|

| 107 |

+

# ----------------------------------------------------origin----------------------------------------------

|

| 108 |

+

def get_Pnet_data():

|

| 109 |

+

if not os.path.exists(pos_save_dir):

|

| 110 |

+

os.makedirs(pos_save_dir)

|

| 111 |

+

if not os.path.exists(part_save_dir):

|

| 112 |

+

os.makedirs(part_save_dir)

|

| 113 |

+

if not os.path.exists(neg_save_dir):

|

| 114 |

+

os.makedirs(neg_save_dir)

|

| 115 |

+

f1 = open(os.path.join('./anno_store', 'pos_12.txt'), 'w')

|

| 116 |

+

f2 = open(os.path.join('./anno_store', 'neg_12.txt'), 'w')

|

| 117 |

+

f3 = open(os.path.join('./anno_store', 'part_12.txt'), 'w')

|

| 118 |

+

with open(anno_file, 'r') as f:

|

| 119 |

+

annotations = f.readlines()

|

| 120 |

+

num = len(annotations)

|

| 121 |

+

print("%d pics in total" % num)

|

| 122 |

+

p_idx = 0 # positive

|

| 123 |

+

n_idx = 0 # negative

|

| 124 |

+

d_idx = 0 # dont care

|

| 125 |

+

idx = 0

|

| 126 |

+

box_idx = 0

|

| 127 |

+

for annotation in annotations:

|

| 128 |

+

annotation = annotation.strip().split(' ')

|

| 129 |

+

# annotation[0]文件名

|

| 130 |

+

im_path = os.path.join(im_dir, annotation[0])

|

| 131 |

+

# print(im_path)

|

| 132 |

+

# print(os.path.exists(im_path))

|

| 133 |

+

bbox = list(map(float, annotation[1:]))

|

| 134 |

+

# annotation[1:]人脸坐标,一张脸4个值,对应两个点的坐标

|

| 135 |

+

boxes = np.array(bbox, dtype=np.int32).reshape(-1, 4)

|

| 136 |

+

# -1处的值为人脸数目

|

| 137 |

+

if boxes.shape[0]==0:

|

| 138 |

+

continue

|

| 139 |

+

# 若无人脸则跳过本次循环

|

| 140 |

+

img = cv2.imread(im_path)

|

| 141 |

+

# print(img.shape)

|

| 142 |

+

# exit()

|

| 143 |

+

# 计数

|

| 144 |

+

idx += 1

|

| 145 |

+

if idx % 100 == 0:

|

| 146 |

+

print("%s images done, pos: %s part: %s neg: %s" % (idx, p_idx, d_idx, n_idx))

|

| 147 |

+

|

| 148 |

+

# 图片三通道

|

| 149 |

+

height, width, channel = img.shape

|

| 150 |

+

|

| 151 |

+

neg_num = 0

|

| 152 |

+

|

| 153 |

+

# 取50次不同的框

|

| 154 |

+

while neg_num < 50:

|

| 155 |

+

size = np.random.randint(12, min(width, height) / 2)

|

| 156 |

+

nx = np.random.randint(0, width - size)

|

| 157 |

+

ny = np.random.randint(0, height - size)

|

| 158 |

+

crop_box = np.array([nx, ny, nx + size, ny + size])

|

| 159 |

+

|

| 160 |

+

Iou = IoU(crop_box, boxes) # IoU为 重合部分 / 两框之和 ,越大越好

|

| 161 |

+

|

| 162 |

+

cropped_im = img[ny: ny + size, nx: nx + size, :] # 裁去多余部分并resize成 12*12

|

| 163 |

+

resized_im = cv2.resize(cropped_im, (12, 12), interpolation=cv2.INTER_LINEAR)

|

| 164 |

+

|

| 165 |

+

if np.max(Iou) < 0.3:

|

| 166 |

+

# Iou with all gts must below 0.3

|

| 167 |

+

save_file = os.path.join(neg_save_dir, "%s.jpg" % n_idx)

|

| 168 |

+

f2.write(save_file + ' 0\n')

|

| 169 |

+

cv2.imwrite(save_file, resized_im)

|

| 170 |

+

n_idx += 1

|

| 171 |

+

neg_num += 1

|

| 172 |

+

|

| 173 |

+

for box in boxes:

|

| 174 |

+

# box (x_left, y_top, x_right, y_bottom)

|

| 175 |

+

x1, y1, x2, y2 = box

|

| 176 |

+

# w = x2 - x1 + 1

|

| 177 |

+

# h = y2 - y1 + 1

|

| 178 |

+

w = x2 - x1 + 1

|

| 179 |

+

h = y2 - y1 + 1

|

| 180 |

+

|

| 181 |

+

# ignore small faces

|

| 182 |

+

# in case the ground truth boxes of small faces are not accurate

|

| 183 |

+

if max(w, h) < 40 or x1 < 0 or y1 < 0:

|

| 184 |

+

continue

|

| 185 |

+

if w < 12 or h < 12:

|

| 186 |

+

continue

|

| 187 |

+

|

| 188 |

+

# generate negative examples that have overlap with gt

|

| 189 |

+

for i in range(5):

|

| 190 |

+

size = np.random.randint(12, min(width, height) / 2)

|

| 191 |

+

|

| 192 |

+

# delta_x and delta_y are offsets of (x1, y1)

|

| 193 |

+

delta_x = np.random.randint(max(-size, -x1), w)

|

| 194 |

+

delta_y = np.random.randint(max(-size, -y1), h)

|

| 195 |

+

nx1 = max(0, x1 + delta_x)

|

| 196 |

+

ny1 = max(0, y1 + delta_y)

|

| 197 |

+

|

| 198 |

+

if nx1 + size > width or ny1 + size > height:

|

| 199 |

+

continue

|

| 200 |

+

crop_box = np.array([nx1, ny1, nx1 + size, ny1 + size])

|

| 201 |

+

Iou = IoU(crop_box, boxes)

|

| 202 |

+

|

| 203 |

+

cropped_im = img[ny1: ny1 + size, nx1: nx1 + size, :]

|

| 204 |

+

resized_im = cv2.resize(cropped_im, (12, 12), interpolation=cv2.INTER_LINEAR)

|

| 205 |

+

|

| 206 |

+

if np.max(Iou) < 0.3:

|

| 207 |

+

# Iou with all gts must below 0.3

|

| 208 |

+

save_file = os.path.join(neg_save_dir, "%s.jpg" % n_idx)

|

| 209 |

+

f2.write(save_file + ' 0\n')

|

| 210 |

+

cv2.imwrite(save_file, resized_im)

|

| 211 |

+

n_idx += 1

|

| 212 |

+

|

| 213 |

+

# generate positive examples and part faces

|

| 214 |

+

for i in range(20):

|

| 215 |

+

size = np.random.randint(int(min(w, h) * 0.8), np.ceil(1.25 * max(w, h)))

|

| 216 |

+

|

| 217 |

+

# delta here is the offset of box center

|

| 218 |

+

delta_x = np.random.randint(-w * 0.2, w * 0.2)

|

| 219 |

+

delta_y = np.random.randint(-h * 0.2, h * 0.2)

|

| 220 |

+

|

| 221 |

+

nx1 = max(x1 + w / 2 + delta_x - size / 2, 0)

|

| 222 |

+

ny1 = max(y1 + h / 2 + delta_y - size / 2, 0)

|

| 223 |

+

nx2 = nx1 + size

|

| 224 |

+

ny2 = ny1 + size

|

| 225 |

+

|

| 226 |

+

if nx2 > width or ny2 > height:

|

| 227 |

+

continue

|

| 228 |

+

crop_box = np.array([nx1, ny1, nx2, ny2])

|

| 229 |

+

|

| 230 |

+

offset_x1 = (x1 - nx1) / float(size)

|

| 231 |

+

offset_y1 = (y1 - ny1) / float(size)

|

| 232 |

+

offset_x2 = (x2 - nx2) / float(size)

|

| 233 |

+

offset_y2 = (y2 - ny2) / float(size)

|

| 234 |

+

|

| 235 |

+

cropped_im = img[int(ny1): int(ny2), int(nx1): int(nx2), :]

|

| 236 |

+

resized_im = cv2.resize(cropped_im, (12, 12), interpolation=cv2.INTER_LINEAR)

|

| 237 |

+

|

| 238 |

+

box_ = box.reshape(1, -1)

|

| 239 |

+

if IoU(crop_box, box_) >= 0.65:

|

| 240 |

+

save_file = os.path.join(pos_save_dir, "%s.jpg" % p_idx)

|

| 241 |

+

f1.write(save_file + ' 1 %.2f %.2f %.2f %.2f\n' % (offset_x1, offset_y1, offset_x2, offset_y2))

|

| 242 |

+

cv2.imwrite(save_file, resized_im)

|

| 243 |

+

p_idx += 1

|

| 244 |

+

elif IoU(crop_box, box_) >= 0.4:

|

| 245 |

+

save_file = os.path.join(part_save_dir, "%s.jpg" % d_idx)

|

| 246 |

+

f3.write(save_file + ' -1 %.2f %.2f %.2f %.2f\n' % (offset_x1, offset_y1, offset_x2, offset_y2))

|

| 247 |

+

cv2.imwrite(save_file, resized_im)

|

| 248 |

+

d_idx += 1

|

| 249 |

+

box_idx += 1

|

| 250 |

+

#print("%s images done, pos: %s part: %s neg: %s" % (idx, p_idx, d_idx, n_idx))

|

| 251 |

+

|

| 252 |

+

f1.close()

|

| 253 |

+

f2.close()

|

| 254 |

+

f3.close()

|

| 255 |

+

|

| 256 |

+

|

| 257 |

+

def assembel_Pnet_data():

|

| 258 |

+

anno_list = []

|

| 259 |

+

|

| 260 |

+

anno_list.append(pnet_postive_file)

|

| 261 |

+

anno_list.append(pnet_part_file)

|

| 262 |

+

anno_list.append(pnet_neg_file)

|

| 263 |

+

# anno_list.append(pnet_landmark_file)

|

| 264 |

+

chose_count = assemble_data(imglist_filename_pnet ,anno_list)

|

| 265 |

+

print("PNet train annotation result file path:%s" % imglist_filename_pnet)

|

| 266 |

+

|

| 267 |

+

# -----------------------------------------------------------------------------------------------------------------------------------------------#

|

| 268 |

+

|

| 269 |

+

def gen_rnet_data(data_dir, anno_file, pnet_model_file, prefix_path='', use_cuda=True, vis=False):

|

| 270 |

+

|

| 271 |

+

"""

|

| 272 |

+

:param data_dir: train data

|

| 273 |

+

:param anno_file:

|

| 274 |

+

:param pnet_model_file:

|

| 275 |

+

:param prefix_path:

|

| 276 |

+

:param use_cuda:

|

| 277 |

+

:param vis:

|

| 278 |

+

:return:

|

| 279 |

+

"""

|

| 280 |

+

|

| 281 |

+

# load trained pnet model

|

| 282 |

+

|

| 283 |

+

pnet, _, _ = create_mtcnn_net(p_model_path = pnet_model_file, use_cuda = use_cuda)

|

| 284 |

+

mtcnn_detector = MtcnnDetector(pnet = pnet, min_face_size = 12)

|

| 285 |

+

|

| 286 |

+

# load original_anno_file, length = 12880

|

| 287 |

+

imagedb = ImageDB(anno_file, mode = "test", prefix_path = prefix_path)

|

| 288 |

+

imdb = imagedb.load_imdb()

|

| 289 |

+

image_reader = TestImageLoader(imdb, 1, False)

|

| 290 |

+

|

| 291 |

+

all_boxes = list()

|

| 292 |

+

batch_idx = 0

|

| 293 |

+

|

| 294 |

+

print('size:%d' %image_reader.size)

|

| 295 |

+

for databatch in image_reader:

|

| 296 |

+

if batch_idx % 100 == 0:

|

| 297 |

+

print ("%d images done" % batch_idx)

|

| 298 |

+

im = databatch

|

| 299 |

+

t = time.time()

|

| 300 |

+

|

| 301 |

+

# obtain boxes and aligned boxes

|

| 302 |

+

boxes, boxes_align = mtcnn_detector.detect_pnet(im=im)

|

| 303 |

+

if boxes_align is None:

|

| 304 |

+

all_boxes.append(np.array([]))

|

| 305 |

+

batch_idx += 1

|

| 306 |

+

continue

|

| 307 |

+

if vis:

|

| 308 |

+

rgb_im = cv2.cvtColor(np.asarray(im), cv2.COLOR_BGR2RGB)

|

| 309 |

+

vision.vis_two(rgb_im, boxes, boxes_align)

|

| 310 |

+

|

| 311 |

+

t1 = time.time() - t

|

| 312 |

+

print('cost time ',t1)

|

| 313 |

+

t = time.time()

|

| 314 |

+

all_boxes.append(boxes_align)

|

| 315 |

+

batch_idx += 1

|

| 316 |

+

# if batch_idx == 100:

|

| 317 |

+

# break

|

| 318 |

+

# print("shape of all boxes {0}".format(all_boxes))

|

| 319 |

+

# time.sleep(5)

|

| 320 |

+

|

| 321 |

+

# save_path = model_store_path()

|

| 322 |

+

# './model_store'

|

| 323 |

+

save_path = './model_store'

|

| 324 |

+

|

| 325 |

+

if not os.path.exists(save_path):

|

| 326 |

+

os.mkdir(save_path)

|

| 327 |

+

|

| 328 |

+

save_file = os.path.join(save_path, "detections_%d.pkl" % int(time.time()))

|

| 329 |

+

with open(save_file, 'wb') as f:

|

| 330 |

+

cPickle.dump(all_boxes, f, cPickle.HIGHEST_PROTOCOL)

|

| 331 |

+

|

| 332 |

+

# save_file = './model_store/detections_1588751332.pkl'

|

| 333 |

+

gen_rnet_sample_data(data_dir, anno_file, save_file, prefix_path)

|

| 334 |

+

|

| 335 |

+

|

| 336 |

+

|

| 337 |

+

def gen_rnet_sample_data(data_dir, anno_file, det_boxs_file, prefix_path):

|

| 338 |

+

|

| 339 |

+

"""

|

| 340 |

+

:param data_dir:

|

| 341 |

+

:param anno_file: original annotations file of wider face data

|

| 342 |

+

:param det_boxs_file: detection boxes file

|

| 343 |

+

:param prefix_path:

|

| 344 |

+

:return:

|

| 345 |

+

"""

|

| 346 |

+

|

| 347 |

+

neg_save_dir = os.path.join(data_dir, "24/negative")

|

| 348 |

+

pos_save_dir = os.path.join(data_dir, "24/positive")

|

| 349 |

+

part_save_dir = os.path.join(data_dir, "24/part")

|

| 350 |

+

|

| 351 |

+

|

| 352 |

+

for dir_path in [neg_save_dir, pos_save_dir, part_save_dir]:

|

| 353 |

+

# print(dir_path)

|

| 354 |

+

if not os.path.exists(dir_path):

|

| 355 |

+

os.makedirs(dir_path)

|

| 356 |

+

|

| 357 |

+

|

| 358 |

+

# load ground truth from annotation file

|

| 359 |

+

# format of each line: image/path [x1,y1,x2,y2] for each gt_box in this image

|

| 360 |

+

|

| 361 |

+

with open(anno_file, 'r') as f:

|

| 362 |

+

annotations = f.readlines()

|

| 363 |

+

|

| 364 |

+

image_size = 24

|

| 365 |

+

net = "rnet"

|

| 366 |

+

|

| 367 |

+

im_idx_list = list()

|

| 368 |

+

gt_boxes_list = list()

|

| 369 |

+

num_of_images = len(annotations)

|

| 370 |

+

print ("processing %d images in total" % num_of_images)

|

| 371 |

+

|

| 372 |

+

for annotation in annotations:

|

| 373 |

+

annotation = annotation.strip().split(' ')

|

| 374 |

+

im_idx = os.path.join(prefix_path, annotation[0])

|

| 375 |

+

# im_idx = annotation[0]

|

| 376 |

+

|

| 377 |

+

boxes = list(map(float, annotation[1:]))

|

| 378 |

+

boxes = np.array(boxes, dtype=np.float32).reshape(-1, 4)

|

| 379 |

+

im_idx_list.append(im_idx)

|

| 380 |

+

gt_boxes_list.append(boxes)

|

| 381 |

+

|

| 382 |

+

|

| 383 |

+

# './anno_store'

|

| 384 |

+

save_path = './anno_store'

|

| 385 |

+

if not os.path.exists(save_path):

|

| 386 |

+

os.makedirs(save_path)

|

| 387 |

+

|

| 388 |

+

f1 = open(os.path.join(save_path, 'pos_%d.txt' % image_size), 'w')

|

| 389 |

+

f2 = open(os.path.join(save_path, 'neg_%d.txt' % image_size), 'w')

|

| 390 |

+

f3 = open(os.path.join(save_path, 'part_%d.txt' % image_size), 'w')

|

| 391 |

+

|

| 392 |

+

# print(det_boxs_file)

|

| 393 |

+

det_handle = open(det_boxs_file, 'rb')

|

| 394 |

+

|

| 395 |

+

det_boxes = cPickle.load(det_handle)

|

| 396 |

+

|

| 397 |

+

# an image contain many boxes stored in an array

|

| 398 |

+

print(len(det_boxes), num_of_images)

|

| 399 |

+

# assert len(det_boxes) == num_of_images, "incorrect detections or ground truths"

|

| 400 |

+

|

| 401 |

+

# index of neg, pos and part face, used as their image names

|

| 402 |

+

n_idx = 0

|

| 403 |

+

p_idx = 0

|

| 404 |

+

d_idx = 0

|

| 405 |

+

image_done = 0

|

| 406 |

+

for im_idx, dets, gts in zip(im_idx_list, det_boxes, gt_boxes_list):

|

| 407 |

+

|

| 408 |

+

# if (im_idx+1) == 100:

|

| 409 |

+

# break

|

| 410 |

+

|

| 411 |

+

gts = np.array(gts, dtype=np.float32).reshape(-1, 4)

|

| 412 |

+

if gts.shape[0]==0:

|

| 413 |

+

continue

|

| 414 |

+

if image_done % 100 == 0:

|

| 415 |

+

print("%d images done" % image_done)

|

| 416 |

+

image_done += 1

|

| 417 |

+

|

| 418 |

+

if dets.shape[0] == 0:

|

| 419 |

+

continue

|

| 420 |

+

img = cv2.imread(im_idx)

|

| 421 |

+

# change to square

|

| 422 |

+

dets = convert_to_square(dets)

|

| 423 |

+

dets[:, 0:4] = np.round(dets[:, 0:4])

|

| 424 |

+

neg_num = 0

|

| 425 |

+

for box in dets:

|

| 426 |

+

x_left, y_top, x_right, y_bottom, _ = box.astype(int)

|

| 427 |

+

width = x_right - x_left + 1

|

| 428 |

+

height = y_bottom - y_top + 1

|

| 429 |

+

|

| 430 |

+

# ignore box that is too small or beyond image border

|

| 431 |

+

if width < 20 or x_left < 0 or y_top < 0 or x_right > img.shape[1] - 1 or y_bottom > img.shape[0] - 1:

|

| 432 |

+

continue

|

| 433 |

+

|

| 434 |

+

# compute intersection over union(IoU) between current box and all gt boxes

|

| 435 |

+

Iou = IoU(box, gts)

|

| 436 |

+

cropped_im = img[y_top:y_bottom + 1, x_left:x_right + 1, :]

|

| 437 |

+

resized_im = cv2.resize(cropped_im, (image_size, image_size),

|

| 438 |

+

interpolation=cv2.INTER_LINEAR)

|

| 439 |

+

|

| 440 |

+

# save negative images and write label

|

| 441 |

+

# Iou with all gts must below 0.3

|

| 442 |

+

if np.max(Iou) < 0.3 and neg_num < 60:

|

| 443 |

+

# save the examples

|

| 444 |

+

save_file = os.path.join(neg_save_dir, "%s.jpg" % n_idx)

|

| 445 |

+

# print(save_file)

|

| 446 |

+

f2.write(save_file + ' 0\n')

|

| 447 |

+

cv2.imwrite(save_file, resized_im)

|

| 448 |

+

n_idx += 1

|

| 449 |

+

neg_num += 1

|

| 450 |

+

else:

|

| 451 |

+

# find gt_box with the highest iou

|

| 452 |

+

idx = np.argmax(Iou)

|

| 453 |

+

assigned_gt = gts[idx]

|

| 454 |

+

x1, y1, x2, y2 = assigned_gt

|

| 455 |

+

|

| 456 |

+

# compute bbox reg label

|

| 457 |

+

offset_x1 = (x1 - x_left) / float(width)

|

| 458 |

+

offset_y1 = (y1 - y_top) / float(height)

|

| 459 |

+

offset_x2 = (x2 - x_right) / float(width)

|

| 460 |

+

offset_y2 = (y2 - y_bottom) / float(height)

|

| 461 |

+

|

| 462 |

+

# save positive and part-face images and write labels

|

| 463 |

+

if np.max(Iou) >= 0.65:

|

| 464 |

+

save_file = os.path.join(pos_save_dir, "%s.jpg" % p_idx)

|

| 465 |

+

f1.write(save_file + ' 1 %.2f %.2f %.2f %.2f\n' % (

|

| 466 |

+

offset_x1, offset_y1, offset_x2, offset_y2))

|

| 467 |

+

cv2.imwrite(save_file, resized_im)

|

| 468 |

+

p_idx += 1

|

| 469 |

+

|

| 470 |

+

elif np.max(Iou) >= 0.4:

|

| 471 |

+

save_file = os.path.join(part_save_dir, "%s.jpg" % d_idx)

|

| 472 |

+

f3.write(save_file + ' -1 %.2f %.2f %.2f %.2f\n' % (

|

| 473 |

+

offset_x1, offset_y1, offset_x2, offset_y2))

|

| 474 |

+

cv2.imwrite(save_file, resized_im)

|

| 475 |

+

d_idx += 1

|

| 476 |

+

f1.close()

|

| 477 |

+

f2.close()

|

| 478 |

+

f3.close()

|

| 479 |

+

|

| 480 |

+

def model_store_path():

|

| 481 |

+

return os.path.dirname(os.path.dirname(os.path.dirname(os.path.realpath(__file__))))+"/model_store"

|

| 482 |

+

|

| 483 |

+

def get_Rnet_data(pnet_model):

|

| 484 |

+

gen_rnet_data(traindata_store, annotation_file, pnet_model_file = pnet_model, prefix_path = prefix_path, use_cuda = True)

|

| 485 |

+

|

| 486 |

+

|

| 487 |

+

def assembel_Rnet_data():

|

| 488 |

+

anno_list = []

|

| 489 |

+

|

| 490 |

+

anno_list.append(rnet_postive_file)

|

| 491 |

+

anno_list.append(rnet_part_file)

|

| 492 |

+

anno_list.append(rnet_neg_file)

|

| 493 |

+

# anno_list.append(pnet_landmark_file)

|

| 494 |

+

|

| 495 |

+

chose_count = assemble_data(imglist_filename_rnet ,anno_list)

|

| 496 |

+

print("RNet train annotation result file path:%s" % imglist_filename_rnet)

|

| 497 |

+

#-----------------------------------------------------------------------------------------------------------------------------------------------#

|

| 498 |

+

def gen_onet_data(data_dir, anno_file, pnet_model_file, rnet_model_file, prefix_path='', use_cuda=True, vis=False):

|

| 499 |

+

|

| 500 |

+

|

| 501 |

+

pnet, rnet, _ = create_mtcnn_net(p_model_path=pnet_model_file, r_model_path=rnet_model_file, use_cuda=use_cuda)

|

| 502 |

+

mtcnn_detector = MtcnnDetector(pnet=pnet, rnet=rnet, min_face_size=12)

|

| 503 |

+

|

| 504 |

+

imagedb = ImageDB(anno_file,mode="test",prefix_path=prefix_path)

|

| 505 |

+

imdb = imagedb.load_imdb()

|

| 506 |

+

image_reader = TestImageLoader(imdb,1,False)

|

| 507 |

+

|

| 508 |

+

all_boxes = list()

|

| 509 |

+

batch_idx = 0

|

| 510 |

+

|

| 511 |

+

print('size:%d' % image_reader.size)

|

| 512 |

+

for databatch in image_reader:

|

| 513 |

+

if batch_idx % 50 == 0:

|

| 514 |

+

print("%d images done" % batch_idx)

|

| 515 |

+

|

| 516 |

+

im = databatch

|

| 517 |

+

|

| 518 |

+

t = time.time()

|

| 519 |

+

|

| 520 |

+

# pnet detection = [x1, y1, x2, y2, score, reg]

|

| 521 |

+

p_boxes, p_boxes_align = mtcnn_detector.detect_pnet(im=im)

|

| 522 |

+

|

| 523 |

+

t0 = time.time() - t

|

| 524 |

+

t = time.time()

|

| 525 |

+

# rnet detection

|

| 526 |

+

boxes, boxes_align = mtcnn_detector.detect_rnet(im=im, dets=p_boxes_align)

|

| 527 |

+

|

| 528 |

+

t1 = time.time() - t

|

| 529 |

+

print('cost time pnet--',t0,' rnet--',t1)

|

| 530 |

+

t = time.time()

|

| 531 |

+

|

| 532 |

+

if boxes_align is None:

|

| 533 |

+

all_boxes.append(np.array([]))

|

| 534 |

+

batch_idx += 1

|

| 535 |

+

continue

|

| 536 |

+

if vis:

|

| 537 |

+

rgb_im = cv2.cvtColor(np.asarray(im), cv2.COLOR_BGR2RGB)

|

| 538 |

+

vision.vis_two(rgb_im, boxes, boxes_align)

|

| 539 |

+

|

| 540 |

+

|

| 541 |

+

all_boxes.append(boxes_align)

|

| 542 |

+

batch_idx += 1

|

| 543 |

+

|

| 544 |

+

save_path = './model_store'

|

| 545 |

+

|

| 546 |

+

if not os.path.exists(save_path):

|

| 547 |

+

os.mkdir(save_path)

|

| 548 |

+

|

| 549 |

+

save_file = os.path.join(save_path, "detections_%d.pkl" % int(time.time()))

|

| 550 |

+

with open(save_file, 'wb') as f:

|

| 551 |

+

cPickle.dump(all_boxes, f, cPickle.HIGHEST_PROTOCOL)

|

| 552 |

+

|

| 553 |

+

|

| 554 |

+

gen_onet_sample_data(data_dir,anno_file,save_file,prefix_path)

|

| 555 |

+

|

| 556 |

+

|

| 557 |

+

|

| 558 |

+

def gen_onet_sample_data(data_dir,anno_file,det_boxs_file,prefix):

|

| 559 |

+

|

| 560 |

+

neg_save_dir = os.path.join(data_dir, "48/negative")

|

| 561 |

+

pos_save_dir = os.path.join(data_dir, "48/positive")

|

| 562 |

+

part_save_dir = os.path.join(data_dir, "48/part")

|

| 563 |

+

|

| 564 |

+

for dir_path in [neg_save_dir, pos_save_dir, part_save_dir]:

|

| 565 |

+

if not os.path.exists(dir_path):

|

| 566 |

+

os.makedirs(dir_path)

|

| 567 |

+

|

| 568 |

+

|

| 569 |

+

# load ground truth from annotation file

|

| 570 |

+

# format of each line: image/path [x1,y1,x2,y2] for each gt_box in this image

|

| 571 |

+

|

| 572 |

+

with open(anno_file, 'r') as f:

|

| 573 |

+

annotations = f.readlines()

|

| 574 |

+

|

| 575 |

+

image_size = 48

|

| 576 |

+

net = "onet"

|

| 577 |

+

|

| 578 |

+

im_idx_list = list()

|

| 579 |

+

gt_boxes_list = list()

|

| 580 |

+

num_of_images = len(annotations)

|

| 581 |

+

print("processing %d images in total" % num_of_images)

|

| 582 |

+

|

| 583 |

+

for annotation in annotations:

|

| 584 |

+

annotation = annotation.strip().split(' ')

|

| 585 |

+

im_idx = os.path.join(prefix,annotation[0])

|

| 586 |

+

|

| 587 |

+

boxes = list(map(float, annotation[1:]))

|

| 588 |

+

boxes = np.array(boxes, dtype=np.float32).reshape(-1, 4)

|

| 589 |

+

im_idx_list.append(im_idx)

|

| 590 |

+

gt_boxes_list.append(boxes)

|

| 591 |

+

|

| 592 |

+

save_path = './anno_store'

|

| 593 |

+

if not os.path.exists(save_path):

|

| 594 |

+

os.makedirs(save_path)

|

| 595 |

+

|

| 596 |

+

f1 = open(os.path.join(save_path, 'pos_%d.txt' % image_size), 'w')

|

| 597 |

+

f2 = open(os.path.join(save_path, 'neg_%d.txt' % image_size), 'w')

|

| 598 |