Commit

·

a63752b

1

Parent(s):

01be92c

Upload 18 files

- Language-Detection.zip +3 -0

- crop.py +25 -0

- data.txt +1 -0

- detect_language.py +68 -0

- display_lang.py +9 -0

- gui.py +210 -0

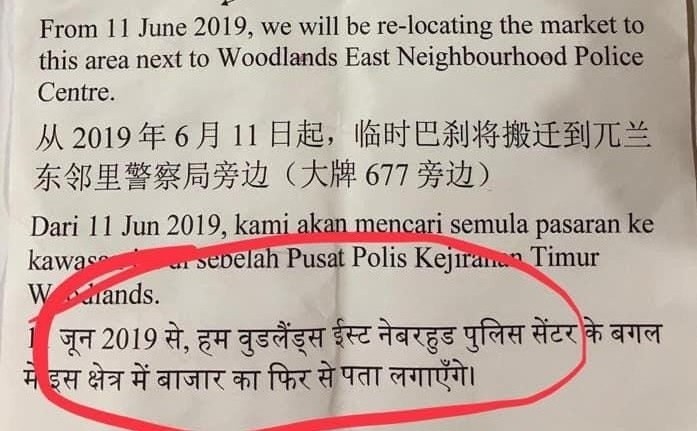

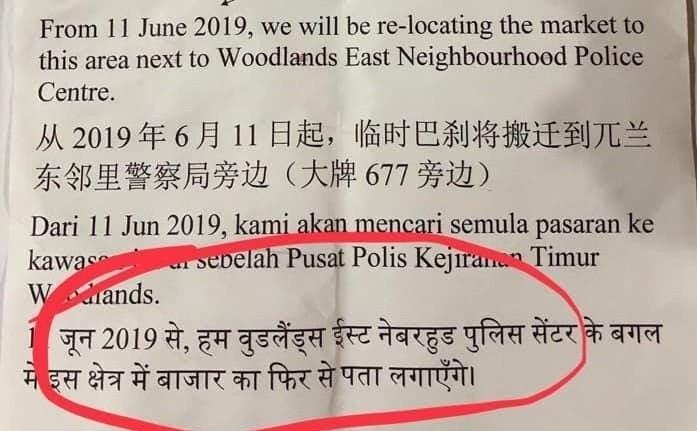

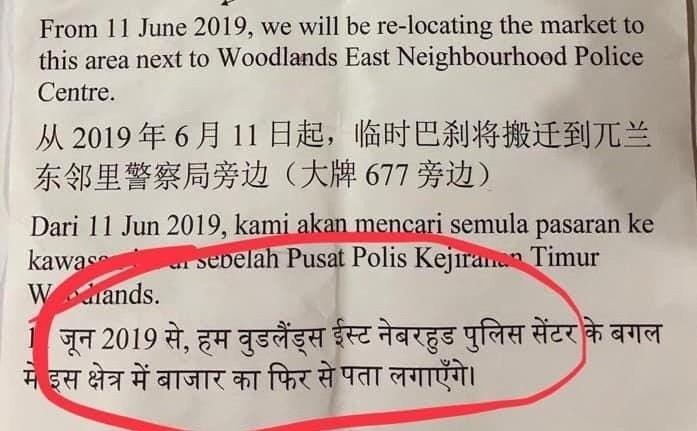

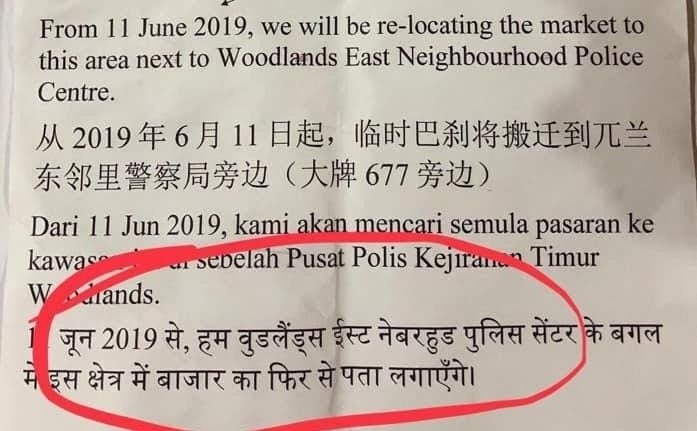

- image.jpg +0 -0

- image_resize.jpeg +0 -0

- image_resize.jpg +0 -0

- image_resize.png +0 -0

- language detection.desktop +10 -0

- language_detection.py +79 -0

- load_json.md +9 -0

- load_text.py +11 -0

- readme.md +110 -0

- setup.py +35 -0

- steps.md +18 -0

- text_extraction.py +445 -0

Language-Detection.zip

ADDED

@@ -0,0 +1,3 @@

+version https://git-lfs.github.com/spec/v1
+oid sha256:7be9adf360b7ace4df5881e58a60d6833d5b466499e1e940f013e65cf395d08b
+size 555074

crop.py

ADDED

@@ -0,0 +1,25 @@

+import os
+import argparse
+import cv2
+import matplotlib.pyplot as plt
+import numpy as np
+
+
+import subprocess
+import sys
+import time
+from PIL import Image
+
+
+
+def crop():
+    img = Image.open("image.jpg")  # open the source image
+
+    if img.height > 300 or img.width > 300:  # only shrink images larger than 300 px on a side
+        output_size = (900, 900)  # bounding box for the resized image
+        img.thumbnail(output_size)  # resizes in place and keeps the aspect ratio; it does not crop
+        ext = ['.jpeg', '.png', '.jpg']  # extensions to save the resized image under
+        for extension in ext:  # write one copy per extension
+            img.save(f"image_resize{extension}")
+
+crop()
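Despite its name, `crop()` relies on Pillow's `Image.thumbnail`, which shrinks an image to fit inside a bounding box while keeping its aspect ratio. The sizing arithmetic can be sketched in plain Python as below. `fit_within` is a hypothetical helper written for illustration, not a Pillow function, and Pillow's own rounding may differ by a pixel.

```python
def fit_within(width, height, max_width, max_height):
    """Return (w, h) scaled down to fit the box, preserving aspect ratio.

    Hypothetical helper that approximates the sizing done by
    Image.thumbnail; images already inside the box are left unchanged.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")
    # Never enlarge: cap the scale factor at 1.0.
    scale = min(max_width / width, max_height / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


if __name__ == "__main__":
    # A landscape image is limited by its width, a portrait one by its height.
    print(fit_within(1800, 1200, 900, 900))
    print(fit_within(600, 1800, 900, 900))
```

Note that the 300-pixel check in `crop()` only decides whether to resize at all; the output is bounded by the 900 x 900 box.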

data.txt

ADDED

@@ -0,0 +1 @@

+to convert a new file format to json we nee.

detect_language.py

ADDED

@@ -0,0 +1,68 @@

+#sound/audio modules
+from gtts import gTTS
+import gtts
+from playsound import playsound
+
+#import the operating system
+import os
+
+#other modules
+import argparse
+import numpy
+from textblob import TextBlob
+import extract
+import csv
+from textblob import TextBlob
+from langdetect import detect
+import pycld2 as cld2
+# Specifying the language for
+# detection
+
+#create a function for the process
+def setup_language_processing():
+
+    with open('processed/text_detected/text_detected.txt', "r") as f:
+        contents = f.read()
+        #print (contents)
+        the_lang = detect(contents)
+
+    with open('processed/text_detected/language_type.txt', 'w', newline="") as file:
+        isReliable, textBytesFound, details, vectors = cld2.detect(
+            contents, returnVectors=True
+        )
+        print(vectors)
+        file.write("The output of the languages detected in the given image are as follows;\n " + str(vectors))
+
+
+    # read the file the output text detected processed is in
+    with open('processed/text_detected/language_type.txt', "r") as f:
+        contents = f.read()
+        #print (contents)
+        #save the read file in a variable audio
+        audio = contents
+
+        #get the variable and start to process it for the sound output
+        tts = gtts.gTTS(audio)
+
+        # save the audio file
+        tts.save("processed/audio/audio_detected.mp3")
+
+        # play the audio file
+        playsound("processed/audio/audio_detected.mp3")
+
+        # in spanish
+        #tts = gtts.gTTS(audio, lang="es")
+        #tts.save("processed/audio/spanish_audio_detected.mp3")
+        #playsound("processed/audio/spanish_audio_detected.mp3")
+
+        # all available languages along with their IETF tag
+        #print(gtts.lang.tts_langs())
+
+        #if the_lang == 'en':
+        #    file.write("The output of the language detected is " +the_lang+"glish")
+        #isReliable, textBytesFound, details, vectors = cld2.detect(
+        #    the_lang, returnVectors=True
+        #)
+        #print(vectors)
+
+setup_language_processing()
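`setup_language_processing()` writes `str(vectors)` to the output file, so the text-to-speech step ends up reading a raw tuple aloud. A more listenable sentence could be built first, as in the sketch below. It assumes each vector has the shape `(byte_offset, byte_length, language_name, language_code)`, which is how pycld2 is understood to report spans when `returnVectors=True`; `summarize_vectors` is a hypothetical helper, not part of pycld2 or of this repository.

```python
def summarize_vectors(vectors):
    """Turn cld2-style vectors into one readable sentence.

    Assumes each vector is (byte_offset, byte_length, language_name,
    language_code). Shares are computed from byte lengths.
    """
    totals = {}
    for _offset, length, name, _code in vectors:
        totals[name] = totals.get(name, 0) + length
    grand_total = sum(totals.values())
    if grand_total == 0:
        return "No language could be detected."
    parts = [
        f"{name.title()} ({100 * length // grand_total}%)"
        for name, length in totals.items()
    ]
    return "Languages detected: " + ", ".join(parts) + "."


if __name__ == "__main__":
    # Sample spans made up for illustration, not real detector output.
    sample = ((0, 100, "FRENCH", "fr"), (100, 300, "ENGLISH", "en"), (400, 100, "FRENCH", "fr"))
    print(summarize_vectors(sample))
```

The returned string could then be passed to `gtts.gTTS` in place of the raw file contents.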

display_lang.py

ADDED

@@ -0,0 +1,9 @@

+import tkinter as tk
+from detect_language import setup_language_processing
+
+def window():
+    root = tk.Tk()  # create the main window explicitly
+    tk.Label(root, text="this is the language").pack()
+    root.mainloop()  # the event loop belongs to the Tk root, not to this function
+
+window()

gui.py

ADDED

@@ -0,0 +1,210 @@

+import tkinter
+import tkinter.messagebox
+import customtkinter
+#sound/audio modules
+from gtts import gTTS
+import gtts
+from playsound import playsound
+
+#import the operating system
+import os
+
+#other modules
+import argparse
+import numpy
+from textblob import TextBlob
+import extract
+import csv
+from textblob import TextBlob
+from langdetect import detect
+import pycld2 as cld2
+
+customtkinter.set_appearance_mode("System")  # Modes: "System" (standard), "Dark", "Light"
+customtkinter.set_default_color_theme("blue")  # Themes: "blue" (standard), "green", "dark-blue"
+
+
+class App(customtkinter.CTk):
+    def restart(self):
+        os.system('python3 gui.py')
+    def startup(self):
+        os.system('python3 setup.py')
+        self.sidebar_button_2 = customtkinter.CTkLabel(self.sidebar_frame, text="succeeded..\ncheck directories")
+        self.sidebar_button_2.grid(row=3, column=0, padx=20, pady=10)
+
+    def __init__(self):
+        super().__init__()
+
+        # configure window
+        self.title("Language detection using python")
+        self.geometry(f"{1100}x{580}")
+
+        # configure grid layout (4x4)
+        self.grid_columnconfigure(1, weight=1)
+        self.grid_columnconfigure((2, 3), weight=0)
+        self.grid_rowconfigure((0, 1, 2), weight=1)
+
+        # create sidebar frame with widgets
+        self.sidebar_frame = customtkinter.CTkFrame(self, width=140, corner_radius=0)
+        self.sidebar_frame.grid(row=0, column=0, rowspan=4, sticky="nsew")
+        self.sidebar_frame.grid_rowconfigure(4, weight=1)
+        self.logo_label = customtkinter.CTkLabel(self.sidebar_frame, text="Language Detection", font=customtkinter.CTkFont(size=20, weight="bold"))
+        self.logo_label.grid(row=0, column=0, padx=20, pady=(20, 10))
+        #self.sidebar_button_1 = customtkinter.CTkButton(self.sidebar_frame, command=self.sidebar_button_event)
+        #self.sidebar_button_1.grid(row=1, column=0, padx=20, pady=10)
+        self.sidebar_button_2 = customtkinter.CTkButton(self.sidebar_frame, text="Get Started Now", command=self.startup)
+        self.sidebar_button_2.grid(row=2, column=0, padx=20, pady=10)
+        #self.sidebar_button_3 = customtkinter.CTkButton(self.sidebar_frame, command=self.sidebar_button_event)
+        #self.sidebar_button_3.grid(row=3, column=0, padx=20, pady=10)
+        self.appearance_mode_label = customtkinter.CTkLabel(self.sidebar_frame, text="Appearance Mode:", anchor="w")
+        self.appearance_mode_label.grid(row=5, column=0, padx=20, pady=(10, 0))
+        self.appearance_mode_optionemenu = customtkinter.CTkOptionMenu(self.sidebar_frame, values=["Light", "Dark", "System"],
+                                                                       command=self.change_appearance_mode_event)
+        self.appearance_mode_optionemenu.grid(row=6, column=0, padx=20, pady=(10, 10))
+        self.scaling_label = customtkinter.CTkLabel(self.sidebar_frame, text="UI Scaling:", anchor="w")
+        self.scaling_label.grid(row=7, column=0, padx=20, pady=(10, 0))
+        self.scaling_optionemenu = customtkinter.CTkOptionMenu(self.sidebar_frame, values=["80%", "90%", "100%", "110%", "120%"],
+                                                               command=self.change_scaling_event)
+        self.scaling_optionemenu.grid(row=8, column=0, padx=20, pady=(10, 20))
+
+        # create main entry and button
+        self.entry = customtkinter.CTkEntry(self, placeholder_text="", state='disabled')
+        self.entry.grid(row=3, column=1, columnspan=2, padx=(20, 0), pady=(20, 20), sticky="nsew")
+
+        self.main_button_1 = customtkinter.CTkButton(master=self, text="EXIT", command=exit, fg_color="transparent", border_width=2, text_color=("gray10", "#DCE4EE"))
+        self.main_button_1.grid(row=3, column=3, padx=(20, 20), pady=(20, 20), sticky="nsew")
+
+        # create textbox
+        self.textbox = customtkinter.CTkTextbox(self, width=250)
+        self.textbox.grid(row=0, column=1, padx=(20, 0), pady=(20, 0), sticky="nsew")
+
+        # create tabview
+        self.tabview = customtkinter.CTkTabview(self, width=250)
+        self.tabview.grid(row=0, column=2, padx=(20, 0), pady=(20, 0), sticky="nsew")
+        self.tabview.add("Detect Language")
+        self.tabview.add("Help")
+        self.tabview.add("About Student")
+        self.tabview.tab("Detect Language").grid_columnconfigure(0, weight=1)  # configure grid of individual tabs
+        self.tabview.tab("Help").grid_columnconfigure(0, weight=1)
+        self.tabview.tab("About Student").grid_columnconfigure(0, weight=1)
+
+        #self.optionmenu_1 = customtkinter.CTkOptionMenu(self.tabview.tab("CTkTabview"), dynamic_resizing=False,
+        #                                                values=["Value 1", "Value 2", "Value Long Long Long"])
+        #self.optionmenu_1.grid(row=0, column=0, padx=20, pady=(20, 10))
+        #self.combobox_1 = customtkinter.CTkComboBox(self.tabview.tab("CTkTabview"),
+        #                                            values=["Value 1", "Value 2", "Value Long....."])
+        #self.combobox_1.grid(row=1, column=0, padx=20, pady=(10, 10))
+        self.string_input_button = customtkinter.CTkButton(self.tabview.tab("Detect Language"), text="Start detection",
+                                                           command=self.open_input_dialog_event)
+        self.string_input_button.grid(row=2, column=0, padx=20, pady=(10, 10))
+
+        self.label_tab_1 = customtkinter.CTkLabel(self.tabview.tab("Detect Language"), text="To detect a language\nby input, click\nthe button below.")
+        self.label_tab_1.grid(row=0, column=0, padx=20, pady=20)
+
+
+        self.label_tab_2 = customtkinter.CTkLabel(self.tabview.tab("Help"), text="Project structure: \n\nThe program gets the text \non the image given, process it with \npytesseract and some other modules \nwritten in the program to get \nthe language type, then \nto get the audio file output \nthe program makes a request\n to google to get \nsynthesis with module gtts. ")
+        self.label_tab_2.grid(row=0, column=0, padx=20, pady=20)
+        self.label_tab_2 = customtkinter.CTkLabel(self.tabview.tab("About Student"), text="NAME: Ogo-Oluwa Convenant Mobolaji \n\n MATRIC NO: HNDCOM000 \n DEPT: COMPUTER SCI \n SCHOOL: FCAH&PT \n SET: 2022/2023")
+        self.label_tab_2.grid(row=0, column=0, padx=20, pady=20)
+
+        # create display text for language detected
+        self.radiobutton_frame = customtkinter.CTkFrame(self)
+        self.radiobutton_frame.grid(row=0, column=3, padx=(20, 20), pady=(20, 0), sticky="nsew")
+
+        #read file language detected output
+        with open('processed/text_detected/language_input_type.txt', "r") as f:
+            contents = f.read()
+
+        self.label_tab_2 = customtkinter.CTkLabel(master=self.radiobutton_frame, text="Note: please reload \nthe program to check\n for the text you just\n entered. ")
+        self.label_tab_2.grid(row=0, column=2, padx=10, pady=10)
+
+        self.slider_progressbar_frame = customtkinter.CTkFrame(self, fg_color="transparent")
+        self.slider_progressbar_frame.grid(row=1, column=1, columnspan=2, padx=(20, 0), pady=(20, 0), sticky="nsew")
+        self.slider_progressbar_frame.grid_columnconfigure(0, weight=1)
+        self.slider_progressbar_frame.grid_rowconfigure(4, weight=1)
+        #self.seg_button_1 = customtkinter.CTkSegmentedButton(self.slider_progressbar_frame)
+        #self.seg_button_1.grid(row=0, column=0, padx=(20, 10), pady=(10, 10), sticky="ew")
+        self.progressbar_1 = customtkinter.CTkProgressBar(self.slider_progressbar_frame)
+        self.progressbar_1.grid(row=1, column=0, padx=(20, 10), pady=(10, 10), sticky="ew")
+        self.progressbar_2 = customtkinter.CTkProgressBar(self.slider_progressbar_frame)
+        self.progressbar_2.grid(row=2, column=0, padx=(20, 10), pady=(10, 10), sticky="ew")
+
+        #self.slider_1 = customtkinter.CTkSlider(self.slider_progressbar_frame, from_=0, to=1, number_of_steps=4)
+        #self.slider_1.grid(row=3, column=0, padx=(20, 10), pady=(10, 10), sticky="ew")
+
+        self.slider_1 = customtkinter.CTkLabel(self.slider_progressbar_frame, text=contents)
+        self.slider_1.grid(row=3, column=0, padx=(20, 10), pady=(10, 10), sticky="ew")
+
+        #button
+        self.slider_2 = customtkinter.CTkButton(self.slider_progressbar_frame, text="Reload program", command=lambda:exit(self.restart()))
+        self.slider_2.grid(row=4, column=0, padx=(20, 10), pady=(10, 10), sticky="ew")
+
+        #self.slider_2 = customtkinter.CTkButton(self.slider_progressbar_frame, orientation="vertical")
+        #self.slider_2.grid(row=0, column=1, rowspan=5, padx=(10, 10), pady=(10, 10), sticky="ns")
+        self.progressbar_3 = customtkinter.CTkProgressBar(self.slider_progressbar_frame, orientation="vertical")
+        self.progressbar_3.grid(row=0, column=2, rowspan=5, padx=(10, 20), pady=(10, 10), sticky="ns")
+
+        # set default values
+        self.appearance_mode_optionemenu.set("Dark")
+        self.scaling_optionemenu.set("100%")
+        #self.optionmenu_1.set("CTkOptionmenu")
+        #self.combobox_1.set("CTkComboBox")
+        self.slider_1.configure()
+        #self.slider_2.configure(command=reset)
+        self.progressbar_1.configure(mode="indeterminate")
+        self.progressbar_1.start()
+        self.textbox.insert("0.0", "project description\n\n" + "A language detection from an image using python3 and pytesseract.\n\nAIM: The aim of this project is to detect the type of language of text found in an image \n\nHow to run;\n*Place the image to be detected in the project folder directory and click 'get started now'." )
+        #self.seg_button_1.configure(values=["CTkSegmentedButton", "Value 2", "Value 3"])
+        #self.seg_button_1.set("Value 2")
+
+    def open_input_dialog_event(self):
+        dialog = customtkinter.CTkInputDialog(text="Input your text:", title="Language Detection")
+        dialogg = dialog.get_input()
+        print("CTkInputDialog:", dialogg)
+        # nothing to do if the dialog was cancelled or left empty
+        if not dialogg:
+            return
+        #save text in a file
+
+        with open('processed/text_detected/text_input_detected.txt', 'w', newline="") as file:
+            file.write(dialogg)
+
+        with open('processed/text_detected/text_input_detected.txt', "r") as f:
+            contents = f.read()
+
+        with open('processed/text_detected/language_input_type.txt', 'w', newline="") as file:
+            isReliable, textBytesFound, details, vectors = cld2.detect(
+                contents, returnVectors=True
+            )
+            print(vectors)
+            file.write("The output of the languages detected in the text entered are as follows;\n " + str(vectors))
+
+        # read the file the output text detected processed is in and make it an audio file
+        with open('processed/text_detected/language_input_type.txt', "r") as f:
+            contents = f.read()
+
+        #save the read file in a variable audio
+        audio = contents
+
+        #get the variable and start to process it for the sound output
+        tts = gtts.gTTS(audio)
+
+        # save the audio file
+        tts.save("processed/audio/audio_input_detected.mp3")
+
+        # play the audio file
+        playsound("processed/audio/audio_input_detected.mp3")
+
+    def change_appearance_mode_event(self, new_appearance_mode: str):
+        customtkinter.set_appearance_mode(new_appearance_mode)
+
+    def change_scaling_event(self, new_scaling: str):
+        new_scaling_float = int(new_scaling.replace("%", "")) / 100
+        customtkinter.set_widget_scaling(new_scaling_float)
+
+    def sidebar_button_event(self):
+        print("sidebar_button click")
+
+
+if __name__ == "__main__":
+    app = App()
+    app.mainloop()
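`App.__init__` opens `processed/text_detected/language_input_type.txt` unconditionally, so the window cannot start until that file exists. One way to tolerate a first run is sketched below. `read_text_or_default` is a hypothetical helper, not part of this repository or of customtkinter, and the fallback text is an assumption.

```python
from pathlib import Path


def read_text_or_default(path, default=""):
    """Return the file's text, or `default` when the file does not exist."""
    try:
        return Path(path).read_text(encoding="utf-8")
    except FileNotFoundError:
        return default


if __name__ == "__main__":
    # On a fresh checkout the file is absent, so the fallback text is shown.
    contents = read_text_or_default(
        "processed/text_detected/language_input_type.txt",
        default="No text has been analysed yet.",
    )
    print(contents)
```

The result could be passed straight to the `CTkLabel` that currently displays `contents`.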

image.jpg

ADDED

|

image_resize.jpeg

ADDED

|

image_resize.jpg

ADDED

|

image_resize.png

ADDED

|

language detection.desktop

ADDED

@@ -0,0 +1,10 @@

+[Desktop Entry]
+Version=1.0
+Type=Application
+Name=language detection
+Comment=Language Text Detection from an Image
+Exec=python3 gui.py
+Icon=clang
+Path=/home/xbase/Documents/covety_project_image_text_detection
+Terminal=true
+StartupNotify=false

language_detection.py

ADDED

@@ -0,0 +1,79 @@

+#import all the required libraries.
+import pandas as pd
+import numpy as np
+import re
+import seaborn as sns
+import matplotlib.pyplot as plt
+import warnings
+import csv
+warnings.simplefilter("ignore")
+import sklearn
+
+
+# Loading the dataset
+data = pd.read_csv("Language_Detection.csv")
+# value count for each language
+data["Language"].value_counts()
+
+# separating the independent and dependent features
+X = data["Text"]
+y = data["Language"]
+# converting categorical variables to numerical
+from sklearn.preprocessing import LabelEncoder
+le = LabelEncoder()
+y = le.fit_transform(y)
+
+# creating a list for appending the preprocessed text
+data_list = []
+# iterating through all the text
+for text in X:
+    # removing the symbols, newlines and numbers
+    text = re.sub(r'[!@#$(),\n"%^*?:;~`0-9]', ' ', text)
+    text = re.sub(r'[\[\]]', ' ', text)
+    # converting the text to lower case
+    text = text.lower()
+    # appending to data_list
+    data_list.append(text)
+
+# creating bag of words using countvectorizer
+from sklearn.feature_extraction.text import CountVectorizer
+cv = CountVectorizer()
+X = cv.fit_transform(data_list).toarray()
+X.shape
+
+#train test splitting
+from sklearn.model_selection import train_test_split
+x_train, x_test, y_train, y_test = train_test_split(X, y, test_size = 0.20)
+
+#model creation and prediction
+from sklearn.naive_bayes import MultinomialNB
+model = MultinomialNB()
+model.fit(x_train, y_train)
+
+# prediction
+y_pred = model.predict(x_test)
+
+# model evaluation
+from sklearn.metrics import accuracy_score, confusion_matrix, classification_report
+ac = accuracy_score(y_test, y_pred)
+cm = confusion_matrix(y_test, y_pred)
+
+print("Accuracy is :",ac)
+
+# visualising the confusion matrix
+plt.figure(figsize=(15,10))
+sns.heatmap(cm, annot = True)
+plt.show()
+
+# function for predicting language
+def predict(text):
+    x = cv.transform([text]).toarray()
+    lang = model.predict(x)
+    lang = le.inverse_transform(lang)
+    print("The language is in",lang[0])
+
+# English
+#predict("load the texts")
+
+#with open('processed/text_detected/language_detected.txt', 'w', newline="") as file:
+#    csv.writer(file, delimiter=" ").writerows(prediction)
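The bag-of-words and Naive Bayes steps, which this script delegates to scikit-learn's `CountVectorizer` and `MultinomialNB`, can be illustrated without any dependencies. The sketch below is a simplified stand-in, not scikit-learn's implementation: `tokenize` and `TinyNaiveBayes` are hypothetical names, the tokenizer keeps runs of letters only, smoothing is plain add-one, and the four training sentences are made up for illustration.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and return its runs of letters as tokens."""
    return re.findall(r"[^\W\d_]+", text.lower())


class TinyNaiveBayes:
    """Multinomial Naive Bayes over word counts with add-one smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.label_counts = Counter(labels)      # label -> number of documents
        self.vocabulary = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocabulary.update(tokens)
        return self

    def predict(self, text):
        # Words never seen in training carry no evidence, so skip them.
        tokens = [t for t in tokenize(text) if t in self.vocabulary]
        total_docs = sum(self.label_counts.values())
        vocab_size = len(self.vocabulary)
        best_label, best_score = None, -math.inf
        for label, doc_count in self.label_counts.items():
            counts = self.word_counts[label]
            denominator = sum(counts.values()) + vocab_size
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(doc_count / total_docs)
            for token in tokens:
                score += math.log((counts[token] + 1) / denominator)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


if __name__ == "__main__":
    texts = [
        "the cat sat on the mat",
        "where is the train station",
        "le chat est sur le tapis",
        "la gare est ici",
    ]
    labels = ["English", "English", "French", "French"]
    model = TinyNaiveBayes().fit(texts, labels)
    print(model.predict("the station"))
    print(model.predict("le chat"))
```

Working in log space avoids numeric underflow when many small probabilities are multiplied, which is also why library implementations operate on log probabilities.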

load_json.md

ADDED

@@ -0,0 +1,9 @@

+import json
+from
+
+with open('posts.json') as f:
+    posts_json = json.load(f)
+
+for post in posts_json:
+    post = Post(text=post['text'])
+    post.save()

load_text.py

ADDED

@@ -0,0 +1,11 @@

+import jpype
+import asposecells
+jpype.startJVM()
+from asposecells.api import Workbook
+workbook = Workbook("processed/text_detected/text_detected.txt")
+workbook.save("processed/load_json/output.json")
+jpype.shutdownJVM()
+
+with open('processed/text_detected/text_detected.txt') as f:
+    contents = f.read()  # call read(); the bare attribute only references the method
+
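`load_text.py` starts a JVM and loads the text file as an Aspose workbook in order to write JSON. If only the extracted text is needed in JSON form, the standard library may be enough, as in the sketch below. `text_to_json` and the `{"lines": [...]}` layout are assumptions made for illustration; they do not reproduce the structure that Aspose writes.

```python
import json


def text_to_json(text):
    """Serialize text as a JSON object with one entry per non-empty line."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    # ensure_ascii=False keeps non-Latin scripts readable in the output.
    return json.dumps({"lines": lines}, ensure_ascii=False, indent=2)


if __name__ == "__main__":
    # Sample text made up for illustration.
    print(text_to_json("Bonjour le monde\n\n  Hello world  \n"))
```

This keeps the script free of the Java dependency, at the cost of a simpler output format.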

readme.md

ADDED

@@ -0,0 +1,110 @@

| 1 |

+

Introduction

|

| 2 |

+

Every Machine Learning enthusiast has a dream of building/working on a cool project, isn’t it? Mere understandings of the theory aren’t enough, you need to work on projects, try to deploy them, and learn from them. Moreover, working on specific domains like NLP gives you wide opportunities and problem statements to explore. Through this article, I wish to introduce you to an amazing project, the Language Detection model using Natural Language Processing. This will take you through a real-world example of ML(application to say). So, let’s not wait anymore.

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

About the dataset

|

| 6 |

+

We are using the Language Detection dataset, which contains text details for 17 different languages.

|

| 7 |

+

|

| 8 |

+

Languages are:

|

| 9 |

+

|

| 10 |

+

* English

|

| 11 |

+

|

| 12 |

+

* Portuguese

|

| 13 |

+

* French

|

| 14 |

+

|

| 15 |

+

* Greek

|

| 16 |

+

|

| 17 |

+

* Dutch

|

| 18 |

+

|

| 19 |

+

* Spanish

|

| 20 |

+

|

| 21 |

+

* Japanese

|

| 22 |

+

|

| 23 |

+

* Russian

|

| 24 |

+

|

| 25 |

+

* Danish

|

| 26 |

+

|

| 27 |

+

* Italian

|

| 28 |

+

|

| 29 |

+

* Turkish

|

| 30 |

+

|

| 31 |

+

* Swedish

|

| 32 |

+

|

| 33 |

+

* Arabic

|

| 34 |

+

|

| 35 |

+

* Malayalam

|

| 36 |

+

|

| 37 |

+

* Hindi

|

| 38 |

+

|

| 39 |

+

* Tamil

|

| 40 |

+

|

| 41 |

+

* Telugu

|

| 42 |

+

|

| 43 |

+

* Hebrews

|

| 44 |

+

|

| 45 |

+

* Swahili

|

| 46 |

+

|

| 47 |

+

* France

|

| 48 |

+

|

| 49 |

+

* Korea

|

| 50 |

+

|

| 51 |

+

* Russia

|

| 52 |

+

|

| 53 |

+

* Thailand

|

| 54 |

+

|

| 55 |

+

* Japan

|

| 56 |

+

|

| 57 |

+

Using the text we have to create a model which will be able to predict the given language. This is a solution for many artificial intelligence applications and computational linguists. These kinds of prediction systems are widely used in electronic devices such as mobiles, laptops, etc for machine translation, and also on robots. It helps in tracking and identifying multilingual documents too. The domain of NLP is still a lively area of researchers.

|

| 58 |

+

|

| 59 |

+

Implementation

|

| 60 |

+

Importing libraries and dataset

|

| 61 |

+

#we will import all the required libraries

|

| 62 |

+

|

| 63 |

+

**modules used**

|

| 64 |

+

pandas

|

| 65 |

+

numpy

|

| 66 |

+

re

|

| 67 |

+

seaborn

|

| 68 |

+

matplotlib.pyplot

|

| 69 |

+

warnings

|

| 70 |

+

|

| 71 |

+

next, Separating Independent and Dependent features

|

| 72 |

+

Now we can separate the dependent and independent variables, here text data is the independent variable and the language name is the dependent variable.

|

| 73 |

+

|

| 74 |

+

Label Encoding

|

| 75 |

+

Our output variable, the name of languages is a categorical variable. For training the model we should have to convert it into a numerical form, so we are performing label encoding on that output variable. For this process, we are importing LabelEncoder from sklearn.

|

| 76 |

+

|

| 77 |

+

Text Preprocessing

|

| 78 |

+

This is a dataset created using scraping the Wikipedia, so it contains many unwanted symbols, numbers which will affect the quality of our model. So we should perform text preprocessing techniques.

|

| 79 |

+

|

| 80 |

+

Bag of Words

|

| 81 |

+

As we all know that, not only the output feature but also the input feature should be of the numerical form. So we are converting text into numerical form by creating a Bag of Words model using CountVectorizer.

|

| 82 |

+

|

| 83 |

+

Train Test Splitting

|

| 84 |

+

We preprocessed our input and output variable. The next step is to create the training set, for training the model and test set, for evaluating the test set. For this process, we are using a train test split.

|

| 85 |

+

|

| 86 |

+

Model Training and Prediction

|

| 87 |

+

Now comes the model creation part. We use the naive_bayes algorithm to create our model, then train it on the training set.

|

| 88 |

+

|

| 89 |

+

Next, predict the output for the test set.
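
Training and prediction can be sketched as follows. `MultinomialNB` is assumed here because it is the usual naive Bayes variant for word counts; the source only names the naive_bayes module. The tiny training set is invented for illustration.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Invented training sentences and labels, for illustration only.
train_texts = [
    "the cat is on the table",
    "where is the station",
    "le chat est sur la table",
    "où est la gare",
]
train_labels = ["English", "English", "French", "French"]

vectorizer = CountVectorizer()
X_train = vectorizer.fit_transform(train_texts)

model = MultinomialNB()
model.fit(X_train, train_labels)

# New text must go through the same fitted vectorizer before prediction.
X_new = vectorizer.transform(["the station", "la gare"])
predictions = model.predict(X_new)
print(list(predictions))
```

Note that `transform`, not `fit_transform`, is used on the new text so that its columns line up with the vocabulary learned during training.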

|

| 90 |

+

|

| 91 |

+

Model Evaluation

|

| 92 |

+

Now evaluate the model.

|

| 93 |

+

|

| 94 |

+

The accuracy of the model is 0.97, which indicates that the model performs well on the test set. Now let’s plot the confusion matrix using the seaborn heatmap.
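
As a sketch of the evaluation step, the snippet below computes accuracy and a confusion matrix with `sklearn.metrics`. The label arrays are made up, so the numbers do not reproduce the 0.97 reported above.

```python
from sklearn.metrics import accuracy_score, confusion_matrix

# Made-up true and predicted class ids for three languages (0, 1, 2).
y_test = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]

acc = accuracy_score(y_test, y_pred)  # fraction of correct predictions
cm = confusion_matrix(y_test, y_pred)  # rows: true class, columns: predicted class

print(round(acc, 3))
print(cm)
```

The matrix `cm` can then be passed to `seaborn.heatmap(cm, annot=True)` to draw the plot; off-diagonal cells show which languages get confused with each other.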

|

| 95 |

+

|

| 96 |

+

Predicting with some more data

|

| 97 |

+

Now let’s test the model prediction using text in different languages.

|

| 98 |

+

|

| 99 |

+

What is an Epoch?

|

| 100 |

+

In terms of artificial neural networks, an epoch refers to one cycle through the full training dataset. Usually, training a neural network takes more than a few epochs. In other words, if we feed a neural network the training data for more than one epoch in different patterns, we hope for better generalization when it is given a new "unseen" input (test data). An epoch is often confused with an iteration. The number of iterations is the number of batches, or steps through partitioned packets of the training data, needed to complete one epoch. Heuristically, one motivation for multiple epochs is that (especially for large but finite training sets) they give the network a chance to see earlier data again and readjust the model parameters, so that the model is not biased towards the last few data points seen during training.
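
The relationship between epochs and iterations is simple arithmetic. The sketch below uses two hypothetical helper functions and example numbers (1000 samples, batch size 32, 5 epochs) that are not taken from this project.

```python
import math


def iterations_per_epoch(num_samples: int, batch_size: int) -> int:
    """Batches needed to pass over the whole training set once."""
    # The last batch may be smaller, so round up.
    return math.ceil(num_samples / batch_size)


def total_iterations(num_samples: int, batch_size: int, epochs: int) -> int:
    """Total parameter-update steps across all epochs."""
    return epochs * iterations_per_epoch(num_samples, batch_size)


print(iterations_per_epoch(1000, 32))
print(total_iterations(1000, 32, 5))
```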

|

| 101 |

+

|

| 102 |

+

|

| 103 |

+

Be aware that there is no guarantee a network will converge or "get better" by letting it learn the data for multiple epochs. It is an art in machine learning to decide the number of epochs sufficient for a network.

|

| 104 |

+

|

| 105 |

+

In parallel, when we apply this to other areas of machine learning such as reinforcement learning, we see that an agent may not take the same route to complete the same task. This is because the agent is learning which decisions to make and trying to understand the consequences of such action(s). With a neural network, the goal of the model is generally to classify or generate material which is right or wrong. Thus, an epoch for an experimental agent performing many actions for a single task may vary from an epoch for an agent trying to perform a single action for many tasks of the same nature. In reinforcement learning terminology, this is more typically referred to as an episode.

|

| 106 |

+

|

| 107 |

+

Some Statistics

|

| 108 |

+

Given the complexity and variability of data in real world problems, it may take hundreds to thousands of epochs to get some sensible accuracy on test data. Also, the term epoch varies in definition according to the problem at hand.

|

| 109 |

+

Example

|

| 110 |

+

As a specific example of an epoch in reinforcement learning, let's consider traveling from point A to B in a city. Now, we can take multiple routes to reach B and the task is to drive from A to B a hundred times. Consider an epoch to be any route taken from a set of available routes. An iteration on the other hand describes the specifics of the route like which turns, how many stops, etc. In the reinforcement learning terminology, an iteration is often called an action.

|

setup.py

ADDED

|

@@ -0,0 +1,35 @@

|

| 1 |

+

import os

|

| 2 |

+

from PIL import Image

|

| 3 |

+

|

| 4 |

+

def system_run():

|

| 5 |

+

|

| 6 |

+

caption = """

|

| 7 |

+

__ / || \

|

| 8 |

+

| | / / \ \

|

| 9 |

+

| | / / \ \

|

| 10 |

+

| | / /______\ \

|

| 11 |

+

| | / / \ \

|

| 12 |

+

| | / / \ \

|

| 13 |

+

| |_________ / / \ \

|

| 14 |

+

|____________|/ / \ \

|

| 15 |

+

|

| 16 |

+

"""

|

| 17 |

+

|

| 18 |

+

img = Image.open("image.jpg") #open the input image

|

| 19 |

+

|

| 20 |

+

if img.height > 300 or img.width > 300: #only resize when either dimension exceeds 300 pixels

|

| 21 |

+

output_size = (900, 900) #maximum size; thumbnail() shrinks the image to fit within it, keeping the aspect ratio

|

| 22 |

+

img.thumbnail(output_size)

|

| 23 |

+

ext = ['.jpeg', '.png', '.jpg'] #create list of extensions to save as

|

| 24 |

+

for extension in ext: #loop over the list

|

| 25 |

+

img.save(f"image_resize{extension}")

|

| 26 |

+

|

| 27 |

+

os.system('python3 text_extraction.py')

|

| 28 |

+

os.system('python3 detect_language.py')

|

| 29 |

+

|

| 30 |

+

#os.system('python3 load_text.py')

|

| 31 |

+

#os.system('python3 display_lang.py')

|

| 32 |

+

|

| 33 |

+

# os.system('python3 language_detection.py')

|

| 34 |

+

|

| 35 |

+

system_run()

|

steps.md

ADDED

|

@@ -0,0 +1,18 @@

|

| 1 |

+

**Startup**

|

| 2 |

+

* Click the "language detection" icon to start the program.

|

| 3 |

+

|

| 4 |

+

**Steps**

|

| 5 |

+

1. To detect a language from typed input, click the "start detection" button and enter your text, then click the "ok" button and reload the program; the output appears in the bottom bar of the GUI. For the file output and audio output: in the home folder directory, click on 'PROCESSED', followed by the 'AUDIO' folder, and play 'audio_input_detected.mp3' in that folder.

|

| 6 |

+

'*processed/audio/audio_input_detected.mp3*'

|

| 7 |

+

|

| 8 |

+

* For the detected input text: in the home folder directory, click on 'PROCESSED', followed by the 'TEXT_DETECTED' folder, and open the 'text_input_detected.txt' file.

|

| 9 |

+

'*processed/text_detected/text_input_detected.txt*'

|

| 10 |

+

|

| 11 |

+

* For the detected language type: in the home folder directory, click on 'PROCESSED', followed by the 'TEXT_DETECTED' folder, and open the 'language_input_type.txt' file.

|

| 12 |

+

'*processed/text_detected/language_input_type.txt*'

|

| 13 |

+

|

| 14 |

+

2. To detect a language from an image, place the image in the home working directory, then click the program icon to start the program. Once the program has started, click the 'get started now' button in the top left corner of the GUI and wait a few minutes for the image to be processed. When processing finishes, a message reading "succeeded /n check directories" appears. To see the output: in the home folder directory, click on 'PROCESSED', followed by the 'TEXT_DETECTED' folder, and open the 'language_type.txt' file.

|

| 15 |

+

'*processed/text_detected/language_type.txt*'

|

| 16 |

+

|

| 17 |

+

* For the audio output: in the home folder directory, click on 'PROCESSED', followed by the 'AUDIO' folder, and open the 'audio_detected.mp3' file.

|

| 18 |

+

'*processed/audio/audio_detected.mp3*'

|

text_extraction.py

ADDED

|

@@ -0,0 +1,445 @@

|

| 1 |

+

|

| 2 |

+

#importing of modules to be used to run the program

|

| 3 |

+

import extract

|

| 4 |

+

import csv

|

| 5 |

+

import os

|

| 6 |

+

import csv

|

| 7 |

+

import cv2

|

| 8 |

+

import logging

|

| 9 |

+

import pytesseract

|

| 10 |

+

import pandas as pd

|

| 11 |

+

import numpy as np

|

| 12 |

+

from scipy.stats import mode

|

| 13 |

+

from PIL import Image

|

| 14 |

+

import argparse

|

| 15 |

+

import os

|

| 16 |

+

import random

|

| 17 |

+

|

| 18 |

+

#from google.colab.patches import cv2_imshow

|

| 19 |

+

|

| 20 |

+

#import detectron2

|

| 21 |

+

#from detectron2.utils.logger import setup_logger

|

| 22 |

+

#setup_logger()

|

| 23 |

+

#from detectron2 import model_zoo

|

| 24 |

+

#from detectron2.engine import DefaultPredictor

|

| 25 |

+

#from detectron2.config import get_cfg

|

| 26 |

+

#from detectron2.utils.visualizer import Visualizer

|

| 27 |

+

|

| 28 |

+

import logging

|

| 29 |

+

|

| 30 |

+

import cv2

|

| 31 |

+

import numpy as np

|

| 32 |

+

from scipy.stats import mode

|

| 33 |

+

|

| 34 |

+

#import and unzip the dataset

|

| 35 |

+

#!ls

|

| 36 |

+

#!unzip "Text_Detection_Dataset_COCO_Format.zip"

|

| 37 |

+

|

| 38 |

+

|

| 39 |

+

#preparing the imported and extracted dataset with json

|

| 40 |

+

|

| 41 |

+

#import json

|

| 42 |

+

#from detectron2.structures import BoxMode

|

| 43 |

+

#def get_board_dicts(imgdir):

|

| 44 |

+

# json_file = imgdir+"/dataset.json"

|

| 45 |

+

# with open(json_file) as f:

|

| 46 |

+

# dataset_dicts = json.load(f)

|

| 47 |

+

# for i in dataset_dicts:

|

| 48 |

+

# filename = i["file_name"]

|

| 49 |

+

# i["file_name"] = imgdir+"/"+filename

|

| 50 |

+

# for j in i["annotations"]:

|

| 51 |

+

# j["bbox_mode"] = BoxMode.XYWH_ABS

|

| 52 |

+

# j["category_id"] = int(j["category_id"])

|

| 53 |

+

# return dataset_dicts

|

| 54 |

+

|

| 55 |

+

|

| 56 |

+

#image pre-processing and pattern matching.

|

| 57 |

+

|

| 58 |

+

#This python module can perform the following functions:

|

| 59 |

+

|

| 60 |

+

#Binarization - method binary_img(img) performs this function

|

| 61 |

+

#Skew correction - method skew_correction(img) performs this function

|

| 62 |

+

#Need to introduce machine learning of some sort to make the skew correction method run faster :( Or... a simple fix would be to resize the image first, and then apply the skew correction method! That will probably take less time...

|

| 63 |

+

|

| 64 |

+

|

| 65 |

+

|

| 66 |

+

|

| 67 |

+

logging.basicConfig(

|

| 68 |

+

level=logging.DEBUG,

|

| 69 |

+

format="%(levelname)s: %(asctime)s {%(filename)s:%(lineno)d}: %(message)s "

|

| 70 |

+

)

|

| 71 |

+

|

| 72 |

+

kernel = np.ones((5, 5), np.uint8)

|

| 73 |

+

clahe = cv2.createCLAHE(clipLimit=2.0, tileGridSize=(8, 8))

|

| 74 |

+

img = cv2.imread('image_resize.png') # read image file to be processed

|

| 75 |

+

|

| 76 |

+

"""

|

| 77 |

+

Method to binarize an image

|

| 78 |

+

Input: Grayscale image

|

| 79 |

+

Output: Binary image

|

| 80 |

+