diff --git a/.dockerignore b/.dockerignore

new file mode 100644

index 0000000000000000000000000000000000000000..d600b6c76dd93f7b2472160d42b2797cae50c8e5

--- /dev/null

+++ b/.dockerignore

@@ -0,0 +1,25 @@

+# Logs

+logs

+*.log

+npm-debug.log*

+yarn-debug.log*

+yarn-error.log*

+pnpm-debug.log*

+lerna-debug.log*

+

+node_modules

+dist

+dist-ssr

+*.local

+

+# Editor directories and files

+.vscode/*

+!.vscode/extensions.json

+.idea

+.DS_Store

+*.suo

+*.ntvs*

+*.njsproj

+*.sln

+*.sw?

+

diff --git a/.editorconfig b/.editorconfig

new file mode 100644

index 0000000000000000000000000000000000000000..a78447ebf932f1bb3a5b124b472bea8b3a86f80f

--- /dev/null

+++ b/.editorconfig

@@ -0,0 +1,7 @@

+[*]

+charset = utf-8

+insert_final_newline = true

+end_of_line = lf

+indent_style = space

+indent_size = 2

+max_line_length = 80

\ No newline at end of file

diff --git a/.env.example b/.env.example

new file mode 100644

index 0000000000000000000000000000000000000000..0ba5371bc99189fd2af757ea0c8a7f33e33abe1c

--- /dev/null

+++ b/.env.example

@@ -0,0 +1,33 @@

+# A comma-separated list of access keys. Example: `ACCESS_KEYS="ABC123,JUD71F,HUWE3"`. Leave blank for unrestricted access.

+ACCESS_KEYS=""

+

+# The timeout in hours for access key validation. Set to 0 to require validation on every page load.

+ACCESS_KEY_TIMEOUT_HOURS="24"

+

+# The default model ID for WebLLM with F16 shaders.

+WEBLLM_DEFAULT_F16_MODEL_ID="Llama-3.2-1B-Instruct-q4f16_1-MLC"

+

+# The default model ID for WebLLM with F32 shaders.

+WEBLLM_DEFAULT_F32_MODEL_ID="Llama-3.2-1B-Instruct-q4f32_1-MLC"

+

+# The default model ID for Wllama.

+WLLAMA_DEFAULT_MODEL_ID="llama-3.2-1b"

+

+# The base URL for the internal OpenAI compatible API. Example: `INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL="https://api.openai.com/v1"`. Leave blank to disable internal OpenAI compatible API.

+INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL=""

+

+# The access key for the internal OpenAI compatible API.

+INTERNAL_OPENAI_COMPATIBLE_API_KEY=""

+

+# The model for the internal OpenAI compatible API.

+INTERNAL_OPENAI_COMPATIBLE_API_MODEL=""

+

+# The name of the internal OpenAI compatible API, displayed in the UI.

+INTERNAL_OPENAI_COMPATIBLE_API_NAME="Internal API"

+

+# The type of inference to use by default. The possible values are:

+# "browser" -> In the browser (Private)

+# "openai" -> Remote Server (API)

+# "horde" -> AI Horde (Pre-configured)

+# "internal" -> $INTERNAL_OPENAI_COMPATIBLE_API_NAME

+DEFAULT_INFERENCE_TYPE="browser"
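The two access-key variables above are described only in comments. The TypeScript below is a minimal sketch of those documented semantics, assuming keys are compared after trimming surrounding whitespace. The function names (`parseAccessKeys`, `isAccessRestricted`, `isValidationStillFresh`) are hypothetical and are not taken from MiniSearch's server code.

```typescript
// Hypothetical helpers illustrating the documented semantics of
// ACCESS_KEYS and ACCESS_KEY_TIMEOUT_HOURS; not MiniSearch's actual code.

/** Splits a comma-separated ACCESS_KEYS value into trimmed, non-empty keys. */
function parseAccessKeys(raw: string | undefined): string[] {
  return (raw ?? "")
    .split(",")
    .map((key) => key.trim())
    .filter((key) => key.length > 0);
}

/** An empty key list means access is unrestricted ("Leave blank"). */
function isAccessRestricted(keys: string[]): boolean {
  return keys.length > 0;
}

/**
 * Returns true while a previous successful validation is still valid.
 * A timeout of 0 hours forces re-validation on every page load.
 */
function isValidationStillFresh(
  lastValidatedAtMs: number,
  timeoutHours: number,
  nowMs: number = Date.now(),
): boolean {
  if (timeoutHours <= 0) return false;
  const timeoutMs = timeoutHours * 60 * 60 * 1000;
  return nowMs - lastValidatedAtMs < timeoutMs;
}
```

With the example from the comment, `parseAccessKeys("ABC123,JUD71F,HUWE3")` yields three keys, while an empty string yields none and leaves access unrestricted.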

diff --git a/.github/workflows/ai-review.yml b/.github/workflows/ai-review.yml

new file mode 100644

index 0000000000000000000000000000000000000000..a73a29721269ebf768dbcc345bcdc83524ad61c5

--- /dev/null

+++ b/.github/workflows/ai-review.yml

@@ -0,0 +1,136 @@

+name: Review Pull Request with AI

+

+on:

+ pull_request:

+ types: [opened, synchronize, reopened]

+ branches: ["main"]

+

+concurrency:

+ group: ${{ github.workflow }}-${{ github.event.pull_request.number || github.ref }}

+ cancel-in-progress: true

+

+jobs:

+ ai-review:

+ if: ${{ !contains(github.event.pull_request.labels.*.name, 'skip-ai-review') }}

+ continue-on-error: true

+ runs-on: ubuntu-latest

+ name: AI Review

+ permissions:

+ pull-requests: write

+ contents: read

+ timeout-minutes: 30

+ steps:

+ - name: Checkout Repository

+ uses: actions/checkout@v4

+

+ - name: Create temporary directory

+ run: mkdir -p /tmp/pr_review

+

+ - name: Process PR description

+ id: process_pr

+ env:

+ PR_BODY: ${{ github.event.pull_request.body }}

+ run: |

+ # The body arrives via the environment, never as inline script text, so it cannot inject shell commands.

+ PROCESSED_BODY=$(printf '%s\n' "$PR_BODY" | sed -E 's/\[([^]]*)\]\([^)]*\)/\1/g')

+ echo "$PROCESSED_BODY" > /tmp/pr_review/processed_body.txt

+

+ - name: Fetch branches and output the diff

+ run: |

+ git fetch origin main:main

+ git fetch origin pull/${{ github.event.pull_request.number }}/head:pr-branch

+ git diff main..pr-branch > /tmp/pr_review/diff.txt

+

+ - name: Prepare review request

+ id: prepare_request

+ env:

+ PR_TITLE: ${{ github.event.pull_request.title }}

+ run: |

+ DIFF_CONTENT=$(cat /tmp/pr_review/diff.txt)

+ PROCESSED_BODY=$(cat /tmp/pr_review/processed_body.txt)

+

+ jq -n \

+ --arg model "${{ vars.OPENROUTER_MODEL }}" \

+ --arg http_referer "${{ github.event.repository.html_url }}" \

+ --arg title "${{ github.event.repository.name }}" \

+ --arg system "You are an experienced developer reviewing a Pull Request. You focus only on what matters and provide concise, actionable feedback.

+

+ Review Context:

+ Repository Name: \"${{ github.event.repository.name }}\"

+ Repository Description: \"${{ github.event.repository.description }}\"

+ Branch: \"${{ github.event.pull_request.head.ref }}\"

+ PR Title: \"$PR_TITLE\"

+

+ Guidelines:

+ 1. Only comment on issues that:

+ - Could cause bugs or security issues

+ - Significantly impact performance

+ - Make the code harder to maintain

+ - Violate critical best practices

+

+ 2. For each issue:

+ - Point to the specific line/file

+ - Explain why it's a problem

+ - Suggest a concrete fix

+

+ 3. Praise exceptional solutions briefly, only if truly innovative

+

+ 4. Skip commenting on:

+ - Minor style issues

+ - Obvious changes

+ - Working code that could be marginally improved

+ - Things that are just personal preference

+

+ Remember:

+ Less is more. If the code is good and working, just say so, with a short message." \

+ --arg user "This is the description of the pull request:

+ \`\`\`markdown

+ $PROCESSED_BODY

+ \`\`\`

+

+ And here is the diff of the changes, for you to review:

+ \`\`\`diff

+ $DIFF_CONTENT

+ \`\`\`" \

+ '{

+ "model": $model,

+ "messages": [

+ {"role": "system", "content": $system},

+ {"role": "user", "content": $user}

+ ],

+ "temperature": 0.6,

+ "top_p": 0.8,

+ "min_p": 0.1,

+ "extra_headers": {

+ "HTTP-Referer": $http_referer,

+ "X-Title": $title

+ }

+ }' > /tmp/pr_review/request.json

+

+ - name: Get AI Review

+ id: ai_review

+ run: |

+ RESPONSE=$(curl -s https://openrouter.ai/api/v1/chat/completions \

+ -H "Content-Type: application/json" \

+ -H "Authorization: Bearer ${{ secrets.OPENROUTER_API_KEY }}" \

+ -d @/tmp/pr_review/request.json)

+

+ echo "### Review" > /tmp/pr_review/response.txt

+ echo "" >> /tmp/pr_review/response.txt

+ echo "$RESPONSE" | jq -r '.choices[0].message.content' >> /tmp/pr_review/response.txt

+

+ - name: Find Comment

+ uses: peter-evans/find-comment@v3

+ id: find_comment

+ with:

+ issue-number: ${{ github.event.pull_request.number }}

+ comment-author: "github-actions[bot]"

+ body-includes: "### Review"

+

+ - name: Post or Update PR Review

+ uses: peter-evans/create-or-update-comment@v4

+ with:

+ comment-id: ${{ steps.find_comment.outputs.comment-id }}

+ issue-number: ${{ github.event.pull_request.number }}

+ body-path: /tmp/pr_review/response.txt

+ edit-mode: replace
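The review workflow strips Markdown links from the PR body with `sed` and reads the model's answer with `jq -r '.choices[0].message.content'`. As a rough TypeScript illustration of those two transformations (the function names are hypothetical, and the fallback text is an assumption; `jq -r` would print the literal `null` instead):

```typescript
// Hypothetical TypeScript equivalent of the link-stripping step:
// replaces Markdown links "[text](url)" with just "text".
// The negated character classes keep each match inside a single link,
// which a non-greedy ".*?" cannot guarantee in POSIX ERE (as used by sed -E).
function stripMarkdownLinks(markdown: string): string {
  return markdown.replace(/\[([^\]]*)\]\([^)]*\)/g, "$1");
}

// Shape of the fields read by `jq -r '.choices[0].message.content'`.
interface ChatCompletionResponse {
  choices?: { message?: { content?: string | null } }[];
}

// Builds the comment body posted to the PR, with a fallback when the
// API response carries no message content (e.g. an error payload).
function formatReviewComment(response: ChatCompletionResponse): string {
  const content = response.choices?.[0]?.message?.content;
  return `### Review\n\n${content ?? "_No review content was returned._"}`;
}
```

Keeping the `### Review` heading matters because the `find-comment` step locates the previous bot comment by that exact string.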

diff --git a/.github/workflows/deploy.yml b/.github/workflows/deploy.yml

new file mode 100644

index 0000000000000000000000000000000000000000..945fa830dd3258e5313257f6ed90ca5916bd5eea

--- /dev/null

+++ b/.github/workflows/deploy.yml

@@ -0,0 +1,54 @@

+name: Deploy

+

+on:

+ workflow_dispatch:

+

+jobs:

+ build-and-push-image:

+ name: Publish Docker image to GitHub Packages

+ runs-on: ubuntu-latest

+ env:

+ REGISTRY: ghcr.io

+ IMAGE_NAME: ${{ github.repository }}

+ permissions:

+ contents: read

+ packages: write

+ steps:

+ - name: Checkout repository

+ uses: actions/checkout@v4

+ - name: Log in to the Container registry

+ uses: docker/login-action@v3

+ with:

+ registry: ${{ env.REGISTRY }}

+ username: ${{ github.actor }}

+ password: ${{ secrets.GITHUB_TOKEN }}

+ - name: Extract metadata (tags, labels) for Docker

+ id: meta

+ uses: docker/metadata-action@v5

+ with:

+ images: ${{ env.REGISTRY }}/${{ env.IMAGE_NAME }}

+ - name: Set up Docker Buildx

+ uses: docker/setup-buildx-action@v3

+ - name: Build and push Docker image

+ uses: docker/build-push-action@v6

+ with:

+ context: .

+ push: true

+ tags: ${{ steps.meta.outputs.tags }}

+ labels: ${{ steps.meta.outputs.labels }}

+ platforms: linux/amd64,linux/arm64

+

+ sync-to-hf:

+ name: Sync to HuggingFace Spaces

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v4

+ with:

+ lfs: true

+ - uses: JacobLinCool/huggingface-sync@v1

+ with:

+ github: ${{ secrets.GITHUB_TOKEN }}

+ user: ${{ vars.HF_SPACE_OWNER }}

+ space: ${{ vars.HF_SPACE_NAME }}

+ token: ${{ secrets.HF_TOKEN }}

+ configuration: "hf-space-config.yml"

diff --git a/.github/workflows/on-pull-request-to-main.yml b/.github/workflows/on-pull-request-to-main.yml

new file mode 100644

index 0000000000000000000000000000000000000000..6eae98e615c1c1f2c899a9a5f1d785dd3883ff62

--- /dev/null

+++ b/.github/workflows/on-pull-request-to-main.yml

@@ -0,0 +1,9 @@

+name: On Pull Request To Main

+on:

+ pull_request:

+ types: [opened, synchronize, reopened]

+ branches: ["main"]

+jobs:

+ test-lint-ping:

+ if: ${{ !contains(github.event.pull_request.labels.*.name, 'skip-test-lint-ping') }}

+ uses: ./.github/workflows/reusable-test-lint-ping.yml

diff --git a/.github/workflows/on-push-to-main.yml b/.github/workflows/on-push-to-main.yml

new file mode 100644

index 0000000000000000000000000000000000000000..8ce693215c4351bab8b54ccac302345e1202ba03

--- /dev/null

+++ b/.github/workflows/on-push-to-main.yml

@@ -0,0 +1,7 @@

+name: On Push To Main

+on:

+ push:

+ branches: ["main"]

+jobs:

+ test-lint-ping:

+ uses: ./.github/workflows/reusable-test-lint-ping.yml

diff --git a/.github/workflows/reusable-test-lint-ping.yml b/.github/workflows/reusable-test-lint-ping.yml

new file mode 100644

index 0000000000000000000000000000000000000000..63c8e7c09f4a8598702dd4a30cd4a920d770043d

--- /dev/null

+++ b/.github/workflows/reusable-test-lint-ping.yml

@@ -0,0 +1,25 @@

+on:

+ workflow_call:

+jobs:

+ check-code-quality:

+ name: Check Code Quality

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v4

+ - uses: actions/setup-node@v4

+ with:

+ node-version: 20

+ cache: "npm"

+ - run: npm ci --ignore-scripts

+ - run: npm test

+ - run: npm run lint

+ check-docker-container:

+ needs: [check-code-quality]

+ name: Check Docker Container

+ runs-on: ubuntu-latest

+ steps:

+ - uses: actions/checkout@v4

+ - run: docker compose -f docker-compose.production.yml up -d

+ - name: Check if main page is available

+ run: until curl -s -o /dev/null -w "%{http_code}" localhost:7860 | grep 200; do sleep 1; done

+ - run: docker compose -f docker-compose.production.yml down
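The container check above polls `localhost:7860` with an `until curl ...` loop that has no upper bound, so a container that never becomes ready keeps the job running until GitHub's default job timeout. A bounded variant could look like the sketch below; `waitForHttpOk`, the `FetchLike` type, and the default timings are hypothetical. In the workflow itself, a `timeout-minutes` key on the job would be the simpler guard.

```typescript
// Hypothetical sketch: poll a URL until it answers HTTP 200,
// giving up after a deadline instead of looping forever.
type FetchLike = (url: string) => Promise<{ status: number }>;

async function waitForHttpOk(
  url: string,
  fetchFn: FetchLike,
  timeoutMs = 120_000,
  intervalMs = 1_000,
): Promise<boolean> {
  const deadline = Date.now() + timeoutMs;
  while (Date.now() < deadline) {
    try {
      const { status } = await fetchFn(url);
      if (status === 200) return true;
    } catch {
      // Connection refused while the container is still starting; retry.
    }
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
  return false;
}
```

In Node 18 or later, the global `fetch` satisfies `FetchLike`, so `await waitForHttpOk("http://localhost:7860", fetch)` would work as-is.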

diff --git a/.github/workflows/update-searxng-docker-image.yml b/.github/workflows/update-searxng-docker-image.yml

new file mode 100644

index 0000000000000000000000000000000000000000..50261a76e8453bc473fa6e487d81a45cebe7cd1a

--- /dev/null

+++ b/.github/workflows/update-searxng-docker-image.yml

@@ -0,0 +1,44 @@

+name: Update SearXNG Docker Image

+

+on:

+ schedule:

+ - cron: "0 14 * * *"

+ workflow_dispatch:

+

+permissions:

+ contents: write

+

+jobs:

+ update-searxng-image:

+ runs-on: ubuntu-latest

+ steps:

+ - name: Checkout code

+ uses: actions/checkout@v4

+ with:

+ token: ${{ secrets.GITHUB_TOKEN }}

+

+ - name: Get latest SearXNG image tag

+ id: get_latest_tag

+ run: |

+ LATEST_TAG=$(curl -s "https://hub.docker.com/v2/repositories/searxng/searxng/tags/?page_size=3&ordering=last_updated" | jq -r '.results[] | select(.name != "latest-build-cache" and .name != "latest") | .name' | head -n 1)

+ if [ -z "$LATEST_TAG" ]; then echo "Could not determine the latest SearXNG tag" >&2; exit 1; fi

+ echo "LATEST_TAG=${LATEST_TAG}" >> "$GITHUB_OUTPUT"

+

+ - name: Update Dockerfile

+ run: |

+ sed -i 's|FROM searxng/searxng:.*|FROM searxng/searxng:${{ steps.get_latest_tag.outputs.LATEST_TAG }}|' Dockerfile

+

+ - name: Check for changes

+ id: git_status

+ run: |

+ git diff --exit-code || echo "changes=true" >> $GITHUB_OUTPUT

+

+ - name: Commit and push if changed

+ if: steps.git_status.outputs.changes == 'true'

+ run: |

+ git config --local user.email "github-actions[bot]@users.noreply.github.com"

+ git config --local user.name "github-actions[bot]"

+ git add Dockerfile

+ git commit -m "Update SearXNG Docker image to tag ${{ steps.get_latest_tag.outputs.LATEST_TAG }}"

+ git push

+ env:

+ GITHUB_TOKEN: ${{ secrets.GITHUB_TOKEN }}
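The update workflow combines a `jq` filter (first tag that is neither `latest` nor `latest-build-cache`) with a `sed` rewrite of the `FROM searxng/searxng:` line. The same logic, sketched in TypeScript with hypothetical function names and, as a precaution, a refusal to write an empty tag:

```typescript
// Hypothetical sketch of the tag-selection and Dockerfile-rewrite logic.
interface DockerHubTagsPage {
  results: { name: string }[];
}

const IGNORED_TAGS = new Set(["latest", "latest-build-cache"]);

// Mirrors the jq filter: the first tag (in last_updated order) not ignored.
function pickLatestTag(page: DockerHubTagsPage): string | undefined {
  return page.results
    .map((tag) => tag.name)
    .find((name) => !IGNORED_TAGS.has(name));
}

// Mirrors the sed substitution on every matching line, but never writes
// "FROM searxng/searxng:" with an empty tag.
function updateSearxngFromLine(dockerfile: string, tag: string | undefined): string {
  if (!tag) {
    throw new Error("No usable SearXNG tag found; leaving Dockerfile unchanged.");
  }
  return dockerfile.replace(/^FROM searxng\/searxng:.*$/gm, `FROM searxng/searxng:${tag}`);
}
```

Note that the `llama-builder` stage (`FROM alpine:3.21`) is untouched, because the pattern is anchored to the SearXNG image name.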

diff --git a/.gitignore b/.gitignore

new file mode 100644

index 0000000000000000000000000000000000000000..f1b26f1ea73cad18af0078381a02bbc532714a0a

--- /dev/null

+++ b/.gitignore

@@ -0,0 +1,7 @@

+node_modules

+.DS_Store

+/client/dist

+/server/models

+.vscode

+/vite-build-stats.html

+.env

diff --git a/.husky/pre-commit b/.husky/pre-commit

new file mode 100644

index 0000000000000000000000000000000000000000..2312dc587f61186ccf0d627d678d851b9eef7b82

--- /dev/null

+++ b/.husky/pre-commit

@@ -0,0 +1 @@

+npx lint-staged

diff --git a/.npmrc b/.npmrc

new file mode 100644

index 0000000000000000000000000000000000000000..80bcbed90c4f2b3d895d5086dc775e1bd8b32b43

--- /dev/null

+++ b/.npmrc

@@ -0,0 +1 @@

+legacy-peer-deps = true

diff --git a/Dockerfile b/Dockerfile

new file mode 100644

index 0000000000000000000000000000000000000000..30e0c629b222526d71121025ebd11e318cb36063

--- /dev/null

+++ b/Dockerfile

@@ -0,0 +1,107 @@

+# Build llama.cpp in a separate stage

+FROM alpine:3.21 AS llama-builder

+

+# Install build dependencies

+RUN apk add --update \

+ build-base \

+ cmake \

+ ccache \

+ git

+

+# Build llama.cpp server and collect libraries

+RUN cd /tmp && \

+ git clone https://github.com/ggerganov/llama.cpp.git --depth=1 && \

+ cd llama.cpp && \

+ cmake -B build -DGGML_NATIVE=OFF && \

+ cmake --build build --config Release -j --target llama-server && \

+ mkdir -p /usr/local/lib/llama && \

+ find build -type f \( -name "libllama.so" -o -name "libggml.so" -o -name "libggml-base.so" -o -name "libggml-cpu.so" \) -exec cp {} /usr/local/lib/llama/ \;

+

+# Use the SearXNG image as the base for final image

+FROM searxng/searxng:2025.3.16-84636ef49

+

+# Set the default port to 7860 if not provided

+ENV PORT=7860

+

+# Expose the port specified by the PORT environment variable

+EXPOSE $PORT

+

+# Install necessary packages using Alpine's package manager

+RUN apk add --update \

+ nodejs \

+ npm \

+ git \

+ build-base

+

+# Copy llama.cpp artifacts from builder

+COPY --from=llama-builder /tmp/llama.cpp/build/bin/llama-server /usr/local/bin/

+COPY --from=llama-builder /usr/local/lib/llama/* /usr/local/lib/

+RUN ldconfig /usr/local/lib

+

+# Set the SearXNG settings folder path

+ARG SEARXNG_SETTINGS_FOLDER=/etc/searxng

+

+# Modify SearXNG configuration:

+# 1. Change output format from HTML to JSON

+# 2. Remove user switching in the entrypoint script

+# 3. Create and set permissions for the settings folder

+RUN sed -i 's/- html/- json/' /usr/local/searxng/searx/settings.yml \

+ && sed -i 's/su-exec searxng:searxng //' /usr/local/searxng/dockerfiles/docker-entrypoint.sh \

+ && mkdir -p ${SEARXNG_SETTINGS_FOLDER} \

+ && chmod 777 ${SEARXNG_SETTINGS_FOLDER}

+

+# Set up user and directory structure

+ARG USERNAME=user

+ARG HOME_DIR=/home/${USERNAME}

+ARG APP_DIR=${HOME_DIR}/app

+

+# Create a non-root user and set up the application directory

+RUN adduser -D -u 1000 ${USERNAME} \

+ && mkdir -p ${APP_DIR} \

+ && chown -R ${USERNAME}:${USERNAME} ${HOME_DIR}

+

+# Switch to the non-root user

+USER ${USERNAME}

+

+# Set the working directory to the application directory

+WORKDIR ${APP_DIR}

+

+# Declare build arguments that can be passed during the image build (e.g. `docker build --build-arg NAME=value`).

+# This approach allows for dynamic configuration without relying on a `.env` file,

+# which might not be suitable for all deployment scenarios.

+ARG ACCESS_KEYS

+ARG ACCESS_KEY_TIMEOUT_HOURS

+ARG WEBLLM_DEFAULT_F16_MODEL_ID

+ARG WEBLLM_DEFAULT_F32_MODEL_ID

+ARG WLLAMA_DEFAULT_MODEL_ID

+ARG INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL

+ARG INTERNAL_OPENAI_COMPATIBLE_API_KEY

+ARG INTERNAL_OPENAI_COMPATIBLE_API_MODEL

+ARG INTERNAL_OPENAI_COMPATIBLE_API_NAME

+ARG DEFAULT_INFERENCE_TYPE

+ARG HOST

+ARG HMR_PORT

+ARG ALLOWED_HOSTS

+

+# Copy package.json, package-lock.json, and .npmrc files

+COPY --chown=${USERNAME}:${USERNAME} ./package.json ./package.json

+COPY --chown=${USERNAME}:${USERNAME} ./package-lock.json ./package-lock.json

+COPY --chown=${USERNAME}:${USERNAME} ./.npmrc ./.npmrc

+

+# Install Node.js dependencies

+RUN npm ci

+

+# Copy the rest of the application files

+COPY --chown=${USERNAME}:${USERNAME} . .

+

+# Configure Git to treat the app directory as safe

+RUN git config --global --add safe.directory ${APP_DIR}

+

+# Build the application

+RUN npm run build

+

+# Set the entrypoint to use a shell

+ENTRYPOINT [ "/bin/sh", "-c" ]

+

+# Run SearXNG in the background and start the Node.js application using PM2

+CMD [ "(/usr/local/searxng/dockerfiles/docker-entrypoint.sh -f > /dev/null 2>&1) & (npx pm2 start ecosystem.config.cjs && npx pm2 logs production-server)" ]

diff --git a/README.md b/README.md

new file mode 100644

index 0000000000000000000000000000000000000000..9d4fc81e62df1200d81b8e39efebb601ac4f100a

--- /dev/null

+++ b/README.md

@@ -0,0 +1,139 @@

+---

+title: MiniSearch

+emoji: 👌🔍

+colorFrom: yellow

+colorTo: yellow

+sdk: docker

+short_description: Minimalist web-searching app with browser-based AI assistant

+pinned: true

+custom_headers:

+ cross-origin-embedder-policy: require-corp

+ cross-origin-opener-policy: same-origin

+ cross-origin-resource-policy: cross-origin

+---

+

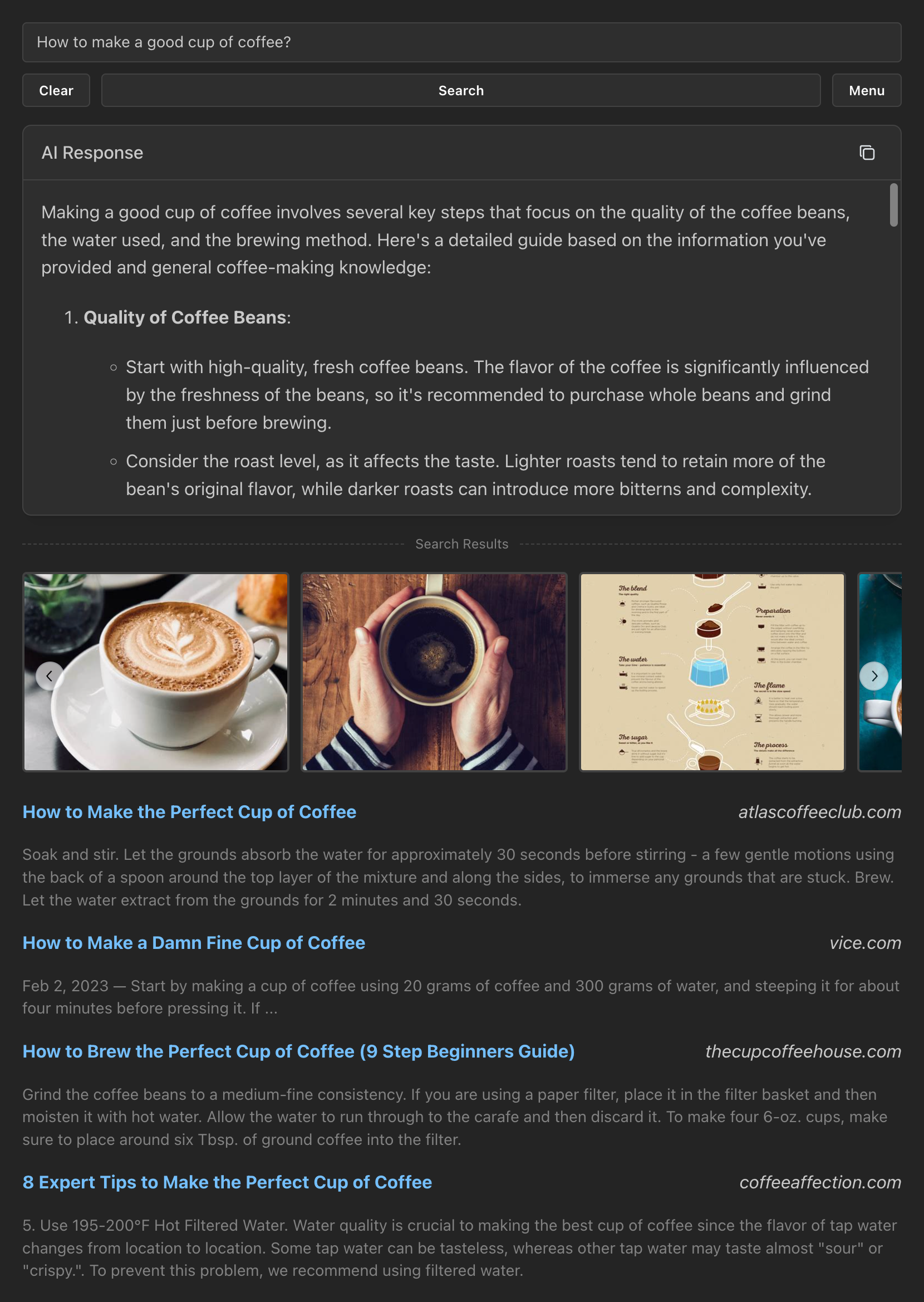

+# MiniSearch

+

+A minimalist web-searching app with an AI assistant that runs directly from your browser.

+

+Live demo: https://felladrin-minisearch.hf.space

+

+## Screenshot

+

+

+

+## Features

+

+- **Privacy-focused**: [No tracking, no ads, no data collection](https://docs.searxng.org/own-instance.html#how-does-searxng-protect-privacy)

+- **Easy to use**: Minimalist yet intuitive interface for all users

+- **Cross-platform**: Models run inside the browser, both on desktop and mobile

+- **Integrated**: Search from the browser address bar by setting it as the default search engine

+- **Efficient**: Models are loaded and cached only when needed

+- **Customizable**: Tweakable settings for search results and text generation

+- **Open-source**: [The code is available for inspection and contribution at GitHub](https://github.com/felladrin/MiniSearch)

+

+## Prerequisites

+

+- [Docker](https://docs.docker.com/get-docker/)

+

+## Getting started

+

+Here are the easiest ways to get started with MiniSearch. Pick the one that suits you best.

+

+**Option 1** - Use [MiniSearch's Docker Image](https://github.com/felladrin/MiniSearch/pkgs/container/minisearch) by running in your terminal:

+

+```bash

+docker run -p 7860:7860 ghcr.io/felladrin/minisearch:main

+```

+

+**Option 2** - Add MiniSearch's Docker Image to your existing Docker Compose file:

+

+```yaml

+services:

+ minisearch:

+ image: ghcr.io/felladrin/minisearch:main

+ ports:

+ - "7860:7860"

+```

+

+**Option 3** - Build from source by [downloading the repository files](https://github.com/felladrin/MiniSearch/archive/refs/heads/main.zip) and running:

+

+```bash

+docker compose -f docker-compose.production.yml up --build

+```

+

+Once the container is running, open http://localhost:7860 in your browser and start searching!

+

+## Frequently asked questions

+

+

+ How do I search via the browser's address bar?

+

+ You can set MiniSearch as your browser's address-bar search engine using the pattern http://localhost:7860/?q=%s, in which your search term replaces %s.

+

+

+

+

+ How do I search via Raycast?

+

+ You can add this Quicklink to Raycast, so typing your query will open MiniSearch with the search results. You can also edit it to point to your own domain.

+

+

+

+

+

+ Can I use custom models via OpenAI-Compatible API?

+

+ Yes! For this, open the Menu and change the "AI Processing Location" to Remote server (API). Then configure the Base URL, and optionally set an API Key and a Model to use.

+

+

+

+

+ How do I restrict the access to my MiniSearch instance via password?

+

+ Create a .env file and set a value for ACCESS_KEYS. Then restart the MiniSearch Docker container.

+

+

+ For example, if you want to set the password to PepperoniPizza, then this is what you should add to your .env:

+ ACCESS_KEYS="PepperoniPizza"

+

+

+ You can find more examples in the .env.example file.

+

+

+

+

+ I want to serve MiniSearch to other users, allowing them to use my own OpenAI-Compatible API key, but without revealing it to them. Is it possible?

+ Yes! In MiniSearch, we call this text-generation feature "Internal OpenAI-Compatible API". To use it:

+

+ - Set up your OpenAI-Compatible API endpoint by configuring the following environment variables in your .env file:

+

+   - INTERNAL_OPENAI_COMPATIBLE_API_BASE_URL: The base URL for your API

+   - INTERNAL_OPENAI_COMPATIBLE_API_KEY: Your API access key

+   - INTERNAL_OPENAI_COMPATIBLE_API_MODEL: The model to use

+   - INTERNAL_OPENAI_COMPATIBLE_API_NAME: The name to display in the UI

+

+ - Restart the MiniSearch server.

+ - In the MiniSearch menu, select the new option (named after your INTERNAL_OPENAI_COMPATIBLE_API_NAME setting) from the "AI Processing Location" dropdown.

+

+

+

+

+ How can I contribute to the development of this tool?

+ Fork this repository and clone it. Then, start the development server by running the following command:

+ docker compose up

+ Make your changes, push them to your fork, and open a pull request! All contributions are welcome!

+

+

+

+ Why is MiniSearch built upon SearXNG's Docker Image and using a single image instead of composing it from multiple services?

+ There are a few reasons for this:

+

+ - MiniSearch utilizes SearXNG as its meta-search engine.

+ - Manual installation of SearXNG is not trivial, so we use the docker image they provide, which has everything set up.

+ - SearXNG only provides a Docker Image based on Alpine Linux.

+ - The user of the image needs to be customized in a specific way to run on HuggingFace Spaces, where MiniSearch's demo runs.

+ - HuggingFace only accepts a single docker image. It doesn't run docker compose or multiple images, unfortunately.

+

+
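To make the `%s` pattern from the address-bar FAQ concrete, here is a small sketch of the URL a browser ends up requesting. `buildSearchUrl` is a hypothetical helper; browsers may percent-encode spaces as `%20` rather than the `+` that `URLSearchParams` produces, and both decode to the same query.

```typescript
// Hypothetical helper: expands the "http://localhost:7860/?q=%s" pattern
// by placing the URL-encoded search term in the "q" query parameter.
function buildSearchUrl(query: string, baseUrl = "http://localhost:7860/"): string {
  const url = new URL(baseUrl);
  url.searchParams.set("q", query);
  return url.toString();
}
```

Pointing `baseUrl` at your own domain gives the same pattern used by the Raycast Quicklink.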

diff --git a/biome.json b/biome.json

new file mode 100644

index 0000000000000000000000000000000000000000..adaa75db32e7a7e5bf6eb2ae2df5a99cdd4352bf

--- /dev/null

+++ b/biome.json

@@ -0,0 +1,30 @@

+{

+ "$schema": "https://biomejs.dev/schemas/1.9.4/schema.json",

+ "vcs": {

+ "enabled": false,

+ "clientKind": "git",

+ "useIgnoreFile": false

+ },

+ "files": {

+ "ignoreUnknown": false,

+ "ignore": []

+ },

+ "formatter": {

+ "enabled": true,

+ "indentStyle": "space"

+ },

+ "organizeImports": {

+ "enabled": true

+ },

+ "linter": {

+ "enabled": true,

+ "rules": {

+ "recommended": true

+ }

+ },

+ "javascript": {

+ "formatter": {

+ "quoteStyle": "double"

+ }

+ }

+}

diff --git a/client/components/AiResponse/AiModelDownloadAllowanceContent.tsx b/client/components/AiResponse/AiModelDownloadAllowanceContent.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..227cec2d8152fb4ddcb9a4e91e4c8e3034b0feba

--- /dev/null

+++ b/client/components/AiResponse/AiModelDownloadAllowanceContent.tsx

@@ -0,0 +1,62 @@

+import { Alert, Button, Group, Text } from "@mantine/core";

+import { IconCheck, IconInfoCircle, IconX } from "@tabler/icons-react";

+import { usePubSub } from "create-pubsub/react";

+import { useState } from "react";

+import { addLogEntry } from "../../modules/logEntries";

+import { settingsPubSub } from "../../modules/pubSub";

+

+export default function AiModelDownloadAllowanceContent() {

+ const [settings, setSettings] = usePubSub(settingsPubSub);

+ const [hasDeniedDownload, setDeniedDownload] = useState(false);

+

+ const handleAccept = () => {

+ setSettings({

+ ...settings,

+ allowAiModelDownload: true,

+ });

+ addLogEntry("User allowed the AI model download");

+ };

+

+ const handleDecline = () => {

+ setDeniedDownload(true);

+ addLogEntry("User denied the AI model download");

+ };

+

+ return hasDeniedDownload ? null : (

+ }

+ >

+

+ To obtain AI responses, a language model needs to be downloaded to your

+ browser. Enabling this option lets the app store it and load it

+ instantly on subsequent uses.

+

+

+ Please note that the download size ranges from 100 MB to 4 GB, depending

+ on the model you select in the Menu, so it's best to avoid using mobile

+ data for this.

+

+

+ }

+ onClick={handleDecline}

+ size="xs"

+ >

+ Not now

+

+ }

+ onClick={handleAccept}

+ size="xs"

+ >

+ Allow download

+

+

+

+ );

+}

diff --git a/client/components/AiResponse/AiResponseContent.tsx b/client/components/AiResponse/AiResponseContent.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..2ae870753fcca4bd33acb9669c5845bd04dfeaf4

--- /dev/null

+++ b/client/components/AiResponse/AiResponseContent.tsx

@@ -0,0 +1,209 @@

+import {

+ ActionIcon,

+ Alert,

+ Badge,

+ Box,

+ Card,

+ Group,

+ ScrollArea,

+ Text,

+ Tooltip,

+} from "@mantine/core";

+import {

+ IconArrowsMaximize,

+ IconArrowsMinimize,

+ IconHandStop,

+ IconInfoCircle,

+ IconRefresh,

+ IconVolume2,

+} from "@tabler/icons-react";

+import type { PublishFunction } from "create-pubsub";

+import { usePubSub } from "create-pubsub/react";

+import { type ReactNode, Suspense, lazy, useMemo, useState } from "react";

+import { addLogEntry } from "../../modules/logEntries";

+import { settingsPubSub } from "../../modules/pubSub";

+import { searchAndRespond } from "../../modules/textGeneration";

+

+const FormattedMarkdown = lazy(() => import("./FormattedMarkdown"));

+const CopyIconButton = lazy(() => import("./CopyIconButton"));

+

+export default function AiResponseContent({

+ textGenerationState,

+ response,

+ setTextGenerationState,

+}: {

+ textGenerationState: string;

+ response: string;

+ setTextGenerationState: PublishFunction<

+ | "failed"

+ | "awaitingSearchResults"

+ | "preparingToGenerate"

+ | "idle"

+ | "loadingModel"

+ | "generating"

+ | "interrupted"

+ | "completed"

+ >;

+}) {

+ const [settings, setSettings] = usePubSub(settingsPubSub);

+ const [isSpeaking, setIsSpeaking] = useState(false);

+

+ const ConditionalScrollArea = useMemo(

+ () =>

+ ({ children }: { children: ReactNode }) => {

+ return settings.enableAiResponseScrolling ? (

+

+ {children}

+

+ ) : (

+ {children}

+ );

+ },

+ [settings.enableAiResponseScrolling],

+ );

+

+ function speakResponse(text: string) {

+ if (isSpeaking) {

+ self.speechSynthesis.cancel();

+ setIsSpeaking(false);

+ return;

+ }

+

+ const prepareTextForSpeech = (textToClean: string) => {

+ const withoutLinks = textToClean.replace(/\[([^\]]+)\]\([^)]+\)/g, "");

+ const withoutMarkdown = withoutLinks.replace(/[#*`_~\[\]]/g, "");

+ return withoutMarkdown;

+ };

+

+ const utterance = new SpeechSynthesisUtterance(prepareTextForSpeech(text));

+

+ const voices = self.speechSynthesis.getVoices();

+

+ if (voices.length > 0 && settings.selectedVoiceId) {

+ const voice = voices.find(

+ (voice) => voice.voiceURI === settings.selectedVoiceId,

+ );

+

+ if (voice) {

+ utterance.voice = voice;

+ utterance.lang = voice.lang;

+ }

+ }

+

+ utterance.onerror = () => {

+ addLogEntry("Failed to speak response");

+ setIsSpeaking(false);

+ };

+

+ utterance.onend = () => setIsSpeaking(false);

+

+ setIsSpeaking(true);

+ self.speechSynthesis.speak(utterance);

+ }

+

+ return (

+

+

+

+

+

+ {textGenerationState === "generating"

+ ? "Generating AI Response..."

+ : "AI Response"}

+

+ {textGenerationState === "interrupted" && (

+

+ Interrupted

+

+ )}

+

+

+ {textGenerationState === "generating" ? (

+

+ setTextGenerationState("interrupted")}

+ variant="subtle"

+ color="gray"

+ >

+

+

+

+ ) : (

+

+ searchAndRespond()}

+ variant="subtle"

+ color="gray"

+ >

+

+

+

+ )}

+

+ speakResponse(response)}

+ variant="subtle"

+ color={isSpeaking ? "blue" : "gray"}

+ >

+

+

+

+ {settings.enableAiResponseScrolling ? (

+

+ {

+ setSettings({

+ ...settings,

+ enableAiResponseScrolling: false,

+ });

+ }}

+ variant="subtle"

+ color="gray"

+ >

+

+

+

+ ) : (

+

+ {

+ setSettings({

+ ...settings,

+ enableAiResponseScrolling: true,

+ });

+ }}

+ variant="subtle"

+ color="gray"

+ >

+

+

+

+ )}

+

+

+

+

+

+

+

+

+

+ {response}

+

+

+ {textGenerationState === "failed" && (

+ }

+ >

+ Could not generate response. Please try refreshing the page.

+

+ )}

+

+

+ );

+}
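`prepareTextForSpeech` inside `speakResponse` is pure string processing, so it can be pulled out of the component and unit-tested on its own. The copy below keeps the component's two regular expressions unchanged; note that the first one removes a Markdown link together with its label, so citation markers such as `[1](https://example.com)` are not spoken at all.

```typescript
// Standalone copy of the prepareTextForSpeech helper from speakResponse.
function prepareTextForSpeech(textToClean: string): string {
  // Drops Markdown links entirely: both the [label] and the (url).
  const withoutLinks = textToClean.replace(/\[([^\]]+)\]\([^)]+\)/g, "");
  // Strips remaining Markdown punctuation a speech engine would read aloud.
  return withoutLinks.replace(/[#*`_~\[\]]/g, "");
}
```

Extracting it would also let the component skip recreating the closure on every call to `speakResponse`.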

diff --git a/client/components/AiResponse/AiResponseSection.tsx b/client/components/AiResponse/AiResponseSection.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..540b5c209d4631eea592037fda05290a106c05c8

--- /dev/null

+++ b/client/components/AiResponse/AiResponseSection.tsx

@@ -0,0 +1,105 @@

+import { usePubSub } from "create-pubsub/react";

+import { Suspense, lazy, useMemo } from "react";

+import {

+ modelLoadingProgressPubSub,

+ modelSizeInMegabytesPubSub,

+ queryPubSub,

+ responsePubSub,

+ settingsPubSub,

+ textGenerationStatePubSub,

+} from "../../modules/pubSub";

+

+const AiResponseContent = lazy(() => import("./AiResponseContent"));

+const PreparingContent = lazy(() => import("./PreparingContent"));

+const LoadingModelContent = lazy(() => import("./LoadingModelContent"));

+const ChatInterface = lazy(() => import("./ChatInterface"));

+const AiModelDownloadAllowanceContent = lazy(

+ () => import("./AiModelDownloadAllowanceContent"),

+);

+

+export default function AiResponseSection() {

+ const [query] = usePubSub(queryPubSub);

+ const [response] = usePubSub(responsePubSub);

+ const [textGenerationState, setTextGenerationState] = usePubSub(

+ textGenerationStatePubSub,

+ );

+ const [modelLoadingProgress] = usePubSub(modelLoadingProgressPubSub);

+ const [settings] = usePubSub(settingsPubSub);

+ const [modelSizeInMegabytes] = usePubSub(modelSizeInMegabytesPubSub);

+

+ return useMemo(() => {

+ if (!settings.enableAiResponse || textGenerationState === "idle") {

+ return null;

+ }

+

+ const generatingStates = [

+ "generating",

+ "interrupted",

+ "completed",

+ "failed",

+ ];

+ if (generatingStates.includes(textGenerationState)) {

+ return (

+ <>

+

+

+

+ {textGenerationState === "completed" && (

+

+

+

+ )}

+

+ );

+ }

+

+ if (textGenerationState === "loadingModel") {

+ return (

+

+

+

+ );

+ }

+

+ if (textGenerationState === "preparingToGenerate") {

+ return (

+

+

+

+ );

+ }

+

+ if (textGenerationState === "awaitingSearchResults") {

+ return (

+

+

+

+ );

+ }

+

+ if (textGenerationState === "awaitingModelDownloadAllowance") {

+ return (

+

+

+

+ );

+ }

+

+ return null;

+ }, [

+ settings.enableAiResponse,

+ textGenerationState,

+ response,

+ query,

+ modelLoadingProgress,

+ modelSizeInMegabytes,

+ setTextGenerationState,

+ ]);

+}
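`AiResponseSection` picks what to render through a chain of `if` checks plus `generatingStates.includes(...)`, which the compiler cannot check for missing states. A framework-free sketch of the same branching as an exhaustive `switch` follows. The state union is assembled from the states referenced in this section and assumes `textGenerationStatePubSub` uses exactly these values; the view names are hypothetical, and `responseWithFollowUp` stands for the extra block rendered on `completed` (presumably the `ChatInterface`).

```typescript
// Hypothetical sketch of AiResponseSection's state-to-view branching.
type TextGenerationState =
  | "idle"
  | "awaitingModelDownloadAllowance"
  | "awaitingSearchResults"
  | "preparingToGenerate"
  | "loadingModel"
  | "generating"
  | "interrupted"
  | "completed"
  | "failed";

type AiResponseView =
  | "none"
  | "downloadAllowance"
  | "awaitingSearchResults"
  | "preparingToGenerate"
  | "loadingModel"
  | "response"
  | "responseWithFollowUp";

function selectAiResponseView(
  enableAiResponse: boolean,
  state: TextGenerationState,
): AiResponseView {
  if (!enableAiResponse) return "none";
  switch (state) {
    case "idle":
      return "none";
    case "generating":
    case "interrupted":
    case "failed":
      return "response";
    case "completed":
      return "responseWithFollowUp";
    case "loadingModel":
      return "loadingModel";
    case "preparingToGenerate":
      return "preparingToGenerate";
    case "awaitingSearchResults":
      return "awaitingSearchResults";
    case "awaitingModelDownloadAllowance":
      return "downloadAllowance";
    default: {
      // Compile-time error here if a new state is added but not handled.
      const unreachable: never = state;
      return unreachable;
    }
  }
}
```

Adding a state to the union without a matching `case` then fails type-checking instead of silently rendering nothing.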

diff --git a/client/components/AiResponse/ChatInterface.tsx b/client/components/AiResponse/ChatInterface.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..cfd494152d47dda0ec5e9674f1d7844179b54932

--- /dev/null

+++ b/client/components/AiResponse/ChatInterface.tsx

@@ -0,0 +1,208 @@

+import {

+ Button,

+ Card,

+ Group,

+ Paper,

+ Stack,

+ Text,

+ Textarea,

+} from "@mantine/core";

+import { IconSend } from "@tabler/icons-react";

+import { usePubSub } from "create-pubsub/react";

+import type { ChatMessage } from "gpt-tokenizer/GptEncoding";

+import {

+ type ChangeEvent,

+ type KeyboardEvent,

+ Suspense,

+ lazy,

+ useEffect,

+ useRef,

+ useState,

+} from "react";

+import { handleEnterKeyDown } from "../../modules/keyboard";

+import { addLogEntry } from "../../modules/logEntries";

+import { settingsPubSub } from "../../modules/pubSub";

+import { generateChatResponse } from "../../modules/textGeneration";

+

+const FormattedMarkdown = lazy(() => import("./FormattedMarkdown"));

+const CopyIconButton = lazy(() => import("./CopyIconButton"));

+

+interface ChatState {

+ input: string;

+ isGenerating: boolean;

+ streamedResponse: string;

+}

+

+export default function ChatInterface({

+ initialQuery,

+ initialResponse,

+}: {

+ initialQuery: string;

+ initialResponse: string;

+}) {

+ const [messages, setMessages] = useState<ChatMessage[]>([]);

+ const [state, setState] = useState<ChatState>({

+ input: "",

+ isGenerating: false,

+ streamedResponse: "",

+ });

+ const latestResponseRef = useRef("");

+ const [settings] = usePubSub(settingsPubSub);

+

+ useEffect(() => {

+ setMessages([

+ { role: "user", content: initialQuery },

+ { role: "assistant", content: initialResponse },

+ ]);

+ }, [initialQuery, initialResponse]);

+

+ const handleSend = async () => {

+ if (state.input.trim() === "" || state.isGenerating) return;

+

+ const newMessages: ChatMessage[] = [

+ ...messages,

+ { role: "user", content: state.input },

+ ];

+ setMessages(newMessages);

+ setState((prev) => ({

+ ...prev,

+ input: "",

+ isGenerating: true,

+ streamedResponse: "",

+ }));

+ latestResponseRef.current = "";

+

+ try {

+ addLogEntry("User sent a follow-up question");

+ await generateChatResponse(newMessages, (partialResponse) => {

+ setState((prev) => ({ ...prev, streamedResponse: partialResponse }));

+ latestResponseRef.current = partialResponse;

+ });

+ setMessages((prevMessages) => [

+ ...prevMessages,

+ { role: "assistant", content: latestResponseRef.current },

+ ]);

+ addLogEntry("AI responded to follow-up question");

+ } catch (error) {

+ addLogEntry(`Error generating chat response: ${error}`);

+ setMessages((prevMessages) => [

+ ...prevMessages,

+ {

+ role: "assistant",

+ content: "Sorry, I encountered an error while generating a response.",

+ },

+ ]);

+ } finally {

+ setState((prev) => ({

+ ...prev,

+ isGenerating: false,

+ streamedResponse: "",

+ }));

+ }

+ };

+

+ const handleInputChange = (event: ChangeEvent<HTMLTextAreaElement>) => {

+ const input = event.target.value;

+ setState((prev) => ({ ...prev, input }));

+ };

+

+ const handleKeyDown = (event: KeyboardEvent) => {

+ handleEnterKeyDown(event, settings, handleSend);

+ };

+

+ const getChatContent = () => {

+ return messages

+ .slice(2)

+ .map(

+ (msg, index) =>

+ `${index + 1}. ${msg.role?.toUpperCase()}\n\n${msg.content}`,

+ )

+ .join("\n\n");

+ };

+

+ return (

+

+

+

+ Follow-up questions

+ {messages.length > 2 && (

+

+

+

+ )}

+

+

+

+ {messages.slice(2).length > 0 && (

+

+ {messages.slice(2).map((message, index) => (

+

+

+ {message.content}

+

+

+ ))}

+ {state.isGenerating && state.streamedResponse.length > 0 && (

+

+

+

+ {state.streamedResponse}

+

+

+

+ )}

+

+ )}

+

+

+

+

+

+

+ );

+}
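`getChatContent` builds the text that the copy button exports. It drops the initial query/answer pair and numbers the remaining follow-up messages. The sketch below reproduces that formatting as a standalone function. `ChatMessage` is a local stand-in: its `role` is typed as optional to match the component's `msg.role?.` access. The `"UNKNOWN"` fallback is an addition, because the component would otherwise interpolate the string `undefined`.

```typescript
// Local stand-in for the ChatMessage type imported in ChatInterface.
interface ChatMessage {
  role?: "system" | "user" | "assistant";
  content: string;
}

// Skips the initial query/answer pair (indexes 0 and 1), then numbers each
// follow-up message and upper-cases its role, separated by blank lines.
function formatFollowUps(messages: ChatMessage[]): string {
  return messages
    .slice(2)
    .map(
      (msg, index) =>
        `${index + 1}. ${msg.role?.toUpperCase() ?? "UNKNOWN"}\n\n${msg.content}`,
    )
    .join("\n\n");
}
```

For a conversation containing only the initial pair, the result is an empty string. This is consistent with the component rendering the copy button only when `messages.length > 2`.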

diff --git a/client/components/AiResponse/CopyIconButton.tsx b/client/components/AiResponse/CopyIconButton.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..af8d82dfe54056adf9761d7c2d74d7fae37b348b

--- /dev/null

+++ b/client/components/AiResponse/CopyIconButton.tsx

@@ -0,0 +1,32 @@

+import { ActionIcon, CopyButton, Tooltip } from "@mantine/core";

+import { IconCheck, IconCopy } from "@tabler/icons-react";

+

+interface CopyIconButtonProps {

+ value: string;

+ tooltipLabel?: string;

+}

+

+export default function CopyIconButton({

+ value,

+ tooltipLabel = "Copy",

+}: CopyIconButtonProps) {

+ return (

+

+ {({ copied, copy }) => (

+

+

+ {copied ? : }

+

+

+ )}

+

+ );

+}

diff --git a/client/components/AiResponse/FormattedMarkdown.tsx b/client/components/AiResponse/FormattedMarkdown.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..986666149f175ad8a8cff53d257856bd232ada9c

--- /dev/null

+++ b/client/components/AiResponse/FormattedMarkdown.tsx

@@ -0,0 +1,45 @@

+import { Box } from "@mantine/core";

+import { lazy } from "react";

+

+import { useReasoningContent } from "./hooks/useReasoningContent";

+

+const MarkdownRenderer = lazy(() => import("./MarkdownRenderer"));

+const ReasoningSection = lazy(() => import("./ReasoningSection"));

+

+interface FormattedMarkdownProps {

+ children: string;

+ className?: string;

+ enableCopy?: boolean;

+}

+

+export default function FormattedMarkdown({

+ children,

+ className = "",

+ enableCopy = true,

+}: FormattedMarkdownProps) {

+ const { reasoningContent, mainContent, isGenerating, thinkingTimeMs } =

+ useReasoningContent(children);

+

+ if (!children) {

+ return null;

+ }

+

+ return (

+

+ {reasoningContent && (

+

+ )}

+ {!isGenerating && mainContent && (

+

+ )}

+

+ );

+}
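`FormattedMarkdown` depends on `useReasoningContent` to separate a model's reasoning from its answer. That hook's source is not part of this diff. The sketch below shows one plausible way to do the split. It **assumes** reasoning is wrapped in `<think>…</think>` markers, which is a hypothetical choice, and the real hook may use different delimiters. `splitReasoning` and `ReasoningSplit` are illustrative names, and the sketch does not compute `thinkingTimeMs`.

```typescript
// Hypothetical result shape, mirroring three of the fields the hook returns.
interface ReasoningSplit {
  reasoningContent: string;
  mainContent: string;
  isGenerating: boolean;
}

// Assumption: reasoning is delimited by <think> ... </think>.
function splitReasoning(
  text: string,
  open = "<think>",
  close = "</think>",
): ReasoningSplit {
  const start = text.indexOf(open);
  if (start === -1) {
    // No reasoning block: everything is the answer.
    return { reasoningContent: "", mainContent: text, isGenerating: false };
  }
  const end = text.indexOf(close, start + open.length);
  if (end === -1) {
    // Opening marker without a closing one: the model is still reasoning.
    return {
      reasoningContent: text.slice(start + open.length).trim(),
      mainContent: text.slice(0, start).trim(),
      isGenerating: true,
    };
  }
  return {
    reasoningContent: text.slice(start + open.length, end).trim(),
    mainContent: (text.slice(0, start) + text.slice(end + close.length)).trim(),
    isGenerating: false,
  };
}
```

In this sketch, `isGenerating` is true only while the closing marker has not yet streamed in. That matches how `FormattedMarkdown` hides the main content until generation of the reasoning block ends.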

diff --git a/client/components/AiResponse/LoadingModelContent.tsx b/client/components/AiResponse/LoadingModelContent.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..509a3f847a2427f5647c508a72a68f69a48da3bc

--- /dev/null

+++ b/client/components/AiResponse/LoadingModelContent.tsx

@@ -0,0 +1,40 @@

+import { Card, Group, Progress, Stack, Text } from "@mantine/core";

+

+export default function LoadingModelContent({

+ modelLoadingProgress,

+ modelSizeInMegabytes,

+}: {

+ modelLoadingProgress: number;

+ modelSizeInMegabytes: number;

+}) {

+ const isLoadingStarting = modelLoadingProgress === 0;

+ const isLoadingComplete = modelLoadingProgress === 100;

+ const percent =

+ isLoadingComplete || isLoadingStarting ? 100 : modelLoadingProgress;

+ const strokeColor = percent === 100 ? "#52c41a" : "#3385ff";

+ const downloadedSize = (modelSizeInMegabytes * modelLoadingProgress) / 100;

+ const sizeText = `${downloadedSize.toFixed(0)} MB / ${modelSizeInMegabytes.toFixed(0)} MB`;

+

+ return (

+

+

+ Loading AI...

+

+

+

+

+ {!isLoadingStarting && (

+

+

+ {sizeText}

+

+

+ {percent.toFixed(1)}%

+

+

+ )}

+

+

+

+ );

+}
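The arithmetic in `LoadingModelContent` is easy to misread. The bar is pinned to 100% both before loading starts and after it finishes, and the size/percent row stays hidden while progress is 0. The sketch below extracts that logic into a pure function so it can be checked in isolation. `computeLoadingDisplay` and `LoadingDisplay` are illustrative names. It assumes, as the component's division by 100 implies, that `modelLoadingProgress` is a 0–100 percentage.

```typescript
interface LoadingDisplay {
  percent: number;
  strokeColor: string;
  sizeText: string;
  showDetails: boolean;
}

function computeLoadingDisplay(
  modelLoadingProgress: number,
  modelSizeInMegabytes: number,
): LoadingDisplay {
  const isLoadingStarting = modelLoadingProgress === 0;
  const isLoadingComplete = modelLoadingProgress === 100;
  // Both the "not started" and "done" cases pin the bar to 100%.
  const percent =
    isLoadingComplete || isLoadingStarting ? 100 : modelLoadingProgress;
  const downloadedSize = (modelSizeInMegabytes * modelLoadingProgress) / 100;
  return {
    percent,
    strokeColor: percent === 100 ? "#52c41a" : "#3385ff",
    sizeText: `${downloadedSize.toFixed(0)} MB / ${modelSizeInMegabytes.toFixed(0)} MB`,
    // The size/percent row is only rendered once progress leaves 0.
    showDetails: !isLoadingStarting,
  };
}
```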

diff --git a/client/components/AiResponse/MarkdownRenderer.tsx b/client/components/AiResponse/MarkdownRenderer.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..dee8db212c9a2547399b1a607b0cd4ee06825af6

--- /dev/null

+++ b/client/components/AiResponse/MarkdownRenderer.tsx

@@ -0,0 +1,143 @@

+import {

+ Box,

+ Button,

+ TypographyStylesProvider,

+ useMantineTheme,

+} from "@mantine/core";

+import React, { lazy } from "react";

+import { Prism as SyntaxHighlighter } from "react-syntax-highlighter";

+import syntaxHighlighterStyle from "react-syntax-highlighter/dist/esm/styles/prism/one-dark";

+import rehypeExternalLinks from "rehype-external-links";

+import remarkGfm from "remark-gfm";

+

+const Markdown = lazy(() => import("react-markdown"));

+const CopyIconButton = lazy(() => import("./CopyIconButton"));

+

+interface MarkdownRendererProps {

+ content: string;

+ enableCopy?: boolean;

+ className?: string;

+}

+

+export default function MarkdownRenderer({

+ content,

+ enableCopy = true,

+ className = "",

+}: MarkdownRendererProps) {

+ const theme = useMantineTheme();

+

+ if (!content) {

+ return null;

+ }

+

+ return (

+

+

+

+ {children}

+

+ );

+ },

+ li(props) {

+ const { children } = props;

+ const processedChildren = React.Children.map(

+ children,

+ (child) => {

+ if (React.isValidElement(child) && child.type === "p") {

+ return (child.props as { children: React.ReactNode })

+ .children;

+ }

+ return child;

+ },

+ );

+ return {processedChildren};

+ },

+ pre(props) {

+ return <>{props.children}</>;

+ },

+ code(props) {

+ const { children, className, node, ref, ...rest } = props;

+ void node;

+ const languageMatch = /language-(\w+)/.exec(className || "");

+ const codeContent = children?.toString().replace(/\n$/, "") ?? "";

+

+ if (languageMatch) {

+ return (

+

+ {enableCopy && (

+

+

+

+ )}

+

+ {codeContent}

+

+

+ );

+ }

+

+ return (

+

+ {children}

+

+ );

+ },

+ }}

+ >

+ {content}

+

+

+

+ );

+}
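The `code` renderer in `MarkdownRenderer` decides between a syntax-highlighted block and inline code by matching `language-(\w+)` against `className`. It also trims a single trailing newline from the content. The sketch below isolates that step. `parseCodeBlock` and `CodeBlockInfo` are illustrative names, and `String(children)` stands in for the component's `children?.toString()`.

```typescript
interface CodeBlockInfo {
  language: string | null; // null means "render as inline code"
  code: string;
}

function parseCodeBlock(
  className: string | undefined,
  children: unknown,
): CodeBlockInfo {
  const match = /language-(\w+)/.exec(className ?? "");
  // Strip exactly one trailing newline, as the renderer does.
  const code = children == null ? "" : String(children).replace(/\n$/, "");
  return { language: match ? match[1] : null, code };
}
```

Because `\w` covers only letters, digits, and `_`, a fence tagged `c++` produces `className="language-c++"` but yields the language `c`. Hyphenated tags are truncated at the first `-` in the same way.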

diff --git a/client/components/AiResponse/PreparingContent.tsx b/client/components/AiResponse/PreparingContent.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..b6989c1a9fdab0440ac10adfbd56738c3975324a

--- /dev/null

+++ b/client/components/AiResponse/PreparingContent.tsx

@@ -0,0 +1,33 @@

+import { Card, Skeleton, Stack, Text } from "@mantine/core";

+

+export default function PreparingContent({

+ textGenerationState,

+}: {

+ textGenerationState: string;

+}) {

+ const getStateText = () => {

+ if (textGenerationState === "awaitingSearchResults") {

+ return "Awaiting search results...";

+ }

+ if (textGenerationState === "preparingToGenerate") {

+ return "Preparing AI response...";

+ }

+ return null;

+ };

+

+ return (

+

+

+ {getStateText()}

+

+

+

+

+

+

+

+

+

+

+ );

+}

diff --git a/client/components/AiResponse/ReasoningSection.tsx b/client/components/AiResponse/ReasoningSection.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..45ca67288c73d6b8fa841bb2346ff7415adcd7b7

--- /dev/null

+++ b/client/components/AiResponse/ReasoningSection.tsx

@@ -0,0 +1,71 @@

+import {

+ Box,

+ Collapse,

+ Flex,

+ Group,

+ Loader,

+ Text,

+ UnstyledButton,

+} from "@mantine/core";

+import { IconChevronDown, IconChevronRight } from "@tabler/icons-react";

+import { lazy, useState } from "react";

+import { formatThinkingTime } from "../../modules/stringFormatters";

+

+const MarkdownRenderer = lazy(() => import("./MarkdownRenderer"));

+

+interface ReasoningSectionProps {

+ content: string;

+ isGenerating?: boolean;

+ thinkingTimeMs?: number;

+}

+

+export default function ReasoningSection({

+ content,

+ isGenerating = false,

+ thinkingTimeMs = 0,

+}: ReasoningSectionProps) {

+ const [isOpen, setIsOpen] = useState(false);

+

+ return (

+

+ setIsOpen(!isOpen)}

+ style={(theme) => ({

+ width: "100%",

+ padding: theme.spacing.xs,

+ borderRadius: theme.radius.sm,

+ backgroundColor: theme.colors.dark[6],

+ "&:hover": {

+ backgroundColor: theme.colors.dark[5],

+ },

+ })}

+ >

+

+ {isOpen ? (

+

+ ) : (

+

+ )}

+

+

+ {isGenerating ? "Thinking" : formatThinkingTime(thinkingTimeMs)}

+

+ {isGenerating && }

+

+

+

+

+ ({

+ backgroundColor: theme.colors.dark[7],

+ padding: theme.spacing.sm,

+ marginTop: theme.spacing.xs,

+ borderRadius: theme.radius.sm,

+ })}

+ >

+

+

+

+

+ );

+}

diff --git a/client/components/AiResponse/WebLlmModelSelect.tsx b/client/components/AiResponse/WebLlmModelSelect.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..a967fef6e08b5c761c813adb57ae88a392c7df35

--- /dev/null

+++ b/client/components/AiResponse/WebLlmModelSelect.tsx

@@ -0,0 +1,81 @@

+import { type ComboboxItem, Select } from "@mantine/core";

+import { prebuiltAppConfig } from "@mlc-ai/web-llm";

+import { useCallback, useEffect, useState } from "react";

+import { isF16Supported } from "../../modules/webGpu";

+

+export default function WebLlmModelSelect({

+ value,

+ onChange,

+}: {

+ value: string;

+ onChange: (value: string) => void;

+}) {

+ const [webGpuModels] = useState(() => {

+ const models = prebuiltAppConfig.model_list

+ .filter((model) => {

+ const isSmall = isSmallModel(model);

+ const suffix = getModelSuffix(isF16Supported, isSmall);

+ return model.model_id.endsWith(suffix);

+ })

+ .sort((a, b) => (a.vram_required_MB ?? 0) - (b.vram_required_MB ?? 0))

+ .map((model) => {

+ const modelSizeInMegabytes =

+ Math.round(model.vram_required_MB ?? 0) || "N/A";

+ const isSmall = isSmallModel(model);

+ const suffix = getModelSuffix(isF16Supported, isSmall);

+ const modelName = model.model_id.replace(suffix, "");

+

+ return {

+ label: `${modelSizeInMegabytes} MB • ${modelName}`,

+ value: model.model_id,

+ };

+ });

+

+ return models;

+ });

+

+ useEffect(() => {

+ const isCurrentModelValid = webGpuModels.some(

+ (model) => model.value === value,

+ );

+

+ if (!isCurrentModelValid && webGpuModels.length > 0) {

+ onChange(webGpuModels[0].value);

+ }

+ }, [onChange, webGpuModels, value]);

+

+ const handleChange = useCallback(

+ (value: string | null) => {

+ if (value) onChange(value);

+ },

+ [onChange],

+ );

+

+ return (

+

+ );

+}

+

+type ModelConfig = (typeof prebuiltAppConfig.model_list)[number];

+

+const smallModels = ["SmolLM2-135M", "SmolLM2-360M"] as const;

+

+function isSmallModel(model: ModelConfig) {

+ return smallModels.some((smallModel) =>

+ model.model_id.startsWith(smallModel),

+ );

+}

+

+function getModelSuffix(isF16: boolean, isSmall: boolean) {

+ if (isSmall) return isF16 ? "-q0f16-MLC" : "-q0f32-MLC";

+

+ return isF16 ? "-q4f16_1-MLC" : "-q4f32_1-MLC";

+}
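`WebLlmModelSelect` builds its options from `prebuiltAppConfig.model_list`. It keeps only the quantization suffix matching the device's F16 support (`q0` for the small SmolLM2 models, `q4…_1` otherwise), sorts by `vram_required_MB`, and falls back to the first option when the current value is not in the list. The sketch below reproduces that pipeline over a plain array so it runs without web-llm. `ModelRecordLike`, `buildModelOptions`, and `resolveSelectedModel` are hypothetical names. `ModelRecordLike` models only the two fields the component reads.

```typescript
// Hypothetical subset of a web-llm model record: only the fields used here.
interface ModelRecordLike {
  model_id: string;
  vram_required_MB?: number;
}

interface ModelOption {
  label: string;
  value: string;
}

const smallModels = ["SmolLM2-135M", "SmolLM2-360M"] as const;

function isSmallModel(model: ModelRecordLike): boolean {
  return smallModels.some((prefix) => model.model_id.startsWith(prefix));
}

function getModelSuffix(isF16: boolean, isSmall: boolean): string {
  if (isSmall) return isF16 ? "-q0f16-MLC" : "-q0f32-MLC";
  return isF16 ? "-q4f16_1-MLC" : "-q4f32_1-MLC";
}

function buildModelOptions(
  models: ModelRecordLike[],
  isF16: boolean,
): ModelOption[] {
  return models
    .filter((m) => m.model_id.endsWith(getModelSuffix(isF16, isSmallModel(m))))
    .sort((a, b) => (a.vram_required_MB ?? 0) - (b.vram_required_MB ?? 0))
    .map((m) => {
      const size = Math.round(m.vram_required_MB ?? 0) || "N/A";
      const name = m.model_id.replace(getModelSuffix(isF16, isSmallModel(m)), "");
      return { label: `${size} MB • ${name}`, value: m.model_id };
    });
}

// Mirrors the useEffect fallback: keep a valid selection, else pick the first.
function resolveSelectedModel(
  options: ModelOption[],
  current: string,
): string | null {
  if (options.some((o) => o.value === current)) return current;
  return options[0]?.value ?? null;
}
```

Since `filter` returns a new array, the in-place `sort` does not mutate the shared `model_list`.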

diff --git a/client/components/AiResponse/WllamaModelSelect.tsx b/client/components/AiResponse/WllamaModelSelect.tsx

new file mode 100644

index 0000000000000000000000000000000000000000..6a63fcaa664cc28ddc563f7b068acdd7ecb16d7f

--- /dev/null

+++ b/client/components/AiResponse/WllamaModelSelect.tsx

@@ -0,0 +1,42 @@

+import { type ComboboxItem, Select } from "@mantine/core";

+import { useEffect, useState } from "react";

+import { wllamaModels } from "../../modules/wllama";

+

+export default function WllamaModelSelect({

+ value,

+ onChange,

+}: {

+ value: string;

+ onChange: (value: string) => void;

+}) {

+ const [wllamaModelOptions] = useState(

+ Object.entries(wllamaModels)

+ .sort(([, a], [, b]) => a.fileSizeInMegabytes - b.fileSizeInMegabytes)

+ .map(([value, { label, fileSizeInMegabytes }]) => ({

+ label: `${fileSizeInMegabytes} MB • ${label}`,

+ value,

+ })),

+ );

+

+ useEffect(() => {

+ const isCurrentModelValid = wllamaModelOptions.some(

+ (model) => model.value === value,

+ );

+

+ if (!isCurrentModelValid && wllamaModelOptions.length > 0) {

+ onChange(wllamaModelOptions[0].value);

+ }

+ }, [onChange, wllamaModelOptions, value]);

+

+ return (

+