Upload 13 files

- README.md +80 -13

- Rule_Based_Sample_Response.png +0 -0

- Smart_Chatbot.png +0 -0

- Smart_Chatbot2.png +0 -0

- app.py +48 -0

- data/Financial_data.csv +10 -0

- requirements.txt +75 -0

- src/__init__.py +0 -0

- src/__pycache__/__init__.cpython-311.pyc +0 -0

- src/__pycache__/main.cpython-311.pyc +0 -0

- src/__pycache__/rule_based.cpython-311.pyc +0 -0

- src/main.py +209 -0

- src/rule_based.py +13 -0

README.md

CHANGED

|

@@ -1,13 +1,80 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# FinBuddy Project

|

| 2 |

+

|

| 3 |

+

## Overview

|

| 4 |

+

|

| 5 |

+

FinBuddy is an AI Assistant designed to help users with questions related to financial metrics of Microsoft (MSFT), Tesla (TSLA), and Apple (AAPL). The project uses a combination of rule-based logic and machine learning models to provide answers in different formats such as text responses, tables, and charts. The application is built using Streamlit for the user interface and integrates with LangChain and Groq for the underlying AI functionalities.

|

| 6 |

+

|

| 7 |

+

## Project Structure

|

| 8 |

+

|

| 9 |

+

The project consists of three main components:

|

| 10 |

+

|

| 11 |

+

1. **main.py**: This script handles the core functionalities of the AI assistant, including initializing the language models, creating agents, and defining the conversation handling logic.

|

| 12 |

+

2. **rule_based.py**: This script contains the rule-based logic for providing predefined answers to specific queries.

|

| 13 |

+

3. **app.py**: This script sets up the Streamlit interface and integrates the rule-based and AI-driven responses.

|

| 14 |

+

|

| 15 |

+

## File Descriptions

|

| 16 |

+

|

| 17 |

+

### `main.py`

|

| 18 |

+

- **Imports and Initialization**: Sets up necessary imports, initializes the Groq client and model, and defines chat history.

|

| 19 |

+

- **Agent Creation**: Creates a CSV agent using LangChain's `create_csv_agent` function to handle queries related to financial metrics.

|

| 20 |

+

- **Functions**:

|

| 21 |

+

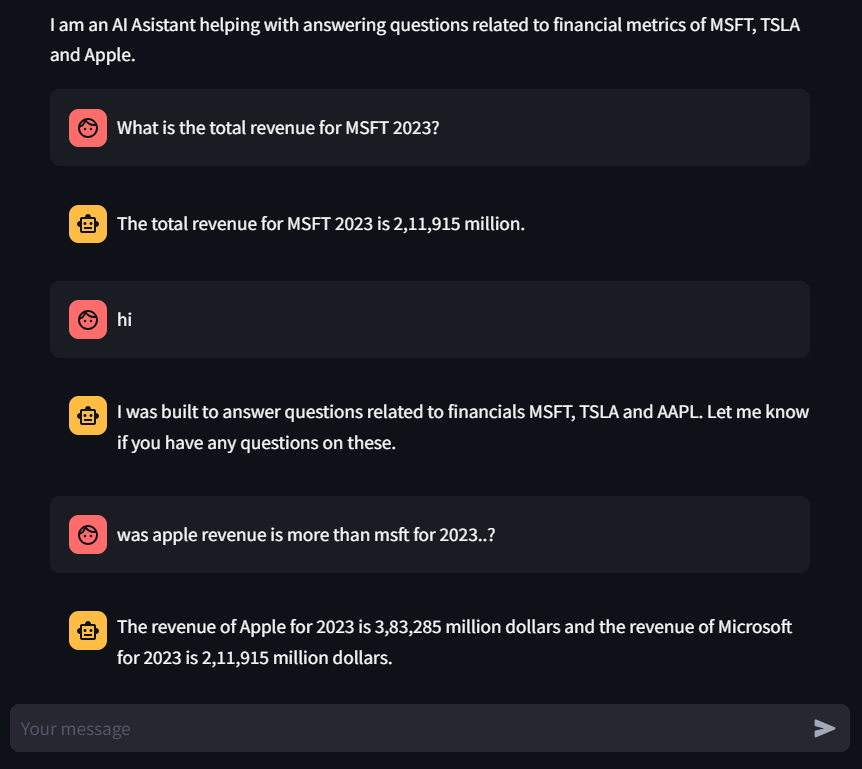

- `convo_agent`: Handles simple conversational queries.

|

| 22 |

+

- `csv_agent`: Processes financial queries and formats responses as tables, bar charts, or line charts.

|

| 23 |

+

- `run_conversation`: Manages the flow of the conversation by determining which function to use based on the user query.

|

| 24 |

+

- `get_response`: Processes the user query, interacts with the agents, and returns the appropriate response.

|

| 25 |

+

- `write_answer`: Formats and displays the response in Streamlit, including rendering tables and charts.

|

| 26 |

+

|

| 27 |

+

### `rule_based.py`

|

| 28 |

+

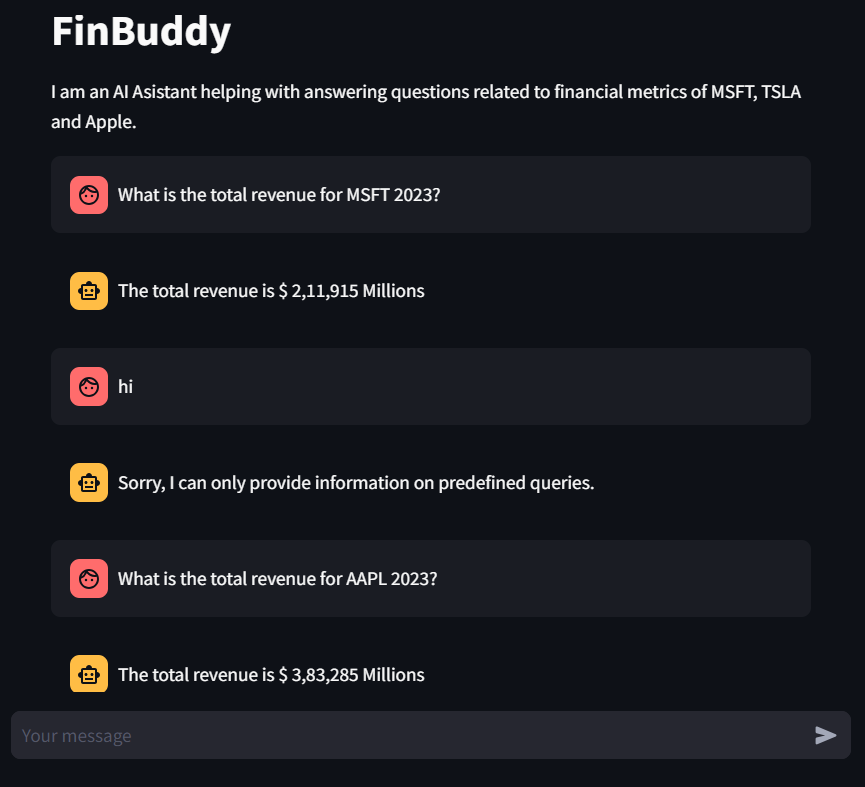

- **simple_chatbot**: A simple rule-based chatbot that returns predefined answers for specific queries related to the total revenue of MSFT, AAPL, and TSLA for 2022 and 2023.

|

| 29 |

+

|

| 30 |

+

### `app.py`

|

| 31 |

+

- **Streamlit Setup**: Initializes the Streamlit app, sets the title, and displays chat history.

|

| 32 |

+

- **Message Handling**: Captures user input, retrieves responses from the AI assistant or rule-based bot, and displays the responses.

|

| 33 |

+

- **Integration**: Integrates `get_response` from `main.py` and `simple_chatbot` from `rule_based.py` to handle user queries.

|

| 34 |

+

|

| 35 |

+

## Project Flow

|

| 36 |

+

|

| 37 |

+

1. **User Interaction**:

|

| 38 |

+

- The user interacts with the Streamlit app by typing a query into the chat input.

|

| 39 |

+

|

| 40 |

+

2. **Message Capture**:

|

| 41 |

+

- The app captures the user's input and appends it to the chat history.

|

| 42 |

+

|

| 43 |

+

3. **Response Generation**:

|

| 44 |

+

- The `get_response` function is called with the user query.

|

| 45 |

+

- `get_response` decides whether to use the `convo_agent` or `csv_agent` based on the nature of the query.

|

| 46 |

+

- The appropriate agent processes the query and returns the response.

|

| 47 |

+

|

| 48 |

+

4. **Rule-Based Check**:

|

| 49 |

+

- Optionally, the response can be generated by the `simple_chatbot` for predefined queries if the rule-based option is enabled.

|

| 50 |

+

|

| 51 |

+

5. **Response Display**:

|

| 52 |

+

- The response is formatted and displayed in the Streamlit app.

|

| 53 |

+

- If the response includes a table or chart, it is rendered accordingly.

|

| 54 |

+

|

| 55 |

+

6. **Chat History Update**:

|

| 56 |

+

- The chat history is updated with the new user query and the AI response.

|

| 57 |

+

|

| 58 |

+

## How to Run

|

| 59 |

+

Configuring Groq API

|

| 60 |

+

Create an API Key:

|

| 61 |

+

|

| 62 |

+

Visit the [Groq Console](https://console.groq.com/docs/api-reference#chat) and create an API key.

|

| 63 |

+

|

| 64 |

+

1. Ensure you have the necessary dependencies installed:

|

| 65 |

+

```bash

|

| 66 |

+

pip install -r requirements.txt

|

| 67 |

+

```

|

| 68 |

+

|

| 69 |

+

2. Run the Streamlit app:

|

| 70 |

+

```bash

|

| 71 |

+

streamlit run app.py

|

| 72 |

+

```

|

| 73 |

+

|

| 74 |

+

3. Interact with the FinBuddy assistant through the Streamlit interface by typing queries related to the financial metrics of MSFT, TSLA, and AAPL.

|

| 75 |

+

|

| 76 |

+

Current this doesn't support Visualization or table, will be added in next iteration.

|

| 77 |

+

|

| 78 |

+

## Conclusion

|

| 79 |

+

|

| 80 |

+

FinBuddy is a robust AI assistant designed to help users with financial queries. By combining rule-based logic and advanced AI models, it provides accurate and formatted responses to a variety of questions. The use of Streamlit ensures a user-friendly interface for seamless interaction.

|
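
Note on the "Configuring Groq API" step: the README asks the reader to create an API key but does not say where the key should go, and `src/main.py` in this commit passes a placeholder string directly to the client. One common alternative, sketched below, is to read the key from an environment variable. The helper name `load_groq_api_key` and the variable name `GROQ_API_KEY` are assumptions made for illustration; neither appears in this commit.

```python
import os


def load_groq_api_key(env=None, var_name="GROQ_API_KEY"):
    """Return the API key stored under var_name, or raise a clear error.

    `env` defaults to os.environ; passing a plain dict makes the helper
    easy to exercise without touching the real environment.
    The variable name is an assumption, not something this commit defines.
    """
    env = os.environ if env is None else env
    key = env.get(var_name, "").strip()
    if not key:
        raise RuntimeError(
            f"Set the {var_name} environment variable before starting the app."
        )
    return key
```

With something like this in place, the key could be exported in the shell before `streamlit run app.py` and passed to the client constructors instead of a literal, which would also keep it out of version control.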

Rule_Based_Sample_Response.png

ADDED

Smart_Chatbot.png

ADDED

Smart_Chatbot2.png

ADDED

app.py

ADDED

@@ -0,0 +1,48 @@
+import time
+import warnings
+
+import pandas as pd
+import streamlit as st
+from langchain.memory.chat_message_histories import StreamlitChatMessageHistory
+
+from src.main import get_response
+from src.rule_based import simple_chatbot
+from src.main import write_answer
+warnings.filterwarnings("ignore")
+
+
+# Streamlit app setup
+st.title("FinBuddy")
+st.write("I am an AI Assistant helping with answering questions related to financial metrics of MSFT, TSLA, and Apple.")
+
+msgs = StreamlitChatMessageHistory(key="special_app_key")
+
+for msg in msgs.messages:
+    st.chat_message(msg.type).write(msg.content)
+
+if prompt := st.chat_input():
+    start_time = time.time()
+    st.chat_message("human").write(prompt)
+    msgs.add_user_message(prompt)
+
+    with st.spinner("Waiting for response..."):
+        # Get response from chatbot
+        response_text = get_response(prompt)
+
+        # enable below one to get the plots or table
+        #response_text = write_answer(response_text)
+
+        # Rule based bot
+        #response_text = simple_chatbot(prompt)
+
+        if response_text:
+            if 'answer' in response_text:
+                st.chat_message("ai").write(response_text['answer'])
+                msgs.add_ai_message(response_text['answer'])
+            else:
+                for key, value in response_text.items():
+                    msgs.add_ai_message(str(response_text))
+            end_time = time.time()
+            st.write(f"Total time {end_time - start_time}")
+        else:
+            st.error("No valid response received from the AI.")
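
Note on response handling in `app.py`: `get_response` in `src/main.py` returns a dict on success but a plain string on failure, while the display code above treats the value as a dict (`'answer' in response_text`, then `response_text.items()`), so an error string would likely raise instead of being shown. A minimal sketch of one way to normalize both shapes into a single display string follows. The helper `response_to_text` and the constant `FALLBACK_MESSAGE` are hypothetical names, not part of this commit.

```python
FALLBACK_MESSAGE = "No valid response received from the AI."


def response_to_text(response):
    """Convert a dict-or-string response into one string for display.

    - dict with an "answer" key -> that answer
    - any other dict            -> its string form (e.g. table/chart payloads)
    - non-empty string          -> returned unchanged (e.g. error messages)
    - anything else             -> a fixed fallback message
    """
    if isinstance(response, dict):
        if "answer" in response:
            return str(response["answer"])
        return str(response)
    if isinstance(response, str) and response:
        return response
    return FALLBACK_MESSAGE
```

Under this approach the Streamlit code would call the helper once and write the result a single time, rather than adding the same message once per dictionary key.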

data/Financial_data.csv

ADDED

@@ -0,0 +1,10 @@
+Company,Year,Total Revenue(In Millions),Net Income(In Millions),Total Assets(In Millions),Total Liabilities(In Millions),Cash Flow from Operating Activities(In Millions)
+Microsoft,2023,211915.00,72361.00,411976.00,205753.00,87582.00
+Tesla,2023,96773.00,14974.00,106618.00,43009.00,13256.00
+Apple,2023,383285.00,96995.00,352583.00,290437.00,110543.00
+Microsoft,2022,198270.00,72738.00,364840.00,198298.00,89035.00
+Tesla,2022,81462.00,12587.00,82338.00,36440.00,14724.00
+Apple,2022,394328.00,99803.00,352755.00,302083.00,122151.00
+Microsoft,2021,168088.00,61271.00,333779.00,191791.00,76740.00
+Tesla,2021,53823.00,5644.00,62131.00,30548.00,11497.00
+Apple,2021,365817.00,94680.00,351002.00,287912.00,104038.00
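
Note on the dataset: to illustrate the kind of question the CSV agent is expected to answer from `Financial_data.csv`, the sketch below computes year-over-year revenue growth with the standard library only. It embeds a two-year, three-column excerpt of the file so that it runs on its own; the function name `revenue_growth` is hypothetical and is not part of this commit, which delegates such calculations to the LLM agent.

```python
import csv
import io

# Excerpt of data/Financial_data.csv (2022 and 2023 revenue only).
SAMPLE = """Company,Year,Total Revenue(In Millions)
Microsoft,2023,211915.00
Tesla,2023,96773.00
Apple,2023,383285.00
Microsoft,2022,198270.00
Tesla,2022,81462.00
Apple,2022,394328.00
"""


def revenue_growth(csv_text, start_year, end_year):
    """Return {company: percent change in total revenue} between two years."""
    revenue = {}
    for row in csv.DictReader(io.StringIO(csv_text)):
        key = (row["Company"], int(row["Year"]))
        revenue[key] = float(row["Total Revenue(In Millions)"])

    growth = {}
    for (company, year), end_value in revenue.items():
        if year != end_year:
            continue
        start_value = revenue.get((company, start_year))
        if start_value:  # skip companies with no (or zero) starting value
            growth[company] = (end_value - start_value) / start_value * 100
    return growth


if __name__ == "__main__":
    for company, pct in sorted(revenue_growth(SAMPLE, 2022, 2023).items()):
        print(f"{company}: {pct:.2f}%")
```

A deterministic helper of this kind could also serve as a cross-check on the agent's numeric answers.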

requirements.txt

ADDED

@@ -0,0 +1,75 @@
+aiohttp==3.9.5
+aiosignal==1.3.1
+altair==5.3.0
+annotated-types==0.7.0
+anyio==4.4.0
+attrs==23.2.0
+blinker==1.8.2
+cachetools==5.3.3
+certifi==2024.6.2
+charset-normalizer==3.3.2
+click==8.1.7
+colorama==0.4.6
+dataclasses-json==0.6.6
+distro==1.9.0
+frozenlist==1.4.1
+gitdb==4.0.11
+GitPython==3.1.43
+greenlet==3.0.3
+groq==0.8.0
+h11==0.14.0
+httpcore==1.0.5
+httpx==0.27.0
+idna==3.7
+Jinja2==3.1.4
+jsonpatch==1.33
+jsonpointer==2.4
+jsonschema==4.22.0
+jsonschema-specifications==2023.12.1
+langchain==0.2.1
+langchain-community==0.2.1
+langchain-core==0.2.3
+langchain-experimental==0.0.59
+langchain-groq==0.1.4
+langchain-text-splitters==0.2.0
+langsmith==0.1.68
+markdown-it-py==3.0.0
+MarkupSafe==2.1.5
+marshmallow==3.21.2
+mdurl==0.1.2
+multidict==6.0.5
+mypy-extensions==1.0.0
+numpy==1.26.4
+orjson==3.10.3
+packaging==23.2
+pandas==2.2.2
+pillow==10.3.0
+protobuf==4.25.3
+pyarrow==16.1.0
+pydantic==2.7.3
+pydantic_core==2.18.4
+pydeck==0.9.1
+Pygments==2.18.0
+python-dateutil==2.9.0.post0
+pytz==2024.1
+PyYAML==6.0.1
+referencing==0.35.1
+requests==2.32.3
+rich==13.7.1
+rpds-py==0.18.1
+six==1.16.0
+smmap==5.0.1
+sniffio==1.3.1
+SQLAlchemy==2.0.30
+streamlit==1.35.0
+tabulate==0.9.0
+tenacity==8.3.0
+toml==0.10.2
+toolz==0.12.1
+tornado==6.4
+typing-inspect==0.9.0
+typing_extensions==4.12.1
+tzdata==2024.1
+urllib3==2.2.1
+watchdog==4.0.1
+yarl==1.9.4

src/__init__.py

ADDED

File without changes

src/__pycache__/__init__.cpython-311.pyc

ADDED

Binary file (241 Bytes).

src/__pycache__/main.cpython-311.pyc

ADDED

Binary file (10.1 kB).

src/__pycache__/rule_based.cpython-311.pyc

ADDED

Binary file (1.03 kB).

src/main.py

ADDED

@@ -0,0 +1,209 @@
+import ast
+import json
+import streamlit as st
+import pandas as pd
+from langchain.agents.agent_types import AgentType
+from langchain_experimental.agents import create_csv_agent
+from langchain_groq import ChatGroq
+from langchain.memory import ChatMessageHistory
+from groq import Groq
+
+# Initialize Groq client and model
+client = Groq(api_key='gsk')
+MODEL = 'llama3-70b-8192'
+
+# Initialize chat history
+history = ChatMessageHistory()
+history.add_user_message("hi!")
+history.add_ai_message("whats up?")
+
+# Initialize language model
+llm = ChatGroq(
+    temperature=0,
+    groq_api_key='gsk...',
+    model_name='llama3-70b-8192'
+)
+
+# Create CSV agent
+agent = create_csv_agent(
+    llm,
+    r"Financial_data.csv",
+    verbose=True,
+    agent=AgentType.ZERO_SHOT_REACT_DESCRIPTION,
+    max_iterations=5,
+    handle_parsing_errors=True
+)
+
+# Functions to handle conversations
+def convo_agent(question, chat_history):
+    response = 'I was built to answer questions related to financials MSFT, TSLA and AAPL. Let me know if you have any questions on these.'
+    return {'answer': response}
+
+def csv_agent(question, chat_history):
+    prompt = (
+        """
+        Let's decode the way to respond to the queries. The responses depend on the type of information requested in the query.
+
+        Return just the data, don't take effort of creating plots, prints and all.
+        No explanation needed. Return just the dict
+        Always include units in response .
+
+        1. If the query requires a table, format your answer like this:
+        {"table": {"columns": ["column1", "column2", ...], "data": [[value1, value2, ...], [value1, value2, ...], ...]}}
+
+        2. For a bar chart, respond like this:
+        {"bar": {"columns": ["A", "B", "C", ...], "data": [25, 24, 10, ...]}}
+
+        3. If a line chart is more appropriate, your reply should look like this:
+        {"line": {"columns": ["A", "B", "C", ...], "data": [25, 24, 10, ...]}}
+
+        Note: We only accommodate two types of charts: "bar" and "line".
+
+        4. For a plain question that doesn't need a chart or table, your response should be:
+        {"answer": "Your answer goes here"}
+
+        For example:
+        {"answer": "The Product with the highest Orders is '15143Exfo'"}
+
+        5. If the answer is not known or available, respond with:
+        {"answer": "I do not know."}
+
+        Return all output as a string. Remember to encase all strings in the "columns" list and data list in double quotes.
+        For example: {"columns": ["Products", "Orders"], "data": [["51993Masc", 191], ["49631Foun", 152]]}
+
+        Return all the numerical values in int format only.
+        Now, let's tackle the query step by step. Here's the query for you to work on:"""
+        +
+        question
+    )
+
+
+
+    response = agent.run(prompt)
+    return ast.literal_eval(response)
+
+# Define tools and function mapping
+tool_convo_agent = {
+    "type": "function",
+    "function": {
+        "name": "convo_agent",
+        "description": "Answers questions like chit chat or simple friendly messages",
+        "parameters": {
+            "type": "object",
+            "properties": {
+                "question": {"type": "string", "description": "The user question"}
+            },
+            "required": ["question"],
+        },
+    },
+}
+
+tool_fin_agent = {
+    "type": "function",
+    "function": {
+        "name": "csv_agent",
+        "description": "Answers questions related to financial metrics of us Apple, Microsoft and Tesla.",
+        "parameters": {
+            "type": "object",
+            "properties": {
+                "question": {"type": "string", "description": "The user question"}
+            },
+            "required": ["question"],
+        },
+    },
+}
+
+tools = [tool_convo_agent, tool_fin_agent]
+
+function_map = {
+    "csv_agent": csv_agent,
+    "convo_agent": convo_agent
+}
+
+# Conversation handling
+def run_conversation(chat_history, user_prompt, tools):
+    final_prompt = {'chat_history':{chat_history}, 'question':{user_prompt}}
+    messages = [
+        {"role": "system", "content": "You are an efficient agent that determines which function to use in order to answer user question."},
+        {"role": "user", "content": str(final_prompt)},
+    ]
+
+    response = client.chat.completions.create(
+        model=MODEL,
+        messages=messages,
+        tools=tools,
+        tool_choice="auto",
+        max_tokens=4096
+    )
+
+    response_message = response.choices[0].message
+    tool_calls = response_message.tool_calls
+    return tool_calls
+
+def get_response(question):
+    try:
+        history.add_user_message(question)
+        chat_history = str(history.messages)
+        agents = run_conversation(chat_history, question, tools)
+        func_to_call = agents[0].function.name
+
+
+        if func_to_call in function_map:
+            question_to_run = ast.literal_eval(agents[0].function.arguments)['question']
+            result = function_map[func_to_call](question_to_run, chat_history)
+        else:
+            result = {"error": "Something went Wrong"}
+
+        if 'error' in result:
+            return "Something went wrong"
+        print(result)
+        history.add_ai_message(str(result))
+        return result
+
+    except Exception as e:
+        return f"Something went wrong: {e}"
+
+# Response writing for Streamlit
+def write_answer(response_dict):
+    if not isinstance(response_dict, dict):
+        return "Invalid response format received."
+
+    if "answer" in response_dict:
+        return response_dict
+
+    if "bar" in response_dict:
+        data = response_dict["bar"]
+        try:
+            df_data = {col: [x[i] if isinstance(x, list) else x for x in data['data']] for i, col in enumerate(data['columns'])}
+            df = pd.DataFrame(df_data)
+            df.set_index("Year", inplace=True)
+            st.bar_chart(df)
+            return {'bar': ''}
+        except ValueError:
+            st.error(f"Couldn't create DataFrame from data: {data}")
+
+    if "line" in response_dict:
+        data = response_dict["line"]
+        try:
+            df_data = {col: [x[i] for x in data['data']] for i, col in enumerate(data['columns'])}
+            df = pd.DataFrame(df_data)
+            df.set_index("Year", inplace=True)
+            st.line_chart(df)
+            return {'line': ''}
+        except ValueError:
+            st.error(f"Couldn't create DataFrame from data: {data}")
+
+    if "table" in response_dict:
+        data = response_dict["table"]
+        try:
+            clean_data = [
+                [int(x.replace(',', '')) if isinstance(x, str) and x.replace(',', '').isdigit() else x for x in row]
+                for row in data["data"]
+            ]
+            df = pd.DataFrame(clean_data, columns=data["columns"])
+            st.table(df)
+            return {'table': ''}
+        except ValueError as e:
+            st.error(f"Couldn't create DataFrame from data: {data}. Error: {e}")
+
+    return "No valid response type found."
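
Note on parsing model output in `src/main.py`: both `csv_agent` and `get_response` pass model-generated text to `ast.literal_eval`. That call only accepts Python literals, so a JSON reply containing `true`, `false` or `null`, or a reply that is plain prose, would raise and surface as "Something went wrong". A minimal, more tolerant sketch is shown below. The name `parse_agent_output` and the choice to wrap unparseable text in an `"answer"` dict are illustrative assumptions, not the commit's behaviour.

```python
import ast
import json


def parse_agent_output(raw):
    """Best-effort conversion of a model's text reply into a dict.

    Tries JSON first, then Python-literal syntax. If neither yields a
    dict, the raw text is wrapped as {"answer": <text>} so that callers
    always receive the same shape.
    """
    text = raw.strip()
    for parser in (json.loads, ast.literal_eval):
        try:
            parsed = parser(text)
        except (ValueError, SyntaxError, TypeError):
            continue  # this parser could not handle the text; try the next
        if isinstance(parsed, dict):
            return parsed
    return {"answer": text}
```

The same idea would apply to the tool-call arguments, which are typically delivered as a JSON string and so are arguably a better fit for `json.loads` than for `ast.literal_eval`.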

src/rule_based.py

ADDED

@@ -0,0 +1,13 @@
+def simple_chatbot(user_query):
+    if user_query == "What is the total revenue for MSFT 2023?":
+        return "The total revenue is $ 2,11,915 Millions"
+    elif user_query == "What is the total revenue for AAPL 2023?":
+        return "The total revenue is $ 3,83,285 Millions"
+    elif user_query == "What is the total revenue for TSLA 2023?":
+        return "The total revenue is $ 96,773 Millions"
+    elif user_query == "What is the total revenue for AAPL 2022?":
+        return "The total revenue is $ 3,94,328 Millions"
+    elif user_query == "What is the total revenue for TSLA 2022?":
+        return "The total revenue is $ 81,462 Millions"
+    else:
+        return "Sorry, I can only provide information on predefined queries."
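
Note on `simple_chatbot`: the function matches queries by exact string equality, so a change in case, spacing or punctuation falls through to the fallback message. One possible alternative, sketched below, keeps the same five company and year pairs in a lookup table and matches the query with a case-insensitive pattern. The names `REVENUE_MILLIONS`, `QUERY_PATTERN` and `lookup_revenue`, and the output formatting, are assumptions for illustration; the figures are the ones already present in this commit.

```python
import re

# Total revenue in millions of USD, for the same pairs simple_chatbot covers.
REVENUE_MILLIONS = {
    ("MSFT", 2023): 211915,
    ("AAPL", 2023): 383285,
    ("TSLA", 2023): 96773,
    ("AAPL", 2022): 394328,
    ("TSLA", 2022): 81462,
}

QUERY_PATTERN = re.compile(
    r"total revenue for (msft|aapl|tsla)\s+(\d{4})", re.IGNORECASE
)

FALLBACK = "Sorry, I can only provide information on predefined queries."


def lookup_revenue(user_query):
    """Answer a 'total revenue for <ticker> <year>' query from the table."""
    match = QUERY_PATTERN.search(user_query)
    if match:
        key = (match.group(1).upper(), int(match.group(2)))
        if key in REVENUE_MILLIONS:
            return f"The total revenue is ${REVENUE_MILLIONS[key]:,} million"
    return FALLBACK
```

Adding a new company or year would then mean adding one table entry (and, for a new ticker, extending the pattern) rather than another `elif` branch.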