Commit · 85d9fef

Parent(s): e82c53e

add easyocr

- ezocr/LICENSE +201 -0

- ezocr/README.md +49 -0

- ezocr/easyocrlite.egg-info/PKG-INFO +8 -0

- ezocr/easyocrlite.egg-info/SOURCES.txt +11 -0

- ezocr/easyocrlite.egg-info/dependency_links.txt +1 -0

- ezocr/easyocrlite.egg-info/requires.txt +5 -0

- ezocr/easyocrlite.egg-info/top_level.txt +1 -0

- ezocr/easyocrlite/__init__.py +1 -0

- ezocr/easyocrlite/model/__init__.py +1 -0

- ezocr/easyocrlite/model/craft.py +174 -0

- ezocr/easyocrlite/reader.py +272 -0

- ezocr/easyocrlite/types.py +5 -0

- ezocr/easyocrlite/utils/__init__.py +0 -0

- ezocr/easyocrlite/utils/detect_utils.py +327 -0

- ezocr/easyocrlite/utils/download_utils.py +92 -0

- ezocr/easyocrlite/utils/image_utils.py +93 -0

- ezocr/easyocrlite/utils/utils.py +43 -0

- ezocr/pics/chinese.jpg +0 -0

- ezocr/pics/easyocr_framework.jpeg +0 -0

- ezocr/pics/example.png +0 -0

- ezocr/pics/example2.png +0 -0

- ezocr/pics/example3.png +0 -0

- ezocr/pics/french.jpg +0 -0

- ezocr/pics/japanese.jpg +0 -0

- ezocr/pics/jietu.png +0 -0

- ezocr/pics/korean.png +0 -0

- ezocr/pics/lihe.png +0 -0

- ezocr/pics/longjing.jpg +0 -0

- ezocr/pics/paibian.jpeg +0 -0

- ezocr/pics/roubing.png +0 -0

- ezocr/pics/shupai.png +0 -0

- ezocr/pics/thai.jpg +0 -0

- ezocr/pics/wenzi.png +0 -0

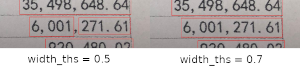

- ezocr/pics/width_ths.png +0 -0

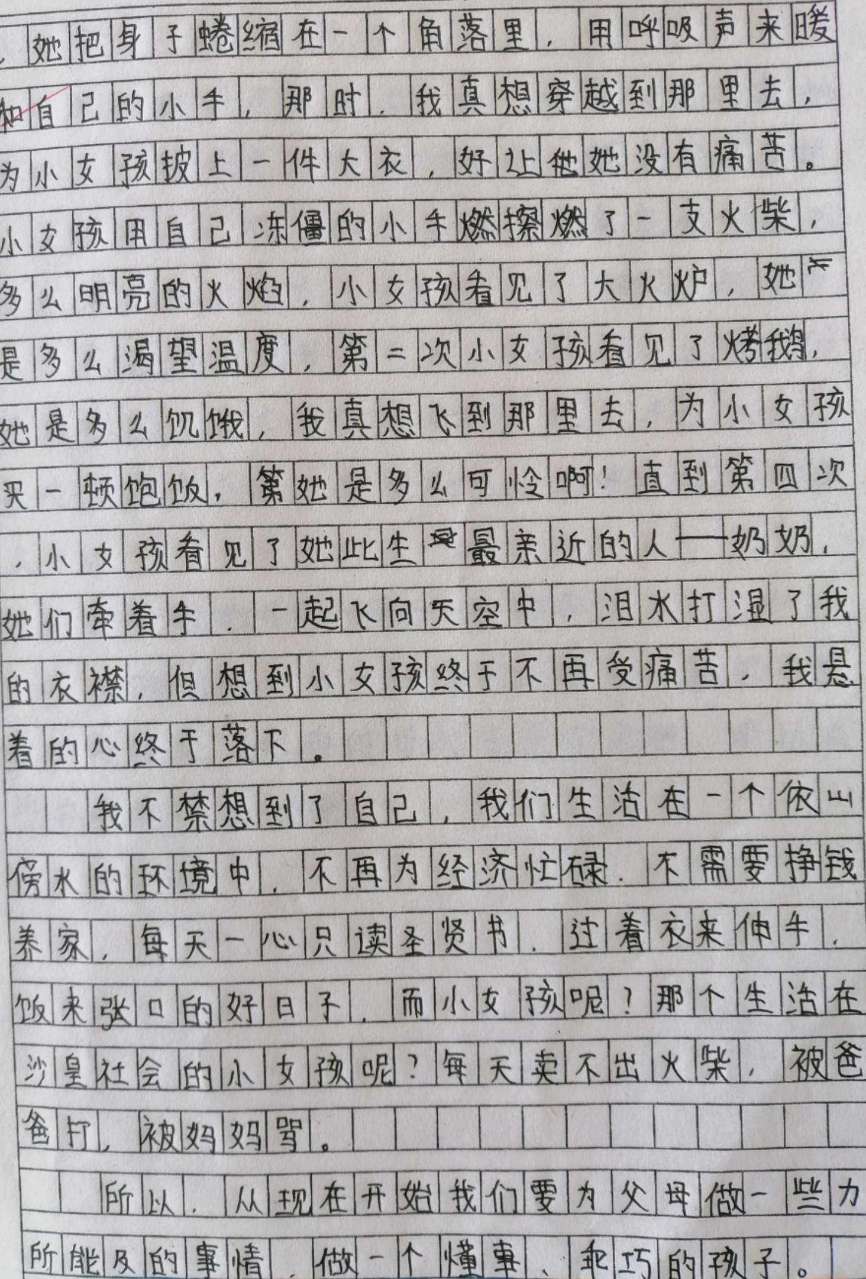

- ezocr/pics/zuowen.jpg +0 -0

- ezocr/requirements.txt +5 -0

- ezocr/setup.py +21 -0

ezocr/LICENSE

ADDED

@@ -0,0 +1,201 @@
+Apache License
+Version 2.0, January 2004
+http://www.apache.org/licenses/
+
+TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
+
+1. Definitions.
+
+"License" shall mean the terms and conditions for use, reproduction,
+and distribution as defined by Sections 1 through 9 of this document.
+
+"Licensor" shall mean the copyright owner or entity authorized by
+the copyright owner that is granting the License.
+
+"Legal Entity" shall mean the union of the acting entity and all
+other entities that control, are controlled by, or are under common
+control with that entity. For the purposes of this definition,
+"control" means (i) the power, direct or indirect, to cause the
+direction or management of such entity, whether by contract or
+otherwise, or (ii) ownership of fifty percent (50%) or more of the
+outstanding shares, or (iii) beneficial ownership of such entity.
+
+"You" (or "Your") shall mean an individual or Legal Entity
+exercising permissions granted by this License.
+
+"Source" form shall mean the preferred form for making modifications,
+including but not limited to software source code, documentation
+source, and configuration files.
+
+"Object" form shall mean any form resulting from mechanical
+transformation or translation of a Source form, including but
+not limited to compiled object code, generated documentation,
+and conversions to other media types.
+
+"Work" shall mean the work of authorship, whether in Source or
+Object form, made available under the License, as indicated by a
+copyright notice that is included in or attached to the work
+(an example is provided in the Appendix below).
+
+"Derivative Works" shall mean any work, whether in Source or Object
+form, that is based on (or derived from) the Work and for which the
+editorial revisions, annotations, elaborations, or other modifications
+represent, as a whole, an original work of authorship. For the purposes
+of this License, Derivative Works shall not include works that remain
+separable from, or merely link (or bind by name) to the interfaces of,
+the Work and Derivative Works thereof.
+
+"Contribution" shall mean any work of authorship, including
+the original version of the Work and any modifications or additions
+to that Work or Derivative Works thereof, that is intentionally
+submitted to Licensor for inclusion in the Work by the copyright owner
+or by an individual or Legal Entity authorized to submit on behalf of
+the copyright owner. For the purposes of this definition, "submitted"
+means any form of electronic, verbal, or written communication sent
+to the Licensor or its representatives, including but not limited to
+communication on electronic mailing lists, source code control systems,
+and issue tracking systems that are managed by, or on behalf of, the
+Licensor for the purpose of discussing and improving the Work, but
+excluding communication that is conspicuously marked or otherwise
+designated in writing by the copyright owner as "Not a Contribution."
+
+"Contributor" shall mean Licensor and any individual or Legal Entity
+on behalf of whom a Contribution has been received by Licensor and
+subsequently incorporated within the Work.
+
+2. Grant of Copyright License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+copyright license to reproduce, prepare Derivative Works of,
+publicly display, publicly perform, sublicense, and distribute the
+Work and such Derivative Works in Source or Object form.
+
+3. Grant of Patent License. Subject to the terms and conditions of
+this License, each Contributor hereby grants to You a perpetual,
+worldwide, non-exclusive, no-charge, royalty-free, irrevocable
+(except as stated in this section) patent license to make, have made,
+use, offer to sell, sell, import, and otherwise transfer the Work,
+where such license applies only to those patent claims licensable
+by such Contributor that are necessarily infringed by their
+Contribution(s) alone or by combination of their Contribution(s)
+with the Work to which such Contribution(s) was submitted. If You
+institute patent litigation against any entity (including a
+cross-claim or counterclaim in a lawsuit) alleging that the Work
+or a Contribution incorporated within the Work constitutes direct
+or contributory patent infringement, then any patent licenses
+granted to You under this License for that Work shall terminate
+as of the date such litigation is filed.
+
+4. Redistribution. You may reproduce and distribute copies of the
+Work or Derivative Works thereof in any medium, with or without
+modifications, and in Source or Object form, provided that You
+meet the following conditions:
+
+(a) You must give any other recipients of the Work or
+Derivative Works a copy of this License; and
+
+(b) You must cause any modified files to carry prominent notices
+stating that You changed the files; and
+
+(c) You must retain, in the Source form of any Derivative Works
+that You distribute, all copyright, patent, trademark, and
+attribution notices from the Source form of the Work,
+excluding those notices that do not pertain to any part of
+the Derivative Works; and
+
+(d) If the Work includes a "NOTICE" text file as part of its
+distribution, then any Derivative Works that You distribute must
+include a readable copy of the attribution notices contained
+within such NOTICE file, excluding those notices that do not
+pertain to any part of the Derivative Works, in at least one
+of the following places: within a NOTICE text file distributed
+as part of the Derivative Works; within the Source form or
+documentation, if provided along with the Derivative Works; or,
+within a display generated by the Derivative Works, if and
+wherever such third-party notices normally appear. The contents
+of the NOTICE file are for informational purposes only and
+do not modify the License. You may add Your own attribution
+notices within Derivative Works that You distribute, alongside
+or as an addendum to the NOTICE text from the Work, provided
+that such additional attribution notices cannot be construed
+as modifying the License.
+
+You may add Your own copyright statement to Your modifications and
+may provide additional or different license terms and conditions
+for use, reproduction, or distribution of Your modifications, or
+for any such Derivative Works as a whole, provided Your use,
+reproduction, and distribution of the Work otherwise complies with
+the conditions stated in this License.
+
+5. Submission of Contributions. Unless You explicitly state otherwise,
+any Contribution intentionally submitted for inclusion in the Work
+by You to the Licensor shall be under the terms and conditions of
+this License, without any additional terms or conditions.
+Notwithstanding the above, nothing herein shall supersede or modify
+the terms of any separate license agreement you may have executed
+with Licensor regarding such Contributions.
+
+6. Trademarks. This License does not grant permission to use the trade
+names, trademarks, service marks, or product names of the Licensor,
+except as required for reasonable and customary use in describing the
+origin of the Work and reproducing the content of the NOTICE file.
+
+7. Disclaimer of Warranty. Unless required by applicable law or
+agreed to in writing, Licensor provides the Work (and each
+Contributor provides its Contributions) on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
+implied, including, without limitation, any warranties or conditions
+of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
+PARTICULAR PURPOSE. You are solely responsible for determining the
+appropriateness of using or redistributing the Work and assume any
+risks associated with Your exercise of permissions under this License.
+
+8. Limitation of Liability. In no event and under no legal theory,
+whether in tort (including negligence), contract, or otherwise,
+unless required by applicable law (such as deliberate and grossly
+negligent acts) or agreed to in writing, shall any Contributor be
+liable to You for damages, including any direct, indirect, special,
+incidental, or consequential damages of any character arising as a
+result of this License or out of the use or inability to use the
+Work (including but not limited to damages for loss of goodwill,
+work stoppage, computer failure or malfunction, or any and all
+other commercial damages or losses), even if such Contributor
+has been advised of the possibility of such damages.
+
+9. Accepting Warranty or Additional Liability. While redistributing
+the Work or Derivative Works thereof, You may choose to offer,
+and charge a fee for, acceptance of support, warranty, indemnity,
+or other liability obligations and/or rights consistent with this
+License. However, in accepting such obligations, You may act only
+on Your own behalf and on Your sole responsibility, not on behalf
+of any other Contributor, and only if You agree to indemnify,
+defend, and hold each Contributor harmless for any liability
+incurred by, or claims asserted against, such Contributor by reason
+of your accepting any such warranty or additional liability.
+
+END OF TERMS AND CONDITIONS
+
+APPENDIX: How to apply the Apache License to your work.
+
+To apply the Apache License to your work, attach the following
+boilerplate notice, with the fields enclosed by brackets "[]"
+replaced with your own identifying information. (Don't include
+the brackets!) The text should be enclosed in the appropriate
+comment syntax for the file format. We also recommend that a
+file or class name and description of purpose be included on the
+same "printed page" as the copyright notice for easier
+identification within third-party archives.
+
+Copyright [yyyy] [name of copyright owner]
+
+Licensed under the Apache License, Version 2.0 (the "License");
+you may not use this file except in compliance with the License.
+You may obtain a copy of the License at
+
+http://www.apache.org/licenses/LICENSE-2.0
+
+Unless required by applicable law or agreed to in writing, software
+distributed under the License is distributed on an "AS IS" BASIS,
+WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
+See the License for the specific language governing permissions and
+limitations under the License.

ezocr/README.md

ADDED

|

@@ -0,0 +1,49 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

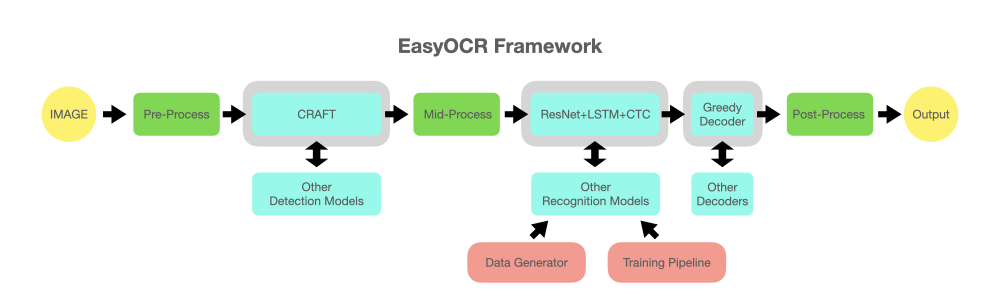

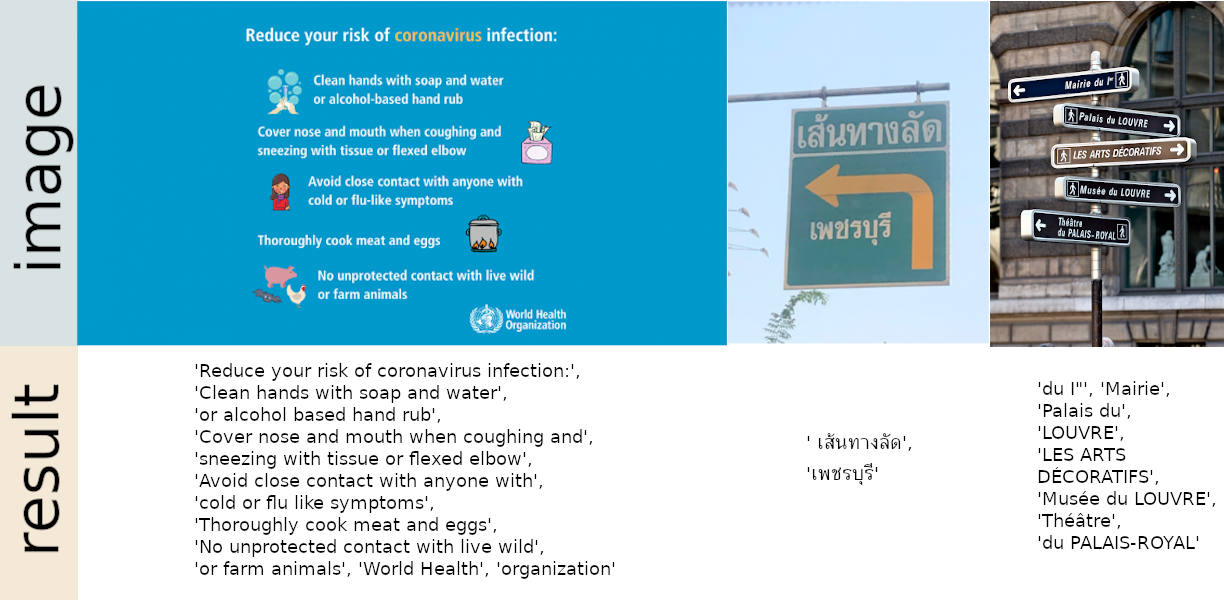

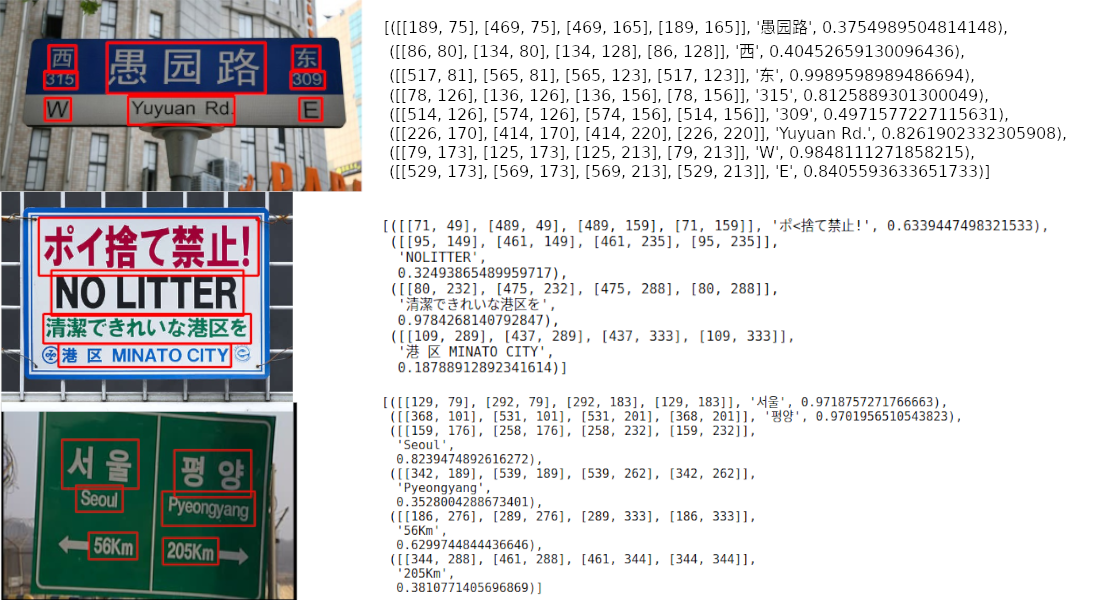

# EasyOCR Lite

|

| 2 |

+

|

| 3 |

+

从EasyOCR提取文本定位有关代码,进一步适配中文,修正缺陷

|

| 4 |

+

|

| 5 |

+

## 安装

|

| 6 |

+

|

| 7 |

+

Python版本至少为3.8。

|

| 8 |

+

|

| 9 |

+

|

| 10 |

+

首先按照PyTorch官方说明安装PyTorch。

|

| 11 |

+

|

| 12 |

+

```

|

| 13 |

+

pip install -e .

|

| 14 |

+

```

|

| 15 |

+

|

| 16 |

+

## 使用

|

| 17 |

+

|

| 18 |

+

``` python3

|

| 19 |

+

from easyocrlite import ReaderLite

|

| 20 |

+

|

| 21 |

+

reader = ReaderLite()

|

| 22 |

+

results = reader.process('my_awesome_handwriting.png')

|

| 23 |

+

```

|

| 24 |

+

|

| 25 |

+

返回的内容为边界框和对应的图像区域的列表。

|

| 26 |

+

其它说明见[demo](./demo.ipynb)。

|

| 27 |

+

|

| 28 |

+

|

| 29 |

+

## 致谢

|

| 30 |

+

|

| 31 |

+

基于[EasyOCR](https://github.com/JaidedAI/EasyOCR)修改实现。以下为EasyOCR致谢:

|

| 32 |

+

|

| 33 |

+

This project is based on research and code from several papers and open-source repositories.

|

| 34 |

+

|

| 35 |

+

All deep learning execution is based on [Pytorch](https://pytorch.org). :heart:

|

| 36 |

+

|

| 37 |

+

Detection execution uses the CRAFT algorithm from this [official repository](https://github.com/clovaai/CRAFT-pytorch) and their [paper](https://arxiv.org/abs/1904.01941) (Thanks @YoungminBaek from [@clovaai](https://github.com/clovaai)). We also use their pretrained model. Training script is provided by [@gmuffiness](https://github.com/gmuffiness).

|

| 38 |

+

|

| 39 |

+

The recognition model is a CRNN ([paper](https://arxiv.org/abs/1507.05717)). It is composed of 3 main components: feature extraction (we are currently using [Resnet](https://arxiv.org/abs/1512.03385)) and VGG, sequence labeling ([LSTM](https://www.bioinf.jku.at/publications/older/2604.pdf)) and decoding ([CTC](https://www.cs.toronto.edu/~graves/icml_2006.pdf)). The training pipeline for recognition execution is a modified version of the [deep-text-recognition-benchmark](https://github.com/clovaai/deep-text-recognition-benchmark) framework. (Thanks [@ku21fan](https://github.com/ku21fan) from [@clovaai](https://github.com/clovaai)) This repository is a gem that deserves more recognition.

|

| 40 |

+

|

| 41 |

+

Beam search code is based on this [repository](https://github.com/githubharald/CTCDecoder) and his [blog](https://towardsdatascience.com/beam-search-decoding-in-ctc-trained-neural-networks-5a889a3d85a7). (Thanks [@githubharald](https://github.com/githubharald))

|

| 42 |

+

|

| 43 |

+

Data synthesis is based on [TextRecognitionDataGenerator](https://github.com/Belval/TextRecognitionDataGenerator). (Thanks [@Belval](https://github.com/Belval))

|

| 44 |

+

|

| 45 |

+

And a good read about CTC from distill.pub [here](https://distill.pub/2017/ctc/).

|

| 46 |

+

|

| 47 |

+

|

| 48 |

+

## 许可证 (注意!)

|

| 49 |

+

Apache 2.0

|
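The README above says that `process` returns a list of bounding boxes together with their image regions. The following is a minimal sketch of how such a list might be post-processed. It assumes each entry is a `(box, region)` pair and that each box is a sequence of `(x, y)` corner points; that layout, the helpers `bounds` and `reading_order`, and the stand-in data are all assumptions made for illustration and are not taken from the package.

```python
def bounds(box):
    """Axis-aligned (left, top, right, bottom) of a polygon given as (x, y) points."""
    xs = [x for x, _ in box]
    ys = [y for _, y in box]
    return min(xs), min(ys), max(xs), max(ys)


def reading_order(results):
    """Sort (box, region) pairs top-to-bottom, then left-to-right."""
    return sorted(results, key=lambda item: (bounds(item[0])[1], bounds(item[0])[0]))


# Stand-in for the output of `reader.process(...)` (assumed layout).
# The regions are placeholder strings rather than real image crops.
fake_results = [
    ([(120, 40), (200, 40), (200, 70), (120, 70)], "region-b"),
    ([(10, 40), (90, 40), (90, 70), (10, 70)], "region-a"),
    ([(10, 5), (150, 5), (150, 30), (10, 30)], "title"),
]

for box, region in reading_order(fake_results):
    print(bounds(box), region)
```

Sorting on the top edge alone is fragile for slightly skewed text lines, so a real pipeline would probably group boxes into rows with some tolerance first.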

ezocr/easyocrlite.egg-info/PKG-INFO

ADDED

@@ -0,0 +1,8 @@
+Metadata-Version: 2.1
+Name: easyocrlite
+Version: 0.0.1
+License: Apache License 2.0
+Keywords: ocr optical character recognition deep learning neural network
+Classifier: Development Status :: 5 - Production/Stable
+Requires-Python: >=3.7
+License-File: LICENSE

ezocr/easyocrlite.egg-info/SOURCES.txt

ADDED

@@ -0,0 +1,11 @@
+LICENSE
+README.md
+setup.py
+easyocrlite/__init__.py
+easyocrlite/reader.py
+easyocrlite/types.py
+easyocrlite.egg-info/PKG-INFO
+easyocrlite.egg-info/SOURCES.txt
+easyocrlite.egg-info/dependency_links.txt
+easyocrlite.egg-info/requires.txt
+easyocrlite.egg-info/top_level.txt

ezocr/easyocrlite.egg-info/dependency_links.txt

ADDED

@@ -0,0 +1 @@
+

ezocr/easyocrlite.egg-info/requires.txt

ADDED

@@ -0,0 +1,5 @@
+torch
+torchvision>=0.5
+opencv-python-headless<=4.5.4.60
+numpy
+Pillow
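The requirements above mix a lower bound (`torchvision>=0.5`) with an upper bound (`opencv-python-headless<=4.5.4.60`). The following is a minimal sketch of how such dotted versions compare, assuming purely numeric components. The helpers `parse_version` and `satisfies` are hypothetical names for illustration; pip itself relies on the `packaging` library, which also handles pre-releases and trailing zeros that this sketch ignores.

```python
import operator

# Hypothetical helpers for illustration only; not how pip resolves specifiers.
_OPS = {"<=": operator.le, ">=": operator.ge, "==": operator.eq}


def parse_version(text):
    """Turn a purely numeric dotted version such as '4.5.4.60' into a tuple of ints."""
    return tuple(int(part) for part in text.split("."))


def satisfies(installed, spec):
    """Check an installed version against a single specifier such as '<=4.5.4.60'."""
    for symbol, compare in _OPS.items():
        if spec.startswith(symbol):
            return compare(parse_version(installed), parse_version(spec[len(symbol):]))
    raise ValueError(f"unsupported specifier: {spec}")


# Numeric comparison orders 0.13 after 0.5; comparing the raw strings would not.
print(satisfies("0.13", ">=0.5"))
print(satisfies("4.6.0.66", "<=4.5.4.60"))
```

The same numeric ordering is why version checks in code are usually written with a version parser rather than with plain string comparison.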

ezocr/easyocrlite.egg-info/top_level.txt

ADDED

@@ -0,0 +1 @@
+easyocrlite

ezocr/easyocrlite/__init__.py

ADDED

@@ -0,0 +1 @@
+from easyocrlite.reader import ReaderLite

ezocr/easyocrlite/model/__init__.py

ADDED

@@ -0,0 +1 @@
+from .craft import CRAFT

ezocr/easyocrlite/model/craft.py

ADDED

@@ -0,0 +1,174 @@
+"""
+Copyright (c) 2019-present NAVER Corp.
+MIT License
+"""
+from __future__ import annotations
+
+from collections import namedtuple
+from typing import Iterable, Tuple
+
+import torch
+import torch.nn as nn
+import torch.nn.functional as F
+import torchvision
+from packaging import version
+from torchvision import models
+
+VGGOutputs = namedtuple(
+    "VggOutputs", ["fc7", "relu5_3", "relu4_3", "relu3_2", "relu2_2"]
+)
+
+def init_weights(modules: Iterable[nn.Module]):
+    for m in modules:
+        if isinstance(m, nn.Conv2d):
+            nn.init.xavier_uniform_(m.weight)
+            if m.bias is not None:
+                nn.init.zeros_(m.bias)
+        elif isinstance(m, nn.BatchNorm2d):
+            nn.init.constant_(m.weight, 1.0)
+            nn.init.zeros_(m.bias)
+        elif isinstance(m, nn.Linear):
+            nn.init.normal_(m.weight, 0, 0.01)
+            nn.init.zeros_(m.bias)
+
+
+class VGG16_BN(nn.Module):
+    def __init__(self, pretrained: bool=True, freeze: bool=True):
+        super().__init__()
+        if version.parse(torchvision.__version__) >= version.parse("0.13"):
+            vgg_pretrained_features = models.vgg16_bn(
+                weights=models.VGG16_BN_Weights.DEFAULT if pretrained else None
+            ).features
+        else: # torchvision.__version__ < 0.13
+            models.vgg.model_urls["vgg16_bn"] = models.vgg.model_urls[
+                "vgg16_bn"
+            ].replace("https://", "http://")
+            vgg_pretrained_features = models.vgg16_bn(pretrained=pretrained).features
+
+        self.slice1 = torch.nn.Sequential()
+        self.slice2 = torch.nn.Sequential()
+        self.slice3 = torch.nn.Sequential()
+        self.slice4 = torch.nn.Sequential()
+        self.slice5 = torch.nn.Sequential()
+        for x in range(12): # conv2_2
+            self.slice1.add_module(str(x), vgg_pretrained_features[x])
+        for x in range(12, 19): # conv3_3
+            self.slice2.add_module(str(x), vgg_pretrained_features[x])
+        for x in range(19, 29): # conv4_3
+            self.slice3.add_module(str(x), vgg_pretrained_features[x])
+        for x in range(29, 39): # conv5_3
+            self.slice4.add_module(str(x), vgg_pretrained_features[x])
+
+        # fc6, fc7 without atrous conv
+        self.slice5 = torch.nn.Sequential(
+            nn.MaxPool2d(kernel_size=3, stride=1, padding=1),
+            nn.Conv2d(512, 1024, kernel_size=3, padding=6, dilation=6),
+            nn.Conv2d(1024, 1024, kernel_size=1),
+        )
+
+        if not pretrained:
+            init_weights(self.slice1.modules())
+            init_weights(self.slice2.modules())
+            init_weights(self.slice3.modules())
+            init_weights(self.slice4.modules())
+
+        init_weights(self.slice5.modules()) # no pretrained model for fc6 and fc7
+
+        if freeze:
+            for param in self.slice1.parameters(): # only first conv
+                param.requires_grad = False
+
+    def forward(self, x: torch.Tensor) -> VGGOutputs:
+        h = self.slice1(x)
+        h_relu2_2 = h
+        h = self.slice2(h)
+        h_relu3_2 = h
+        h = self.slice3(h)
+        h_relu4_3 = h
+        h = self.slice4(h)
+        h_relu5_3 = h
+        h = self.slice5(h)
+        h_fc7 = h
+
+        out = VGGOutputs(h_fc7, h_relu5_3, h_relu4_3, h_relu3_2, h_relu2_2)
+        return out
+
+
+class DoubleConv(nn.Module):
+    def __init__(self, in_ch: int, mid_ch: int, out_ch: int):
+        super().__init__()
+        self.conv = nn.Sequential(
+            nn.Conv2d(in_ch + mid_ch, mid_ch, kernel_size=1),
+            nn.BatchNorm2d(mid_ch),
+            nn.ReLU(inplace=True),
+            nn.Conv2d(mid_ch, out_ch, kernel_size=3, padding=1),
+            nn.BatchNorm2d(out_ch),
+            nn.ReLU(inplace=True),
+        )
+
+    def forward(self, x: torch.Tensor) -> torch.Tensor:
+        x = self.conv(x)
+        return x
+
+
+class CRAFT(nn.Module):
+    def __init__(self, pretrained: bool=False, freeze: bool=False):
+        super(CRAFT, self).__init__()
+
+        """ Base network """
+        self.basenet = VGG16_BN(pretrained, freeze)
+
+        """ U network """
+        self.upconv1 = DoubleConv(1024, 512, 256)
+        self.upconv2 = DoubleConv(512, 256, 128)
+        self.upconv3 = DoubleConv(256, 128, 64)
+        self.upconv4 = DoubleConv(128, 64, 32)
+
+        num_class = 2
+        self.conv_cls = nn.Sequential(
+            nn.Conv2d(32, 32, kernel_size=3, padding=1),
+            nn.ReLU(inplace=True),
+            nn.Conv2d(32, 32, kernel_size=3, padding=1),
+            nn.ReLU(inplace=True),
+            nn.Conv2d(32, 16, kernel_size=3, padding=1),
+            nn.ReLU(inplace=True),
+            nn.Conv2d(16, 16, kernel_size=1),
+            nn.ReLU(inplace=True),
+            nn.Conv2d(16, num_class, kernel_size=1),
+        )
+
+        init_weights(self.upconv1.modules())
+        init_weights(self.upconv2.modules())
+        init_weights(self.upconv3.modules())
+        init_weights(self.upconv4.modules())
+        init_weights(self.conv_cls.modules())
+
+    def forward(self, x: torch.Tensor) -> Tuple[torch.Tensor, torch.Tensor]:
+        """Base network"""
+        sources = self.basenet(x)
+
+        """ U network """
+        y = torch.cat([sources[0], sources[1]], dim=1)
+        y = self.upconv1(y)
+
+        y = F.interpolate(
+            y, size=sources[2].size()[2:], mode="bilinear", align_corners=False
+        )
+        y = torch.cat([y, sources[2]], dim=1)
+        y = self.upconv2(y)
+
+        y = F.interpolate(
+            y, size=sources[3].size()[2:], mode="bilinear", align_corners=False
+        )
+        y = torch.cat([y, sources[3]], dim=1)
+        y = self.upconv3(y)
+
+        y = F.interpolate(
+            y, size=sources[4].size()[2:], mode="bilinear", align_corners=False
+        )
+        y = torch.cat([y, sources[4]], dim=1)
+        feature = self.upconv4(y)
+
+        y = self.conv_cls(feature)
+
+        return y.permute(0, 2, 3, 1), feature
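The decoder in `CRAFT.forward` above repeatedly concatenates an upsampled feature map with a VGG skip connection and feeds the result to a `DoubleConv`, whose first convolution expects `in_ch + mid_ch` channels. The following is a minimal sketch that traces only the channel arithmetic in plain Python, without importing torch. The tap channel counts in `SOURCES` are an assumption derived from the standard torchvision VGG16-BN layout and from the `Conv2d` arguments in `slice5`; `trace_decoder_channels` is a hypothetical helper, not part of the package.

```python
# Channel counts of the five backbone taps, in the order of the VGGOutputs fields
# (assumed from the standard VGG16-BN layout and the slice5 definition).
SOURCES = {"fc7": 1024, "relu5_3": 512, "relu4_3": 512, "relu3_2": 256, "relu2_2": 128}

# (in_ch, mid_ch, out_ch) as passed to each DoubleConv in CRAFT.__init__.
UPCONVS = [(1024, 512, 256), (512, 256, 128), (256, 128, 64), (128, 64, 32)]


def trace_decoder_channels():
    """Return the (input, output) channel counts seen by each upconv stage."""
    taps = list(SOURCES.values())
    y = taps[0]  # the decoder starts from the fc7 feature map
    trace = []
    for (in_ch, mid_ch, out_ch), skip in zip(UPCONVS, taps[1:]):
        concat = y + skip  # torch.cat(..., dim=1) adds channel counts
        # The first 1x1 conv in DoubleConv expects in_ch + mid_ch input channels.
        assert concat == in_ch + mid_ch, (concat, in_ch, mid_ch)
        trace.append((concat, out_ch))
        y = out_ch
    return trace


for stage, (cin, cout) in enumerate(trace_decoder_channels(), start=1):
    print(f"upconv{stage}: {cin} -> {cout}")
```

Under these assumptions every concatenation matches the `in_ch + mid_ch` that the corresponding `DoubleConv` was built with, and the final 32-channel feature map is what `conv_cls` reduces to `num_class = 2` score maps.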

ezocr/easyocrlite/reader.py

ADDED

|

@@ -0,0 +1,272 @@


+from __future__ import annotations
+
+import logging
+import os
+from pathlib import Path
+from typing import Tuple
+
+import cv2
+import numpy as np
+import torch
+from PIL import Image, ImageEnhance
+
+from easyocrlite.model import CRAFT
+
+from easyocrlite.utils.download_utils import prepare_model
+from easyocrlite.utils.image_utils import (
+    adjust_result_coordinates,
+    boxed_transform,
+    normalize_mean_variance,
+    resize_aspect_ratio,
+)
+from easyocrlite.utils.detect_utils import (
+    extract_boxes,
+    extract_regions_from_boxes,
+    box_expand,
+    greedy_merge,
+)
+from easyocrlite.types import BoxTuple, RegionTuple
+import easyocrlite.utils.utils as utils
+
+logger = logging.getLogger(__name__)
+
+MODULE_PATH = (
+    os.environ.get("EASYOCR_MODULE_PATH")
+    or os.environ.get("MODULE_PATH")
+    or os.path.expanduser("~/.EasyOCR/")
+)
+
+
+class ReaderLite(object):
+    def __init__(
+        self,
+        gpu=True,
+        model_storage_directory=None,
+        download_enabled=True,
+        verbose=True,
+        quantize=True,
+        cudnn_benchmark=False,
+    ):
+
+        self.verbose = verbose
+
+        model_storage_directory = Path(
+            model_storage_directory
+            if model_storage_directory
+            else MODULE_PATH + "/model"
+        )
+        self.detector_path = prepare_model(
+            model_storage_directory, download_enabled, verbose
+        )
+
+        self.quantize = quantize
+        self.cudnn_benchmark = cudnn_benchmark
+        if gpu is False:
+            self.device = "cpu"
+            if verbose:
+                logger.warning(
+                    "Using CPU. Note: This module is much faster with a GPU."
+                )
+        elif not torch.cuda.is_available():
+            self.device = "cpu"
+            if verbose:
+                logger.warning(
+                    "CUDA not available - defaulting to CPU. Note: This module is much faster with a GPU."
+                )
+        elif gpu is True:
+            self.device = "cuda"
+        else:
+            self.device = gpu
+
+        self.detector = CRAFT()
+
+        state_dict = torch.load(self.detector_path, map_location=self.device)
+        if list(state_dict.keys())[0].startswith("module"):
+            state_dict = {k[7:]: v for k, v in state_dict.items()}
+
+        self.detector.load_state_dict(state_dict)
+
+        if self.device == "cpu":
+            if self.quantize:
+                try:
+                    torch.quantization.quantize_dynamic(
+                        self.detector, dtype=torch.qint8, inplace=True
+                    )
+                except:
+                    pass
+        else:
+            self.detector = torch.nn.DataParallel(self.detector).to(self.device)
+            import torch.backends.cudnn as cudnn
+
+            cudnn.benchmark = self.cudnn_benchmark
+
+        self.detector.eval()
+
+    def process(
+        self,
+        image_path: str,
+        max_size: int = 960,
+        expand_ratio: float = 1.0,
+        sharp: float = 1.0,
+        contrast: float = 1.0,
+        text_confidence: float = 0.7,
+        text_threshold: float = 0.4,
+        link_threshold: float = 0.4,
+        slope_ths: float = 0.1,
+        ratio_ths: float = 0.5,
+        center_ths: float = 0.5,
+        dim_ths: float = 0.5,
+        space_ths: float = 1.0,
+        add_margin: float = 0.1,
+        min_size: float = 0.01,
+    ) -> Tuple[BoxTuple, list[np.ndarray]]:
+
+        image = Image.open(image_path).convert('RGB')
+
+        tensor, inverse_ratio = self.preprocess(
+            image, max_size, expand_ratio, sharp, contrast
+        )
+
+        scores = self.forward_net(tensor)
+
+        boxes = self.detect(scores, text_confidence, text_threshold, link_threshold)
+
+        image = np.array(image)
+        region_list, box_list = self.postprocess(
+            image,
+            boxes,
+            inverse_ratio,
+            slope_ths,
+            ratio_ths,
+            center_ths,
+            dim_ths,
+            space_ths,
+            add_margin,
+            min_size,
+        )
+
+        # get cropped image
+        image_list = []
+        for region in region_list:
+            x_min, x_max, y_min, y_max = region
+            crop_img = image[y_min:y_max, x_min:x_max, :]
+            image_list.append(
+                (
+                    ((x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max)),
+                    crop_img,
+                )
+            )
+
+        for box in box_list:
+            transformed_img = boxed_transform(image, np.array(box, dtype="float32"))
+            image_list.append((box, transformed_img))
+
+        # sort by top left point
+        image_list = sorted(image_list, key=lambda x: (x[0][0][1], x[0][0][0]))
+
+        return image_list
+
+    def preprocess(
+        self,
+        image: Image.Image,
+        max_size: int,
+        expand_ratio: float = 1.0,
+        sharp: float = 1.0,
+        contrast: float = 1.0,
+    ) -> torch.Tensor:
+        if sharp != 1:
+            enhancer = ImageEnhance.Sharpness(image)
+            image = enhancer.enhance(sharp)
+        if contrast != 1:
+            enhancer = ImageEnhance.Contrast(image)
+            image = enhancer.enhance(contrast)
+
+        image = np.array(image)
+
+        image, target_ratio = resize_aspect_ratio(
+            image, max_size, interpolation=cv2.INTER_LINEAR, expand_ratio=expand_ratio
+        )
+        inverse_ratio = 1 / target_ratio
+
+        x = np.transpose(normalize_mean_variance(image), (2, 0, 1))
+
+        x = torch.tensor(np.array([x]), device=self.device)
+
+        return x, inverse_ratio
+
+    @torch.no_grad()
+    def forward_net(self, tensor: torch.Tensor) -> torch.Tensor:
+        scores, feature = self.detector(tensor)
+        return scores[0]
+
+    def detect(
+        self,
+        scores: torch.Tensor,
+        text_confidence: float = 0.7,
+        text_threshold: float = 0.4,
+        link_threshold: float = 0.4,
+    ) -> list[BoxTuple]:
+        # make score and link map
+        score_text = scores[:, :, 0].cpu().data.numpy()
+        score_link = scores[:, :, 1].cpu().data.numpy()
+        # extract box
+        boxes, _ = extract_boxes(
+            score_text, score_link, text_confidence, text_threshold, link_threshold
+        )
+        return boxes
+
+    def postprocess(
+        self,
+        image: np.ndarray,
+        boxes: list[BoxTuple],
+        inverse_ratio: float,
+        slope_ths: float = 0.1,
+        ratio_ths: float = 0.5,
+        center_ths: float = 0.5,
+        dim_ths: float = 0.5,
+        space_ths: float = 1.0,
+        add_margin: float = 0.1,
+        min_size: int = 0,
+    ) -> Tuple[list[RegionTuple], list[BoxTuple]]:
+
+        # coordinate adjustment
+        boxes = adjust_result_coordinates(boxes, inverse_ratio)
+
+        max_y, max_x, _ = image.shape
+
+        # extract region and merge
+        region_list, box_list = extract_regions_from_boxes(boxes, slope_ths)
+
+        region_list = greedy_merge(
+            region_list,
+            ratio_ths=ratio_ths,
+            center_ths=center_ths,
+            dim_ths=dim_ths,
+            space_ths=space_ths,
+            verbose=0
+        )
+
+        # add margin
+        region_list = [
+            region.expand(add_margin, (max_x, max_y)).as_tuple()
+            for region in region_list
+        ]
+
+        box_list = [box_expand(box, add_margin, (max_x, max_y)) for box in box_list]
+
+        # filter by size
+        if min_size:
+            if min_size < 1:
+                min_size = int(min(max_y, max_x) * min_size)
+
+            region_list = [
+                i for i in region_list if max(i[1] - i[0], i[3] - i[2]) > min_size
+            ]
+            box_list = [
+                i
+                for i in box_list
+                if max(utils.diff([c[0] for c in i]), utils.diff([c[1] for c in i]))
+                > min_size
+            ]
+
+        return region_list, box_list
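`postprocess` in `reader.py` reads `min_size` in two ways: a value below 1 is taken as a fraction of the shorter image side, while a value of 1 or more is used directly as a pixel count. A region survives when its longer side exceeds the resolved threshold. The sketch below isolates that rule for axis-aligned regions only. `resolve_min_size` and `filter_regions` are hypothetical helper names introduced for illustration, and the rotated-box branch that relies on `utils.diff` is left out.

```python
from typing import List, Tuple

RegionTuple = Tuple[int, int, int, int]  # (x_min, x_max, y_min, y_max)


def resolve_min_size(min_size: float, height: int, width: int) -> float:
    """Turn a fractional or absolute min_size into a pixel threshold."""
    if min_size < 1:
        # Fraction of the shorter image side, truncated to whole pixels.
        return int(min(height, width) * min_size)
    return min_size  # already expressed in pixels


def filter_regions(
    regions: List[RegionTuple], height: int, width: int, min_size: float
) -> List[RegionTuple]:
    """Keep regions whose longer side is strictly greater than the threshold."""
    if not min_size:  # 0 disables filtering, as in postprocess
        return list(regions)
    threshold = resolve_min_size(min_size, height, width)
    return [r for r in regions if max(r[1] - r[0], r[3] - r[2]) > threshold]


# Illustrative values: a 640x480 image with one tiny and one wide region.
regions = [(0, 5, 0, 5), (10, 40, 10, 15)]
print(filter_regions(regions, height=480, width=640, min_size=0.02))
```

Note that `process` defaults to `min_size=0.01` while `postprocess` defaults to `min_size=0`, so calling `postprocess` directly skips the filter unless a value is passed.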

ezocr/easyocrlite/types.py

ADDED


@@ -0,0 +1,5 @@


+from typing import Tuple
+
+Point = Tuple[int, int]
+BoxTuple = Tuple[Point, Point, Point, Point]
+RegionTuple = Tuple[int, int, int, int]
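The aliases in `types.py` are used together in `ReaderLite.process`: an axis-aligned `RegionTuple` is unpacked as `(x_min, x_max, y_min, y_max)` and expanded into a four-corner `BoxTuple` running clockwise from the top-left, and results are then sorted by the first corner (y first, then x). A small sketch of that conversion follows, with `region_to_box` and `sort_boxes` as hypothetical helper names that do not appear in the commit.

```python
from typing import List, Tuple

Point = Tuple[int, int]
BoxTuple = Tuple[Point, Point, Point, Point]
RegionTuple = Tuple[int, int, int, int]


def region_to_box(region: RegionTuple) -> BoxTuple:
    """Expand (x_min, x_max, y_min, y_max) into corners, clockwise from top-left."""
    x_min, x_max, y_min, y_max = region
    return ((x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max))


def sort_boxes(boxes: List[BoxTuple]) -> List[BoxTuple]:
    """Order boxes top-to-bottom, then left-to-right, by their first corner.

    This assumes the first point of each box is its top-left corner.
    """
    return sorted(boxes, key=lambda box: (box[0][1], box[0][0]))


boxes = [region_to_box(r) for r in [(5, 9, 3, 4), (0, 2, 3, 4), (7, 8, 0, 1)]]
for box in sort_boxes(boxes):
    print(box)
```

Because the x range comes before the y range in `RegionTuple`, cropping a NumPy image needs the indices swapped, as in `image[y_min:y_max, x_min:x_max, :]`.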

ezocr/easyocrlite/utils/__init__.py

ADDED


File without changes


ezocr/easyocrlite/utils/detect_utils.py

ADDED


@@ -0,0 +1,327 @@
