Spaces:

Running

Running

torchmoji code

Browse files- .travis.yml +1 -1

- LICENSE +21 -0

- data/.gitkeep +1 -0

- data/Olympic/raw.pickle +3 -0

- data/PsychExp/raw.pickle +3 -0

- data/SCv1/raw.pickle +3 -0

- data/SCv2-GEN/raw.pickle +3 -0

- data/SE0714/raw.pickle +3 -0

- data/SS-Twitter/raw.pickle +3 -0

- data/SS-Youtube/raw.pickle +3 -0

- data/emoji_codes.json +67 -0

- data/kaggle-insults/raw.pickle +3 -0

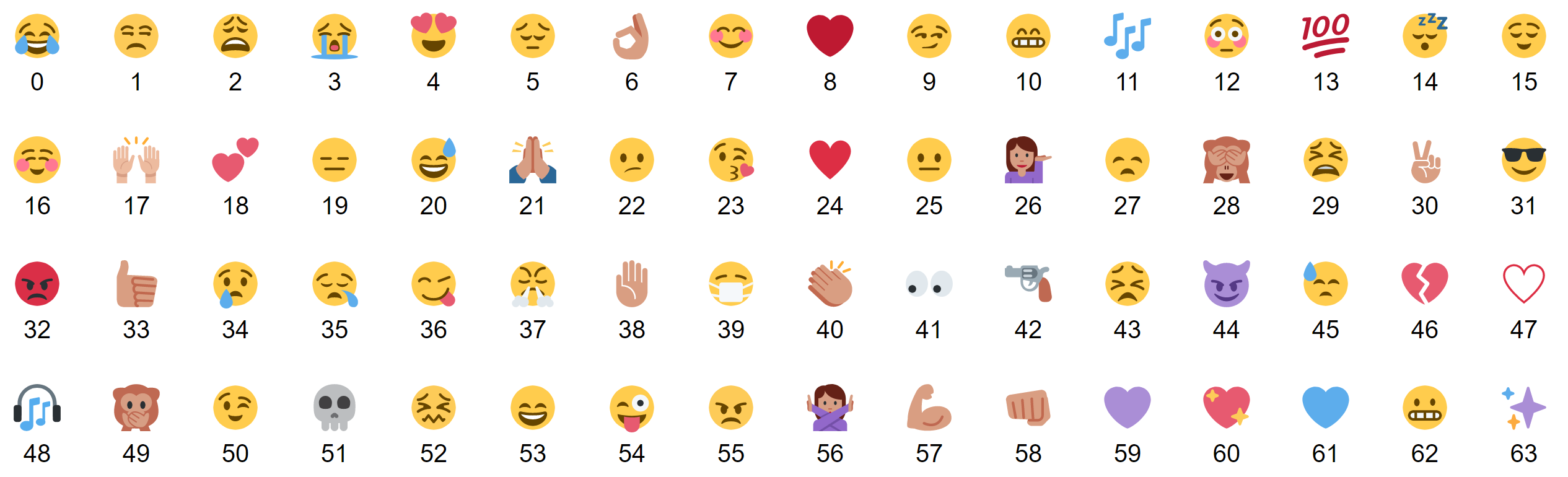

- emoji_overview.png +0 -0

- environment.yml +41 -0

- examples/.gitkeep +1 -0

- examples/README.md +39 -0

- examples/__init__.py +0 -0

- examples/create_twitter_vocab.py +13 -0

- examples/dataset_split.py +59 -0

- examples/encode_texts.py +41 -0

- examples/example_helper.py +6 -0

- examples/finetune_insults_chain-thaw.py +44 -0

- examples/finetune_semeval_class-avg_f1.py +50 -0

- examples/finetune_youtube_last.py +35 -0

- examples/score_texts_emojis.py +85 -0

- examples/text_emojize.py +63 -0

- examples/tokenize_dataset.py +26 -0

- examples/vocab_extension.py +30 -0

- scripts/analyze_all_results.py +40 -0

- scripts/analyze_results.py +39 -0

- scripts/calculate_coverages.py +90 -0

- scripts/convert_all_datasets.py +110 -0

- scripts/download_weights.py +65 -0

- scripts/finetune_dataset.py +109 -0

- scripts/results/.gitkeep +1 -0

- setup.py +16 -0

- tests/test_finetuning.py +235 -0

- tests/test_helper.py +6 -0

- tests/test_sentence_tokenizer.py +113 -0

- tests/test_tokenizer.py +167 -0

- tests/test_word_generator.py +73 -0

.travis.yml

CHANGED

|

@@ -24,4 +24,4 @@ script:

|

|

| 24 |

- true # pytest --capture=sys # add other tests here

|

| 25 |

notifications:

|

| 26 |

on_success: change

|

| 27 |

-

on_failure: change # `always` will be the setting once code changes slow down

|

|

|

|

| 24 |

- true # pytest --capture=sys # add other tests here

|

| 25 |

notifications:

|

| 26 |

on_success: change

|

| 27 |

+

on_failure: change # `always` will be the setting once code changes slow down

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2017 Bjarke Felbo, Han Thi Nguyen, Thomas Wolf

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

data/.gitkeep

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

|

data/Olympic/raw.pickle

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:398d394ac1d7c2116166ca968bae9b1f9fd049f9e9281f05c94ae7b2ea97d427

|

| 3 |

+

size 227301

|

data/PsychExp/raw.pickle

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:dc7d710f2ccd7e9d8e620be703a446ce7ec05818d5ce6afe43d1e6aa9ff4a8aa

|

| 3 |

+

size 3492229

|

data/SCv1/raw.pickle

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:a65db490451dada57b88918a951d04082a51599d2cde24914f8c713312de89f5

|

| 3 |

+

size 868931

|

data/SCv2-GEN/raw.pickle

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:43ae3ea310130c2ca2089d60876ba6b08006d7f2e018a0519c4fdb7b166f992f

|

| 3 |

+

size 883467

|

data/SE0714/raw.pickle

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:66f0ecf48affe92bdacdeb64ab20c1c84b9990a3ac7b659a1a98aa29c9c4a064

|

| 3 |

+

size 126311

|

data/SS-Twitter/raw.pickle

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:0ef34a4f0fe39b1bb45fcb72026bbf3b82ce2e2a14c13d39610b3b41f18fc98e

|

| 3 |

+

size 413660

|

data/SS-Youtube/raw.pickle

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:83ec15e393fb4f0dbb524946480de50e9baf9fef83a3e9eaf95caa3c425b87aa

|

| 3 |

+

size 396130

|

data/emoji_codes.json

ADDED

|

@@ -0,0 +1,67 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"0": ":joy:",

|

| 3 |

+

"1": ":unamused:",

|

| 4 |

+

"2": ":weary:",

|

| 5 |

+

"3": ":sob:",

|

| 6 |

+

"4": ":heart_eyes:",

|

| 7 |

+

"5": ":pensive:",

|

| 8 |

+

"6": ":ok_hand:",

|

| 9 |

+

"7": ":blush:",

|

| 10 |

+

"8": ":heart:",

|

| 11 |

+

"9": ":smirk:",

|

| 12 |

+

"10":":grin:",

|

| 13 |

+

"11":":notes:",

|

| 14 |

+

"12":":flushed:",

|

| 15 |

+

"13":":100:",

|

| 16 |

+

"14":":sleeping:",

|

| 17 |

+

"15":":relieved:",

|

| 18 |

+

"16":":relaxed:",

|

| 19 |

+

"17":":raised_hands:",

|

| 20 |

+

"18":":two_hearts:",

|

| 21 |

+

"19":":expressionless:",

|

| 22 |

+

"20":":sweat_smile:",

|

| 23 |

+

"21":":pray:",

|

| 24 |

+

"22":":confused:",

|

| 25 |

+

"23":":kissing_heart:",

|

| 26 |

+

"24":":hearts:",

|

| 27 |

+

"25":":neutral_face:",

|

| 28 |

+

"26":":information_desk_person:",

|

| 29 |

+

"27":":disappointed:",

|

| 30 |

+

"28":":see_no_evil:",

|

| 31 |

+

"29":":tired_face:",

|

| 32 |

+

"30":":v:",

|

| 33 |

+

"31":":sunglasses:",

|

| 34 |

+

"32":":rage:",

|

| 35 |

+

"33":":thumbsup:",

|

| 36 |

+

"34":":cry:",

|

| 37 |

+

"35":":sleepy:",

|

| 38 |

+

"36":":stuck_out_tongue_winking_eye:",

|

| 39 |

+

"37":":triumph:",

|

| 40 |

+

"38":":raised_hand:",

|

| 41 |

+

"39":":mask:",

|

| 42 |

+

"40":":clap:",

|

| 43 |

+

"41":":eyes:",

|

| 44 |

+

"42":":gun:",

|

| 45 |

+

"43":":persevere:",

|

| 46 |

+

"44":":imp:",

|

| 47 |

+

"45":":sweat:",

|

| 48 |

+

"46":":broken_heart:",

|

| 49 |

+

"47":":blue_heart:",

|

| 50 |

+

"48":":headphones:",

|

| 51 |

+

"49":":speak_no_evil:",

|

| 52 |

+

"50":":wink:",

|

| 53 |

+

"51":":skull:",

|

| 54 |

+

"52":":confounded:",

|

| 55 |

+

"53":":smile:",

|

| 56 |

+

"54":":stuck_out_tongue_winking_eye:",

|

| 57 |

+

"55":":angry:",

|

| 58 |

+

"56":":no_good:",

|

| 59 |

+

"57":":muscle:",

|

| 60 |

+

"58":":punch:",

|

| 61 |

+

"59":":purple_heart:",

|

| 62 |

+

"60":":sparkling_heart:",

|

| 63 |

+

"61":":blue_heart:",

|

| 64 |

+

"62":":grimacing:",

|

| 65 |

+

"63":":sparkles:"

|

| 66 |

+

}

|

| 67 |

+

|

data/kaggle-insults/raw.pickle

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

version https://git-lfs.github.com/spec/v1

|

| 2 |

+

oid sha256:2fbeca5470209163e04b6975fc5fb91889e79583fe6ff499f83966e36392fcda

|

| 3 |

+

size 1338159

|

emoji_overview.png

ADDED

|

environment.yml

ADDED

|

@@ -0,0 +1,41 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

name: torchMoji

|

| 2 |

+

channels:

|

| 3 |

+

- pytorch

|

| 4 |

+

- defaults

|

| 5 |

+

dependencies:

|

| 6 |

+

- _libgcc_mutex=0.1

|

| 7 |

+

- blas=1.0

|

| 8 |

+

- ca-certificates=2019.11.27

|

| 9 |

+

- certifi=2019.11.28

|

| 10 |

+

- cffi=1.13.2

|

| 11 |

+

- cudatoolkit=10.1.243

|

| 12 |

+

- intel-openmp=2019.4

|

| 13 |

+

- libedit=3.1.20181209

|

| 14 |

+

- libffi=3.2.1

|

| 15 |

+

- libgcc-ng=9.1.0

|

| 16 |

+

- libgfortran-ng=7.3.0

|

| 17 |

+

- libstdcxx-ng=9.1.0

|

| 18 |

+

- mkl=2018.0.3

|

| 19 |

+

- ncurses=6.1

|

| 20 |

+

- ninja=1.9.0

|

| 21 |

+

- nose=1.3.7

|

| 22 |

+

- numpy=1.13.1

|

| 23 |

+

- openssl=1.1.1d

|

| 24 |

+

- pip=19.3.1

|

| 25 |

+

- pycparser=2.19

|

| 26 |

+

- python=3.6.9

|

| 27 |

+

- pytorch=1.3.1

|

| 28 |

+

- readline=7.0

|

| 29 |

+

- scikit-learn=0.19.0

|

| 30 |

+

- scipy=0.19.1

|

| 31 |

+

- setuptools=42.0.2

|

| 32 |

+

- sqlite=3.30.1

|

| 33 |

+

- text-unidecode=1.0

|

| 34 |

+

- tk=8.6.8

|

| 35 |

+

- wheel=0.33.6

|

| 36 |

+

- xz=5.2.4

|

| 37 |

+

- zlib=1.2.11

|

| 38 |

+

- pip:

|

| 39 |

+

- emoji==0.4.5

|

| 40 |

+

prefix: /home/cbowdon/miniconda3/envs/torchMoji

|

| 41 |

+

|

examples/.gitkeep

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

|

examples/README.md

ADDED

|

@@ -0,0 +1,39 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# torchMoji examples

|

| 2 |

+

|

| 3 |

+

## Initialization

|

| 4 |

+

[create_twitter_vocab.py](create_twitter_vocab.py)

|

| 5 |

+

Create a new vocabulary from a tsv file.

|

| 6 |

+

|

| 7 |

+

[tokenize_dataset.py](tokenize_dataset.py)

|

| 8 |

+

Tokenize a given dataset using the prebuilt vocabulary.

|

| 9 |

+

|

| 10 |

+

[vocab_extension.py](vocab_extension.py)

|

| 11 |

+

Extend the given vocabulary using dataset-specific words.

|

| 12 |

+

|

| 13 |

+

[dataset_split.py](dataset_split.py)

|

| 14 |

+

Split a given dataset into training, validation and testing.

|

| 15 |

+

|

| 16 |

+

## Use pretrained model/architecture

|

| 17 |

+

[score_texts_emojis.py](score_texts_emojis.py)

|

| 18 |

+

Use torchMoji to score texts for emoji distribution.

|

| 19 |

+

|

| 20 |

+

[text_emojize.py](text_emojize.py)

|

| 21 |

+

Use torchMoji to output emoji visualization from a single text input (mapped from `emoji_overview.png`)

|

| 22 |

+

|

| 23 |

+

```sh

|

| 24 |

+

python examples/text_emojize.py --text "I love mom's cooking\!"

|

| 25 |

+

# => I love mom's cooking! 😋 😍 💓 💛 ❤

|

| 26 |

+

```

|

| 27 |

+

|

| 28 |

+

[encode_texts.py](encode_texts.py)

|

| 29 |

+

Use torchMoji to encode the text into 2304-dimensional feature vectors for further modeling/analysis.

|

| 30 |

+

|

| 31 |

+

## Transfer learning

|

| 32 |

+

[finetune_youtube_last.py](finetune_youtube_last.py)

|

| 33 |

+

Finetune the model on the SS-Youtube dataset using the 'last' method.

|

| 34 |

+

|

| 35 |

+

[finetune_insults_chain-thaw.py](finetune_insults_chain-thaw.py)

|

| 36 |

+

Finetune the model on the Kaggle insults dataset (from blog post) using the 'chain-thaw' method.

|

| 37 |

+

|

| 38 |

+

[finetune_semeval_class-avg_f1.py](finetune_semeval_class-avg_f1.py)

|

| 39 |

+

Finetune the model on the SemeEval emotion dataset using the 'full' method and evaluate using the class average F1 metric.

|

examples/__init__.py

ADDED

|

File without changes

|

examples/create_twitter_vocab.py

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

""" Creates a vocabulary from a tsv file.

|

| 2 |

+

"""

|

| 3 |

+

|

| 4 |

+

import codecs

|

| 5 |

+

import example_helper

|

| 6 |

+

from torchmoji.create_vocab import VocabBuilder

|

| 7 |

+

from torchmoji.word_generator import TweetWordGenerator

|

| 8 |

+

|

| 9 |

+

with codecs.open('../../twitterdata/tweets.2016-09-01', 'rU', 'utf-8') as stream:

|

| 10 |

+

wg = TweetWordGenerator(stream)

|

| 11 |

+

vb = VocabBuilder(wg)

|

| 12 |

+

vb.count_all_words()

|

| 13 |

+

vb.save_vocab()

|

examples/dataset_split.py

ADDED

|

@@ -0,0 +1,59 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

'''

|

| 2 |

+

Split a given dataset into three different datasets: training, validation and

|

| 3 |

+

testing.

|

| 4 |

+

|

| 5 |

+

This is achieved by splitting the given list of sentences into three separate

|

| 6 |

+

lists according to either a given ratio (e.g. [0.7, 0.1, 0.2]) or by an

|

| 7 |

+

explicit enumeration. The sentences are also tokenised using the given

|

| 8 |

+

vocabulary.

|

| 9 |

+

|

| 10 |

+

Also splits a given list of dictionaries containing information about

|

| 11 |

+

each sentence.

|

| 12 |

+

|

| 13 |

+

An additional parameter can be set 'extend_with', which will extend the given

|

| 14 |

+

vocabulary with up to 'extend_with' tokens, taken from the training dataset.

|

| 15 |

+

'''

|

| 16 |

+

from __future__ import print_function, unicode_literals

|

| 17 |

+

import example_helper

|

| 18 |

+

import json

|

| 19 |

+

|

| 20 |

+

from torchmoji.sentence_tokenizer import SentenceTokenizer

|

| 21 |

+

|

| 22 |

+

DATASET = [

|

| 23 |

+

'I am sentence 0',

|

| 24 |

+

'I am sentence 1',

|

| 25 |

+

'I am sentence 2',

|

| 26 |

+

'I am sentence 3',

|

| 27 |

+

'I am sentence 4',

|

| 28 |

+

'I am sentence 5',

|

| 29 |

+

'I am sentence 6',

|

| 30 |

+

'I am sentence 7',

|

| 31 |

+

'I am sentence 8',

|

| 32 |

+

'I am sentence 9 newword',

|

| 33 |

+

]

|

| 34 |

+

|

| 35 |

+

INFO_DICTS = [

|

| 36 |

+

{'label': 'sentence 0'},

|

| 37 |

+

{'label': 'sentence 1'},

|

| 38 |

+

{'label': 'sentence 2'},

|

| 39 |

+

{'label': 'sentence 3'},

|

| 40 |

+

{'label': 'sentence 4'},

|

| 41 |

+

{'label': 'sentence 5'},

|

| 42 |

+

{'label': 'sentence 6'},

|

| 43 |

+

{'label': 'sentence 7'},

|

| 44 |

+

{'label': 'sentence 8'},

|

| 45 |

+

{'label': 'sentence 9'},

|

| 46 |

+

]

|

| 47 |

+

|

| 48 |

+

with open('../model/vocabulary.json', 'r') as f:

|

| 49 |

+

vocab = json.load(f)

|

| 50 |

+

st = SentenceTokenizer(vocab, 30)

|

| 51 |

+

|

| 52 |

+

# Split using the default split ratio

|

| 53 |

+

print(st.split_train_val_test(DATASET, INFO_DICTS))

|

| 54 |

+

|

| 55 |

+

# Split explicitly

|

| 56 |

+

print(st.split_train_val_test(DATASET,

|

| 57 |

+

INFO_DICTS,

|

| 58 |

+

[[0, 1, 2, 4, 9], [5, 6], [7, 8, 3]],

|

| 59 |

+

extend_with=1))

|

examples/encode_texts.py

ADDED

|

@@ -0,0 +1,41 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

|

| 3 |

+

""" Use torchMoji to encode texts into emotional feature vectors.

|

| 4 |

+

"""

|

| 5 |

+

from __future__ import print_function, division, unicode_literals

|

| 6 |

+

import json

|

| 7 |

+

|

| 8 |

+

from torchmoji.sentence_tokenizer import SentenceTokenizer

|

| 9 |

+

from torchmoji.model_def import torchmoji_feature_encoding

|

| 10 |

+

from torchmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

|

| 11 |

+

|

| 12 |

+

TEST_SENTENCES = ['I love mom\'s cooking',

|

| 13 |

+

'I love how you never reply back..',

|

| 14 |

+

'I love cruising with my homies',

|

| 15 |

+

'I love messing with yo mind!!',

|

| 16 |

+

'I love you and now you\'re just gone..',

|

| 17 |

+

'This is shit',

|

| 18 |

+

'This is the shit']

|

| 19 |

+

|

| 20 |

+

maxlen = 30

|

| 21 |

+

batch_size = 32

|

| 22 |

+

|

| 23 |

+

print('Tokenizing using dictionary from {}'.format(VOCAB_PATH))

|

| 24 |

+

with open(VOCAB_PATH, 'r') as f:

|

| 25 |

+

vocabulary = json.load(f)

|

| 26 |

+

st = SentenceTokenizer(vocabulary, maxlen)

|

| 27 |

+

tokenized, _, _ = st.tokenize_sentences(TEST_SENTENCES)

|

| 28 |

+

|

| 29 |

+

print('Loading model from {}.'.format(PRETRAINED_PATH))

|

| 30 |

+

model = torchmoji_feature_encoding(PRETRAINED_PATH)

|

| 31 |

+

print(model)

|

| 32 |

+

|

| 33 |

+

print('Encoding texts..')

|

| 34 |

+

encoding = model(tokenized)

|

| 35 |

+

|

| 36 |

+

print('First 5 dimensions for sentence: {}'.format(TEST_SENTENCES[0]))

|

| 37 |

+

print(encoding[0,:5])

|

| 38 |

+

|

| 39 |

+

# Now you could visualize the encodings to see differences,

|

| 40 |

+

# run a logistic regression classifier on top,

|

| 41 |

+

# or basically anything you'd like to do.

|

examples/example_helper.py

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

""" Module import helper.

|

| 2 |

+

Modifies PATH in order to allow us to import the torchmoji directory.

|

| 3 |

+

"""

|

| 4 |

+

import sys

|

| 5 |

+

from os.path import abspath, dirname

|

| 6 |

+

sys.path.insert(0, dirname(dirname(abspath(__file__))))

|

examples/finetune_insults_chain-thaw.py

ADDED

|

@@ -0,0 +1,44 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""Finetuning example.

|

| 2 |

+

|

| 3 |

+

Trains the torchMoji model on the kaggle insults dataset, using the 'chain-thaw'

|

| 4 |

+

finetuning method and the accuracy metric. See the blog post at

|

| 5 |

+

https://medium.com/@bjarkefelbo/what-can-we-learn-from-emojis-6beb165a5ea0

|

| 6 |

+

for more information. Note that results may differ a bit due to slight

|

| 7 |

+

changes in preprocessing and train/val/test split.

|

| 8 |

+

|

| 9 |

+

The 'chain-thaw' method does the following:

|

| 10 |

+

0) Load all weights except for the softmax layer. Extend the embedding layer if

|

| 11 |

+

necessary, initialising the new weights with random values.

|

| 12 |

+

1) Freeze every layer except the last (softmax) layer and train it.

|

| 13 |

+

2) Freeze every layer except the first layer and train it.

|

| 14 |

+

3) Freeze every layer except the second etc., until the second last layer.

|

| 15 |

+

4) Unfreeze all layers and train entire model.

|

| 16 |

+

"""

|

| 17 |

+

|

| 18 |

+

from __future__ import print_function

|

| 19 |

+

import example_helper

|

| 20 |

+

import json

|

| 21 |

+

from torchmoji.model_def import torchmoji_transfer

|

| 22 |

+

from torchmoji.global_variables import PRETRAINED_PATH

|

| 23 |

+

from torchmoji.finetuning import (

|

| 24 |

+

load_benchmark,

|

| 25 |

+

finetune)

|

| 26 |

+

|

| 27 |

+

|

| 28 |

+

DATASET_PATH = '../data/kaggle-insults/raw.pickle'

|

| 29 |

+

nb_classes = 2

|

| 30 |

+

|

| 31 |

+

with open('../model/vocabulary.json', 'r') as f:

|

| 32 |

+

vocab = json.load(f)

|

| 33 |

+

|

| 34 |

+

# Load dataset. Extend the existing vocabulary with up to 10000 tokens from

|

| 35 |

+

# the training dataset.

|

| 36 |

+

data = load_benchmark(DATASET_PATH, vocab, extend_with=10000)

|

| 37 |

+

|

| 38 |

+

# Set up model and finetune. Note that we have to extend the embedding layer

|

| 39 |

+

# with the number of tokens added to the vocabulary.

|

| 40 |

+

model = torchmoji_transfer(nb_classes, PRETRAINED_PATH, extend_embedding=data['added'])

|

| 41 |

+

print(model)

|

| 42 |

+

model, acc = finetune(model, data['texts'], data['labels'], nb_classes,

|

| 43 |

+

data['batch_size'], method='chain-thaw')

|

| 44 |

+

print('Acc: {}'.format(acc))

|

examples/finetune_semeval_class-avg_f1.py

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""Finetuning example.

|

| 2 |

+

|

| 3 |

+

Trains the torchMoji model on the SemEval emotion dataset, using the 'last'

|

| 4 |

+

finetuning method and the class average F1 metric.

|

| 5 |

+

|

| 6 |

+

The 'last' method does the following:

|

| 7 |

+

0) Load all weights except for the softmax layer. Do not add tokens to the

|

| 8 |

+

vocabulary and do not extend the embedding layer.

|

| 9 |

+

1) Freeze all layers except for the softmax layer.

|

| 10 |

+

2) Train.

|

| 11 |

+

|

| 12 |

+

The class average F1 metric does the following:

|

| 13 |

+

1) For each class, relabel the dataset into binary classification

|

| 14 |

+

(belongs to/does not belong to this class).

|

| 15 |

+

2) Calculate F1 score for each class.

|

| 16 |

+

3) Compute the average of all F1 scores.

|

| 17 |

+

"""

|

| 18 |

+

|

| 19 |

+

from __future__ import print_function

|

| 20 |

+

import example_helper

|

| 21 |

+

import json

|

| 22 |

+

from torchmoji.finetuning import load_benchmark

|

| 23 |

+

from torchmoji.class_avg_finetuning import class_avg_finetune

|

| 24 |

+

from torchmoji.model_def import torchmoji_transfer

|

| 25 |

+

from torchmoji.global_variables import PRETRAINED_PATH

|

| 26 |

+

|

| 27 |

+

DATASET_PATH = '../data/SE0714/raw.pickle'

|

| 28 |

+

nb_classes = 3

|

| 29 |

+

|

| 30 |

+

with open('../model/vocabulary.json', 'r') as f:

|

| 31 |

+

vocab = json.load(f)

|

| 32 |

+

|

| 33 |

+

|

| 34 |

+

# Load dataset. Extend the existing vocabulary with up to 10000 tokens from

|

| 35 |

+

# the training dataset.

|

| 36 |

+

data = load_benchmark(DATASET_PATH, vocab, extend_with=10000)

|

| 37 |

+

|

| 38 |

+

# Set up model and finetune. Note that we have to extend the embedding layer

|

| 39 |

+

# with the number of tokens added to the vocabulary.

|

| 40 |

+

#

|

| 41 |

+

# Also note that when using class average F1 to evaluate, the model has to be

|

| 42 |

+

# defined with two classes, since the model will be trained for each class

|

| 43 |

+

# separately.

|

| 44 |

+

model = torchmoji_transfer(2, PRETRAINED_PATH, extend_embedding=data['added'])

|

| 45 |

+

print(model)

|

| 46 |

+

|

| 47 |

+

# For finetuning however, pass in the actual number of classes.

|

| 48 |

+

model, f1 = class_avg_finetune(model, data['texts'], data['labels'],

|

| 49 |

+

nb_classes, data['batch_size'], method='last')

|

| 50 |

+

print('F1: {}'.format(f1))

|

examples/finetune_youtube_last.py

ADDED

|

@@ -0,0 +1,35 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""Finetuning example.

|

| 2 |

+

|

| 3 |

+

Trains the torchMoji model on the SS-Youtube dataset, using the 'last'

|

| 4 |

+

finetuning method and the accuracy metric.

|

| 5 |

+

|

| 6 |

+

The 'last' method does the following:

|

| 7 |

+

0) Load all weights except for the softmax layer. Do not add tokens to the

|

| 8 |

+

vocabulary and do not extend the embedding layer.

|

| 9 |

+

1) Freeze all layers except for the softmax layer.

|

| 10 |

+

2) Train.

|

| 11 |

+

"""

|

| 12 |

+

|

| 13 |

+

from __future__ import print_function

|

| 14 |

+

import example_helper

|

| 15 |

+

import json

|

| 16 |

+

from torchmoji.model_def import torchmoji_transfer

|

| 17 |

+

from torchmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH, ROOT_PATH

|

| 18 |

+

from torchmoji.finetuning import (

|

| 19 |

+

load_benchmark,

|

| 20 |

+

finetune)

|

| 21 |

+

|

| 22 |

+

DATASET_PATH = '{}/data/SS-Youtube/raw.pickle'.format(ROOT_PATH)

|

| 23 |

+

nb_classes = 2

|

| 24 |

+

|

| 25 |

+

with open(VOCAB_PATH, 'r') as f:

|

| 26 |

+

vocab = json.load(f)

|

| 27 |

+

|

| 28 |

+

# Load dataset.

|

| 29 |

+

data = load_benchmark(DATASET_PATH, vocab)

|

| 30 |

+

|

| 31 |

+

# Set up model and finetune

|

| 32 |

+

model = torchmoji_transfer(nb_classes, PRETRAINED_PATH)

|

| 33 |

+

print(model)

|

| 34 |

+

model, acc = finetune(model, data['texts'], data['labels'], nb_classes, data['batch_size'], method='last')

|

| 35 |

+

print('Acc: {}'.format(acc))

|

examples/score_texts_emojis.py

ADDED

|

@@ -0,0 +1,85 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

|

| 3 |

+

""" Use torchMoji to score texts for emoji distribution.

|

| 4 |

+

|

| 5 |

+

The resulting emoji ids (0-63) correspond to the mapping

|

| 6 |

+

in emoji_overview.png file at the root of the torchMoji repo.

|

| 7 |

+

|

| 8 |

+

Writes the result to a csv file.

|

| 9 |

+

"""

|

| 10 |

+

|

| 11 |

+

from __future__ import print_function, division, unicode_literals

|

| 12 |

+

|

| 13 |

+

import sys

|

| 14 |

+

from os.path import abspath, dirname

|

| 15 |

+

|

| 16 |

+

import json

|

| 17 |

+

import csv

|

| 18 |

+

import numpy as np

|

| 19 |

+

|

| 20 |

+

from torchmoji.sentence_tokenizer import SentenceTokenizer

|

| 21 |

+

from torchmoji.model_def import torchmoji_emojis

|

| 22 |

+

from torchmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

|

| 23 |

+

|

| 24 |

+

sys.path.insert(0, dirname(dirname(abspath(__file__))))

|

| 25 |

+

|

| 26 |

+

OUTPUT_PATH = 'test_sentences.csv'

|

| 27 |

+

|

| 28 |

+

TEST_SENTENCES = ['I love mom\'s cooking',

|

| 29 |

+

'I love how you never reply back..',

|

| 30 |

+

'I love cruising with my homies',

|

| 31 |

+

'I love messing with yo mind!!',

|

| 32 |

+

'I love you and now you\'re just gone..',

|

| 33 |

+

'This is shit',

|

| 34 |

+

'This is the shit']

|

| 35 |

+

|

| 36 |

+

|

| 37 |

+

def top_elements(array, k):

|

| 38 |

+

ind = np.argpartition(array, -k)[-k:]

|

| 39 |

+

return ind[np.argsort(array[ind])][::-1]

|

| 40 |

+

|

| 41 |

+

maxlen = 30

|

| 42 |

+

|

| 43 |

+

print('Tokenizing using dictionary from {}'.format(VOCAB_PATH))

|

| 44 |

+

with open(VOCAB_PATH, 'r') as f:

|

| 45 |

+

vocabulary = json.load(f)

|

| 46 |

+

|

| 47 |

+

st = SentenceTokenizer(vocabulary, maxlen)

|

| 48 |

+

|

| 49 |

+

print('Loading model from {}.'.format(PRETRAINED_PATH))

|

| 50 |

+

model = torchmoji_emojis(PRETRAINED_PATH)

|

| 51 |

+

print(model)

|

| 52 |

+

|

| 53 |

+

def doImportableFunction():

|

| 54 |

+

print('Running predictions.')

|

| 55 |

+

tokenized, _, _ = st.tokenize_sentences(TEST_SENTENCES)

|

| 56 |

+

prob = model(tokenized)

|

| 57 |

+

|

| 58 |

+

for prob in [prob]:

|

| 59 |

+

# Find top emojis for each sentence. Emoji ids (0-63)

|

| 60 |

+

# correspond to the mapping in emoji_overview.png

|

| 61 |

+

# at the root of the torchMoji repo.

|

| 62 |

+

print('Writing results to {}'.format(OUTPUT_PATH))

|

| 63 |

+

scores = []

|

| 64 |

+

for i, t in enumerate(TEST_SENTENCES):

|

| 65 |

+

t_tokens = tokenized[i]

|

| 66 |

+

t_score = [t]

|

| 67 |

+

t_prob = prob[i]

|

| 68 |

+

ind_top = top_elements(t_prob, 5)

|

| 69 |

+

t_score.append(sum(t_prob[ind_top]))

|

| 70 |

+

t_score.extend(ind_top)

|

| 71 |

+

t_score.extend([t_prob[ind] for ind in ind_top])

|

| 72 |

+

scores.append(t_score)

|

| 73 |

+

print(t_score)

|

| 74 |

+

|

| 75 |

+

with open(OUTPUT_PATH, 'w') as csvfile:

|

| 76 |

+

writer = csv.writer(csvfile, delimiter=str(','), lineterminator='\n')

|

| 77 |

+

writer.writerow(['Text', 'Top5%',

|

| 78 |

+

'Emoji_1', 'Emoji_2', 'Emoji_3', 'Emoji_4', 'Emoji_5',

|

| 79 |

+

'Pct_1', 'Pct_2', 'Pct_3', 'Pct_4', 'Pct_5'])

|

| 80 |

+

for i, row in enumerate(scores):

|

| 81 |

+

try:

|

| 82 |

+

writer.writerow(row)

|

| 83 |

+

except:

|

| 84 |

+

print("Exception at row {}!".format(i))

|

| 85 |

+

return

|

examples/text_emojize.py

ADDED

|

@@ -0,0 +1,63 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

|

| 3 |

+

""" Use torchMoji to predict emojis from a single text input

|

| 4 |

+

"""

|

| 5 |

+

|

| 6 |

+

from __future__ import print_function, division, unicode_literals

|

| 7 |

+

import example_helper

|

| 8 |

+

import json

|

| 9 |

+

import csv

|

| 10 |

+

import argparse

|

| 11 |

+

|

| 12 |

+

import numpy as np

|

| 13 |

+

import emoji

|

| 14 |

+

|

| 15 |

+

from torchmoji.sentence_tokenizer import SentenceTokenizer

|

| 16 |

+

from torchmoji.model_def import torchmoji_emojis

|

| 17 |

+

from torchmoji.global_variables import PRETRAINED_PATH, VOCAB_PATH

|

| 18 |

+

|

| 19 |

+

# Emoji map in emoji_overview.png

|

| 20 |

+

EMOJIS = ":joy: :unamused: :weary: :sob: :heart_eyes: \

|

| 21 |

+

:pensive: :ok_hand: :blush: :heart: :smirk: \

|

| 22 |

+

:grin: :notes: :flushed: :100: :sleeping: \

|

| 23 |

+

:relieved: :relaxed: :raised_hands: :two_hearts: :expressionless: \

|

| 24 |

+

:sweat_smile: :pray: :confused: :kissing_heart: :heartbeat: \

|

| 25 |

+

:neutral_face: :information_desk_person: :disappointed: :see_no_evil: :tired_face: \

|

| 26 |

+

:v: :sunglasses: :rage: :thumbsup: :cry: \

|

| 27 |

+

:sleepy: :yum: :triumph: :hand: :mask: \

|

| 28 |

+

:clap: :eyes: :gun: :persevere: :smiling_imp: \

|

| 29 |

+

:sweat: :broken_heart: :yellow_heart: :musical_note: :speak_no_evil: \

|

| 30 |

+

:wink: :skull: :confounded: :smile: :stuck_out_tongue_winking_eye: \

|

| 31 |

+

:angry: :no_good: :muscle: :facepunch: :purple_heart: \

|

| 32 |

+

:sparkling_heart: :blue_heart: :grimacing: :sparkles:".split(' ')

|

| 33 |

+

|

| 34 |

+

def top_elements(array, k):

|

| 35 |

+

ind = np.argpartition(array, -k)[-k:]

|

| 36 |

+

return ind[np.argsort(array[ind])][::-1]

|

| 37 |

+

|

| 38 |

+

if __name__ == "__main__":

|

| 39 |

+

argparser = argparse.ArgumentParser()

|

| 40 |

+

argparser.add_argument('--text', type=str, required=True, help="Input text to emojize")

|

| 41 |

+

argparser.add_argument('--maxlen', type=int, default=30, help="Max length of input text")

|

| 42 |

+

args = argparser.parse_args()

|

| 43 |

+

|

| 44 |

+

# Tokenizing using dictionary

|

| 45 |

+

with open(VOCAB_PATH, 'r') as f:

|

| 46 |

+

vocabulary = json.load(f)

|

| 47 |

+

|

| 48 |

+

st = SentenceTokenizer(vocabulary, args.maxlen)

|

| 49 |

+

|

| 50 |

+

# Loading model

|

| 51 |

+

model = torchmoji_emojis(PRETRAINED_PATH)

|

| 52 |

+

# Running predictions

|

| 53 |

+

tokenized, _, _ = st.tokenize_sentences([args.text])

|

| 54 |

+

# Get sentence probability

|

| 55 |

+

prob = model(tokenized)[0]

|

| 56 |

+

|

| 57 |

+

# Top emoji id

|

| 58 |

+

emoji_ids = top_elements(prob, 5)

|

| 59 |

+

|

| 60 |

+

# map to emojis

|

| 61 |

+

emojis = map(lambda x: EMOJIS[x], emoji_ids)

|

| 62 |

+

|

| 63 |

+

print(emoji.emojize("{} {}".format(args.text,' '.join(emojis)), use_aliases=True))

|

examples/tokenize_dataset.py

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

Take a given list of sentences and turn it into a numpy array, where each

|

| 3 |

+

number corresponds to a word. Padding is used (number 0) to ensure fixed length

|

| 4 |

+

of sentences.

|

| 5 |

+

"""

|

| 6 |

+

|

| 7 |

+

from __future__ import print_function, unicode_literals

|

| 8 |

+

import example_helper

|

| 9 |

+

import json

|

| 10 |

+

from torchmoji.sentence_tokenizer import SentenceTokenizer

|

| 11 |

+

|

| 12 |

+

with open('../model/vocabulary.json', 'r') as f:

|

| 13 |

+

vocabulary = json.load(f)

|

| 14 |

+

|

| 15 |

+

st = SentenceTokenizer(vocabulary, 30)

|

| 16 |

+

test_sentences = [

|

| 17 |

+

'\u2014 -- \u203c !!\U0001F602',

|

| 18 |

+

'Hello world!',

|

| 19 |

+

'This is a sample tweet #example',

|

| 20 |

+

]

|

| 21 |

+

|

| 22 |

+

tokens, infos, stats = st.tokenize_sentences(test_sentences)

|

| 23 |

+

|

| 24 |

+

print(tokens)

|

| 25 |

+

print(infos)

|

| 26 |

+

print(stats)

|

examples/vocab_extension.py

ADDED

|

@@ -0,0 +1,30 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

"""

|

| 2 |

+

Extend the given vocabulary using dataset-specific words.

|

| 3 |

+

|

| 4 |

+

1. First create a vocabulary for the specific dataset.

|

| 5 |

+

2. Find all words not in our vocabulary, but in the dataset vocabulary.

|

| 6 |

+

3. Take top X (default=1000) of these words and add them to the vocabulary.

|

| 7 |

+

4. Save this combined vocabulary and embedding matrix, which can now be used.

|

| 8 |

+

"""

|

| 9 |

+

|

| 10 |

+

from __future__ import print_function, unicode_literals

|

| 11 |

+

import example_helper

|

| 12 |

+

import json

|

| 13 |

+

from torchmoji.create_vocab import extend_vocab, VocabBuilder

|

| 14 |

+

from torchmoji.word_generator import WordGenerator

|

| 15 |

+

|

| 16 |

+

new_words = ['#zzzzaaazzz', 'newword', 'newword']

|

| 17 |

+

word_gen = WordGenerator(new_words)

|

| 18 |

+

vb = VocabBuilder(word_gen)

|

| 19 |

+

vb.count_all_words()

|

| 20 |

+

|

| 21 |

+

with open('../model/vocabulary.json') as f:

|

| 22 |

+

vocab = json.load(f)

|

| 23 |

+

|

| 24 |

+

print(len(vocab))

|

| 25 |

+

print(vb.word_counts)

|

| 26 |

+

extend_vocab(vocab, vb, max_tokens=1)

|

| 27 |

+

|

| 28 |

+

# 'newword' should be added because it's more frequent in the given vocab

|

| 29 |

+

print(vocab['newword'])

|

| 30 |

+

print(len(vocab))

|

scripts/analyze_all_results.py

ADDED

|

@@ -0,0 +1,40 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from __future__ import print_function

|

| 2 |

+

|

| 3 |

+

# allow us to import the codebase directory

|

| 4 |

+

import sys

|

| 5 |

+

import glob

|

| 6 |

+

import numpy as np

|

| 7 |

+

from os.path import dirname, abspath

|

| 8 |

+

sys.path.insert(0, dirname(dirname(abspath(__file__))))

|

| 9 |

+

|

| 10 |

+

DATASETS = ['SE0714', 'Olympic', 'PsychExp', 'SS-Twitter', 'SS-Youtube',

|

| 11 |

+

'SCv1', 'SV2-GEN'] # 'SE1604' excluded due to Twitter's ToS

|

| 12 |

+

|

| 13 |

+

def get_results(dset):

|

| 14 |

+

METHOD = 'last'

|

| 15 |

+

RESULTS_DIR = 'results/'

|

| 16 |

+

RESULT_PATHS = glob.glob('{}/{}_{}_*_results.txt'.format(RESULTS_DIR, dset, METHOD))

|

| 17 |

+

assert len(RESULT_PATHS)

|

| 18 |

+

|

| 19 |

+

scores = []

|

| 20 |

+

for path in RESULT_PATHS:

|

| 21 |

+

with open(path) as f:

|

| 22 |

+

score = f.readline().split(':')[1]

|

| 23 |

+

scores.append(float(score))

|

| 24 |

+

|

| 25 |

+

average = np.mean(scores)

|

| 26 |

+

maximum = max(scores)

|

| 27 |

+

minimum = min(scores)

|

| 28 |

+

std = np.std(scores)

|

| 29 |

+

|

| 30 |

+

print('Dataset: {}'.format(dset))

|

| 31 |

+

print('Method: {}'.format(METHOD))

|

| 32 |

+

print('Number of results: {}'.format(len(scores)))

|

| 33 |

+

print('--------------------------')

|

| 34 |

+

print('Average: {}'.format(average))

|

| 35 |

+

print('Maximum: {}'.format(maximum))

|

| 36 |

+

print('Minimum: {}'.format(minimum))

|

| 37 |

+

print('Standard deviaton: {}'.format(std))

|

| 38 |

+

|

| 39 |

+

for dset in DATASETS:

|

| 40 |

+

get_results(dset)

|

scripts/analyze_results.py

ADDED

|

@@ -0,0 +1,39 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from __future__ import print_function

|

| 2 |

+

|

| 3 |

+

import sys

|

| 4 |

+

import glob

|

| 5 |

+

import numpy as np

|

| 6 |

+

|

| 7 |

+

DATASET = 'SS-Twitter' # 'SE1604' excluded due to Twitter's ToS

|

| 8 |

+

METHOD = 'new'

|

| 9 |

+

|

| 10 |

+

# Optional usage: analyze_results.py <dataset> <method>

|

| 11 |

+

if len(sys.argv) == 3:

|

| 12 |

+

DATASET = sys.argv[1]

|

| 13 |

+

METHOD = sys.argv[2]

|

| 14 |

+

|

| 15 |

+

RESULTS_DIR = 'results/'

|

| 16 |

+

RESULT_PATHS = glob.glob('{}/{}_{}_*_results.txt'.format(RESULTS_DIR, DATASET, METHOD))

|

| 17 |

+

|

| 18 |

+

if not RESULT_PATHS:

|

| 19 |

+

print('Could not find results for \'{}\' using \'{}\' in directory \'{}\'.'.format(DATASET, METHOD, RESULTS_DIR))

|

| 20 |

+

else:

|

| 21 |

+

scores = []

|

| 22 |

+

for path in RESULT_PATHS:

|

| 23 |

+

with open(path) as f:

|

| 24 |

+

score = f.readline().split(':')[1]

|

| 25 |

+

scores.append(float(score))

|

| 26 |

+

|

| 27 |

+

average = np.mean(scores)

|

| 28 |

+

maximum = max(scores)

|

| 29 |

+

minimum = min(scores)

|

| 30 |

+

std = np.std(scores)

|

| 31 |

+

|

| 32 |

+

print('Dataset: {}'.format(DATASET))

|

| 33 |

+

print('Method: {}'.format(METHOD))

|

| 34 |

+

print('Number of results: {}'.format(len(scores)))

|

| 35 |

+

print('--------------------------')

|

| 36 |

+

print('Average: {}'.format(average))

|

| 37 |

+

print('Maximum: {}'.format(maximum))

|

| 38 |

+

print('Minimum: {}'.format(minimum))

|

| 39 |

+

print('Standard deviaton: {}'.format(std))

|

scripts/calculate_coverages.py

ADDED

|

@@ -0,0 +1,90 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

from __future__ import print_function

|

| 2 |

+

import pickle

|

| 3 |

+

import json

|

| 4 |

+

import csv

|

| 5 |

+

import sys

|

| 6 |

+

from io import open

|

| 7 |

+

|

| 8 |

+

# Allow us to import the torchmoji directory

|

| 9 |

+

from os.path import dirname, abspath

|

| 10 |

+

sys.path.insert(0, dirname(dirname(abspath(__file__))))

|

| 11 |

+

|

| 12 |

+

from torchmoji.sentence_tokenizer import SentenceTokenizer, coverage

|

| 13 |

+

|

| 14 |

+

try:

|

| 15 |

+

unicode # Python 2

|

| 16 |

+

except NameError:

|

| 17 |

+

unicode = str # Python 3

|

| 18 |

+

|

| 19 |

+

IS_PYTHON2 = int(sys.version[0]) == 2

|

| 20 |

+

|

| 21 |

+

OUTPUT_PATH = 'coverage.csv'

|

| 22 |

+

DATASET_PATHS = [

|

| 23 |

+

'../data/Olympic/raw.pickle',

|

| 24 |

+

'../data/PsychExp/raw.pickle',

|

| 25 |

+

'../data/SCv1/raw.pickle',

|

| 26 |

+

'../data/SCv2-GEN/raw.pickle',

|

| 27 |

+

'../data/SE0714/raw.pickle',

|

| 28 |

+

#'../data/SE1604/raw.pickle', # Excluded due to Twitter's ToS

|

| 29 |

+

'../data/SS-Twitter/raw.pickle',

|

| 30 |

+

'../data/SS-Youtube/raw.pickle',

|

| 31 |

+

]

|

| 32 |

+

|

| 33 |

+

with open('../model/vocabulary.json', 'r') as f:

|

| 34 |

+

vocab = json.load(f)

|

| 35 |

+

|

| 36 |

+

results = []

|

| 37 |

+

for p in DATASET_PATHS:

|

| 38 |

+

coverage_result = [p]

|

| 39 |

+

print('Calculating coverage for {}'.format(p))

|

| 40 |

+

with open(p, 'rb') as f:

|

| 41 |

+

if IS_PYTHON2:

|

| 42 |

+

s = pickle.load(f)

|

| 43 |

+

else:

|

| 44 |

+

s = pickle.load(f, fix_imports=True)

|

| 45 |

+

|

| 46 |

+

# Decode data

|

| 47 |

+

try:

|

| 48 |

+

s['texts'] = [unicode(x) for x in s['texts']]

|

| 49 |

+

except UnicodeDecodeError:

|

| 50 |

+

s['texts'] = [x.decode('utf-8') for x in s['texts']]

|

| 51 |

+

|

| 52 |

+

# Own

|

| 53 |

+

st = SentenceTokenizer({}, 30)

|

| 54 |

+

tests, dicts, _ = st.split_train_val_test(s['texts'], s['info'],

|

| 55 |

+

[s['train_ind'],

|

| 56 |

+

s['val_ind'],

|

| 57 |

+

s['test_ind']],

|

| 58 |

+

extend_with=10000)

|

| 59 |

+

coverage_result.append(coverage(tests[2]))

|

| 60 |

+

|

| 61 |

+

# Last

|

| 62 |

+

st = SentenceTokenizer(vocab, 30)

|

| 63 |

+

tests, dicts, _ = st.split_train_val_test(s['texts'], s['info'],

|

| 64 |

+

[s['train_ind'],

|

| 65 |

+

s['val_ind'],

|

| 66 |

+

s['test_ind']],

|

| 67 |

+

extend_with=0)

|

| 68 |

+

coverage_result.append(coverage(tests[2]))

|

| 69 |

+

|

| 70 |

+

# Full

|

| 71 |

+

st = SentenceTokenizer(vocab, 30)

|

| 72 |

+

tests, dicts, _ = st.split_train_val_test(s['texts'], s['info'],

|

| 73 |

+

[s['train_ind'],

|

| 74 |

+

s['val_ind'],

|