Commit 5e66110 · Parent(s): 2935122
Committed by: srisurya
Commit message: final

Files changed:

- .gitignore +1 -0
- app.py +163 -0
- images/hindi.jpg +0 -0
- images/test.jpg +0 -0
- images/testocr.png +0 -0
- requirements.txt +12 -0

.gitignore

CHANGED

```diff
@@ -122,6 +122,7 @@ celerybeat.pid
 *.sage.py
 
 # Environments
+ocrenv/
 .env
 .venv
 env/
```

app.py

ADDED


@@ -0,0 +1,163 @@


```python
from transformers import AutoModel, AutoTokenizer, AutoProcessor
import streamlit as st
import os
from PIL import Image
import torch
from torchvision import io
import torchvision.transforms as transforms
import random
import easyocr
import numpy as np

def start():
    st.session_state.start = True

def reset():
    del st.session_state['start']

@st.cache_data
def get_text(image_file, _model, _tokenizer):
    res = _model.chat(_tokenizer, image_file, ocr_type='ocr')
    return res

@st.cache_data
def extract_text_easyocr(_image):
    reader = easyocr.Reader(['hi'], gpu=False)
    results = reader.readtext(np.array(_image))
    # return results
    return " ".join([result[1] for result in results])

@st.cache_resource
def model():
    tokenizer = AutoTokenizer.from_pretrained('srimanth-d/GOT_CPU', trust_remote_code=True)
    model = AutoModel.from_pretrained('srimanth-d/GOT_CPU', trust_remote_code=True, use_safetensors=True, pad_token_id=tokenizer.eos_token_id)
    model = model.eval()
    return model, tokenizer

@st.cache_resource
def highlight_keywords(text, keywords):
    colors = generate_unique_colors(len(keywords))
    highlighted_text = text
    found_keywords = []
    for keyword, color in zip(keywords, colors):
        if keyword.lower() in text.lower():
            highlighted_text = highlighted_text.replace(keyword, f'<mark style="background-color: {color};">{keyword}</mark>')
            found_keywords.append(keyword)
    return highlighted_text, found_keywords

def search():
    st.session_state.search = True

@st.cache_data
def generate_unique_colors(n):
    colors = []
    for i in range(n):
        color = "#{:06x}".format(random.randint(0, 0xFFFFFF))
        while color in colors:
            color = "#{:06x}".format(random.randint(0, 0xFFFFFF))
        colors.append(color)
    return colors

st.title("A Web-Based Text Extraction and Retrieval System")

language = st.selectbox("Select a language:", ["English", "Hindi"])

if language == "English":
    st.subheader("You selected English!")
    st.button("Let's get started", on_click=start)

    if 'start' not in st.session_state:
        st.session_state.start = False

    if 'search' not in st.session_state:
        st.session_state.search = False

    if 'reset' not in st.session_state:
        st.session_state.reset = False

    if st.session_state.start:
        uploaded_file = st.file_uploader("Upload an Image", type=["png", "jpg", "jpeg"])

        if uploaded_file is not None:
            st.subheader("Uploaded Image:")
            st.image(uploaded_file, caption="Uploaded Image", use_column_width=True)

            MODEL, TOKENIZER = model()

            if not os.path.exists("images"):
                os.makedirs("images")
            with open(f"images/{uploaded_file.name}", "wb") as f:
                f.write(uploaded_file.getbuffer())

            extracted_text = get_text(f"images/{uploaded_file.name}", MODEL, TOKENIZER)

            st.subheader("Extracted Text")
            st.write(extracted_text)

            keywords_input = st.text_input("Enter keywords to search within the extracted text (comma-separated):")

            if keywords_input:
                keywords = [keyword.strip() for keyword in keywords_input.split(',')]
                highlighted_text, found_keywords = highlight_keywords(extracted_text, keywords)
                st.button("Search", on_click=search)

                if st.session_state.search:
                    st.subheader("Search Results:")
                    if found_keywords:
                        st.markdown(highlighted_text, unsafe_allow_html=True)
                        st.write(f"Found keywords: {', '.join(found_keywords)}")
                    else:
                        st.warning("No keywords were found in the extracted text.")

                    not_found_keywords = set(keywords) - set(found_keywords)
                    if not_found_keywords:
                        st.error(f"Keywords not found: {', '.join(not_found_keywords)}")

            st.button("Reset", on_click=reset)

elif language == "Hindi":
    st.subheader("You selected HINDI!")
    st.button("Let's get started", on_click=start)

    if 'start' not in st.session_state:
        st.session_state.start = False

    if 'search' not in st.session_state:
        st.session_state.search = False

    if 'reset' not in st.session_state:
        st.session_state.reset = False

    if st.session_state.start:
        uploaded_file = st.file_uploader("Upload an Image", type=["png", "jpg", "jpeg"])

        if uploaded_file is not None:
            st.subheader("Uploaded Image:")
            st.image(uploaded_file, caption="Uploaded Image", use_column_width=True)
            image = Image.open(uploaded_file)
            if not os.path.exists("images"):
                os.makedirs("images")
            with open(f"images/{uploaded_file.name}", "wb") as f:
                f.write(uploaded_file.getbuffer())
            extracted_text_hindi = extract_text_easyocr(image)
            st.subheader("Extracted Text:")
            st.write(extracted_text_hindi)

            keywords_input = st.text_input("Enter keywords to search within the extracted text (comma-separated):")
            if keywords_input:
                keywords = [keyword.strip() for keyword in keywords_input.split(',')]
                highlighted_text, found_keywords = highlight_keywords(extracted_text_hindi, keywords)
                st.button("Search", on_click=search)

                if st.session_state.search:
                    st.subheader("Search Results:")
                    if found_keywords:
                        st.markdown(highlighted_text, unsafe_allow_html=True)
                        st.write(f"Found keywords: {', '.join(found_keywords)}")
                    else:
                        st.warning("No keywords were found in the extracted text.")

                    not_found_keywords = set(keywords) - set(found_keywords)
                    if not_found_keywords:
                        st.error(f"Keywords not found: {', '.join(not_found_keywords)}")
            st.button("Reset", on_click=reset)
```
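The committed `highlight_keywords` tests each keyword case-insensitively (`keyword.lower() in text.lower()`) but highlights it with the case-sensitive `str.replace`, so a keyword typed as `hello` is reported as found in `Hello world` without being highlighted. It also replaces keywords one after another, so a later keyword such as `mark` or `color` can match inside the `<mark style="background-color: ...">` tag inserted for an earlier one, and an empty entry left by a trailing comma makes `str.replace("", ...)` insert markup between every character. The following is a minimal, Streamlit-free sketch of one alternative, not the author's code. It assumes the following: all keywords are matched in a single case-insensitive regex pass; the OCR text is escaped with `html.escape` because the result is rendered with `unsafe_allow_html=True`; and, as a swapped-in technique, colours are evenly spaced light HSV hues instead of random `#rrggbb` values. The helper name `distinct_colors` and its saturation/value settings are hypothetical.

```python
import colorsys
import html
import re


def distinct_colors(n):
    """Return n light hex colours with evenly spaced hues (hypothetical helper).

    Saturation 0.45 and value 1.0 are illustrative choices that keep dark text
    readable on top of every colour.
    """
    colors = []
    for i in range(n):
        r, g, b = colorsys.hsv_to_rgb(i / n, 0.45, 1.0)  # hue spread evenly over [0, 1)
        colors.append("#{:02x}{:02x}{:02x}".format(round(r * 255), round(g * 255), round(b * 255)))
    return colors


def highlight_keywords(text, keywords):
    """Return (html_text, found) with every case-insensitive keyword hit wrapped in <mark>."""
    # Strip whitespace, drop empty entries (e.g. from a trailing comma) and
    # case-insensitive duplicates, keeping the user's input order.
    cleaned, seen = [], set()
    for kw in keywords:
        kw = kw.strip()
        if kw and kw.lower() not in seen:
            seen.add(kw.lower())
            cleaned.append(kw)

    found = [kw for kw in cleaned if kw.lower() in text.lower()]
    if not found:
        return html.escape(text), found

    color_of = dict(zip((kw.lower() for kw in found), distinct_colors(len(found))))
    # One alternation, longest keyword first: overlapping keywords prefer the
    # longer match, and no keyword can match inside markup added for another.
    pattern = re.compile(
        "|".join(re.escape(kw) for kw in sorted(found, key=len, reverse=True)),
        re.IGNORECASE,
    )

    parts, last = [], 0
    for m in pattern.finditer(text):
        parts.append(html.escape(text[last:m.start()]))  # escaped text between hits
        color = color_of.get(m.group(0).lower(), "#ffff00")  # fallback for odd case folds
        # Keep the casing found in the text, not the casing the user typed.
        parts.append(f'<mark style="background-color: {color};">{html.escape(m.group(0))}</mark>')
        last = m.end()
    parts.append(html.escape(text[last:]))
    return "".join(parts), found


if __name__ == "__main__":
    html_out, found = highlight_keywords("Invoice total: 42 USD", ["total", "usd", "tax"])
    print(found)  # ['total', 'usd']
    print(html_out)
```

Returning the escaped HTML together with the list of hits keeps the logic testable outside Streamlit; in the app, the first value would still go to `st.markdown(..., unsafe_allow_html=True)` as in the commit.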

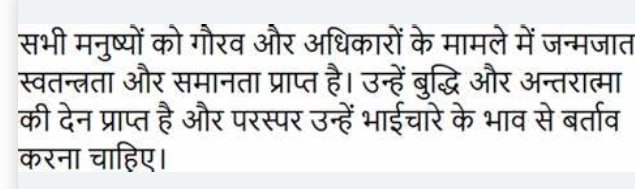

images/hindi.jpg

ADDED


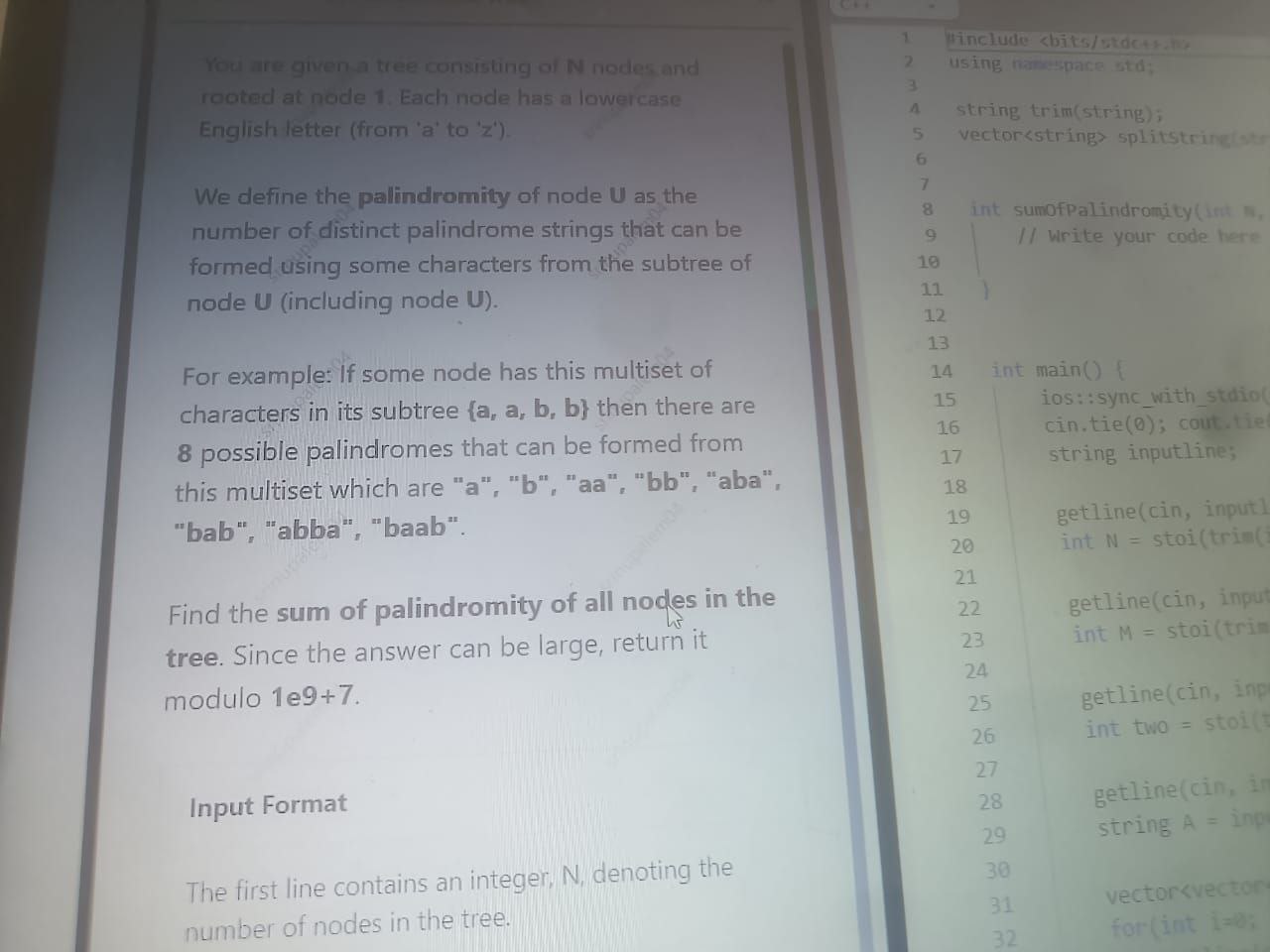

images/test.jpg

ADDED


images/testocr.png

ADDED


requirements.txt

ADDED

@@ -0,0 +1,12 @@

```text
torch
torchvision
torchaudio
transformers
Pillow
verovio
tiktoken
importlib_resources
accelerate>=0.26.0
safetensors
streamlit
easyocr
```