Commit b858330

1 Parent(s): a568da9

Add a model selector

- app.py +78 -48

- config.json +0 -10

- config.py +10 -9

- config.yaml +30 -0

- resource/hugging_face_1.jpg +0 -0

- resource/hugging_face_2.jpg +0 -0

- resource/hugging_face_3.jpg +0 -0

- resource/hugging_face_4.jpg +0 -0

- tools.py +49 -0

app.py

CHANGED

|

@@ -1,51 +1,59 @@

|

|

| 1 |

# -*- coding: utf-8 -*-

|

| 2 |

|

| 3 |

import json

|

| 4 |

-

import os

|

| 5 |

from pathlib import Path

|

| 6 |

|

| 7 |

import gradio as gr

|

| 8 |

import numpy as np

|

| 9 |

-

from doc_ufcn import models

|

| 10 |

-

from doc_ufcn.main import DocUFCN

|

| 11 |

from PIL import Image, ImageDraw

|

| 12 |

|

| 13 |

from config import parse_configurations

|

|

|

|

| 14 |

|

| 15 |

# Load the config

|

| 16 |

-

config = parse_configurations(Path("config.

|

| 17 |

|

| 18 |

-

#

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

|

| 23 |

|

| 24 |

-

#

|

| 25 |

-

|

| 26 |

|

| 27 |

-

# Check that the number of colors is equal to the number of classes -1

|

| 28 |

-

assert len(classes) - 1 == len(

|

| 29 |

-

classes_colors

|

| 30 |

-

), f"The parameter classes_colors was filled with the wrong number of colors. {len(classes)-1} colors are expected instead of {len(classes_colors)}."

|

| 31 |

|

| 32 |

-

|

| 33 |

-

|

| 34 |

-

|

| 35 |

|

| 36 |

-

|

| 37 |

-

|

| 38 |

-

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

|

| 42 |

-

model.

|

| 43 |

|

| 44 |

|

| 45 |

-

def query_image(image):

|

| 46 |

"""

|

| 47 |

-

|

| 48 |

|

|

|

|

| 49 |

:param image: An image to predict

|

| 50 |

:return: Image and dict, an image with the predictions and a

|

| 51 |

dictionary mapping an object idx (starting from 1) to a dictionary describing the detected object:

|

|

@@ -54,8 +62,11 @@ def query_image(image):

|

|

| 54 |

- `channel` key : str, the name of the predicted class.

|

| 55 |

"""

|

| 56 |

|

| 57 |

# Make a prediction with the model

|

| 58 |

-

detected_polygons, probabilities, mask, overlap = model.predict(

|

| 59 |

input_image=image, raw_output=True, mask_output=True, overlap_output=True

|

| 60 |

)

|

| 61 |

|

|

@@ -70,12 +81,12 @@ def query_image(image):

|

|

| 70 |

|

| 71 |

# Create the polygons on the copy of the image for each class with the corresponding color

|

| 72 |

# We do not draw polygons of the background channel (channel 0)

|

| 73 |

-

for channel in range(1,

|

| 74 |

for i, polygon in enumerate(detected_polygons[channel]):

|

| 75 |

# Draw the polygons on the image copy.

|

| 76 |

# Loop through the class_colors list (channel 1 has color 0)

|

| 77 |

ImageDraw.Draw(img2).polygon(

|

| 78 |

-

polygon["polygon"], fill=

|

| 79 |

)

|

| 80 |

|

| 81 |

# Build the dictionary

|

|

@@ -88,34 +99,55 @@ def query_image(image):

|

|

| 88 |

# Confidence that the model predicts the polygon in the right place

|

| 89 |

"confidence": polygon["confidence"],

|

| 90 |

# The channel on which the polygon is predicted

|

| 91 |

-

"channel": classes[channel],

|

| 92 |

}

|

| 93 |

)

|

| 94 |

|

| 95 |

# Return the blend of the images and the dictionary formatted in json

|

| 96 |

-

return Image.blend(image, img2, 0.5), json.dumps(predict, indent=

|

| 97 |

|

| 98 |

|

| 99 |

-

|

| 100 |

|

| 101 |

# Create app title

|

| 102 |

gr.Markdown(f"# {config['title']}")

|

| 103 |

|

| 104 |

# Create app description

|

| 105 |

gr.Markdown(config["description"])

|

| 106 |

|

| 107 |

# Create a first row of blocks

|

| 108 |

with gr.Row():

|

| 109 |

-

|

| 110 |

# Create a column on the left

|

| 111 |

with gr.Column():

|

| 112 |

-

|

| 113 |

# Generates an image that can be uploaded by a user

|

| 114 |

image = gr.Image()

|

| 115 |

|

| 116 |

# Create a row under the image

|

| 117 |

with gr.Row():

|

| 118 |

-

|

| 119 |

# Generate a button to clear the inputs and outputs

|

| 120 |

clear_button = gr.Button("Clear", variant="secondary")

|

| 121 |

|

|

@@ -124,25 +156,21 @@ with gr.Blocks() as process_image:

|

|

| 124 |

|

| 125 |

# Create a row under the buttons

|

| 126 |

with gr.Row():

|

| 127 |

-

|

| 128 |

-

|

| 129 |

-

examples = gr.Examples(inputs=image, examples=config["examples"])

|

| 130 |

|

| 131 |

# Create a column on the right

|

| 132 |

with gr.Column():

|

| 133 |

-

|

| 134 |

-

|

| 135 |

-

|

| 136 |

|

| 137 |

# Create a row under the predicted image

|

| 138 |

with gr.Row():

|

| 139 |

-

|

| 140 |

# Create a column so that the JSON output doesn't take the full size of the page

|

| 141 |

with gr.Column():

|

| 142 |

-

|

| 143 |

-

# Create a collapsible region

|

| 144 |

with gr.Accordion("JSON"):

|

| 145 |

-

|

| 146 |

# Generates a json with the model predictions

|

| 147 |

json_output = gr.JSON()

|

| 148 |

|

|

@@ -154,7 +182,9 @@ with gr.Blocks() as process_image:

|

|

| 154 |

)

|

| 155 |

|

| 156 |

# Create the button to submit the prediction

|

| 157 |

-

submit_button.click(

|

| 158 |

|

| 159 |

-

# Launch the application

|

| 160 |

process_image.launch()

|

|

|

|

| 1 |

# -*- coding: utf-8 -*-

|

| 2 |

|

| 3 |

import json

|

|

|

|

| 4 |

from pathlib import Path

|

| 5 |

|

| 6 |

import gradio as gr

|

| 7 |

import numpy as np

|

| 8 |

from PIL import Image, ImageDraw

|

| 9 |

|

| 10 |

from config import parse_configurations

|

| 11 |

+

from tools import UFCNModel

|

| 12 |

|

| 13 |

# Load the config

|

| 14 |

+

config = parse_configurations(Path("config.yaml"))

|

| 15 |

|

| 16 |

+

# Check that the paths of the examples are valid

|

| 17 |

+

for example in config["examples"]:

|

| 18 |

+

assert Path.exists(

|

| 19 |

+

Path(example)

|

| 20 |

+

), f"The path of the image '{example}' does not exist."

|

| 21 |

+

|

| 22 |

+

# Cached models, maps model_name to UFCNModel object

|

| 23 |

+

MODELS = {

|

| 24 |

+

model["model_name"]: UFCNModel(

|

| 25 |

+

name=model["model_name"],

|

| 26 |

+

colors=model["classes_colors"],

|

| 27 |

+

title=model["title"],

|

| 28 |

+

description=model["description"],

|

| 29 |

+

)

|

| 30 |

+

for model in config["models"]

|

| 31 |

+

}

|

| 32 |

|

| 33 |

+

# Create a list of model names

|

| 34 |

+

models_name = list(MODELS)

|

| 35 |

|

| 36 |

|

| 37 |

+

def load_model(model_name) -> UFCNModel:

|

| 38 |

+

"""

|

| 39 |

+

Retrieve the model, and load its parameters/files if it wasn't done before.

|

| 40 |

|

| 41 |

+

:param model_name: The name of the selected model

|

| 42 |

+

:return: The UFCNModel instance selected

|

| 43 |

+

"""

|

| 44 |

+

assert model_name in MODELS

|

| 45 |

+

model = MODELS[model_name]

|

| 46 |

+

# Load the model's files if it wasn't done before

|

| 47 |

+

if not model.loaded:

|

| 48 |

+

model.load()

|

| 49 |

+

return model

|

| 50 |

|

| 51 |

|

| 52 |

+

def query_image(model_name: gr.Dropdown, image: gr.Image) -> tuple[Image.Image, str]:

|

| 53 |

"""

|

| 54 |

+

Loads a model and draws the predicted polygons with the color provided by the model on an image

|

| 55 |

|

| 56 |

+

:param model_name: The name of the model selected in the dropdown

|

| 57 |

:param image: An image to predict

|

| 58 |

:return: Image and dict, an image with the predictions and a

|

| 59 |

dictionary mapping an object idx (starting from 1) to a dictionary describing the detected object:

|

|

|

|

| 62 |

- `channel` key : str, the name of the predicted class.

|

| 63 |

"""

|

| 64 |

|

| 65 |

+

# Load the model, along with its classes and class colors

|

| 66 |

+

ufcn_model = load_model(model_name)

|

| 67 |

+

|

| 68 |

# Make a prediction with the model

|

| 69 |

+

detected_polygons, probabilities, mask, overlap = ufcn_model.model.predict(

|

| 70 |

input_image=image, raw_output=True, mask_output=True, overlap_output=True

|

| 71 |

)

|

| 72 |

|

|

|

|

| 81 |

|

| 82 |

# Create the polygons on the copy of the image for each class with the corresponding color

|

| 83 |

# We do not draw polygons of the background channel (channel 0)

|

| 84 |

+

for channel in range(1, ufcn_model.num_channels):

|

| 85 |

for i, polygon in enumerate(detected_polygons[channel]):

|

| 86 |

# Draw the polygons on the image copy.

|

| 87 |

# Loop through the class_colors list (channel 1 has color 0)

|

| 88 |

ImageDraw.Draw(img2).polygon(

|

| 89 |

+

polygon["polygon"], fill=ufcn_model.colors[channel - 1]

|

| 90 |

)

|

| 91 |

|

| 92 |

# Build the dictionary

|

|

|

|

| 99 |

# Confidence that the model predicts the polygon in the right place

|

| 100 |

"confidence": polygon["confidence"],

|

| 101 |

# The channel on which the polygon is predicted

|

| 102 |

+

"channel": ufcn_model.classes[channel],

|

| 103 |

}

|

| 104 |

)

|

| 105 |

|

| 106 |

# Return the blend of the images and the dictionary formatted in json

|

| 107 |

+

return Image.blend(image, img2, 0.5), json.dumps(predict, indent=2)

|

| 108 |

|

| 109 |

|

| 110 |

+

def update_model(model_name: gr.Dropdown) -> tuple[str, str]:

|

| 111 |

+

"""

|

| 112 |

+

Update the model title and description to those of the selected model

|

| 113 |

|

| 114 |

+

:param model_name: The name of the selected model

|

| 115 |

+

:return: The new title and the new description

|

| 116 |

+

"""

|

| 117 |

+

return f"## {MODELS[model_name].title}", MODELS[model_name].description

|

| 118 |

+

|

| 119 |

+

|

| 120 |

+

with gr.Blocks() as process_image:

|

| 121 |

# Create app title

|

| 122 |

gr.Markdown(f"# {config['title']}")

|

| 123 |

|

| 124 |

# Create app description

|

| 125 |

gr.Markdown(config["description"])

|

| 126 |

|

| 127 |

+

# Create dropdown button

|

| 128 |

+

model_name = gr.Dropdown(models_name, value=models_name[0], label="Models")

|

| 129 |

+

|

| 130 |

+

# Get the selected model

|

| 131 |

+

selected_model: UFCNModel = MODELS[model_name.value]

|

| 132 |

+

|

| 133 |

+

# Create model title

|

| 134 |

+

model_title = gr.Markdown(f"## {selected_model.title}")

|

| 135 |

+

|

| 136 |

+

# Create model description

|

| 137 |

+

model_description = gr.Markdown(selected_model.description)

|

| 138 |

+

|

| 139 |

+

# Change the model title and description when the selected model changes

|

| 140 |

+

model_name.change(update_model, model_name, [model_title, model_description])

|

| 141 |

+

|

| 142 |

# Create a first row of blocks

|

| 143 |

with gr.Row():

|

|

|

|

| 144 |

# Create a column on the left

|

| 145 |

with gr.Column():

|

|

|

|

| 146 |

# Generates an image that can be uploaded by a user

|

| 147 |

image = gr.Image()

|

| 148 |

|

| 149 |

# Create a row under the image

|

| 150 |

with gr.Row():

|

|

|

|

| 151 |

# Generate a button to clear the inputs and outputs

|

| 152 |

clear_button = gr.Button("Clear", variant="secondary")

|

| 153 |

|

|

|

|

| 156 |

|

| 157 |

# Create a row under the buttons

|

| 158 |

with gr.Row():

|

| 159 |

+

# Generate example images that can be used as input image for every model

|

| 160 |

+

gr.Examples(config["examples"], inputs=image)

|

|

|

|

| 161 |

|

| 162 |

# Create a column on the right

|

| 163 |

with gr.Column():

|

| 164 |

+

with gr.Row():

|

| 165 |

+

# Generates an output image that does not support upload

|

| 166 |

+

image_output = gr.Image(interactive=False)

|

| 167 |

|

| 168 |

# Create a row under the predicted image

|

| 169 |

with gr.Row():

|

|

|

|

| 170 |

# Create a column so that the JSON output doesn't take the full size of the page

|

| 171 |

with gr.Column():

|

| 172 |

+

# Create a collapsible region

|

|

|

|

| 173 |

with gr.Accordion("JSON"):

|

|

|

|

| 174 |

# Generates a json with the model predictions

|

| 175 |

json_output = gr.JSON()

|

| 176 |

|

|

|

|

| 182 |

)

|

| 183 |

|

| 184 |

# Create the button to submit the prediction

|

| 185 |

+

submit_button.click(

|

| 186 |

+

query_image, inputs=[model_name, image], outputs=[image_output, json_output]

|

| 187 |

+

)

|

| 188 |

|

| 189 |

+

# Launch the application with the public mode (True or False)

|

| 190 |

process_image.launch()

|
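The channel loop in `query_image` skips the background channel 0 and uses `colors[channel - 1]` for each remaining channel. The sketch below isolates that indexing without any imaging library. The `build_predictions` helper, the sample polygons, the class names and the extra `color` key are illustrative assumptions, not part of the commit, and the `ImageDraw` drawing step is left out.

```python
import json


def build_predictions(detected_polygons, classes, colors):
    """Map an object index (starting from 1) to its description.

    Assumption: `detected_polygons` maps a channel index to a list of
    dicts with "polygon" and "confidence" keys.
    """
    # One color is expected per non-background class
    assert len(classes) - 1 == len(colors)
    predictions = {}
    idx = 1
    # Channel 0 is the background and is skipped
    for channel in range(1, len(classes)):
        for polygon in detected_polygons.get(channel, []):
            predictions[idx] = {
                "polygon": polygon["polygon"],
                "confidence": polygon["confidence"],
                "channel": classes[channel],
                # Channel 1 uses colors[0], channel 2 uses colors[1], ...
                "color": colors[channel - 1],
            }
            idx += 1
    return predictions


# Hypothetical sample data, for illustration only
sample = {
    1: [{"polygon": [(0, 0), (10, 0), (10, 5)], "confidence": 0.9}],
    2: [{"polygon": [(2, 2), (4, 2), (4, 8)], "confidence": 0.7}],
}
result = build_predictions(
    sample, ["background", "horizontal", "vertical"], ["green", "blue"]
)
print(json.dumps(result, indent=2))
```

Keeping the color lookup next to the class lookup makes the off-by-one between the two lists explicit: `classes` includes the background, `colors` does not.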

config.json

DELETED

|

@@ -1,10 +0,0 @@

|

|

| 1 |

-

{

|

| 2 |

-

"model_name": "doc-ufcn-generic-historical-line",

|

| 3 |

-

"classes_colors": ["green"],

|

| 4 |

-

"title":"doc-ufcn Line Detection Demo",

|

| 5 |

-

"description":"A demo showing a prediction from the [Teklia/doc-ufcn-generic-historical-line](https://huggingface.co/Teklia/doc-ufcn-generic-historical-line) model. The generic historical line detection model predicts text lines from document images.",

|

| 6 |

-

"examples":[

|

| 7 |

-

"resource/hugging_face_1.jpg",

|

| 8 |

-

"resource/hugging_face_2.jpg"

|

| 9 |

-

]

|

| 10 |

-

}

|

config.py

CHANGED

|

@@ -7,21 +7,22 @@ from teklia_toolbox.config import ConfigParser

|

|

| 7 |

|

| 8 |

def parse_configurations(config_path: Path):

|

| 9 |

"""

|

| 10 |

-

Parse multiple

|

| 11 |

of configuration for the HuggingFace app

|

| 12 |

|

| 13 |

-

:param config_path: pathlib.Path, Path to the .

|

| 14 |

:return: dict, containing the configuration. Ensures config is complete and with correct typing

|

| 15 |

"""

|

| 16 |

-

|

| 17 |

parser = ConfigParser()

|

| 18 |

|

| 19 |

-

parser.add_option(

|

| 20 |

-

|

| 21 |

-

)

|

| 22 |

-

parser.add_option("classes_colors", type=list, default=["green"])

|

| 23 |

-

parser.add_option("title", type=str)

|

| 24 |

-

parser.add_option("description", type=str)

|

| 25 |

parser.add_option("examples", type=list)

|

| 26 |

|

| 27 |

return parser.parse(config_path)

|

|

|

|

| 7 |

|

| 8 |

def parse_configurations(config_path: Path):

|

| 9 |

"""

|

| 10 |

+

Parse multiple YAML configuration files into a single source

|

| 11 |

of configuration for the HuggingFace app

|

| 12 |

|

| 13 |

+

:param config_path: pathlib.Path, Path to the .yaml config file

|

| 14 |

:return: dict, containing the configuration. Ensures config is complete and with correct typing

|

| 15 |

"""

|

|

|

|

| 16 |

parser = ConfigParser()

|

| 17 |

|

| 18 |

+

parser.add_option("title")

|

| 19 |

+

parser.add_option("description")

|

| 20 |

parser.add_option("examples", type=list)

|

| 21 |

+

model_parser = parser.add_subparser("models", many=True)

|

| 22 |

+

|

| 23 |

+

model_parser.add_option("model_name")

|

| 24 |

+

model_parser.add_option("title")

|

| 25 |

+

model_parser.add_option("description")

|

| 26 |

+

model_parser.add_option("classes_colors", type=list)

|

| 27 |

|

| 28 |

return parser.parse(config_path)

|
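The new `parse_configurations` expects top-level `title`, `description` and `examples` options plus a `models` list whose entries each carry `model_name`, `title`, `description` and `classes_colors`. As a rough illustration of that shape, here is a plain-Python check that does not use `teklia_toolbox` at all. The `validate_config` function and the sample values are hypothetical, and the real parser may behave differently (for example regarding defaults or extra keys).

```python
def validate_config(data):
    """Return `data` unchanged if it has the expected shape, else raise."""
    for key in ("title", "description"):
        if not isinstance(data.get(key), str):
            raise ValueError(f"'{key}' must be a string")
    if not isinstance(data.get("examples"), list):
        raise ValueError("'examples' must be a list")
    models = data.get("models")
    if not isinstance(models, list) or not models:
        raise ValueError("'models' must be a non-empty list")
    for model in models:
        for key in ("model_name", "title", "description"):
            if not isinstance(model.get(key), str):
                raise ValueError(f"models[].{key} must be a string")
        if not isinstance(model.get("classes_colors"), list):
            raise ValueError("models[].classes_colors must be a list")
    return data


# Hand-written stand-in for the parsed YAML; descriptions are placeholders
sample = {
    "title": "Teklia - Doc-UFCN Demo",
    "description": "placeholder description",
    "examples": ["resource/hugging_face_1.jpg"],
    "models": [
        {
            "model_name": "doc-ufcn-generic-historical-line",
            "title": "Doc-UFCN Generic historical line detection",
            "description": "placeholder description",
            "classes_colors": ["green"],
        }
    ],
}
validate_config(sample)
```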

config.yaml

ADDED

|

@@ -0,0 +1,30 @@

|

|

| 1 |

+

---

|

| 2 |

+

title: Teklia - Doc-UFCN Demo

|

| 3 |

+

description: >-

|

| 4 |

+

[TEKLIA](https://teklia.com/)’s Document Layout Analysis on historical documents. For modern documents, see [ocelus.teklia.com](https://ocelus.teklia.com).

|

| 5 |

+

examples:

|

| 6 |

+

- resource/hugging_face_1.jpg

|

| 7 |

+

- resource/hugging_face_2.jpg

|

| 8 |

+

- resource/hugging_face_3.jpg

|

| 9 |

+

- resource/hugging_face_4.jpg

|

| 10 |

+

|

| 11 |

+

models:

|

| 12 |

+

- model_name: doc-ufcn-generic-historical-line

|

| 13 |

+

title: Doc-UFCN Generic historical line detection

|

| 14 |

+

description: >-

|

| 15 |

+

The [generic historical line detection model](https://huggingface.co/Teklia/doc-ufcn-generic-historical-line) predicts text lines from document images. Please select an image from the examples below or upload your own image!

|

| 16 |

+

classes_colors:

|

| 17 |

+

- green

|

| 18 |

+

- model_name: doc-ufcn-huginmunin-line

|

| 19 |

+

title: Doc-UFCN Hugin-Munin line detection

|

| 20 |

+

description: >-

|

| 21 |

+

The [Hugin-Munin line detection model](https://huggingface.co/Teklia/doc-ufcn-huginmunin-line) predicts horizontal and vertical text lines from Hugin-Munin document images. Please select an image from the examples below or upload your own image!

|

| 22 |

+

classes_colors:

|

| 23 |

+

- green

|

| 24 |

+

- blue

|

| 25 |

+

- model_name: doc-ufcn-generic-page

|

| 26 |

+

title: Doc-UFCN Generic page detection

|

| 27 |

+

description: >-

|

| 28 |

+

The [generic page detection model](https://huggingface.co/Teklia/doc-ufcn-generic-page) predicts single pages from document images. Please select an image from the examples below or upload your own image!

|

| 29 |

+

classes_colors:

|

| 30 |

+

- green

|

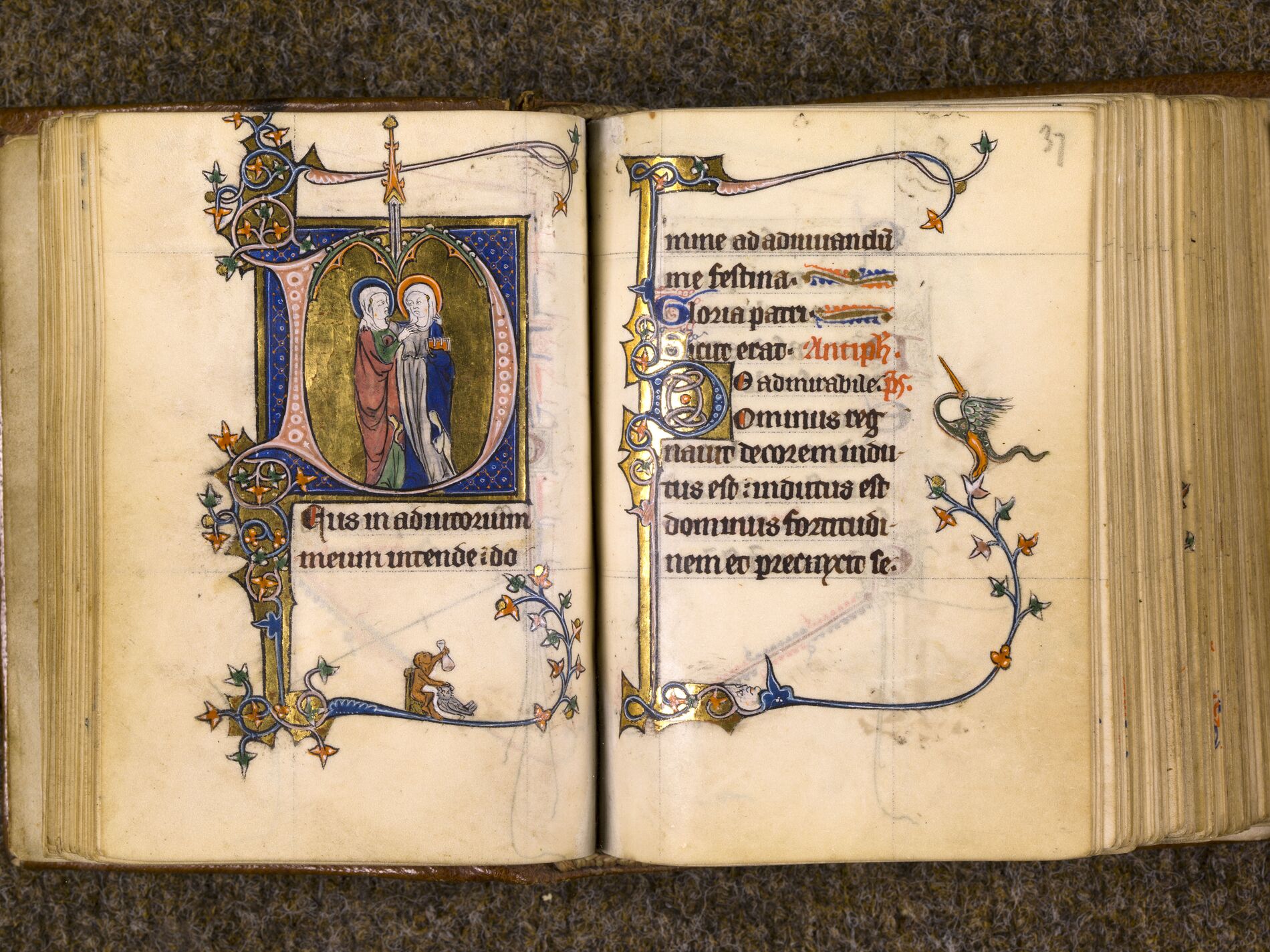

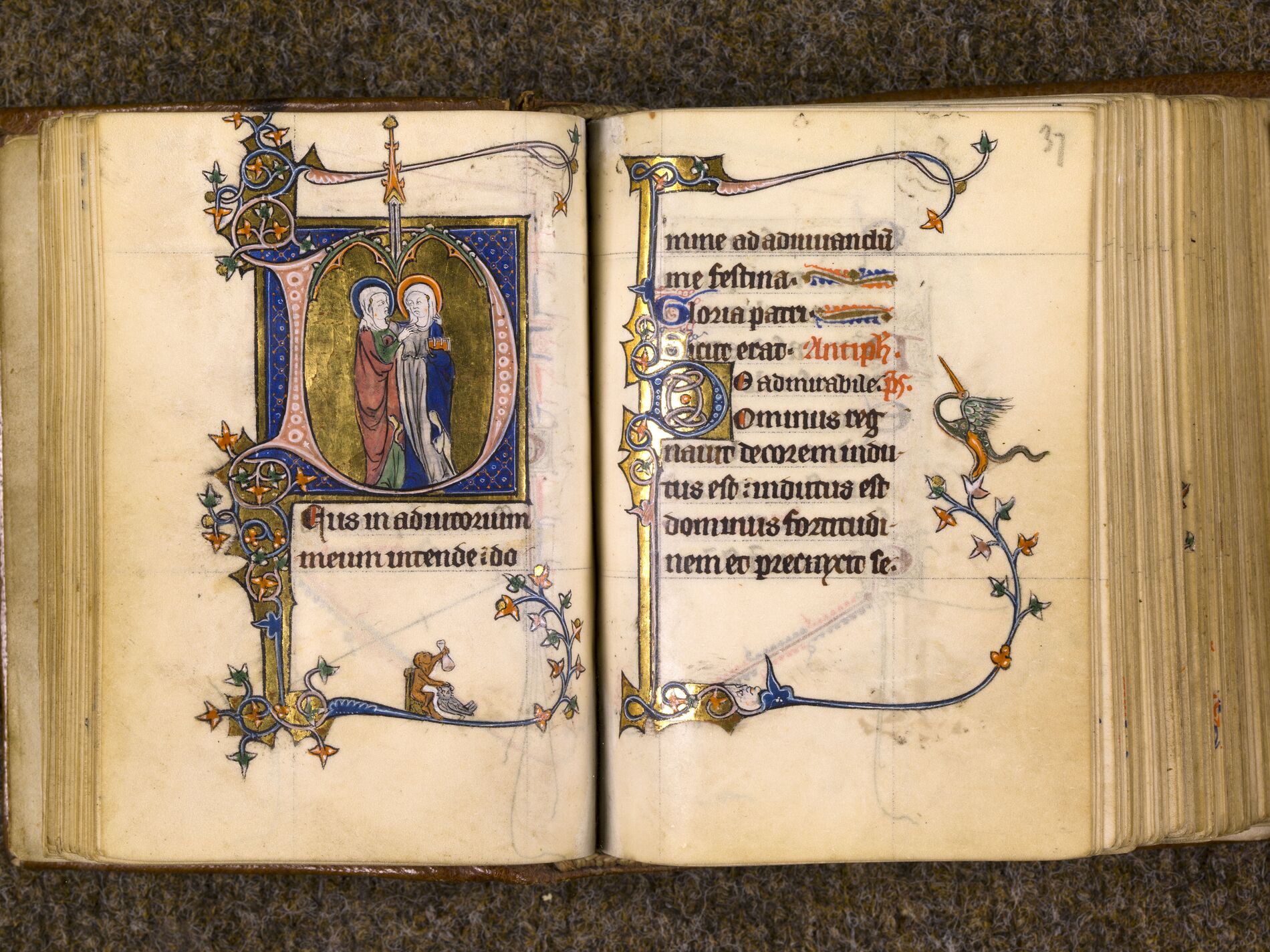

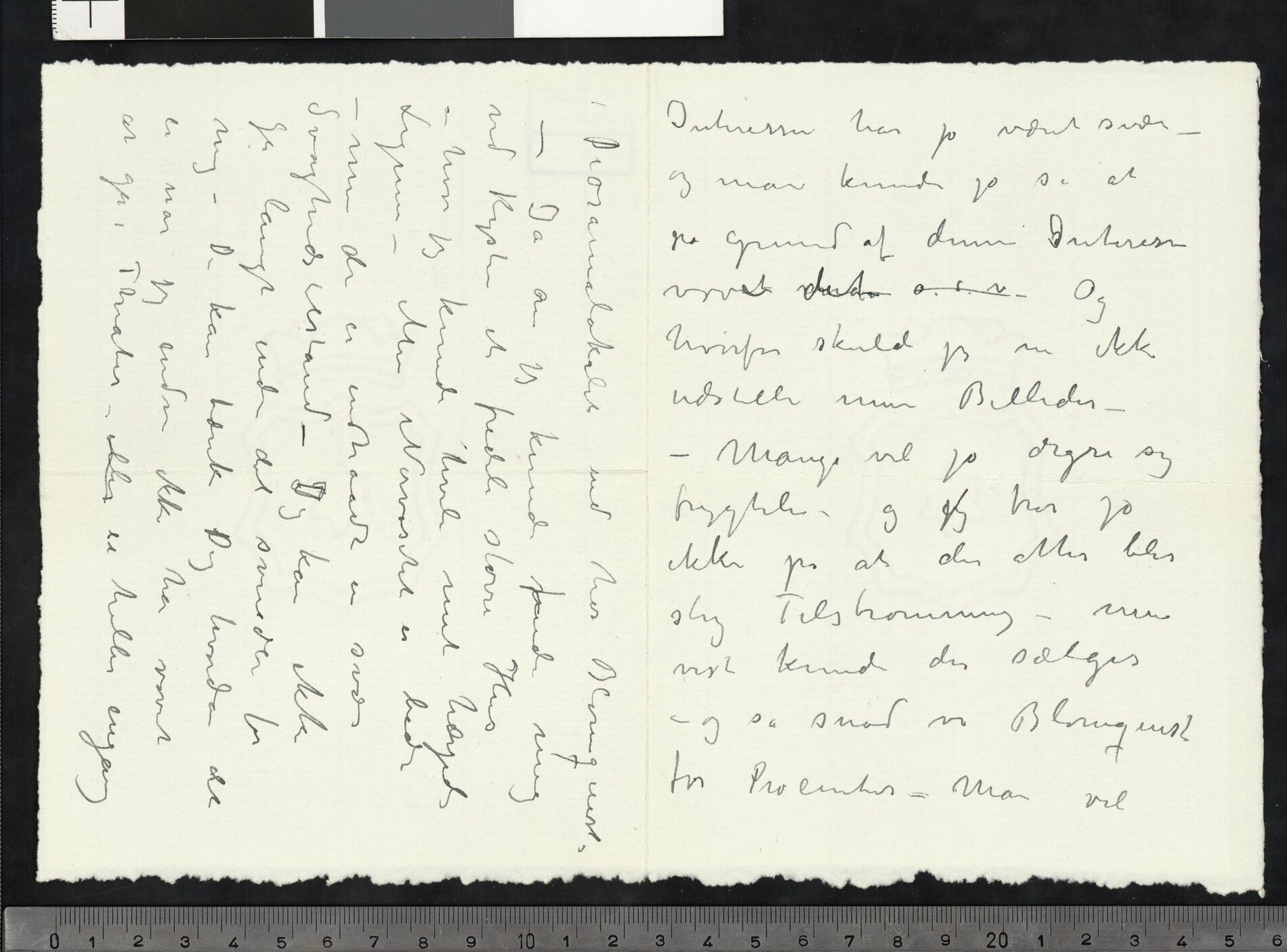

resource/hugging_face_1.jpg

CHANGED

|

|

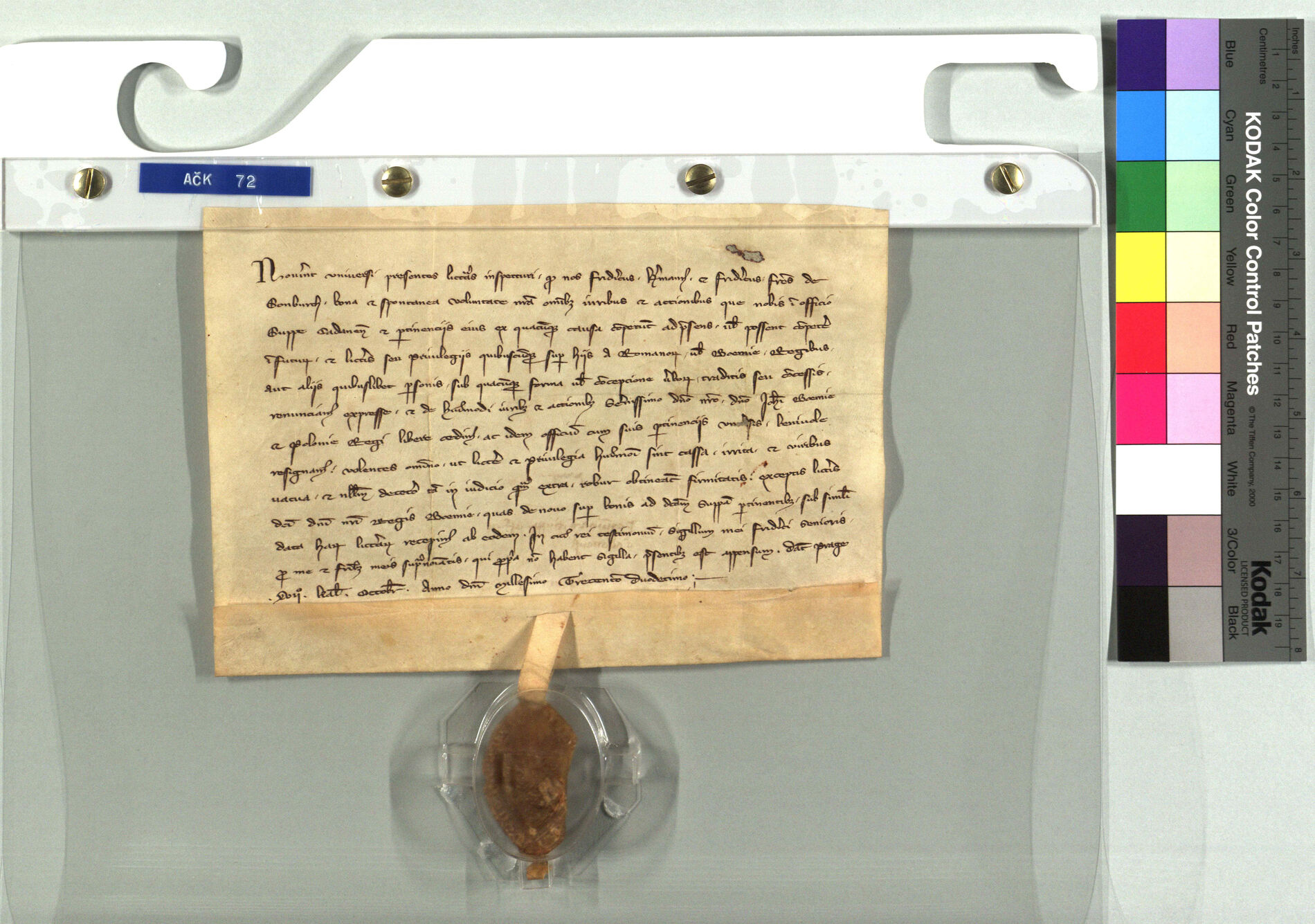

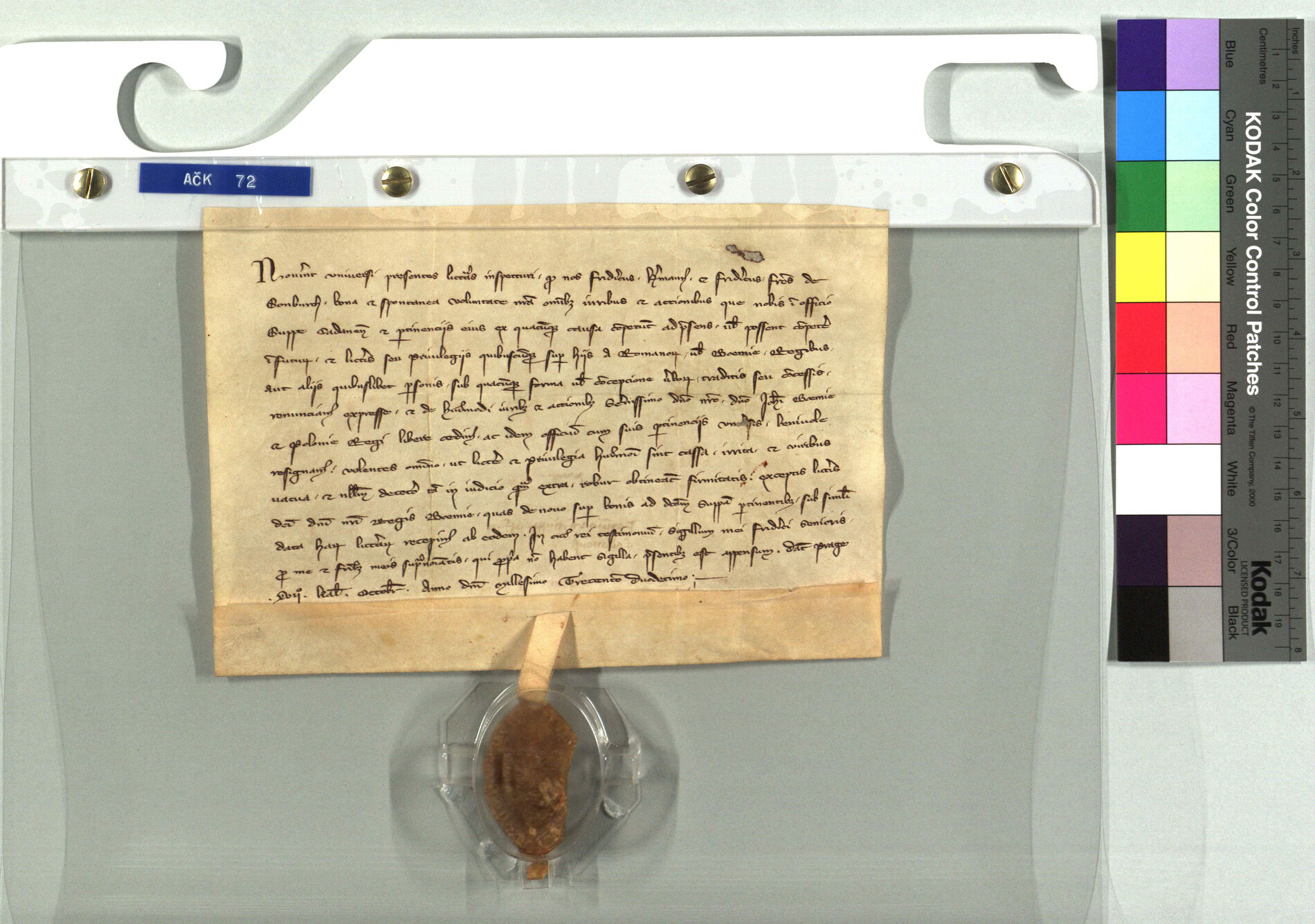

resource/hugging_face_2.jpg

CHANGED

|

|

resource/hugging_face_3.jpg

ADDED

|

resource/hugging_face_4.jpg

ADDED

|

tools.py

ADDED

|

@@ -0,0 +1,49 @@

|

|

| 1 |

+

# -*- coding: utf-8 -*-

|

| 2 |

+

|

| 3 |

+

from dataclasses import dataclass, field

|

| 4 |

+

|

| 5 |

+

from doc_ufcn import models

|

| 6 |

+

from doc_ufcn.main import DocUFCN

|

| 7 |

+

|

| 8 |

+

|

| 9 |

+

@dataclass

|

| 10 |

+

class UFCNModel:

|

| 11 |

+

name: str

|

| 12 |

+

colors: list

|

| 13 |

+

title: str

|

| 14 |

+

description: str

|

| 15 |

+

classes: list = field(default_factory=list)

|

| 16 |

+

model: DocUFCN = None

|

| 17 |

+

|

| 18 |

+

def get_class_name(self, channel_idx):

|

| 19 |

+

return self.classes[channel_idx]

|

| 20 |

+

|

| 21 |

+

@property

|

| 22 |

+

def loaded(self):

|

| 23 |

+

return self.model is not None

|

| 24 |

+

|

| 25 |

+

@property

|

| 26 |

+

def num_channels(self):

|

| 27 |

+

return len(self.classes)

|

| 28 |

+

|

| 29 |

+

def load(self):

|

| 30 |

+

# Download the model

|

| 31 |

+

model_path, parameters = models.download_model(name=self.name)

|

| 32 |

+

|

| 33 |

+

# Store classes

|

| 34 |

+

self.classes = parameters["classes"]

|

| 35 |

+

|

| 36 |

+

# Check that the number of colors is equal to the number of classes -1

|

| 37 |

+

assert self.num_channels - 1 == len(

|

| 38 |

+

self.colors

|

| 39 |

+

), f"The parameter classes_colors was filled with the wrong number of colors. {self.num_channels-1} colors are expected instead of {len(self.colors)}."

|

| 40 |

+

|

| 41 |

+

# Load the model

|

| 42 |

+

self.model = DocUFCN(

|

| 43 |

+

no_of_classes=len(self.classes),

|

| 44 |

+

model_input_size=parameters["input_size"],

|

| 45 |

+

device="cpu",

|

| 46 |

+

)

|

| 47 |

+

self.model.load(

|

| 48 |

+

model_path=model_path, mean=parameters["mean"], std=parameters["std"]

|

| 49 |

+

)

|
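`UFCNModel` defers the download and weight loading until `load()` is called, and `load_model` in app.py only calls it when `loaded` is false. The following sketch shows the same lazy-loading pattern without `doc_ufcn`. The `LazyModel` class, `fake_loader` and the model names are hypothetical stand-ins, and raising `KeyError` instead of using `assert` is a design choice of this sketch, not of the commit.

```python
from dataclasses import dataclass
from typing import Any, Callable, Optional


@dataclass
class LazyModel:
    name: str
    # Hypothetical stand-in for "download the model, then load its weights"
    loader: Callable[[str], Any]
    model: Optional[Any] = None

    @property
    def loaded(self) -> bool:
        return self.model is not None

    def load(self) -> None:
        self.model = self.loader(self.name)


calls = []


def fake_loader(name):
    # Record each call so repeated loads would be visible
    calls.append(name)
    return f"weights for {name}"


# Every model is registered up front, but none is loaded yet
MODELS = {name: LazyModel(name, fake_loader) for name in ("model-a", "model-b")}


def load_model(model_name):
    if model_name not in MODELS:
        raise KeyError(f"Unknown model: {model_name}")
    model = MODELS[model_name]
    # Load the model's files only on first use
    if not model.loaded:
        model.load()
    return model


load_model("model-a")
load_model("model-a")
print(calls)  # the loader ran once for "model-a"
```

With this arrangement the app starts quickly and only pays the loading cost for models a user actually selects.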