jhaozhuang committed · Commit 77771e4 · 1 Parent(s): e826d2f

app

This view is limited to 50 files because it contains too many changes.

- BidirectionalTranslation/LICENSE +26 -0

- BidirectionalTranslation/README.md +72 -0

- BidirectionalTranslation/data/__init__.py +100 -0

- BidirectionalTranslation/data/aligned_dataset.py +60 -0

- BidirectionalTranslation/data/base_dataset.py +164 -0

- BidirectionalTranslation/data/image_folder.py +66 -0

- BidirectionalTranslation/data/singleCo_dataset.py +85 -0

- BidirectionalTranslation/data/singleSr_dataset.py +73 -0

- BidirectionalTranslation/models/__init__.py +61 -0

- BidirectionalTranslation/models/base_model.py +277 -0

- BidirectionalTranslation/models/cycle_ganstft_model.py +103 -0

- BidirectionalTranslation/models/networks.py +1375 -0

- BidirectionalTranslation/options/base_options.py +142 -0

- BidirectionalTranslation/options/test_options.py +19 -0

- BidirectionalTranslation/requirements.txt +8 -0

- BidirectionalTranslation/scripts/test_western2manga.sh +49 -0

- BidirectionalTranslation/test.py +71 -0

- BidirectionalTranslation/util/html.py +86 -0

- BidirectionalTranslation/util/util.py +136 -0

- BidirectionalTranslation/util/visualizer.py +221 -0

- app.py +507 -0

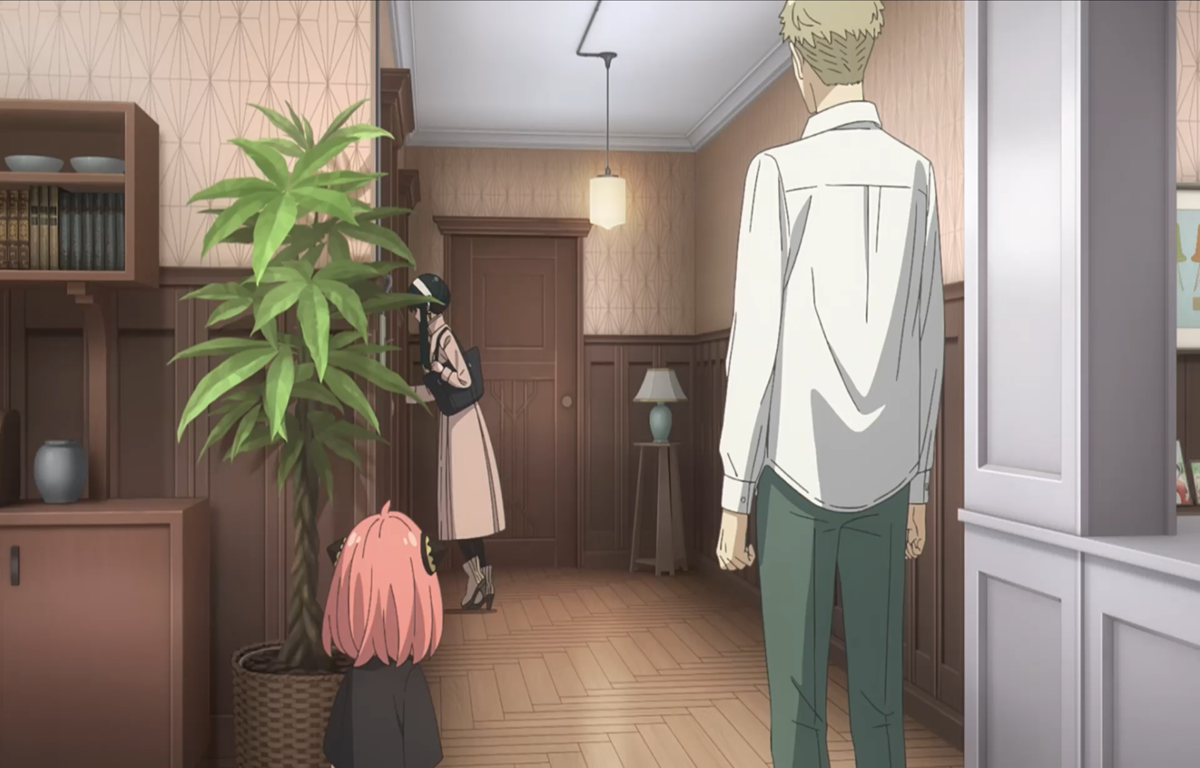

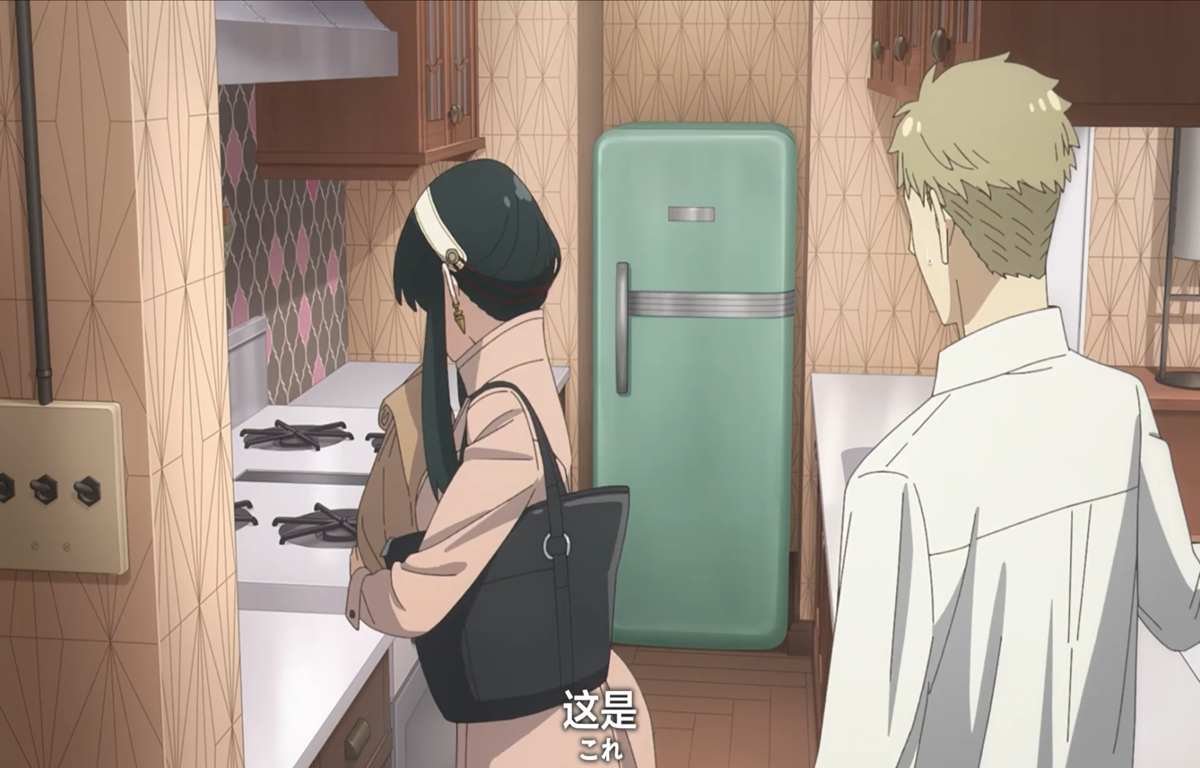

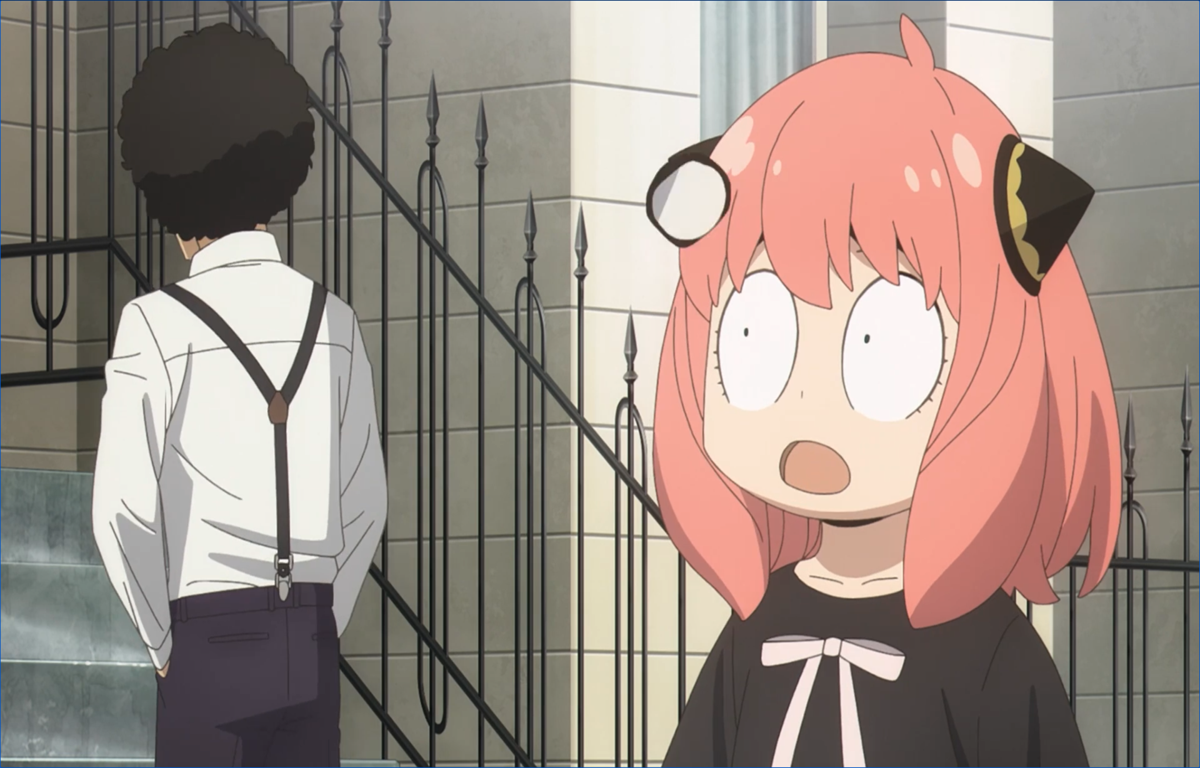

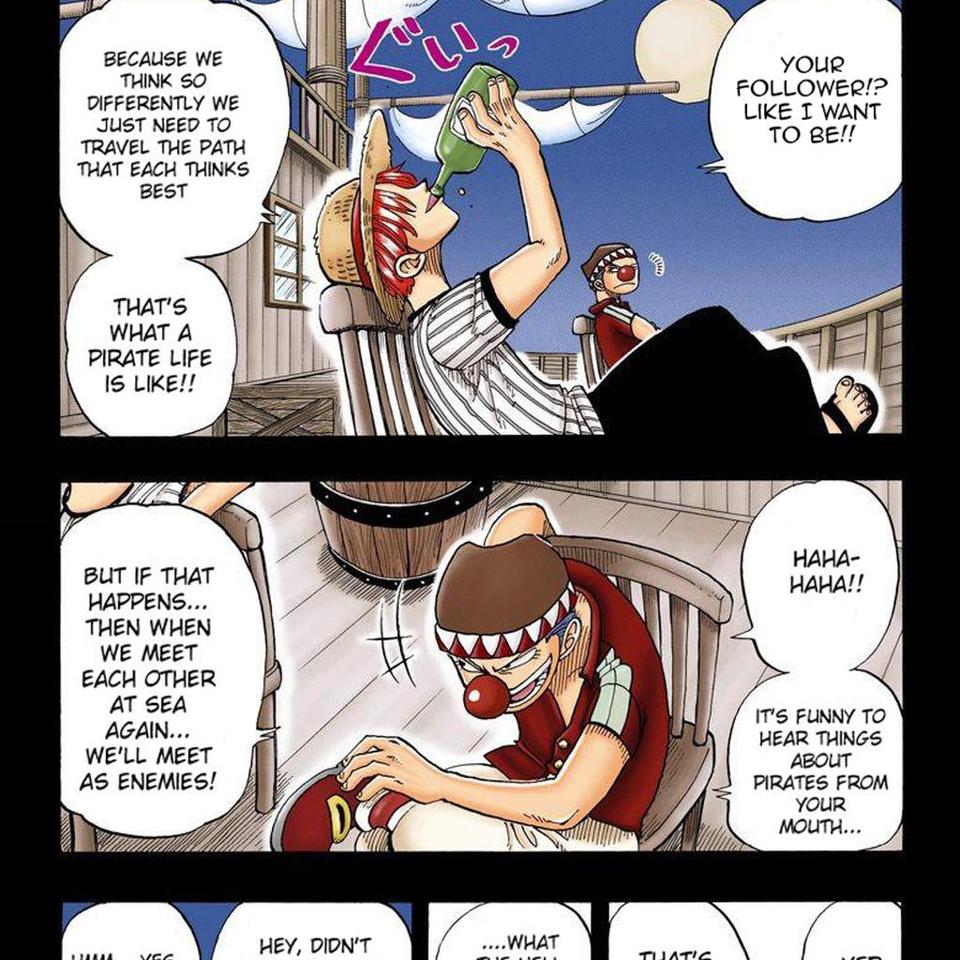

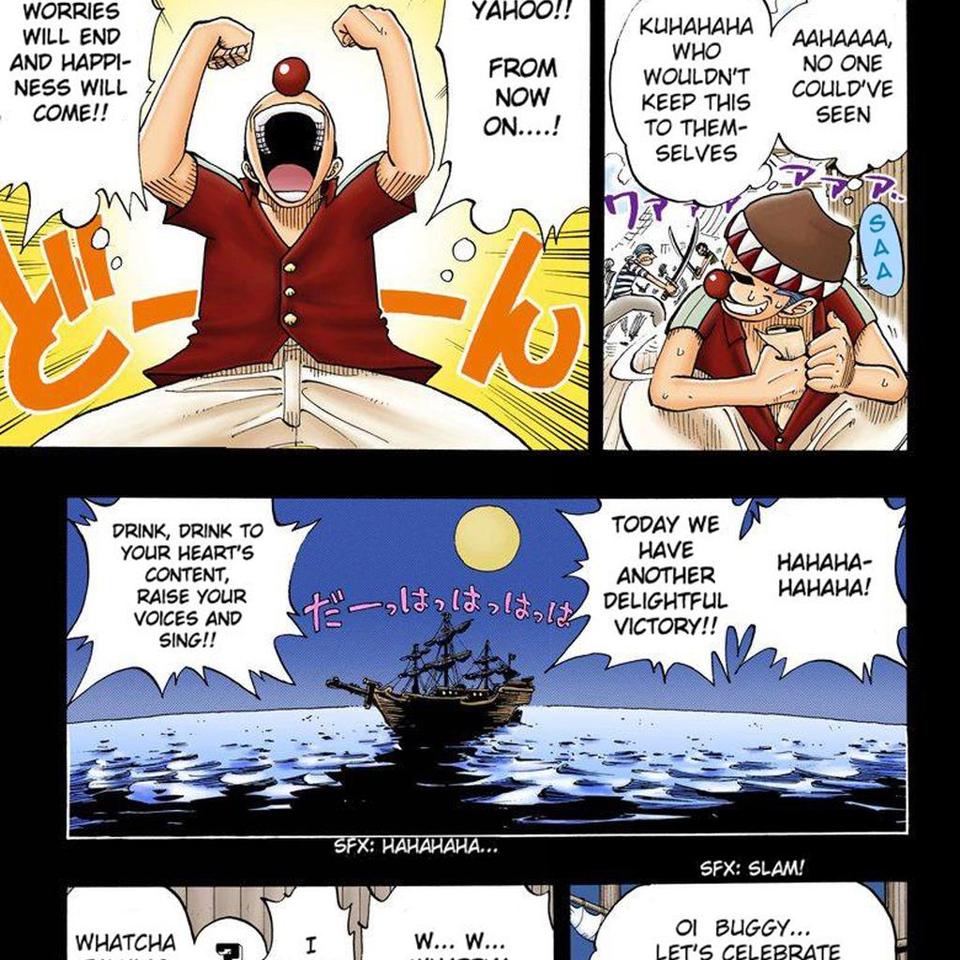

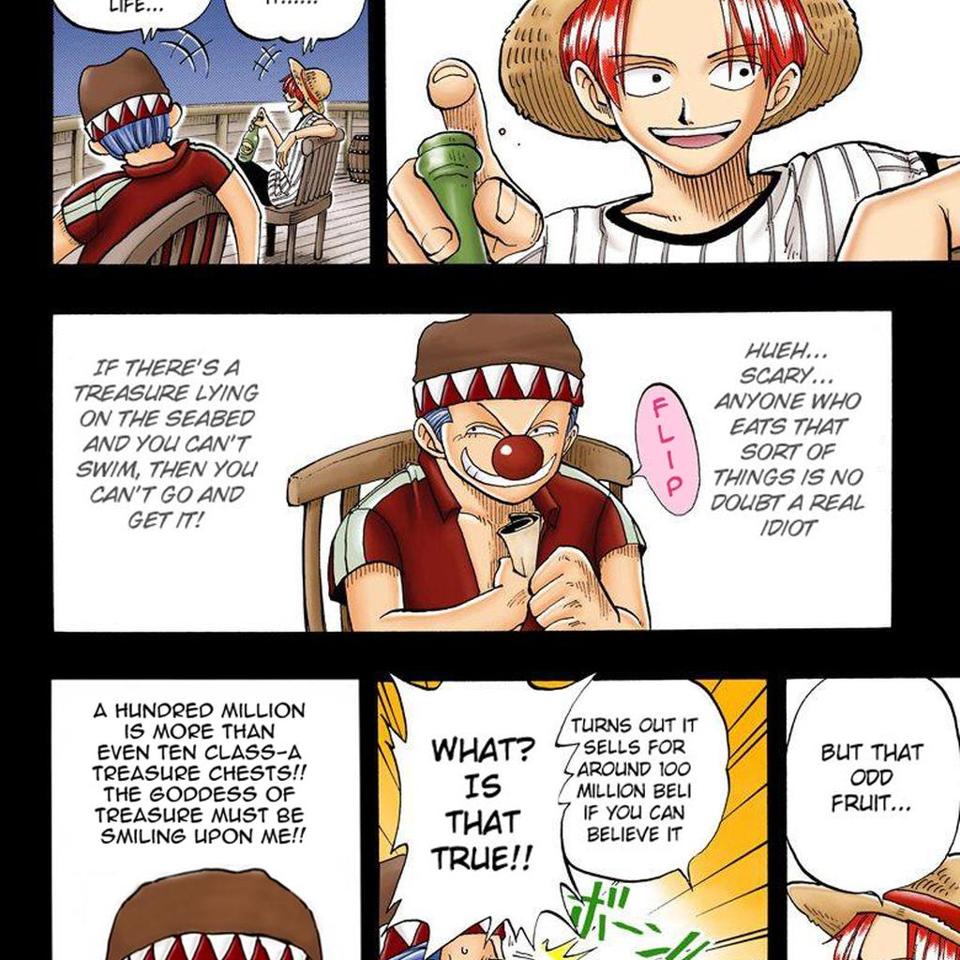

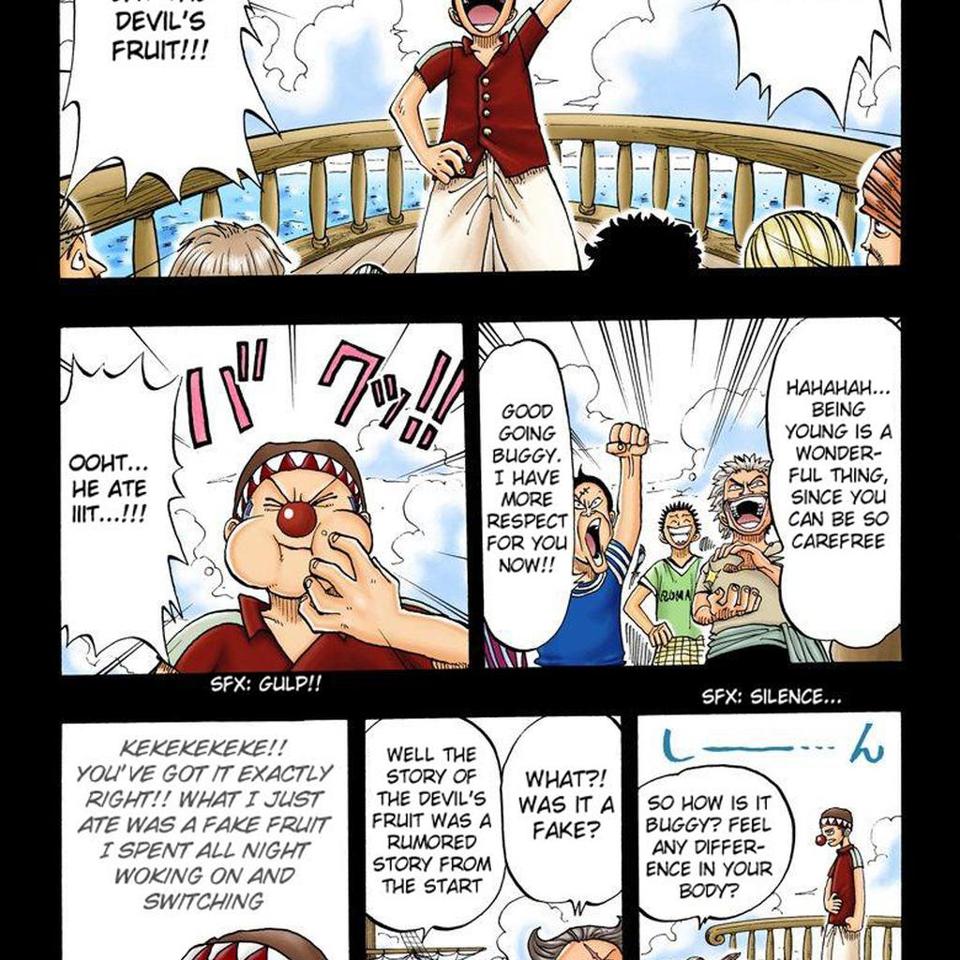

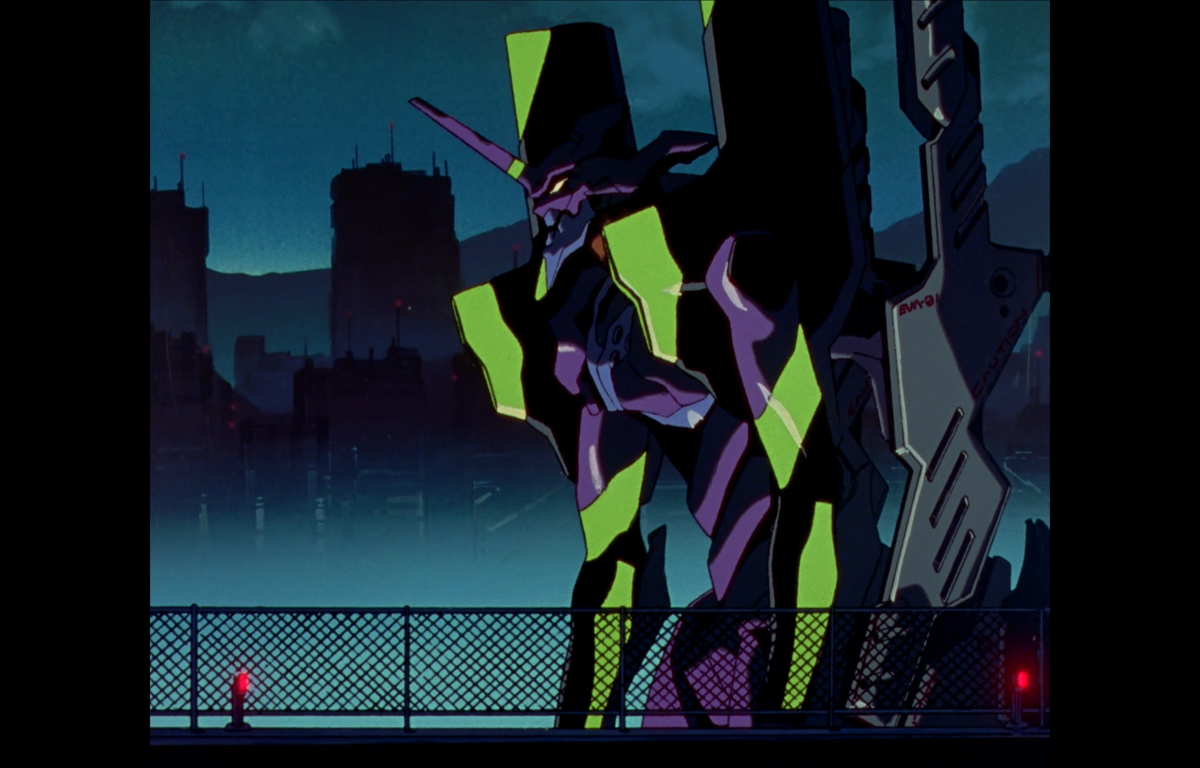

- assets/example_0/input.jpg +0 -0

- assets/example_0/ref1.jpg +0 -0

- assets/example_1/input.jpg +0 -0

- assets/example_1/ref1.jpg +0 -0

- assets/example_1/ref2.jpg +0 -0

- assets/example_1/ref3.jpg +0 -0

- assets/example_2/input.png +0 -0

- assets/example_2/ref1.png +0 -0

- assets/example_2/ref2.png +0 -0

- assets/example_2/ref3.png +0 -0

- assets/example_3/input.png +0 -0

- assets/example_3/ref1.png +0 -0

- assets/example_3/ref2.png +0 -0

- assets/example_3/ref3.png +0 -0

- assets/example_4/input.jpg +0 -0

- assets/example_4/ref1.jpg +0 -0

- assets/example_4/ref2.jpg +0 -0

- assets/example_4/ref3.jpg +0 -0

- assets/example_5/input.png +0 -0

- assets/example_5/ref1.png +0 -0

- assets/example_5/ref2.png +0 -0

- assets/example_5/ref3.png +0 -0

- assets/mask.png +0 -0

- diffusers/.github/ISSUE_TEMPLATE/bug-report.yml +110 -0

- diffusers/.github/ISSUE_TEMPLATE/config.yml +4 -0

- diffusers/.github/ISSUE_TEMPLATE/feature_request.md +20 -0

- diffusers/.github/ISSUE_TEMPLATE/feedback.md +12 -0

- diffusers/.github/ISSUE_TEMPLATE/new-model-addition.yml +31 -0

- diffusers/.github/ISSUE_TEMPLATE/translate.md +29 -0

BidirectionalTranslation/LICENSE

ADDED

@@ -0,0 +1,26 @@
+Manga Filling Style Conversion with Screentone Variational Autoencoder
+
+Copyright (c) 2020 The Chinese University of Hong Kong
+
+Copyright and License Information: The source code, the binary executable, and all data files (hereafter, Software) are copyrighted by The Chinese University of Hong Kong and Tien-Tsin Wong (hereafter, Author), Copyright (c) 2021 The Chinese University of Hong Kong. All Rights Reserved.
+
+The Author grants to you ("Licensee") a non-exclusive license to use the Software for academic, research and commercial purposes, without fee. For commercial use, Licensee should submit a WRITTEN NOTICE to the Author. The notice should clearly identify the software package/system/hardware (name, version, and/or model number) using the Software. Licensee may distribute the Software to third parties provided that the copyright notice and this statement appears on all copies. Licensee agrees that the copyright notice and this statement will appear on all copies of the Software, or portions thereof. The Author retains exclusive ownership of the Software.
+
+Licensee may make derivatives of the Software, provided that such derivatives can only be used for the purposes specified in the license grant above.
+
+THE AUTHOR MAKES NO REPRESENTATIONS OR WARRANTIES ABOUT THE SUITABILITY OF THE SOFTWARE, EITHER EXPRESS OR IMPLIED, INCLUDING BUT NOT LIMITED TO THE IMPLIED WARRANTIES OF MERCHANTABILITY, FITNESS FOR A PARTICULAR PURPOSE, OR NON-INFRINGEMENT. THE AUTHOR SHALL NOT BE LIABLE FOR ANY DAMAGES SUFFERED BY LICENSEE AS A RESULT OF USING, MODIFYING OR DISTRIBUTING THE SOFTWARE OR ITS DERIVATIVES.
+
+By using the source code, Licensee agrees to cite the following papers in
+Licensee's publication/work:
+
+Minshan Xie, Chengze Li, Xueting Liu and Tien-Tsin Wong
+"Manga Filling Style Conversion with Screentone Variational Autoencoder"
+ACM Transactions on Graphics (SIGGRAPH Asia 2020 issue), Vol. 39, No. 6, December 2020, pp. 226:1-226:15.
+
+
+By using or copying the Software, Licensee agrees to abide by the intellectual property laws, and all other applicable laws of the U.S., and the terms of this license.
+
+Author shall have the right to terminate this license immediately by written notice upon Licensee's breach of, or non-compliance with, any of its terms.
+Licensee may be held legally responsible for any infringement that is caused or encouraged by Licensee's failure to abide by the terms of this license.
+
+For more information or comments, send mail to: ttwong@acm.org

BidirectionalTranslation/README.md

ADDED

@@ -0,0 +1,72 @@
+# Bidirectional Translation
+
+PyTorch implementation for multimodal comic-to-manga translation.
+
+**Note**: The current software works well with PyTorch 1.6.0+.
+
+## Prerequisites
+- Linux
+- Python 3
+- CPU or NVIDIA GPU + CUDA CuDNN
+
+## Getting Started
+### Installation
+- Clone this repo:
+```bash
+git clone https://github.com/msxie/ScreenStyle.git
+cd ScreenStyle/MangaScreening
+```
+- Install PyTorch and dependencies from http://pytorch.org
+- Install python libraries [tensorboardX](https://github.com/lanpa/tensorboardX)
+- Install other libraries
+For pip users:
+```
+pip install -r requirements.txt
+```
+
+## Data preparation
+The training requires paired data (including manga image, western image and their line drawings).
+The line drawing can be extracted using [MangaLineExtraction](https://github.com/ljsabc/MangaLineExtraction).
+
+```
+${DATASET}
+|-- color2manga
+|   |-- val
+|   |   |-- ${FOLDER}
+|   |   |   |-- imgs
+|   |   |   |   |-- 0001.png
+|   |   |   |   |-- ...
+|   |   |   |-- line
+|   |   |   |   |-- 0001.png
+|   |   |   |   |-- ...
+```
+
+### Use a Pre-trained Model
+- Download the pre-trained [ScreenVAE](https://drive.google.com/file/d/1OBxWHjijMwi9gfTOfDiFiHRZA_CXNSWr/view?usp=sharing) model and place under `checkpoints/ScreenVAE/` folder.
+
+- Download the pre-trained [color2manga](https://drive.google.com/file/d/18-N1W0t3igWLJWFyplNZ5Fa2YHWASCZY/view?usp=sharing) model and place under `checkpoints/color2manga/` folder.
+- Generate results with the model
+```bash
+bash ./scripts/test_western2manga.sh
+```
+
+## Copyright and License
+You are granted the [LICENSE](LICENSE) for both academic and commercial use.
+
+## Citation
+If you find the code helpful in your research or work, please cite the following paper.
+```
+@article{xie-2020-manga,
+    author = {Minshan Xie and Chengze Li and Xueting Liu and Tien-Tsin Wong},
+    title = {Manga Filling Style Conversion with Screentone Variational Autoencoder},
+    journal = {ACM Transactions on Graphics (SIGGRAPH Asia 2020 issue)},
+    month = {December},
+    year = {2020},
+    volume = {39},
+    number = {6},
+    pages = {226:1--226:15}
+}
+```
+
+### Acknowledgements
+This code borrows heavily from the [pytorch-CycleGAN-and-pix2pix](https://github.com/junyanz/pytorch-CycleGAN-and-pix2pix) repository.
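The directory tree in the README's data preparation section maps onto concrete paths as sketched below. This is an illustration only, not code from the repository: the helper name `expected_paths` is hypothetical, and it assumes a line drawing shares the file name of its image, as the tree suggests.

```python
from pathlib import PurePosixPath


def expected_paths(dataset_root, folder, name, phase="val"):
    """Return the (image, line drawing) paths implied by the README layout.

    Hypothetical helper: assumes ${DATASET}/color2manga/<phase>/<folder>/{imgs,line}
    and that both files share the same file name.
    """
    base = PurePosixPath(dataset_root) / "color2manga" / phase / folder
    return base / "imgs" / name, base / "line" / name


img_path, line_path = expected_paths("/data", "demo", "0001.png")
print(img_path)   # /data/color2manga/val/demo/imgs/0001.png
print(line_path)  # /data/color2manga/val/demo/line/0001.png
```

Pure path arithmetic keeps the sketch independent of the file system; checking that the files exist would be a separate step.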

BidirectionalTranslation/data/__init__.py

ADDED

@@ -0,0 +1,100 @@
+"""This package includes all the modules related to data loading and preprocessing
+
+ To add a custom dataset class called 'dummy', you need to add a file called 'dummy_dataset.py' and define a subclass 'DummyDataset' inherited from BaseDataset.
+ You need to implement four functions:
+    -- <__init__>: initialize the class, first call BaseDataset.__init__(self, opt).
+    -- <__len__>: return the size of dataset.
+    -- <__getitem__>: get a data point from data loader.
+    -- <modify_commandline_options>: (optionally) add dataset-specific options and set default options.
+
+Now you can use the dataset class by specifying flag '--dataset_mode dummy'.
+See our template dataset class 'template_dataset.py' for more details.
+"""
+import importlib
+import torch.utils.data
+from data.base_dataset import BaseDataset
+
+
+def find_dataset_using_name(dataset_name):
+    """Import the module "data/[dataset_name]_dataset.py".
+
+    In the file, the class called DatasetNameDataset() will
+    be instantiated. It has to be a subclass of BaseDataset,
+    and it is case-insensitive.
+    """
+    dataset_filename = "data." + dataset_name + "_dataset"
+    datasetlib = importlib.import_module(dataset_filename)
+
+    dataset = None
+    target_dataset_name = dataset_name.replace('_', '') + 'dataset'
+    for name, cls in datasetlib.__dict__.items():
+        if name.lower() == target_dataset_name.lower() \
+           and issubclass(cls, BaseDataset):
+            dataset = cls
+
+    if dataset is None:
+        raise NotImplementedError("In %s.py, there should be a subclass of BaseDataset with class name that matches %s in lowercase." % (dataset_filename, target_dataset_name))
+
+    return dataset
+
+
+def get_option_setter(dataset_name):
+    """Return the static method <modify_commandline_options> of the dataset class."""
+    dataset_class = find_dataset_using_name(dataset_name)
+    return dataset_class.modify_commandline_options
+
+
+def create_dataset(opt):
+    """Create a dataset given the option.
+
+    This function wraps the class CustomDatasetDataLoader.
+        This is the main interface between this package and 'train.py'/'test.py'
+
+    Example:
+        >>> from data import create_dataset
+        >>> dataset = create_dataset(opt)
+    """
+    data_loader = CustomDatasetDataLoader(opt)
+    dataset = data_loader.load_data()
+    return dataset
+
+
+class CustomDatasetDataLoader():
+    """Wrapper class of Dataset class that performs multi-threaded data loading"""
+
+    def __init__(self, opt):
+        """Initialize this class
+
+        Step 1: create a dataset instance given the name [dataset_mode]
+        Step 2: create a multi-threaded data loader.
+        """
+        self.opt = opt
+        dataset_class = find_dataset_using_name(opt.dataset_mode)
+        self.dataset = dataset_class(opt)
+        print("dataset [%s] was created" % type(self.dataset).__name__)
+
+        train_sampler = None
+        if len(opt.gpu_ids) > 1:
+            train_sampler = torch.utils.data.distributed.DistributedSampler(self.dataset)
+
+        self.dataloader = torch.utils.data.DataLoader(
+            self.dataset,
+            batch_size=opt.batch_size,
+            #shuffle=not opt.serial_batches,
+            num_workers=int(opt.num_threads),
+            pin_memory=True, sampler=train_sampler
+        )
+
+    def load_data(self):
+        return self
+
+    def __len__(self):
+        """Return the number of data in the dataset"""
+        return min(len(self.dataset), self.opt.max_dataset_size)
+
+    def __iter__(self):
+        """Return a batch of data"""
+        for i, data in enumerate(self.dataloader):
+            if i * self.opt.batch_size >= self.opt.max_dataset_size:
+                break
+            yield data
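The name-based lookup in `find_dataset_using_name` can be tried without the repository. The sketch below is a rough stand-in rather than the project's code: `BaseDataset`, `SingleCoDataset` and `NotADataset` are placeholder classes, `find_class` is a hypothetical helper, and a plain dict stands in for the imported module's `__dict__`. It also adds an `isinstance(obj, type)` guard, which the original does not have.

```python
class BaseDataset:
    """Stand-in for data.base_dataset.BaseDataset (assumption for this sketch)."""


class SingleCoDataset(BaseDataset):
    pass


class NotADataset:
    pass


def find_class(namespace, dataset_name, base=BaseDataset):
    """Mimic the case-insensitive class lookup over a module namespace dict."""
    target = dataset_name.replace('_', '') + 'dataset'
    found = None
    for name, obj in namespace.items():
        # isinstance(obj, type) keeps issubclass from raising on non-class entries.
        if name.lower() == target.lower() and isinstance(obj, type) \
           and issubclass(obj, base):
            found = obj
    if found is None:
        raise NotImplementedError("no subclass of BaseDataset matches %s" % target)
    return found


namespace = {"SingleCoDataset": SingleCoDataset, "NotADataset": NotADataset}
print(find_class(namespace, "singleCo").__name__)  # SingleCoDataset
```

Because the comparison lowercases both sides and drops underscores, `--dataset_mode singleCo` and a hypothetical `single_co` would resolve to the same class name, although the module file name still has to match the flag exactly.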

BidirectionalTranslation/data/aligned_dataset.py

ADDED

@@ -0,0 +1,60 @@
+import os.path
+from data.base_dataset import BaseDataset, get_params, get_transform
+from data.image_folder import make_dataset
+from PIL import Image
+
+
+class AlignedDataset(BaseDataset):
+    """A dataset class for paired image dataset.
+
+    It assumes that the directory '/path/to/data/train' contains image pairs in the form of {A,B}.
+    During test time, you need to prepare a directory '/path/to/data/test'.
+    """
+
+    def __init__(self, opt):
+        """Initialize this dataset class.
+
+        Parameters:
+            opt (Option class) -- stores all the experiment flags; needs to be a subclass of BaseOptions
+        """
+        BaseDataset.__init__(self, opt)
+        self.dir_AB = os.path.join(opt.dataroot, opt.phase)  # get the image directory
+        self.AB_paths = sorted(make_dataset(self.dir_AB, opt.max_dataset_size))  # get image paths
+        assert(self.opt.load_size >= self.opt.crop_size)   # crop_size should be smaller than the size of loaded image
+        self.input_nc = self.opt.output_nc if self.opt.direction == 'BtoA' else self.opt.input_nc
+        self.output_nc = self.opt.input_nc if self.opt.direction == 'BtoA' else self.opt.output_nc
+
+    def __getitem__(self, index):
+        """Return a data point and its metadata information.
+
+        Parameters:
+            index - - a random integer for data indexing
+
+        Returns a dictionary that contains A, B, A_paths and B_paths
+            A (tensor) - - an image in the input domain
+            B (tensor) - - its corresponding image in the target domain
+            A_paths (str) - - image paths
+            B_paths (str) - - image paths (same as A_paths)
+        """
+        # read an image given a random integer index
+        AB_path = self.AB_paths[index%len(self.AB_paths)]
+        AB = Image.open(AB_path).convert('RGB')
+        # split AB image into A and B
+        w, h = AB.size
+        w2 = int(w / 2)
+        A = AB.crop((0, 0, w2, h))
+        B = AB.crop((w2, 0, w, h))
+
+        # apply the same transform to both A and B
+        transform_params = get_params(self.opt, A.size)
+        A_transform = get_transform(self.opt, transform_params, grayscale=(self.input_nc == 1))
+        B_transform = get_transform(self.opt, transform_params, grayscale=(self.output_nc == 1))
+
+        A = A_transform(A)
+        B = B_transform(B)
+
+        return {'A': A, 'B': B, 'A_paths': AB_path, 'B_paths': AB_path}
+
+    def __len__(self):
+        """Return the total number of images in the dataset."""
+        return len(self.AB_paths)*100
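`AlignedDataset.__getitem__` splits one side-by-side image into its A and B halves. The arithmetic can be sketched without Pillow; `split_boxes` below is a hypothetical helper that only computes the two crop boxes in the `(left, upper, right, lower)` order that `Image.crop` expects.

```python
def split_boxes(width, height):
    """Return the crop boxes for the left (A) and right (B) halves of an AB image."""
    w2 = int(width / 2)  # same rounding as the dataset code
    box_a = (0, 0, w2, height)
    box_b = (w2, 0, width, height)
    return box_a, box_b


print(split_boxes(512, 256))  # ((0, 0, 256, 256), (256, 0, 512, 256))
print(split_boxes(513, 256))  # ((0, 0, 256, 256), (256, 0, 513, 256))
```

With an odd width the B half comes out one pixel wider than A, so paired images are best stored with an even total width.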

BidirectionalTranslation/data/base_dataset.py

ADDED

@@ -0,0 +1,164 @@
+"""This module implements an abstract base class (ABC) 'BaseDataset' for datasets.
+
+It also includes common transformation functions (e.g., get_transform, __scale_width), which can be later used in subclasses.
+"""
+import random
+import numpy as np
+import torch.utils.data as data
+from PIL import Image, ImageOps
+import torchvision.transforms as transforms
+from abc import ABC, abstractmethod
+
+
+class BaseDataset(data.Dataset, ABC):
+    """This class is an abstract base class (ABC) for datasets.
+
+    To create a subclass, you need to implement the following four functions:
+    -- <__init__>: initialize the class, first call BaseDataset.__init__(self, opt).
+    -- <__len__>: return the size of dataset.
+    -- <__getitem__>: get a data point.
+    -- <modify_commandline_options>: (optionally) add dataset-specific options and set default options.
+    """
+
+    def __init__(self, opt):
+        """Initialize the class; save the options in the class
+
+        Parameters:
+            opt (Option class)-- stores all the experiment flags; needs to be a subclass of BaseOptions
+        """
+        self.opt = opt
+        self.root = opt.dataroot
+
+    @staticmethod
+    def modify_commandline_options(parser, is_train):
+        """Add new dataset-specific options, and rewrite default values for existing options.
+
+        Parameters:
+            parser -- original option parser
+            is_train (bool) -- whether training phase or test phase. You can use this flag to add training-specific or test-specific options.
+
+        Returns:
+            the modified parser.
+        """
+        return parser
+
+    @abstractmethod
+    def __len__(self):
+        """Return the total number of images in the dataset."""
+        return 0
+
+    @abstractmethod
+    def __getitem__(self, index):
+        """Return a data point and its metadata information.
+
+        Parameters:
+            index - - a random integer for data indexing
+
+        Returns:
+            a dictionary of data with their names. It usually contains the data itself and its metadata information.
+        """
+        pass
+
+
+def get_params(opt, size):
+    w, h = size
+    new_h = h
+    new_w = w
+    crop = 0
+    if opt.preprocess == 'resize_and_crop':
+        new_h = new_w = opt.load_size
+    elif opt.preprocess == 'scale_width_and_crop':
+        new_w = opt.load_size
+        new_h = opt.load_size * h // w
+
+    # x = random.randint(0, np.maximum(0, new_w - opt.crop_size))
+    # y = random.randint(0, np.maximum(0, new_h - opt.crop_size))
+
+    x = random.randint(crop, np.maximum(0, new_w - opt.crop_size-crop))
+    y = random.randint(crop, np.maximum(0, new_h - opt.crop_size-crop))
+
+    flip = random.random() > 0.5
+
+    return {'crop_pos': (x, y), 'flip': flip}
+
+
+def get_transform(opt, params=None, grayscale=False, method=Image.BICUBIC, convert=True):
+    transform_list = []
+    if grayscale:
+        transform_list.append(transforms.Grayscale(1))
+    if 'resize' in opt.preprocess:
+        osize = [opt.load_size, opt.load_size]
+        transform_list.append(transforms.Resize(osize, method))
+    elif 'scale_width' in opt.preprocess:
+        transform_list.append(transforms.Lambda(lambda img: __scale_width(img, opt.load_size, method)))
+
+    if 'crop' in opt.preprocess:
+        if params is None:
+            # transform_list.append(transforms.RandomCrop(opt.crop_size))
+            transform_list.append(transforms.CenterCrop(opt.crop_size))
+        else:
+            transform_list.append(transforms.Lambda(lambda img: __crop(img, params['crop_pos'], opt.crop_size)))
+
+    if opt.preprocess == 'none':
+        transform_list.append(transforms.Lambda(lambda img: __make_power_2(img, base=2**8, method=method)))
+
+    if not opt.no_flip:
+        if params is None:
+            transform_list.append(transforms.RandomHorizontalFlip())
+        elif params['flip']:
+            transform_list.append(transforms.Lambda(lambda img: __flip(img, params['flip'])))
+
+    # transform_list += [transforms.ToTensor()]
+    if convert:
+        transform_list += [transforms.ToTensor()]
+        if grayscale:
+            transform_list += [transforms.Normalize((0.5,), (0.5,))]
+        else:
+            transform_list += [transforms.Normalize((0.5, 0.5, 0.5), (0.5, 0.5, 0.5))]
+    return transforms.Compose(transform_list)
+
+
+def __make_power_2(img, base, method=Image.BICUBIC):
+    ow, oh = img.size
+    h = int((oh+base-1) // base * base)
+    w = int((ow+base-1) // base * base)
+    if (h == oh) and (w == ow):
+        return img
+
+    __print_size_warning(ow, oh, w, h)
+    return ImageOps.expand(img, (0, 0, w-ow, h-oh), fill=255)
+
+
+def __scale_width(img, target_width, method=Image.BICUBIC):
+    ow, oh = img.size
+    if (ow == target_width):
+        return img
+    w = target_width
+    h = int(target_width * oh / ow)
+    return img.resize((w, h), method)
+
+
+def __crop(img, pos, size):
+    ow, oh = img.size
+    x1, y1 = pos
+    tw = th = size
+    if (ow > tw or oh > th):
+        return img.crop((x1, y1, x1 + tw, y1 + th))
+    return img
+
+
+def __flip(img, flip):
+    if flip:
+        return img.transpose(Image.FLIP_LEFT_RIGHT)
+    return img
+
+
+def __print_size_warning(ow, oh, w, h):
+    """Print warning information about image size (only print once)"""
+    if not hasattr(__print_size_warning, 'has_printed'):
+        print("The image size needs to be a multiple of 4. "
+              "The loaded image size was (%d, %d), so it was adjusted to "
+              "(%d, %d). This adjustment will be done to all images "
+              "whose sizes are not multiples of 4" % (ow, oh, w, h))
+        __print_size_warning.has_printed = True
+
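When `opt.preprocess == 'none'`, `__make_power_2` pads the image on the right and bottom so that each side becomes a multiple of `base` (called with `2**8`, i.e. 256, even though the warning text mentions multiples of 4). The rounding can be sketched in isolation; `round_up` and `padding_for` are hypothetical names used only here.

```python
def round_up(value, base):
    """Smallest multiple of base that is >= value (integer arithmetic)."""
    return (value + base - 1) // base * base


def padding_for(size, base=2 ** 8):
    """Return the (left, top, right, bottom) border that pads size up to multiples of base."""
    ow, oh = size
    w, h = round_up(ow, base), round_up(oh, base)
    return (0, 0, w - ow, h - oh)


print(round_up(500, 256))        # 512
print(padding_for((500, 256)))   # (0, 0, 12, 0)
```

The 4-tuple matches the border argument that the original code passes to `ImageOps.expand`, with the fill value 255 producing white padding.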

BidirectionalTranslation/data/image_folder.py

ADDED

@@ -0,0 +1,66 @@
+"""A modified image folder class
+
+We modify the official PyTorch image folder (https://github.com/pytorch/vision/blob/master/torchvision/datasets/folder.py)
+so that this class can load images from both current directory and its subdirectories.
+"""
+
+import torch.utils.data as data
+
+from PIL import Image
+import os
+import os.path
+
+IMG_EXTENSIONS = [
+    '.jpg', '.JPG', '.jpeg', '.JPEG', '.npz', 'npy',
+    '.png', '.PNG', '.ppm', '.PPM', '.bmp', '.BMP',
+]
+
+
+def is_image_file(filename):
+    return any(filename.endswith(extension) for extension in IMG_EXTENSIONS)
+
+
+def make_dataset(dir, max_dataset_size=float("inf")):
+    images = []
+    assert os.path.isdir(dir), '%s is not a valid directory' % dir
+
+    for root, _, fnames in sorted(os.walk(dir)):
+        for fname in fnames:
+            if is_image_file(fname):
+                path = os.path.join(root, fname)
+                images.append(path)
+    return images[:min(max_dataset_size, len(images))]
+
+
+def default_loader(path):
+    return Image.open(path).convert('RGB')
+
+
+class ImageFolder(data.Dataset):
+
+    def __init__(self, root, transform=None, return_paths=False,
+                 loader=default_loader):
+        imgs = make_dataset(root)
+        if len(imgs) == 0:
+            raise(RuntimeError("Found 0 images in: " + root + "\n"
+                               "Supported image extensions are: " +
+                               ",".join(IMG_EXTENSIONS)))
+
+        self.root = root
+        self.imgs = imgs
+        self.transform = transform
+        self.return_paths = return_paths
+        self.loader = loader
+
+    def __getitem__(self, index):
+        path = self.imgs[index]
+        img = self.loader(path)
+        if self.transform is not None:
+            img = self.transform(img)
+        if self.return_paths:
+            return img, path
+        else:
+            return img
+
+    def __len__(self):
+        return len(self.imgs)
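`is_image_file` matches file names against a list that spells out upper- and lower-case variants and includes `'npy'` without a leading dot. One possible alternative, shown here only as a sketch and not as the repository's behaviour, normalises the extension first; `is_image_file_ci` and `IMG_EXTENSIONS_CI` are hypothetical names.

```python
import os

# Lower-case extensions only; the check below lowercases the file's extension.
IMG_EXTENSIONS_CI = {'.jpg', '.jpeg', '.npz', '.npy', '.png', '.ppm', '.bmp'}


def is_image_file_ci(filename):
    """Case-insensitive variant of the extension check (assumption, not the original)."""
    ext = os.path.splitext(filename)[1].lower()
    return ext in IMG_EXTENSIONS_CI


print(is_image_file_ci("page_0001.PNG"))  # True
print(is_image_file_ci("notes.txt"))      # False
```

This variant differs from the original in two ways: it accepts mixed-case extensions such as `.Jpg`, and it rejects names that merely end in the letters `npy` without a dot.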

BidirectionalTranslation/data/singleCo_dataset.py

ADDED

|

@@ -0,0 +1,85 @@

|

| 1 |

+

import os.path

|

| 2 |

+

from data.base_dataset import BaseDataset, get_params, get_transform

|

| 3 |

+

from data.image_folder import make_dataset

|

| 4 |

+

from PIL import Image, ImageEnhance

|

| 5 |

+

import random

|

| 6 |

+

import numpy as np

|

| 7 |

+

import torch

|

| 8 |

+

import torch.nn.functional as F

|

| 9 |

+

import cv2

|

| 10 |

+

|

| 11 |

+

|

| 12 |

+

class SingleCoDataset(BaseDataset):

|

| 13 |

+

@staticmethod

|

| 14 |

+

def modify_commandline_options(parser, is_train):

|

| 15 |

+

return parser

|

| 16 |

+

|

| 17 |

+

def __init__(self, opt):

|

| 18 |

+

self.opt = opt

|

| 19 |

+

self.root = opt.dataroot

|

| 20 |

+

self.dir_A = os.path.join(opt.dataroot, opt.phase, opt.folder, 'imgs')

|

| 21 |

+

|

| 22 |

+

self.A_paths = make_dataset(self.dir_A)

|

| 23 |

+

|

| 24 |

+

self.A_paths = sorted(self.A_paths)

|

| 25 |

+

|

| 26 |

+

self.A_size = len(self.A_paths)

|

| 27 |

+

# self.transform = get_transform(opt)

|

| 28 |

+

|

| 29 |

+

def __getitem__(self, index):

|

| 30 |

+

A_path = self.A_paths[index]

|

| 31 |

+

|

| 32 |

+

A_img = Image.open(A_path).convert('RGB')

|

| 33 |

+

# enhancer = ImageEnhance.Brightness(A_img)

|

| 34 |

+

# A_img = enhancer.enhance(1.5)

|

| 35 |

+

if os.path.exists(A_path.replace('imgs','line')[:-4]+'.jpg'):

|

| 36 |

+

# L_img = Image.open(A_path.replace('imgs','line')[:-4]+'.png')

|

| 37 |

+

L_img = cv2.imread(A_path.replace('imgs','line')[:-4]+'.jpg')

|

| 38 |

+

kernel = np.ones((3,3), np.uint8)

|

| 39 |

+

L_img = cv2.erode(L_img, kernel, iterations=1)

|

| 40 |

+

L_img = Image.fromarray(L_img)

|

| 41 |

+

else:

|

| 42 |

+

L_img = A_img

|

| 43 |

+

if A_img.size!=L_img.size:

|

| 44 |

+

# L_img = L_img.resize(A_img.size, Image.ANTIALIAS)

|

| 45 |

+

A_img = A_img.resize(L_img.size, Image.LANCZOS)  # Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter

|

| 46 |

+

if A_img.size[1]>2500:

|

| 47 |

+

A_img = A_img.resize((A_img.size[0]//2, A_img.size[1]//2), Image.LANCZOS)  # Image.ANTIALIAS was removed in Pillow 10

|

| 48 |

+

|

| 49 |

+

ow, oh = A_img.size

|

| 50 |

+

transform_params = get_params(self.opt, A_img.size)

|

| 51 |

+

A_transform = get_transform(self.opt, transform_params, grayscale=False)

|

| 52 |

+

L_transform = get_transform(self.opt, transform_params, grayscale=True)

|

| 53 |

+

A = A_transform(A_img)

|

| 54 |

+

L = L_transform(L_img)

|

| 55 |

+

|

| 56 |

+

# base = 2**9

|

| 57 |

+

# h = int((oh+base-1) // base * base)

|

| 58 |

+

# w = int((ow+base-1) // base * base)

|

| 59 |

+

# A = F.pad(A.unsqueeze(0), (0,w-ow, 0,h-oh), 'replicate').squeeze(0)

|

| 60 |

+

# L = F.pad(L.unsqueeze(0), (0,w-ow, 0,h-oh), 'replicate').squeeze(0)

|

| 61 |

+

|

| 62 |

+

tmp = A[0, ...] * 0.299 + A[1, ...] * 0.587 + A[2, ...] * 0.114

|

| 63 |

+

Ai = tmp.unsqueeze(0)

|

| 64 |

+

|

| 65 |

+

return {'A': A, 'Ai': Ai, 'L': L,

|

| 66 |

+

'B': torch.zeros(1), 'Bs': torch.zeros(1), 'Bi': torch.zeros(1), 'Bl': torch.zeros(1),

|

| 67 |

+

'A_paths': A_path, 'h': oh, 'w': ow}

|

| 68 |

+

|

| 69 |

+

def __len__(self):

|

| 70 |

+

return self.A_size

|

| 71 |

+

|

| 72 |

+

def name(self):

|

| 73 |

+

return 'SingleCoDataset'

|

| 74 |

+

|

| 75 |

+

|

| 76 |

+

def M_transform(feat, opt, params=None):

|

| 77 |

+

outfeat = feat.copy()

|

| 78 |

+

oh,ow = feat.shape[1:]

|

| 79 |

+

x1, y1 = params['crop_pos']

|

| 80 |

+

tw = th = opt.crop_size

|

| 81 |

+

if (ow > tw or oh > th):

|

| 82 |

+

outfeat = outfeat[:,y1:y1+th,x1:x1+tw]

|

| 83 |

+

if params['flip']:

|

| 84 |

+

outfeat = np.flip(outfeat, 2)#outfeat[:,:,::-1]

|

| 85 |

+

return torch.from_numpy(outfeat.copy()).float()*2-1.0

|
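
`SingleCoDataset.__getitem__` derives the line-art path with `A_path.replace('imgs','line')[:-4]+'.jpg'`. That expression rewrites every occurrence of `imgs` in the path and assumes a three-character extension, so a file such as `p1.jpeg` would map to `p1..jpg`. The sketch below shows one possible, stricter derivation using `os.path`. The helper name `line_art_path` is hypothetical and is not part of the repository.

```python
import os


def line_art_path(img_path, line_ext=".jpg"):
    """Map <parent>/imgs/<name>.<ext> to <parent>/line/<name><line_ext>.

    Hypothetical helper: only the final directory component is swapped,
    and the extension is split off with os.path.splitext.
    """
    directory, filename = os.path.split(img_path)
    parent, leaf = os.path.split(directory)
    if leaf != "imgs":
        raise ValueError(f"expected the image to live in an 'imgs' folder: {img_path}")
    stem, _ = os.path.splitext(filename)
    return os.path.join(parent, "line", stem + line_ext)


example = line_art_path(os.path.join("data", "test", "imgs", "page_001.jpeg"))
```

The dataset could then check `os.path.exists(line_art_path(A_path))` before loading the line image, and fall back to the colour image as the current code does.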

BidirectionalTranslation/data/singleSr_dataset.py

ADDED

|

@@ -0,0 +1,73 @@

|

| 1 |

+

import os.path

|

| 2 |

+

from data.base_dataset import BaseDataset, get_params, get_transform

|

| 3 |

+

from data.image_folder import make_dataset

|

| 4 |

+

from PIL import Image

|

| 5 |

+

import random

|

| 6 |

+

import numpy as np

|

| 7 |

+

import torch

|

| 8 |

+

import torch.nn.functional as F

|

| 9 |

+

|

| 10 |

+

|

| 11 |

+

class SingleSrDataset(BaseDataset):

|

| 12 |

+

@staticmethod

|

| 13 |

+

def modify_commandline_options(parser, is_train):

|

| 14 |

+

return parser

|

| 15 |

+

|

| 16 |

+

def __init__(self, opt):

|

| 17 |

+

self.opt = opt

|

| 18 |

+

self.root = opt.dataroot

|

| 19 |

+

self.dir_B = os.path.join(opt.dataroot, opt.phase, opt.folder, 'imgs')

|

| 20 |

+

# self.dir_B = os.path.join(opt.dataroot, opt.phase, 'test/imgs', opt.folder)

|

| 21 |

+

|

| 22 |

+

self.B_paths = make_dataset(self.dir_B)

|

| 23 |

+

|

| 24 |

+

self.B_paths = sorted(self.B_paths)

|

| 25 |

+

|

| 26 |

+

self.B_size = len(self.B_paths)

|

| 27 |

+

# self.transform = get_transform(opt)

|

| 28 |

+

# print(self.B_size)

|

| 29 |

+

|

| 30 |

+

def __getitem__(self, index):

|

| 31 |

+

B_path = self.B_paths[index]

|

| 32 |

+

|

| 33 |

+

B_img = Image.open(B_path).convert('RGB')

|

| 34 |

+

if os.path.exists(B_path.replace('imgs','line').replace('.jpg','.png')):

|

| 35 |

+

L_img = Image.open(B_path.replace('imgs','line').replace('.jpg','.png'))#.convert('RGB')

|

| 36 |

+

else:

|

| 37 |

+

L_img = Image.open(B_path.replace('imgs','line').replace('.png','.jpg'))#.convert('RGB')

|

| 38 |

+

B_img = B_img.resize(L_img.size, Image.LANCZOS)  # Image.ANTIALIAS was removed in Pillow 10; LANCZOS is the same filter

|

| 39 |

+

|

| 40 |

+

ow, oh = B_img.size

|

| 41 |

+

transform_params = get_params(self.opt, B_img.size)

|

| 42 |

+

B_transform = get_transform(self.opt, transform_params, grayscale=True)

|

| 43 |

+

B = B_transform(B_img)

|

| 44 |

+

L = B_transform(L_img)

|

| 45 |

+

|

| 46 |

+

# base = 2**8

|

| 47 |

+

# h = int((oh+base-1) // base * base)

|

| 48 |

+

# w = int((ow+base-1) // base * base)

|

| 49 |

+

# B = F.pad(B.unsqueeze(0), (0,w-ow, 0,h-oh), 'replicate').squeeze(0)

|

| 50 |

+

# L = F.pad(L.unsqueeze(0), (0,w-ow, 0,h-oh), 'replicate').squeeze(0)

|

| 51 |

+

|

| 52 |

+

return {'B': B, 'Bs': B, 'Bi': B, 'Bl': L,

|

| 53 |

+

'A': torch.zeros(1), 'Ai': torch.zeros(1), 'L': torch.zeros(1),

|

| 54 |

+

'A_paths': B_path, 'h': oh, 'w': ow}

|

| 55 |

+

|

| 56 |

+

def __len__(self):

|

| 57 |

+

return self.B_size

|

| 58 |

+

|

| 59 |

+

def name(self):

|

| 60 |

+

return 'SingleSrDataset'

|

| 61 |

+

|

| 62 |

+

|

| 63 |

+

def M_transform(feat, opt, params=None):

|

| 64 |

+

outfeat = feat.copy()

|

| 65 |

+

if params is not None:

|

| 66 |

+

oh,ow = feat.shape[1:]

|

| 67 |

+

x1, y1 = params['crop_pos']

|

| 68 |

+

tw = th = opt.crop_size

|

| 69 |

+

if (ow > tw or oh > th):

|

| 70 |

+

outfeat = outfeat[:,y1:y1+th,x1:x1+tw]

|

| 71 |

+

if params['flip']:

|

| 72 |

+

outfeat = np.flip(outfeat, 2).copy()#outfeat[:,:,::-1]

|

| 73 |

+

return torch.from_numpy(outfeat).float()*2-1.0

|
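
Both dataset classes carry a commented-out block that rounds the image height and width up to a multiple of `base` (`2**9` in `singleCo_dataset.py`, `2**8` here) and pads the tensors to that size. The arithmetic can be sketched in plain Python as follows. The function names are illustrative, and the returned tuple follows the `(left, right, top, bottom)` order that `torch.nn.functional.pad` uses for the last two dimensions.

```python
def round_up(value, base):
    """Smallest multiple of base that is >= value (value and base are positive ints)."""
    return (value + base - 1) // base * base


def pad_amounts(ow, oh, base=2 ** 8):
    """Padding needed on the right and bottom to reach a multiple of base.

    Mirrors the commented-out expression (0, w-ow, 0, h-oh) in the dataset code.
    """
    w = round_up(ow, base)
    h = round_up(oh, base)
    return (0, w - ow, 0, h - oh)


# A 600x800 image padded to multiples of 256 becomes 768x1024.
padding = pad_amounts(600, 800)
```

Because `h` and `w` (the original sizes) are returned in the sample dictionary, a caller can crop the network output back to the unpadded size afterwards.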

BidirectionalTranslation/models/__init__.py

ADDED

|

@@ -0,0 +1,61 @@

|

| 1 |

+

"""This package contains modules related to objective functions, optimizations, and network architectures.

|

| 2 |

+

To add a custom model class called 'dummy', you need to add a file called 'dummy_model.py' and define a subclass DummyModel inherited from BaseModel.

|

| 3 |

+

You need to implement the following five functions:

|

| 4 |

+

-- <__init__>: initialize the class; first call BaseModel.__init__(self, opt).

|

| 5 |

+

-- <set_input>: unpack data from dataset and apply preprocessing.

|

| 6 |

+

-- <forward>: produce intermediate results.

|

| 7 |

+

-- <optimize_parameters>: calculate loss, gradients, and update network weights.

|

| 8 |

+

-- <modify_commandline_options>: (optionally) add model-specific options and set default options.

|

| 9 |

+

In the function <__init__>, you need to define four lists:

|

| 10 |

+

-- self.loss_names (str list): specify the training losses that you want to plot and save.

|

| 11 |

+

-- self.model_names (str list): define networks used in our training.

|

| 12 |

+

-- self.visual_names (str list): specify the images that you want to display and save.

|

| 13 |

+

-- self.optimizers (optimizer list): define and initialize optimizers. You can define one optimizer for each network. If two networks are updated at the same time, you can use itertools.chain to group them. See cycle_gan_model.py for an example.

|

| 14 |

+

Now you can use the model class by specifying flag '--model dummy'.

|

| 15 |

+

See our template model class 'template_model.py' for an example.

|

| 16 |

+

"""

|

| 17 |

+

|

| 18 |

+

import importlib

|

| 19 |

+

from models.base_model import BaseModel

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

def find_model_using_name(model_name):

|

| 23 |

+

"""Import the module "models/[model_name]_model.py".

|

| 24 |

+

In the file, the class called DatasetNameModel() will

|

| 25 |

+

be instantiated. It has to be a subclass of BaseModel,

|

| 26 |

+

and it is case-insensitive.

|

| 27 |

+

"""

|

| 28 |

+

model_filename = "models." + model_name + "_model"

|

| 29 |

+

modellib = importlib.import_module(model_filename)

|

| 30 |

+

model = None

|

| 31 |

+

target_model_name = model_name.replace('_', '') + 'model'

|

| 32 |

+

for name, cls in modellib.__dict__.items():

|

| 33 |

+

if name.lower() == target_model_name.lower() \

|

| 34 |

+

and issubclass(cls, BaseModel):

|

| 35 |

+

model = cls

|

| 36 |

+

|

| 37 |

+

if model is None:

|

| 38 |

+

print("In %s.py, there should be a subclass of BaseModel with class name that matches %s in lowercase." % (model_filename, target_model_name))

|

| 39 |

+

exit(1)  # non-zero status: the requested model class was not found

|

| 40 |

+

|

| 41 |

+

return model

|

| 42 |

+

|

| 43 |

+

|

| 44 |

+

def get_option_setter(model_name):

|

| 45 |

+

"""Return the static method <modify_commandline_options> of the model class."""

|

| 46 |

+

model_class = find_model_using_name(model_name)

|

| 47 |

+

return model_class.modify_commandline_options

|

| 48 |

+

|

| 49 |

+

|

| 50 |

+

def create_model(opt, ckpt_root):

|

| 51 |

+

"""Create a model given the option.

|

| 52 |

+

This function instantiates the model class selected by opt.model.

|

| 53 |

+

This is the main interface between this package and 'train.py'/'test.py'

|

| 54 |

+

Example:

|

| 55 |

+

>>> from models import create_model

|

| 56 |

+

>>> model = create_model(opt, ckpt_root)

|

| 57 |

+

"""

|

| 58 |

+

model = find_model_using_name(opt.model)

|

| 59 |

+

instance = model(opt, ckpt_root = ckpt_root)

|

| 60 |

+

print("model [%s] was created" % type(instance).__name__)

|

| 61 |

+

return instance

|
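
`find_model_using_name` matches a class by stripping underscores from the model name, appending `model`, and comparing case-insensitively against the names in the imported module. The sketch below reproduces that matching rule against a plain dictionary so it can run without the repository. `BaseModel` here is a stand-in, `CycleGANSTFTModel` is a hypothetical class name, and raising `LookupError` instead of printing and exiting is a design choice of this sketch, not the file's behaviour.

```python
class BaseModel:
    """Stand-in for models.base_model.BaseModel (assumption for this sketch)."""


class CycleGANSTFTModel(BaseModel):
    """Hypothetical model class; the real class name is not shown in this view."""


class NotAModel:
    """A class that does not inherit from BaseModel and must be ignored."""


def find_model_in_namespace(model_name, namespace):
    """Return the BaseModel subclass whose name matches model_name + 'model'."""
    target = model_name.replace("_", "") + "model"
    for name, obj in namespace.items():
        # isinstance guard: issubclass raises TypeError on non-class objects.
        if (isinstance(obj, type)
                and name.lower() == target.lower()
                and issubclass(obj, BaseModel)):
            return obj
    raise LookupError(f"no BaseModel subclass named like '{target}' was found")


namespace = {
    "CycleGANSTFTModel": CycleGANSTFTModel,
    "NotAModel": NotAModel,
    "importlib": object(),
}
found_model = find_model_in_namespace("cycle_ganstft", namespace)
```

Raising an exception lets the caller decide how to report the failure and avoids ending the process with a success status.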

BidirectionalTranslation/models/base_model.py

ADDED

|

@@ -0,0 +1,277 @@

|