Overall improvements with added support for various face restoration models and upscale models.

01. Inference uses the auto_split_upscale mechanism, defined in dataops.py; it originates from the fork of the ESRGAN project maintained by joeyballentine and BlueAmulet.

02. The face model now supports RestoreFormer++, authored by wzhouxiff.

03. The face model now supports CodeFormer, authored by sczhou.

04. The face model now supports GPEN, authored by yangxy.

05. Added support for parsing older RRDB models from the ESRGAN project.

06. Added support for parsing DAT models from the DAT project by author zhengchen1999.

07. Added support for parsing HAT models from the HAT project by author XPixelGroup.

08. Added support for parsing RealPLKSR models from the PLKSR project by authors dslisleedh and neosr-project.

09. In the interface, you can select the type of face detection: "retinaface_resnet50", "YOLOv5l", or "YOLOv5n". The default is "retinaface_resnet50".

10. In the interface, you can set whether to include the model name in the output image file names for easier result verification.

11. The Gradio interface has been updated from the `Interface` syntax to the `Blocks` syntax.

12. Upgraded the project's Gradio version to 5.9.0.
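
Item 01 can be illustrated with a rough sketch of the general auto-split idea. This is not the code in dataops.py: it is a simplified stand-in that tries to upscale the whole image and, when the upscaler raises a `RuntimeError` (as an out-of-memory failure would), splits the image into four quadrants and retries each one recursively. The names `split_upscale` and `nearest_upscale` and the `max_pixels` limit are hypothetical, and tile overlap for hiding seams is omitted.

```python
def nearest_upscale(img, scale, max_pixels):
    """Toy upscaler on a 2D list; refuses inputs larger than max_pixels."""
    h, w = len(img), len(img[0])
    if h * w > max_pixels:
        # Stand-in for a CUDA out-of-memory error (assumption for the demo).
        raise RuntimeError("simulated out of memory")
    return [[img[y // scale][x // scale] for x in range(w * scale)]
            for y in range(h * scale)]


def split_upscale(img, scale, upscale_fn):
    """Upscale img; on RuntimeError split it into quadrants and recurse."""
    try:
        return upscale_fn(img, scale)
    except RuntimeError:
        h, w = len(img), len(img[0])
        if h < 2 or w < 2:
            raise  # nothing left to split
        mh, mw = h // 2, w // 2
        quads = [
            [row[:mw] for row in img[:mh]],  # top-left
            [row[mw:] for row in img[:mh]],  # top-right
            [row[:mw] for row in img[mh:]],  # bottom-left
            [row[mw:] for row in img[mh:]],  # bottom-right
        ]
        tl, tr, bl, br = (split_upscale(q, scale, upscale_fn) for q in quads)
        # Stitch the upscaled quadrants back together row by row.
        top = [left + right for left, right in zip(tl, tr)]
        bottom = [left + right for left, right in zip(bl, br)]
        return top + bottom
```

With a limit of four pixels per call, a 4x4 input is processed as four 2x2 tiles and stitched into the same 8x8 result that a single unrestricted call would give.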

- .gitignore +2 -1

- README.md +3 -3

- app.py +327 -119

- images/a01.jpg +0 -0

- images/a02.jpg +0 -0

- images/a03.jpg +0 -0

- images/a04.jpg +0 -0

- images/b01.jpg +0 -0

- images/b02.jpg +0 -0

- images/b03.jpg +0 -0

- images/b04.jpg +0 -0

- images/b05.jpg +0 -0

- images/b06.jpg +0 -0

- images/b07.jpg +0 -0

- images/b08.jpg +0 -0

- images/b09.jpg +0 -0

- images/b10.jpg +0 -0

- images/b11.jpg +0 -0

- images/bus.jpg +0 -0

- images/c01.jpg +0 -0

- images/c02.jpg +0 -0

- images/c03.jpg +0 -0

- images/c04.jpg +0 -0

- images/c05.jpg +0 -0

- images/c06.jpg +0 -0

- images/c07.jpg +0 -0

- images/c08.jpg +0 -0

- images/c09.jpg +0 -0

- images/c10.jpg +0 -0

- images/zidane.jpg +0 -0

- requirements.txt +1 -1

|

@@ -140,4 +140,5 @@ dmypy.json

|

|

| 140 |

.vs

|

| 141 |

output

|

| 142 |

weights

|

| 143 |

-

|

|

|

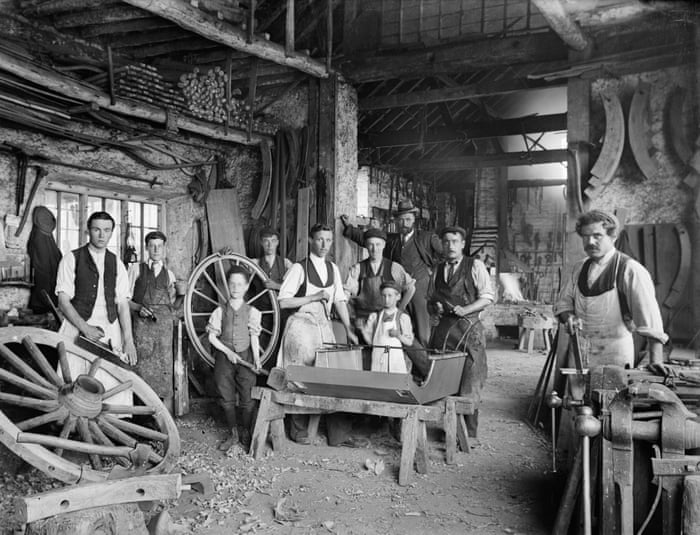

|

|

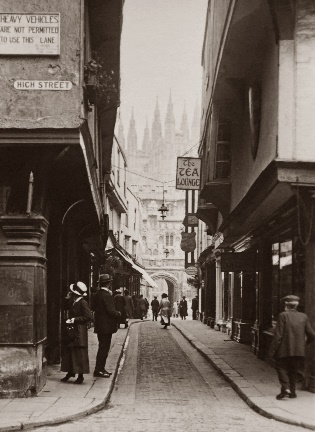

|

| 140 |

.vs

|

| 141 |

output

|

| 142 |

weights

|

| 143 |

+

.jpg

|

| 144 |

+

.png

|

|

@@ -1,12 +1,12 @@

|

|

| 1 |

---

|

| 2 |

-

title: Image Face Upscale Restoration-GFPGAN

|

| 3 |

emoji: 📈

|

| 4 |

colorFrom: blue

|

| 5 |

colorTo: gray

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 5.

|

| 8 |

app_file: app.py

|

| 9 |

-

pinned:

|

| 10 |

license: apache-2.0

|

| 11 |

---

|

| 12 |

|

|

|

|

| 1 |

---

|

| 2 |

+

title: Image Face Upscale Restoration-GFPGAN-RestoreFormerPlusPlus-CodeFormer

|

| 3 |

emoji: 📈

|

| 4 |

colorFrom: blue

|

| 5 |

colorTo: gray

|

| 6 |

sdk: gradio

|

| 7 |

+

sdk_version: 5.9.0

|

| 8 |

app_file: app.py

|

| 9 |

+

pinned: true

|

| 10 |

license: apache-2.0

|

| 11 |

---

|

| 12 |

|

|

@@ -1,7 +1,6 @@

|

|

| 1 |

import os

|

| 2 |

import gc

|

| 3 |

import cv2

|

| 4 |

-

import requests

|

| 5 |

import numpy as np

|

| 6 |

import gradio as gr

|

| 7 |

import torch

|

|

@@ -11,59 +10,208 @@ from realesrgan.utils import RealESRGANer

|

|

| 11 |

|

| 12 |

|

| 13 |

# Define URLs and their corresponding local storage paths

|

| 14 |

-

|

| 15 |

-

"GFPGANv1.4.pth": "https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.4.pth",

|

| 16 |

-

|

| 17 |

-

|

| 18 |

# legacy model

|

| 19 |

-

"GFPGANv1.3.pth": "https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth",

|

| 20 |

-

|

| 21 |

-

"

|

| 22 |

}

|

| 23 |

-

|

| 24 |

# SRVGGNet

|

| 25 |

-

"realesr-general-x4v3.pth": "https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.5.0/realesr-general-x4v3.pth",

|

| 26 |

-

|

| 27 |

# RRDBNet

|

| 28 |

-

"RealESRGAN_x4plus_anime_6B.pth": "https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth",

|

| 29 |

-

|

| 30 |

-

|

| 31 |

-

|

| 32 |

# ESRGAN(oldRRDB)

|

| 33 |

-

"4x-AnimeSharp.pth": "https://huggingface.co/utnah/esrgan/resolve/main/4x-AnimeSharp.pth?download=true",

|

| 34 |

-

|

| 35 |

# DATNet

|

| 36 |

-

"4xNomos8kDAT.pth": "https://github.com/Phhofm/models/releases/download/4xNomos8kDAT/4xNomos8kDAT.pth",

|

| 37 |

-

|

| 38 |

-

|

| 39 |

# HAT

|

| 40 |

-

"4xNomos8kSCHAT-L.pth": "https://github.com/Phhofm/models/releases/download/4xNomos8kSCHAT/4xNomos8kSCHAT-L.pth",

|

| 41 |

-

|

| 42 |

-

|

| 43 |

# RealPLKSR_dysample

|

| 44 |

-

"4xHFA2k_ludvae_realplksr_dysample.pth": "https://github.com/Phhofm/models/releases/download/4xHFA2k_ludvae_realplksr_dysample/4xHFA2k_ludvae_realplksr_dysample.pth",

|

| 45 |

-

|

| 46 |

-

|

| 47 |

-

|

| 48 |

# RealPLKSR

|

| 49 |

-

"2x-AnimeSharpV2_RPLKSR_Sharp.pth": "https://github.com/Kim2091/Kim2091-Models/releases/download/2x-AnimeSharpV2_Set/2x-AnimeSharpV2_RPLKSR_Sharp.pth",

|

| 50 |

-

|

| 51 |

-

|

| 52 |

-

|

| 53 |

-

|

| 54 |

-

|

| 55 |

}

|

| 56 |

|

| 57 |

-

|

| 58 |

-

|

| 59 |

-

|

| 60 |

-

|

| 61 |

-

|

| 62 |

-

"a3.jpg":

|

| 63 |

-

"https://i.guim.co.uk/img/media/06f614065ed82ca0e917b149a32493c791619854/0_0_3648_2789/master/3648.jpg?width=700&quality=85&auto=format&fit=max&s=05764b507c18a38590090d987c8b6202",

|

| 64 |

-

"a4.jpg":

|

| 65 |

-

"https://i.pinimg.com/736x/46/96/9e/46969eb94aec2437323464804d27706d--victorian-london-victorian-era.jpg",

|

| 66 |

-

}

|

| 67 |

|

| 68 |

def get_model_type(model_name):

|

| 69 |

# Define model type mappings based on key parts of the model names

|

|

@@ -82,19 +230,19 @@ def get_model_type(model_name):

|

|

| 82 |

model_type = "RealPLKSR_dysample"

|

| 83 |

elif "realplksr" in model_name.lower() or "rplksr" in model_name.lower():

|

| 84 |

model_type = "RealPLKSR"

|

| 85 |

return f"{model_type}, {model_name}"

|

| 86 |

|

| 87 |

-

|

| 88 |

-

|

| 89 |

-

def download_from_urls(urls, save_dir=None):

|

| 90 |

-

for file_name, url in urls.items():

|

| 91 |

-

download_from_url(url, file_name, save_dir)

|

| 92 |

|

| 93 |

|

| 94 |

class Upscale:

|

| 95 |

-

def inference(self, img, face_restoration,

|

| 96 |

print(img)

|

| 97 |

-

print(face_restoration,

|

| 98 |

try:

|

| 99 |

self.scale = scale

|

| 100 |

self.img_name = os.path.basename(str(img))

|

|
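
The name-based detection in `get_model_type` can be sketched as an ordered rule table, shown here as an illustration rather than the committed code. Only the `"realplksr"`/`"rplksr"` rule and the `f"{model_type}, {model_name}"` return format come from the diff above; the dysample, HAT, and DAT rules, the `"other"` default, and the name `guess_model_type` are assumptions made for this example.

```python
# Ordered (substrings, type) rules; the first matching rule wins.
MODEL_TYPE_RULES = [
    (("dysample", "rplksrd"), "RealPLKSR_dysample"),  # assumption
    (("realplksr", "rplksr"), "RealPLKSR"),           # rule shown in the diff
    (("hat",), "HAT"),                                # assumption
    (("dat",), "DAT"),                                # assumption
]


def guess_model_type(model_name, default="other"):
    """Return 'type, name' based on substrings of the lower-cased file name."""
    lowered = model_name.lower()
    for needles, model_type in MODEL_TYPE_RULES:
        if any(needle in lowered for needle in needles):
            return f"{model_type}, {model_name}"
    return f"{default}, {model_name}"
```

Rule order matters: the dysample rule has to be checked before the plain RealPLKSR rule, because dysample file names also contain `realplksr` or `rplksr`.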

@@ -107,55 +255,57 @@ class Upscale:

|

|

| 107 |

img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)

|

| 108 |

|

| 109 |

h, w = img.shape[0:2]

|

| 110 |

-

if h < 300:

|

| 111 |

-

img = cv2.resize(img, (w * 2, h * 2), interpolation=cv2.INTER_LANCZOS4)

|

| 112 |

|

| 113 |

if face_restoration:

|

| 114 |

-

download_from_url(

|

| 115 |

-

|

| 116 |

-

|

| 117 |

-

|

| 118 |

|

| 119 |

netscale = 4

|

| 120 |

loadnet = None

|

| 121 |

model = None

|

| 122 |

is_auto_split_upscale = True

|

| 123 |

half = True if torch.cuda.is_available() else False

|

| 124 |

-

if

|

| 125 |

from basicsr.archs.rrdbnet_arch import RRDBNet

|

| 126 |

from basicsr.archs.realplksr_arch import realplksr

|

| 127 |

-

# background enhancer with

|

| 128 |

-

if

|

| 129 |

-

netscale = 2 if "x2" in

|

| 130 |

-

num_block = 6 if "6B" in

|

| 131 |

model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=num_block, num_grow_ch=32, scale=netscale)

|

| 132 |

-

elif

|

| 133 |

from realesrgan.archs.srvgg_arch import SRVGGNetCompact

|

| 134 |

netscale = 4

|

| 135 |

-

num_conv = 16 if "animevideov3" in

|

| 136 |

model = SRVGGNetCompact(num_in_ch=3, num_out_ch=3, num_feat=64, num_conv=num_conv, upscale=netscale, act_type='prelu')

|

| 137 |

-

elif

|

| 138 |

netscale = 4

|

| 139 |

model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32, scale=netscale)

|

| 140 |

loadnet = {}

|

| 141 |

-

loadnet_origin = torch.load(os.path.join("weights", "

|

| 142 |

for key, value in loadnet_origin.items():

|

| 143 |

new_key = key.replace("model.0", "conv_first").replace("model.1.sub.23.", "conv_body.").replace("model.1.sub", "body") \

|

| 144 |

.replace(".0.weight", ".weight").replace(".0.bias", ".bias").replace(".RDB1.", ".rdb1.").replace(".RDB2.", ".rdb2.").replace(".RDB3.", ".rdb3.") \

|

| 145 |

.replace("model.3.", "conv_up1.").replace("model.6.", "conv_up2.").replace("model.8.", "conv_hr.").replace("model.10.", "conv_last.")

|

| 146 |

loadnet[new_key] = value

|

| 147 |

-

elif

|

| 148 |

from basicsr.archs.dat_arch import DAT

|

| 149 |

half = False

|

| 150 |

netscale = 4

|

| 151 |

-

expansion_factor = 2. if "dat2" in

|

| 152 |

model = DAT(img_size=64, in_chans=3, embed_dim=180, split_size=[8,32], depth=[6,6,6,6,6,6], num_heads=[6,6,6,6,6,6], expansion_factor=expansion_factor, upscale=netscale)

|

| 153 |

# # Speculate on the parameters.

|

| 154 |

-

# loadnet_origin = torch.load(os.path.join("weights", "

|

| 155 |

# inferred_params = self.infer_parameters_from_state_dict_for_dat(loadnet_origin, netscale)

|

| 156 |

# for param, value in inferred_params.items():

|

| 157 |

# print(f"{param}: {value}")

|

| 158 |

-

elif

|

| 159 |

half = False

|

| 160 |

netscale = 4

|

| 161 |

import torch.nn.functional as F

|

|
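
The old-RRDB branch in this hunk renames state-dict keys with a chain of `str.replace` calls. A table-driven sketch of the same renaming is given below for illustration. The substring pairs are copied from the diff in their original order; the helper names `remap_key` and `remap_state_dict` are hypothetical, and plain Python dictionaries stand in for a loaded checkpoint.

```python
# Ordered (old, new) substring pairs, in the same order as the replace chain.
OLD_TO_NEW = [
    ("model.0", "conv_first"),
    ("model.1.sub.23.", "conv_body."),
    ("model.1.sub", "body"),
    (".0.weight", ".weight"),
    (".0.bias", ".bias"),
    (".RDB1.", ".rdb1."),
    (".RDB2.", ".rdb2."),
    (".RDB3.", ".rdb3."),
    ("model.3.", "conv_up1."),
    ("model.6.", "conv_up2."),
    ("model.8.", "conv_hr."),
    ("model.10.", "conv_last."),
]


def remap_key(key):
    """Apply every substitution in order, like the chained replace calls."""
    for old, new in OLD_TO_NEW:
        key = key.replace(old, new)
    return key


def remap_state_dict(state_dict):
    """Return a new dict with old-style ESRGAN keys renamed."""
    return {remap_key(key): value for key, value in state_dict.items()}
```

The order is significant: `model.1.sub.23.` must be rewritten before the more general `model.1.sub`, otherwise the trunk convolution would be renamed as an ordinary body block.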

@@ -205,7 +355,7 @@ class Upscale:

|

|

| 205 |

|

| 206 |

# The parameters are derived from the XPixelGroup project files: HAT-L_SRx4_ImageNet-pretrain.yml and HAT-S_SRx4.yml.

|

| 207 |

# https://github.com/XPixelGroup/HAT/tree/main/options/test

|

| 208 |

-

if "hat-l" in

|

| 209 |

window_size = 16

|

| 210 |

compress_ratio = 3

|

| 211 |

squeeze_factor = 30

|

|

@@ -214,7 +364,7 @@ class Upscale:

|

|

| 214 |

num_heads = [6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6]

|

| 215 |

mlp_ratio = 2

|

| 216 |

upsampler = "pixelshuffle"

|

| 217 |

-

elif "hat-s" in

|

| 218 |

window_size = 16

|

| 219 |

compress_ratio = 24

|

| 220 |

squeeze_factor = 24

|

|

@@ -225,12 +375,12 @@ class Upscale:

|

|

| 225 |

upsampler = "pixelshuffle"

|

| 226 |

model = HATWithAutoPadding(img_size=64, patch_size=1, in_chans=3, embed_dim=embed_dim, depths=depths, num_heads=num_heads, window_size=window_size, compress_ratio=compress_ratio,

|

| 227 |

squeeze_factor=squeeze_factor, conv_scale=0.01, overlap_ratio=0.5, mlp_ratio=mlp_ratio, upsampler=upsampler, upscale=netscale,)

|

| 228 |

-

elif

|

| 229 |

netscale = 4

|

| 230 |

-

model = realplksr(upscaling_factor=netscale, dysample=True)

|

| 231 |

-

elif

|

| 232 |

-

half = False if "RealPLSKR" in

|

| 233 |

-

netscale = 2 if

|

| 234 |

model = realplksr(dim=64, n_blocks=28, kernel_size=17, split_ratio=0.25, upscaling_factor=netscale)

|

| 235 |

|

| 236 |

|

|

@@ -238,8 +388,8 @@ class Upscale:

|

|

| 238 |

if loadnet:

|

| 239 |

self.upsampler = RealESRGANer(scale=netscale, loadnet=loadnet, model=model, tile=0, tile_pad=10, pre_pad=0, half=half)

|

| 240 |

elif model:

|

| 241 |

-

self.upsampler = RealESRGANer(scale=netscale, model_path=os.path.join("weights", "

|

| 242 |

-

elif

|

| 243 |

self.upsampler = None

|

| 244 |

import PIL

|

| 245 |

from image_gen_aux import UpscaleWithModel

|

|

@@ -286,25 +436,42 @@ class Upscale:

|

|

| 286 |

return cv_image, None

|

| 287 |

|

| 288 |

device = "cuda" if torch.cuda.is_available() else "cpu"

|

| 289 |

-

upscaler = UpscaleWithModel.from_pretrained(os.path.join("weights", "

|

| 290 |

upscaler.__class__ = UpscaleWithModel_Gfpgan

|

| 291 |

self.upsampler = upscaler

|

| 292 |

self.face_enhancer = None

|

| 293 |

|

|

|

|

| 294 |

if face_restoration:

|

|

|

|

| 295 |

from gfpgan.utils import GFPGANer

|

| 296 |

if face_restoration and face_restoration.startswith("GFPGANv1."):

|

| 297 |

-

|

|

|

|

| 298 |

elif face_restoration and face_restoration.startswith("RestoreFormer"):

|

| 299 |

arch = "RestoreFormer++" if face_restoration.startswith("RestoreFormer++") else "RestoreFormer"

|

| 300 |

-

self.face_enhancer = GFPGANer(model_path=os.path.join("weights", "face", face_restoration), upscale=self.scale, arch=arch, channel_multiplier=2, bg_upsampler=self.upsampler)

|

| 301 |

elif face_restoration == 'CodeFormer.pth':

|

| 302 |

-

|

| 303 |

-

|

| 304 |

-

|

| 305 |

|

| 306 |

files = []

|

| 307 |

-

|

|

|

|

|

|

|

| 308 |

try:

|

| 309 |

bg_upsample_img = None

|

| 310 |

if self.upsampler and self.upsampler.enhance:

|

|

@@ -317,22 +484,19 @@ class Upscale:

|

|

| 317 |

if cropped_faces and restored_aligned:

|

| 318 |

for idx, (cropped_face, restored_face) in enumerate(zip(cropped_faces, restored_aligned)):

|

| 319 |

# save cropped face

|

| 320 |

-

save_crop_path = f"output/{self.basename}{idx:02d}_cropped_faces.png"

|

| 321 |

self.imwriteUTF8(save_crop_path, cropped_face)

|

| 322 |

# save restored face

|

| 323 |

-

save_restore_path = f"output/{self.basename}{idx:02d}_restored_faces.png"

|

| 324 |

self.imwriteUTF8(save_restore_path, restored_face)

|

| 325 |

# save comparison image

|

| 326 |

-

save_cmp_path = f"output/{self.basename}{idx:02d}_cmp.png"

|

| 327 |

cmp_img = np.concatenate((cropped_face, restored_face), axis=1)

|

| 328 |

self.imwriteUTF8(save_cmp_path, cmp_img)

|

| 329 |

|

| 330 |

files.append(save_crop_path)

|

| 331 |

files.append(save_restore_path)

|

| 332 |

files.append(save_cmp_path)

|

| 333 |

-

outputs.append(cv2.cvtColor(cropped_face, cv2.COLOR_BGR2RGB))

|

| 334 |

-

outputs.append(cv2.cvtColor(restored_face, cv2.COLOR_BGR2RGB))

|

| 335 |

-

outputs.append(cv2.cvtColor(cmp_img, cv2.COLOR_BGR2RGB))

|

| 336 |

|

| 337 |

restored_img = bg_upsample_img

|

| 338 |

except RuntimeError as error:

|

|
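
The removed save-path lines above relate to item 10 of the commit message (optionally including the model name in output file names). One possible way to build such names is sketched below. The helper `build_output_path`, its parameters, and the underscore-separated layout are assumptions for illustration; the new path format used by the commit is not visible in this part of the diff.

```python
import os


def build_output_path(basename, suffix, extension, model_names=(), out_dir="output"):
    """Join basename, optional model names, and a suffix into an output path."""
    parts = [basename]
    # Strip weight-file extensions such as .pth or .ckpt from the model names.
    parts.extend(os.path.splitext(name)[0] for name in model_names if name)
    if suffix:
        parts.append(suffix)
    return f"{out_dir}/{'_'.join(parts)}{extension}"
```

Calling it with an empty `model_names` sequence produces names without a model tag, so the option can be toggled from the interface without changing the call site.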

@@ -348,13 +512,12 @@ class Upscale:

|

|

| 348 |

|

| 349 |

if not self.extension:

|

| 350 |

self.extension = ".png" if self.img_mode == "RGBA" else ".jpg" # RGBA images should be saved in png format

|

| 351 |

-

save_path = f"output/{self.basename}{self.extension}"

|

| 352 |

self.imwriteUTF8(save_path, restored_img)

|

| 353 |

|

| 354 |

restored_img = cv2.cvtColor(restored_img, cv2.COLOR_BGR2RGB)

|

| 355 |

files.append(save_path)

|

| 356 |

-

|

| 357 |

-

return outputs, files

|

| 358 |

except Exception as error:

|

| 359 |

print(traceback.format_exc())

|

| 360 |

print("global exception", error)

|

|

@@ -451,42 +614,87 @@ def main():

|

|

| 451 |

# Ensure the target directory exists

|

| 452 |

os.makedirs('output', exist_ok=True)

|

| 453 |

|

| 454 |

-

|

| 455 |

-

|

| 456 |

-

|

| 457 |

-

|

| 458 |

-

|

| 459 |

-

|

|

|

|

|

|

|

| 460 |

To use it, simply just upload the concerned image.<br>

|

| 461 |

"""

|

| 462 |

article = r"""

|

| 463 |

[](https://github.com/TencentARC/GFPGAN/releases)

|

| 464 |

[](https://github.com/TencentARC/GFPGAN)

|

| 465 |

[](https://arxiv.org/abs/2101.04061)

|

| 466 |

-

<center><img src='https://visitor-badge.glitch.me/badge?page_id=dj_face_restoration_GFPGAN' alt='visitor badge'></center>

|

| 467 |

"""

|

| 468 |

|

| 469 |

upscale = Upscale()

|

| 470 |

|

| 471 |

-

|

| 472 |

-

|

| 473 |

-

|

| 474 |

-

gr.

|

| 475 |

-

|

| 476 |

-

|

| 477 |

-

|

| 478 |

-

|

| 479 |

-

|

| 480 |

-

|

| 481 |

-

|

| 482 |

-

|

| 483 |

-

|

| 484 |

-

|

| 485 |

-

|

| 486 |

-

|

| 487 |

-

|

| 488 |

|

| 489 |

-

demo.queue(default_concurrency_limit=

|

| 490 |

demo.launch(inbrowser=True)

|

| 491 |

|

| 492 |

|

|

|

|

| 1 |

import os

|

| 2 |

import gc

|

| 3 |

import cv2

|

|

|

|

| 4 |

import numpy as np

|

| 5 |

import gradio as gr

|

| 6 |

import torch

|

|

|

|

| 10 |

|

| 11 |

|

| 12 |

# Define URLs and their corresponding local storage paths

|

| 13 |

+

face_models = {

|

| 14 |

+

"GFPGANv1.4.pth" : ["https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.4.pth",

|

| 15 |

+

"https://github.com/TencentARC/GFPGAN/",

|

| 16 |

+

"""GFPGAN: Towards Real-World Blind Face Restoration and Upscalling of the image with a Generative Facial Prior.

|

| 17 |

+

GFPGAN aims at developing a Practical Algorithm for Real-world Face Restoration.

|

| 18 |

+

It leverages rich and diverse priors encapsulated in a pretrained face GAN (e.g., StyleGAN2) for blind face restoration."""],

|

| 19 |

+

|

| 20 |

+

"RestoreFormer++.ckpt": ["https://github.com/wzhouxiff/RestoreFormerPlusPlus/releases/download/v1.0.0/RestoreFormer++.ckpt",

|

| 21 |

+

"https://github.com/wzhouxiff/RestoreFormerPlusPlus",

|

| 22 |

+

"""RestoreFormer++: Towards Real-World Blind Face Restoration from Undegraded Key-Value Pairs.

|

| 23 |

+

RestoreFormer++ is an extension of RestoreFormer. It proposes to restore a degraded face image with both fidelity and \

|

| 24 |

+

realness by using the powerful fully-spacial attention mechanisms to model the abundant contextual information in the face and \

|

| 25 |

+

its interplay with reconstruction-oriented high-quality priors."""],

|

| 26 |

+

|

| 27 |

+

"CodeFormer.pth" : ["https://github.com/sczhou/CodeFormer/releases/download/v0.1.0/codeformer.pth",

|

| 28 |

+

"https://github.com/sczhou/CodeFormer",

|

| 29 |

+

"""CodeFormer: Towards Robust Blind Face Restoration with Codebook Lookup Transformer (NeurIPS 2022).

|

| 30 |

+

CodeFormer is a Transformer-based model designed to tackle the challenging problem of blind face restoration, where inputs are often severely degraded.

|

| 31 |

+

By framing face restoration as a code prediction task, this approach ensures both improved mapping from degraded inputs to outputs and the generation of visually rich, high-quality faces.

|

| 32 |

+

"""],

|

| 33 |

+

|

| 34 |

+

"GPEN-BFR-512.pth" : ["https://huggingface.co/akhaliq/GPEN-BFR-512/resolve/main/GPEN-BFR-512.pth",

|

| 35 |

+

"https://github.com/yangxy/GPEN",

|

| 36 |

+

"""GPEN: GAN Prior Embedded Network for Blind Face Restoration in the Wild.

|

| 37 |

+

GPEN addresses blind face restoration (BFR) by embedding a GAN into a U-shaped DNN, combining GAN’s ability to generate high-quality images with DNN’s feature extraction.

|

| 38 |

+

This design reconstructs global structure, fine details, and backgrounds from degraded inputs.

|

| 39 |

+

Simple yet effective, GPEN outperforms state-of-the-art methods, delivering realistic results even for severely degraded images."""],

|

| 40 |

+

|

| 41 |

+

"GPEN-BFR-1024.pt" : ["https://www.modelscope.cn/models/iic/cv_gpen_image-portrait-enhancement-hires/resolve/master/pytorch_model.pt",

|

| 42 |

+

"https://www.modelscope.cn/models/iic/cv_gpen_image-portrait-enhancement-hires/files",

|

| 43 |

+

"""The same as GPEN but for 1024 resolution."""],

|

| 44 |

+

|

| 45 |

+

"GPEN-BFR-2048.pt" : ["https://www.modelscope.cn/models/iic/cv_gpen_image-portrait-enhancement-hires/resolve/master/pytorch_model-2048.pt",

|

| 46 |

+

"https://www.modelscope.cn/models/iic/cv_gpen_image-portrait-enhancement-hires/files",

|

| 47 |

+

"""The same as GPEN but for 2048 resolution."""],

|

| 48 |

+

|

| 49 |

# legacy model

|

| 50 |

+

"GFPGANv1.3.pth" : ["https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.3.pth",

|

| 51 |

+

"https://github.com/TencentARC/GFPGAN/", "The same as GFPGAN but legacy model"],

|

| 52 |

+

"GFPGANv1.2.pth" : ["https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.2.pth",

|

| 53 |

+

"https://github.com/TencentARC/GFPGAN/", "The same as GFPGAN but legacy model"],

|

| 54 |

+

"RestoreFormer.ckpt": ["https://github.com/wzhouxiff/RestoreFormerPlusPlus/releases/download/v1.0.0/RestoreFormer.ckpt",

|

| 55 |

+

"https://github.com/wzhouxiff/RestoreFormerPlusPlus", "The same as RestoreFormer++ but legacy model"],

|

| 56 |

}

|
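
Each `face_models` entry above maps a weight file name to a three-item list: download URL, project page, and description. As an illustration of reading that structure, the sketch below unpacks an entry into a named tuple. `ModelInfo`, `lookup`, and `face_models_excerpt` are hypothetical names, the descriptions are shortened, and the `weights/face/` location follows the `os.path.join("weights", "face", face_restoration)` call seen earlier in the diff.

```python
from typing import NamedTuple


class ModelInfo(NamedTuple):
    download_url: str
    project_url: str
    description: str


# Two entries in the same shape as the diff, with shortened descriptions.
face_models_excerpt = {
    "GFPGANv1.4.pth": [
        "https://github.com/TencentARC/GFPGAN/releases/download/v1.3.0/GFPGANv1.4.pth",
        "https://github.com/TencentARC/GFPGAN/",
        "GFPGAN: blind face restoration with a generative facial prior.",
    ],
    "CodeFormer.pth": [
        "https://github.com/sczhou/CodeFormer/releases/download/v0.1.0/codeformer.pth",
        "https://github.com/sczhou/CodeFormer",
        "CodeFormer: blind face restoration with a codebook lookup transformer.",
    ],
}


def lookup(models, name):
    """Return (ModelInfo, local weight path) for a registered model name."""
    info = ModelInfo(*models[name])
    return info, f"weights/face/{name}"
```

Named fields avoid positional indexing such as `face_models[name][0]` at call sites, which is easy to get wrong once entries carry three values.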

| 57 |

+

upscale_models = {

|

| 58 |

# SRVGGNet

|

| 59 |

+

"realesr-general-x4v3.pth": ["https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.5.0/realesr-general-x4v3.pth",

|

| 60 |

+

"https://github.com/xinntao/Real-ESRGAN/releases/tag/v0.3.0",

|

| 61 |

+

"""add realesr-general-x4v3 and realesr-general-wdn-x4v3. They are very tiny models for general scenes, and they may more robust. But as they are tiny models, their performance may be limited."""],

|

| 62 |

+

|

| 63 |

+

"realesr-animevideov3.pth": ["https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.5.0/realesr-animevideov3.pth",

|

| 64 |

+

"https://github.com/xinntao/Real-ESRGAN/releases/tag/v0.2.5.0",

|

| 65 |

+

"""update the RealESRGAN AnimeVideo-v3 model, which can achieve better results with a faster inference speed."""],

|

| 66 |

+

|

| 67 |

# RRDBNet

|

| 68 |

+

"RealESRGAN_x4plus_anime_6B.pth": ["https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.2.4/RealESRGAN_x4plus_anime_6B.pth",

|

| 69 |

+

"https://github.com/xinntao/Real-ESRGAN/releases/tag/v0.2.2.4",

|

| 70 |

+

"""We add RealESRGAN_x4plus_anime_6B.pth, which is optimized for anime images with much smaller model size. More details and comparisons with waifu2x are in anime_model.md"""],

|

| 71 |

+

|

| 72 |

+

"RealESRGAN_x2plus.pth" : ["https://github.com/xinntao/Real-ESRGAN/releases/download/v0.2.1/RealESRGAN_x2plus.pth",

|

| 73 |

+

"https://github.com/xinntao/Real-ESRGAN/releases/tag/v0.2.1",

|

| 74 |

+

"""Add RealESRGAN_x2plus.pth model"""],

|

| 75 |

+

|

| 76 |

+

"RealESRNet_x4plus.pth" : ["https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.1/RealESRNet_x4plus.pth",

|

| 77 |

+

"https://github.com/xinntao/Real-ESRGAN/releases/tag/v0.1.1",

|

| 78 |

+

"""This release is mainly for storing pre-trained models and executable files."""],

|

| 79 |

+

|

| 80 |

+

"RealESRGAN_x4plus.pth" : ["https://github.com/xinntao/Real-ESRGAN/releases/download/v0.1.0/RealESRGAN_x4plus.pth",

|

| 81 |

+

"https://github.com/xinntao/Real-ESRGAN/releases/tag/v0.1.0",

|

| 82 |

+

"""This release is mainly for storing pre-trained models and executable files."""],

|

| 83 |

+

|

| 84 |

# ESRGAN(oldRRDB)

|

| 85 |

+

"4x-AnimeSharp.pth": ["https://huggingface.co/utnah/esrgan/resolve/main/4x-AnimeSharp.pth?download=true",

|

| 86 |

+

"https://openmodeldb.info/models/4x-AnimeSharp",

|

| 87 |

+

"""Interpolation between 4x-UltraSharp and 4x-TextSharp-v0.5. Works amazingly on anime. It also upscales text, but it's far better with anime content."""],

|

| 88 |

+

|

| 89 |

+

"4x_IllustrationJaNai_V1_ESRGAN_135k.pth": ["https://drive.google.com/uc?export=download&confirm=1&id=1qpioSqBkB_IkSBhEAewSSNFt6qgkBimP",

|

| 90 |

+

"https://openmodeldb.info/models/4x-IllustrationJaNai-V1-DAT2",

|

| 91 |

+

"""Purpose: Illustrations, digital art, manga covers

|

| 92 |

+

Model for color images including manga covers and color illustrations, digital art, visual novel art, artbooks, and more.

|

| 93 |

+

DAT2 version is the highest quality version but also the slowest. See the ESRGAN version for faster performance."""],

|

| 94 |

+

|

| 95 |

# DATNet

|

| 96 |

+

"4xNomos8kDAT.pth" : ["https://github.com/Phhofm/models/releases/download/4xNomos8kDAT/4xNomos8kDAT.pth",

|

| 97 |

+

"https://openmodeldb.info/models/4x-Nomos8kDAT",

|

| 98 |

+

"""A 4x photo upscaler with otf jpg compression, blur and resize, trained on musl's Nomos8k_sfw dataset for realisic sr, this time based on the DAT arch, as a finetune on the official 4x DAT model."""],

|

| 99 |

+

|

| 100 |

+

"4x-DWTP-DS-dat2-v3.pth" : ["https://objectstorage.us-phoenix-1.oraclecloud.com/n/ax6ygfvpvzka/b/open-modeldb-files/o/4x-DWTP-DS-dat2-v3.pth",

|

| 101 |

+

"https://openmodeldb.info/models/4x-DWTP-DS-dat2-v3",

|

| 102 |

+

"""DAT descreenton model, designed to reduce discrepancies on tiles due to too much loss of the first version, while getting rid of the removal of paper texture"""],

|

| 103 |

+

|

| 104 |

+

"4xBHI_dat2_real.pth" : ["https://github.com/Phhofm/models/releases/download/4xBHI_dat2_real/4xBHI_dat2_real.pth",

|

| 105 |

+

"https://github.com/Phhofm/models/releases/tag/4xBHI_dat2_real",

|

| 106 |

+

"""Purpose: 4x upscaling images. Handles realistic noise, some realistic blur, and webp and jpg (re)compression.

|

| 107 |

+

Description: 4x dat2 upscaling model for web and realistic images. It handles realistic noise, some realistic blur, and webp and jpg (re)compression. Trained on my BHI dataset (390'035 training tiles) with degraded LR subset."""],

|

| 108 |

+

|

| 109 |

+

"4xBHI_dat2_otf.pth" : ["https://github.com/Phhofm/models/releases/download/4xBHI_dat2_otf/4xBHI_dat2_otf.pth",

|

| 110 |

+

"https://github.com/Phhofm/models/releases/tag/4xBHI_dat2_otf",

|

| 111 |

+

"""Purpose: 4x upscaling images, handles noise and jpg compression

|

| 112 |

+

Description: 4x dat2 upscaling model, trained with the real-esrgan otf pipeline on my bhi dataset. Handles noise and compression."""],

|

| 113 |

+

|

| 114 |

+

"4xBHI_dat2_multiblur.pth" : ["https://github.com/Phhofm/models/releases/download/4xBHI_dat2_multiblurjpg/4xBHI_dat2_multiblur.pth",

|

| 115 |

+

"https://github.com/Phhofm/models/releases/tag/4xBHI_dat2_multiblurjpg",

|

| 116 |

+

"""Purpose: 4x upscaling images, handles jpg compression

|

| 117 |

+

Description: 4x dat2 upscaling model, trained with down_up,linear, cubic_mitchell, lanczos, gauss and box scaling algos, some average, gaussian and anisotropic blurs and jpg compression. Trained on my BHI sisr dataset."""],

|

| 118 |

+

|

| 119 |

+

"4xBHI_dat2_multiblurjpg.pth" : ["https://github.com/Phhofm/models/releases/download/4xBHI_dat2_multiblurjpg/4xBHI_dat2_multiblurjpg.pth",

|

| 120 |

+

"https://github.com/Phhofm/models/releases/tag/4xBHI_dat2_multiblurjpg",

|

| 121 |

+

"""Purpose: 4x upscaling images, handles jpg compression

|

| 122 |

+

Description: 4x dat2 upscaling model, trained with down_up,linear, cubic_mitchell, lanczos, gauss and box scaling algos, some average, gaussian and anisotropic blurs and jpg compression. Trained on my BHI sisr dataset."""],

|

| 123 |

+

|

| 124 |

+

"4x_IllustrationJaNai_V1_DAT2_190k.pth": ["https://drive.google.com/uc?export=download&confirm=1&id=1qpioSqBkB_IkSBhEAewSSNFt6qgkBimP",

|

| 125 |

+

"https://openmodeldb.info/models/4x-IllustrationJaNai-V1-DAT2",

|

| 126 |

+

"""Purpose: Illustrations, digital art, manga covers

|

| 127 |

+

Model for color images including manga covers and color illustrations, digital art, visual novel art, artbooks, and more.

|

| 128 |

+

DAT2 version is the highest quality version but also the slowest. See the ESRGAN version for faster performance."""],

|

| 129 |

+

|

| 130 |

# HAT

|

| 131 |

+

"4xNomos8kSCHAT-L.pth" : ["https://github.com/Phhofm/models/releases/download/4xNomos8kSCHAT/4xNomos8kSCHAT-L.pth",

|

| 132 |

+

"https://openmodeldb.info/models/4x-Nomos8kSCHAT-L",

|

| 133 |

+

"""4x photo upscaler with otf jpg compression and blur, trained on musl's Nomos8k_sfw dataset for realisic sr. Since this is a big model, upscaling might take a while."""],

|

| 134 |

+

|

| 135 |

+

"4xNomos8kSCHAT-S.pth" : ["https://github.com/Phhofm/models/releases/download/4xNomos8kSCHAT/4xNomos8kSCHAT-S.pth",

|

| 136 |

+

"https://openmodeldb.info/models/4x-Nomos8kSCHAT-S",

|

| 137 |

+

"""4x photo upscaler with otf jpg compression and blur, trained on musl's Nomos8k_sfw dataset for realisic sr. HAT-S version/model."""],

|

| 138 |

+

|

| 139 |

+

"4xNomos8kHAT-L_otf.pth": ["https://github.com/Phhofm/models/releases/download/4xNomos8kHAT-L_otf/4xNomos8kHAT-L_otf.pth",

|

| 140 |

+

"https://openmodeldb.info/models/4x-Nomos8kHAT-L-otf",

|

| 141 |

+

"""4x photo upscaler trained with otf"""],

|

| 142 |

+

|

| 143 |

# RealPLKSR_dysample

|

| 144 |

+

"4xHFA2k_ludvae_realplksr_dysample.pth": ["https://github.com/Phhofm/models/releases/download/4xHFA2k_ludvae_realplksr_dysample/4xHFA2k_ludvae_realplksr_dysample.pth",

+        "https://openmodeldb.info/models/4x-HFA2k-ludvae-realplksr-dysample",
+        """A Dysample RealPLKSR 4x upscaling model for anime single-image resolution."""],
+
+    "4xArtFaces_realplksr_dysample.pth" : ["https://github.com/Phhofm/models/releases/download/4xArtFaces_realplksr_dysample/4xArtFaces_realplksr_dysample.pth",
+        "https://openmodeldb.info/models/4x-ArtFaces-realplksr-dysample",
+        """A Dysample RealPLKSR 4x upscaling model for art / painted faces."""],
+
+    "4x-PBRify_RPLKSRd_V3.pth" : ["https://github.com/Kim2091/Kim2091-Models/releases/download/4x-PBRify_RPLKSRd_V3/4x-PBRify_RPLKSRd_V3.pth", "https://openmodeldb.info/models/4x-PBRify-RPLKSRd-V3",
+        """This model is roughly 8x faster than the current DAT2 model, while being higher quality. It produces far more natural detail, resolves lines and edges more smoothly, and cleans up compression artifacts better."""],
+
+    "4xNomos2_realplksr_dysample.pth" : ["https://github.com/Phhofm/models/releases/download/4xNomos2_realplksr_dysample/4xNomos2_realplksr_dysample.pth",
+        "https://openmodeldb.info/models/4x-Nomos2-realplksr-dysample",
+        """Description: A Dysample RealPLKSR 4x upscaling model that was trained with / handles jpg compression down to 70 on the Nomosv2 dataset, preserves DoF.
+This model affects / saturate colors, which can be counteracted a bit by using wavelet color fix, as used in these examples."""],
+
     # RealPLKSR
+    "2x-AnimeSharpV2_RPLKSR_Sharp.pth": ["https://github.com/Kim2091/Kim2091-Models/releases/download/2x-AnimeSharpV2_Set/2x-AnimeSharpV2_RPLKSR_Sharp.pth",
+        "https://openmodeldb.info/models/2x-AnimeSharpV2-RPLKSR-Sharp",
+        """Kim2091: This is my first anime model in years. Hopefully you guys can find a good use-case for it.
+RealPLKSR (Higher quality, slower) Sharp: For heavily degraded sources. Sharp models have issues depth of field but are best at removing artifacts
+"""],
+
+    "2x-AnimeSharpV2_RPLKSR_Soft.pth" : ["https://github.com/Kim2091/Kim2091-Models/releases/download/2x-AnimeSharpV2_Set/2x-AnimeSharpV2_RPLKSR_Soft.pth",
+        "https://openmodeldb.info/models/2x-AnimeSharpV2-RPLKSR-Soft",
+        """Kim2091: This is my first anime model in years. Hopefully you guys can find a good use-case for it.
+RealPLKSR (Higher quality, slower) Soft: For cleaner sources. Soft models preserve depth of field but may not remove other artifacts as well"""],
+
+    "4xPurePhoto-RealPLSKR.pth" : ["https://github.com/starinspace/StarinspaceUpscale/releases/download/Models/4xPurePhoto-RealPLSKR.pth",
+        "https://openmodeldb.info/models/4x-PurePhoto-RealPLSKR",
+        """Skilled in working with cats, hair, parties, and creating clear images.
+Also proficient in resizing photos and enlarging large, sharp images.
+Can effectively improve images from small sizes as well (300px at smallest on one side, depending on the subject).
+Experienced in experimenting with techniques like upscaling with this model twice and \
+then reducing it by 50% to enhance details, especially in features like hair or animals."""],
+
+    "2x_Text2HD_v.1-RealPLKSR.pth" : ["https://github.com/starinspace/StarinspaceUpscale/releases/download/Models/2x_Text2HD_v.1-RealPLKSR.pth",
+        "https://openmodeldb.info/models/2x-Text2HD-v-1",
+        """Purpose: Upscale text in very low quality to normal quality.
+The upscale model is specifically designed to enhance lower-quality text images, \
+improving their clarity and readability by upscaling them by 2x.
+It excels at processing moderately sized text, effectively transforming it into high-quality, legible scans.
+However, the model may encounter challenges when dealing with very small text, \
+as its performance is optimized for text of a certain minimum size. For best results, \
+input images should contain text that is not excessively small."""],
+
+    "2xVHS2HD-RealPLKSR.pth" : ["https://github.com/starinspace/StarinspaceUpscale/releases/download/Models/2xVHS2HD-RealPLKSR.pth",
+        "https://openmodeldb.info/models/2x-VHS2HD",
+        """An advanced VHS recording model designed to enhance video quality by reducing artifacts such as haloing, ghosting, and noise patterns.
+Optimized primarily for PAL resolution (NTSC might work good as well)."""],
+
+    "4xNomosWebPhoto_RealPLKSR.pth" : ["https://github.com/Phhofm/models/releases/download/4xNomosWebPhoto_RealPLKSR/4xNomosWebPhoto_RealPLKSR.pth",
+        "https://openmodeldb.info/models/4x-NomosWebPhoto-RealPLKSR",
+        """4x RealPLKSR model for photography, trained with realistic noise, lens blur, jpg and webp re-compression."""],
+
+    # "4xNomos2_hq_drct-l.pth" : ["https://github.com/Phhofm/models/releases/download/4xNomos2_hq_drct-l/4xNomos2_hq_drct-l.pth",
+    # "https://github.com/Phhofm/models/releases/tag/4xNomos2_hq_drct-l",
+    # """An drct-l 4x upscaling model, similiar to the 4xNomos2_hq_atd, 4xNomos2_hq_dat2 and 4xNomos2_hq_mosr models, trained and for usage on non-degraded input to give good quality output.
+    # """],
+
+    # "4xNomos2_hq_atd.pth" : ["https://github.com/Phhofm/models/releases/download/4xNomos2_hq_atd/4xNomos2_hq_atd.pth",
+    # "https://github.com/Phhofm/models/releases/tag/4xNomos2_hq_atd",
+    # """An atd 4x upscaling model, similiar to the 4xNomos2_hq_dat2 or 4xNomos2_hq_mosr models, trained and for usage on non-degraded input to give good quality output.
+    # """]
 }

+example_list = ["images/a01.jpg", "images/a02.jpg", "images/a03.jpg", "images/a04.jpg", "images/bus.jpg", "images/zidane.jpg",
+                "images/b01.jpg", "images/b02.jpg", "images/b03.jpg", "images/b04.jpg", "images/b05.jpg", "images/b06.jpg",
+                "images/b07.jpg", "images/b08.jpg", "images/b09.jpg", "images/b10.jpg", "images/b11.jpg", "images/c01.jpg",
+                "images/c02.jpg", "images/c03.jpg", "images/c04.jpg", "images/c05.jpg", "images/c06.jpg", "images/c07.jpg",
+                "images/c08.jpg", "images/c09.jpg", "images/c10.jpg"]

 def get_model_type(model_name):
     # Define model type mappings based on key parts of the model names
@@ lines 218-229 not shown @@
         model_type = "RealPLKSR_dysample"
     elif "realplksr" in model_name.lower() or "rplksr" in model_name.lower():
         model_type = "RealPLKSR"
+    elif "drct-l" in model_name.lower():
+        model_type = "DRCT-L"
+    elif "atd" in model_name.lower():
+        model_type = "ATD"
     return f"{model_type}, {model_name}"

+typed_upscale_models = {get_model_type(key): value[0] for key, value in upscale_models.items()}

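The labels produced by `get_model_type` have the form `"<type>, <file name>"`, and `inference` later recovers both parts with `split(", ", 1)`. The sketch below illustrates that round trip under stated assumptions: `guess_type` is a hypothetical, reduced stand-in for `get_model_type` (most of its rules are not shown in this diff), the `dysample` condition is a guess, and the `"other"` fallback is a placeholder that does not come from the source.

```python
def guess_type(model_name: str) -> str:
    """Hypothetical, reduced stand-in for get_model_type."""
    name = model_name.lower()
    # Assumed condition: the real check for this type is hidden in the diff.
    if "realplksr" in name and "dysample" in name:
        return "RealPLKSR_dysample"
    # The next three checks mirror the lines visible in the diff.
    if "realplksr" in name or "rplksr" in name:
        return "RealPLKSR"
    if "drct-l" in name:
        return "DRCT-L"
    if "atd" in name:
        return "ATD"
    return "other"  # placeholder fallback, not from the source


def make_label(model_name: str) -> str:
    """Build the dropdown label, e.g. 'RealPLKSR, some_model.pth'."""
    return f"{guess_type(model_name)}, {model_name}"


def parse_label(label: str) -> tuple[str, str]:
    """Split a label back into (type, file name).

    maxsplit=1 keeps any later ', ' inside the file name intact.
    """
    model_type, model_name = label.split(", ", 1)
    return model_type, model_name


if __name__ == "__main__":
    for name in ("4xNomosWebPhoto_RealPLKSR.pth", "4xNomos2_realplksr_dysample.pth"):
        print(parse_label(make_label(name)))
```

Keeping the file name after the first separator means the file name can still be used directly as a key into `upscale_models`, which is how the diff uses it.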

 class Upscale:
+    def inference(self, img, face_restoration, upscale_model, scale: float, face_detection, outputWithModelName: bool):
         print(img)
+        print(face_restoration, upscale_model, scale)
         try:
             self.scale = scale
             self.img_name = os.path.basename(str(img))
@@ lines 249-254 not shown @@
                 img = cv2.cvtColor(img, cv2.COLOR_GRAY2BGR)

             h, w = img.shape[0:2]

             if face_restoration:
+                download_from_url(face_models[face_restoration][0], face_restoration, os.path.join("weights", "face"))
+
+            modelInUse = ""
+            upscale_type = None
+            if upscale_model:
+                upscale_type, upscale_model = upscale_model.split(", ", 1)
+                download_from_url(upscale_models[upscale_model][0], upscale_model, os.path.join("weights", "upscale"))
+                modelInUse = f"_{os.path.splitext(upscale_model)[0]}"

             netscale = 4
             loadnet = None
             model = None
             is_auto_split_upscale = True
             half = True if torch.cuda.is_available() else False
+            if upscale_type:
                 from basicsr.archs.rrdbnet_arch import RRDBNet
                 from basicsr.archs.realplksr_arch import realplksr
+                # background enhancer with upscale model
+                if upscale_type == "RRDB":
+                    netscale = 2 if "x2" in upscale_model else 4
+                    num_block = 6 if "6B" in upscale_model else 23
                     model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=num_block, num_grow_ch=32, scale=netscale)
+                elif upscale_type == "SRVGG":
                     from realesrgan.archs.srvgg_arch import SRVGGNetCompact
                     netscale = 4
+                    num_conv = 16 if "animevideov3" in upscale_model else 32
                     model = SRVGGNetCompact(num_in_ch=3, num_out_ch=3, num_feat=64, num_conv=num_conv, upscale=netscale, act_type='prelu')
+                elif upscale_type == "ESRGAN":
                     netscale = 4
                     model = RRDBNet(num_in_ch=3, num_out_ch=3, num_feat=64, num_block=23, num_grow_ch=32, scale=netscale)
                     loadnet = {}
+                    loadnet_origin = torch.load(os.path.join("weights", "upscale", upscale_model), map_location=torch.device('cpu'), weights_only=True)
                     for key, value in loadnet_origin.items():
                         new_key = key.replace("model.0", "conv_first").replace("model.1.sub.23.", "conv_body.").replace("model.1.sub", "body") \
                             .replace(".0.weight", ".weight").replace(".0.bias", ".bias").replace(".RDB1.", ".rdb1.").replace(".RDB2.", ".rdb2.").replace(".RDB3.", ".rdb3.") \
                             .replace("model.3.", "conv_up1.").replace("model.6.", "conv_up2.").replace("model.8.", "conv_hr.").replace("model.10.", "conv_last.")
                         loadnet[new_key] = value
+                elif upscale_type == "DAT":
                     from basicsr.archs.dat_arch import DAT
                     half = False
                     netscale = 4
+                    expansion_factor = 2. if "dat2" in upscale_model.lower() else 4.
                     model = DAT(img_size=64, in_chans=3, embed_dim=180, split_size=[8,32], depth=[6,6,6,6,6,6], num_heads=[6,6,6,6,6,6], expansion_factor=expansion_factor, upscale=netscale)
                     # # Speculate on the parameters.
+                    # loadnet_origin = torch.load(os.path.join("weights", "upscale", upscale_model), map_location=torch.device('cpu'), weights_only=True)
                     # inferred_params = self.infer_parameters_from_state_dict_for_dat(loadnet_origin, netscale)
                     # for param, value in inferred_params.items():
                     # print(f"{param}: {value}")
+                elif upscale_type == "HAT":
                     half = False
                     netscale = 4
                     import torch.nn.functional as F
@@ lines 312-354 not shown @@

                     # The parameters are derived from the XPixelGroup project files: HAT-L_SRx4_ImageNet-pretrain.yml and HAT-S_SRx4.yml.
                     # https://github.com/XPixelGroup/HAT/tree/main/options/test
+                    if "hat-l" in upscale_model.lower():
                         window_size = 16
                         compress_ratio = 3
                         squeeze_factor = 30
@@ lines 362-363 not shown @@
                         num_heads = [6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6, 6]
                         mlp_ratio = 2
                         upsampler = "pixelshuffle"
+                    elif "hat-s" in upscale_model.lower():
                         window_size = 16
                         compress_ratio = 24
                         squeeze_factor = 24
@@ lines 371-374 not shown @@
                         upsampler = "pixelshuffle"
                     model = HATWithAutoPadding(img_size=64, patch_size=1, in_chans=3, embed_dim=embed_dim, depths=depths, num_heads=num_heads, window_size=window_size, compress_ratio=compress_ratio,
                                 squeeze_factor=squeeze_factor, conv_scale=0.01, overlap_ratio=0.5, mlp_ratio=mlp_ratio, upsampler=upsampler, upscale=netscale,)
+                elif upscale_type == "RealPLKSR_dysample":
                     netscale = 4
+                    model = realplksr(dim=64, n_blocks=28, kernel_size=17, split_ratio=0.25, upscaling_factor=netscale, dysample=True)
+                elif upscale_type == "RealPLKSR":
+                    half = False if "RealPLSKR" in upscale_model else half
+                    netscale = 2 if upscale_model.startswith("2x") else 4
                     model = realplksr(dim=64, n_blocks=28, kernel_size=17, split_ratio=0.25, upscaling_factor=netscale)


@@ line 387 not shown @@
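The `ESRGAN` branch above converts checkpoint keys from the older ESRGAN layout to the names `RRDBNet` expects by chaining `str.replace` calls. The sketch below applies the same chain to a handful of sample keys so the mapping is easier to follow. It uses plain strings in place of tensors; the helper name `rename_old_esrgan_key` and the sample keys are illustrative and not part of the commit.

```python
def rename_old_esrgan_key(key: str) -> str:
    """Apply the same replace chain as the ESRGAN branch in the diff."""
    return (
        key.replace("model.0", "conv_first")
        .replace("model.1.sub.23.", "conv_body.")
        .replace("model.1.sub", "body")
        .replace(".0.weight", ".weight")
        .replace(".0.bias", ".bias")
        .replace(".RDB1.", ".rdb1.")
        .replace(".RDB2.", ".rdb2.")
        .replace(".RDB3.", ".rdb3.")
        .replace("model.3.", "conv_up1.")
        .replace("model.6.", "conv_up2.")
        .replace("model.8.", "conv_hr.")
        .replace("model.10.", "conv_last.")
    )


def remap_state_dict(old_state: dict) -> dict:
    """Return a new dict with renamed keys; values are passed through unchanged."""
    return {rename_old_esrgan_key(key): value for key, value in old_state.items()}


if __name__ == "__main__":
    # Sample keys in the older layout; the string values stand in for tensors.
    sample = {
        "model.0.weight": "w0",
        "model.1.sub.0.RDB1.conv1.0.weight": "w1",
        "model.1.sub.23.weight": "w2",
        "model.3.weight": "w3",
        "model.10.bias": "b4",
    }
    for new_key in remap_state_dict(sample):
        print(new_key)
```

The order of the replacements matters: `model.1.sub.23.` has to be handled before the more general `model.1.sub`, otherwise the trunk convolution would be renamed to `body.23.` instead of `conv_body.`.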

             if loadnet:
                 self.upsampler = RealESRGANer(scale=netscale, loadnet=loadnet, model=model, tile=0, tile_pad=10, pre_pad=0, half=half)
             elif model:
+                self.upsampler = RealESRGANer(scale=netscale, model_path=os.path.join("weights", "upscale", upscale_model), model=model, tile=0, tile_pad=10, pre_pad=0, half=half)
+            elif upscale_model:
                 self.upsampler = None
                 import PIL
                 from image_gen_aux import UpscaleWithModel
@@ lines 396-435 not shown @@
                         return cv_image, None

                 device = "cuda" if torch.cuda.is_available() else "cpu"
+                upscaler = UpscaleWithModel.from_pretrained(os.path.join("weights", "upscale", upscale_model)).to(device)
                 upscaler.__class__ = UpscaleWithModel_Gfpgan
                 self.upsampler = upscaler
             self.face_enhancer = None

+            resolution = 512
             if face_restoration:
+                modelInUse = f"_{os.path.splitext(face_restoration)[0]}" + modelInUse
                 from gfpgan.utils import GFPGANer
+                model_rootpath = os.path.join("weights", "face")
+                model_path = os.path.join(model_rootpath, face_restoration)
+                channel_multiplier = None
+
                 if face_restoration and face_restoration.startswith("GFPGANv1."):
+                    arch = "clean"
+                    channel_multiplier = 2
                 elif face_restoration and face_restoration.startswith("RestoreFormer"):
                     arch = "RestoreFormer++" if face_restoration.startswith("RestoreFormer++") else "RestoreFormer"
                 elif face_restoration == 'CodeFormer.pth':
+                    arch = "CodeFormer"
+                elif face_restoration.startswith("GPEN-BFR-"):
+                    arch = "GPEN"
+                    channel_multiplier = 2
+                    if "1024" in face_restoration:
+                        arch = "GPEN-1024"
+                        resolution = 1024
+                    elif "2048" in face_restoration:
+                        arch = "GPEN-2048"
+                        resolution = 2048
+
+                self.face_enhancer = GFPGANer(model_path=model_path, upscale=self.scale, arch=arch, channel_multiplier=channel_multiplier, bg_upsampler=self.upsampler, model_rootpath=model_rootpath, det_model=face_detection, resolution=resolution)

             files = []
+            if not outputWithModelName:
+                modelInUse = ""
+
             try:
                 bg_upsample_img = None
                 if self.upsampler and self.upsampler.enhance:
@@ lines 478-483 not shown @@
                     if cropped_faces and restored_aligned:
                         for idx, (cropped_face, restored_face) in enumerate(zip(cropped_faces, restored_aligned)):
                             # save cropped face
+                            save_crop_path = f"output/{self.basename}{idx:02d}_cropped_faces{modelInUse}.png"
                             self.imwriteUTF8(save_crop_path, cropped_face)
                             # save restored face
+                            save_restore_path = f"output/{self.basename}{idx:02d}_restored_faces{modelInUse}.png"
                             self.imwriteUTF8(save_restore_path, restored_face)
                             # save comparison image
+                            save_cmp_path = f"output/{self.basename}{idx:02d}_cmp{modelInUse}.png"
                             cmp_img = np.concatenate((cropped_face, restored_face), axis=1)
                             self.imwriteUTF8(save_cmp_path, cmp_img)

                             files.append(save_crop_path)
                             files.append(save_restore_path)
                             files.append(save_cmp_path)

                 restored_img = bg_upsample_img
             except RuntimeError as error:
@@ lines 503-511 not shown @@

             if not self.extension:
                 self.extension = ".png" if self.img_mode == "RGBA" else ".jpg" # RGBA images should be saved in png format
+            save_path = f"output/{self.basename}{modelInUse}{self.extension}"
             self.imwriteUTF8(save_path, restored_img)

             restored_img = cv2.cvtColor(restored_img, cv2.COLOR_BGR2RGB)
             files.append(save_path)
+            return files, files
         except Exception as error:
             print(traceback.format_exc())
             print("global exception", error)
@@ lines 524-613 not shown @@
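The `modelInUse` suffix is assembled in three places in `inference`: the upscale model name is set first, the face model name is prepended, and the whole suffix is cleared when `outputWithModelName` is off. The sketch below collects that logic into one function to show the resulting file names. The function `build_save_path` and its parameters are hypothetical; in the commit the base name and extension come from `self.basename` and `self.extension`, whose assignment is not shown in this diff.

```python
import os


def build_save_path(basename, extension, face_restoration, upscale_model, with_model_name):
    """Mirror how the diff composes the output path for the whole image."""
    suffix = ""
    if upscale_model:
        # Upscale model file name without its extension, e.g. "_realesr-general-x4v3".
        suffix = f"_{os.path.splitext(upscale_model)[0]}"
    if face_restoration:
        # The face model name goes in front of the upscale model name.
        suffix = f"_{os.path.splitext(face_restoration)[0]}" + suffix
    if not with_model_name:
        # The checkbox in the interface turns the suffix off entirely.
        suffix = ""
    return f"output/{basename}{suffix}{extension}"


if __name__ == "__main__":
    print(build_save_path("a01", ".jpg", "GFPGANv1.4.pth", "realesr-general-x4v3.pth", True))
    print(build_save_path("a01", ".jpg", "GFPGANv1.4.pth", "realesr-general-x4v3.pth", False))
```

`os.path.splitext` only strips the final extension, so a name such as `GFPGANv1.4.pth` keeps its version number in the suffix.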

     # Ensure the target directory exists
     os.makedirs('output', exist_ok=True)

+    title = "Image Upscaling & Restoration using GFPGAN / RestoreFormerPlusPlus / CodeFormer / GPEN Algorithm"
+    description = r"""
+<a href='https://github.com/TencentARC/GFPGAN' target='_blank'><b>GFPGAN: Towards Real-World Blind Face Restoration and Upscalling of the image with a Generative Facial Prior</b></a>. <br>
+<a href='https://github.com/wzhouxiff/RestoreFormerPlusPlus' target='_blank'><b>RestoreFormer++: Towards Real-World Blind Face Restoration from Undegraded Key-Value Pairs</b></a>. <br>
+<a href='https://github.com/sczhou/CodeFormer' target='_blank'><b>CodeFormer: Towards Robust Blind Face Restoration with Codebook Lookup Transformer (NeurIPS 2022)</b></a>. <br>
+<a href='https://github.com/yangxy/GPEN' target='_blank'><b>GPEN: GAN Prior Embedded Network for Blind Face Restoration in the Wild</b></a>. <br>
+<br>
+Practically, the aforementioned algorithm is used to restore your **old photos** or improve **AI-generated faces**.<br>
 To use it, simply just upload the concerned image.<br>
 """
     article = r"""
 [](https://github.com/TencentARC/GFPGAN/releases)
 [](https://github.com/TencentARC/GFPGAN)
 [](https://arxiv.org/abs/2101.04061)
 """

     upscale = Upscale()

+    with gr.Blocks(title = title) as demo:
+        gr.Markdown(value=f"<h1 style=\"text-align:center;\">{title}</h1><br>{description}")
+        with gr.Row():
+            with gr.Column(variant="panel"):
+                input_image = gr.Image(type="filepath", label="Input", format="png")
+                face_model = gr.Dropdown(list(face_models.keys())+[None], type="value", value='GFPGANv1.4.pth', label='Face Restoration version', info="Face Restoration and RealESR can be freely combined in different ways, or one can be set to \"None\" to use only the other model. Face Restoration is primarily used for face restoration in real-life images, while RealESR serves as a background restoration model.")
+                upscale_model = gr.Dropdown(list(typed_upscale_models.keys())+[None], type="value", value='SRVGG, realesr-general-x4v3.pth', label='UpScale version')
+                upscale_scale = gr.Number(label="Rescaling factor", value=4)
+                face_detection = gr.Dropdown(["retinaface_resnet50", "YOLOv5l", "YOLOv5n"], type="value", value="retinaface_resnet50", label="Face Detection type")
+                with_model_name = gr.Checkbox(label="Output image files name with model name", value=True)
+                with gr.Row():
+                    submit = gr.Button(value="Submit", variant="primary", size="lg")
+                    clear = gr.ClearButton(
+                        components=[
+                            input_image,
+                            face_model,
+                            upscale_model,
+                            upscale_scale,
+                            face_detection,
+                            with_model_name,
+                        ], variant="secondary", size="lg",)
+            with gr.Column(variant="panel"):
+                gallerys = gr.Gallery(type="filepath", label="Output (The whole image)", format="png")
+                outputs = gr.File(label="Download the output image")
+        with gr.Row(variant="panel"):
+            # Generate output array
+            output_arr = []
+            for file_name in example_list:
+                output_arr.append([file_name,])
+            gr.Examples(output_arr, inputs=[input_image,], examples_per_page=20)
+        with gr.Row(variant="panel"):
+            # Convert to Markdown table
+            header = "| Face Model Name | Info | Download URL |\n|------------|------|--------------|"
+            rows = [
+                f"| [{key}]({value[1]}) | " + value[2].replace("\n", "<br>") + f" | [download]({value[0]}) |"
+                for key, value in face_models.items()
+            ]
+            markdown_table = header + "\n" + "\n".join(rows)
+            gr.Markdown(value=markdown_table)
+        with gr.Row(variant="panel"):
+            # Convert to Markdown table
+            header = "| Upscale Model Name | Info | Download URL |\n|------------|------|--------------|"
+            rows = [
+                f"| [{key}]({value[1]}) | " + value[2].replace("\n", "<br>") + f" | [download]({value[0]}) |"
+                for key, value in upscale_models.items()
+            ]
+            markdown_table = header + "\n" + "\n".join(rows)
+            gr.Markdown(value=markdown_table)
+
+        submit.click(
+            upscale.inference,
+            inputs=[
+                input_image,
+                face_model,
+                upscale_model,
+                upscale_scale,
+                face_detection,
+                with_model_name,
+            ],
+            outputs=[gallerys, outputs],
+        )

+    demo.queue(default_concurrency_limit=1)
     demo.launch(inbrowser=True)


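Both model tables in the interface are built the same way: each dictionary entry is a list of `[download URL, info URL, description]`, and newlines in the description become `<br>` so that every model stays on one table row. The sketch below factors that pattern into a single helper and runs it on a made-up entry. The function name `models_to_markdown`, the `example.com` URLs, and the sample description are placeholders and do not appear in the commit.

```python
def models_to_markdown(models: dict, name_header: str) -> str:
    """Render {file name: [download_url, info_url, description]} as a Markdown table."""
    header = f"| {name_header} | Info | Download URL |\n|------------|------|--------------|"
    rows = [
        # Newlines would break a Markdown table row, so they are turned into <br>.
        f"| [{key}]({value[1]}) | " + value[2].replace("\n", "<br>") + f" | [download]({value[0]}) |"
        for key, value in models.items()
    ]
    return header + "\n" + "\n".join(rows)


if __name__ == "__main__":
    # Placeholder entry for illustration only.
    sample_models = {
        "demo.pth": [
            "https://example.com/demo.pth",
            "https://example.com/info",
            "Line one.\nLine two.",
        ],
    }
    print(models_to_markdown(sample_models, "Upscale Model Name"))
```

Sharing one helper between the face and upscale tables would remove the duplicated `header`/`rows` code in the two `gr.Row` blocks, at the cost of one more function in `app.py`.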

@@ -1,6 +1,6 @@
 --extra-index-url https://download.pytorch.org/whl/cu124

-gradio==5.
+gradio==5.9.0

 basicsr @ git+https://github.com/avan06/BasicSR
 facexlib @ git+https://github.com/avan06/facexlib