Commit 3582c8a

Parent(s): 8be1cb6

Add comments

- README.md +58 -16

- analysis/tests.py +0 -3

- app.py +1 -7

- generate_and_play.py +8 -5

- media/banner.png +0 -0

- models/example_policy/samples.lvls +122 -122

- models/example_policy/samples.png +0 -0

- plots.py +0 -733

- pyproject.toml +0 -21

- requirements.txt +0 -0

- src/drl/egsac/train_egsac.py +2 -2

- src/drl/sunrise/train_sunrise.py +3 -8

- src/drl/train_async.py +15 -0

- src/drl/train_sinproc.py +13 -0

- src/env/environments.py +2 -5

- src/env/rfunc.py +6 -5

- src/gan/adversarial_train.py +0 -21

- src/gan/gankits.py +2 -2

- src/gan/gans.py +1 -1

- src/olgen/olg_policy.py +7 -44

- src/smb/asyncsimlt.py +4 -9

- src/smb/proxy.py +0 -8

- src/utils/img.py +0 -1

- test_ddpm.py +1 -81

- test_gen_log.py +0 -15

- test_gen_samples.py +0 -24

- tests.py +0 -140

- train.py +2 -0

README.md

CHANGED

@@ -8,26 +8,39 @@ python_version: 3.9
 app_file: app.py
 pinned: false
 ---


-


-
-
-
-
-
-* torch 1.8.2+cu111
-* numpy 1.20.3
-* gym 0.21.0
-* scipy 1.7.2
-* Pillow 10.0.0
-* matplotlib 3.6.3
-* pandas 1.3.2
-* sklearn 1.0.1

-

 All training are launched by running `train.py` with option and arguments. For example, execute `python train.py ncesac --lbd 0.3 --m 5` will train NCERL with hyperparameters set as $\lambda = 0.3, m=5$.
 Plot script is `plots.py`
@@ -36,9 +49,38 @@ All training are launched by running `train.py` with option and arguments. For e
 * `python train.py sac`: to train a standard SAC as the policy for online game level generation
 * `python train.py asyncsac`: to train a SAC with an asynchronous evaluation environment as the policy for online game level generation
 * `python train.py ncesac`: to train an NCERL based on SAC as the policy for online game level generation
-* `python train.py egsac`: to train an episodic generative SAC (see paper [*The fun facets of Mario: Multifaceted experience-driven PCG via reinforcement learning*](https://dl.acm.org/doi/abs/10.1145/3555858.3563282
 * `python train.py pmoe`: to train an episodic generative SAC (see paper [*Probabilistic Mixture-of-Experts for Efficient Deep Reinforcement Learning*](https://arxiv.org/abs/2104.09122)) as the policy for online game level generation
 * `python train.py sunrise`: to train a SUNRISE (see paper [*SUNRISE: A Simple Unified Framework for Ensemble Learning in Deep Reinforcement Learning*](https://proceedings.mlr.press/v139/lee21g.html)) as the policy for online game level generation
 * `python train.py dvd`: to train a DvD-SAC (see paper [*Effective Diversity in Population Based Reinforcement Learning*](https://proceedings.neurips.cc/paper_files/paper/2020/hash/d1dc3a8270a6f9394f88847d7f0050cf-Abstract.html)) as the policy for online game level generation

 For the training arguments, please refer to the help `python train.py [option] --help`

@@ -8,26 +8,39 @@ python_version: 3.9
 app_file: app.py
 pinned: false
 ---
+
+# Negatively Correlated Ensemble RL

+## Environment setup
+Create the conda environment
+```bash
+conda create -n ncerl python=3.9
+```
+Install the dependencies
+```bash
+pip install -r requirements.txt
+```
+Note: the program does not require a GPU, but PyTorch must be installed. If your GPU supports CUDA, install the CUDA build; otherwise install the CPU build. The CUDA build can speed up inference.

+Activate the conda environment
+```
+conda activate ncerl
+```

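The note about CUDA and CPU builds corresponds to the device-selection expression that this commit adds to `generate_and_play.py`. A minimal sketch of that choice follows. The helper name `pick_device` is an assumption made for illustration, and the fallback for a missing PyTorch installation exists only so the snippet runs on its own; the README states that PyTorch is required.

```python
import importlib.util


def pick_device() -> str:
    """Return 'cuda:0' when PyTorch reports a usable GPU, otherwise 'cpu'."""
    if importlib.util.find_spec("torch") is None:
        # PyTorch is not installed. The project needs it, so this branch
        # only keeps the sketch runnable in a bare environment.
        return "cpu"
    import torch

    # Same expression as the one used in app.py and generate_and_play.py.
    return "cuda:0" if torch.cuda.is_available() else "cpu"


print(pick_device())
```

The returned string can then be passed wherever the scripts expect a device, for example as the `device` argument of `RLGenPolicy.from_path`.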

| 30 |

+

## 快速开始

|

| 31 |

+

如果您想查看效果,可以通过

|

| 32 |

+

```

|

| 33 |

+

python app.py

|

| 34 |

+

```

|

| 35 |

+

后打开命令行显示连接互动查看。

|

| 36 |

|

| 37 |

+

也可以通过运行

|

| 38 |

+

```

|

| 39 |

+

python generate_and_play.py

|

| 40 |

+

```

|

| 41 |

+

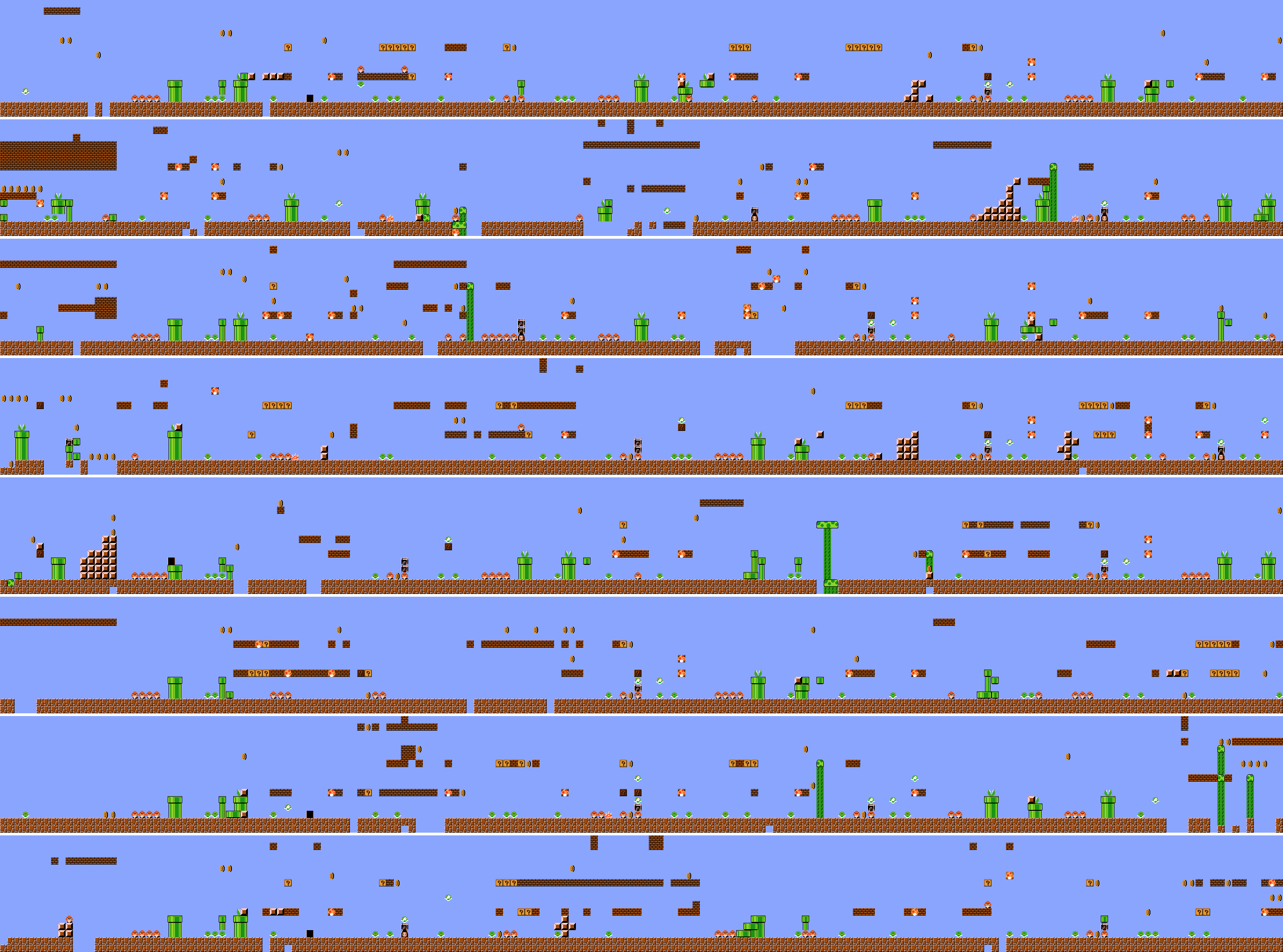

后查看`models/example_policy/samples.png`查看生成效果。

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 42 |

|

+## Training

 All training are launched by running `train.py` with option and arguments. For example, execute `python train.py ncesac --lbd 0.3 --m 5` will train NCERL with hyperparameters set as $\lambda = 0.3, m=5$.
 Plot script is `plots.py`
@@ -36,9 +49,38 @@ All training are launched by running `train.py` with option and arguments. For e
 * `python train.py sac`: to train a standard SAC as the policy for online game level generation
 * `python train.py asyncsac`: to train a SAC with an asynchronous evaluation environment as the policy for online game level generation
 * `python train.py ncesac`: to train an NCERL based on SAC as the policy for online game level generation
+* `python train.py egsac`: to train an episodic generative SAC (see paper [*The fun facets of Mario: Multifaceted experience-driven PCG via reinforcement learning*](https://dl.acm.org/doi/abs/10.1145/3555858.3563282)) as the policy for online game level generation
 * `python train.py pmoe`: to train an episodic generative SAC (see paper [*Probabilistic Mixture-of-Experts for Efficient Deep Reinforcement Learning*](https://arxiv.org/abs/2104.09122)) as the policy for online game level generation
 * `python train.py sunrise`: to train a SUNRISE (see paper [*SUNRISE: A Simple Unified Framework for Ensemble Learning in Deep Reinforcement Learning*](https://proceedings.mlr.press/v139/lee21g.html)) as the policy for online game level generation
 * `python train.py dvd`: to train a DvD-SAC (see paper [*Effective Diversity in Population Based Reinforcement Learning*](https://proceedings.neurips.cc/paper_files/paper/2020/hash/d1dc3a8270a6f9394f88847d7f0050cf-Abstract.html)) as the policy for online game level generation

 For the training arguments, please refer to the help `python train.py [option] --help`
+
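The README describes `train.py` as a command with one option per algorithm followed by arguments. The diff does not show how `train.py` parses them, so the following is only a sketch of one way such a command line could be built, assuming `argparse` sub-commands. The sub-command names and the `--lbd` and `--m` flags come from the README; the function name `build_parser` and both default values are hypothetical.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Build a parser with one sub-command per training algorithm."""
    parser = argparse.ArgumentParser(prog="train.py")
    sub = parser.add_subparsers(dest="algo", required=True)
    # Sub-command names taken from the README list.
    for name in ("sac", "asyncsac", "egsac", "pmoe", "sunrise", "dvd"):
        sub.add_parser(name)
    nce = sub.add_parser("ncesac")
    nce.add_argument("--lbd", type=float, default=0.0)  # hypothetical default
    nce.add_argument("--m", type=int, default=2)  # hypothetical default
    return parser


# Equivalent to: python train.py ncesac --lbd 0.3 --m 5
args = build_parser().parse_args(["ncesac", "--lbd", "0.3", "--m", "5"])
print(args.algo, args.lbd, args.m)
```

With sub-commands, `python train.py ncesac --help` lists only the flags of that algorithm, which matches the `python train.py [option] --help` usage the README recommends.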

+## Directory structure
+```
+NCERL-DIVERSE-PCG/
+* analysis/
+  * generate.py           unused
+  * tests.py              used for evaluation
+* media/                  assets for the markdown files
+* models/
+  * example_policy/       used for the generation demo
+* smb/                    Mario simulator and image resources
+* src/
+  * ddpm/                 DDPM model code
+  * drl/                  DRL models and training
+  * env/                  Mario gym environment and reward functions
+  * gan/                  GAN models and training
+  * olgen/                online generation environment and policies
+  * rlkit/                reinforcement learning building blocks
+  * smb/                  components for interacting with the Mario simulator, and the multi-process asynchronous pool
+  * utils/                utility files
+* training_data/          training data
+* README.md               this file
+* app.py                  Gradio demo
+* generate_and_play.py    demo without Gradio
+* train.py                training entry point
+* test_ddpm.py            script for testing and training the DDPM
+* requirements.txt        dependency list
+```
+

analysis/tests.py

CHANGED

@@ -36,7 +36,6 @@ def evaluate_rewards(lvls, rfunc='default', dest_path='', parallel=1, eval_pool=

 def evaluate_mnd(lvls, refs, parallel=2):
     eval_pool = AsycSimltPool(parallel, verbose=False, refs=[str(ref) for ref in refs])
-    # m, _ = len(lvls), len(refs)
     res = []
     for lvl in lvls:
         eval_pool.put('mnd_item', str(lvl))
@@ -49,7 +48,6 @@ def evaluate_mnd(lvls, refs, parallel=2):
 def evaluate_mpd(lvls, parallel=2):
     task_datas = [[] for _ in range(parallel)]
     for i, (A, B) in enumerate(combinations(lvls, 2)):
-        # lvlA, lvlB = lvls[i * 2], lvls[i * 2 + 1]
         task_datas[i % parallel].append((str(A), str(B)))

     hms, dtws = [], []
@@ -73,7 +71,6 @@ def evaluate_gen_log(path, parallel=5):
     step = name[4:]
     rewards = [sum(item) for item in evaluate_rewards(lvls, rfunc_name, parallel=parallel)]
     r_avg, r_std = np.mean(rewards), np.std(rewards)
-    # mpd_hm, mpd_dtw = evaluate_mpd(lvls, parallel=parallel)
     mpd = evaluate_mpd(lvls, parallel=parallel)
     line = [step, r_avg, r_std, mpd, '']
     wrtr.writerow(line)
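The loop in `evaluate_mpd` spreads every unordered pair of levels over `parallel` task lists in round-robin order. The sketch below isolates that step so it can be run without the simulator; `split_pairs` is a hypothetical name, and plain strings stand in for level objects.

```python
from itertools import combinations


def split_pairs(items, parallel=2):
    """Distribute all unordered pairs of items round-robin over `parallel` buckets."""
    buckets = [[] for _ in range(parallel)]
    for i, (a, b) in enumerate(combinations(items, 2)):
        # Pair number i goes to bucket i mod parallel, as in evaluate_mpd.
        buckets[i % parallel].append((str(a), str(b)))
    return buckets


buckets = split_pairs(["A", "B", "C", "D"], parallel=2)
print(buckets)
```

Four items yield six pairs, so each of the two buckets receives three. Bucket sizes differ by at most one, which keeps the per-worker load roughly even when every pair costs about the same to compare.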

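`evaluate_gen_log` writes one CSV row per checkpoint containing the step, the mean and standard deviation of the total rewards, and the diversity value. A self-contained sketch of that row layout follows, using the standard library instead of NumPy. The function name `summary_row` and all sample values are hypothetical; `statistics.pstdev` is used because `np.std` computes the population standard deviation by default.

```python
import csv
import io
import statistics


def summary_row(step, rewards, mpd):
    """Build a row shaped like [step, r_avg, r_std, mpd, '']."""
    r_avg = statistics.fmean(rewards)
    r_std = statistics.pstdev(rewards)  # population std, like np.std's default
    return [step, r_avg, r_std, mpd, '']


row = summary_row("1000", [1.0, 2.0, 3.0], 0.25)

# Write the row to an in-memory buffer instead of a log file.
buf = io.StringIO()
csv.writer(buf).writerow(row)
print(buf.getvalue().strip())
```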

app.py

CHANGED

@@ -9,7 +9,6 @@ sys.path.append(path.dirname(path.abspath(__file__)))


 from src.olgen.ol_generator import VecOnlineGenerator
-# from src.olgen.olg_game import MarioOnlineGenGame
 from src.olgen.olg_policy import RLGenPolicy
 from src.smb.level import save_batch
 from src.utils.filesys import getpath
@@ -21,7 +20,7 @@ device = 'cuda:0' if torch.cuda.is_available() else 'cpu'

 def generate_and_play():
     path = 'models/example_policy'
-    #
+    # Generate with the example policy
     N, L = 8, 10
     plc = RLGenPolicy.from_path(path, device)
     generator = VecOnlineGenerator(plc, g_device=device)
@@ -29,14 +28,9 @@ def generate_and_play():
     os.makedirs(fd, exist_ok=True)

     lvls = generator.generate(N, L)
-    # save_batch(lvls, f'{path}/samples.lvls')
     imgs = [lvl.to_img() for lvl in lvls]
     return imgs
-    # make_img_sheet(imgs, 1, save_path=f'{path}/samples.png')

-    # # Play with the example policy model
-    # game = MarioOnlineGenGame(path)
-    # game.play()


 with gr.Blocks(title="NCERL Demo") as demo:

generate_and_play.py

CHANGED

@@ -1,5 +1,5 @@
 import os
-
+import torch
 from src.olgen.ol_generator import VecOnlineGenerator
 from src.olgen.olg_game import MarioOnlineGenGame
 from src.olgen.olg_policy import RLGenPolicy
@@ -7,12 +7,14 @@ from src.smb.level import save_batch
 from src.utils.filesys import getpath
 from src.utils.img import make_img_sheet

+device = 'cuda:0' if torch.cuda.is_available() else 'cpu'
+
 if __name__ == '__main__':
     path = 'models/example_policy'
     # Generate with example policy model
     N, L = 8, 10
-    plc = RLGenPolicy.from_path(path)
-    generator = VecOnlineGenerator(plc)
+    plc = RLGenPolicy.from_path(path, device=device)
+    generator = VecOnlineGenerator(plc, g_device=device)
     fd, _ = os.path.split(getpath(path))
     os.makedirs(fd, exist_ok=True)

@@ -22,6 +24,7 @@ if __name__ == '__main__':
     make_img_sheet(imgs, 1, save_path=f'{path}/samples.png')

     # # Play with the example policy model
-    #
-
+    # Make sure a JVM is installed and that running `java` on the command line prints Java information
+    game = MarioOnlineGenGame(path)
+    game.play()
     pass
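The comment added before `game.play()` asks the user to confirm that `java` is reachable from the command line. That check could also be done in code before the game starts; the sketch below is one possible way and is not part of the commit. The helper name `java_available` is hypothetical.

```python
import shutil


def java_available() -> bool:
    """Return True when a `java` executable can be found on PATH."""
    return shutil.which("java") is not None


if not java_available():
    print("java not found on PATH; install a JVM before calling game.play()")
```

`shutil.which` only confirms that an executable named `java` exists on the search path. It does not verify the Java version, so the manual check described in the comment is still useful.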

media/banner.png

ADDED

|

models/example_policy/samples.lvls

CHANGED

|

@@ -1,135 +1,135 @@

|

|

| 1 |

-

|

| 2 |

-

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 3 |

-

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 4 |

-

|

| 5 |

-

------------------------------oo

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

| 14 |

-

|

| 15 |

-

|

| 16 |

-

|

| 17 |

;

|

| 18 |

-

|

| 19 |

-

|

| 20 |

-

|

| 21 |

-

|

| 22 |

-

|

| 23 |

-

|

| 24 |

-

----------------

|

| 25 |

-

|

| 26 |

-

|

| 27 |

-

|

| 28 |

-

|

| 29 |

-

|

| 30 |

-

|

| 31 |

-

|

| 32 |

-

---

|

| 33 |

-

|

| 34 |

;

|

| 35 |

-

|

| 36 |

-

|

| 37 |

-

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 38 |

-

|

| 39 |

-

|

| 40 |

-

|

| 41 |

-

|

| 42 |

-

|

| 43 |

-

|

| 44 |

-

|

| 45 |

-

|

| 46 |

-

|

| 47 |

-

|

| 48 |

-

--

|

| 49 |

-

|

| 50 |

-

|

| 51 |

;

|

| 52 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 53 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 54 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 55 |

-

|

| 56 |

-

------------------------------oo-----------------------------------------------------------------------------------------------o------------------------------------------------

|

| 57 |

-

---------------------------------o-----------------------------------------------------------------------------------------------------------------o----------------------------

|

| 58 |

-

----------------%----So--------------Q---------------QQQQ------------Qo--------------QQQQS-----#--------------------------------SooS--SS-----S-----SQQQ---------------o---------

|

| 59 |

-

----------------|------------------------------------------------------------------------------#--------------------------------------------------------------------------------

|

| 60 |

-

----------------|----------------------------------------------------------------------------###-------------K------------------------------------------------o-----------------

|

| 61 |

-

----------------|----------------------------------------------------------------------------###-------------2---------------------------------o--------------------------------

|

| 62 |

-

-------------oo-|----------------#--USoS-----US--#------------------------------Q-Q----QQ----###----------------------------------UQS------------------------US--------------o--

|

| 63 |

-

----------------|------TT-----K-TT---------------##----t---------------t---------------------###-------B---------------TT----#T----------------------------------------tt-------

|

| 64 |

-

---------------@|------TT-----U-TT-----K---------#---------------------t--------------------####-------B---------------TT----TT----------------------------------------tt-------

|

| 65 |

-

---gg----------g|-gggg-Tt---k-U-T--k-k----k------#-k--kk-----k-----k-gog----kkk---or--------####---k-gog----k-k---gggg-TT---kTT--------------------kgggg--k-k-----ggggott---kkk-

|

| 66 |

-

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX%%%%%-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX--XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 67 |

-

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-|XX--XXXXXXXXX-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 68 |

-

;

|

| 69 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 70 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

|

|

|

| 71 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 72 |

-

|

| 73 |

-

|


| 74 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 75 |

-

|

| 76 |

-

|

| 77 |

-

|

| 78 |

-

|

| 79 |

-

|

| 80 |

-

|

| 81 |

-

|

| 82 |

-

|

| 83 |

-

|

| 84 |

-

|


| 85 |

;

|

| 86 |

-

|

| 87 |

-

|

| 88 |

-

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 89 |

-

|

| 90 |

-

|

| 91 |

-

|

| 92 |

-

|

| 93 |

-

|

| 94 |

-

|

| 95 |

-

|

| 96 |

-

|

| 97 |

-

|

| 98 |

-

|

| 99 |

-

|

| 100 |

-

|

| 101 |

-

|

| 102 |

;

|

| 103 |

-

|

| 104 |

-

|

| 105 |

-

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 106 |

-

|

| 107 |

-

|

| 108 |

-

|

| 109 |

-

|

| 110 |

-

|

| 111 |

-

|

| 112 |

-

|

| 113 |

-

|

| 114 |

-

|

| 115 |

-

|

| 116 |

-

|

| 117 |

-

|

| 118 |

-

|

| 119 |

;

|

| 120 |

-

|

| 121 |

-

|

| 122 |

-

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 123 |

-

|

| 124 |

-

|

| 125 |

-

|

| 126 |

-

|

| 127 |

-

|

| 128 |

-

|

| 129 |

-

|

| 130 |

-

|

| 131 |

-

|

| 132 |

-

|

| 133 |

-

|

| 134 |

-

|

| 135 |

-

|

|

|

|
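In this diff, a line containing only `;` appears after every 16 rows of `samples.lvls` (file lines 17, 34, 51 and so on), which is consistent with eight levels in a 135-line file. Assuming that `;` is the level separator, a batch file could be split into levels as sketched below. This is an illustration and not the project's `save_batch` or loader code; `split_levels` and the tiny sample string are hypothetical.

```python
def split_levels(text: str) -> list[list[str]]:
    """Split a .lvls-style text into levels, each a list of row strings."""
    levels, rows = [], []
    for line in text.splitlines():
        if line.strip() == ";":
            # A lone ';' closes the current level.
            levels.append(rows)
            rows = []
        elif line.strip():
            rows.append(line)
    if rows:
        # The last level has no trailing separator.
        levels.append(rows)
    return levels


# Two toy levels of two rows each; real levels in the diff have 16 rows.
sample = "--o-\nXXXX\n;\n-S--\nXX-X\n"
levels = split_levels(sample)
print(len(levels), [len(lvl) for lvl in levels])
```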

| 1 |

+

----------------------------------------------------------------------------------------------------------S---------------------------------------------------------------------

|

| 2 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 3 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 4 |

+

----------------------------------------------------------------------------------------------------------------------------------------------------------------SSS-------------

|

| 5 |

+

------------------------------oo---------------------------------------------------------------------------------------------------------------o--------------------------------

|

| 6 |

+

--------------oo----------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 7 |

+

-------SS---%%%%%----So----------S--QSSSoS---SS---------------------QQQQQSSS-SSS---------------o-------------------------------U--------------------QS--------------------------

|

| 8 |

+

-------------||-|---------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 9 |

+

-------------||-|--------------------------------------------K---------------------------------------------------------------oo-------------------------------------------------

|

| 10 |

+

-------------||-|--------------------------------------------2---------------------------------------------oo----------------------------------------------------------o--------

|

| 11 |

+

-------QSSS-%%%%|---------------Q#-----------SS-----------------------QQQSSSS@S--------------------------S%%%----------------@S---------------------USSS-----US--------tt-------

|

| 12 |

+

-------------||-|------TT-----K------------------------B------K--------------------------------------------|---------------------------tt----#-------------------------tt----T--

|

| 13 |

+

-------------||-|------TT-----U------------------------B---------------------------------------------------|-----------B---------------Tt----TT------------------------tt----T--

|

| 14 |

+

---t---------||-|-gggg-Tt---k-U-------k-k----------k-gog----k-k----k-------------------------#-------------|------gk---b----k-k---ggg--Tt----kT----k-oog--k-k----------tt---kkk-

|

| 15 |

+

XX%XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXXXXXXXXXXXXXXXXXX-XXXXXXXXXX-XXXXXXXXXXXXXXXXXXXX---%%%%%%%%|----XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 16 |

+

X-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXXXXXXXXXXXXXXXXXX-XXXXXXXXXX-XXXXXXXXXXXXXXXXXXXX----||||||-|----XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 17 |

;

|

| 18 |

+

--------------------------------------------------------------------------k-----------------------------------------------------------------------------------------------------

|

| 19 |

+

---------------------------------------------------------S----------------K-----------------------------------------------------------------------------------------------------

|

| 20 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 21 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 22 |

+

------------------------------oo-------------------------o----------------------------------------------------------------------------------------------------------------------

|

| 23 |

+

---------------------------------------------------------------------------------------------------------------o----------------------------------------------------------------

|

| 24 |

+

-------------------------------------Q----------------SSSSS--SSS----QQQQQ--------------------------------------------------------------QQ-----------SQo-------------------------

|

| 25 |

+

-------------------------------------------------------------------------------o------------------------------------------------------------------------------------------------

|

| 26 |

+

-------------------------------------o---------------------------------------------------------------o---------------------------------------oo--------------U------------------

|

| 27 |

+

-----------------------------------------------------------------T--------------------------------------------------------------------g-----------------------------------------

|

| 28 |

+

------------------------------------USSS-----US------------SSSS--T#--------------------------o------USSS-----@S--------tt-------#-#-####-----@S--------2-----U------------------

|

| 29 |

+

-----------------------tt-----T-TT-------------------------------T#----K---------------t--------T----------------------tt-------###--------------------K--K------------Tt----#--

|

| 30 |

+

-----------------------Tt-----T-T--------------------------------##--------------------t-----TT------------------------tt-------T#-----K---------------B---------------TT----TT-

|

| 31 |

+

---------------o--gggg-Tt---kkk-T--k-k-g--k---------------------TT#----k----k-k---kggg-b----kkg----k------k-------g----tt---kkg-T#-k------k--------k-gog--k-k-----gg---TT----TT-

|

| 32 |

+

XXXXXXXX----XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-------XXX---XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 33 |

+

XXXXXXXX-X-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX---X---XX----XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 34 |

;

|

| 35 |

+

-------K----------------------------------------------------------------------------------k---------------------------------------------------------------------------S---------

|

| 36 |

+

----------------------------------------------------------------------------------------------------------------------------------------------------------------------S---------

|

| 37 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 38 |

+

----------------------------------------------------------------------------------------------------------------SSSSSSSSSSSSSSSS------------------------------------------------

|

| 39 |

+

-----------------------------------------------o----------------------------------------------------------------S--------------S------------------------------------------------

|

| 40 |

+

-------------ooo------------------------------------------------------------------------------------------------SSS------ooo---S----------------------------------o-------------

|

| 41 |

+

----------%%%%%%-%-------------U----------------SSoSSSSSS----SSS--------------------QSQQQ----SSS------------------SSSSSSSSSSSSSS----QQSSSSS------------SS-SSSS---S%--------SS---

|

| 42 |

+

-----------||||--|------------------------------------------------------------------------------------------------------------------------------------------------|----TT-------

|

| 43 |

+

-----------||||--|-----------oo----------------------------------------------K-----------------------o---------------------------------------oo-------------------|----TT-------

|

| 44 |

+

---------ooo|||--|--------------------------------------------oo-------------2------------------------------------------------------------------------------------|----TT---SS--

|

| 45 |

+

------%%%%%%%%K--|-----------@o-----------------QSQSS---------SS--------------------Q--------USS----USSS----S@S-----------------------------S@S-------------S@S---|---###--S----

|

| 46 |

+

-------||||||-o--|-----t---------------TT----TT------------------------B------------------------T-----------------------------------------------------------------|--------S----

|

| 47 |

+

-------||||||%%%-|-----t---------------TT----TT------------------------B------------------------------------------------t-----------------------------------------|--------S----

|

| 48 |

+

---r---||||||-|--|kk---b-----gkg--gggg-TT---kTT-----ggg------------k-gog----k-k----k--------k------k------k-----T------tt------g--kggk--------------kk---------k--|-------oy----

|

| 49 |

+

%%%%%%%%%%%||-|-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXXXXXXXX--XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXXXXXXXXXXXXXXXXX-SS--SXX-X--

|

| 50 |

+

-|||||||||-||-|-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXXXXXXXX-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXXXXXXXXXXXXXXXXX---------X-X

|

| 51 |

;

|

| 52 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 53 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 54 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 55 |

+

----------------------------------------------------------------SSSS------------------------------------------------------------------------------------------------------------

|


| 56 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 57 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 58 |

+

--------------------------------------SS-----S------QQ------------------------------Q---QS---SSS----SQo-----------------------------QS----------------------------oo------------

|

| 59 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 60 |

+

-------------------------------------------------------------------------------------------------------------U------------------------------------------------------------------

|

| 61 |

+

-----------------------------------------------------------------------o---------o---------------------------------------------------------------------o------------------------

|

| 62 |

+

------------------------------------oo-------o-------2-------U---------tt-------Q#QQQ---Q----U---------2-----U----------------------USoS-----US--------tt----o---------------SSS

|

| 63 |

+

-----------------------tt----TT------------------------K---------------tt----T-------------------------K--K------------Tt----#-------------------------tt----#------------------

|

| 64 |

+

-----------------------Tt----TT------------------------B---------------tt----TT--------------#---------B---------------TT----TT------------------------tt----TT-----------------

|

| 65 |

+

--kk--------------gggg-Tt---kkT--------------------k-k-b----k-----g----tt---kkT---ok--------k------k-gog----k-----g----TT----TT----k-kog--k-k----------tt----k------------k-----

|

| 66 |

+

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXX

|

| 67 |

+

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX-XXXX

|

| 68 |

+

;

|

| 69 |

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 70 |

+

-------------------------------------------------------------K-K-------------------------------------------------SS-------------------------------------------------------------

|

| 71 |

+

---------------------------------------------------------------o----------------------------------------------------------------------------------------------------------------

|

| 72 |

+

------------------------------%%-----------------------------%%t------------------------------------------------SSSSSSSSSSSSSSSS------------------------------------------------

|

| 73 |

+

------------------------------||-----------------------------||o------------------------------------------------S---SSS------S-------------------------------------------------o

|

| 74 |

+

------------------------------||-----------------------------||%------------------------------------------------SSSSSSSSSSSoSSSS------------------------------------------------

|

| 75 |

+

------------------------S-----|o---------------U------%%%%%--|||S--SSS-------SSS--------------------------------SSSSSSSSSSSSSSSS-----QQ---------%---SS--------------------------

|

| 76 |

+

------------------------------||-----------------------|||---|||--------------------------------------------------------------------------------|-------------------------------

|

| 77 |

+

------------------------------||-----o-------oo--------|||---|||-----------------------------K--------------------------------------------------|------------Ko-----------------

|

| 78 |

+

------------------------------||-----------------------|||-o-SS|-g----------------------------------------------ooooooo--------S----------------|-------------------------------

|

| 79 |

+

---------T--------------------||-----S-------@S--------|||%%%--|QSQSSSSSSSS-S@S%-------------U---------------U--SSSSSSS--------S-#--------------|----S-------US-----------------

|

| 80 |

+

---------TT---TT--------------||----------K------------|||-|---|---------------|-----------------------BB----#-------------------------t--------|------K--K------------TT----TT-

|

| 81 |

+

--B------TT---TT--------------||-------B---------------|||-|---|---------------|-----------------------Tt----TT---------------------------------|------B---------------TT----TT-

|

| 82 |

+

--b------TT---TT--------------|r--gk---b----k-k--------|||-|---r---k------k----|--kk---k----kkk---ggg--Tt---kkg------ggg-------g---k--kk----k---|--k-gog--k-k-----gggg-TT---kTT-

|

| 83 |

+

XXXXXXXXXXXXXXXX---------X---X%%XXXXXXXXXXXXXXXX-------|||-|%%%%XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 84 |

+

XXXXXXXXXXXXXXXX--------XX---X|XXXXXXXXXXXXXXXXX-------|||-|-||-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 85 |

;

|

| 86 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 87 |

+

--------------------------------------------------------------------------------------------------K-----------------------------------------------------------------------------

|

| 88 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 89 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 90 |

+

-----------------------------------------------------------------------------------------------o-------------------------------o------------------------------------------------

|

| 91 |

+

-------------------------------------------------------------------------------o---------------------------------------------------------------------------------ooo-----ooooo--

|

| 92 |

+

-------QQ-----------Q-QQQ------------SSSQS---SSS----SQQ----------------------%%%----QQQ------------------------2------SSSS-----------Qo-------------QQQQSSS--S--%%--------------

|

| 93 |

+

------------------------------------------------------------------------------|------------------B---------------------------o----------------------------------||--------------

|

| 94 |

+

-------------------------------------------------------K----------------------|------------------------------------------------------------------------------oo-||--------------

|

| 95 |

+

------------------------------------------------------------------------------|-----------------o-o-------------------------------------------------------------||--------------

|

| 96 |

+

-------------------#------------------Q--------------2-2-----U---------------oo-----------------------------------------------------------------------QQQ---S@S-||---2---%%%----

|

| 97 |

+

--------------------------------------t----------------K--K-------------------|--------@----------T------o-----------------------------B------------------------||--------|-----

|

| 98 |

+

--------------------------------------t------#---------B----------------------|--------tt---------TT----TT-----------------------------B------------------------||-----K--|----T

|

| 99 |

+

------------k------k--------k--------kk------#-----k-gog----k-k---------------##---ygg-tt---k-----TT----TTT-k--------kk------------k-gog----kkk----k-k------k---|g-ggg-b--|----o

|

| 100 |

+

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX------%XXXX-XXX-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX--XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 101 |

+

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX------|-XXXXXXX-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX--XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 102 |

;

|

| 103 |

+

------------------------------------------------------------------------------------------------------------------------------------------S-------------------------------------

|

| 104 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 105 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 106 |

+

------------------------------------------------------------------------------------------------SSSSSSS---------SS----S---SSSSSS------------------------------------------------

|

| 107 |

+

-------------------------------------------------------------------------------------------------------------------------------------------------------------------------------o

|

| 108 |

+

-----------------------------------------------------------------------------------------------o--------------------------------------------------------------------------------

|

| 109 |

+

S----SQQQ-----------------------SSSSSSSS-----SSS------------------------------------------------------------------------------------------------%---SS--------------------------

|

| 110 |

+

------------------------------------------------------------------------------------------------------------------------------------------------|-------------------------------

|

| 111 |

+

---------------------------------------------o---------------K-----------------------o------------------------------------------------TU--------|----o-------oo-----------------

|

| 112 |

+

-----------------------o--------o----------------------------2-------------------------------------------------------S----------------T------o--|-------------------------------

|

| 113 |

+

-SSS-SSSSS--SU---------tt--------------------@SS-----------------------------U------USSS-----US--------t-----------------------------S@#--------|----SS------@S--------------o--

|

| 114 |

+

-----------------------tt----T-------------------------B---------------TT----#--T----------------------tt----------------T------S---------------|----------------------TT----#--

|

| 115 |

+

-----------------------tt----TT------------------------B---------------TT----TT-----------------------ttt----T----------TTT---------------K-----|----------------------TT----TT-

|

| 116 |

+

---k--g-----------ggg--tt---kkT----kgggg--k-k------k-gog----kkk---ggg--TT---kTT----k------k-----------ttt---kk----------TTT---------------U-----|--k-g-g--k-k-----gggg-TT---kTg-

|

| 117 |

+

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX---XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 118 |

+

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX---XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

|

| 119 |

;

|

| 120 |

+

---------------------------------------------------------------------------------------------------K--S---S---------------------------------------------------------------------

|

| 121 |

+

--------------------------------------------------------------------------------------------------SS----------------------------------------------------------------------------

|

| 122 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 123 |

+

--------------------------------------------------------------------------------------------------------------ooSS--------------------------------------------------------------

|

| 124 |

+

--------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

|

| 125 |

+

-----------------------------------------------------------------o--------------------------------------------------------------------------------o------------o----------------

|

| 126 |

+

-------------------------------------Q--------------QQQQQS------------------------------oo-----U----------------------SSSS----to----------------%%%-----------------QQ---------U

|

| 127 |

+

-------------------------------------------------------------------------------------------------------o----------------------K------------------|------------------------------

|

| 128 |

+

-----------------------------------------------------------------------------K---------------o-----------------------------------------------K---|----------------------------o-

|

| 129 |

+

-----gg----------------------------------------------------------------------U-------------------------------SS------------------------------U---|------------------------------

|

| 130 |

+

Q-#####-----------------------------USSS-----US--------QQ-----------------------------------S@S-------------------------------Q------------------|---------------------------US-

|

| 131 |

+

-----------------------tt----TT-TT-------------------------------------B------K----------------------------------------------##--------B------K--|------------------------------

|

| 132 |

+

-----------------------Tt----TT-T--------------------------------------B----------------------------------------------------###--------B---------|------------T-----------------

|

| 133 |

+

------------k-----gggg-Tt---kkT-T--k-k-g--k--------------------k---k-gog----k-k----k-------------------------r--------------##-----k-gog----k-k--|-----------oro--gggg-g----k---

|

| 134 |

+

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX--XX----XX--XXXX--------%%%%%%%%XXXXXXXXXX--XXXXXXXXXXXXXXXXXXXX-|XXXXXXXXXXX%%%XXXXXXXXXXXXXXXX

|

| 135 |

+

XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX--XX---XXX--XXXX---------||||||-XXXXXXXXXX-XXXXXXXXXXXXXXXXXXXXX-|XXXX-X-XXX--|-XXXXXXXXXXXXXXXX

|

models/example_policy/samples.png

CHANGED

|

|

plots.py

DELETED

|

@@ -1,733 +0,0 @@

|

|

| 1 |

-

import glob

|

| 2 |

-

import json

|

| 3 |

-

import os

|

| 4 |

-

import re

|

| 5 |

-

|

| 6 |

-

import numpy as np

|

| 7 |

-

import pandas as pds

|

| 8 |

-

import matplotlib

|

| 9 |

-

import matplotlib.pyplot as plt

|

| 10 |

-

from math import sqrt

|

| 11 |

-

import torch

|

| 12 |

-

from root import PRJROOT

|

| 13 |

-

from sklearn.manifold import TSNE

|

| 14 |

-

from itertools import product, chain

|

| 15 |

-

# from src.drl.drl_uses import load_cfgs

|

| 16 |

-

from src.gan.gankits import get_decoder, process_onehot

|

| 17 |

-

from src.gan.gans import nz

|

| 18 |

-

from src.smb.level import load_batch, hamming_dis, lvlhcat

|

| 19 |

-

from src.utils.datastruct import RingQueue

|

| 20 |

-

from src.utils.filesys import load_dict_json, getpath

|

| 21 |

-

from src.utils.img import make_img_sheet

|

| 22 |

-

from torch.distributions import Normal

|

| 23 |

-

|

| 24 |

-

matplotlib.rcParams["axes.formatter.limits"] = (-5, 5)

|

| 25 |

-

|

| 26 |

-

|

| 27 |

-

def print_compare_tab():

|

| 28 |

-

rand_lgp, rand_fhp, rand_divs = load_dict_json(

|

| 29 |

-

'test_data/rand_policy/performance.csv', 'lgp', 'fhp', 'diversity'

|

| 30 |

-

)

|

| 31 |

-

rand_performance = {'lgp': rand_lgp, 'fhp': rand_fhp, 'diversity': rand_divs}

|

| 32 |

-

|

| 33 |

-

def _print_line(_data, minimise=False):

|

| 34 |

-

means = _data.mean(axis=-1)

|

| 35 |

-

stds = _data.std(axis=-1)

|

| 36 |

-

max_i, min_i = np.argmax(means), np.argmin(means)

|

| 37 |

-

mean_str_content = [*map(lambda x: '%.4g' % x, _data.mean(axis=-1))]

|

| 38 |

-

std_str_content = [*map(lambda x: '$\pm$%.3g' % x, _data.std(axis=-1))]

|

| 39 |

-

if minimise:

|

| 40 |

-

mean_str_content[min_i] = r'\textbf{%s}' % mean_str_content[min_i]

|

| 41 |

-

mean_str_content[max_i] = r'\textit{%s}' % mean_str_content[max_i]

|

| 42 |

-

std_str_content[min_i] = r'\textbf{%s}' % std_str_content[min_i]

|

| 43 |

-

std_str_content[max_i] = r'\textit{%s}' % std_str_content[max_i]

|

| 44 |

-

else:

|

| 45 |

-

mean_str_content[max_i] = r'\textbf{%s}' % mean_str_content[max_i]

|

| 46 |

-

mean_str_content[min_i] = r'\textit{%s}' % mean_str_content[min_i]

|

| 47 |

-

std_str_content[max_i] = r'\textbf{%s}' % std_str_content[max_i]

|

| 48 |

-

std_str_content[min_i] = r'\textit{%s}' % std_str_content[min_i]

|

| 49 |

-

print(' &', ' & '.join(mean_str_content), r'\\')

|

| 50 |

-

print(' & &', ' & '.join(std_str_content), r'\\')

|

| 51 |

-

pass

|

| 52 |

-

|

| 53 |

-

def _print_block(_task):

|

| 54 |

-

fds = [

|

| 55 |

-

f'sac/{_task}', f'egsac/{_task}', f'asyncsac/{_task}',

|

| 56 |

-

f'pmoe/{_task}', f'dvd/{_task}', f'sunrise/{_task}',

|

| 57 |

-

f'varpm-{_task}/l0.0_m5', f'varpm-{_task}/l0.1_m5', f'varpm-{_task}/l0.2_m5',

|

| 58 |

-

f'varpm-{_task}/l0.3_m5', f'varpm-{_task}/l0.4_m5', f'varpm-{_task}/l0.5_m5'

|

| 59 |

-

]

|

| 60 |

-

rewards, divs = [], []

|

| 61 |

-

for fd in fds:

|

| 62 |

-

rewards.append([])

|

| 63 |

-

divs.append([])

|

| 64 |

-

# print(getpath())

|

| 65 |

-

for path in glob.glob(getpath('test_data', fd, '**', 'performance.csv'), recursive=True):

|

| 66 |

-

reward, div = load_dict_json(path, 'reward', 'diversity')

|

| 67 |

-

rewards[-1].append(reward)

|

| 68 |

-

divs[-1].append(div)

|

| 69 |

-

rewards = np.array(rewards)

|

| 70 |

-

divs = np.array(divs)

|

| 71 |

-

|

| 72 |

-

print(' & \\multirow{2}{*}{Reward}')

|

| 73 |

-

_print_line(rewards)

|

| 74 |

-

print(' \\cline{2-14}')

|

| 75 |

-

print(' & \\multirow{2}{*}{Diversity}')

|

| 76 |

-

_print_line(divs)

|

| 77 |

-

print(' \\cline{2-14}')

|

| 78 |

-

print(' & \\multirow{2}{*}{G-mean}')

|

| 79 |

-

gmean = np.sqrt(rewards * divs)

|

| 80 |

-

_print_line(gmean)

|

| 81 |

-

|

| 82 |

-

print(' \\cline{2-14}')

|

| 83 |

-

print(' & \\multirow{2}{*}{N-rank}')

|

| 84 |

-

r_rank = np.zeros_like(rewards.flatten())

|

| 85 |

-

r_rank[np.argsort(-rewards.flatten())] = np.linspace(1, len(r_rank), len(r_rank))

|

| 86 |

-

|

| 87 |

-

d_rank = np.zeros_like(divs.flatten())

|

| 88 |

-

d_rank[np.argsort(-divs.flatten())] = np.linspace(1, len(r_rank), len(r_rank))

|

| 89 |

-

n_rank = (r_rank.reshape([12, 5]) + d_rank.reshape([12, 5])) / (2 * 5)

|

| 90 |

-

_print_line(n_rank, True)

|

| 91 |

-

|

| 92 |

-

print(' \\multirow{8}{*}{MarioPuzzle}')

|

| 93 |

-

_print_block('fhp')

|

| 94 |

-

print(' \\midrule')

|

| 95 |

-

print(' \\multirow{8}{*}{MultiFacet}')

|

| 96 |

-

_print_block('lgp')

|

| 97 |

-

pass

|

| 98 |

-

|

| 99 |

-

def print_compare_tab_nonrl():

|

| 100 |

-

# rand_lgp, rand_fhp, rand_divs = load_dict_json(

|

| 101 |

-

# 'test_data/rand_policy/performance.csv', 'lgp', 'fhp', 'diversity'

|

| 102 |

-

# )

|

| 103 |

-

# rand_performance = {'lgp': rand_lgp, 'fhp': rand_fhp, 'diversity': rand_divs}

|

| 104 |

-

|

| 105 |

-

def _print_line(_data, minimise=False):

|

| 106 |

-

means = _data.mean(axis=-1)

|

| 107 |

-

stds = _data.std(axis=-1)

|

| 108 |

-

max_i, min_i = np.argmax(means), np.argmin(means)

|

| 109 |

-

mean_str_content = [*map(lambda x: '%.4g' % x, _data.mean(axis=-1))]

|

| 110 |

-

std_str_content = [*map(lambda x: '$\pm$%.3g' % x, _data.std(axis=-1))]

|

| 111 |

-

if minimise:

|

| 112 |

-

mean_str_content[min_i] = r'\textbf{%s}' % mean_str_content[min_i]

|

| 113 |

-

mean_str_content[max_i] = r'\textit{%s}' % mean_str_content[max_i]

|

| 114 |

-

std_str_content[min_i] = r'\textbf{%s}' % std_str_content[min_i]

|

| 115 |

-

std_str_content[max_i] = r'\textit{%s}' % std_str_content[max_i]

|

| 116 |

-

else:

|

| 117 |

-

mean_str_content[max_i] = r'\textbf{%s}' % mean_str_content[max_i]

|

| 118 |

-

mean_str_content[min_i] = r'\textit{%s}' % mean_str_content[min_i]

|

| 119 |

-

std_str_content[max_i] = r'\textbf{%s}' % std_str_content[max_i]

|

| 120 |

-

std_str_content[min_i] = r'\textit{%s}' % std_str_content[min_i]

|

| 121 |

-

print(' &', ' & '.join(mean_str_content), r'\\')

|

| 122 |

-

print(' & &', ' & '.join(std_str_content), r'\\')

|

| 123 |

-

pass

|

| 124 |

-

|

| 125 |

-

def _print_block(_task):

|

| 126 |

-

fds = [

|

| 127 |

-

f'GAN-{_task}', f'DDPM-{_task}',

|

| 128 |

-

f'varpm-{_task}/l0.0_m5', f'varpm-{_task}/l0.1_m5', f'varpm-{_task}/l0.2_m5',

|

| 129 |

-

f'varpm-{_task}/l0.3_m5', f'varpm-{_task}/l0.4_m5', f'varpm-{_task}/l0.5_m5'

|

| 130 |

-

]

|

| 131 |

-

rewards, divs = [], []

|

| 132 |

-

for fd in fds:

|

| 133 |

-

rewards.append([])

|

| 134 |

-

divs.append([])

|

| 135 |

-

# print(getpath())

|

| 136 |

-

for path in glob.glob(getpath('test_data', fd, '**', 'performance.csv'), recursive=True):

|

| 137 |

-

reward, div = load_dict_json(path, 'reward', 'diversity')

|

| 138 |

-

rewards[-1].append(reward)

|

| 139 |

-

divs[-1].append(div)

|

| 140 |

-

rewards = np.array(rewards)

|

| 141 |

-

divs = np.array(divs)

|

| 142 |

-

|

| 143 |

-

print(' & \\multirow{2}{*}{Reward}')

|

| 144 |

-

_print_line(rewards)

|

| 145 |

-

print(' \\cline{2-10}')

|

| 146 |

-

print(' & \\multirow{2}{*}{Diversity}')

|

| 147 |

-

_print_line(divs)

|

| 148 |

-

print(' \\cline{2-10}')

|

| 149 |

-

# print(' & \\multirow{2}{*}{G-mean}')

|

| 150 |

-

# gmean = np.sqrt(rewards * divs)

|

| 151 |

-

# _print_line(gmean)

|

| 152 |

-

#

|

| 153 |

-

# print(' \\cline{2-10}')

|

| 154 |

-

# print(' & \\multirow{2}{*}{N-rank}')

|

| 155 |

-

# r_rank = np.zeros_like(rewards.flatten())

|

| 156 |

-

# r_rank[np.argsort(-rewards.flatten())] = np.linspace(1, len(r_rank), len(r_rank))

|

| 157 |

-

#

|

| 158 |

-

# d_rank = np.zeros_like(divs.flatten())

|

| 159 |

-

# d_rank[np.argsort(-divs.flatten())] = np.linspace(1, len(r_rank), len(r_rank))

|

| 160 |

-

# n_rank = (r_rank.reshape([8, 5]) + d_rank.reshape([8, 5])) / (2 * 5)

|

| 161 |

-

# _print_line(n_rank, True)

|

| 162 |

-

|

| 163 |

-

print(' \\multirow{4}{*}{MarioPuzzle}')

|

| 164 |

-

_print_block('fhp')

|

| 165 |

-

print(' \\midrule')

|

| 166 |

-

print(' \\multirow{4}{*}{MultiFacet}')

|

| 167 |

-

_print_block('lgp')

|

| 168 |

-

pass

|

| 169 |

-

|

| 170 |

-

def plot_cmp_learning_curves(task, save_path='', title=''):

|

| 171 |

-

plt.style.use('seaborn')

|

| 172 |

-

colors = [plt.plot([0, 1], [-1000, -1000])[0].get_color() for _ in range(6)]

|

| 173 |

-

plt.cla()

|

| 174 |

-

plt.style.use('default')

|

| 175 |

-

|

| 176 |

-

# colors = ('#5D2CAB', '#005BD4', '#007CE4', '#0097DD', '#00ADC4', '#00C1A5')

|

| 177 |

-

def _get_algo_data(fd):

|

| 178 |

-

res = []

|

| 179 |

-

for i in range(1, 6):

|

| 180 |

-

path = getpath(fd, f't{i}', 'step_tests.csv')

|

| 181 |

-

try:

|

| 182 |

-

data = pds.read_csv(path)

|

| 183 |

-

trajectory = [

|

| 184 |

-

[float(item['step']), float(item['r-avg']), float(item['diversity'])]

|

| 185 |

-

for _, item in data.iterrows()

|

| 186 |

-

]

|

| 187 |

-

trajectory.sort(key=lambda x: x[0])

|

| 188 |

-

res.append(trajectory)

|

| 189 |

-

if len(trajectory) != 26:

|

| 190 |

-

print('Not complete (%d)/26:' % len(trajectory), path)

|

| 191 |

-

except FileNotFoundError:

|

| 192 |

-

print(path)

|

| 193 |

-

res = np.array(res)

|

| 194 |

-

# rdsum = res[:, :, 1] + res[:, :, 2]

|

| 195 |

-

gmean = np.sqrt(res[:, :, 1] * res[:, :, 2])

|

| 196 |

-

steps = res[0, :, 0]

|

| 197 |

-

# r_avgs = np.mean(res[:, :, 1], axis=0)

|

| 198 |

-

# r_stds = np.std(res[:, :, 1], axis=0)

|

| 199 |

-

# divs = np.mean(res[:, :, 2], axis=0)

|

| 200 |

-

# div_std = np.std(res[:, :, 2], axis=0)

|

| 201 |

-

_performances = {

|

| 202 |

-

'reward': (np.mean(res[:, :, 1], axis=0), np.std(res[:, :, 1], axis=0)),

|

| 203 |

-

'diversity': (np.mean(res[:, :, 2], axis=0), np.std(res[:, :, 2], axis=0)),

|

| 204 |

-

# 'rdsum': (np.mean(rdsum, axis=0), np.std(rdsum, axis=0)),

|

| 205 |

-

'gmean': (np.mean(gmean, axis=0), np.std(gmean, axis=0)),

|

| 206 |

-

}

|

| 207 |

-

# print(_performances['gmean'])

|

| 208 |

-

return steps, _performances

|

| 209 |

-

|

| 210 |

-

def _plot_criterion(_ax, _criterion):

|

| 211 |

-

i, j, k = 0, 0, 0

|

| 212 |

-

for algo, (steps, _performances) in performances.items():

|

| 213 |

-

avgs, stds = _performances[_criterion]

|

| 214 |

-

if '\lambda' in algo:

|

| 215 |

-

ls = '-'

|

| 216 |

-

_c = colors[i]

|

| 217 |

-

i += 1

|

| 218 |

-

elif algo in {'SAC', 'EGSAC', 'ASAC'}:

|

| 219 |

-

ls = ':'

|

| 220 |

-

_c = colors[j]

|

| 221 |

-

j += 1

|

| 222 |

-

else:

|

| 223 |

-

ls = '--'

|

| 224 |

-

_c = colors[j]

|

| 225 |

-

j += 1

|

| 226 |

-

_ax.plot(steps, avgs, color=_c, label=algo, ls=ls)

|

| 227 |

-

_ax.fill_between(steps, avgs - stds, avgs + stds, color=_c, alpha=0.15)

|

| 228 |

-

_ax.grid(False)

|

| 229 |

-

# plt.plot(steps, avgs, label=algo)

|

| 230 |

-

# plt.plot(_performances, label=algo)

|

| 231 |

-

pass

|

| 232 |

-

_ax.set_xlabel('Time step')

|

| 233 |

-

|

| 234 |

-

fig, ax = plt.subplots(1, 3, figsize=(9.6, 3.2), dpi=250, width_ratios=[1, 1, 1])

|

| 235 |

-

# fig, (ax1, ax2) = plt.subplots(1, 2, figsize=(5, 4), dpi=256)

|

| 236 |

-

# fig, ax1 = plt.subplots(1, 1, figsize=(8, 3), dpi=256)

|

| 237 |

-

# ax2 = ax1.twinx()

|

| 238 |

-

# fig = plt.plot(figsize=(4, 3), dpi=256)

|

| 239 |

-

performances = {

|

| 240 |

-

'SUNRISE': _get_algo_data(f'test_data/sunrise/{task}'),

|

| 241 |

-

'$\lambda$=0.0': _get_algo_data(f'test_data/varpm-{task}/l0.0_m5'),

|

| 242 |

-

'DvD': _get_algo_data(f'test_data/dvd/{task}'),

|

| 243 |

-

'$\lambda$=0.1': _get_algo_data(f'test_data/varpm-{task}/l0.1_m5'),

|

| 244 |

-

'PMOE': _get_algo_data(f'test_data/pmoe/{task}'),

|

| 245 |

-

'$\lambda$=0.2': _get_algo_data(f'test_data/varpm-{task}/l0.2_m5'),

|

| 246 |

-

'SAC': _get_algo_data(f'test_data/sac/{task}'),

|

| 247 |

-

'$\lambda$=0.3': _get_algo_data(f'test_data/varpm-{task}/l0.3_m5'),

|

| 248 |

-

'EGSAC': _get_algo_data(f'test_data/egsac/{task}'),

|

| 249 |

-

'$\lambda$=0.4': _get_algo_data(f'test_data/varpm-{task}/l0.4_m5'),

|

| 250 |

-

'ASAC': _get_algo_data(f'test_data/asyncsac/{task}'),

|

| 251 |

-

'$\lambda$=0.5': _get_algo_data(f'test_data/varpm-{task}/l0.5_m5'),

|

| 252 |

-

}

|

| 253 |

-

# _plot_algo(*_get_algo_data(glob.glob(getpath('test_data/SAC', '**', 'step_tests.csv'))), 'SAC')

|

| 254 |

-

# _plot_algo(*_get_algo_data(glob.glob(getpath('test_data/EGSAC', '**', 'step_tests.csv'))), 'EGSAC')

|

| 255 |

-

# _plot_algo(*_get_algo_data(glob.glob(getpath('test_data/AsyncSAC', '**', 'step_tests.csv'))), 'AsyncSAC')

|

| 256 |

-

# _plot_algo(*_get_algo_data(glob.glob(getpath('test_data/SUNRISE', '**', 'step_tests.csv'))), 'SUNRISE')

|

| 257 |

-

# _plot_algo(*_get_algo_data(glob.glob(getpath('test_data/DvD-ES', '**', 'step_tests.csv'))), 'DvD-ES')

|

| 258 |

-

# _plot_algo(*_get_algo_data(glob.glob(getpath('test_data/lbd-m-crosstest/l0.04_m5', '**', 'step_tests.csv'))), 'NCESAC')

|

| 259 |

-

|

| 260 |

-

|

| 261 |

-

|

| 262 |

-

_plot_criterion(ax[0], 'reward')

|

| 263 |

-

_plot_criterion(ax[1], 'diversity')

|

| 264 |

-

# _plot_criterion(ax[2], 'rdsum')

|

| 265 |

-

_plot_criterion(ax[2], 'gmean')

|

| 266 |

-

# ax[0].set_title(f'{title} reward')

|

| 267 |

-

ax[0].set_title(f'Cumulative Reward')

|

| 268 |

-

ax[1].set_title('Diversity Score')

|

| 269 |

-

# ax[2].set_title('Summation')

|

| 270 |

-

ax[2].set_title('G-mean')

|

| 271 |

-

# plt.title(title)

|

| 272 |

-

|

| 273 |

-

lines, labels = fig.axes[-1].get_legend_handles_labels()

|

| 274 |

-

fig.suptitle(title, fontsize=14)

|

| 275 |

-

plt.tight_layout(pad=0.5)

|

| 276 |

-

if save_path:

|

| 277 |

-

plt.savefig(getpath(save_path))

|

| 278 |

-

else:

|

| 279 |

-

plt.show()

|

| 280 |

-

|

| 281 |

-

plt.cla()

|

| 282 |

-

plt.figure(figsize=(9.6, 2.4), dpi=250)

|

| 283 |

-

plt.grid(False)

|

| 284 |

-

plt.axis('off')

|

| 285 |

-

plt.yticks([1.0])

|

| 286 |

-

plt.legend(

|

| 287 |

-

lines, labels, loc='lower center', ncol=6, edgecolor='white', fontsize=15,

|

| 288 |

-

columnspacing=0.8, borderpad=0.16, labelspacing=0.2, handlelength=2.4, handletextpad=0.3

|

| 289 |

-

)

|

| 290 |

-

plt.tight_layout(pad=0.5)

|

| 291 |

-

plt.show()

|

| 292 |

-

pass

|

| 293 |

-

|

| 294 |

-

def plot_crosstest_scatters(rfunc, xrange=None, yrange=None, title=''):

|

| 295 |

-

def get_pareto():

|

| 296 |

-

all_points = list(chain(*scatter_groups.values())) + cmp_points

|

| 297 |

-

res = []

|

| 298 |

-

for p in all_points:

|

| 299 |

-

non_dominated = True

|

| 300 |

-

for q in all_points:

|

| 301 |

-

if q[0] >= p[0] and q[1] >= p[1] and (q[0] > p[0] or q[1] > p[1]):

|

| 302 |

-