
Runtime error


Commit 102e437

Parent(s): 2ce0924

add food ner gradio app

- .gitignore +1 -0

- app.py +68 -0

- app_images/featured.jpg +0 -0

- app_images/salt_butter_new.png +0 -0

- app_images/salt_butter_old.png +0 -0

- app_images/sodium.jpg +0 -0

- app_text/blog_text.md +66 -0

.gitignore

ADDED


@@ -0,0 +1 @@

```
.DS_Store
```

app.py

ADDED


@@ -0,0 +1,68 @@


| 1 |

+

import gradio as gr

|

| 2 |

+

from transformers import AutoTokenizer, AutoModelForTokenClassification, pipeline

|

| 3 |

+

|

| 4 |

+

|

| 5 |

+

def convert_hf_ents_to_gradio(hf_ents):

|

| 6 |

+

gradio_ents = []

|

| 7 |

+

for hf_ent in hf_ents:

|

| 8 |

+

gradio_ent = {"start" : hf_ent['start'], "end": hf_ent['end'], "entity": hf_ent['entity_group']}

|

| 9 |

+

gradio_ents.append(gradio_ent)

|

| 10 |

+

return gradio_ents

|

| 11 |

+

|

| 12 |

+

|

| 13 |

+

def tag(text):

|

| 14 |

+

hf_ents = nlp(text, aggregation_strategy="first")

|

| 15 |

+

gradio_ents = convert_hf_ents_to_gradio(hf_ents)

|

| 16 |

+

doc ={"text": text,

|

| 17 |

+

"entities": gradio_ents}

|

| 18 |

+

return doc

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

if __name__ == "__main__":

|

| 22 |

+

model_ckpt = "carolanderson/roberta-base-food-ner"

|

| 23 |

+

model = AutoModelForTokenClassification.from_pretrained(model_ckpt)

|

| 24 |

+

tokenizer = AutoTokenizer.from_pretrained("roberta-base", add_prefix_space=True)

|

| 25 |

+

nlp = pipeline("ner", model=model, tokenizer=tokenizer)

|

| 26 |

+

|

| 27 |

+

with open("app_text/blog_text.md", "r") as f:

|

| 28 |

+

blog_text = f.read()

|

| 29 |

+

|

| 30 |

+

examples=[

|

| 31 |

+

["Saute the onions in olive oil until browned."],

|

| 32 |

+

["Add bourbon and sweet vermouth to the shaker."],

|

| 33 |

+

["Salt the water and butter the bread."],

|

| 34 |

+

["Add salt to the water and spread butter on the bread."]]

|

| 35 |

+

|

| 36 |

+

with gr.Blocks() as demo:

|

| 37 |

+

gr.Markdown("# Extracting Food Mentions from Text")

|

| 38 |

+

html = ("<div style='max-width:100%; max-height:200px; overflow:auto'>"

|

| 39 |

+

+ "<img src='file=app_images/featured.jpg' alt='Cookbook'>"

|

| 40 |

+

+ "</div>"

|

| 41 |

+

)

|

| 42 |

+

gr.HTML(html)

|

| 43 |

+

gr.Markdown("This is a model I trained to extract food terms from text. "

|

| 44 |

+

"I fine tuned RoBERTa base on a dataset I created by labeling a set of recipes.")

|

| 45 |

+

gr.Markdown("Details about the training data and training process are below.")

|

| 46 |

+

with gr.Row():

|

| 47 |

+

inp = gr.Textbox(placeholder="Enter text here...", lines=4)

|

| 48 |

+

out = gr.HighlightedText()

|

| 49 |

+

btn = gr.Button("Run")

|

| 50 |

+

gr.Examples(examples, inp)

|

| 51 |

+

btn.click(fn=tag, inputs=inp, outputs=out)

|

| 52 |

+

gr.Markdown(blog_text)

|

| 53 |

+

html_2 = ("<div style='max-width:100%; max-height:50px; overflow:auto'>"

|

| 54 |

+

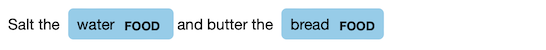

+ "<img src='file=app_images/salt_butter_old.png' alt='Butter and Salt (old model)'>"

|

| 55 |

+

+ "</div>"

|

| 56 |

+

)

|

| 57 |

+

gr.HTML(html_2)

|

| 58 |

+

gr.Markdown("I speculated then that these kinds of errors could probably be reduced by using"

|

| 59 |

+

" contextual word embeddings, such as ELMo or BERT embeddings, or by using BERT itself "

|

| 60 |

+

"(fine-tuning it on the NER task)."

|

| 61 |

+

" That turned out to be true -- the current, RoBERTa model correctly handles these cases:")

|

| 62 |

+

html_3 = ("<div style='max-width:100%; max-height:50px; overflow:auto'>"

|

| 63 |

+

+ "<img src='file=app_images/salt_butter_new.png' alt='Butter and Salt (new model)'>"

|

| 64 |

+

+ "</div>"

|

| 65 |

+

)

|

| 66 |

+

gr.HTML(html_3)

|

| 67 |

+

|

| 68 |

+

demo.launch()

|
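To make the glue between the two libraries concrete, here is the conversion helper run on hand-written input. The entity dicts below are hypothetical, not real model output: they only mimic the `entity_group`, `score`, `start`, and `end` keys that an aggregated `"ner"` pipeline returns (the real output also carries a `word` key), and the `FOOD` label and scores are illustrative. The resulting dict is the shape `tag()` hands to `gr.HighlightedText`.

```python
def convert_hf_ents_to_gradio(hf_ents):
    """Same logic as the helper in app.py: keep offsets and the entity label."""
    return [
        {"start": ent["start"], "end": ent["end"], "entity": ent["entity_group"]}
        for ent in hf_ents
    ]


text = "Saute the onions in olive oil until browned."
# Hypothetical aggregated pipeline output (hand-written, not from the model).
hf_ents = [
    {"entity_group": "FOOD", "score": 0.99, "start": 10, "end": 16},
    {"entity_group": "FOOD", "score": 0.98, "start": 20, "end": 29},
]
doc = {"text": text, "entities": convert_hf_ents_to_gradio(hf_ents)}

for ent in doc["entities"]:
    print(text[ent["start"]:ent["end"]], ent["entity"])
# onions FOOD
# olive oil FOOD
```

Because the offsets are character positions in the original string, HighlightedText can shade the exact substrings without re-tokenizing anything.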

app_images/featured.jpg

ADDED


app_images/salt_butter_new.png

ADDED


app_images/salt_butter_old.png

ADDED


app_images/sodium.jpg

ADDED


app_text/blog_text.md

ADDED


@@ -0,0 +1,66 @@


# How I trained this model

## Training Data

As my training data, I used [this dataset from Kaggle](https://www.kaggle.com/hugodarwood/epirecipes), consisting of more than 20,000 recipes from [Epicurious.com](https://www.epicurious.com). I hand-labeled food mentions in a random subset of 300 recipes, consisting of 981 sentences, using the awesome labeling tool [Prodigy](https://prodi.gy).

|

| 4 |

+

|

| 5 |

+

|

| 6 |

+

### Exploring the data

|

| 7 |

+

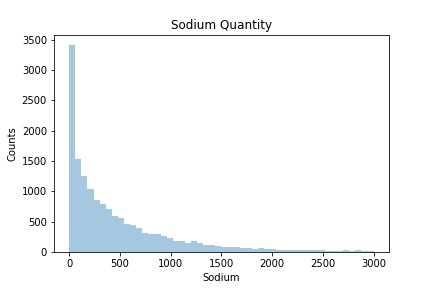

The Kaggle dataset includes many fields besides the recipes themselves, such as Title, Date, Category, Calories, Fat, Protein, Sodium, and Ingredients. In future projects, I'll use these as labels to train classification or regression models.

#### Common foods

What kinds of foods are most common in the training data? To answer this question, I counted the most frequent food terms after labeling the 300 recipes. The most common one-word foods were:

<br>

| Term | # Mentions |
| ---------- | ---------- |
| salt | 301 |
| pepper | 202 |
| oil | 122 |
| butter | 122 |
| sugar | 114 |
| sauce | 89 |
| garlic | 78 |
| dough | 69 |
| onion | 66 |
| flour | 63 |

<br>

And the most common two-word food terms were:

<br>

| Term | # Mentions |
| ---------- | ---------- |
| lemon juice | 47 |
| olive oil | 29 |
| lime juice | 16 |
| flour mixture | 12 |
| sesame seeds | 11 |
| egg mixture | 10 |
| pan juices | 10 |
| baking powder | 10 |
| cream cheese | 10 |
| green onions | 10 |

<br>

You can see that the recipes include both baking and other kinds of cooking, and they span a wide variety of styles and techniques. They also include cocktail recipes and a detailed set of instructions for setting up a beachside clambake!
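The post doesn't show how these counts were produced. A minimal sketch, assuming each labeled sentence is stored as its text plus a list of character-offset spans (a hypothetical format loosely modeled on Prodigy's `spans`), is to lowercase each labeled span and tally it in a separate `Counter` per word count. The lowercasing, the `count_food_terms` name, and the example sentences are all assumptions for illustration.

```python
from collections import Counter


def count_food_terms(examples):
    """Tally labeled food spans, bucketed by how many words each span has.

    `examples` uses a hypothetical format: dicts with a "text" string and a
    "spans" list of {"start": int, "end": int} character offsets.
    Returns {n_words: Counter(term -> mentions)}.
    """
    by_length = {}
    for example in examples:
        for span in example["spans"]:
            term = example["text"][span["start"]:span["end"]].lower()
            n_words = len(term.split())
            by_length.setdefault(n_words, Counter())[term] += 1
    return by_length


# Made-up labeled sentences, not taken from the real dataset.
examples = [
    {"text": "Whisk the lemon juice and olive oil.",
     "spans": [{"start": 10, "end": 21}, {"start": 26, "end": 35}]},
    {"text": "Season with salt and pepper.",
     "spans": [{"start": 12, "end": 16}, {"start": 21, "end": 27}]},
    {"text": "Add salt to the lemon juice.",
     "spans": [{"start": 4, "end": 8}, {"start": 16, "end": 27}]},
]
counts = count_food_terms(examples)
print(counts[1].most_common(10))  # [('salt', 2), ('pepper', 1)]
print(counts[2].most_common(10))  # [('lemon juice', 2), ('olive oil', 1)]
```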

### Difficult labeling decisions

You'd think labeling food would be easy, but I faced some hard decisions. Is water food? Sometimes water is an ingredient, but other times it's used almost as a tool, as in "plunge asparagus into boiling water." What about phrases like "egg mixture"? Should "mixture" be considered part of the food item? Or how about the word "slices" in the sentence "Arrange slices on a platter"? These decisions would normally be guided by a business use case, but since I was training this model just for fun, they were tough and somewhat arbitrary.

## Model Architecture

I fine-tuned a few different BERT-style models (BERT, DistilBERT, and RoBERTa) and achieved the best performance with RoBERTa. You can see the training code [here](https://github.com/carolmanderson/food/blob/master/notebooks/modeling/Train_BERT.ipynb).
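The training notebook linked above isn't reproduced here. One common way to feed word-level NER labels to a BERT-style model, following the pattern in the Hugging Face token-classification examples and not necessarily what the notebook does, is to give each word's label to its first sub-token and set every other position to -100, the index the loss ignores. That matches the `aggregation_strategy="first"` setting `app.py` uses at inference. The sketch is pure Python; `word_ids` has the shape a fast tokenizer's `word_ids()` returns but is hand-written here, and the `align_labels_with_tokens` name and the 0/1/2 label ids are hypothetical.

```python
def align_labels_with_tokens(word_labels, word_ids, ignore_index=-100):
    """Spread word-level label ids over sub-word tokens.

    word_labels: one integer label id per word.
    word_ids: for each token, the index of the word it came from, or None
        for special tokens such as <s> and </s>.
    Only the first sub-token of each word keeps the word's label; special
    tokens and continuation sub-tokens get ignore_index.
    """
    token_labels = []
    previous_word = None
    for word_id in word_ids:
        if word_id is None:
            token_labels.append(ignore_index)
        elif word_id != previous_word:
            token_labels.append(word_labels[word_id])
        else:
            token_labels.append(ignore_index)
        previous_word = word_id
    return token_labels


# Hypothetical label ids: 0 = O, 1 = B-FOOD, 2 = I-FOOD.
# Words: "Whisk", "the", "vinaigrette"; assume "vinaigrette" splits into
# three sub-tokens and the sequence is wrapped in <s> ... </s>.
word_labels = [0, 0, 1]
word_ids = [None, 0, 1, 2, 2, 2, None]
print(align_labels_with_tokens(word_labels, word_ids))
# [-100, 0, 0, 1, -100, -100, -100]
```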

A few years ago, I trained a model with a completely different architecture on the same dataset. That model had a bidirectional LSTM layer followed by a softmax output layer to predict token labels, and it used pretrained GloVe embeddings as its only feature. You can see the old training code [here](https://github.com/carolmanderson/food/blob/master/notebooks/modeling/Train_basic_LSTM_model.ipynb).

## Model Performance

This model achieved 95% recall and 96% precision when evaluated at the entity level (i.e., the exact beginning and end of each `FOOD` entity must match the ground-truth labels for it to count as a true positive).
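The post doesn't say which tool computed these scores; in practice a library such as seqeval is the usual choice. As a dependency-free sketch of what exact-match entity-level scoring means, assuming BIO-tagged token sequences (the tag names, function names, and toy sentences are hypothetical): each tag sequence becomes a set of `(start, end, label)` spans, and a predicted span is a true positive only if the identical span is in the gold set. A boundary error, such as tagging just "olive" in "olive oil", therefore costs one false positive and one false negative.

```python
def bio_to_spans(tags):
    """Convert a BIO tag list into a set of (start, end, label) spans.

    `end` is exclusive. An I- tag that doesn't continue a span with the same
    label starts a new span (a lenient convention; strict schemes differ).
    """
    spans = set()
    start, label = None, None
    for i, tag in enumerate(list(tags) + ["O"]):  # sentinel closes the last span
        if tag.startswith("I-") and label == tag[2:]:
            continue  # still inside the current entity
        if label is not None:
            spans.add((start, i, label))
            start, label = None, None
        if tag != "O":
            start, label = i, tag[2:]
    return spans


def entity_precision_recall(gold_sentences, pred_sentences):
    """Exact-match entity-level precision and recall over many sentences."""
    true_pos = n_pred = n_gold = 0
    for gold_tags, pred_tags in zip(gold_sentences, pred_sentences):
        gold = bio_to_spans(gold_tags)
        pred = bio_to_spans(pred_tags)
        true_pos += len(gold & pred)  # same start, end, and label
        n_pred += len(pred)
        n_gold += len(gold)
    precision = true_pos / n_pred if n_pred else 0.0
    recall = true_pos / n_gold if n_gold else 0.0
    return precision, recall


# Toy data: "olive oil and garlic" and "Add salt".
gold = [["B-FOOD", "I-FOOD", "O", "B-FOOD"], ["O", "B-FOOD"]]
pred = [["B-FOOD", "O", "O", "B-FOOD"], ["O", "O"]]
p, r = entity_precision_recall(gold, pred)
print(f"precision={p:.2f} recall={r:.2f}")  # precision=0.50 recall=0.33
```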

For comparison, the LSTM model that I previously trained achieved both precision and recall of 92%.

One of the errors I commonly saw with the older LSTM model involved words with multiple meanings. The word "salt", for example, can be a food, but it can also be a verb, as in "Lightly salt the vegetables." The word "butter" is similarly ambiguous: you can use butter as an ingredient, but you can also "butter the pan." The old model seemed to treat all mentions of these words as food:
