Commit a6ef7ad

Parent(s): 380e6c3

adding library of cells with set parameters

- app.py +142 -37

- examples/HEK_PhC.png +0 -0

- examples/U2OS_BF.png +0 -0

- examples/U2OS_QPI.png +0 -0

- examples/ctc_glioblastoma_astrocytoma_U373.png +0 -0

- examples/mousekidney.png +0 -0

- examples/neuromast2.png +0 -0

- misc/czb_mark.png +0 -0

- misc/czbsf_logo.png +0 -0

app.py

CHANGED

|

@@ -46,12 +46,12 @@ class VSGradio:

|

|

| 46 |

new_width = int(width * scale_factor)

|

| 47 |

return resize(inp, (new_height, new_width), anti_aliasing=True)

|

| 48 |

|

| 49 |

-

def predict(self, inp,

|

| 50 |

# Normalize the input and convert to tensor

|

| 51 |

inp = self.normalize_fov(inp)

|

| 52 |

original_shape = inp.shape

|

| 53 |

# Resize the input image to the expected cell diameter

|

| 54 |

-

inp = apply_rescale_image(inp,

|

| 55 |

|

| 56 |

# Convert the input to a tensor

|

| 57 |

inp = torch.from_numpy(np.array(inp).astype(np.float32))

|

|

@@ -119,22 +119,28 @@ def apply_image_adjustments(image, invert_image: bool, gamma_factor: float):

|

|

| 119 |

return exposure.rescale_intensity(image, out_range=(0, 255)).astype(np.uint8)

|

| 120 |

|

| 121 |

|

| 122 |

-

def apply_rescale_image(

|

| 123 |

-

image

|

| 124 |

-

)

|

| 125 |

-

# Assume the model was trained with cells ~30 microns in diameter

|

| 126 |

-

# Resize the input image according to the scaling factor

|

| 127 |

-

scale_factor = expected_cell_diameter / float(cell_diameter)

|

| 128 |

image = resize(

|

| 129 |

image,

|

| 130 |

-

(int(image.shape[0] *

|

| 131 |

anti_aliasing=True,

|

| 132 |

)

|

| 133 |

return image

|

| 134 |

|

| 135 |

|

| 136 |

-

#

|

| 137 |

def load_css(file_path):

|

|

|

|

| 138 |

with open(file_path, "r") as file:

|

| 139 |

return file.read()

|

| 140 |

|

|

@@ -163,7 +169,14 @@ if __name__ == "__main__":

|

|

| 163 |

with gr.Blocks(css=load_css("style.css")) as demo:

|

| 164 |

# Title and description

|

| 165 |

gr.HTML(

|

| 166 |

-

"

|

| 167 |

)

|

| 168 |

gr.HTML(

|

| 169 |

"""

|

|

@@ -171,9 +184,11 @@ if __name__ == "__main__":

|

|

| 171 |

<p><b>Model:</b> VSCyto2D</p>

|

| 172 |

<p><b>Input:</b> label-free image (e.g., QPI or phase contrast).</p>

|

| 173 |

<p><b>Output:</b> Virtual staining of nucleus and membrane.</p>

|

| 174 |

-

<p><b>Note:</b> The model works well with QPI, and sometimes generalizes to phase contrast and DIC

|

|

|

|

|

|

|

| 175 |

<p>Check out our preprint: <a href='https://www.biorxiv.org/content/10.1101/2024.05.31.596901' target='_blank'><i>Liu et al., Robust virtual staining of landmark organelles</i></a></p>

|

| 176 |

-

<p> For training

|

| 177 |

</div>

|

| 178 |

"""

|

| 179 |

)

|

|

@@ -182,7 +197,10 @@ if __name__ == "__main__":

|

|

| 182 |

with gr.Row():

|

| 183 |

input_image = gr.Image(type="numpy", image_mode="L", label="Upload Image")

|

| 184 |

adjusted_image = gr.Image(

|

| 185 |

-

type="numpy",

|

|

|

|

|

|

|

|

|

|

| 186 |

)

|

| 187 |

|

| 188 |

with gr.Column():

|

|

@@ -201,20 +219,21 @@ if __name__ == "__main__":

|

|

| 201 |

|

| 202 |

# Slider for gamma adjustment

|

| 203 |

gamma_factor = gr.Slider(

|

| 204 |

-

label="Adjust Gamma", minimum=0.

|

| 205 |

)

|

| 206 |

|

| 207 |

# Input field for the cell diameter in microns

|

| 208 |

-

|

| 209 |

-

label="

|

| 210 |

-

value="

|

| 211 |

-

placeholder="

|

| 212 |

)

|

| 213 |

|

| 214 |

# Checkbox for merging predictions

|

| 215 |

-

merge_checkbox = gr.Checkbox(

|

|

|

|

|

|

|

| 216 |

|

| 217 |

-

# Update the adjusted image based on all the transformations

|

| 218 |

input_image.change(

|

| 219 |

fn=apply_image_adjustments,

|

| 220 |

inputs=[input_image, preprocess_invert, gamma_factor],

|

|

@@ -226,6 +245,15 @@ if __name__ == "__main__":

|

|

| 226 |

inputs=[input_image, preprocess_invert, gamma_factor],

|

| 227 |

outputs=adjusted_image,

|

| 228 |

)

|

| 229 |

|

| 230 |

preprocess_invert.change(

|

| 231 |

fn=apply_image_adjustments,

|

|

@@ -237,27 +265,64 @@ if __name__ == "__main__":

|

|

| 237 |

submit_button = gr.Button("Submit")

|

| 238 |

|

| 239 |

# Function to handle prediction and merging if needed

|

| 240 |

-

def submit_and_merge(inp,

|

| 241 |

-

nucleus, membrane = vsgradio.predict(inp,

|

| 242 |

if merge:

|

| 243 |

merged = merge_images(nucleus, membrane)

|

| 244 |

-

return

|

| 245 |

else:

|

| 246 |

-

return

|

| 247 |

|

| 248 |

submit_button.click(

|

| 249 |

fn=submit_and_merge,

|

| 250 |

-

inputs=[adjusted_image,

|

| 251 |

-

outputs=[

|

| 252 |

)

|

| 253 |

|

| 254 |

# Function to handle merging the two predictions after they are shown

|

| 255 |

def merge_predictions_fn(nucleus_image, membrane_image, merge):

|

| 256 |

if merge:

|

| 257 |

merged = merge_images(nucleus_image, membrane_image)

|

| 258 |

-

return

|

| 259 |

else:

|

| 260 |

-

return

|

| 261 |

|

| 262 |

# Toggle between merged and separate views when the checkbox is checked

|

| 263 |

merge_checkbox.change(

|

|

@@ -267,21 +332,61 @@ if __name__ == "__main__":

|

|

| 267 |

)

|

| 268 |

|

| 269 |

# Example images and article

|

| 270 |

-

gr.Examples(

|

| 271 |

examples=[

|

| 272 |

-

"examples/a549.png",

|

| 273 |

-

"examples/hek.png",

|

| 274 |

-

"examples/

|

| 275 |

-

"examples/livecell_A172.png",

|

| 276 |

],

|

| 277 |

-

inputs=input_image,

|

| 278 |

)

|

| 279 |

-

|

| 280 |

# Article or footer information

|

| 281 |

gr.HTML(

|

| 282 |

"""

|

| 283 |

<div class='article-block'>

|

| 284 |

-

<

|

| 285 |

</div>

|

| 286 |

"""

|

| 287 |

)

|

|

|

|

| 46 |

new_width = int(width * scale_factor)

|

| 47 |

return resize(inp, (new_height, new_width), anti_aliasing=True)

|

| 48 |

|

| 49 |

+

def predict(self, inp, scaling_factor: float):

|

| 50 |

# Normalize the input and convert to tensor

|

| 51 |

inp = self.normalize_fov(inp)

|

| 52 |

original_shape = inp.shape

|

| 53 |

# Resize the input image to the expected cell diameter

|

| 54 |

+

inp = apply_rescale_image(inp, scaling_factor)

|

| 55 |

|

| 56 |

# Convert the input to a tensor

|

| 57 |

inp = torch.from_numpy(np.array(inp).astype(np.float32))

|

|

|

|

| 119 |

return exposure.rescale_intensity(image, out_range=(0, 255)).astype(np.uint8)

|

| 120 |

|

| 121 |

|

| 122 |

+

def apply_rescale_image(image, scaling_factor: float):

|

| 123 |

+

"""Resize the input image according to the scaling factor"""

|

| 124 |

+

scaling_factor = float(scaling_factor)

|

|

|

|

|

|

|

|

|

|

| 125 |

image = resize(

|

| 126 |

image,

|

| 127 |

+

(int(image.shape[0] * scaling_factor), int(image.shape[1] * scaling_factor)),

|

| 128 |

anti_aliasing=True,

|

| 129 |

)

|

| 130 |

return image

|

| 131 |

|

| 132 |

|

| 133 |

+

# Function to clear outputs when a new image is uploaded

|

| 134 |

+

def clear_outputs(image):

|

| 135 |

+

return (

|

| 136 |

+

image,

|

| 137 |

+

None,

|

| 138 |

+

None,

|

| 139 |

+

)  # Pass the new image to adjusted_image; reset output_nucleus and output_membrane to None

|

| 140 |

+

|

| 141 |

+

|

| 142 |

def load_css(file_path):

|

| 143 |

+

"""Load custom CSS"""

|

| 144 |

with open(file_path, "r") as file:

|

| 145 |

return file.read()

|

| 146 |

|
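Note on the rescaling hunk: the new `apply_rescale_image` casts the textbox value with `float(...)` and multiplies both image dimensions by it, so a non-numeric or non-positive entry would fail or produce an empty target shape. A minimal sketch of how that input might be checked before resizing is below. The helpers `parse_scaling_factor` and `scaled_shape` are hypothetical and not part of this commit, and the fallback to 1.0 and the 1-pixel clamp are assumptions rather than the app's behavior.

```python
import math


def parse_scaling_factor(text, default=1.0):
    """Hypothetical helper: turn the textbox string into a positive float.

    Falls back to `default` (an assumption) when the text is not a finite,
    positive number.
    """
    try:
        factor = float(text)
    except (TypeError, ValueError):
        return default
    if not math.isfinite(factor) or factor <= 0:
        return default
    return factor


def scaled_shape(shape, factor):
    """Compute the (height, width) that would be handed to resize().

    Follows int(image.shape[0] * factor), int(image.shape[1] * factor) from
    the diff, but clamps each side to at least 1 pixel (an added assumption).
    """
    height, width = shape[:2]
    return max(1, int(height * factor)), max(1, int(width * factor))


if __name__ == "__main__":
    # Example: the "0.3" factor used for the U2OS examples on a 512x512 image.
    print(scaled_shape((512, 512), parse_scaling_factor("0.3")))
```

Keeping the parsing separate from the resize call means the check can be tested without loading an image or the model.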

|

|

|

| 169 |

with gr.Blocks(css=load_css("style.css")) as demo:

|

| 170 |

# Title and description

|

| 171 |

gr.HTML(

|

| 172 |

+

"""

|

| 173 |

+

<div style="display: flex; justify-content: center; align-items: center; text-align: center;">

|

| 174 |

+

<a href="https://www.czbiohub.org/sf/" target="_blank">

|

| 175 |

+

<img src="https://huggingface.co/spaces/compmicro-czb/VirtualStaining/resolve/main/misc/czb_mark.png" style="width: 100px; height: auto; margin-right: 10px;">

|

| 176 |

+

</a>

|

| 177 |

+

<div class='title-block'>Image Translation (Virtual Staining) of cellular landmark organelles</div>

|

| 178 |

+

</div>

|

| 179 |

+

"""

|

| 180 |

)

|

| 181 |

gr.HTML(

|

| 182 |

"""

|

|

|

|

| 184 |

<p><b>Model:</b> VSCyto2D</p>

|

| 185 |

<p><b>Input:</b> label-free image (e.g., QPI or phase contrast).</p>

|

| 186 |

<p><b>Output:</b> Virtual staining of nucleus and membrane.</p>

|

| 187 |

+

<p><b>Note:</b> The model works well with QPI, and sometimes generalizes to phase contrast and DIC.<br>

|

| 188 |

+

It was trained primarily on HEK293T, BJ5, and A549 cells imaged at 20x. <br>

|

| 189 |

+

We continue to diagnose and improve generalization.</p>

|

| 190 |

<p>Check out our preprint: <a href='https://www.biorxiv.org/content/10.1101/2024.05.31.596901' target='_blank'><i>Liu et al., Robust virtual staining of landmark organelles</i></a></p>

|

| 191 |

+

<p> For training your own model and analyzing large amounts of data, use our <a href='https://github.com/mehta-lab/VisCy/tree/main/examples/virtual_staining/dlmbl_exercise' target='_blank'>GitHub repository</a>.</p>

|

| 192 |

</div>

|

| 193 |

"""

|

| 194 |

)

|

|

|

|

| 197 |

with gr.Row():

|

| 198 |

input_image = gr.Image(type="numpy", image_mode="L", label="Upload Image")

|

| 199 |

adjusted_image = gr.Image(

|

| 200 |

+

type="numpy",

|

| 201 |

+

image_mode="L",

|

| 202 |

+

label="Adjusted Image (Preview)",

|

| 203 |

+

interactive=False,

|

| 204 |

)

|

| 205 |

|

| 206 |

with gr.Column():

|

|

|

|

| 219 |

|

| 220 |

# Slider for gamma adjustment

|

| 221 |

gamma_factor = gr.Slider(

|

| 222 |

+

label="Adjust Gamma", minimum=0.01, maximum=5.0, value=1.0, step=0.1

|

| 223 |

)

|

| 224 |

|

| 225 |

# Input field for the cell diameter in microns

|

| 226 |

+

scaling_factor = gr.Textbox(

|

| 227 |

+

label="Rescaling image factor",

|

| 228 |

+

value="1.0",

|

| 229 |

+

placeholder="Rescaling factor for the input image",

|

| 230 |

)

|

| 231 |

|

| 232 |

# Checkbox for merging predictions

|

| 233 |

+

merge_checkbox = gr.Checkbox(

|

| 234 |

+

label="Merge Predictions into one image", value=True

|

| 235 |

+

)

|

| 236 |

|

|

|

|

| 237 |

input_image.change(

|

| 238 |

fn=apply_image_adjustments,

|

| 239 |

inputs=[input_image, preprocess_invert, gamma_factor],

|

|

|

|

| 245 |

inputs=[input_image, preprocess_invert, gamma_factor],

|

| 246 |

outputs=adjusted_image,

|

| 247 |

)

|

| 248 |

+

cell_name = gr.Textbox(

|

| 249 |

+

label="Cell Name", placeholder="Cell Type", visible=False

|

| 250 |

+

)

|

| 251 |

+

imaging_modality = gr.Textbox(

|

| 252 |

+

label="Imaging Modality", placeholder="Imaging Modality", visible=False

|

| 253 |

+

)

|

| 254 |

+

references = gr.Textbox(

|

| 255 |

+

label="References", placeholder="References", visible=False

|

| 256 |

+

)

|

| 257 |

|

| 258 |

preprocess_invert.change(

|

| 259 |

fn=apply_image_adjustments,

|

|

|

|

| 265 |

submit_button = gr.Button("Submit")

|

| 266 |

|

| 267 |

# Function to handle prediction and merging if needed

|

| 268 |

+

def submit_and_merge(inp, scaling_factor, merge):

|

| 269 |

+

nucleus, membrane = vsgradio.predict(inp, scaling_factor)

|

| 270 |

if merge:

|

| 271 |

merged = merge_images(nucleus, membrane)

|

| 272 |

+

return (

|

| 273 |

+

merged,

|

| 274 |

+

gr.update(visible=True),

|

| 275 |

+

nucleus,

|

| 276 |

+

gr.update(visible=False),

|

| 277 |

+

membrane,

|

| 278 |

+

gr.update(visible=False),

|

| 279 |

+

)

|

| 280 |

else:

|

| 281 |

+

return (

|

| 282 |

+

None,

|

| 283 |

+

gr.update(visible=False),

|

| 284 |

+

nucleus,

|

| 285 |

+

gr.update(visible=True),

|

| 286 |

+

membrane,

|

| 287 |

+

gr.update(visible=True),

|

| 288 |

+

)

|

| 289 |

|

| 290 |

submit_button.click(

|

| 291 |

fn=submit_and_merge,

|

| 292 |

+

inputs=[adjusted_image, scaling_factor, merge_checkbox],

|

| 293 |

+

outputs=[

|

| 294 |

+

merged_image,

|

| 295 |

+

merged_image,

|

| 296 |

+

output_nucleus,

|

| 297 |

+

output_nucleus,

|

| 298 |

+

output_membrane,

|

| 299 |

+

output_membrane,

|

| 300 |

+

],

|

| 301 |

+

)

|

| 302 |

+

# Clear everything when the input image changes

|

| 303 |

+

input_image.change(

|

| 304 |

+

fn=clear_outputs,

|

| 305 |

+

inputs=input_image,

|

| 306 |

+

outputs=[adjusted_image, output_nucleus, output_membrane],

|

| 307 |

)

|

| 308 |

|

| 309 |

# Function to handle merging the two predictions after they are shown

|

| 310 |

def merge_predictions_fn(nucleus_image, membrane_image, merge):

|

| 311 |

if merge:

|

| 312 |

merged = merge_images(nucleus_image, membrane_image)

|

| 313 |

+

return (

|

| 314 |

+

merged,

|

| 315 |

+

gr.update(visible=True),

|

| 316 |

+

gr.update(visible=False),

|

| 317 |

+

gr.update(visible=False),

|

| 318 |

+

)

|

| 319 |

else:

|

| 320 |

+

return (

|

| 321 |

+

None,

|

| 322 |

+

gr.update(visible=False),

|

| 323 |

+

gr.update(visible=True),

|

| 324 |

+

gr.update(visible=True),

|

| 325 |

+

)

|

| 326 |

|

| 327 |

# Toggle between merged and separate views when the checkbox is checked

|

| 328 |

merge_checkbox.change(

|

|

|

|

| 332 |

)

|
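Note on the merge toggle: `merge_predictions_fn` in this commit returns the merged image (or `None`) followed by three `gr.update(visible=...)` values, one each for the merged, nucleus, and membrane panels. The sketch below isolates that rule in plain Python so it can be read and tested on its own. `merge_view_state` is a hypothetical name, and plain booleans stand in for the Gradio update objects.

```python
def merge_view_state(merged, merge):
    """Hypothetical helper mirroring the return order of merge_predictions_fn.

    Returns (merged value, merged visible, nucleus visible, membrane visible).
    Plain booleans stand in for gr.update(visible=...).
    """
    if merge:
        # Merged view: show only the combined image.
        return merged, True, False, False
    # Separate view: drop the merged image and show both channels.
    return None, False, True, True


if __name__ == "__main__":
    print(merge_view_state("merged-image", True))
    print(merge_view_state("merged-image", False))
```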

| 333 |

|

| 334 |

# Example images and article

|

| 335 |

+

examples_component = gr.Examples(

|

| 336 |

examples=[

|

| 337 |

+

["examples/a549.png", "A549", "QPI", 1.0, False, "1.0", "1"],

|

| 338 |

+

["examples/hek.png", "HEK293T", "QPI", 1.0, False, "1.0", "1"],

|

| 339 |

+

["examples/HEK_PhC.png", "HEK293T", "PhC", 1.2, True, "1.0", "1"],

|

| 340 |

+

["examples/livecell_A172.png", "A172", "PhC", 1.0, True, "1.0", "2"],

|

| 341 |

+

["examples/ctc_HeLa.png", "HeLa", "DIC", 0.7, False, "0.7", "3"],

|

| 342 |

+

[

|

| 343 |

+

"examples/ctc_glioblastoma_astrocytoma_U373.png",

|

| 344 |

+

"Glioblastoma",

|

| 345 |

+

"PhC",

|

| 346 |

+

1.0,

|

| 347 |

+

True,

|

| 348 |

+

"2.0",

|

| 349 |

+

"3",

|

| 350 |

+

],

|

| 351 |

+

["examples/U2OS_BF.png", "U2OS", "Brightfield", 1.0, False, "0.3", "4"],

|

| 352 |

+

["examples/U2OS_QPI.png", "U2OS", "QPI", 1.0, False, "0.3", "4"],

|

| 353 |

+

[

|

| 354 |

+

"examples/neuromast2.png",

|

| 355 |

+

"Zebrafish neuromast",

|

| 356 |

+

"QPI",

|

| 357 |

+

0.6,

|

| 358 |

+

False,

|

| 359 |

+

"1.2",

|

| 360 |

+

"1",

|

| 361 |

+

],

|

| 362 |

+

[

|

| 363 |

+

"examples/mousekidney.png",

|

| 364 |

+

"Mouse Kidney",

|

| 365 |

+

"QPI",

|

| 366 |

+

0.8,

|

| 367 |

+

False,

|

| 368 |

+

"0.6",

|

| 369 |

+

"1",

|

| 370 |

+

],

|

| 371 |

+

],

|

| 372 |

+

inputs=[

|

| 373 |

+

input_image,

|

| 374 |

+

cell_name,

|

| 375 |

+

imaging_modality,

|

| 376 |

+

gamma_factor,

|

| 377 |

+

preprocess_invert,

|

| 378 |

+

scaling_factor,

|

| 379 |

+

references,

|

| 380 |

],

|

|

|

|

| 381 |

)

|

|

|

|

| 382 |

# Article or footer information

|

| 383 |

gr.HTML(

|

| 384 |

"""

|

| 385 |

<div class='article-block'>

|

| 386 |

+

<li>1. <a href='https://www.biorxiv.org/content/10.1101/2024.05.31.596901' target='_blank'>Liu et al., Robust virtual staining of landmark organelles</a></li>

|

| 387 |

+

<li>2. <a href='https://sartorius-research.github.io/LIVECell/' target='_blank'>Edlund et al., LIVECell: A large-scale dataset for label-free live cell segmentation</a></li>

|

| 388 |

+

<li>3. <a href='https://celltrackingchallenge.net/' target='_blank'>Maska et al., The cell tracking challenge: 10 years of objective benchmarking</a></li>

|

| 389 |

+

<li>4. <a href='https://elifesciences.org/articles/55502' target='_blank'>Guo et al., Revealing architectural order with quantitative label-free imaging and deep learning</a></li>

|

| 390 |

</div>

|

| 391 |

"""

|

| 392 |

)

|
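Note on the example library: each row passed to `gr.Examples` in this commit is a positional list whose columns follow the `inputs` order (`input_image`, `cell_name`, `imaging_modality`, `gamma_factor`, `preprocess_invert`, `scaling_factor`, `references`). A minimal sketch of naming those columns is below. `ExampleRow` and `to_examples` are hypothetical and not part of the commit; the three rows are copied from the diff.

```python
from typing import NamedTuple


class ExampleRow(NamedTuple):
    """Hypothetical record naming the seven columns of each gr.Examples row."""

    image_path: str
    cell_name: str
    imaging_modality: str
    gamma_factor: float
    invert: bool
    scaling_factor: str  # kept as a string because the UI field is a Textbox
    reference: str  # number of the matching entry in the footer reference list


ROWS = [
    ExampleRow("examples/a549.png", "A549", "QPI", 1.0, False, "1.0", "1"),
    ExampleRow("examples/HEK_PhC.png", "HEK293T", "PhC", 1.2, True, "1.0", "1"),
    ExampleRow("examples/ctc_HeLa.png", "HeLa", "DIC", 0.7, False, "0.7", "3"),
]


def to_examples(rows):
    """Convert records back to the list-of-lists form shown in the diff."""
    return [list(row) for row in rows]


if __name__ == "__main__":
    for row in to_examples(ROWS):
        print(row)
```

Named fields make it harder to swap the gamma and scaling columns by accident when adding a new cell type.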

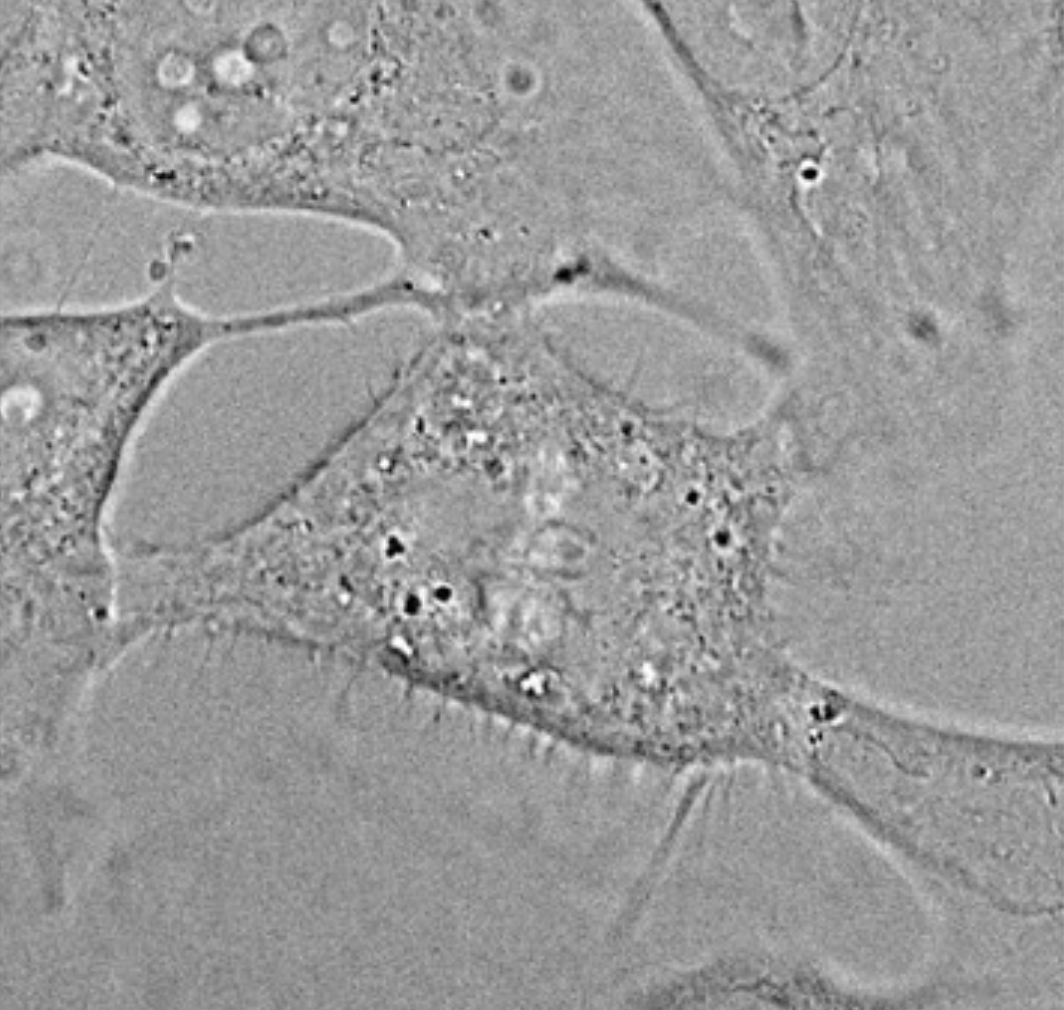

examples/HEK_PhC.png

ADDED

|

examples/U2OS_BF.png

ADDED

|

examples/U2OS_QPI.png

ADDED

|

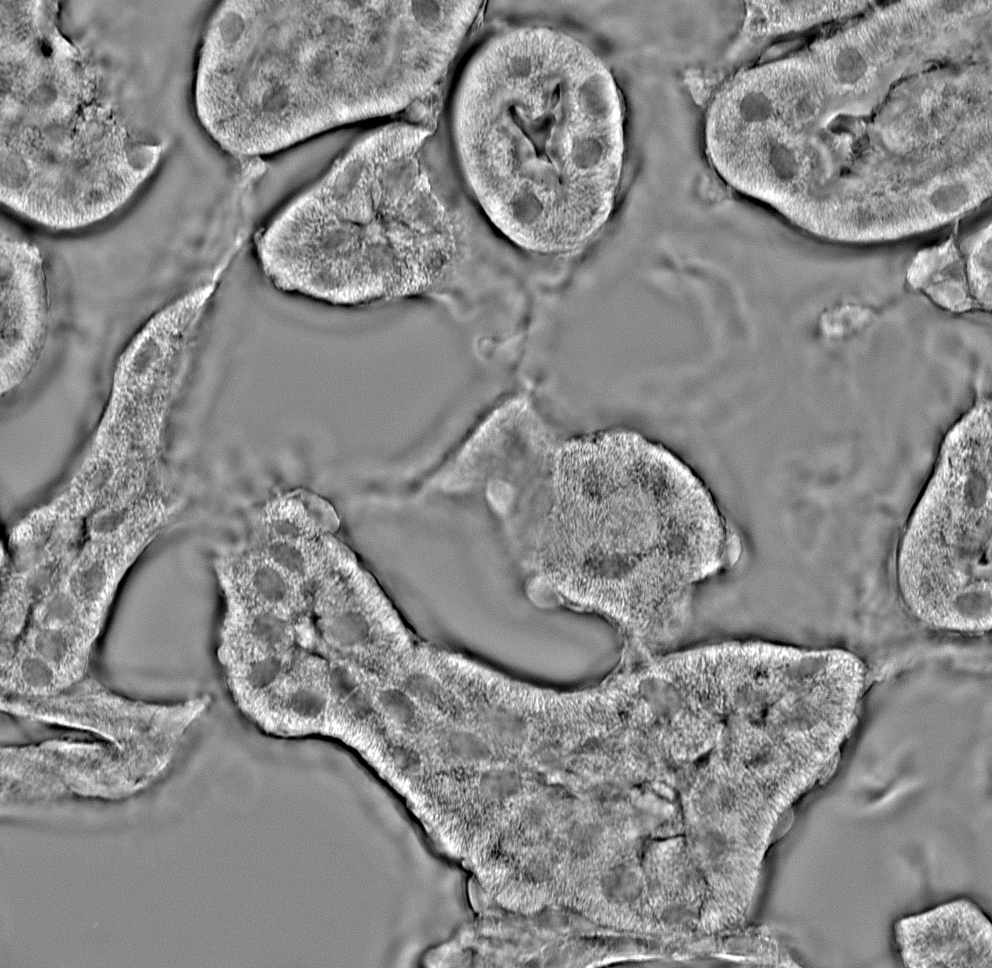

examples/ctc_glioblastoma_astrocytoma_U373.png

ADDED

|

examples/mousekidney.png

ADDED

|

examples/neuromast2.png

ADDED

|

misc/czb_mark.png

ADDED

|

misc/czbsf_logo.png

ADDED

|