Spaces:

Runtime error

Runtime error

Harry_FBK

commited on

Commit

·

60094bd

1

Parent(s):

277d7e6

Clone original THA3

Browse filesThis view is limited to 50 files because it contains too many changes.

See raw diff

- .gitignore +134 -0

- LICENSE +21 -0

- README.md +239 -13

- colab.ipynb +542 -0

- docs/ifacialmocap_ip.jpg +0 -0

- docs/ifacialmocap_puppeteer_click_start_capture.png +0 -0

- docs/ifacialmocap_puppeteer_ip_address_box.png +0 -0

- docs/ifacialmocap_puppeteer_numbers.png +0 -0

- docs/input_spec.png +0 -0

- docs/pytorch-install-command.png +0 -0

- environment.yml +141 -0

- manual_poser.ipynb +460 -0

- tha3/__init__.py +0 -0

- tha3/app/__init__.py +0 -0

- tha3/app/ifacialmocap_puppeteer.py +439 -0

- tha3/app/manual_poser.py +464 -0

- tha3/compute/__init__.py +0 -0

- tha3/compute/cached_computation_func.py +9 -0

- tha3/compute/cached_computation_protocol.py +43 -0

- tha3/mocap/__init__.py +0 -0

- tha3/mocap/ifacialmocap_constants.py +239 -0

- tha3/mocap/ifacialmocap_pose.py +27 -0

- tha3/mocap/ifacialmocap_pose_converter.py +12 -0

- tha3/mocap/ifacialmocap_poser_converter_25.py +463 -0

- tha3/mocap/ifacialmocap_v2.py +89 -0

- tha3/module/__init__.py +0 -0

- tha3/module/module_factory.py +9 -0

- tha3/nn/__init__.py +0 -0

- tha3/nn/common/__init__.py +0 -0

- tha3/nn/common/conv_block_factory.py +55 -0

- tha3/nn/common/poser_args.py +68 -0

- tha3/nn/common/poser_encoder_decoder_00.py +121 -0

- tha3/nn/common/poser_encoder_decoder_00_separable.py +92 -0

- tha3/nn/common/resize_conv_encoder_decoder.py +125 -0

- tha3/nn/common/resize_conv_unet.py +155 -0

- tha3/nn/conv.py +189 -0

- tha3/nn/editor/__init__.py +0 -0

- tha3/nn/editor/editor_07.py +180 -0

- tha3/nn/eyebrow_decomposer/__init__.py +0 -0

- tha3/nn/eyebrow_decomposer/eyebrow_decomposer_00.py +102 -0

- tha3/nn/eyebrow_decomposer/eyebrow_decomposer_03.py +109 -0

- tha3/nn/eyebrow_morphing_combiner/__init__.py +0 -0

- tha3/nn/eyebrow_morphing_combiner/eyebrow_morphing_combiner_00.py +115 -0

- tha3/nn/eyebrow_morphing_combiner/eyebrow_morphing_combiner_03.py +117 -0

- tha3/nn/face_morpher/__init__.py +0 -0

- tha3/nn/face_morpher/face_morpher_08.py +241 -0

- tha3/nn/face_morpher/face_morpher_09.py +187 -0

- tha3/nn/image_processing_util.py +58 -0

- tha3/nn/init_function.py +76 -0

- tha3/nn/nonlinearity_factory.py +72 -0

.gitignore

ADDED

|

@@ -0,0 +1,134 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Byte-compiled / optimized / DLL files

|

| 2 |

+

__pycache__/

|

| 3 |

+

*.py[cod]

|

| 4 |

+

*$py.class

|

| 5 |

+

|

| 6 |

+

# C extensions

|

| 7 |

+

*.so

|

| 8 |

+

|

| 9 |

+

# Distribution / packaging

|

| 10 |

+

.Python

|

| 11 |

+

build/

|

| 12 |

+

develop-eggs/

|

| 13 |

+

dist/

|

| 14 |

+

downloads/

|

| 15 |

+

eggs/

|

| 16 |

+

.eggs/

|

| 17 |

+

lib/

|

| 18 |

+

lib64/

|

| 19 |

+

parts/

|

| 20 |

+

sdist/

|

| 21 |

+

var/

|

| 22 |

+

wheels/

|

| 23 |

+

pip-wheel-metadata/

|

| 24 |

+

share/python-wheels/

|

| 25 |

+

*.egg-info/

|

| 26 |

+

.installed.cfg

|

| 27 |

+

*.egg

|

| 28 |

+

MANIFEST

|

| 29 |

+

|

| 30 |

+

# PyInstaller

|

| 31 |

+

# Usually these files are written by a python script from a template

|

| 32 |

+

# before PyInstaller builds the exe, so as to inject date/other infos into it.

|

| 33 |

+

*.manifest

|

| 34 |

+

*.spec

|

| 35 |

+

|

| 36 |

+

# Installer logs

|

| 37 |

+

pip-log.txt

|

| 38 |

+

pip-delete-this-directory.txt

|

| 39 |

+

|

| 40 |

+

# Unit test / coverage reports

|

| 41 |

+

htmlcov/

|

| 42 |

+

.tox/

|

| 43 |

+

.nox/

|

| 44 |

+

.coverage

|

| 45 |

+

.coverage.*

|

| 46 |

+

.cache

|

| 47 |

+

nosetests.xml

|

| 48 |

+

coverage.xml

|

| 49 |

+

*.cover

|

| 50 |

+

*.py,cover

|

| 51 |

+

.hypothesis/

|

| 52 |

+

.pytest_cache/

|

| 53 |

+

|

| 54 |

+

# Translations

|

| 55 |

+

*.mo

|

| 56 |

+

*.pot

|

| 57 |

+

|

| 58 |

+

# Django stuff:

|

| 59 |

+

*.log

|

| 60 |

+

local_settings.py

|

| 61 |

+

db.sqlite3

|

| 62 |

+

db.sqlite3-journal

|

| 63 |

+

|

| 64 |

+

# Flask stuff:

|

| 65 |

+

instance/

|

| 66 |

+

.webassets-cache

|

| 67 |

+

|

| 68 |

+

# Scrapy stuff:

|

| 69 |

+

.scrapy

|

| 70 |

+

|

| 71 |

+

# Sphinx documentation

|

| 72 |

+

docs/_build/

|

| 73 |

+

|

| 74 |

+

# PyBuilder

|

| 75 |

+

target/

|

| 76 |

+

|

| 77 |

+

# Jupyter Notebook

|

| 78 |

+

.ipynb_checkpoints

|

| 79 |

+

|

| 80 |

+

# IPython

|

| 81 |

+

profile_default/

|

| 82 |

+

ipython_config.py

|

| 83 |

+

|

| 84 |

+

# pyenv

|

| 85 |

+

.python-version

|

| 86 |

+

|

| 87 |

+

# pipenv

|

| 88 |

+

# According to pypa/pipenv#598, it is recommended to include Pipfile.lock in version control.

|

| 89 |

+

# However, in case of collaboration, if having platform-specific dependencies or dependencies

|

| 90 |

+

# having no cross-platform support, pipenv may install dependencies that don't work, or not

|

| 91 |

+

# install all needed dependencies.

|

| 92 |

+

#Pipfile.lock

|

| 93 |

+

|

| 94 |

+

# PEP 582; used by e.g. github.com/David-OConnor/pyflow

|

| 95 |

+

__pypackages__/

|

| 96 |

+

|

| 97 |

+

# Celery stuff

|

| 98 |

+

celerybeat-schedule

|

| 99 |

+

celerybeat.pid

|

| 100 |

+

|

| 101 |

+

# SageMath parsed files

|

| 102 |

+

*.sage.py

|

| 103 |

+

|

| 104 |

+

# Environments

|

| 105 |

+

.env

|

| 106 |

+

.venv

|

| 107 |

+

env/

|

| 108 |

+

venv/

|

| 109 |

+

ENV/

|

| 110 |

+

env.bak/

|

| 111 |

+

venv.bak/

|

| 112 |

+

|

| 113 |

+

# Spyder project settings

|

| 114 |

+

.spyderproject

|

| 115 |

+

.spyproject

|

| 116 |

+

|

| 117 |

+

# Rope project settings

|

| 118 |

+

.ropeproject

|

| 119 |

+

|

| 120 |

+

# mkdocs documentation

|

| 121 |

+

/site

|

| 122 |

+

|

| 123 |

+

# mypy

|

| 124 |

+

.mypy_cache/

|

| 125 |

+

.dmypy.json

|

| 126 |

+

dmypy.json

|

| 127 |

+

|

| 128 |

+

# Pyre type checker

|

| 129 |

+

.pyre/

|

| 130 |

+

|

| 131 |

+

data/

|

| 132 |

+

*.iml

|

| 133 |

+

.idea/

|

| 134 |

+

*.pt

|

LICENSE

ADDED

|

@@ -0,0 +1,21 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

MIT License

|

| 2 |

+

|

| 3 |

+

Copyright (c) 2022 Pramook Khungurn

|

| 4 |

+

|

| 5 |

+

Permission is hereby granted, free of charge, to any person obtaining a copy

|

| 6 |

+

of this software and associated documentation files (the "Software"), to deal

|

| 7 |

+

in the Software without restriction, including without limitation the rights

|

| 8 |

+

to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

|

| 9 |

+

copies of the Software, and to permit persons to whom the Software is

|

| 10 |

+

furnished to do so, subject to the following conditions:

|

| 11 |

+

|

| 12 |

+

The above copyright notice and this permission notice shall be included in all

|

| 13 |

+

copies or substantial portions of the Software.

|

| 14 |

+

|

| 15 |

+

THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

|

| 16 |

+

IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

|

| 17 |

+

FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

|

| 18 |

+

AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

|

| 19 |

+

LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

|

| 20 |

+

OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

|

| 21 |

+

SOFTWARE.

|

README.md

CHANGED

|

@@ -1,13 +1,239 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# Demo Code for "Talking Head(?) Anime from A Single Image 3: Now the Body Too"

|

| 2 |

+

|

| 3 |

+

This repository contains demo programs for the [Talking Head(?) Anime from a Single Image 3: Now the Body Too](https://pkhungurn.github.io/talking-head-anime-3/index.html) project. As the name implies, the project allows you to animate anime characters, and you only need a single image of that character to do so. There are two demo programs:

|

| 4 |

+

|

| 5 |

+

* The ``manual_poser`` lets you manipulate a character's facial expression, head rotation, body rotation, and chest expansion due to breathing through a graphical user interface.

|

| 6 |

+

* ``ifacialmocap_puppeteer`` lets you transfer your facial motion to an anime character.

|

| 7 |

+

|

| 8 |

+

## Try the Manual Poser on Google Colab

|

| 9 |

+

|

| 10 |

+

If you do not have the required hardware (discussed below) or do not want to download the code and set up an environment to run it, click [](https://colab.research.google.com/github/pkhungurn/talking-head-anime-3-demo/blob/master/colab.ipynb) to try running the manual poser on [Google Colab](https://research.google.com/colaboratory/faq.html).

|

| 11 |

+

|

| 12 |

+

## Hardware Requirements

|

| 13 |

+

|

| 14 |

+

Both programs require a recent and powerful Nvidia GPU to run. I could personally ran them at good speed with the Nvidia Titan RTX. However, I think recent high-end gaming GPUs such as the RTX 2080, the RTX 3080, or better would do just as well.

|

| 15 |

+

|

| 16 |

+

The `ifacialmocap_puppeteer` requires an iOS device that is capable of computing [blend shape parameters](https://developer.apple.com/documentation/arkit/arfaceanchor/2928251-blendshapes) from a video feed. This means that the device must be able to run iOS 11.0 or higher and must have a TrueDepth front-facing camera. (See [this page](https://developer.apple.com/documentation/arkit/content_anchors/tracking_and_visualizing_faces) for more info.) In other words, if you have the iPhone X or something better, you should be all set. Personally, I have used an iPhone 12 mini.

|

| 17 |

+

|

| 18 |

+

## Software Requirements

|

| 19 |

+

|

| 20 |

+

### GPU Related Software

|

| 21 |

+

|

| 22 |

+

Please update your GPU's device driver and install the [CUDA Toolkit](https://developer.nvidia.com/cuda-toolkit) that is compatible with your GPU and is newer than the version you will be installing in the next subsection.

|

| 23 |

+

|

| 24 |

+

### Python Environment

|

| 25 |

+

|

| 26 |

+

Both ``manual_poser`` and ``ifacialmocap_puppeteer`` are available as desktop applications. To run them, you need to set up an environment for running programs written in the [Python](http://www.python.org) language. The environment needs to have the following software packages:

|

| 27 |

+

|

| 28 |

+

* Python >= 3.8

|

| 29 |

+

* PyTorch >= 1.11.0 with CUDA support

|

| 30 |

+

* SciPY >= 1.7.3

|

| 31 |

+

* wxPython >= 4.1.1

|

| 32 |

+

* Matplotlib >= 3.5.1

|

| 33 |

+

|

| 34 |

+

One way to do so is to install [Anaconda](https://www.anaconda.com/) and run the following commands in your shell:

|

| 35 |

+

|

| 36 |

+

```

|

| 37 |

+

> conda create -n talking-head-anime-3-demo python=3.8

|

| 38 |

+

> conda activate talking-head-anime-3-demo

|

| 39 |

+

> conda install pytorch torchvision torchaudio cudatoolkit=11.3 -c pytorch

|

| 40 |

+

> conda install scipy

|

| 41 |

+

> pip install wxpython

|

| 42 |

+

> conda install matplotlib

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

#### Caveat 1: Do not use Python 3.10 on Windows

|

| 46 |

+

|

| 47 |

+

As of June 2006, you cannot use [wxPython](https://www.wxpython.org/) with Python 3.10 on Windows. As a result, do not use Python 3.10 until [this bug](https://github.com/wxWidgets/Phoenix/issues/2024) is fixed. This means you should not set ``python=3.10`` in the first ``conda`` command in the listing above.

|

| 48 |

+

|

| 49 |

+

#### Caveat 2: Adjust versions of Python and CUDA Toolkit as needed

|

| 50 |

+

|

| 51 |

+

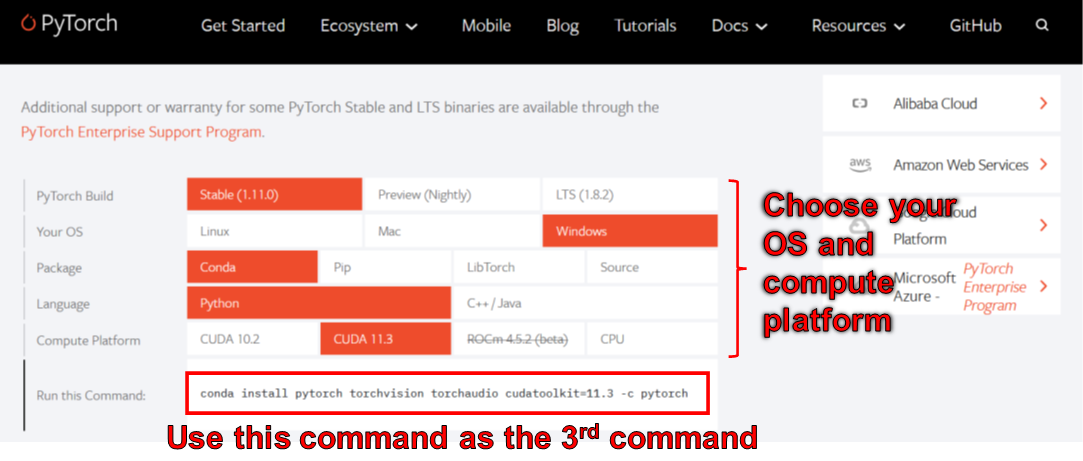

The environment created by the commands above gives you Python version 3.8 and an installation of [PyTorch](http://pytorch.org) that was compiled with CUDA Toolkit version 11.3. This particular setup might not work in the future because you may find that this particular PyTorch package does not work with your new computer. The solution is to:

|

| 52 |

+

|

| 53 |

+

1. Change the Python version in the first command to a recent one that works for your OS. (That is, do not use 3.10 if you are using Windows.)

|

| 54 |

+

2. Change the version of CUDA toolkit in the third command to one that the PyTorch's website says is available. In particular, scroll to the "Install PyTorch" section and use the chooser there to pick the right command for your computer. Use that command to install PyTorch instead of the third command above.

|

| 55 |

+

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

### Jupyter Environment

|

| 59 |

+

|

| 60 |

+

The ``manual_poser`` is also available as a [Jupyter Nootbook](http://jupyter.org). To run it on your local machines, you also need to install:

|

| 61 |

+

|

| 62 |

+

* Jupyter Notebook >= 7.3.4

|

| 63 |

+

* IPywidgets >= 7.7.0

|

| 64 |

+

|

| 65 |

+

In some case, you will also need to enable the ``widgetsnbextension`` as well. So, run

|

| 66 |

+

|

| 67 |

+

```

|

| 68 |

+

> jupyter nbextension enable --py widgetsnbextension

|

| 69 |

+

```

|

| 70 |

+

|

| 71 |

+

After installing the above two packages. Using Anaconda, I managed to do the above with the following commands:

|

| 72 |

+

|

| 73 |

+

```

|

| 74 |

+

> conda install -c conda-forge notebook

|

| 75 |

+

> conda install -c conda-forge ipywidgets

|

| 76 |

+

> jupyter nbextension enable --py widgetsnbextension

|

| 77 |

+

```

|

| 78 |

+

|

| 79 |

+

### Automatic Environment Construction with Anaconda

|

| 80 |

+

|

| 81 |

+

You can also use Anaconda to download and install all Python packages in one command. Open your shell, change the directory to where you clone the repository, and run:

|

| 82 |

+

|

| 83 |

+

```

|

| 84 |

+

> conda env create -f environment.yml

|

| 85 |

+

```

|

| 86 |

+

|

| 87 |

+

This will create an environment called ``talking-head-anime-3-demo`` containing all the required Python packages.

|

| 88 |

+

|

| 89 |

+

### iFacialMocap

|

| 90 |

+

|

| 91 |

+

If you want to use ``ifacialmocap_puppeteer``, you will also need to an iOS software called [iFacialMocap](https://www.ifacialmocap.com/) (a 980 yen purchase in the App Store). You do not need to download the paired application this time. Your iOS and your computer must use the same network. For example, you may connect them to the same wireless router.

|

| 92 |

+

|

| 93 |

+

## Download the Models

|

| 94 |

+

|

| 95 |

+

Before running the programs, you need to download the model files from this [Dropbox link](https://www.dropbox.com/s/y7b8jl4n2euv8xe/talking-head-anime-3-models.zip?dl=0) and unzip it to the ``data/models`` folder under the repository's root directory. In the end, the data folder should look like:

|

| 96 |

+

|

| 97 |

+

```

|

| 98 |

+

+ data

|

| 99 |

+

+ images

|

| 100 |

+

- crypko_00.png

|

| 101 |

+

- crypko_01.png

|

| 102 |

+

:

|

| 103 |

+

- crypko_07.png

|

| 104 |

+

- lambda_00.png

|

| 105 |

+

- lambda_01.png

|

| 106 |

+

+ models

|

| 107 |

+

+ separable_float

|

| 108 |

+

- editor.pt

|

| 109 |

+

- eyebrow_decomposer.pt

|

| 110 |

+

- eyebrow_morphing_combiner.pt

|

| 111 |

+

- face_morpher.pt

|

| 112 |

+

- two_algo_face_body_rotator.pt

|

| 113 |

+

+ separable_half

|

| 114 |

+

- editor.pt

|

| 115 |

+

:

|

| 116 |

+

- two_algo_face_body_rotator.pt

|

| 117 |

+

+ standard_float

|

| 118 |

+

- editor.pt

|

| 119 |

+

:

|

| 120 |

+

- two_algo_face_body_rotator.pt

|

| 121 |

+

+ standard_half

|

| 122 |

+

- editor.pt

|

| 123 |

+

:

|

| 124 |

+

- two_algo_face_body_rotator.pt

|

| 125 |

+

```

|

| 126 |

+

|

| 127 |

+

The model files are distributed with the

|

| 128 |

+

[Creative Commons Attribution 4.0 International License](https://creativecommons.org/licenses/by/4.0/legalcode), which

|

| 129 |

+

means that you can use them for commercial purposes. However, if you distribute them, you must, among other things, say

|

| 130 |

+

that I am the creator.

|

| 131 |

+

|

| 132 |

+

## Running the `manual_poser` Desktop Application

|

| 133 |

+

|

| 134 |

+

Open a shell. Change your working directory to the repository's root directory. Then, run:

|

| 135 |

+

|

| 136 |

+

```

|

| 137 |

+

> python tha3/app/manual_poser.py

|

| 138 |

+

```

|

| 139 |

+

|

| 140 |

+

Note that before running the command above, you might have to activate the Python environment that contains the required

|

| 141 |

+

packages. If you created an environment using Anaconda as was discussed above, you need to run

|

| 142 |

+

|

| 143 |

+

```

|

| 144 |

+

> conda activate talking-head-anime-3-demo

|

| 145 |

+

```

|

| 146 |

+

|

| 147 |

+

if you have not already activated the environment.

|

| 148 |

+

|

| 149 |

+

### Choosing System Variant to Use

|

| 150 |

+

|

| 151 |

+

As noted in the [project's write-up](http://pkhungurn.github.io/talking-head-anime-3/index.html), I created 4 variants of the neural network system. They are called ``standard_float``, ``separable_float``, ``standard_half``, and ``separable_half``. All of them have the same functionalities, but they differ in their sizes, RAM usage, speed, and accuracy. You can specify which variant that the ``manual_poser`` program uses through the ``--model`` command line option.

|

| 152 |

+

|

| 153 |

+

```

|

| 154 |

+

> python tha3/app/manual_poser --model <variant_name>

|

| 155 |

+

```

|

| 156 |

+

|

| 157 |

+

where ``<variant_name>`` must be one of the 4 names above. If no variant is specified, the ``standard_float`` variant (which is the largest, slowest, and most accurate) will be used.

|

| 158 |

+

|

| 159 |

+

## Running the `manual_poser` Jupyter Notebook

|

| 160 |

+

|

| 161 |

+

Open a shell. Activate the environment. Change your working directory to the repository's root directory. Then, run:

|

| 162 |

+

|

| 163 |

+

```

|

| 164 |

+

> jupyter notebook

|

| 165 |

+

```

|

| 166 |

+

|

| 167 |

+

A browser window should open. In it, open `manual_poser.ipynb`. Once you have done so, you should see that it has two cells. Run the two cells in order. Then, scroll down to the end of the document, and you'll see the GUI there.

|

| 168 |

+

|

| 169 |

+

You can choose the system variant to use by changing the ``MODEL_NAME`` variable in the first cell. If you do, you will need to rerun both cells in order for the variant to be loaded and the GUI to be properly updated to use it.

|

| 170 |

+

|

| 171 |

+

## Running the `ifacialmocap_poser`

|

| 172 |

+

|

| 173 |

+

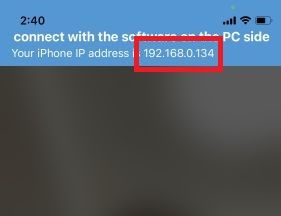

First, run iFacialMocap on your iOS device. It should show you the device's IP address. Jot it down. Keep the app open.

|

| 174 |

+

|

| 175 |

+

|

| 176 |

+

|

| 177 |

+

Open a shell. Activate the Python environment. Change your working directory to the repository's root directory. Then, run:

|

| 178 |

+

|

| 179 |

+

```

|

| 180 |

+

> python tha3/app/ifacialmocap_puppeteer.py

|

| 181 |

+

```

|

| 182 |

+

|

| 183 |

+

You will see a text box with label "Capture Device IP." Write the iOS device's IP address that you jotted down there.

|

| 184 |

+

|

| 185 |

+

|

| 186 |

+

|

| 187 |

+

Click the "START CAPTURE!" button to the right.

|

| 188 |

+

|

| 189 |

+

|

| 190 |

+

|

| 191 |

+

If the programs are connected properly, you should see the numbers in the bottom part of the window change when you move your head.

|

| 192 |

+

|

| 193 |

+

|

| 194 |

+

|

| 195 |

+

Now, you can load an image of a character, and it should follow your facial movement.

|

| 196 |

+

|

| 197 |

+

## Contraints on Input Images

|

| 198 |

+

|

| 199 |

+

In order for the system to work well, the input image must obey the following constraints:

|

| 200 |

+

|

| 201 |

+

* It should be of resolution 512 x 512. (If the demo programs receives an input image of any other size, they will resize the image to this resolution and also output at this resolution.)

|

| 202 |

+

* It must have an alpha channel.

|

| 203 |

+

* It must contain only one humanoid character.

|

| 204 |

+

* The character should be standing upright and facing forward.

|

| 205 |

+

* The character's hands should be below and far from the head.

|

| 206 |

+

* The head of the character should roughly be contained in the 128 x 128 box in the middle of the top half of the image.

|

| 207 |

+

* The alpha channels of all pixels that do not belong to the character (i.e., background pixels) must be 0.

|

| 208 |

+

|

| 209 |

+

|

| 210 |

+

|

| 211 |

+

See the project's [write-up](http://pkhungurn.github.io/talking-head-anime-3/full.html#sec:problem-spec) for more details on the input image.

|

| 212 |

+

|

| 213 |

+

## Citation

|

| 214 |

+

|

| 215 |

+

If your academic work benefits from the code in this repository, please cite the project's web page as follows:

|

| 216 |

+

|

| 217 |

+

> Pramook Khungurn. **Talking Head(?) Anime from a Single Image 3: Now the Body Too.** http://pkhungurn.github.io/talking-head-anime-3/, 2022. Accessed: YYYY-MM-DD.

|

| 218 |

+

|

| 219 |

+

You can also used the following BibTex entry:

|

| 220 |

+

|

| 221 |

+

```

|

| 222 |

+

@misc{Khungurn:2022,

|

| 223 |

+

author = {Pramook Khungurn},

|

| 224 |

+

title = {Talking Head(?) Anime from a Single Image 3: Now the Body Too},

|

| 225 |

+

howpublished = {\url{http://pkhungurn.github.io/talking-head-anime-3/}},

|

| 226 |

+

year = 2022,

|

| 227 |

+

note = {Accessed: YYYY-MM-DD},

|

| 228 |

+

}

|

| 229 |

+

```

|

| 230 |

+

|

| 231 |

+

## Disclaimer

|

| 232 |

+

|

| 233 |

+

While the author is an employee of [Google Japan](https://careers.google.com/locations/tokyo/), this software is not Google's product and is not supported by Google.

|

| 234 |

+

|

| 235 |

+

The copyright of this software belongs to me as I have requested it using the [IARC process](https://opensource.google/documentation/reference/releasing#iarc). However, Google might claim the rights to the intellectual

|

| 236 |

+

property of this invention.

|

| 237 |

+

|

| 238 |

+

The code is released under the [MIT license](https://github.com/pkhungurn/talking-head-anime-2-demo/blob/master/LICENSE).

|

| 239 |

+

The model is released under the [Creative Commons Attribution 4.0 International License](https://creativecommons.org/licenses/by/4.0/legalcode). Please see the README.md file in the ``data/images`` directory for the licenses for the images there.

|

colab.ipynb

ADDED

|

@@ -0,0 +1,542 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "markdown",

|

| 5 |

+

"id": "1027b46a",

|

| 6 |

+

"metadata": {},

|

| 7 |

+

"source": [

|

| 8 |

+

"# Talking Head(?) Anime from a Single Image 3: Now the Body Too (Manual Poser Tool)\n",

|

| 9 |

+

"\n",

|

| 10 |

+

"**Instruction**\n",

|

| 11 |

+

"\n",

|

| 12 |

+

"1. Run the four cells below, one by one, in order by clicking the \"Play\" button to the left of it. Wait for each cell to finish before going to the next one.\n",

|

| 13 |

+

"2. Scroll down to the end of the last cell, and play with the GUI.\n",

|

| 14 |

+

"\n",

|

| 15 |

+

"**Links**\n",

|

| 16 |

+

"\n",

|

| 17 |

+

"* Github repository: http://github.com/pkhungurn/talking-head-anime-3-demo\n",

|

| 18 |

+

"* Project writeup: http://pkhungurn.github.io/talking-head-anime-3/"

|

| 19 |

+

]

|

| 20 |

+

},

|

| 21 |

+

{

|

| 22 |

+

"cell_type": "code",

|

| 23 |

+

"execution_count": null,

|

| 24 |

+

"id": "54cc96d7",

|

| 25 |

+

"metadata": {},

|

| 26 |

+

"outputs": [],

|

| 27 |

+

"source": [

|

| 28 |

+

"# Clone the repository\n",

|

| 29 |

+

"%cd /content\n",

|

| 30 |

+

"!git clone https://github.com/pkhungurn/talking-head-anime-3-demo.git"

|

| 31 |

+

]

|

| 32 |

+

},

|

| 33 |

+

{

|

| 34 |

+

"cell_type": "code",

|

| 35 |

+

"execution_count": null,

|

| 36 |

+

"id": "77f2016c",

|

| 37 |

+

"metadata": {},

|

| 38 |

+

"outputs": [],

|

| 39 |

+

"source": [

|

| 40 |

+

"# CD into the repository directory.\n",

|

| 41 |

+

"%cd /content/talking-head-anime-3-demo"

|

| 42 |

+

]

|

| 43 |

+

},

|

| 44 |

+

{

|

| 45 |

+

"cell_type": "code",

|

| 46 |

+

"execution_count": null,

|

| 47 |

+

"id": "1771c927",

|

| 48 |

+

"metadata": {},

|

| 49 |

+

"outputs": [],

|

| 50 |

+

"source": [

|

| 51 |

+

"# Download model files\n",

|

| 52 |

+

"!mkdir -p data/models/standard_float\n",

|

| 53 |

+

"!wget -O data/models/standard_float/editor.pt https://www.dropbox.com/s/zp3e5ox57sdws3y/editor.pt?dl=0\n",

|

| 54 |

+

"!wget -O data/models/standard_float/eyebrow_decomposer.pt https://www.dropbox.com/s/bcp42knbrk7egk8/eyebrow_decomposer.pt?dl=0\n",

|

| 55 |

+

"!wget -O data/models/standard_float/eyebrow_morphing_combiner.pt https://www.dropbox.com/s/oywaiio2s53lc57/eyebrow_morphing_combiner.pt?dl=0\n",

|

| 56 |

+

"!wget -O data/models/standard_float/face_morpher.pt https://www.dropbox.com/s/8qvo0u5lw7hqvtq/face_morpher.pt?dl=0\n",

|

| 57 |

+

"!wget -O data/models/standard_float/two_algo_face_body_rotator.pt https://www.dropbox.com/s/qmq1dnxrmzsxb4h/two_algo_face_body_rotator.pt?dl=0\n",

|

| 58 |

+

"\n",

|

| 59 |

+

"!mkdir -p data/models/standard_half\n",

|

| 60 |

+

"!wget -O data/models/standard_half/editor.pt https://www.dropbox.com/s/g21ps8gfuvz4kbo/editor.pt?dl=0\n",

|

| 61 |

+

"!wget -O data/models/standard_half/eyebrow_decomposer.pt https://www.dropbox.com/s/nwwwevzpmxiilgn/eyebrow_decomposer.pt?dl=0\n",

|

| 62 |

+

"!wget -O data/models/standard_half/eyebrow_morphing_combiner.pt https://www.dropbox.com/s/z5v0amgqif7yup1/eyebrow_morphing_combiner.pt?dl=0\n",

|

| 63 |

+

"!wget -O data/models/standard_half/face_morpher.pt https://www.dropbox.com/s/g03sfnd5yfs0m65/face_morpher.pt?dl=0\n",

|

| 64 |

+

"!wget -O data/models/standard_half/two_algo_face_body_rotator.pt https://www.dropbox.com/s/c5lrn7z34x12317/two_algo_face_body_rotator.pt?dl=0\n",

|

| 65 |

+

"\n",

|

| 66 |

+

"!mkdir -p data/models/separable_float \n",

|

| 67 |

+

"!wget -O data/models/separable_float/editor.pt https://www.dropbox.com/s/nwdxhrpa9fy19r4/editor.pt?dl=0\n",

|

| 68 |

+

"!wget -O data/models/separable_float/eyebrow_decomposer.pt https://www.dropbox.com/s/hfzjcu9cqr9wm3i/eyebrow_decomposer.pt?dl=0\n",

|

| 69 |

+

"!wget -O data/models/separable_float/eyebrow_morphing_combiner.pt https://www.dropbox.com/s/g04dyyyavh5o1e2/eyebrow_morphing_combiner.pt?dl=0\n",

|

| 70 |

+

"!wget -O data/models/separable_float/face_morpher.pt https://www.dropbox.com/s/vgi9dsj95y0rrwv/face_morpher.pt?dl=0\n",

|

| 71 |

+

"!wget -O data/models/separable_float/two_algo_face_body_rotator.pt https://www.dropbox.com/s/8u0qond8po34l24/two_algo_face_body_rotator.pt?dl=0\n",

|

| 72 |

+

"\n",

|

| 73 |

+

"!mkdir -p data/models/separable_half\n",

|

| 74 |

+

"!wget -O data/models/separable_half/editor.pt https://www.dropbox.com/s/on8kn6z9fj95j0h/editor.pt?dl=0\n",

|

| 75 |

+

"!wget -O data/models/separable_half/eyebrow_decomposer.pt https://www.dropbox.com/s/0hxu8opu1hmghqe/eyebrow_decomposer.pt?dl=0\n",

|

| 76 |

+

"!wget -O data/models/separable_half/eyebrow_morphing_combiner.pt https://www.dropbox.com/s/bgz02afp0xojqfs/eyebrow_morphing_combiner.pt?dl=0\n",

|

| 77 |

+

"!wget -O data/models/separable_half/face_morpher.pt https://www.dropbox.com/s/bgz02afp0xojqfs/eyebrow_morphing_combiner.pt?dl=0\n",

|

| 78 |

+

"!wget -O data/models/separable_half/two_algo_face_body_rotator.pt https://www.dropbox.com/s/vr8h2xxltszhw7w/two_algo_face_body_rotator.pt?dl=0"

|

| 79 |

+

]

|

| 80 |

+

},

|

| 81 |

+

{

|

| 82 |

+

"cell_type": "code",

|

| 83 |

+

"execution_count": null,

|

| 84 |

+

"id": "062014f7",

|

| 85 |

+

"metadata": {

|

| 86 |

+

"id": "breeding-extra"

|

| 87 |

+

},

|

| 88 |

+

"outputs": [],

|

| 89 |

+

"source": [

|

| 90 |

+

"# Set this constant to specify which system variant to use.\n",

|

| 91 |

+

"MODEL_NAME = \"standard_float\" \n",

|

| 92 |

+

"\n",

|

| 93 |

+

"# Load the models.\n",

|

| 94 |

+

"import torch\n",

|

| 95 |

+

"DEVICE_NAME = 'cuda'\n",

|

| 96 |

+

"device = torch.device(DEVICE_NAME)\n",

|

| 97 |

+

"\n",

|

| 98 |

+

"def load_poser(model: str, device: torch.device):\n",

|

| 99 |

+

" print(\"Using the %s model.\" % model)\n",

|

| 100 |

+

" if model == \"standard_float\":\n",

|

| 101 |

+

" from tha3.poser.modes.standard_float import create_poser\n",

|

| 102 |

+

" return create_poser(device)\n",

|

| 103 |

+

" elif model == \"standard_half\":\n",

|

| 104 |

+

" from tha3.poser.modes.standard_half import create_poser\n",

|

| 105 |

+

" return create_poser(device)\n",

|

| 106 |

+

" elif model == \"separable_float\":\n",

|

| 107 |

+

" from tha3.poser.modes.separable_float import create_poser\n",

|

| 108 |

+

" return create_poser(device)\n",

|

| 109 |

+

" elif model == \"separable_half\":\n",

|

| 110 |

+

" from tha3.poser.modes.separable_half import create_poser\n",

|

| 111 |

+

" return create_poser(device)\n",

|

| 112 |

+

" else:\n",

|

| 113 |

+

" raise RuntimeError(\"Invalid model: '%s'\" % model)\n",

|

| 114 |

+

" \n",

|

| 115 |

+

"poser = load_poser(MODEL_NAME, DEVICE_NAME)\n",

|

| 116 |

+

"poser.get_modules();"

|

| 117 |

+

]

|

| 118 |

+

},

|

| 119 |

+

{

|

| 120 |

+

"cell_type": "code",

|

| 121 |

+

"execution_count": null,

|

| 122 |

+

"id": "breeding-extra",

|

| 123 |

+

"metadata": {

|

| 124 |

+

"id": "breeding-extra"

|

| 125 |

+

},

|

| 126 |

+

"outputs": [],

|

| 127 |

+

"source": [

|

| 128 |

+

"# Create the GUI for manipulating character images.\n",

|

| 129 |

+

"import PIL.Image\n",

|

| 130 |

+

"import io\n",

|

| 131 |

+

"from io import StringIO, BytesIO\n",

|

| 132 |

+

"import IPython.display\n",

|

| 133 |

+

"import numpy\n",

|

| 134 |

+

"import ipywidgets\n",

|

| 135 |

+

"import time\n",

|

| 136 |

+

"import threading\n",

|

| 137 |

+

"import torch\n",

|

| 138 |

+

"from tha3.util import resize_PIL_image, extract_PIL_image_from_filelike, \\\n",

|

| 139 |

+

" extract_pytorch_image_from_PIL_image, convert_output_image_from_torch_to_numpy\n",

|

| 140 |

+

"\n",

|

| 141 |

+

"FRAME_RATE = 30.0\n",

|

| 142 |

+

"\n",

|

| 143 |

+

"last_torch_input_image = None\n",

|

| 144 |

+

"torch_input_image = None\n",

|

| 145 |

+

"\n",

|

| 146 |

+

"def show_pytorch_image(pytorch_image):\n",

|

| 147 |

+

" output_image = pytorch_image.detach().cpu()\n",

|

| 148 |

+

" numpy_image = numpy.uint8(numpy.rint(convert_output_image_from_torch_to_numpy(output_image) * 255.0))\n",

|

| 149 |

+

" pil_image = PIL.Image.fromarray(numpy_image, mode='RGBA')\n",

|

| 150 |

+

" IPython.display.display(pil_image)\n",

|

| 151 |

+

"\n",

|

| 152 |

+

"upload_input_image_button = ipywidgets.FileUpload(\n",

|

| 153 |

+

" accept='.png',\n",

|

| 154 |

+

" multiple=False,\n",

|

| 155 |

+

" layout={\n",

|

| 156 |

+

" 'width': '512px'\n",

|

| 157 |

+

" }\n",

|

| 158 |

+

")\n",

|

| 159 |

+

"\n",

|

| 160 |

+

"output_image_widget = ipywidgets.Output(\n",

|

| 161 |

+

" layout={\n",

|

| 162 |

+

" 'border': '1px solid black',\n",

|

| 163 |

+

" 'width': '512px',\n",

|

| 164 |

+

" 'height': '512px'\n",

|

| 165 |

+

" }\n",

|

| 166 |

+

")\n",

|

| 167 |

+

"\n",

|

| 168 |

+

"eyebrow_dropdown = ipywidgets.Dropdown(\n",

|

| 169 |

+

" options=[\"troubled\", \"angry\", \"lowered\", \"raised\", \"happy\", \"serious\"],\n",

|

| 170 |

+

" value=\"troubled\",\n",

|

| 171 |

+

" description=\"Eyebrow:\", \n",

|

| 172 |

+

")\n",

|

| 173 |

+

"eyebrow_left_slider = ipywidgets.FloatSlider(\n",

|

| 174 |

+

" value=0.0,\n",

|

| 175 |

+

" min=0.0,\n",

|

| 176 |

+

" max=1.0,\n",

|

| 177 |

+

" step=0.01,\n",

|

| 178 |

+

" description=\"Left:\",\n",

|

| 179 |

+

" readout=True,\n",

|

| 180 |

+

" readout_format=\".2f\"\n",

|

| 181 |

+

")\n",

|

| 182 |

+

"eyebrow_right_slider = ipywidgets.FloatSlider(\n",

|

| 183 |

+

" value=0.0,\n",

|

| 184 |

+

" min=0.0,\n",

|

| 185 |

+

" max=1.0,\n",

|

| 186 |

+

" step=0.01,\n",

|

| 187 |

+

" description=\"Right:\",\n",

|

| 188 |

+

" readout=True,\n",

|

| 189 |

+

" readout_format=\".2f\"\n",

|

| 190 |

+

")\n",

|

| 191 |

+

"\n",

|

| 192 |

+

"eye_dropdown = ipywidgets.Dropdown(\n",

|

| 193 |

+

" options=[\"wink\", \"happy_wink\", \"surprised\", \"relaxed\", \"unimpressed\", \"raised_lower_eyelid\"],\n",

|

| 194 |

+

" value=\"wink\",\n",

|

| 195 |

+

" description=\"Eye:\", \n",

|

| 196 |

+

")\n",

|

| 197 |

+

"eye_left_slider = ipywidgets.FloatSlider(\n",

|

| 198 |

+

" value=0.0,\n",

|

| 199 |

+

" min=0.0,\n",

|

| 200 |

+

" max=1.0,\n",

|

| 201 |

+

" step=0.01,\n",

|

| 202 |

+

" description=\"Left:\",\n",

|

| 203 |

+

" readout=True,\n",

|

| 204 |

+

" readout_format=\".2f\"\n",

|

| 205 |

+

")\n",

|

| 206 |

+

"eye_right_slider = ipywidgets.FloatSlider(\n",

|

| 207 |

+

" value=0.0,\n",

|

| 208 |

+

" min=0.0,\n",

|

| 209 |

+

" max=1.0,\n",

|

| 210 |

+

" step=0.01,\n",

|

| 211 |

+

" description=\"Right:\",\n",

|

| 212 |

+

" readout=True,\n",

|

| 213 |

+

" readout_format=\".2f\"\n",

|

| 214 |

+

")\n",

|

| 215 |

+

"\n",

|

| 216 |

+

"mouth_dropdown = ipywidgets.Dropdown(\n",

|

| 217 |

+

" options=[\"aaa\", \"iii\", \"uuu\", \"eee\", \"ooo\", \"delta\", \"lowered_corner\", \"raised_corner\", \"smirk\"],\n",

|

| 218 |

+

" value=\"aaa\",\n",

|

| 219 |

+

" description=\"Mouth:\", \n",

|

| 220 |

+

")\n",

|

| 221 |

+

"mouth_left_slider = ipywidgets.FloatSlider(\n",

|

| 222 |

+

" value=0.0,\n",

|

| 223 |

+

" min=0.0,\n",

|

| 224 |

+

" max=1.0,\n",

|

| 225 |

+

" step=0.01,\n",

|

| 226 |

+

" description=\"Value:\",\n",

|

| 227 |

+

" readout=True,\n",

|

| 228 |

+

" readout_format=\".2f\"\n",

|

| 229 |

+

")\n",

|

| 230 |

+

"mouth_right_slider = ipywidgets.FloatSlider(\n",

|

| 231 |

+

" value=0.0,\n",

|

| 232 |

+

" min=0.0,\n",

|

| 233 |

+

" max=1.0,\n",

|

| 234 |

+

" step=0.01,\n",

|

| 235 |

+

" description=\" \",\n",

|

| 236 |

+

" readout=True,\n",

|

| 237 |

+

" readout_format=\".2f\",\n",

|

| 238 |

+

" disabled=True,\n",

|

| 239 |

+

")\n",

|

| 240 |

+

"\n",

|

| 241 |

+

"def update_mouth_sliders(change):\n",

|

| 242 |

+

" if mouth_dropdown.value == \"lowered_corner\" or mouth_dropdown.value == \"raised_corner\":\n",

|

| 243 |

+

" mouth_left_slider.description = \"Left:\"\n",

|

| 244 |

+

" mouth_right_slider.description = \"Right:\"\n",

|

| 245 |

+

" mouth_right_slider.disabled = False\n",

|

| 246 |

+

" else:\n",

|

| 247 |

+

" mouth_left_slider.description = \"Value:\"\n",

|

| 248 |

+

" mouth_right_slider.description = \" \"\n",

|

| 249 |

+

" mouth_right_slider.disabled = True\n",

|

| 250 |

+

"\n",

|

| 251 |

+

"mouth_dropdown.observe(update_mouth_sliders, names='value')\n",

|

| 252 |

+

"\n",

|

| 253 |

+

"iris_small_left_slider = ipywidgets.FloatSlider(\n",

|

| 254 |

+

" value=0.0,\n",

|

| 255 |

+

" min=0.0,\n",

|

| 256 |

+

" max=1.0,\n",

|

| 257 |

+

" step=0.01,\n",

|

| 258 |

+

" description=\"Left:\",\n",

|

| 259 |

+

" readout=True,\n",

|

| 260 |

+

" readout_format=\".2f\"\n",

|

| 261 |

+

")\n",

|

| 262 |

+

"iris_small_right_slider = ipywidgets.FloatSlider(\n",

|

| 263 |

+

" value=0.0,\n",

|

| 264 |

+

" min=0.0,\n",

|

| 265 |

+

" max=1.0,\n",

|

| 266 |

+

" step=0.01,\n",

|

| 267 |

+

" description=\"Right:\",\n",

|

| 268 |

+

" readout=True,\n",

|

| 269 |

+

" readout_format=\".2f\", \n",

|

| 270 |

+

")\n",

|

| 271 |

+

"iris_rotation_x_slider = ipywidgets.FloatSlider(\n",

|

| 272 |

+

" value=0.0,\n",

|

| 273 |

+

" min=-1.0,\n",

|

| 274 |

+

" max=1.0,\n",

|

| 275 |

+

" step=0.01,\n",

|

| 276 |

+

" description=\"X-axis:\",\n",

|

| 277 |

+

" readout=True,\n",

|

| 278 |

+

" readout_format=\".2f\"\n",

|

| 279 |

+

")\n",

|

| 280 |

+

"iris_rotation_y_slider = ipywidgets.FloatSlider(\n",

|

| 281 |

+

" value=0.0,\n",

|

| 282 |

+

" min=-1.0,\n",

|

| 283 |

+

" max=1.0,\n",

|

| 284 |

+

" step=0.01,\n",

|

| 285 |

+

" description=\"Y-axis:\",\n",

|

| 286 |

+

" readout=True,\n",

|

| 287 |

+

" readout_format=\".2f\", \n",

|

| 288 |

+

")\n",

|

| 289 |

+

"\n",

|

| 290 |

+

"head_x_slider = ipywidgets.FloatSlider(\n",

|

| 291 |

+

" value=0.0,\n",

|

| 292 |

+

" min=-1.0,\n",

|

| 293 |

+

" max=1.0,\n",

|

| 294 |

+

" step=0.01,\n",

|

| 295 |

+

" description=\"X-axis:\",\n",

|

| 296 |

+

" readout=True,\n",

|

| 297 |

+

" readout_format=\".2f\"\n",

|

| 298 |

+

")\n",

|

| 299 |

+

"head_y_slider = ipywidgets.FloatSlider(\n",

|

| 300 |

+

" value=0.0,\n",

|

| 301 |

+

" min=-1.0,\n",

|

| 302 |

+

" max=1.0,\n",

|

| 303 |

+

" step=0.01,\n",

|

| 304 |

+

" description=\"Y-axis:\",\n",

|

| 305 |

+

" readout=True,\n",

|

| 306 |

+

" readout_format=\".2f\", \n",

|

| 307 |

+

")\n",

|

| 308 |

+

"neck_z_slider = ipywidgets.FloatSlider(\n",

|

| 309 |

+

" value=0.0,\n",

|

| 310 |

+

" min=-1.0,\n",

|

| 311 |

+

" max=1.0,\n",

|

| 312 |

+

" step=0.01,\n",

|

| 313 |

+

" description=\"Z-axis:\",\n",

|

| 314 |

+

" readout=True,\n",

|

| 315 |

+

" readout_format=\".2f\", \n",

|

| 316 |

+

")\n",

|

| 317 |

+

"body_y_slider = ipywidgets.FloatSlider(\n",

|

| 318 |

+

" value=0.0,\n",

|

| 319 |

+

" min=-1.0,\n",

|

| 320 |

+

" max=1.0,\n",

|

| 321 |

+

" step=0.01,\n",

|

| 322 |

+

" description=\"Y-axis rotation:\",\n",

|

| 323 |

+

" readout=True,\n",

|

| 324 |

+

" readout_format=\".2f\", \n",

|

| 325 |

+

")\n",

|

| 326 |

+

"body_z_slider = ipywidgets.FloatSlider(\n",

|

| 327 |

+

" value=0.0,\n",

|