Spaces: Running on Zero


First commit from github repo

- .gitattributes +1 -0

- README.md +124 -14

- app.py +470 -0

- assets/a4957-expertc.png +0 -0

- assets/a4957-input.png +0 -0

- assets/a4982-input.png +0 -0

- assets/a4984-input.png +0 -0

- assets/a4985-input.png +0 -0

- assets/a4986-input.png +0 -0

- assets/a4988-input.png +0 -0

- assets/a4990-input.png +0 -0

- assets/a4993-input.png +0 -0

- assets/a4994-input.png +0 -0

- assets/a4996-input.png +0 -0

- assets/a4998-input.png +0 -0

- assets/a5000-input.png +0 -0

- assets/architecture-overview.png +0 -0

- assets/thumbnail.png +0 -0

- configs/mit5k_dpe_config.yaml +96 -0

- configs/mit5k_upe_config.yaml +90 -0

- data/datasets.py +109 -0

- data/image_transformations.py +61 -0

- mit5k_ids_filepath/dpe/images_test.txt +50 -0

- mit5k_ids_filepath/dpe/images_train.txt +62 -0

- mit5k_ids_filepath/dpe/images_valid.txt +2250 -0

- mit5k_ids_filepath/upe_uegan/images_test.txt +500 -0

- mit5k_ids_filepath/upe_uegan/images_train.txt +4500 -0

- models/attention_fusion.py +108 -0

- models/backbone.py +234 -0

- models/bezier_control_point_estimator.py +90 -0

- models/color_naming.py +70 -0

- models/interactive_model.py +125 -0

- models/joost_color_naming.mat +3 -0

- models/model.py +28 -0

- output/a4957-input.png +0 -0

- requirements.txt +33 -0

- scripts/download_checkpoints.sh +9 -0

- scripts/generate_naming_maps.py +29 -0

- test.py +41 -0

- train.py +73 -0

- utils/deltaE.py +125 -0

- utils/evaluator.py +69 -0

- utils/logger.py +21 -0

- utils/setup_criterion.py +26 -0

- utils/setup_optim_scheduler.py +10 -0

- utils/trainer.py +47 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+models/joost_color_naming.mat filter=lfs diff=lfs merge=lfs -text

README.md

CHANGED


@@ -1,14 +1,124 @@


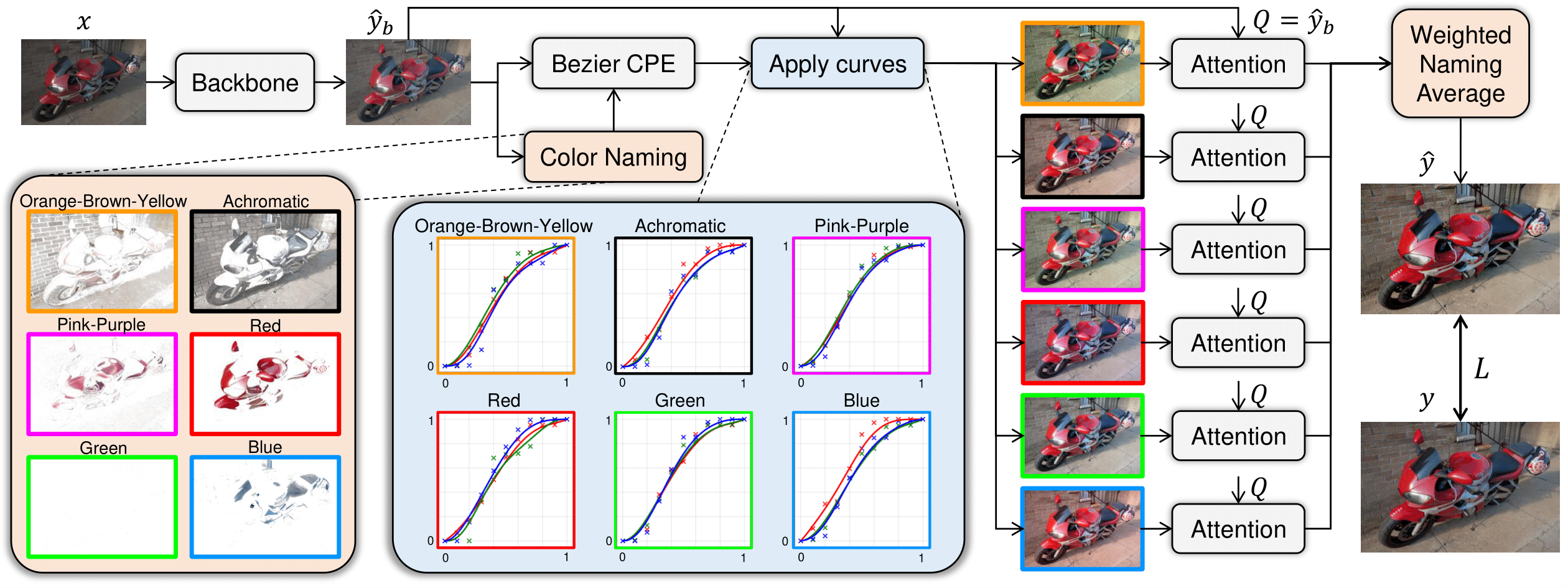

This repository is the official implementation of "NamedCurves: Learned Image Enhancement via Color Naming" @ ECCV24.

[arXiv](https://arxiv.org/abs/2407.09892)
[Project page](https://namedcurves.github.io/)

[David Serrano-Lozano](https://davidserra9.github.io/), [Luis Herranz](http://www.lherranz.org/), [Michael S. Brown](http://www.cse.yorku.ca/~mbrown/) and [Javier Vazquez-Corral](https://www.jvazquez-corral.net/)

## News 🚀
- [Nov24] Code update. We release the inference images for MIT5K-UEGAN.
- [July24] We release the code and pretrained models of our paper.
- [July24] Our paper NamedCurves is accepted to ECCV24!

## TODO:
- torch Dataset object for PPR10K
- Create notebook
- Create gradio demo

## Method

We propose NamedCurves, a learning-based image enhancement technique that decomposes the image into a small set of named colors. Our method learns to globally adjust the image for each specific named color via tone curves, and then combines the resulting images using an attention-based fusion mechanism to mimic spatial editing. Unlike other state-of-the-art methods, NamedCurves is interpretable, because it computes a set of tone curves for each universal color name.
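
The following is a minimal, self-contained sketch of per-color tone-curve editing for a single pixel. It is a simplification, not the NamedCurves implementation. The tone curves are evaluated by piecewise-linear interpolation through 11 evenly spaced control points (the demo sliders in `app.py` also use 11 points per channel, but the model fits Bézier curves). The attention-based fusion is replaced by a plain weighted average over hypothetical color-name probabilities. `tone_curve`, `enhance_pixel` and the weights are illustrative assumptions. The group names follow the six curve groups used in `app.py`.

```python
def tone_curve(value, ys):
    """Evaluate a tone curve defined by len(ys) control points placed at evenly
    spaced inputs in [0, 1], using piecewise-linear interpolation (a stand-in
    for the Bezier curves used by the model)."""
    n = len(ys)
    pos = min(max(value, 0.0), 1.0) * (n - 1)  # position along the control points
    k = min(int(pos), n - 2)                     # index of the left control point
    t = pos - k                                  # fractional offset in [0, 1]
    return ys[k] * (1.0 - t) + ys[k + 1] * t


def enhance_pixel(rgb, curves, weights):
    """Apply each named color's per-channel curves to one RGB pixel and blend
    the results with per-pixel weights (hypothetical replacement for the
    attention-based fusion).

    curves:  {color_name: (ys_red, ys_green, ys_blue)}
    weights: {color_name: weight}, assumed to sum to 1
    """
    out = [0.0, 0.0, 0.0]
    for name, w in weights.items():
        for c in range(3):
            out[c] += w * tone_curve(rgb[c], curves[name][c])
    return tuple(out)


identity = [k / 10 for k in range(11)]            # 11 control points, no change
brighter = [min(1.0, y + 0.2) for y in identity]  # lifts tones by up to 0.2

curves = {
    "achro": (identity, identity, identity),
    "blue": (identity, identity, brighter),       # boost only the blue channel
}
# Made-up color-naming probabilities for a bluish pixel
weights = {"achro": 0.25, "blue": 0.75}
print(enhance_pixel((0.2, 0.3, 0.5), curves, weights))
```

Because each named color owns its own set of curves, inspecting or editing `curves["blue"]` changes only how bluish regions are rendered. This is the kind of interpretability the method description refers to.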

## Data

In this paper, we use two datasets: [MIT-Adobe FiveK](https://data.csail.mit.edu/graphics/fivek/) and [PPR10K](https://github.com/csjliang/PPR10K).

### MIT-Adobe FiveK

The MIT-Adobe FiveK dataset consists of 5,000 photographs taken with SLR cameras by a set of different photographers, covering a broad range of scenes, subjects, and lighting conditions. All of them are in RAW format. Five photography students then adjusted the tone of the photos, each of them retouching all 5,000 photos using Adobe Lightroom.

Following previous works, we use only the expert-C rendition. Obtaining the retouched images requires rendering the RAW files with Adobe Lightroom, so researchers have created different rendered versions of the dataset. In this paper, we use 3 of these versions, DPE, UPE and UEGAN, each named after the method that introduced it. Some methods were evaluated on only some of these versions, and their code and models are not available, so we consider it fairest to compare our results under the same conditions they used. The properties of each version and how to obtain it are described below.

The dataset can be downloaded [here](https://data.csail.mit.edu/graphics/fivek/). After downloading the images, you will need to use Adobe Lightroom to pre-process them according to each version.

- The **DPE** version uses the first 2,250 images of the dataset for training, the following 2,250 for validation and the last 500 for testing. The images are rendered so that the short edge is 512 pixels. Please see the [issue](https://github.com/sjmoran/CURL/issues/20) for detailed instructions.

- The **UPE** version uses the first 4,500 images of the dataset for training and the last 500 for testing. The images are rendered so that the short edge is 512 pixels. Please see the [issue](https://github.com/dvlab-research/DeepUPE/issues/26) for detailed instructions.

- The **UEGAN** version uses the first 4,500 images of the dataset for training and the last 500 for testing. The images are rendered so that the short edge is 512 pixels. The rendered images can be downloaded from [Google Drive](https://drive.google.com/drive/folders/1x-DcqFVoxprzM4KYGl8SUif8sV-57FP3). Please see the [official repository](https://github.com/dvlab-research/DeepUPE) for more information.
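
The split sizes and the 512-pixel short edge listed above are easy to get wrong when preparing the data yourself, so the small sketch below covers both. It assumes FiveK IDs of the form `a0001` to `a5000` in dataset order, as suggested by asset names such as `a4957-input.png`. The ID lists shipped in `mit5k_ids_filepath/` remain the reference. `fivek_ids`, `split_ids` and `short_edge_size` are hypothetical helpers, not part of the repository.

```python
def fivek_ids(n=5000):
    """FiveK image IDs in dataset order (assumed format: a0001 ... a5000)."""
    return [f"a{i:04d}" for i in range(1, n + 1)]


def split_ids(ids, version):
    """Train/validation/test split of the rendered FiveK versions."""
    if version == "dpe":
        # first 2,250 train, next 2,250 validation, last 500 test
        return {"train": ids[:2250], "valid": ids[2250:4500], "test": ids[-500:]}
    if version in ("upe", "uegan"):
        # first 4,500 train, last 500 test
        return {"train": ids[:4500], "test": ids[-500:]}
    raise ValueError(f"unknown version: {version}")


def short_edge_size(width, height, short_edge=512):
    """(width, height) after resizing so the short edge is `short_edge` pixels,
    keeping the aspect ratio (long edge rounded to the nearest pixel)."""
    scale = short_edge / min(width, height)
    return round(width * scale), round(height * scale)


splits = split_ids(fivek_ids(), "dpe")
for name, part in splits.items():
    print(name, part[0], part[-1], len(part))
print(short_edge_size(3000, 2000))
```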

### PPR10K

PPR10K contains 11,161 high-quality RAW portrait photos, organized into 1,681 groups and manually retouched by 3 experts. The dataset can be downloaded from the [official repository](https://github.com/csjliang/PPR10K). We used the 480p images.

## Getting started

### Environment setup

We provide a `requirements.txt` file with all the necessary dependencies except PyTorch and Torchvision. Follow the instructions below to set up the environment.

First, create and activate the Conda environment:

```
conda create -n namedcurves python=3.8
conda activate namedcurves
```

Alternatively, you can set up a virtual environment:

```
python3 -m venv venv
source venv/bin/activate
```

Next, install PyTorch and Torchvision with the versions that match your CUDA and driver setup. Visit the [PyTorch official page](https://pytorch.org/get-started/previous-versions/) for specific installation commands. For example:

```
pip install torch==1.12.0+cu113 torchvision==0.13.0+cu113 --extra-index-url https://download.pytorch.org/whl/cu113
```

Once PyTorch is installed, you can install the remaining dependencies from the `requirements.txt` file:

```
pip install -r requirements.txt
```

Alternatively, you can manually install the required packages:

```
pip install omegaconf matplotlib scipy scikit-image lpips torchmetrics
```
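
Before running anything, you may want to confirm that the packages listed above are installed in the active environment. The following sketch uses only the standard library and works on Python 3.8+, which matches the Conda environment above. It looks up each distribution with `importlib.metadata`. The package list is copied from the manual install command plus PyTorch and Torchvision. `check_packages` is a hypothetical helper, not a script in this repository.

```python
from importlib.metadata import PackageNotFoundError, version

# Distribution names from the manual install command above, plus PyTorch
REQUIRED = ["torch", "torchvision", "omegaconf", "matplotlib", "scipy",
            "scikit-image", "lpips", "torchmetrics"]


def check_packages(names):
    """Return ({name: installed_version}, [names that are not installed])."""
    found, missing = {}, []
    for name in names:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            missing.append(name)
    return found, missing


if __name__ == "__main__":
    found, missing = check_packages(REQUIRED)
    for name, ver in found.items():
        print(f"{name}=={ver}")
    if missing:
        print("missing:", ", ".join(missing))
```

If `torch` is reported as installed, `torch.cuda.is_available()` is a quick way to check that the installed CUDA build works with your driver.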

### Results

We provide our results for the MIT5K dataset with file names of the form `aXXXX_Y_Z.png`, where XXXX is the 4-digit file ID, Y is the PSNR value, and Z is the $\Delta E2000$ color difference of the image. All numeric values are rounded to two decimal places.

| | PSNR | SSIM | $\Delta E2000$ | Images |
| :-------- | :------: | :-------: | :--------------: | :-------: |
| MIT5K | 25.59 | 0.936 | 6.07 | [Link](https://cvcuab-my.sharepoint.com/:f:/g/personal/dserrano_cvc_uab_cat/EijObxqdogJHpNufwKKZE4ABI78-4iQnO78V2mHkzfs07A?e=tVTWAq) |
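
Since every result image encodes its own metrics in the `aXXXX_Y_Z.png` name, per-image scores can be read straight from a directory listing. The sketch below parses that pattern and averages the values. `parse_result_name` and `summarize` are hypothetical helpers. Because the per-image values are rounded to two decimals, their average is only an approximation and is not guaranteed to reproduce the table above.

```python
import re
from pathlib import Path

# aXXXX_Y_Z.png: 4-digit file ID, PSNR and Delta E2000, each with two decimals
RESULT_NAME = re.compile(r"^a(\d{4})_(\d+\.\d{2})_(\d+\.\d{2})\.png$")


def parse_result_name(filename):
    """Return (image_id, psnr, delta_e), or None if the name does not match."""
    match = RESULT_NAME.match(Path(filename).name)
    if match is None:
        return None
    image_id, psnr, delta_e = match.groups()
    return f"a{image_id}", float(psnr), float(delta_e)


def summarize(filenames):
    """Mean PSNR and mean Delta E2000 over the result files that match."""
    parsed = [p for p in map(parse_result_name, filenames) if p is not None]
    if not parsed:
        return None
    n = len(parsed)
    return sum(p[1] for p in parsed) / n, sum(p[2] for p in parsed) / n
```

For example, `summarize(p.name for p in Path("results").iterdir())` would summarize a downloaded results folder, where `results` is whatever local directory you extracted the images into.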

### Pre-trained models

Create a folder inside the repository to store the pre-trained models:

```
cd namedcurves
mkdir pretrained
```

The weights can be found [here](https://github.com/davidserra9/namedcurves/releases/tag/v1.0). Alternatively, you can run:

```
cd namedcurves
bash scripts/download_checkpoints.sh
```

## Inference

The following command takes an image file or a folder of images and saves the results in the specified directory:

```
python test.py --input_path assets/a4957-input.png --output_path output/ --config_path configs/mit5k_upe_config.yaml --model_path pretrained/mit5k_uegan_psnr_25.59.pth
```
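
The command above accepts either a single image or a folder. This page does not show how `test.py` enumerates a folder, so the following is only a sketch of that behavior. `collect_inputs` and `output_path_for` are hypothetical helpers, and the set of accepted extensions is an assumption. Reusing the input file name for the output matches the `output/a4957-input.png` file committed alongside `assets/a4957-input.png`.

```python
from pathlib import Path

# Assumed set of image extensions; test.py may accept a different set
IMAGE_EXTENSIONS = {".png", ".jpg", ".jpeg", ".tif", ".tiff"}


def collect_inputs(input_path):
    """Return a sorted list of image paths for a file or a folder (non-recursive)."""
    path = Path(input_path)
    if path.is_file():
        return [path]
    if path.is_dir():
        return sorted(p for p in path.iterdir()
                      if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS)
    raise FileNotFoundError(f"no such file or directory: {input_path}")


def output_path_for(image_path, output_dir):
    """Place each result in output_dir under the input's file name."""
    return Path(output_dir) / Path(image_path).name
```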

## Training

Modify the configuration files in the `configs` folder and run the following command:

```
python train.py --config configs/mit5k_upe_config.yaml
```

app.py

ADDED


@@ -0,0 +1,470 @@


import argparse
from omegaconf import OmegaConf
import gradio as gr
from PIL import Image
import os
import torch
import numpy as np
import io
import yaml
from huggingface_hub import hf_hub_download
import matplotlib.pyplot as plt
#from gradio_imageslider import ImageSlider

## local code
from models.interactive_model import NamedCurves

def dict2namespace(config):
    # Recursively convert a mapping into an argparse.Namespace for attribute access.
    # Nested OmegaConf sections are DictConfig objects rather than dicts, so they are
    # kept as-is (DictConfig also supports attribute access).
    namespace = argparse.Namespace()
    for key, value in config.items():
        if isinstance(value, dict):
            new_value = dict2namespace(value)
        else:
            new_value = value
        setattr(namespace, key, new_value)
    return namespace

def get_named_curves(control_points):
    # Plot the R, G and B tone curves of one named-color group and return the plot as a
    # PIL image. Uses the module-level `model` and `device` defined below.
    # Sample each channel's curve at 101 evenly spaced inputs in [0, 1]: shape (1, 3, 101, 1)
    linspace = torch.linspace(0, 1, steps=101).unsqueeze(0).unsqueeze(2).repeat(1, 3, 1, 1).to(device)
    outspace = model.bcpe.apply_cubic_bezier(linspace, control_points)

    fig = plt.figure()
    plt.plot(linspace[0, 0, :, 0].cpu().numpy(), outspace[0, 0, :, 0].cpu().numpy(), 'r')
    plt.plot(linspace[0, 1, :, 0].cpu().numpy(), outspace[0, 1, :, 0].cpu().numpy(), 'g')
    plt.plot(linspace[0, 2, :, 0].cpu().numpy(), outspace[0, 2, :, 0].cpu().numpy(), 'b')

    # Control points are stored as (y, x) pairs in the last dimension
    plt.scatter(control_points[0, 0, :, 1].cpu().numpy(), control_points[0, 0, :, 0].cpu().numpy(), c='r', marker='x')
    plt.scatter(control_points[0, 1, :, 1].cpu().numpy(), control_points[0, 1, :, 0].cpu().numpy(), c='g', marker='x')
    plt.scatter(control_points[0, 2, :, 1].cpu().numpy(), control_points[0, 2, :, 0].cpu().numpy(), c='b', marker='x')

    plt.xlim(0, 1)
    plt.ylim(0, 1)
    plt.grid()

    # Render the figure to an in-memory PNG and return it as a PIL image
    img_buf = io.BytesIO()
    plt.savefig(img_buf, format='png', bbox_inches='tight', dpi=300)
    plt.close(fig)
    return Image.open(img_buf)

# Download the MIT5K (UEGAN) checkpoint from the Hugging Face Hub into the working directory
hf_hub_download(repo_id="davidserra9/NamedCurves", filename="mit5k_uegan_psnr_25.59.pth", local_dir="./")

CONFIG = "configs/mit5k_upe_config.yaml"
model_pt = "mit5k_uegan_psnr_25.59.pth"

# parse config file
config = OmegaConf.load(CONFIG)

config = dict2namespace(config)

device = torch.device("cuda") if torch.cuda.is_available() else torch.device("cpu")
model = NamedCurves(config.model).to(device)
# map_location lets a checkpoint saved on a GPU also load on a CPU-only machine
model.load_state_dict(torch.load(model_pt, map_location=device)["model_state_dict"])

def load_img(filename, norm=True):
    img = np.array(Image.open(filename).convert("RGB"))
    if norm:
        img = img / 255.
        img = img.astype(np.float32)
    return img

def process_img(image):
    # Convert the PIL input to a 1x3xHxW float32 tensor in [0, 1]
    img = np.array(image)
    img = img / 255.
    img = img.astype(np.float32)
    y = torch.tensor(img).permute(2,0,1).unsqueeze(0).to(device)

    with torch.no_grad():
        enhanced_img, control_points = model(y, return_curves=True)

    # One tone-curve plot per named-color group
    img_curves = [get_named_curves(control_points_i) for control_points_i in control_points]

    enhanced_img = enhanced_img.squeeze().permute(1,2,0).clamp_(0, 1).cpu().detach().numpy()
    enhanced_img = np.clip(enhanced_img, 0. , 1.)

    enhanced_img = (enhanced_img * 255.0).round().astype(np.uint8) # float32 to uint8
    # y-values of the control points of each group, shape (3 channels, 11 points)
    oby_points = control_points[0][0, :, :, 0].detach().cpu().numpy()
    achr_points = control_points[1][0, :, :, 0].detach().cpu().numpy()
    pp_points = control_points[2][0, :, :, 0].detach().cpu().numpy()
    red_points = control_points[3][0, :, :, 0].detach().cpu().numpy()
    green_points = control_points[4][0, :, :, 0].detach().cpu().numpy()
    blue_points = control_points[5][0, :, :, 0].detach().cpu().numpy()

    # Flatten into the order of the gradio outputs: for each group, its curve plot followed
    # by the (red, green, blue) values of control points p0 to p10; the enhanced image goes last
    outputs = []
    for curve_img, points in zip(img_curves, [oby_points, achr_points, pp_points, red_points, green_points, blue_points]):
        outputs.append(curve_img)
        for p in range(11):
            outputs.extend(points[:, p])
    outputs.append(Image.fromarray(enhanced_img))
    return tuple(outputs)

| 94 |

+

def process_img_with_sliders(image, oby_red_p0, oby_green_p0, oby_blue_p0, oby_red_p1, oby_green_p1, oby_blue_p1, oby_red_p2, oby_green_p2, oby_blue_p2, oby_red_p3, oby_green_p3, oby_blue_p3, oby_red_p4, oby_green_p4, oby_blue_p4, oby_red_p5, oby_green_p5, oby_blue_p5, oby_red_p6, oby_green_p6, oby_blue_p6, oby_red_p7, oby_green_p7, oby_blue_p7, oby_red_p8, oby_green_p8, oby_blue_p8, oby_red_p9, oby_green_p9, oby_blue_p9, oby_red_p10, oby_green_p10, oby_blue_p10,

|

| 95 |

+

achro_red_p0, achro_green_p0, achro_blue_p0, achro_red_p1, achro_green_p1, achro_blue_p1, achro_red_p2, achro_green_p2, achro_blue_p2, achro_red_p3, achro_green_p3, achro_blue_p3, achro_red_p4, achro_green_p4, achro_blue_p4, achro_red_p5, achro_green_p5, achro_blue_p5, achro_red_p6, achro_green_p6, achro_blue_p6, achro_red_p7, achro_green_p7, achro_blue_p7, achro_red_p8, achro_green_p8, achro_blue_p8, achro_red_p9, achro_green_p9, achro_blue_p9, achro_red_p10, achro_green_p10, achro_blue_p10,

|

| 96 |

+

pp_red_p0, pp_green_p0, pp_blue_p0, pp_red_p1, pp_green_p1, pp_blue_p1, pp_red_p2, pp_green_p2, pp_blue_p2, pp_red_p3, pp_green_p3, pp_blue_p3, pp_red_p4, pp_green_p4, pp_blue_p4, pp_red_p5, pp_green_p5, pp_blue_p5, pp_red_p6, pp_green_p6, pp_blue_p6, pp_red_p7, pp_green_p7, pp_blue_p7, pp_red_p8, pp_green_p8, pp_blue_p8, pp_red_p9, pp_green_p9, pp_blue_p9, pp_red_p10, pp_green_p10, pp_blue_p10,

|

| 97 |

+

red_red_p0, red_green_p0, red_blue_p0, red_red_p1, red_green_p1, red_blue_p1, red_red_p2, red_green_p2, red_blue_p2, red_red_p3, red_green_p3, red_blue_p3, red_red_p4, red_green_p4, red_blue_p4, red_red_p5, red_green_p5, red_blue_p5, red_red_p6, red_green_p6, red_blue_p6, red_red_p7, red_green_p7, red_blue_p7, red_red_p8, red_green_p8, red_blue_p8, red_red_p9, red_green_p9, red_blue_p9, red_red_p10, red_green_p10, red_blue_p10,

|

| 98 |

+

green_red_p0, green_green_p0, green_blue_p0, green_red_p1, green_green_p1, green_blue_p1, green_red_p2, green_green_p2, green_blue_p2, green_red_p3, green_green_p3, green_blue_p3, green_red_p4, green_green_p4, green_blue_p4, green_red_p5, green_green_p5, green_blue_p5, green_red_p6, green_green_p6, green_blue_p6, green_red_p7, green_green_p7, green_blue_p7, green_red_p8, green_green_p8, green_blue_p8, green_red_p9, green_green_p9, green_blue_p9, green_red_p10, green_green_p10, green_blue_p10,

|

| 99 |

+

blue_red_p0, blue_green_p0, blue_blue_p0, blue_red_p1, blue_green_p1, blue_blue_p1, blue_red_p2, blue_green_p2, blue_blue_p2, blue_red_p3, blue_green_p3, blue_blue_p3, blue_red_p4, blue_green_p4, blue_blue_p4, blue_red_p5, blue_green_p5, blue_blue_p5, blue_red_p6, blue_green_p6, blue_blue_p6, blue_red_p7, blue_green_p7, blue_blue_p7, blue_red_p8, blue_green_p8, blue_blue_p8, blue_red_p9, blue_green_p9, blue_blue_p9, blue_red_p10, blue_green_p10, blue_blue_p10,

|

| 100 |

+

):

|

| 101 |

+

|

| 102 |

+

x = np.linspace(0, 1, 11)

|

| 103 |

+

oby_r_y = [float(oby_red_p0), float(oby_red_p1), float(oby_red_p2), float(oby_red_p3), float(oby_red_p4), float(oby_red_p5), float(oby_red_p6), float(oby_red_p7), float(oby_red_p8), float(oby_red_p9), float(oby_red_p10)]

|

| 104 |

+

oby_g_y = [float(oby_green_p0), float(oby_green_p1), float(oby_green_p2), float(oby_green_p3), float(oby_green_p4), float(oby_green_p5), float(oby_green_p6), float(oby_green_p7), float(oby_green_p8), float(oby_green_p9), float(oby_green_p10)]

|

| 105 |

+

oby_b_y = [float(oby_blue_p0), float(oby_blue_p1), float(oby_blue_p2), float(oby_blue_p3), float(oby_blue_p4), float(oby_blue_p5), float(oby_blue_p6), float(oby_blue_p7), float(oby_blue_p8), float(oby_blue_p9), float(oby_blue_p10)]

|

| 106 |

+

achro_r_y = [float(achro_red_p0), float(achro_red_p1), float(achro_red_p2), float(achro_red_p3), float(achro_red_p4), float(achro_red_p5), float(achro_red_p6), float(achro_red_p7), float(achro_red_p8), float(achro_red_p9), float(achro_red_p10)]

|

| 107 |

+

achro_g_y = [float(achro_green_p0), float(achro_green_p1), float(achro_green_p2), float(achro_green_p3), float(achro_green_p4), float(achro_green_p5), float(achro_green_p6), float(achro_green_p7), float(achro_green_p8), float(achro_green_p9), float(achro_green_p10)]

|

| 108 |

+

achro_b_y = [float(achro_blue_p0), float(achro_blue_p1), float(achro_blue_p2), float(achro_blue_p3), float(achro_blue_p4), float(achro_blue_p5), float(achro_blue_p6), float(achro_blue_p7), float(achro_blue_p8), float(achro_blue_p9), float(achro_blue_p10)]

|

| 109 |

+

pp_r_y = [float(pp_red_p0), float(pp_red_p1), float(pp_red_p2), float(pp_red_p3), float(pp_red_p4), float(pp_red_p5), float(pp_red_p6), float(pp_red_p7), float(pp_red_p8), float(pp_red_p9), float(pp_red_p10)]

|

| 110 |

+

pp_g_y = [float(pp_green_p0), float(pp_green_p1), float(pp_green_p2), float(pp_green_p3), float(pp_green_p4), float(pp_green_p5), float(pp_green_p6), float(pp_green_p7), float(pp_green_p8), float(pp_green_p9), float(pp_green_p10)]

|

| 111 |

+

pp_b_y = [float(pp_blue_p0), float(pp_blue_p1), float(pp_blue_p2), float(pp_blue_p3), float(pp_blue_p4), float(pp_blue_p5), float(pp_blue_p6), float(pp_blue_p7), float(pp_blue_p8), float(pp_blue_p9), float(pp_blue_p10)]

|

| 112 |

+

red_r_y = [float(red_red_p0), float(red_red_p1), float(red_red_p2), float(red_red_p3), float(red_red_p4), float(red_red_p5), float(red_red_p6), float(red_red_p7), float(red_red_p8), float(red_red_p9), float(red_red_p10)]

|

| 113 |

+

red_g_y = [float(red_green_p0), float(red_green_p1), float(red_green_p2), float(red_green_p3), float(red_green_p4), float(red_green_p5), float(red_green_p6), float(red_green_p7), float(red_green_p8), float(red_green_p9), float(red_green_p10)]

|

| 114 |

+

red_b_y = [float(red_blue_p0), float(red_blue_p1), float(red_blue_p2), float(red_blue_p3), float(red_blue_p4), float(red_blue_p5), float(red_blue_p6), float(red_blue_p7), float(red_blue_p8), float(red_blue_p9), float(red_blue_p10)]

|

| 115 |

+

green_r_y = [float(green_red_p0), float(green_red_p1), float(green_red_p2), float(green_red_p3), float(green_red_p4), float(green_red_p5), float(green_red_p6), float(green_red_p7), float(green_red_p8), float(green_red_p9), float(green_red_p10)]

|

| 116 |

+

green_g_y = [float(green_green_p0), float(green_green_p1), float(green_green_p2), float(green_green_p3), float(green_green_p4), float(green_green_p5), float(green_green_p6), float(green_green_p7), float(green_green_p8), float(green_green_p9), float(green_green_p10)]

|

| 117 |

+

green_b_y = [float(green_blue_p0), float(green_blue_p1), float(green_blue_p2), float(green_blue_p3), float(green_blue_p4), float(green_blue_p5), float(green_blue_p6), float(green_blue_p7), float(green_blue_p8), float(green_blue_p9), float(green_blue_p10)]

|

| 118 |

+

blue_r_y = [float(blue_red_p0), float(blue_red_p1), float(blue_red_p2), float(blue_red_p3), float(blue_red_p4), float(blue_red_p5), float(blue_red_p6), float(blue_red_p7), float(blue_red_p8), float(blue_red_p9), float(blue_red_p10)]

|

| 119 |

+

blue_g_y = [float(blue_green_p0), float(blue_green_p1), float(blue_green_p2), float(blue_green_p3), float(blue_green_p4), float(blue_green_p5), float(blue_green_p6), float(blue_green_p7), float(blue_green_p8), float(blue_green_p9), float(blue_green_p10)]

|

| 120 |

+

blue_b_y = [float(blue_blue_p0), float(blue_blue_p1), float(blue_blue_p2), float(blue_blue_p3), float(blue_blue_p4), float(blue_blue_p5), float(blue_blue_p6), float(blue_blue_p7), float(blue_blue_p8), float(blue_blue_p9), float(blue_blue_p10)]

|

| 121 |

+

|

| 122 |

+

    oby_y = torch.concatenate([torch.tensor(np.array([oby_r_y, x]).T).unsqueeze(0), torch.tensor(np.array([oby_g_y, x]).T).unsqueeze(0), torch.tensor(np.array([oby_b_y, x]).T).unsqueeze(0)], dim=0).unsqueeze(0).to(device)
    achro_y = torch.concatenate([torch.tensor(np.array([achro_r_y, x]).T).unsqueeze(0), torch.tensor(np.array([achro_g_y, x]).T).unsqueeze(0), torch.tensor(np.array([achro_b_y, x]).T).unsqueeze(0)], dim=0).unsqueeze(0).to(device)
    pp_y = torch.concatenate([torch.tensor(np.array([pp_r_y, x]).T).unsqueeze(0), torch.tensor(np.array([pp_g_y, x]).T).unsqueeze(0), torch.tensor(np.array([pp_b_y, x]).T).unsqueeze(0)], dim=0).unsqueeze(0).to(device)
    red_y = torch.concatenate([torch.tensor(np.array([red_r_y, x]).T).unsqueeze(0), torch.tensor(np.array([red_g_y, x]).T).unsqueeze(0), torch.tensor(np.array([red_b_y, x]).T).unsqueeze(0)], dim=0).unsqueeze(0).to(device)
    green_y = torch.concatenate([torch.tensor(np.array([green_r_y, x]).T).unsqueeze(0), torch.tensor(np.array([green_g_y, x]).T).unsqueeze(0), torch.tensor(np.array([green_b_y, x]).T).unsqueeze(0)], dim=0).unsqueeze(0).to(device)
    blue_y = torch.concatenate([torch.tensor(np.array([blue_r_y, x]).T).unsqueeze(0), torch.tensor(np.array([blue_g_y, x]).T).unsqueeze(0), torch.tensor(np.array([blue_b_y, x]).T).unsqueeze(0)], dim=0).unsqueeze(0).to(device)

    control_points = [oby_y, achro_y, pp_y, red_y, green_y, blue_y]

    img = np.array(image)
    img = img / 255.
    img = img.astype(np.float32)
    y = torch.tensor(img).permute(2, 0, 1).unsqueeze(0).to(device)

    with torch.no_grad():
        enhanced_img, control_points = model(y, return_curves=True, control_points=control_points)

    img_curves = [get_named_curves(control_points_i) for control_points_i in control_points]

    enhanced_img = enhanced_img.squeeze().permute(1, 2, 0).clamp_(0, 1).cpu().detach().numpy()
    enhanced_img = np.clip(enhanced_img, 0.0, 1.0)

    enhanced_img = (enhanced_img * 255.0).round().astype(np.uint8)  # float32 to uint8
    oby_points = control_points[0][0, :, :, 0].detach().cpu().numpy()
    achr_points = control_points[1][0, :, :, 0].detach().cpu().numpy()
    pp_points = control_points[2][0, :, :, 0].detach().cpu().numpy()
    red_points = control_points[3][0, :, :, 0].detach().cpu().numpy()
    green_points = control_points[4][0, :, :, 0].detach().cpu().numpy()
    blue_points = control_points[5][0, :, :, 0].detach().cpu().numpy()

    # Return values, in order: for each color name, its curve plot followed by
    # the 11 control points x 3 channels listed point by point
    # (P0 R, P0 G, P0 B, P1 R, ...); the enhanced image comes last.
    outputs = []
    named_points = [oby_points, achr_points, pp_points, red_points, green_points, blue_points]
    for i, points in enumerate(named_points):
        outputs.append(img_curves[i])
        for j in range(11):  # control point index (P0..P10)
            for c in range(3):  # channel index (R, G, B)
                outputs.append(points[c, j])
    outputs.append(Image.fromarray(enhanced_img))
    return tuple(outputs)
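
# Hypothetical helpers (not part of the NamedCurves repository): a minimal
# NumPy sketch of the slider <-> control-point round trip performed above.
# Assumptions: each color name has 3 channels x N control points, coordinate 0
# holds the slider (y) value, and coordinate 1 holds the shared x grid `x`.
import numpy as np


def pack_named_curve(r_y, g_y, b_y, x):
    """Stack per-channel y values with the shared x grid into shape (1, 3, N, 2),
    mirroring the torch.concatenate(...).unsqueeze(0) lines above."""
    channels = [np.array([ch_y, x], dtype=np.float64).T for ch_y in (r_y, g_y, b_y)]
    return np.stack(channels, axis=0)[np.newaxis]


def flatten_points(points):
    """Flatten a (3, N) array point by point: P0 R, G, B, then P1 R, G, B, ..."""
    return [float(points[c, j]) for j in range(points.shape[1]) for c in range(points.shape[0])]


# Usage (assuming x = np.linspace(0, 1, 11), which is an illustrative choice):
# packed = pack_named_curve(r_y, g_y, b_y, x)
# flatten_points(packed[0, :, :, 0]) lists the y values in the return order used above.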

title = "NamedCurves🌈🤗"
description = '''
'''

article = "<p style='text-align: center'><a href='https://github.com/davidserra9/namedcurves' target='_blank'>NamedCurves: Learned Image Enhancement via Color Naming</a></p>"

# Example images
#examples = [['assets/a4957-input.png']]

css = """
.image-frame img, .image-container img {
    width: auto;
    height: auto;
    max-width: none;
}
"""
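
# Hypothetical helper (not part of the original app): the interface below
# declares 6 color names x 3 channels x 11 control points = 198 sliders by hand.
# This sketch only enumerates the (variable name, label) pairs that naming
# scheme follows; a loop over it could create each
# gr.Slider(0, 1, label=label, interactive=True) programmatically instead.
COLOR_PREFIXES = ["oby", "achro", "pp", "red", "green", "blue"]
CHANNELS = [("red", "R"), ("green", "G"), ("blue", "B")]
NUM_POINTS = 11


def slider_specs():
    """Return (variable_name, label) pairs, e.g. ("oby_red_p0", "R-P0")."""
    return [
        (f"{prefix}_{channel}_p{i}", f"{short}-P{i}")
        for prefix in COLOR_PREFIXES
        for channel, short in CHANNELS
        for i in range(NUM_POINTS)
    ]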

with gr.Blocks() as demo:
    gr.Markdown("""
    ## [NamedCurves](https://namedcurves.github.io/): Learned Image Enhancement via Color Naming
    [David Serrano-Lozano](https://davidserra9.github.io/), [Luis Herranz](https://www.lherranz.org/), [Michael S. Brown](https://www.eecs.yorku.ca/~mbrown/), [Javier Vazquez-Corral](https://jvazquezcorral.github.io/)
    Computer Vision Center, Universitat Autònoma de Barcelona, Universidad Autónoma de Madrid, York University

    **NamedCurves decomposes an image into a small set of named colors and enhances them using a learned set of tone curves.** This decomposition makes the model more interactive: the user can modify the tone curves assigned to each color name to manipulate only that color in the image.

    * Upload an image and click "Run" to enhance it automatically.
    * Then, adjust the tone curves of each color name to tailor the retouched version to your liking by moving the sliders that correspond to the control points defining each curve. Note that, for simplicity, we show the intensity values of the control points instead of the RGB values of each control point.

    """)
    with gr.Row():
        with gr.Column():
            image = gr.Image(type="pil", label="Input")
            run_btn = gr.Button("Run")
            examples = gr.Examples(['assets/a4957-input.png', 'assets/a4996-input.png', 'assets/a4998-input.png', 'assets/a5000-input.png',
                                    'assets/a4986-input.png', 'assets/a4988-input.png', 'assets/a4990-input.png', 'assets/a4993-input.png'], inputs=[image])

            with gr.Tabs() as input_tabs:
                with gr.Tab(label="Orange", id=0) as oby_curves_tab:
                    oby_curves = gr.Image(label="Orange-Brown-Yellow Curves", type="pil")
                    with gr.Tabs() as channel_tabs:
                        with gr.Tab(label="R", id=0) as oby_red_curves_tab:
                            oby_red_p0 = gr.Slider(0, 1, label="R-P0", interactive=True)
                            oby_red_p1 = gr.Slider(0, 1, label="R-P1", interactive=True)
                            oby_red_p2 = gr.Slider(0, 1, label="R-P2", interactive=True)
                            oby_red_p3 = gr.Slider(0, 1, label="R-P3", interactive=True)
                            oby_red_p4 = gr.Slider(0, 1, label="R-P4", interactive=True)
                            oby_red_p5 = gr.Slider(0, 1, label="R-P5", interactive=True)
                            oby_red_p6 = gr.Slider(0, 1, label="R-P6", interactive=True)
                            oby_red_p7 = gr.Slider(0, 1, label="R-P7", interactive=True)
                            oby_red_p8 = gr.Slider(0, 1, label="R-P8", interactive=True)
                            oby_red_p9 = gr.Slider(0, 1, label="R-P9", interactive=True)
                            oby_red_p10 = gr.Slider(0, 1, label="R-P10", interactive=True)
                        with gr.Tab(label="G", id=1) as oby_green_curves_tab:
                            oby_green_p0 = gr.Slider(0, 1, label="G-P0", interactive=True)
                            oby_green_p1 = gr.Slider(0, 1, label="G-P1", interactive=True)
                            oby_green_p2 = gr.Slider(0, 1, label="G-P2", interactive=True)
                            oby_green_p3 = gr.Slider(0, 1, label="G-P3", interactive=True)
                            oby_green_p4 = gr.Slider(0, 1, label="G-P4", interactive=True)
                            oby_green_p5 = gr.Slider(0, 1, label="G-P5", interactive=True)
                            oby_green_p6 = gr.Slider(0, 1, label="G-P6", interactive=True)
                            oby_green_p7 = gr.Slider(0, 1, label="G-P7", interactive=True)
                            oby_green_p8 = gr.Slider(0, 1, label="G-P8", interactive=True)
                            oby_green_p9 = gr.Slider(0, 1, label="G-P9", interactive=True)
                            oby_green_p10 = gr.Slider(0, 1, label="G-P10", interactive=True)
                        with gr.Tab(label="B", id=2) as oby_blue_curves_tab:
                            oby_blue_p0 = gr.Slider(0, 1, label="B-P0", interactive=True)
                            oby_blue_p1 = gr.Slider(0, 1, label="B-P1", interactive=True)
                            oby_blue_p2 = gr.Slider(0, 1, label="B-P2", interactive=True)
                            oby_blue_p3 = gr.Slider(0, 1, label="B-P3", interactive=True)
                            oby_blue_p4 = gr.Slider(0, 1, label="B-P4", interactive=True)
                            oby_blue_p5 = gr.Slider(0, 1, label="B-P5", interactive=True)
                            oby_blue_p6 = gr.Slider(0, 1, label="B-P6", interactive=True)
                            oby_blue_p7 = gr.Slider(0, 1, label="B-P7", interactive=True)
                            oby_blue_p8 = gr.Slider(0, 1, label="B-P8", interactive=True)
                            oby_blue_p9 = gr.Slider(0, 1, label="B-P9", interactive=True)
                            oby_blue_p10 = gr.Slider(0, 1, label="B-P10", interactive=True)

                with gr.Tab(label="Achr", id=1) as achro_curves_tab:
                    achro_curves = gr.Image(label="Achromatic Curves", type="pil")
                    with gr.Tabs() as channel_tabs:
                        with gr.Tab(label="R", id=0) as achro_red_curves_tab:
                            achro_red_p0 = gr.Slider(0, 1, label="R-P0", interactive=True)
                            achro_red_p1 = gr.Slider(0, 1, label="R-P1", interactive=True)
                            achro_red_p2 = gr.Slider(0, 1, label="R-P2", interactive=True)
                            achro_red_p3 = gr.Slider(0, 1, label="R-P3", interactive=True)
                            achro_red_p4 = gr.Slider(0, 1, label="R-P4", interactive=True)
                            achro_red_p5 = gr.Slider(0, 1, label="R-P5", interactive=True)
                            achro_red_p6 = gr.Slider(0, 1, label="R-P6", interactive=True)
                            achro_red_p7 = gr.Slider(0, 1, label="R-P7", interactive=True)
                            achro_red_p8 = gr.Slider(0, 1, label="R-P8", interactive=True)
                            achro_red_p9 = gr.Slider(0, 1, label="R-P9", interactive=True)
                            achro_red_p10 = gr.Slider(0, 1, label="R-P10", interactive=True)
                        with gr.Tab(label="G", id=1) as achro_green_curves_tab:
                            achro_green_p0 = gr.Slider(0, 1, label="G-P0", interactive=True)
                            achro_green_p1 = gr.Slider(0, 1, label="G-P1", interactive=True)
                            achro_green_p2 = gr.Slider(0, 1, label="G-P2", interactive=True)
                            achro_green_p3 = gr.Slider(0, 1, label="G-P3", interactive=True)
                            achro_green_p4 = gr.Slider(0, 1, label="G-P4", interactive=True)
                            achro_green_p5 = gr.Slider(0, 1, label="G-P5", interactive=True)
                            achro_green_p6 = gr.Slider(0, 1, label="G-P6", interactive=True)
                            achro_green_p7 = gr.Slider(0, 1, label="G-P7", interactive=True)
                            achro_green_p8 = gr.Slider(0, 1, label="G-P8", interactive=True)
                            achro_green_p9 = gr.Slider(0, 1, label="G-P9", interactive=True)
                            achro_green_p10 = gr.Slider(0, 1, label="G-P10", interactive=True)
                        with gr.Tab(label="B", id=2) as achro_blue_curves_tab:
                            achro_blue_p0 = gr.Slider(0, 1, label="B-P0", interactive=True)
                            achro_blue_p1 = gr.Slider(0, 1, label="B-P1", interactive=True)
                            achro_blue_p2 = gr.Slider(0, 1, label="B-P2", interactive=True)
                            achro_blue_p3 = gr.Slider(0, 1, label="B-P3", interactive=True)
                            achro_blue_p4 = gr.Slider(0, 1, label="B-P4", interactive=True)
                            achro_blue_p5 = gr.Slider(0, 1, label="B-P5", interactive=True)
                            achro_blue_p6 = gr.Slider(0, 1, label="B-P6", interactive=True)
                            achro_blue_p7 = gr.Slider(0, 1, label="B-P7", interactive=True)
                            achro_blue_p8 = gr.Slider(0, 1, label="B-P8", interactive=True)
                            achro_blue_p9 = gr.Slider(0, 1, label="B-P9", interactive=True)
                            achro_blue_p10 = gr.Slider(0, 1, label="B-P10", interactive=True)

                with gr.Tab(label="Pink", id=2) as pink_purple_curves_tab:
                    pink_purple_curves = gr.Image(label="Pink-Purple Curves", type="pil")
                    with gr.Tabs() as channel_tabs:
                        with gr.Tab(label="R", id=0) as pp_red_curves_tab:
                            pp_red_p0 = gr.Slider(0, 1, label="R-P0", interactive=True)
                            pp_red_p1 = gr.Slider(0, 1, label="R-P1", interactive=True)
                            pp_red_p2 = gr.Slider(0, 1, label="R-P2", interactive=True)
                            pp_red_p3 = gr.Slider(0, 1, label="R-P3", interactive=True)
                            pp_red_p4 = gr.Slider(0, 1, label="R-P4", interactive=True)
                            pp_red_p5 = gr.Slider(0, 1, label="R-P5", interactive=True)
                            pp_red_p6 = gr.Slider(0, 1, label="R-P6", interactive=True)
                            pp_red_p7 = gr.Slider(0, 1, label="R-P7", interactive=True)
                            pp_red_p8 = gr.Slider(0, 1, label="R-P8", interactive=True)
                            pp_red_p9 = gr.Slider(0, 1, label="R-P9", interactive=True)
                            pp_red_p10 = gr.Slider(0, 1, label="R-P10", interactive=True)
                        with gr.Tab(label="G", id=1) as pp_green_curves_tab:
                            pp_green_p0 = gr.Slider(0, 1, label="G-P0", interactive=True)
                            pp_green_p1 = gr.Slider(0, 1, label="G-P1", interactive=True)
                            pp_green_p2 = gr.Slider(0, 1, label="G-P2", interactive=True)
                            pp_green_p3 = gr.Slider(0, 1, label="G-P3", interactive=True)
                            pp_green_p4 = gr.Slider(0, 1, label="G-P4", interactive=True)
                            pp_green_p5 = gr.Slider(0, 1, label="G-P5", interactive=True)
                            pp_green_p6 = gr.Slider(0, 1, label="G-P6", interactive=True)
                            pp_green_p7 = gr.Slider(0, 1, label="G-P7", interactive=True)
                            pp_green_p8 = gr.Slider(0, 1, label="G-P8", interactive=True)
                            pp_green_p9 = gr.Slider(0, 1, label="G-P9", interactive=True)
                            pp_green_p10 = gr.Slider(0, 1, label="G-P10", interactive=True)
                        with gr.Tab(label="B", id=2) as pp_blue_curves_tab:
                            pp_blue_p0 = gr.Slider(0, 1, label="B-P0", interactive=True)
                            pp_blue_p1 = gr.Slider(0, 1, label="B-P1", interactive=True)
                            pp_blue_p2 = gr.Slider(0, 1, label="B-P2", interactive=True)
                            pp_blue_p3 = gr.Slider(0, 1, label="B-P3", interactive=True)
                            pp_blue_p4 = gr.Slider(0, 1, label="B-P4", interactive=True)
                            pp_blue_p5 = gr.Slider(0, 1, label="B-P5", interactive=True)
                            pp_blue_p6 = gr.Slider(0, 1, label="B-P6", interactive=True)
                            pp_blue_p7 = gr.Slider(0, 1, label="B-P7", interactive=True)
                            pp_blue_p8 = gr.Slider(0, 1, label="B-P8", interactive=True)
                            pp_blue_p9 = gr.Slider(0, 1, label="B-P9", interactive=True)
                            pp_blue_p10 = gr.Slider(0, 1, label="B-P10", interactive=True)

                with gr.Tab(label="Red", id=3) as red_curves_tab:
                    red_curves = gr.Image(label="Red Curves", type="pil")
                    with gr.Tabs() as channel_tabs:
                        with gr.Tab(label="R", id=0) as red_red_curves_tab:
                            red_red_p0 = gr.Slider(0, 1, label="R-P0", interactive=True)
                            red_red_p1 = gr.Slider(0, 1, label="R-P1", interactive=True)
                            red_red_p2 = gr.Slider(0, 1, label="R-P2", interactive=True)
                            red_red_p3 = gr.Slider(0, 1, label="R-P3", interactive=True)
                            red_red_p4 = gr.Slider(0, 1, label="R-P4", interactive=True)
                            red_red_p5 = gr.Slider(0, 1, label="R-P5", interactive=True)
                            red_red_p6 = gr.Slider(0, 1, label="R-P6", interactive=True)
                            red_red_p7 = gr.Slider(0, 1, label="R-P7", interactive=True)
                            red_red_p8 = gr.Slider(0, 1, label="R-P8", interactive=True)
                            red_red_p9 = gr.Slider(0, 1, label="R-P9", interactive=True)
                            red_red_p10 = gr.Slider(0, 1, label="R-P10", interactive=True)
                        with gr.Tab(label="G", id=1) as red_green_curves_tab:
                            red_green_p0 = gr.Slider(0, 1, label="G-P0", interactive=True)
                            red_green_p1 = gr.Slider(0, 1, label="G-P1", interactive=True)
                            red_green_p2 = gr.Slider(0, 1, label="G-P2", interactive=True)
                            red_green_p3 = gr.Slider(0, 1, label="G-P3", interactive=True)
                            red_green_p4 = gr.Slider(0, 1, label="G-P4", interactive=True)
                            red_green_p5 = gr.Slider(0, 1, label="G-P5", interactive=True)
                            red_green_p6 = gr.Slider(0, 1, label="G-P6", interactive=True)
                            red_green_p7 = gr.Slider(0, 1, label="G-P7", interactive=True)
                            red_green_p8 = gr.Slider(0, 1, label="G-P8", interactive=True)
                            red_green_p9 = gr.Slider(0, 1, label="G-P9", interactive=True)
                            red_green_p10 = gr.Slider(0, 1, label="G-P10", interactive=True)
                        with gr.Tab(label="B", id=2) as red_blue_curves_tab:
                            red_blue_p0 = gr.Slider(0, 1, label="B-P0", interactive=True)
                            red_blue_p1 = gr.Slider(0, 1, label="B-P1", interactive=True)
                            red_blue_p2 = gr.Slider(0, 1, label="B-P2", interactive=True)
                            red_blue_p3 = gr.Slider(0, 1, label="B-P3", interactive=True)
                            red_blue_p4 = gr.Slider(0, 1, label="B-P4", interactive=True)
                            red_blue_p5 = gr.Slider(0, 1, label="B-P5", interactive=True)
                            red_blue_p6 = gr.Slider(0, 1, label="B-P6", interactive=True)
                            red_blue_p7 = gr.Slider(0, 1, label="B-P7", interactive=True)
                            red_blue_p8 = gr.Slider(0, 1, label="B-P8", interactive=True)
                            red_blue_p9 = gr.Slider(0, 1, label="B-P9", interactive=True)
                            red_blue_p10 = gr.Slider(0, 1, label="B-P10", interactive=True)

                with gr.Tab(label="Green", id=4) as green_curves_tab:
                    green_curves = gr.Image(label="Green Curves", type="pil")
                    with gr.Tabs() as channel_tabs:
                        with gr.Tab(label="R", id=0) as green_red_curves_tab:
                            green_red_p0 = gr.Slider(0, 1, label="R-P0", interactive=True)
                            green_red_p1 = gr.Slider(0, 1, label="R-P1", interactive=True)
                            green_red_p2 = gr.Slider(0, 1, label="R-P2", interactive=True)
                            green_red_p3 = gr.Slider(0, 1, label="R-P3", interactive=True)
                            green_red_p4 = gr.Slider(0, 1, label="R-P4", interactive=True)
                            green_red_p5 = gr.Slider(0, 1, label="R-P5", interactive=True)
                            green_red_p6 = gr.Slider(0, 1, label="R-P6", interactive=True)
                            green_red_p7 = gr.Slider(0, 1, label="R-P7", interactive=True)
                            green_red_p8 = gr.Slider(0, 1, label="R-P8", interactive=True)
                            green_red_p9 = gr.Slider(0, 1, label="R-P9", interactive=True)
                            green_red_p10 = gr.Slider(0, 1, label="R-P10", interactive=True)
                        with gr.Tab(label="G", id=1) as green_green_curves_tab:
                            green_green_p0 = gr.Slider(0, 1, label="G-P0", interactive=True)
                            green_green_p1 = gr.Slider(0, 1, label="G-P1", interactive=True)
                            green_green_p2 = gr.Slider(0, 1, label="G-P2", interactive=True)
                            green_green_p3 = gr.Slider(0, 1, label="G-P3", interactive=True)
                            green_green_p4 = gr.Slider(0, 1, label="G-P4", interactive=True)
                            green_green_p5 = gr.Slider(0, 1, label="G-P5", interactive=True)
                            green_green_p6 = gr.Slider(0, 1, label="G-P6", interactive=True)
                            green_green_p7 = gr.Slider(0, 1, label="G-P7", interactive=True)
                            green_green_p8 = gr.Slider(0, 1, label="G-P8", interactive=True)
                            green_green_p9 = gr.Slider(0, 1, label="G-P9", interactive=True)
                            green_green_p10 = gr.Slider(0, 1, label="G-P10", interactive=True)
                        with gr.Tab(label="B", id=2) as green_blue_curves_tab:
                            green_blue_p0 = gr.Slider(0, 1, label="B-P0", interactive=True)
                            green_blue_p1 = gr.Slider(0, 1, label="B-P1", interactive=True)
                            green_blue_p2 = gr.Slider(0, 1, label="B-P2", interactive=True)
                            green_blue_p3 = gr.Slider(0, 1, label="B-P3", interactive=True)
                            green_blue_p4 = gr.Slider(0, 1, label="B-P4", interactive=True)
                            green_blue_p5 = gr.Slider(0, 1, label="B-P5", interactive=True)
                            green_blue_p6 = gr.Slider(0, 1, label="B-P6", interactive=True)
                            green_blue_p7 = gr.Slider(0, 1, label="B-P7", interactive=True)
                            green_blue_p8 = gr.Slider(0, 1, label="B-P8", interactive=True)
                            green_blue_p9 = gr.Slider(0, 1, label="B-P9", interactive=True)
                            green_blue_p10 = gr.Slider(0, 1, label="B-P10", interactive=True)

                with gr.Tab(label="Blue", id=5) as blue_curves_tab:
                    blue_curves = gr.Image(label="Blue Curves", type="pil")
                    with gr.Tabs() as channel_tabs:
                        with gr.Tab(label="R", id=0) as blue_red_curves_tab:
                            blue_red_p0 = gr.Slider(0, 1, label="R-P0", interactive=True)
                            blue_red_p1 = gr.Slider(0, 1, label="R-P1", interactive=True)
                            blue_red_p2 = gr.Slider(0, 1, label="R-P2", interactive=True)
                            blue_red_p3 = gr.Slider(0, 1, label="R-P3", interactive=True)
                            blue_red_p4 = gr.Slider(0, 1, label="R-P4", interactive=True)
                            blue_red_p5 = gr.Slider(0, 1, label="R-P5", interactive=True)
                            blue_red_p6 = gr.Slider(0, 1, label="R-P6", interactive=True)
                            blue_red_p7 = gr.Slider(0, 1, label="R-P7", interactive=True)
                            blue_red_p8 = gr.Slider(0, 1, label="R-P8", interactive=True)
                            blue_red_p9 = gr.Slider(0, 1, label="R-P9", interactive=True)
                            blue_red_p10 = gr.Slider(0, 1, label="R-P10", interactive=True)
                        with gr.Tab(label="G", id=1) as blue_green_curves_tab:
                            blue_green_p0 = gr.Slider(0, 1, label="G-P0", interactive=True)
                            blue_green_p1 = gr.Slider(0, 1, label="G-P1", interactive=True)
                            blue_green_p2 = gr.Slider(0, 1, label="G-P2", interactive=True)
                            blue_green_p3 = gr.Slider(0, 1, label="G-P3", interactive=True)
                            blue_green_p4 = gr.Slider(0, 1, label="G-P4", interactive=True)