Commit fc335fd · Parent: aa3efb4

app v1

- README.md +13 -8
- app.py +37 -0
- install-deps.sh +3 -0
- requirements.txt +18 -0
- result.png +0 -0
- run.sh +3 -0

README.md

CHANGED

|

@@ -1,13 +1,18 @@

|

|

| 1 |

---

|

| 2 |

-

title: Diego

|

| 3 |

-

emoji:

|

| 4 |

-

colorFrom: yellow

|

| 5 |

-

colorTo: indigo

|

| 6 |

sdk: gradio

|

| 7 |

-

sdk_version: 4.

|

|

|

|

|

|

|

|

|

|

|

|

|

| 8 |

app_file: app.py

|

| 9 |

-

pinned: false

|

| 10 |

-

license: unlicense

|

| 11 |

---

|

| 12 |

|

| 13 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

---

|

| 2 |

+

title: Diego's LLM Chat

|

| 3 |

+

emoji: 🤖

|

|

|

|

|

|

|

| 4 |

sdk: gradio

|

| 5 |

+

sdk_version: 4.24.0

|

| 6 |

+

license: cc-by-nc-sa-4.0

|

| 7 |

+

short_description: DialoGPT LLM model chat

|

| 8 |

+

colorFrom: red

|

| 9 |

+

colorTo: indigo

|

| 10 |

app_file: app.py

|

|

|

|

|

|

|

| 11 |

---

|

| 12 |

|

| 13 |

+

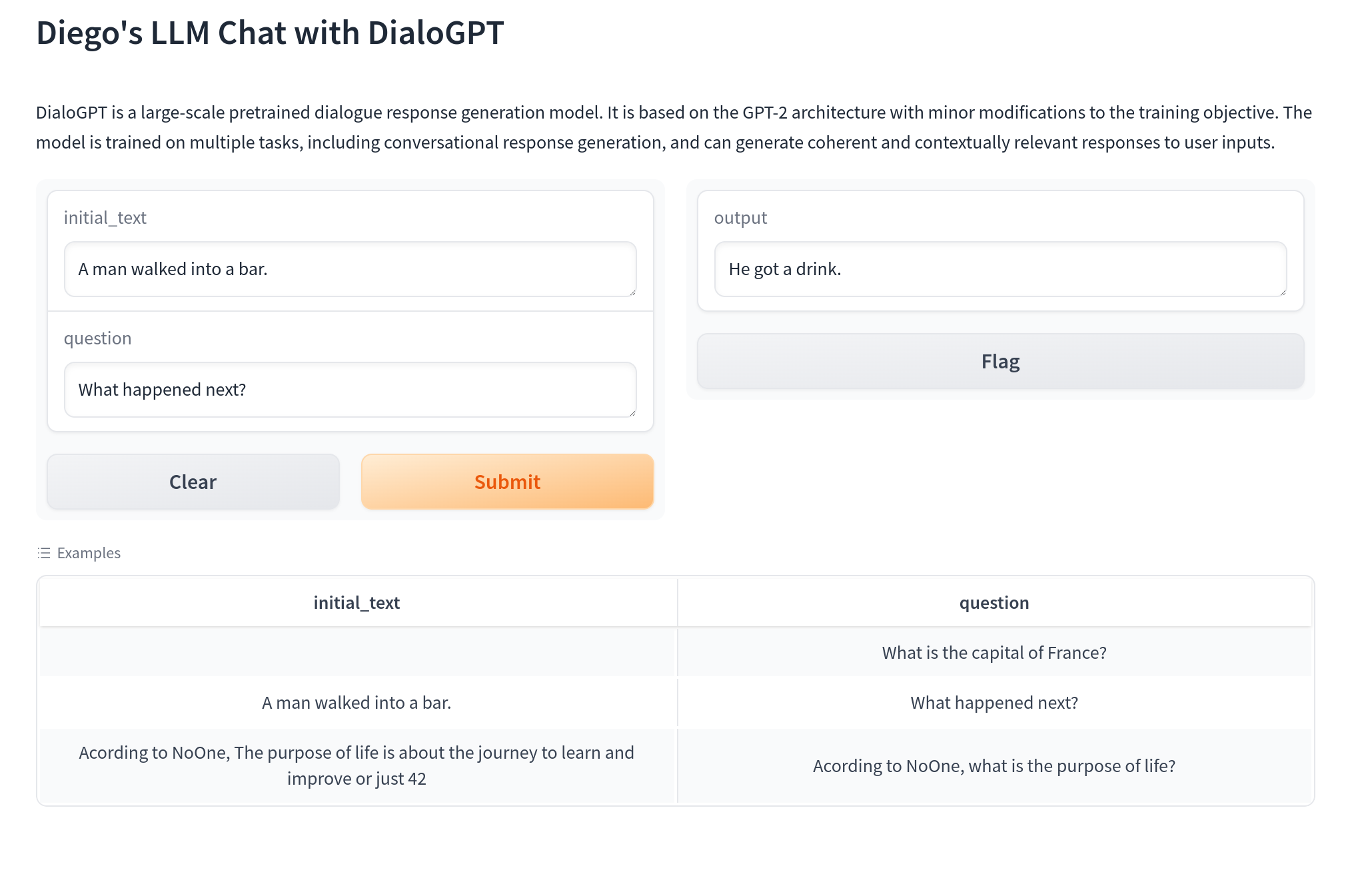

### Result

|

| 14 |

+

* LLM Chat

|

| 15 |

+

* Using LLM Model DialoGPT

|

| 16 |

+

|

| 17 |

+

<img src='result.png' />

|

| 18 |

+

|
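The Space settings live in the YAML front matter between the two `---` lines of README.md. As a rough sketch (assuming only flat `key: value` pairs, which holds for this README; it is not a general YAML parser, and `read_front_matter` is a hypothetical helper), the block can be read back and checked like this:

```python
def read_front_matter(text: str) -> dict[str, str]:
    """Return flat key/value pairs between the first two '---' lines.

    Simplified sketch: only handles `key: value` lines, not nested YAML.
    """
    lines = text.splitlines()
    if not lines or lines[0].strip() != "---":
        return {}  # no front matter block at the top of the file
    config: dict[str, str] = {}
    for line in lines[1:]:
        if line.strip() == "---":
            break  # closing delimiter of the front matter
        key, sep, value = line.partition(":")
        if sep:
            config[key.strip()] = value.strip()
    return config


# New README front matter from this commit.
readme = """---
title: Diego's LLM Chat
emoji: 🤖
sdk: gradio
sdk_version: 4.24.0
license: cc-by-nc-sa-4.0
short_description: DialoGPT LLM model chat
colorFrom: red
colorTo: indigo
app_file: app.py
---

### Result
"""

config = read_front_matter(readme)
print(config["sdk"], config["sdk_version"], config["app_file"])  # gradio 4.24.0 app.py
```

For anything beyond flat pairs (quoted values, lists, nesting), a real YAML parser such as PyYAML's `yaml.safe_load` is the safer choice.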

app.py  ADDED

@@ -0,0 +1,37 @@
+from transformers import AutoModelForCausalLM, AutoTokenizer
+import gradio as gr
+
+# Load DialoGPT model and tokenizer
+tokenizer = AutoTokenizer.from_pretrained("microsoft/DialoGPT-medium")
+model = AutoModelForCausalLM.from_pretrained("microsoft/DialoGPT-medium")
+
+def text_to_chat(initial_text, question):
+    # Join the optional context and the question; strip() avoids a leading space when the context is empty
+    text = f"{initial_text} {question}".strip()
+
+    # Encode the user input, append the EOS token and return a PyTorch tensor
+    new_user_input_ids = tokenizer.encode(text + tokenizer.eos_token, return_tensors='pt')
+
+    # Generate a response; max_new_tokens alone bounds the reply length
+    output_ids = model.generate(new_user_input_ids, max_new_tokens=500, pad_token_id=tokenizer.eos_token_id)
+
+    # Decode only the newly generated tokens (drop the echoed prompt) and return them
+    chat_output = tokenizer.decode(output_ids[:, new_user_input_ids.shape[-1]:][0], skip_special_tokens=True)
+
+    return chat_output
+
+def chat_with_bot(initial_text, question):
+    result = text_to_chat(initial_text, question)
+    return result
+
+ui = gr.Interface(fn=chat_with_bot,
+                  inputs=["text", "text"],
+                  outputs="text",
+                  title="Diego's LLM Chat with DialoGPT",
+                  examples=
+                  [["", "What is the capital of France?"],
+                   ["A man walked into a bar.", "What happened next?"],
+                   ["According to NoOne, the purpose of life is about the journey to learn and improve, or just 42", "According to NoOne, what is the purpose of life?"]],
+                  description="DialoGPT is a large-scale pretrained dialogue response generation model. It uses the GPT-2 architecture, was trained on multi-turn conversations extracted from Reddit comment threads, and can generate coherent, contextually relevant responses to user inputs.",
+)
+ui.launch()
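The core of `text_to_chat` is token bookkeeping: build one user turn that ends in the EOS token, bound how many tokens to generate, and keep only the tokens produced after the prompt. The sketch below isolates those steps in plain Python so it runs without downloading the model. The helper names are hypothetical, the token ids are made up, and the 1,024-token context is an assumption based on the GPT-2 medium architecture that DialoGPT-medium uses.

```python
EOS_TOKEN = "<|endoftext|>"  # end-of-text token of the GPT-2 tokenizer DialoGPT uses
CONTEXT_SIZE = 1024          # assumed position limit of DialoGPT-medium (GPT-2 medium)


def build_prompt(initial_text: str, question: str, eos: str = EOS_TOKEN) -> str:
    """Join optional context and the question into one user turn ending in EOS."""
    text = f"{initial_text} {question}".strip()  # no stray space when context is empty
    return text + eos


def new_token_budget(prompt_len: int, requested: int = 500,
                     context: int = CONTEXT_SIZE) -> int:
    """Cap the number of new tokens so prompt + reply fits in the context window."""
    return max(0, min(requested, context - prompt_len))


def strip_prompt(output_ids: list[int], prompt_len: int) -> list[int]:
    """Generation returns the prompt followed by new tokens; keep only the new ones."""
    return output_ids[prompt_len:]


prompt = build_prompt("", "What is the capital of France?")
print(prompt)  # What is the capital of France?<|endoftext|>

print(new_token_budget(prompt_len=700))  # 324

# Made-up ids standing in for real tokenizer/model output.
prompt_ids = [464, 3139, 50256]
output_ids = prompt_ids + [6342, 13, 50256]
print(strip_prompt(output_ids, len(prompt_ids)))  # [6342, 13, 50256]
```

The `[:, new_user_input_ids.shape[-1]:]` slice in app.py does the same as `strip_prompt`, just on a 2-D PyTorch tensor. Passing only `max_new_tokens` to `generate()` avoids giving it two length limits at once; recent transformers versions let `max_new_tokens` take precedence over `max_length` and emit a warning when both are set.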

install-deps.sh  ADDED

@@ -0,0 +1,3 @@
+#!/bin/bash
+
+pip install -r requirements.txt

requirements.txt  ADDED

@@ -0,0 +1,18 @@
+numpy
+transformers
+sentence-transformers
+seaborn
+torch
+torchvision
+matplotlib
+pandas
+scikit-learn
+nltk
+gensim
+tensorflow
+keras
+opencv-python
+fastapi
+uvicorn
+gradio
+flask
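Only a few of these packages are imported by app.py. A quick way to see which ones is to scan the app's import statements with Python's `ast` module. This is a sketch: `imported_modules` and `used_requirements` are hypothetical helpers, and the distribution-to-import-name mapping is a small hand-written assumption covering only the entries in this file whose names differ.

```python
import ast

# Import lines of app.py, copied from the diff above.
APP_SOURCE = """
from transformers import AutoModelForCausalLM, AutoTokenizer
import gradio as gr
"""

requirements = [
    "numpy", "transformers", "sentence-transformers", "seaborn", "torch",
    "torchvision", "matplotlib", "pandas", "scikit-learn", "nltk", "gensim",
    "tensorflow", "keras", "opencv-python", "fastapi", "uvicorn", "gradio", "flask",
]

# Distribution name -> top-level import name where they differ (partial, illustrative).
IMPORT_NAMES = {
    "scikit-learn": "sklearn",
    "opencv-python": "cv2",
    "sentence-transformers": "sentence_transformers",
}


def imported_modules(source: str) -> set[str]:
    """Top-level module names imported by absolute imports in the source."""
    modules: set[str] = set()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            modules.update(alias.name.split(".")[0] for alias in node.names)
        elif isinstance(node, ast.ImportFrom) and node.module and node.level == 0:
            modules.add(node.module.split(".")[0])
    return modules


def used_requirements(reqs: list[str], source: str) -> list[str]:
    """Requirements whose import name appears among the source's imports."""
    used = imported_modules(source)
    return [req for req in reqs if IMPORT_NAMES.get(req, req) in used]


print(used_requirements(requirements, APP_SOURCE))  # ['transformers', 'gradio']
```

The scan only sees direct imports. `torch` is still needed at runtime because `tokenizer.encode(..., return_tensors='pt')` and the DialoGPT model run on PyTorch, even though app.py never imports it.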

result.png  ADDED

run.sh  ADDED

@@ -0,0 +1,3 @@
+#!/bin/bash
+
+python app.py