Runtime error

Commit 940ed19 · Parent(s): 3d195be

Added app files

Browse files- app.py +54 -0

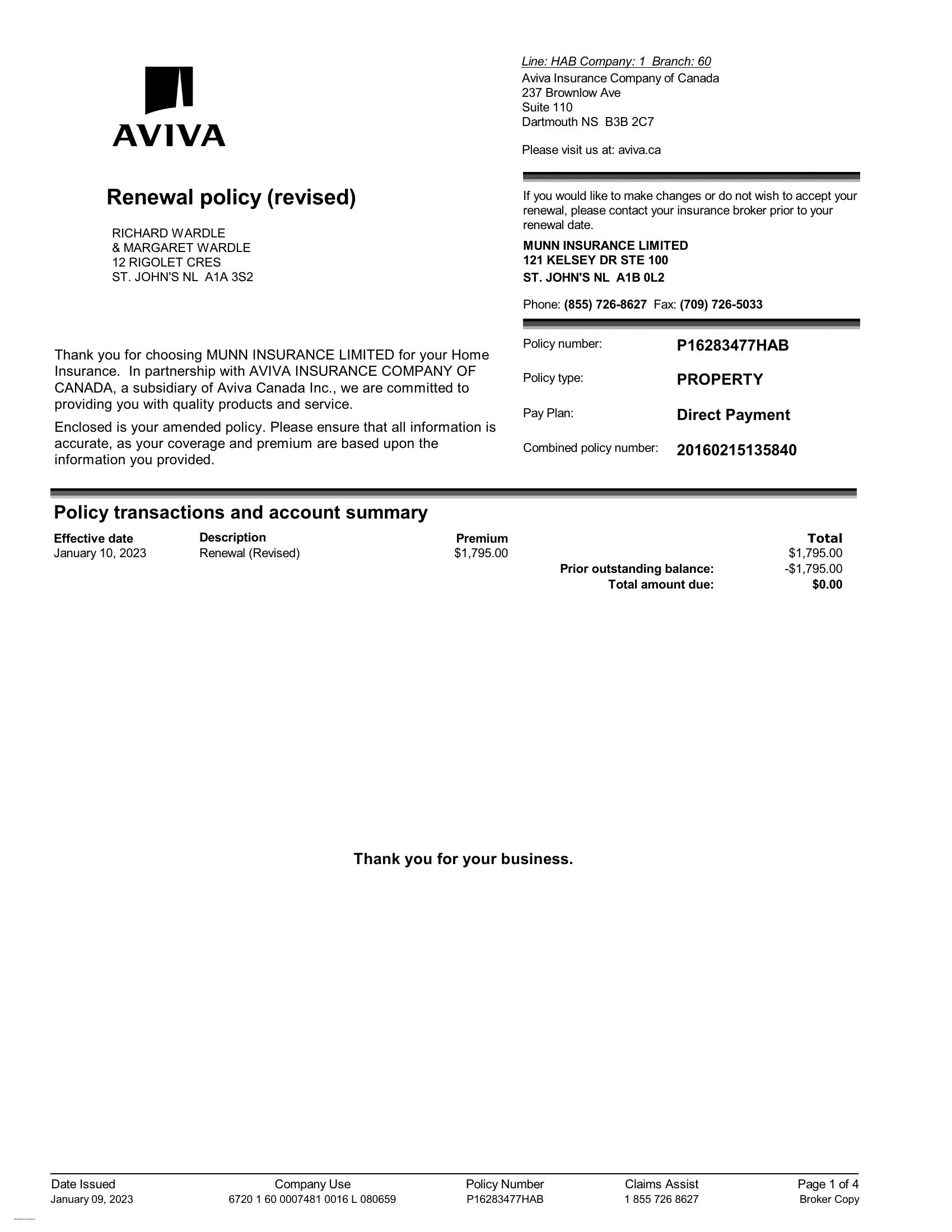

- aviva.jpeg +0 -0

- requirements.txt +3 -0

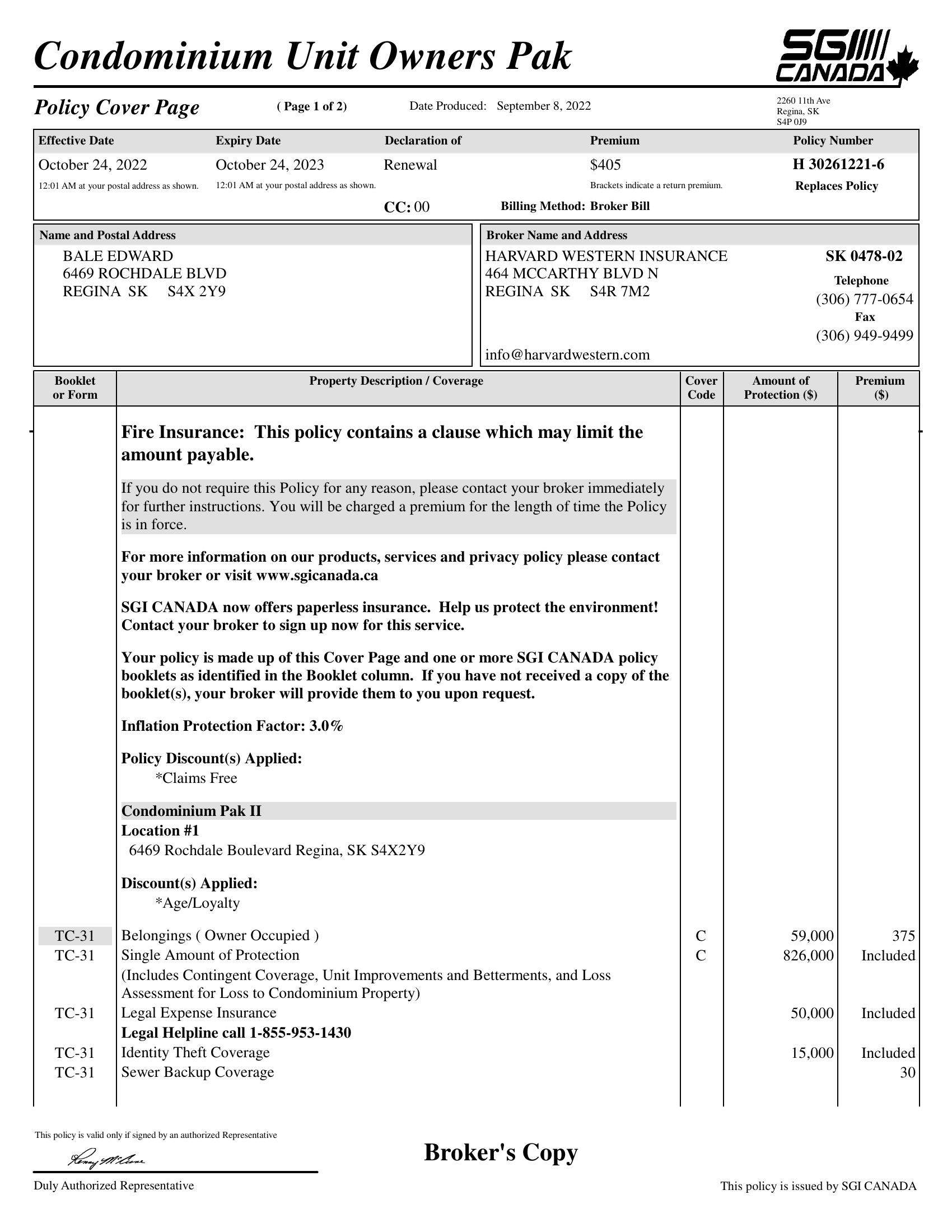

- sgi.jpeg +0 -0

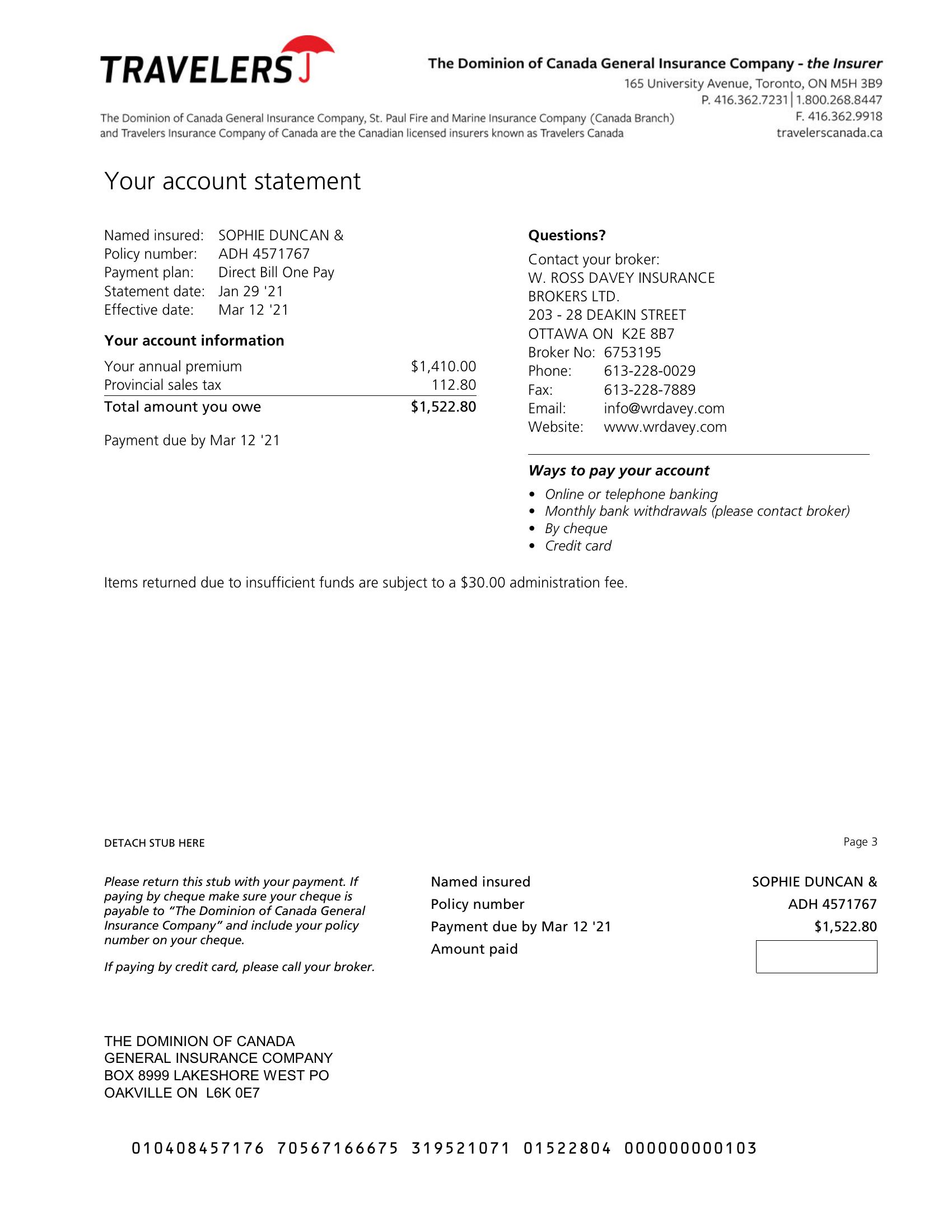

- travelers.jpeg +0 -0

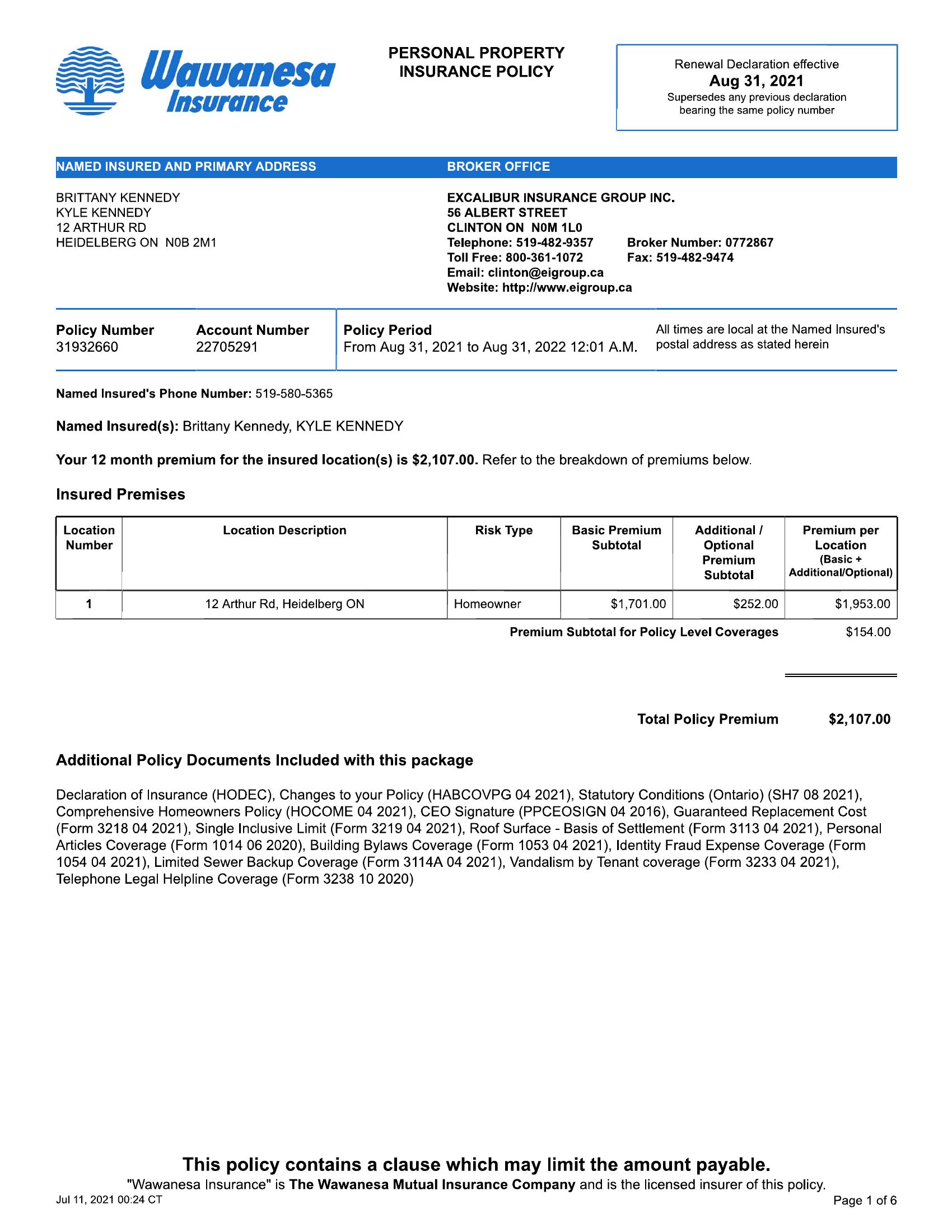

- wawanesa.jpeg +0 -0

app.py

ADDED

```python
import re

import gradio as gr
import torch
from transformers import DonutProcessor, VisionEncoderDecoderModel

# Note: this loads the public CORD-v2 (receipt parsing) checkpoint, not a model
# fine-tuned on the Quandri dataset named in the title and description below.
processor = DonutProcessor.from_pretrained("naver-clova-ix/donut-base-finetuned-cord-v2")
model = VisionEncoderDecoderModel.from_pretrained("naver-clova-ix/donut-base-finetuned-cord-v2")

device = "cuda" if torch.cuda.is_available() else "cpu"
model.to(device)


def process_document(image):
    # prepare encoder inputs: resize and normalize the page image into pixel values
    pixel_values = processor(image, return_tensors="pt").pixel_values

    # prepare decoder inputs: the task start token selects the CORD-v2 output schema
    task_prompt = "<s_cord-v2>"
    decoder_input_ids = processor.tokenizer(task_prompt, add_special_tokens=False, return_tensors="pt").input_ids

    # generate answer (greedy decoding; the unknown token is never emitted)
    outputs = model.generate(
        pixel_values.to(device),
        decoder_input_ids=decoder_input_ids.to(device),
        max_length=model.decoder.config.max_position_embeddings,
        early_stopping=True,
        pad_token_id=processor.tokenizer.pad_token_id,
        eos_token_id=processor.tokenizer.eos_token_id,
        use_cache=True,
        num_beams=1,
        bad_words_ids=[[processor.tokenizer.unk_token_id]],
        return_dict_in_generate=True,
    )

    # postprocess: strip EOS/padding, drop the task start token, convert tags to JSON
    sequence = processor.batch_decode(outputs.sequences)[0]
    sequence = sequence.replace(processor.tokenizer.eos_token, "").replace(processor.tokenizer.pad_token, "")
    sequence = re.sub(r"<.*?>", "", sequence, count=1).strip()  # remove first task start token

    return processor.token2json(sequence)


description = "Gradio Demo for Donut trained on Quandri internal dataset."

demo = gr.Interface(
    fn=process_document,
    inputs="image",
    outputs="json",
    title="Demo: Donut 🍩 for Document Parsing on Quandri Dataset",
    description=description,
    examples=[["aviva.jpeg"], ["wawanesa.jpeg"], ["sgi.jpeg"], ["travelers.jpeg"]],
    cache_examples=False,
)

# Queueing is requested via demo.queue() instead of the enable_queue argument,
# which newer Gradio releases no longer support on gr.Interface.
demo.queue()
demo.launch()
```
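To see what the postprocessing in `process_document` does without downloading the model, here is a minimal standalone sketch. It assumes the tokenizer's EOS and pad tokens are the literal strings `</s>` and `<pad>` (the app reads them from `processor.tokenizer` instead), and it runs on a made-up decoded sequence rather than real model output. `tags_to_dict` is a hypothetical, simplified stand-in for `processor.token2json`: it only handles nested `<s_key>...</s_key>` pairs and does not attempt the list handling or other edge cases the real method covers.

```python
import re

# Assumed literal special tokens; app.py reads these from processor.tokenizer.
EOS_TOKEN = "</s>"
PAD_TOKEN = "<pad>"


def clean_sequence(sequence: str) -> str:
    """Mirror the string cleanup done in process_document."""
    sequence = sequence.replace(EOS_TOKEN, "").replace(PAD_TOKEN, "")
    # Drop only the first tag, i.e. the task start token such as <s_cord-v2>.
    return re.sub(r"<.*?>", "", sequence, count=1).strip()


def tags_to_dict(sequence: str) -> dict:
    """Hypothetical, simplified stand-in for processor.token2json."""
    result = {}
    while True:
        start = re.search(r"<s_(.*?)>", sequence)
        if start is None:
            return result
        key = start.group(1)
        end_tag = f"</s_{key}>"
        end = sequence.find(end_tag, start.end())
        if end == -1:
            # Unmatched start tag: skip past it and keep scanning.
            sequence = sequence[start.end():]
            continue
        content = sequence[start.end():end]
        # Recurse when the field contains nested tags; otherwise keep the text.
        result[key] = tags_to_dict(content) if "<s_" in content else content.strip()
        sequence = sequence[end + len(end_tag):]


# Made-up decoded output, for illustration only.
raw = "<s_cord-v2><s_menu><s_nm>Latte</s_nm><s_price>4.50</s_price></s_menu></s><pad><pad>"
cleaned = clean_sequence(raw)
print(cleaned)                # <s_menu><s_nm>Latte</s_nm><s_price>4.50</s_price></s_menu>
print(tags_to_dict(cleaned))  # {'menu': {'nm': 'Latte', 'price': '4.50'}}
```

The `count=1` in `re.sub` matters: without it, every field tag would be stripped and the structure needed for JSON conversion would be lost.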

aviva.jpeg

ADDED


requirements.txt

ADDED

```text
torch
git+https://github.com/huggingface/transformers.git
sentencepiece
```

sgi.jpeg

ADDED


travelers.jpeg

ADDED


wawanesa.jpeg

ADDED
