Spaces:

Runtime error

Runtime error

Upload folder using huggingface_hub

Browse files- .dockerignore +2 -0

- .zen/config.yaml +2 -0

- Dockerfile +26 -0

- README.md +53 -12

- _assets/airflow_stack.png +0 -0

- _assets/default_stack.png +0 -0

- _assets/local_sagmaker_so_stack.png +0 -0

- _assets/sagemaker_stack.png +0 -0

- app.py +48 -0

- configs/deployment.yaml +13 -0

- configs/feature_engineering.yaml +12 -0

- configs/inference.yaml +13 -0

- configs/training.yaml +12 -0

- flagged/log.csv +2 -0

- flagged/output/tmpjy2eamkw.json +1 -0

- pipelines/__init__.py +6 -0

- pipelines/__pycache__/__init__.cpython-38.pyc +0 -0

- pipelines/__pycache__/deployment.cpython-38.pyc +0 -0

- pipelines/__pycache__/feature_engineering.cpython-38.pyc +0 -0

- pipelines/__pycache__/inference.cpython-38.pyc +0 -0

- pipelines/__pycache__/training.cpython-38.pyc +0 -0

- pipelines/deployment.py +38 -0

- pipelines/feature_engineering.py +54 -0

- pipelines/inference.py +50 -0

- pipelines/training.py +61 -0

- requirements.txt +3 -0

- run.ipynb +981 -0

- run.py +173 -0

- run_stack_showcase.ipynb +347 -0

- steps/__init__.py +29 -0

- steps/__pycache__/__init__.cpython-38.pyc +0 -0

- steps/__pycache__/data_loader.cpython-38.pyc +0 -0

- steps/__pycache__/data_preprocessor.cpython-38.pyc +0 -0

- steps/__pycache__/data_splitter.cpython-38.pyc +0 -0

- steps/__pycache__/deploy_to_huggingface.cpython-38.pyc +0 -0

- steps/__pycache__/inference_predict.cpython-38.pyc +0 -0

- steps/__pycache__/inference_preprocessor.cpython-38.pyc +0 -0

- steps/__pycache__/model_evaluator.cpython-38.pyc +0 -0

- steps/__pycache__/model_promoter.cpython-38.pyc +0 -0

- steps/__pycache__/model_trainer.cpython-38.pyc +0 -0

- steps/data_loader.py +53 -0

- steps/data_preprocessor.py +115 -0

- steps/data_splitter.py +47 -0

- steps/deploy_to_huggingface.py +58 -0

- steps/inference_predict.py +59 -0

- steps/inference_preprocessor.py +52 -0

- steps/model_evaluator.py +102 -0

- steps/model_promoter.py +42 -0

- steps/model_trainer.py +52 -0

.dockerignore

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

.venv*

|

| 2 |

+

.requirements*

|

.zen/config.yaml

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

active_stack_id: c2be0c2a-7cf0-44e7-8ee3-71400a579a27

|

| 2 |

+

active_workspace_id: f3a544f2-afb5-4672-934a-7a465c66201c

|

Dockerfile

ADDED

|

@@ -0,0 +1,26 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# read the doc: https://huggingface.co/docs/hub/spaces-sdks-docker

|

| 2 |

+

# you will also find guides on how best to write your Dockerfile

|

| 3 |

+

|

| 4 |

+

FROM python:3.9

|

| 5 |

+

|

| 6 |

+

WORKDIR /code

|

| 7 |

+

|

| 8 |

+

COPY ./requirements.txt /code/requirements.txt

|

| 9 |

+

|

| 10 |

+

RUN pip install --no-cache-dir --upgrade -r /code/requirements.txt

|

| 11 |

+

|

| 12 |

+

# Set up a new user named "user" with user ID 1000

|

| 13 |

+

RUN useradd -m -u 1000 user

|

| 14 |

+

# Switch to the "user" user

|

| 15 |

+

USER user

|

| 16 |

+

# Set home to the user's home directory

|

| 17 |

+

ENV HOME=/home/user \

|

| 18 |

+

PATH=/home/user/.local/bin:$PATH

|

| 19 |

+

|

| 20 |

+

# Set the working directory to the user's home directory

|

| 21 |

+

WORKDIR $HOME/app

|

| 22 |

+

|

| 23 |

+

# Copy the current directory contents into the container at $HOME/app setting the owner to the user

|

| 24 |

+

COPY --chown=user . $HOME/app

|

| 25 |

+

|

| 26 |

+

CMD ["python", "app.py", "--server.port=7860", "--server.address=0.0.0.0"]

|

README.md

CHANGED

|

@@ -1,12 +1,53 @@

|

|

| 1 |

-

|

| 2 |

-

|

| 3 |

-

|

| 4 |

-

|

| 5 |

-

|

| 6 |

-

|

| 7 |

-

|

| 8 |

-

|

| 9 |

-

|

| 10 |

-

|

| 11 |

-

|

| 12 |

-

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# 📜 ZenML Stack Show Case

|

| 2 |

+

|

| 3 |

+

This project aims to demonstrate the power of stacks. The code in this

|

| 4 |

+

project assumes that you have quite a few stacks registered already.

|

| 5 |

+

|

| 6 |

+

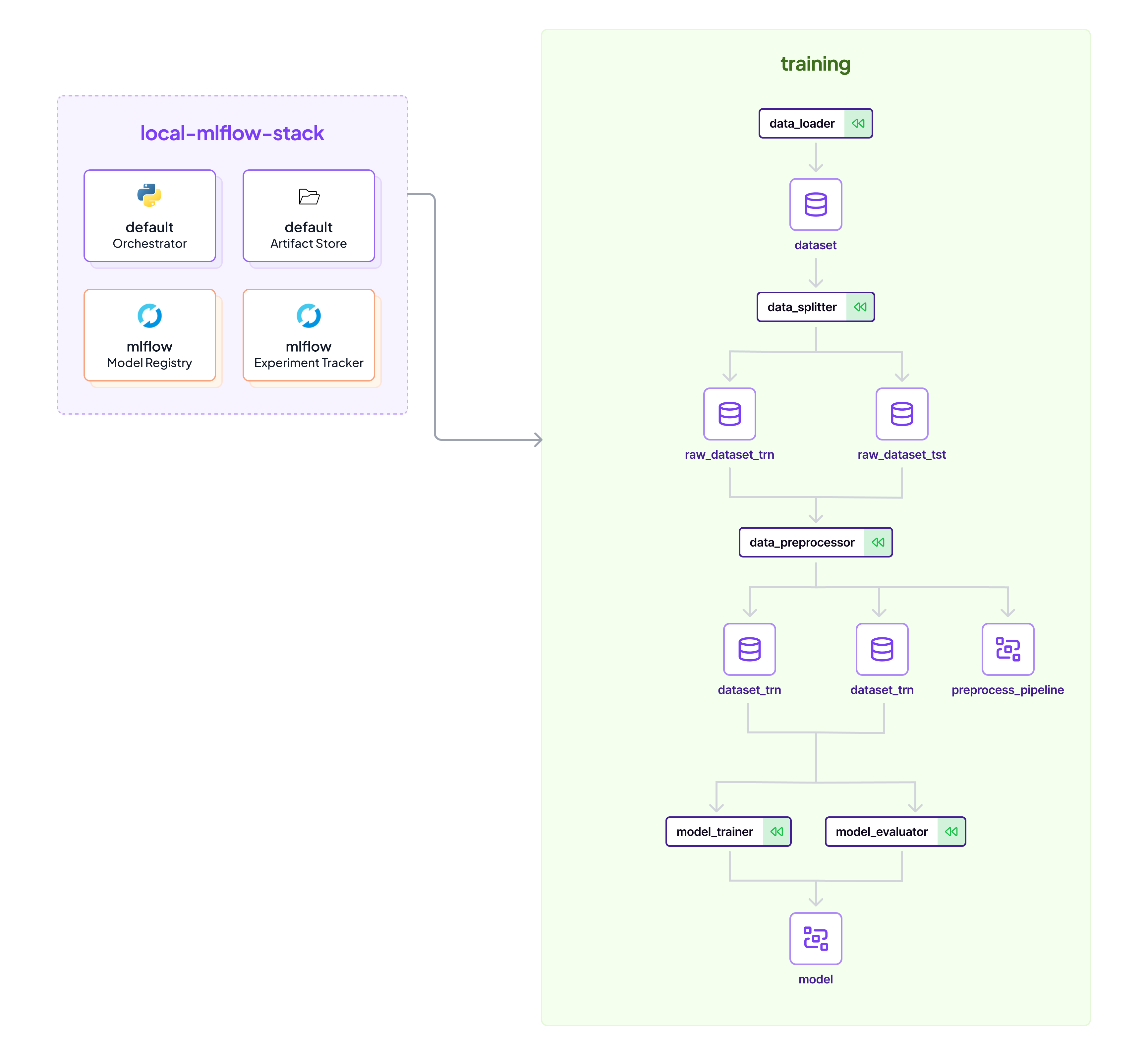

## default

|

| 7 |

+

* `default` Orchestrator

|

| 8 |

+

* `default` Artifact Store

|

| 9 |

+

|

| 10 |

+

```commandline

|

| 11 |

+

zenml stack set default

|

| 12 |

+

python run.py --training-pipeline

|

| 13 |

+

```

|

| 14 |

+

|

| 15 |

+

## local-sagemaker-step-operator-stack

|

| 16 |

+

* `default` Orchestrator

|

| 17 |

+

* `s3` Artifact Store

|

| 18 |

+

* `local` Image Builder

|

| 19 |

+

* `aws` Container Registry

|

| 20 |

+

* `Sagemaker` Step Operator

|

| 21 |

+

|

| 22 |

+

```commandline

|

| 23 |

+

zenml stack set local-sagemaker-step-operator-stack

|

| 24 |

+

zenml integration install aws -y

|

| 25 |

+

python run.py --training-pipeline

|

| 26 |

+

```

|

| 27 |

+

|

| 28 |

+

## sagemaker-airflow-stack

|

| 29 |

+

* `Airflow` Orchestrator

|

| 30 |

+

* `s3` Artifact Store

|

| 31 |

+

* `local` Image Builder

|

| 32 |

+

* `aws` Container Registry

|

| 33 |

+

* `Sagemaker` Step Operator

|

| 34 |

+

|

| 35 |

+

```commandline

|

| 36 |

+

zenml stack set sagemaker-airflow-stack

|

| 37 |

+

zenml integration install airflow -y

|

| 38 |

+

pip install apache-airflow-providers-docker apache-airflow~=2.5.0

|

| 39 |

+

zenml stack up

|

| 40 |

+

python run.py --training-pipeline

|

| 41 |

+

```

|

| 42 |

+

|

| 43 |

+

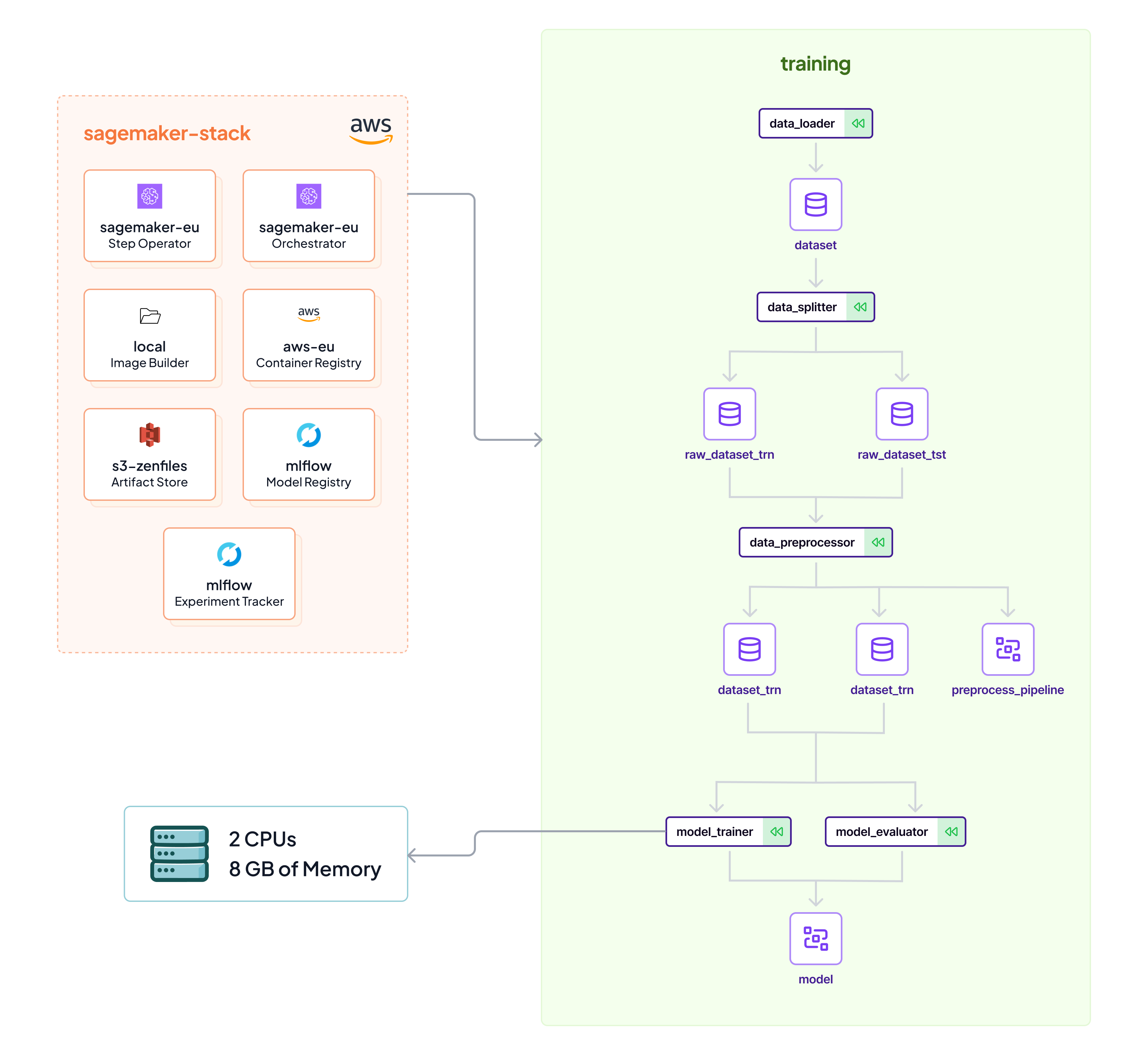

## sagemaker-stack

|

| 44 |

+

* `Sagemaker` Orchestrator

|

| 45 |

+

* `s3` Artifact Store

|

| 46 |

+

* `local` Image Builder

|

| 47 |

+

* `aws` Container Registry

|

| 48 |

+

* `Sagemaker` Step Operator

|

| 49 |

+

|

| 50 |

+

```commandline

|

| 51 |

+

zenml stack set sagemaker-stack

|

| 52 |

+

python run.py --training-pipeline

|

| 53 |

+

```

|

_assets/airflow_stack.png

ADDED

|

_assets/default_stack.png

ADDED

|

_assets/local_sagmaker_so_stack.png

ADDED

|

_assets/sagemaker_stack.png

ADDED

|

app.py

ADDED

|

@@ -0,0 +1,48 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

import gradio as gr

|

| 2 |

+

import numpy as np

|

| 3 |

+

import pandas as pd

|

| 4 |

+

from sklearn.datasets import load_breast_cancer

|

| 5 |

+

from zenml.client import Client

|

| 6 |

+

|

| 7 |

+

client = Client()

|

| 8 |

+

zenml_model_version = client.get_model_version("breast_cancer_classifier", "production")

|

| 9 |

+

preprocess_pipeline = zenml_model_version.get_artifact("preprocess_pipeline").load()

|

| 10 |

+

|

| 11 |

+

# Load the model

|

| 12 |

+

clf = zenml_model_version.get_artifact("model").load()

|

| 13 |

+

|

| 14 |

+

# Load dataset to get feature names

|

| 15 |

+

data = load_breast_cancer()

|

| 16 |

+

feature_names = data.feature_names

|

| 17 |

+

|

| 18 |

+

def classify(*input_features):

|

| 19 |

+

# Convert the input features to pandas DataFrame

|

| 20 |

+

input_features = np.array(input_features).reshape(1, -1)

|

| 21 |

+

input_df = pd.DataFrame(input_features, columns=feature_names)

|

| 22 |

+

|

| 23 |

+

# Pre-process the DataFrame

|

| 24 |

+

input_df["target"] = pd.Series([1] * input_df.shape[0])

|

| 25 |

+

input_df = preprocess_pipeline.transform(input_df)

|

| 26 |

+

input_df.drop(columns=["target"], inplace=True)

|

| 27 |

+

|

| 28 |

+

# Make a prediction

|

| 29 |

+

prediction_proba = clf.predict_proba(input_df)[0]

|

| 30 |

+

|

| 31 |

+

# Map predicted class probabilities

|

| 32 |

+

classes = data.target_names

|

| 33 |

+

return {classes[idx]: prob for idx, prob in enumerate(prediction_proba)}

|

| 34 |

+

|

| 35 |

+

# Define a list of Number inputs for each feature

|

| 36 |

+

input_components = [gr.Number(label=feature_name, default=0) for feature_name in feature_names]

|

| 37 |

+

|

| 38 |

+

# Define the Gradio interface

|

| 39 |

+

iface = gr.Interface(

|

| 40 |

+

fn=classify,

|

| 41 |

+

inputs=input_components,

|

| 42 |

+

outputs=gr.Label(num_top_classes=2),

|

| 43 |

+

title="Breast Cancer Classifier",

|

| 44 |

+

description="Enter the required measurements to predict the classification for breast cancer."

|

| 45 |

+

)

|

| 46 |

+

|

| 47 |

+

# Launch the Gradio app

|

| 48 |

+

iface.launch()

|

configs/deployment.yaml

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# environment configuration

|

| 2 |

+

settings:

|

| 3 |

+

docker:

|

| 4 |

+

required_integrations:

|

| 5 |

+

- sklearn

|

| 6 |

+

|

| 7 |

+

# configuration of the Model Control Plane

|

| 8 |

+

model_version:

|

| 9 |

+

name: breast_cancer_classifier

|

| 10 |

+

version: production

|

| 11 |

+

license: Apache 2.0

|

| 12 |

+

description: Classification of Breast Cancer Dataset.

|

| 13 |

+

tags: ["classification", "sklearn"]

|

configs/feature_engineering.yaml

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# environment configuration

|

| 2 |

+

settings:

|

| 3 |

+

docker:

|

| 4 |

+

required_integrations:

|

| 5 |

+

- sklearn

|

| 6 |

+

|

| 7 |

+

# configuration of the Model Control Plane

|

| 8 |

+

model_version:

|

| 9 |

+

name: breast_cancer_classifier

|

| 10 |

+

license: Apache 2.0

|

| 11 |

+

description: Classification of Breast Cancer Dataset.

|

| 12 |

+

tags: ["classification", "sklearn"]

|

configs/inference.yaml

ADDED

|

@@ -0,0 +1,13 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# environment configuration

|

| 2 |

+

settings:

|

| 3 |

+

docker:

|

| 4 |

+

required_integrations:

|

| 5 |

+

- sklearn

|

| 6 |

+

|

| 7 |

+

# configuration of the Model Control Plane

|

| 8 |

+

model_version:

|

| 9 |

+

name: breast_cancer_classifier

|

| 10 |

+

version: production

|

| 11 |

+

license: Apache 2.0

|

| 12 |

+

description: Classification of Breast Cancer Dataset.

|

| 13 |

+

tags: ["classification", "sklearn"]

|

configs/training.yaml

ADDED

|

@@ -0,0 +1,12 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# environment configuration

|

| 2 |

+

settings:

|

| 3 |

+

docker:

|

| 4 |

+

required_integrations:

|

| 5 |

+

- sklearn

|

| 6 |

+

|

| 7 |

+

# configuration of the Model Control Plane

|

| 8 |

+

model_version:

|

| 9 |

+

name: breast_cancer_classifier

|

| 10 |

+

license: Apache 2.0

|

| 11 |

+

description: Classification of Breast Cancer Dataset.

|

| 12 |

+

tags: ["classification", "sklearn"]

|

flagged/log.csv

ADDED

|

@@ -0,0 +1,2 @@

|

|

|

|

|

|

|

|

|

|

| 1 |

+

mean radius,mean texture,mean perimeter,mean area,mean smoothness,mean compactness,mean concavity,mean concave points,mean symmetry,mean fractal dimension,radius error,texture error,perimeter error,area error,smoothness error,compactness error,concavity error,concave points error,symmetry error,fractal dimension error,worst radius,worst texture,worst perimeter,worst area,worst smoothness,worst compactness,worst concavity,worst concave points,worst symmetry,worst fractal dimension,output,flag,username,timestamp

|

| 2 |

+

2,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,0,/home/htahir1/workspace/zenml_io/zenml-projects/stack-showcase/flagged/output/tmpjy2eamkw.json,,,2024-01-04 14:08:33.097778

|

flagged/output/tmpjy2eamkw.json

ADDED

|

@@ -0,0 +1 @@

|

|

|

|

|

|

|

| 1 |

+

{}

|

pipelines/__init__.py

ADDED

|

@@ -0,0 +1,6 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# {% include 'template/license_header' %}

|

| 2 |

+

|

| 3 |

+

from .feature_engineering import feature_engineering

|

| 4 |

+

from .inference import inference

|

| 5 |

+

from .training import breast_cancer_training

|

| 6 |

+

from .deployment import breast_cancer_deployment_pipeline

|

pipelines/__pycache__/__init__.cpython-38.pyc

ADDED

|

Binary file (372 Bytes). View file

|

|

|

pipelines/__pycache__/deployment.cpython-38.pyc

ADDED

|

Binary file (1.3 kB). View file

|

|

|

pipelines/__pycache__/feature_engineering.cpython-38.pyc

ADDED

|

Binary file (1.47 kB). View file

|

|

|

pipelines/__pycache__/inference.cpython-38.pyc

ADDED

|

Binary file (1.43 kB). View file

|

|

|

pipelines/__pycache__/training.cpython-38.pyc

ADDED

|

Binary file (1.55 kB). View file

|

|

|

pipelines/deployment.py

ADDED

|

@@ -0,0 +1,38 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# {% include 'template/license_header' %}

|

| 2 |

+

|

| 3 |

+

from typing import Optional, List

|

| 4 |

+

|

| 5 |

+

from steps import (

|

| 6 |

+

deploy_to_huggingface,

|

| 7 |

+

)

|

| 8 |

+

from zenml import get_pipeline_context, pipeline

|

| 9 |

+

from zenml.logger import get_logger

|

| 10 |

+

from zenml.client import Client

|

| 11 |

+

|

| 12 |

+

logger = get_logger(__name__)

|

| 13 |

+

|

| 14 |

+

|

| 15 |

+

@pipeline

|

| 16 |

+

def breast_cancer_deployment_pipeline(

|

| 17 |

+

repo_name: Optional[str] = "zenml_breast_cancer_classifier",

|

| 18 |

+

):

|

| 19 |

+

"""

|

| 20 |

+

Model deployment pipeline.

|

| 21 |

+

|

| 22 |

+

This pipelines deploys latest model on mlflow registry that matches

|

| 23 |

+

the given stage, to one of the supported deployment targets.

|

| 24 |

+

|

| 25 |

+

Args:

|

| 26 |

+

labels: List of labels for the model.

|

| 27 |

+

title: Title for the model.

|

| 28 |

+

description: Description for the model.

|

| 29 |

+

model_name_or_path: Name or path of the model.

|

| 30 |

+

tokenizer_name_or_path: Name or path of the tokenizer.

|

| 31 |

+

interpretation: Interpretation for the model.

|

| 32 |

+

example: Example for the model.

|

| 33 |

+

repo_name: Name of the repository to deploy to HuggingFace Hub.

|

| 34 |

+

"""

|

| 35 |

+

########## Deploy to HuggingFace ##########

|

| 36 |

+

deploy_to_huggingface(

|

| 37 |

+

repo_name=repo_name,

|

| 38 |

+

)

|

pipelines/feature_engineering.py

ADDED

|

@@ -0,0 +1,54 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# {% include 'template/license_header' %}

|

| 2 |

+

|

| 3 |

+

import random

|

| 4 |

+

from typing import List, Optional

|

| 5 |

+

|

| 6 |

+

from steps import (

|

| 7 |

+

data_loader,

|

| 8 |

+

data_preprocessor,

|

| 9 |

+

data_splitter,

|

| 10 |

+

)

|

| 11 |

+

from zenml import pipeline

|

| 12 |

+

from zenml.logger import get_logger

|

| 13 |

+

|

| 14 |

+

logger = get_logger(__name__)

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

@pipeline

|

| 18 |

+

def feature_engineering(

|

| 19 |

+

test_size: float = 0.2,

|

| 20 |

+

drop_na: Optional[bool] = None,

|

| 21 |

+

normalize: Optional[bool] = None,

|

| 22 |

+

drop_columns: Optional[List[str]] = None,

|

| 23 |

+

target: Optional[str] = "target",

|

| 24 |

+

):

|

| 25 |

+

"""

|

| 26 |

+

Feature engineering pipeline.

|

| 27 |

+

|

| 28 |

+

This is a pipeline that loads the data, processes it and splits

|

| 29 |

+

it into train and test sets.

|

| 30 |

+

|

| 31 |

+

Args:

|

| 32 |

+

test_size: Size of holdout set for training 0.0..1.0

|

| 33 |

+

drop_na: If `True` NA values will be removed from dataset

|

| 34 |

+

normalize: If `True` dataset will be normalized with MinMaxScaler

|

| 35 |

+

drop_columns: List of columns to drop from dataset

|

| 36 |

+

target: Name of target column in dataset

|

| 37 |

+

"""

|

| 38 |

+

### ADD YOUR OWN CODE HERE - THIS IS JUST AN EXAMPLE ###

|

| 39 |

+

# Link all the steps together by calling them and passing the output

|

| 40 |

+

# of one step as the input of the next step.

|

| 41 |

+

raw_data = data_loader(random_state=random.randint(0, 100), target=target)

|

| 42 |

+

dataset_trn, dataset_tst = data_splitter(

|

| 43 |

+

dataset=raw_data,

|

| 44 |

+

test_size=test_size,

|

| 45 |

+

)

|

| 46 |

+

dataset_trn, dataset_tst, _ = data_preprocessor(

|

| 47 |

+

dataset_trn=dataset_trn,

|

| 48 |

+

dataset_tst=dataset_tst,

|

| 49 |

+

drop_na=drop_na,

|

| 50 |

+

normalize=normalize,

|

| 51 |

+

drop_columns=drop_columns,

|

| 52 |

+

target=target,

|

| 53 |

+

)

|

| 54 |

+

return dataset_trn, dataset_tst

|

pipelines/inference.py

ADDED

|

@@ -0,0 +1,50 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# {% include 'template/license_header' %}

|

| 2 |

+

|

| 3 |

+

from typing import List, Optional

|

| 4 |

+

|

| 5 |

+

from steps import (

|

| 6 |

+

data_loader,

|

| 7 |

+

inference_preprocessor,

|

| 8 |

+

inference_predict,

|

| 9 |

+

)

|

| 10 |

+

from zenml import pipeline, ExternalArtifact

|

| 11 |

+

from zenml.logger import get_logger

|

| 12 |

+

|

| 13 |

+

logger = get_logger(__name__)

|

| 14 |

+

|

| 15 |

+

|

| 16 |

+

@pipeline

|

| 17 |

+

def inference(

|

| 18 |

+

test_size: float = 0.2,

|

| 19 |

+

drop_na: Optional[bool] = None,

|

| 20 |

+

normalize: Optional[bool] = None,

|

| 21 |

+

drop_columns: Optional[List[str]] = None,

|

| 22 |

+

):

|

| 23 |

+

"""

|

| 24 |

+

Model training pipeline.

|

| 25 |

+

|

| 26 |

+

This is a pipeline that loads the data, processes it and splits

|

| 27 |

+

it into train and test sets, then search for best hyperparameters,

|

| 28 |

+

trains and evaluates a model.

|

| 29 |

+

|

| 30 |

+

Args:

|

| 31 |

+

test_size: Size of holdout set for training 0.0..1.0

|

| 32 |

+

drop_na: If `True` NA values will be removed from dataset

|

| 33 |

+

normalize: If `True` dataset will be normalized with MinMaxScaler

|

| 34 |

+

drop_columns: List of columns to drop from dataset

|

| 35 |

+

"""

|

| 36 |

+

### ADD YOUR OWN CODE HERE - THIS IS JUST AN EXAMPLE ###

|

| 37 |

+

# Link all the steps together by calling them and passing the output

|

| 38 |

+

# of one step as the input of the next step.

|

| 39 |

+

random_state = 60

|

| 40 |

+

target = "target"

|

| 41 |

+

df_inference = data_loader(random_state=random_state, is_inference=True)

|

| 42 |

+

df_inference = inference_preprocessor(

|

| 43 |

+

dataset_inf=df_inference,

|

| 44 |

+

preprocess_pipeline=ExternalArtifact(name="preprocess_pipeline"),

|

| 45 |

+

target=target,

|

| 46 |

+

)

|

| 47 |

+

inference_predict(

|

| 48 |

+

dataset_inf=df_inference,

|

| 49 |

+

)

|

| 50 |

+

### END CODE HERE ###

|

pipelines/training.py

ADDED

|

@@ -0,0 +1,61 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

# {% include 'template/license_header' %}

|

| 2 |

+

|

| 3 |

+

from typing import Optional

|

| 4 |

+

from uuid import UUID

|

| 5 |

+

|

| 6 |

+

from steps import model_evaluator, model_trainer, model_promoter

|

| 7 |

+

from zenml import ExternalArtifact, pipeline

|

| 8 |

+

from zenml.logger import get_logger

|

| 9 |

+

|

| 10 |

+

from pipelines import (

|

| 11 |

+

feature_engineering,

|

| 12 |

+

)

|

| 13 |

+

|

| 14 |

+

logger = get_logger(__name__)

|

| 15 |

+

|

| 16 |

+

|

| 17 |

+

@pipeline(enable_cache=True)

|

| 18 |

+

def breast_cancer_training(

|

| 19 |

+

train_dataset_id: Optional[UUID] = None,

|

| 20 |

+

test_dataset_id: Optional[UUID] = None,

|

| 21 |

+

min_train_accuracy: float = 0.0,

|

| 22 |

+

min_test_accuracy: float = 0.0,

|

| 23 |

+

):

|

| 24 |

+

"""

|

| 25 |

+

Model training pipeline.

|

| 26 |

+

|

| 27 |

+

This is a pipeline that loads the data, processes it and splits

|

| 28 |

+

it into train and test sets, then search for best hyperparameters,

|

| 29 |

+

trains and evaluates a model.

|

| 30 |

+

|

| 31 |

+

Args:

|

| 32 |

+

test_size: Size of holdout set for training 0.0..1.0

|

| 33 |

+

drop_na: If `True` NA values will be removed from dataset

|

| 34 |

+

normalize: If `True` dataset will be normalized with MinMaxScaler

|

| 35 |

+

drop_columns: List of columns to drop from dataset

|

| 36 |

+

"""

|

| 37 |

+

### ADD YOUR OWN CODE HERE - THIS IS JUST AN EXAMPLE ###

|

| 38 |

+

# Link all the steps together by calling them and passing the output

|

| 39 |

+

# of one step as the input of the next step.

|

| 40 |

+

|

| 41 |

+

# Execute Feature Engineering Pipeline

|

| 42 |

+

if train_dataset_id is None or test_dataset_id is None:

|

| 43 |

+

dataset_trn, dataset_tst = feature_engineering()

|

| 44 |

+

else:

|

| 45 |

+

dataset_trn = ExternalArtifact(id=train_dataset_id)

|

| 46 |

+

dataset_tst = ExternalArtifact(id=test_dataset_id)

|

| 47 |

+

|

| 48 |

+

model = model_trainer(

|

| 49 |

+

dataset_trn=dataset_trn,

|

| 50 |

+

)

|

| 51 |

+

|

| 52 |

+

acc = model_evaluator(

|

| 53 |

+

model=model,

|

| 54 |

+

dataset_trn=dataset_trn,

|

| 55 |

+

dataset_tst=dataset_tst,

|

| 56 |

+

min_train_accuracy=min_train_accuracy,

|

| 57 |

+

min_test_accuracy=min_test_accuracy,

|

| 58 |

+

)

|

| 59 |

+

|

| 60 |

+

model_promoter(accuracy=acc)

|

| 61 |

+

### END CODE HERE ###

|

requirements.txt

ADDED

|

@@ -0,0 +1,3 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

zenml[server]>=0.50.0

|

| 2 |

+

notebook

|

| 3 |

+

scikit-learn<1.3

|

run.ipynb

ADDED

|

@@ -0,0 +1,981 @@

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

|

| 1 |

+

{

|

| 2 |

+

"cells": [

|

| 3 |

+

{

|

| 4 |

+

"cell_type": "code",

|

| 5 |

+

"execution_count": 1,

|

| 6 |

+

"id": "081d5616",

|

| 7 |

+

"metadata": {},

|

| 8 |

+

"outputs": [

|

| 9 |

+

{

|

| 10 |

+

"name": "stdout",

|

| 11 |

+

"output_type": "stream",

|

| 12 |

+

"text": [

|

| 13 |

+

"\u001b[1;35mNumExpr defaulting to 8 threads.\u001b[0m\n",

|

| 14 |

+

"\u001b[?25l\u001b[2;36mFound existing ZenML repository at path \u001b[0m\n",

|

| 15 |

+

"\u001b[2;32m'/home/apenner/PycharmProjects/template-starter/template'\u001b[0m\u001b[2;36m.\u001b[0m\n",

|

| 16 |

+

"\u001b[2;32m⠋\u001b[0m\u001b[2;36m Initializing ZenML repository at \u001b[0m\n",

|

| 17 |

+

"\u001b[2;36m/home/apenner/PycharmProjects/template-starter/template.\u001b[0m\n",

|

| 18 |

+

"\u001b[2K\u001b[1A\u001b[2K\u001b[1A\u001b[2K\u001b[32m⠋\u001b[0m Initializing ZenML repository at \n",

|

| 19 |

+

"/home/apenner/PycharmProjects/template-starter/template.\n",

|

| 20 |

+

"\n",

|

| 21 |

+

"\u001b[1A\u001b[2K\u001b[1A\u001b[2K\u001b[1A\u001b[2K\u001b[1;35mNumExpr defaulting to 8 threads.\u001b[0m\n",

|

| 22 |

+

"\u001b[2K\u001b[2;36mActive repository stack set to: \u001b[0m\u001b[2;32m'default'\u001b[0m.\n",

|

| 23 |

+

"\u001b[2K\u001b[32m⠙\u001b[0m Setting the repository active stack to 'default'...t'...\u001b[0m\n",

|

| 24 |

+

"\u001b[1A\u001b[2K"

|

| 25 |

+

]

|

| 26 |

+

}

|

| 27 |

+

],

|

| 28 |

+

"source": [

|

| 29 |

+

"!zenml init\n",

|

| 30 |

+

"!zenml stack set default"

|

| 31 |

+

]

|

| 32 |

+

},

|

| 33 |

+

{

|

| 34 |

+

"cell_type": "code",

|

| 35 |

+

"execution_count": 2,

|

| 36 |

+

"id": "79f775f2",

|

| 37 |

+

"metadata": {},

|

| 38 |

+

"outputs": [

|

| 39 |

+

{

|

| 40 |

+

"name": "stdout",

|

| 41 |

+

"output_type": "stream",

|

| 42 |

+

"text": [

|

| 43 |

+

"\u001b[1;35mNumExpr defaulting to 8 threads.\u001b[0m\n"

|

| 44 |

+

]

|

| 45 |

+

}

|

| 46 |

+

],

|

| 47 |

+

"source": [

|

| 48 |

+

"# Do the imports at the top\n",

|

| 49 |

+

"\n",

|

| 50 |

+

"import random\n",

|

| 51 |

+

"from zenml import ExternalArtifact, pipeline \n",

|

| 52 |

+

"from zenml.client import Client\n",

|

| 53 |

+

"from zenml.logger import get_logger\n",

|

| 54 |

+

"from uuid import UUID\n",

|

| 55 |

+

"\n",

|

| 56 |

+

"import os\n",

|

| 57 |

+

"from typing import Optional, List\n",

|

| 58 |

+

"\n",

|

| 59 |

+

"from zenml import pipeline\n",

|

| 60 |

+

"\n",

|

| 61 |

+

"from steps import (\n",

|

| 62 |

+

" data_loader,\n",

|

| 63 |

+

" data_preprocessor,\n",

|

| 64 |

+

" data_splitter,\n",

|

| 65 |

+

" model_evaluator,\n",

|

| 66 |

+

" model_trainer,\n",

|

| 67 |

+

" inference_predict,\n",

|

| 68 |

+

" inference_preprocessor\n",

|

| 69 |

+

")\n",

|

| 70 |

+

"\n",

|

| 71 |

+

"logger = get_logger(__name__)\n",

|

| 72 |

+

"\n",

|

| 73 |

+

"client = Client()"

|

| 74 |

+

]

|

| 75 |

+

},

|

| 76 |

+

{

|

| 77 |

+

"cell_type": "code",

|

| 78 |

+

"execution_count": 3,

|

| 79 |

+

"id": "b50a9537",

|

| 80 |

+

"metadata": {},

|

| 81 |

+

"outputs": [],

|

| 82 |

+

"source": [

|

| 83 |

+

"@pipeline\n",

|

| 84 |

+

"def feature_engineering(\n",

|

| 85 |

+

" test_size: float = 0.2,\n",

|

| 86 |

+

" drop_na: Optional[bool] = None,\n",

|

| 87 |

+

" normalize: Optional[bool] = None,\n",

|

| 88 |

+

" drop_columns: Optional[List[str]] = None,\n",

|

| 89 |

+

" target: Optional[str] = \"target\",\n",

|

| 90 |

+

"):\n",

|

| 91 |

+

" \"\"\"\n",

|

| 92 |

+

" Feature engineering pipeline.\n",

|

| 93 |

+

"\n",

|

| 94 |

+

" This is a pipeline that loads the data, processes it and splits\n",

|

| 95 |

+

" it into train and test sets.\n",

|

| 96 |

+

"\n",

|

| 97 |

+

" Args:\n",

|

| 98 |

+

" test_size: Size of holdout set for training 0.0..1.0\n",

|

| 99 |

+

" drop_na: If `True` NA values will be removed from dataset\n",

|

| 100 |

+

" normalize: If `True` dataset will be normalized with MinMaxScaler\n",

|

| 101 |

+

" drop_columns: List of columns to drop from dataset\n",

|

| 102 |

+

" target: Name of target column in dataset\n",

|

| 103 |

+

" \"\"\"\n",

|

| 104 |

+

" ### ADD YOUR OWN CODE HERE - THIS IS JUST AN EXAMPLE ###\n",

|

| 105 |

+

" # Link all the steps together by calling them and passing the output\n",

|

| 106 |

+

" # of one step as the input of the next step.\n",

|

| 107 |

+

" raw_data = data_loader(random_state=random.randint(0, 100), target=target)\n",

|

| 108 |

+

" dataset_trn, dataset_tst = data_splitter(\n",

|

| 109 |

+

" dataset=raw_data,\n",

|

| 110 |

+

" test_size=test_size,\n",

|

| 111 |

+

" )\n",

|

| 112 |

+

" dataset_trn, dataset_tst, _ = data_preprocessor(\n",

|

| 113 |

+

" dataset_trn=dataset_trn,\n",

|

| 114 |

+

" dataset_tst=dataset_tst,\n",

|

| 115 |

+

" drop_na=drop_na,\n",

|

| 116 |

+

" normalize=normalize,\n",

|

| 117 |

+

" drop_columns=drop_columns,\n",

|

| 118 |

+

" target=target,\n",

|

| 119 |

+

" )\n",

|

| 120 |

+

" \n",

|

| 121 |

+

" return dataset_trn, dataset_tst"

|

| 122 |

+

]

|

| 123 |

+

},

|

| 124 |

+

{

|

| 125 |

+

"cell_type": "code",

|

| 126 |

+

"execution_count": 4,

|

| 127 |

+

"id": "bc5feef4-7016-420e-9af9-2e87ff666f74",

|

| 128 |

+

"metadata": {},

|

| 129 |

+

"outputs": [],

|

| 130 |

+

"source": [

|

| 131 |

+

"pipeline_args = {}\n",

|

| 132 |

+

"pipeline_args[\"config_path\"] = os.path.join(\"configs\", \"feature_engineering.yaml\")\n",

|

| 133 |

+

"fe_p_configured = feature_engineering.with_options(**pipeline_args)"

|

| 134 |

+

]

|

| 135 |

+

},

|

| 136 |

+

{

|

| 137 |

+

"cell_type": "code",

|

| 138 |

+

"execution_count": 5,

|

| 139 |

+

"id": "75cf3740-b2d8-4c4b-b91b-dc1637000880",

|

| 140 |

+

"metadata": {},

|

| 141 |

+

"outputs": [

|

| 142 |

+

{

|

| 143 |

+

"name": "stdout",

|

| 144 |

+

"output_type": "stream",

|

| 145 |

+

"text": [

|

| 146 |

+

"\u001b[1;35mInitiating a new run for the pipeline: \u001b[0m\u001b[1;36mfeature_engineering\u001b[1;35m.\u001b[0m\n",

|

| 147 |

+

"\u001b[1;35mReusing registered version: \u001b[0m\u001b[1;36m(version: 1)\u001b[1;35m.\u001b[0m\n",

|

| 148 |

+

"\u001b[1;35mNew model version \u001b[0m\u001b[1;36m34\u001b[1;35m was created.\u001b[0m\n",

|

| 149 |

+

"\u001b[1;35mExecuting a new run.\u001b[0m\n",

|

| 150 |

+

"\u001b[1;35mUsing user: \u001b[0m\u001b[1;36malexej@zenml.io\u001b[1;35m\u001b[0m\n",

|

| 151 |

+

"\u001b[1;35mUsing stack: \u001b[0m\u001b[1;36mdefault\u001b[1;35m\u001b[0m\n",

|

| 152 |

+

"\u001b[1;35m artifact_store: \u001b[0m\u001b[1;36mdefault\u001b[1;35m\u001b[0m\n",

|

| 153 |

+

"\u001b[1;35m orchestrator: \u001b[0m\u001b[1;36mdefault\u001b[1;35m\u001b[0m\n",

|

| 154 |

+

"\u001b[1;35mStep \u001b[0m\u001b[1;36mdata_loader\u001b[1;35m has started.\u001b[0m\n",

|

| 155 |

+

"\u001b[1;35mDataset with 541 records loaded!\u001b[0m\n",

|

| 156 |

+

"\u001b[1;35mStep \u001b[0m\u001b[1;36mdata_loader\u001b[1;35m has finished in \u001b[0m\u001b[1;36m6.777s\u001b[1;35m.\u001b[0m\n",

|

| 157 |

+

"\u001b[1;35mStep \u001b[0m\u001b[1;36mdata_splitter\u001b[1;35m has started.\u001b[0m\n",

|

| 158 |

+

"\u001b[1;35mStep \u001b[0m\u001b[1;36mdata_splitter\u001b[1;35m has finished in \u001b[0m\u001b[1;36m11.345s\u001b[1;35m.\u001b[0m\n",

|

| 159 |

+

"\u001b[1;35mStep \u001b[0m\u001b[1;36mdata_preprocessor\u001b[1;35m has started.\u001b[0m\n",

|

| 160 |

+

"\u001b[1;35mStep \u001b[0m\u001b[1;36mdata_preprocessor\u001b[1;35m has finished in \u001b[0m\u001b[1;36m14.866s\u001b[1;35m.\u001b[0m\n",

|

| 161 |

+

"\u001b[1;35mRun \u001b[0m\u001b[1;36mfeature_engineering-2023_12_06-09_08_46_821042\u001b[1;35m has finished in \u001b[0m\u001b[1;36m36.198s\u001b[1;35m.\u001b[0m\n",

|

| 162 |

+

"\u001b[1;35mDashboard URL: https://1cf18d95-zenml.cloudinfra.zenml.io/workspaces/default/pipelines/52874ade-f314-45ab-b9bf-e95fb29290b8/runs/9d9e49b1-d78f-478b-991e-da87b0560512/dag\u001b[0m\n"

|

| 163 |

+

]

|

| 164 |

+

}

|

| 165 |

+

],

|

| 166 |

+

"source": [

|

| 167 |

+

"latest_run = fe_p_configured()"

|

| 168 |

+

]

|

| 169 |

+

},

|

| 170 |

+

{

|

| 171 |

+

"cell_type": "code",