up

This view is limited to 50 files because it contains too many changes.

- .gitattributes +1 -0

- GenAD-main/LICENSE +201 -0

- GenAD-main/README.md +127 -0

- GenAD-main/assets/comparison.png +0 -0

- GenAD-main/assets/demo.gif +3 -0

- GenAD-main/assets/framework.png +0 -0

- GenAD-main/assets/results.png +0 -0

- GenAD-main/docs/install.md +66 -0

- GenAD-main/docs/visualization.md +10 -0

- GenAD-main/projects/__init__.py +0 -0

- GenAD-main/projects/__pycache__/__init__.cpython-38.pyc +0 -0

- GenAD-main/projects/configs/VAD/GenAD_config.py +443 -0

- GenAD-main/projects/configs/_base_/datasets/coco_instance.py +48 -0

- GenAD-main/projects/configs/_base_/datasets/kitti-3d-3class.py +140 -0

- GenAD-main/projects/configs/_base_/datasets/kitti-3d-car.py +138 -0

- GenAD-main/projects/configs/_base_/datasets/lyft-3d.py +136 -0

- GenAD-main/projects/configs/_base_/datasets/nuim_instance.py +59 -0

- GenAD-main/projects/configs/_base_/datasets/nus-3d.py +142 -0

- GenAD-main/projects/configs/_base_/datasets/nus-mono3d.py +100 -0

- GenAD-main/projects/configs/_base_/datasets/range100_lyft-3d.py +136 -0

- GenAD-main/projects/configs/_base_/datasets/s3dis-3d-5class.py +114 -0

- GenAD-main/projects/configs/_base_/datasets/s3dis_seg-3d-13class.py +139 -0

- GenAD-main/projects/configs/_base_/datasets/scannet-3d-18class.py +128 -0

- GenAD-main/projects/configs/_base_/datasets/scannet_seg-3d-20class.py +132 -0

- GenAD-main/projects/configs/_base_/datasets/sunrgbd-3d-10class.py +107 -0

- GenAD-main/projects/configs/_base_/datasets/waymoD5-3d-3class.py +145 -0

- GenAD-main/projects/configs/_base_/datasets/waymoD5-3d-car.py +143 -0

- GenAD-main/projects/configs/_base_/default_runtime.py +18 -0

- GenAD-main/projects/configs/_base_/models/3dssd.py +77 -0

- GenAD-main/projects/configs/_base_/models/cascade_mask_rcnn_r50_fpn.py +200 -0

- GenAD-main/projects/configs/_base_/models/centerpoint_01voxel_second_secfpn_nus.py +83 -0

- GenAD-main/projects/configs/_base_/models/centerpoint_02pillar_second_secfpn_nus.py +83 -0

- GenAD-main/projects/configs/_base_/models/fcos3d.py +74 -0

- GenAD-main/projects/configs/_base_/models/groupfree3d.py +71 -0

- GenAD-main/projects/configs/_base_/models/h3dnet.py +341 -0

- GenAD-main/projects/configs/_base_/models/hv_pointpillars_fpn_lyft.py +22 -0

- GenAD-main/projects/configs/_base_/models/hv_pointpillars_fpn_nus.py +96 -0

- GenAD-main/projects/configs/_base_/models/hv_pointpillars_fpn_range100_lyft.py +22 -0

- GenAD-main/projects/configs/_base_/models/hv_pointpillars_secfpn_kitti.py +93 -0

- GenAD-main/projects/configs/_base_/models/hv_pointpillars_secfpn_waymo.py +108 -0

- GenAD-main/projects/configs/_base_/models/hv_second_secfpn_kitti.py +89 -0

- GenAD-main/projects/configs/_base_/models/hv_second_secfpn_waymo.py +100 -0

- GenAD-main/projects/configs/_base_/models/imvotenet_image.py +108 -0

- GenAD-main/projects/configs/_base_/models/mask_rcnn_r50_fpn.py +124 -0

- GenAD-main/projects/configs/_base_/models/paconv_cuda_ssg.py +7 -0

- GenAD-main/projects/configs/_base_/models/paconv_ssg.py +49 -0

- GenAD-main/projects/configs/_base_/models/parta2.py +201 -0

- GenAD-main/projects/configs/_base_/models/pointnet2_msg.py +28 -0

- GenAD-main/projects/configs/_base_/models/pointnet2_ssg.py +35 -0

- GenAD-main/projects/configs/_base_/models/votenet.py +73 -0

.gitattributes

CHANGED

@@ -33,3 +33,4 @@ saved_model/**/* filter=lfs diff=lfs merge=lfs -text

 *.zip filter=lfs diff=lfs merge=lfs -text
 *.zst filter=lfs diff=lfs merge=lfs -text
 *tfevents* filter=lfs diff=lfs merge=lfs -text
+GenAD-main/assets/demo.gif filter=lfs diff=lfs merge=lfs -text

GenAD-main/LICENSE

ADDED

|

@@ -0,0 +1,201 @@


Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/

TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

1. Definitions.

"License" shall mean the terms and conditions for use, reproduction,
and distribution as defined by Sections 1 through 9 of this document.

"Licensor" shall mean the copyright owner or entity authorized by
the copyright owner that is granting the License.

"Legal Entity" shall mean the union of the acting entity and all
other entities that control, are controlled by, or are under common
control with that entity. For the purposes of this definition,
"control" means (i) the power, direct or indirect, to cause the
direction or management of such entity, whether by contract or
otherwise, or (ii) ownership of fifty percent (50%) or more of the
outstanding shares, or (iii) beneficial ownership of such entity.

"You" (or "Your") shall mean an individual or Legal Entity
exercising permissions granted by this License.

"Source" form shall mean the preferred form for making modifications,
including but not limited to software source code, documentation
source, and configuration files.

"Object" form shall mean any form resulting from mechanical
transformation or translation of a Source form, including but
not limited to compiled object code, generated documentation,
and conversions to other media types.

"Work" shall mean the work of authorship, whether in Source or
Object form, made available under the License, as indicated by a
copyright notice that is included in or attached to the work
(an example is provided in the Appendix below).

"Derivative Works" shall mean any work, whether in Source or Object
form, that is based on (or derived from) the Work and for which the
editorial revisions, annotations, elaborations, or other modifications
represent, as a whole, an original work of authorship. For the purposes
of this License, Derivative Works shall not include works that remain
separable from, or merely link (or bind by name) to the interfaces of,
the Work and Derivative Works thereof.

"Contribution" shall mean any work of authorship, including
the original version of the Work and any modifications or additions
to that Work or Derivative Works thereof, that is intentionally
submitted to Licensor for inclusion in the Work by the copyright owner
or by an individual or Legal Entity authorized to submit on behalf of
the copyright owner. For the purposes of this definition, "submitted"
means any form of electronic, verbal, or written communication sent
to the Licensor or its representatives, including but not limited to
communication on electronic mailing lists, source code control systems,
and issue tracking systems that are managed by, or on behalf of, the
Licensor for the purpose of discussing and improving the Work, but
excluding communication that is conspicuously marked or otherwise
designated in writing by the copyright owner as "Not a Contribution."

"Contributor" shall mean Licensor and any individual or Legal Entity
on behalf of whom a Contribution has been received by Licensor and
subsequently incorporated within the Work.

2. Grant of Copyright License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
copyright license to reproduce, prepare Derivative Works of,
publicly display, publicly perform, sublicense, and distribute the
Work and such Derivative Works in Source or Object form.

3. Grant of Patent License. Subject to the terms and conditions of
this License, each Contributor hereby grants to You a perpetual,
worldwide, non-exclusive, no-charge, royalty-free, irrevocable
(except as stated in this section) patent license to make, have made,
use, offer to sell, sell, import, and otherwise transfer the Work,
where such license applies only to those patent claims licensable
by such Contributor that are necessarily infringed by their
Contribution(s) alone or by combination of their Contribution(s)
with the Work to which such Contribution(s) was submitted. If You
institute patent litigation against any entity (including a
cross-claim or counterclaim in a lawsuit) alleging that the Work
or a Contribution incorporated within the Work constitutes direct
or contributory patent infringement, then any patent licenses
granted to You under this License for that Work shall terminate
as of the date such litigation is filed.

4. Redistribution. You may reproduce and distribute copies of the
Work or Derivative Works thereof in any medium, with or without
modifications, and in Source or Object form, provided that You
meet the following conditions:

(a) You must give any other recipients of the Work or
Derivative Works a copy of this License; and

(b) You must cause any modified files to carry prominent notices
stating that You changed the files; and

(c) You must retain, in the Source form of any Derivative Works
that You distribute, all copyright, patent, trademark, and
attribution notices from the Source form of the Work,
excluding those notices that do not pertain to any part of
the Derivative Works; and

(d) If the Work includes a "NOTICE" text file as part of its
distribution, then any Derivative Works that You distribute must
include a readable copy of the attribution notices contained
within such NOTICE file, excluding those notices that do not
pertain to any part of the Derivative Works, in at least one
of the following places: within a NOTICE text file distributed
as part of the Derivative Works; within the Source form or
documentation, if provided along with the Derivative Works; or,
within a display generated by the Derivative Works, if and
wherever such third-party notices normally appear. The contents
of the NOTICE file are for informational purposes only and
do not modify the License. You may add Your own attribution
notices within Derivative Works that You distribute, alongside
or as an addendum to the NOTICE text from the Work, provided
that such additional attribution notices cannot be construed
as modifying the License.

You may add Your own copyright statement to Your modifications and
may provide additional or different license terms and conditions
for use, reproduction, or distribution of Your modifications, or
for any such Derivative Works as a whole, provided Your use,
reproduction, and distribution of the Work otherwise complies with
the conditions stated in this License.

5. Submission of Contributions. Unless You explicitly state otherwise,
any Contribution intentionally submitted for inclusion in the Work
by You to the Licensor shall be under the terms and conditions of
this License, without any additional terms or conditions.
Notwithstanding the above, nothing herein shall supersede or modify
the terms of any separate license agreement you may have executed
with Licensor regarding such Contributions.

6. Trademarks. This License does not grant permission to use the trade
names, trademarks, service marks, or product names of the Licensor,
except as required for reasonable and customary use in describing the
origin of the Work and reproducing the content of the NOTICE file.

7. Disclaimer of Warranty. Unless required by applicable law or
agreed to in writing, Licensor provides the Work (and each
Contributor provides its Contributions) on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or
implied, including, without limitation, any warranties or conditions
of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A
PARTICULAR PURPOSE. You are solely responsible for determining the
appropriateness of using or redistributing the Work and assume any
risks associated with Your exercise of permissions under this License.

8. Limitation of Liability. In no event and under no legal theory,
whether in tort (including negligence), contract, or otherwise,
unless required by applicable law (such as deliberate and grossly
negligent acts) or agreed to in writing, shall any Contributor be
liable to You for damages, including any direct, indirect, special,
incidental, or consequential damages of any character arising as a
result of this License or out of the use or inability to use the
Work (including but not limited to damages for loss of goodwill,
work stoppage, computer failure or malfunction, or any and all
other commercial damages or losses), even if such Contributor
has been advised of the possibility of such damages.

9. Accepting Warranty or Additional Liability. While redistributing
the Work or Derivative Works thereof, You may choose to offer,
and charge a fee for, acceptance of support, warranty, indemnity,
or other liability obligations and/or rights consistent with this
License. However, in accepting such obligations, You may act only
on Your own behalf and on Your sole responsibility, not on behalf
of any other Contributor, and only if You agree to indemnify,
defend, and hold each Contributor harmless for any liability
incurred by, or claims asserted against, such Contributor by reason
of your accepting any such warranty or additional liability.

END OF TERMS AND CONDITIONS

APPENDIX: How to apply the Apache License to your work.

To apply the Apache License to your work, attach the following
boilerplate notice, with the fields enclosed by brackets "[]"
replaced with your own identifying information. (Don't include
the brackets!) The text should be enclosed in the appropriate
comment syntax for the file format. We also recommend that a
file or class name and description of purpose be included on the
same "printed page" as the copyright notice for easier
identification within third-party archives.

Copyright [yyyy] [name of copyright owner]

Licensed under the Apache License, Version 2.0 (the "License");
you may not use this file except in compliance with the License.
You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software
distributed under the License is distributed on an "AS IS" BASIS,
WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
See the License for the specific language governing permissions and
limitations under the License.

GenAD-main/README.md

ADDED

|

@@ -0,0 +1,127 @@


| 1 |

+

# GenAD: Generative End-to-End Autonomous Driving

|

| 2 |

+

|

| 3 |

+

### [Paper](https://arxiv.org/pdf/2402.11502)

|

| 4 |

+

|

| 5 |

+

> GenAD: Generative End-to-End Autonomous Driving

|

| 6 |

+

|

| 7 |

+

> [Wenzhao Zheng](https://wzzheng.net/)\*, Ruiqi Song\*, [Xianda Guo](https://scholar.google.com/citations?user=jPvOqgYAAAAJ)\* $\dagger$, Chenming Zhang, [Long Chen](https://scholar.google.com/citations?user=jzvXnkcAAAAJ)$\dagger$

|

| 8 |

+

|

| 9 |

+

\* Equal contributions $\dagger$ Corresponding authors

|

| 10 |

+

|

| 11 |

+

**GenAD casts autonomous driving as a generative modeling problem.**

|

| 12 |

+

|

| 13 |

+

## News

|

| 14 |

+

|

| 15 |

+

- **[2024/5/2]** Training and evaluation code release.

|

| 16 |

+

- **[2024/2/18]** Paper released on [arXiv](https://arxiv.org/pdf/2402.11502).

|

| 17 |

+

|

| 18 |

+

## Demo

|

| 19 |

+

|

| 20 |

+

|

| 21 |

+

|

| 22 |

+

## Overview

|

| 23 |

+

|

| 24 |

+

|

| 25 |

+

|

| 26 |

+

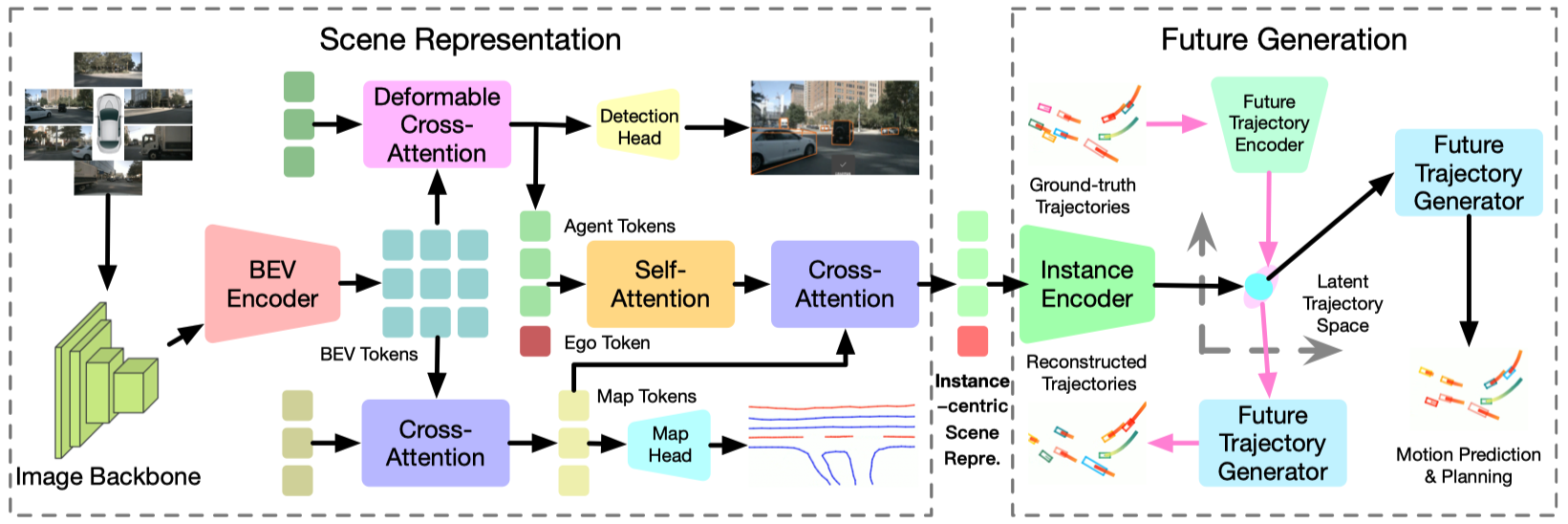

**Comparisons of the proposed generative end-to-end autonomous driving framework with the conventional pipeline.** Most existing methods follow a serial design of perception, prediction, and planning. They usually ignore the high-level interactions between the ego car and other agents and the structural prior of realistic trajectories. We model autonomous driving as a future generation problem and conduct motion prediction and ego planning simultaneously in a structural latent trajectory space.

|

| 27 |

+

|

| 28 |

+

## Results

|

| 29 |

+

|

| 30 |

+

|

| 31 |

+

|

| 32 |

+

## Code

|

| 33 |

+

### Dataset

|

| 34 |

+

|

| 35 |

+

Download nuScenes V1.0 full dataset data and CAN bus expansion data [HERE](https://www.nuscenes.org/download). Prepare nuscenes data as follows.

|

| 36 |

+

|

| 37 |

+

**Download CAN bus expansion**

|

| 38 |

+

|

| 39 |

+

```

|

| 40 |

+

# download 'can_bus.zip'

|

| 41 |

+

unzip can_bus.zip

|

| 42 |

+

# move can_bus to data dir

|

| 43 |

+

```

|

| 44 |

+

|

| 45 |

+

**Prepare nuScenes data**

|

| 46 |

+

|

| 47 |

+

*We genetate custom annotation files which are different from mmdet3d's*

|

| 48 |

+

|

| 49 |

+

Generate the train file and val file:

|

| 50 |

+

|

| 51 |

+

```

|

| 52 |

+

python tools/data_converter/genad_nuscenes_converter.py nuscenes --root-path ./data/nuscenes --out-dir ./data/nuscenes --extra-tag genad_nuscenes --version v1.0 --canbus ./data

|

| 53 |

+

```

|

| 54 |

+

|

| 55 |

+

Using the above code will generate `genad_nuscenes_infos_temporal_{train,val}.pkl`.

|

| 56 |

+

|

| 57 |

+

|

| 58 |

+

**Folder structure**

|

| 59 |

+

|

| 60 |

+

```

|

| 61 |

+

GenAD

|

| 62 |

+

├── projects/

|

| 63 |

+

├── tools/

|

| 64 |

+

├── configs/

|

| 65 |

+

├── ckpts/

|

| 66 |

+

│ ├── resnet50-19c8e357.pth

|

| 67 |

+

├── data/

|

| 68 |

+

│ ├── can_bus/

|

| 69 |

+

│ ├── nuscenes/

|

| 70 |

+

│ │ ├── maps/

|

| 71 |

+

│ │ ├── samples/

|

| 72 |

+

│ │ ├── sweeps/

|

| 73 |

+

│ │ ├── v1.0-test/

|

| 74 |

+

| | ├── v1.0-trainval/

|

| 75 |

+

| | ├── genad_nuscenes_infos_train.pkl

|

| 76 |

+

| | ├── genad_nuscenes_infos_val.pkl

|

| 77 |

+

```
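
Before training, it can help to confirm that the layout above is in place. The following is a minimal sketch, not part of the GenAD code base: `EXPECTED_PATHS` and `find_missing` are hypothetical names, and the list only covers the `ckpts/` and `data/` entries shown in the tree. The converter note above mentions `genad_nuscenes_infos_temporal_{train,val}.pkl`, so adjust the two `.pkl` entries if your generated files carry that name.

```python
from pathlib import Path
from typing import List

# Entries copied from the folder structure above, relative to the GenAD root.
# This list is an assumption for illustration; edit it to match your setup.
EXPECTED_PATHS = [
    "ckpts/resnet50-19c8e357.pth",
    "data/can_bus",
    "data/nuscenes/maps",
    "data/nuscenes/samples",
    "data/nuscenes/sweeps",
    "data/nuscenes/v1.0-test",
    "data/nuscenes/v1.0-trainval",
    "data/nuscenes/genad_nuscenes_infos_train.pkl",
    "data/nuscenes/genad_nuscenes_infos_val.pkl",
]


def find_missing(root: str, expected: List[str] = EXPECTED_PATHS) -> List[str]:
    """Return the expected entries that do not exist under `root`."""
    base = Path(root)
    return [rel for rel in expected if not (base / rel).exists()]
```

Calling `find_missing("/path/to/GenAD")` returns an empty list when every listed file and directory exists, and otherwise the relative paths that still need to be downloaded or generated.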

### Installation

Detailed package versions can be found in [requirements.txt](../requirements.txt).

- [Installation](docs/install.md)

### Getting Started

**Datasets**

https://drive.google.com/drive/folders/1gy7Ux-bk0sge77CsGgeEzPF9ImVn-WgJ?usp=drive_link

**Checkpoints**

https://drive.google.com/drive/folders/1nlAWJlvSHwqnTjEwlfiE99YJVRFKmqF9?usp=drive_link

Train GenAD with 8 GPUs

```shell
cd /path/to/GenAD
conda activate genad
python -m torch.distributed.run --nproc_per_node=8 --master_port=2333 tools/train.py projects/configs/VAD/GenAD_config.py --launcher pytorch --deterministic --work-dir path/to/save/outputs
```

Evaluate GenAD with 1 GPU

```shell
cd /path/to/GenAD
conda activate genad
CUDA_VISIBLE_DEVICES=0 python tools/test.py projects/configs/VAD/GenAD_config.py /path/to/ckpt.pth --launcher none --eval bbox --tmpdir outputs
```
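
If you launch these commands from a script, a small helper can keep the flags in one place. This is only a sketch under stated assumptions: `train_command` and `eval_command` are hypothetical helpers, not part of GenAD, and they use only the flags shown in the two commands above. `CONFIG` points at `projects/configs/VAD/GenAD_config.py`, the location of the config file in this commit's file list. Changing `num_gpus` changes the effective batch size, so results may differ from the 8-GPU setting.

```python
from typing import List

# Config location as it appears in this commit's file list.
CONFIG = "projects/configs/VAD/GenAD_config.py"


def train_command(work_dir: str, num_gpus: int = 8, master_port: int = 2333,
                  config: str = CONFIG) -> List[str]:
    """Build the distributed training command as an argument list."""
    return [
        "python", "-m", "torch.distributed.run",
        f"--nproc_per_node={num_gpus}",
        f"--master_port={master_port}",
        "tools/train.py", config,
        "--launcher", "pytorch",
        "--deterministic",
        "--work-dir", work_dir,
    ]


def eval_command(checkpoint: str, tmpdir: str = "outputs",
                 config: str = CONFIG) -> List[str]:
    """Build the single-GPU evaluation command as an argument list."""
    return [
        "python", "tools/test.py", config, checkpoint,
        "--launcher", "none",
        "--eval", "bbox",
        "--tmpdir", tmpdir,
    ]
```

The lists can be passed to `subprocess.run(..., check=True)` from the GenAD root with the `genad` environment active. For evaluation, set `CUDA_VISIBLE_DEVICES=0` in the environment passed to the subprocess, as in the shell command above.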

## Related Projects

Our code is based on [VAD](https://github.com/hustvl/VAD) and [UniAD](https://github.com/OpenDriveLab/UniAD).

## Citation

If you find this project helpful, please consider citing the following paper:

```
@article{zheng2024genad,
    title={GenAD: Generative End-to-End Autonomous Driving},
    author={Zheng, Wenzhao and Song, Ruiqi and Guo, Xianda and Zhang, Chenming and Chen, Long},
    journal={arXiv preprint arXiv: 2402.11502},
    year={2024}
}
```


GenAD-main/assets/comparison.png

ADDED

|

GenAD-main/assets/demo.gif

ADDED

|


GenAD-main/assets/framework.png

ADDED

|

GenAD-main/assets/results.png

ADDED

|

GenAD-main/docs/install.md

ADDED

|

@@ -0,0 +1,66 @@


# Installation

Detailed package versions can be found in [requirements.txt](../requirements.txt).

**a. Create a conda virtual environment and activate it.**
```shell
conda create -n genad python=3.8 -y
conda activate genad
```

**b. Install PyTorch and torchvision following the [official instructions](https://pytorch.org/).**
```shell
pip install torch==1.9.1+cu111 torchvision==0.10.1+cu111 torchaudio==0.9.1 -f https://download.pytorch.org/whl/torch_stable.html
# Recommended torch>=1.9
```

**c. Install gcc>=5 in conda env (optional).**
```shell
conda install -c omgarcia gcc-5 # gcc-6.2
```

**d. Install mmcv-full.**
```shell
pip install mmcv-full==1.4.0
# pip install mmcv-full==1.4.0 -f https://download.openmmlab.com/mmcv/dist/cu111/torch1.9.0/index.html
```

**e. Install mmdet and mmseg.**
```shell
pip install mmdet==2.14.0
pip install mmsegmentation==0.14.1
```

**f. Install timm.**
```shell
pip install timm
```

**g. Install mmdet3d.**
```shell
conda activate genad
git clone https://github.com/open-mmlab/mmdetection3d.git
cd /path/to/mmdetection3d
git checkout -f v0.17.1
python setup.py develop
```

**h. Install nuscenes-devkit.**
```shell
pip install nuscenes-devkit==1.1.9
```

**i. Clone GenAD.**
```shell
git clone https://github.com/wzzheng/GenAD.git
```

**j. Prepare pretrained models.**
```shell
cd /path/to/GenAD
mkdir ckpts
cd ckpts
wget https://download.pytorch.org/models/resnet50-19c8e357.pth
```
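
Because several packages are pinned to exact versions, a quick check after installation can catch mismatches early. The sketch below is an illustration rather than a script shipped with GenAD: `PINNED`, `installed_version`, `find_mismatches`, and `check_environment` are hypothetical names, and `PINNED` lists only the packages installed with an exact `pip install name==version` in the steps above. It relies on the standard-library `importlib.metadata`, available from Python 3.8.

```python
from importlib import metadata
from typing import Dict, Optional

# Versions copied from the pip commands above; extend as needed.
PINNED = {
    "mmcv-full": "1.4.0",
    "mmdet": "2.14.0",
    "mmsegmentation": "0.14.1",
    "nuscenes-devkit": "1.1.9",
}


def installed_version(name: str) -> Optional[str]:
    """Return the installed version of a distribution, or None if absent."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def find_mismatches(pinned: Dict[str, str],
                    installed: Dict[str, Optional[str]]) -> Dict[str, str]:
    """Map each package that is missing or at the wrong version to a message."""
    problems = {}
    for name, want in pinned.items():
        have = installed.get(name)
        if have is None:
            problems[name] = f"not installed (expected {want})"
        elif have != want:
            problems[name] = f"found {have}, expected {want}"
    return problems


def check_environment(pinned: Dict[str, str] = PINNED) -> Dict[str, str]:
    """Compare the active environment against the pinned versions."""
    installed = {name: installed_version(name) for name in pinned}
    return find_mismatches(pinned, installed)
```

Run `check_environment()` inside the `genad` conda environment. An empty dictionary means every pinned package matches. The comparison logic lives in `find_mismatches` so it can be tested without the packages installed.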

|

GenAD-main/docs/visualization.md

ADDED

|

@@ -0,0 +1,10 @@


# Visualization

We provide a script that renders the VAD predictions as a video [here](../tools/analysis_tools/visualization.py).

```shell
cd /path/to/GenAD/
conda activate genad
python tools/analysis_tools/visualization.py --result-path /path/to/inference/results --save-path /path/to/save/visualization/results
```


GenAD-main/projects/__init__.py

ADDED

|

File without changes

|

GenAD-main/projects/__pycache__/__init__.cpython-38.pyc

ADDED

|

Binary file (142 Bytes).

|

GenAD-main/projects/configs/VAD/GenAD_config.py

ADDED

|

@@ -0,0 +1,443 @@


| 1 |

+

_base_ = [

|

| 2 |

+

'../datasets/custom_nus-3d.py',

|

| 3 |

+

'../_base_/default_runtime.py'

|

| 4 |

+

]

|

| 5 |

+

#

|

| 6 |

+

plugin = True

|

| 7 |

+

plugin_dir = 'projects/mmdet3d_plugin/'

|

| 8 |

+

|

| 9 |

+

# If point cloud range is changed, the models should also change their point

|

| 10 |

+

# cloud range accordingly

|

| 11 |

+

point_cloud_range = [-15.0, -30.0, -2.0, 15.0, 30.0, 2.0]

|

| 12 |

+

voxel_size = [0.15, 0.15, 4]

|

| 13 |

+

|

| 14 |

+

img_norm_cfg = dict(

|

| 15 |

+

mean=[123.675, 116.28, 103.53], std=[58.395, 57.12, 57.375], to_rgb=True)

|

| 16 |

+

# For nuScenes we usually do 10-class detection

|

| 17 |

+

class_names = [

|

| 18 |

+

'car', 'truck', 'construction_vehicle', 'bus', 'trailer', 'barrier',

|

| 19 |

+

'motorcycle', 'bicycle', 'pedestrian', 'traffic_cone'

|

| 20 |

+

]

|

| 21 |

+

num_classes = len(class_names)

|

| 22 |

+

|

| 23 |

+

# map has classes: divider, ped_crossing, boundary

|

| 24 |

+

map_classes = ['divider', 'ped_crossing', 'boundary']

|

| 25 |

+

map_num_vec = 100

|

| 26 |

+

map_fixed_ptsnum_per_gt_line = 20 # currently only fixed_pts > 0 is supported

|

| 27 |

+

map_fixed_ptsnum_per_pred_line = 20

|

| 28 |

+

map_eval_use_same_gt_sample_num_flag = True

|

| 29 |

+

map_num_classes = len(map_classes)

|

| 30 |

+

|

| 31 |

+

input_modality = dict(

|

| 32 |

+

use_lidar=False,

|

| 33 |

+

use_camera=True,

|

| 34 |

+

use_radar=False,

|

| 35 |

+

use_map=False,

|

| 36 |

+

use_external=True)

|

| 37 |

+

|

| 38 |

+

_dim_ = 256

|

| 39 |

+

_pos_dim_ = _dim_//2

|

| 40 |

+

_ffn_dim_ = _dim_*2

|

| 41 |

+

_num_levels_ = 1

|

| 42 |

+

bev_h_ = 100

|

| 43 |

+

bev_w_ = 100

|

| 44 |

+

queue_length = 3 # each sequence contains `queue_length` frames.

|

| 45 |

+

total_epochs = 60

|

| 46 |

+

|

| 47 |

+

model = dict(

|

| 48 |

+

type='VAD',

|

| 49 |

+

use_grid_mask=True,

|

| 50 |

+

video_test_mode=True,

|

| 51 |

+

pretrained=dict(img='torchvision://resnet50'),

|

| 52 |

+

img_backbone=dict(

|

| 53 |

+

type='ResNet',

|

| 54 |

+

depth=50,

|

| 55 |

+

num_stages=4,

|

| 56 |

+

out_indices=(3,),

|

| 57 |

+

frozen_stages=1,

|

| 58 |

+

norm_cfg=dict(type='BN', requires_grad=False),

|

| 59 |

+

norm_eval=True,

|

| 60 |

+

style='pytorch'),

|

| 61 |

+

img_neck=dict(

|

| 62 |

+

type='FPN',

|

| 63 |

+

in_channels=[2048],

|

| 64 |

+

out_channels=_dim_,

|

| 65 |

+

start_level=0,

|

| 66 |

+

add_extra_convs='on_output',

|

| 67 |

+

num_outs=_num_levels_,

|

| 68 |

+

relu_before_extra_convs=True),

|

| 69 |

+

pts_bbox_head=dict(

|

| 70 |

+

type='VADHead',

|

| 71 |

+

map_thresh=0.5,

|

| 72 |

+

dis_thresh=0.2,

|

| 73 |

+

pe_normalization=True,

|

| 74 |

+

tot_epoch=total_epochs,

|

| 75 |

+

use_traj_lr_warmup=False,

|

| 76 |

+

query_thresh=0.0,

|

| 77 |

+

query_use_fix_pad=False,

|

| 78 |

+

ego_his_encoder=None,

|

| 79 |

+

ego_lcf_feat_idx=None,

|

| 80 |

+

valid_fut_ts=6,

|

| 81 |

+

agent_dim=300,

|

| 82 |

+

ego_agent_decoder=dict(

|

| 83 |

+

type='CustomTransformerDecoder',

|

| 84 |

+

num_layers=1,

|

| 85 |

+

return_intermediate=False,

|

| 86 |

+

transformerlayers=dict(

|

| 87 |

+

type='BaseTransformerLayer',

|

| 88 |

+

attn_cfgs=[

|

| 89 |

+

dict(

|

| 90 |

+

type='MultiheadAttention',

|

| 91 |

+

embed_dims=_dim_,

|

| 92 |

+

num_heads=8,

|

| 93 |

+

dropout=0.1),

|

| 94 |

+

],

|

| 95 |

+

feedforward_channels=_ffn_dim_,

|

| 96 |

+

ffn_dropout=0.1,

|

| 97 |

+

operation_order=('cross_attn', 'norm', 'ffn', 'norm'))),

|

| 98 |

+

ego_map_decoder=dict(

|

| 99 |

+

type='CustomTransformerDecoder',

|

| 100 |

+

num_layers=1,

|

| 101 |

+

return_intermediate=False,

|

| 102 |

+

transformerlayers=dict(

|

| 103 |

+

type='BaseTransformerLayer',

|

| 104 |

+

attn_cfgs=[

|

| 105 |

+

dict(

|

| 106 |

+

type='MultiheadAttention',

|

| 107 |

+

embed_dims=_dim_,

|

| 108 |

+

num_heads=8,

|

| 109 |

+

dropout=0.1),

|

| 110 |

+

],

|

| 111 |

+

feedforward_channels=_ffn_dim_,

|

| 112 |

+

ffn_dropout=0.1,

|

| 113 |

+

operation_order=('cross_attn', 'norm', 'ffn', 'norm'))),

|

| 114 |

+

motion_decoder=dict(

|

| 115 |

+

type='CustomTransformerDecoder',

|

| 116 |

+

num_layers=1,

|

| 117 |

+

return_intermediate=False,

|

| 118 |

+

transformerlayers=dict(

|

| 119 |

+

type='BaseTransformerLayer',

|

| 120 |

+

attn_cfgs=[

|

| 121 |

+

dict(

|

| 122 |

+

type='MultiheadAttention',

|

| 123 |

+

embed_dims=_dim_,

|

| 124 |

+

num_heads=8,

|

| 125 |

+

dropout=0.1),

|

| 126 |

+

],

|

| 127 |

+

feedforward_channels=_ffn_dim_,

|

| 128 |

+

ffn_dropout=0.1,

|

| 129 |

+

operation_order=('cross_attn', 'norm', 'ffn', 'norm'))),

|

| 130 |

+

motion_map_decoder=dict(

|

| 131 |

+

type='CustomTransformerDecoder',

|

| 132 |

+

num_layers=1,

|

| 133 |

+

return_intermediate=False,

|

| 134 |

+

transformerlayers=dict(

|

| 135 |

+

type='BaseTransformerLayer',

|

| 136 |

+

attn_cfgs=[

|

| 137 |

+

dict(

|

| 138 |

+

type='MultiheadAttention',

|

| 139 |

+

embed_dims=_dim_,

|

| 140 |

+

num_heads=8,

|

| 141 |

+

dropout=0.1),

|

| 142 |

+

],

|

| 143 |

+

feedforward_channels=_ffn_dim_,

|

| 144 |

+

ffn_dropout=0.1,

|

| 145 |

+

operation_order=('cross_attn', 'norm', 'ffn', 'norm'))),

|

| 146 |

+

use_pe=True,

|

| 147 |

+

bev_h=bev_h_,

|

| 148 |

+

bev_w=bev_w_,

|

| 149 |

+

num_query=300,

|

| 150 |

+

num_classes=num_classes,

|

| 151 |

+

in_channels=_dim_,

|

| 152 |

+

sync_cls_avg_factor=True,

|

| 153 |

+

with_box_refine=True,

|

| 154 |

+

as_two_stage=False,

|

| 155 |

+

map_num_vec=map_num_vec,

|

| 156 |

+

map_num_classes=map_num_classes,

|

| 157 |

+

map_num_pts_per_vec=map_fixed_ptsnum_per_pred_line,

|

| 158 |

+

map_num_pts_per_gt_vec=map_fixed_ptsnum_per_gt_line,

|

| 159 |

+

map_query_embed_type='instance_pts',

|

| 160 |

+

map_transform_method='minmax',

|

| 161 |

+

map_gt_shift_pts_pattern='v2',

|

| 162 |

+

map_dir_interval=1,

|

| 163 |

+

map_code_size=2,

|

| 164 |

+

map_code_weights=[1.0, 1.0, 1.0, 1.0],

|

| 165 |

+

transformer=dict(

|

| 166 |

+

type='VADPerceptionTransformer',

|

| 167 |

+

map_num_vec=map_num_vec,

|

| 168 |

+

map_num_pts_per_vec=map_fixed_ptsnum_per_pred_line,

|

| 169 |

+

rotate_prev_bev=True,

|

| 170 |

+

use_shift=True,

|

| 171 |

+

use_can_bus=True,

|

| 172 |

+

embed_dims=_dim_,

|

| 173 |

+

encoder=dict(

|

| 174 |

+

type='BEVFormerEncoder',

|

| 175 |

+

num_layers=3,

|

| 176 |

+

pc_range=point_cloud_range,

|

| 177 |

+

num_points_in_pillar=4,

|

| 178 |

+

return_intermediate=False,

|

| 179 |

+

transformerlayers=dict(

|

| 180 |

+

type='BEVFormerLayer',

|

| 181 |

+

attn_cfgs=[

|

| 182 |

+

dict(

|

| 183 |

+

type='TemporalSelfAttention',

|

| 184 |

+

embed_dims=_dim_,

|

| 185 |

+

num_levels=1),

|

| 186 |

+

dict(

|

| 187 |

+

type='SpatialCrossAttention',

|

| 188 |

+

pc_range=point_cloud_range,

|

| 189 |

+

deformable_attention=dict(

|

| 190 |

+

type='MSDeformableAttention3D',

|

| 191 |

+

embed_dims=_dim_,

|

| 192 |

+

num_points=8,

|

| 193 |

+

num_levels=_num_levels_),

|

| 194 |

+

embed_dims=_dim_,

|

| 195 |

+

)

|

| 196 |

+

],

|

| 197 |

+

feedforward_channels=_ffn_dim_,

|

| 198 |

+

ffn_dropout=0.1,

|

| 199 |

+

operation_order=('self_attn', 'norm', 'cross_attn', 'norm',

|

| 200 |

+

'ffn', 'norm'))),

|

| 201 |

+

decoder=dict(

|

| 202 |

+

type='DetectionTransformerDecoder',

|

| 203 |

+

num_layers=3,

|

| 204 |

+

return_intermediate=True,

|

| 205 |

+

transformerlayers=dict(

|

| 206 |

+

type='DetrTransformerDecoderLayer',

|

| 207 |

+

attn_cfgs=[

|

| 208 |

+

dict(

|

| 209 |

+

type='MultiheadAttention',

|

| 210 |

+

embed_dims=_dim_,

|

| 211 |

+

num_heads=8,

|

| 212 |

+

dropout=0.1),

|

| 213 |

+

dict(

|

| 214 |

+

type='CustomMSDeformableAttention',

|

| 215 |

+

embed_dims=_dim_,

|

| 216 |

+

num_levels=1),

|

| 217 |

+

],

|

| 218 |

+

feedforward_channels=_ffn_dim_,

|

| 219 |

+

ffn_dropout=0.1,

|

| 220 |

+

operation_order=('self_attn', 'norm', 'cross_attn', 'norm',

|

| 221 |

+

'ffn', 'norm'))),

|

| 222 |

+

map_decoder=dict(

|

| 223 |

+

type='MapDetectionTransformerDecoder',

|

| 224 |

+

num_layers=3,

|

| 225 |

+

return_intermediate=True,

|

| 226 |

+

transformerlayers=dict(

|

| 227 |

+

type='DetrTransformerDecoderLayer',

|

| 228 |

+

attn_cfgs=[

|

| 229 |

+

dict(

|

| 230 |

+

type='MultiheadAttention',

|

| 231 |

+

embed_dims=_dim_,

|

| 232 |

+

num_heads=8,

|

| 233 |

+

dropout=0.1),

|

| 234 |

+

dict(

|

| 235 |

+

type='CustomMSDeformableAttention',

|

| 236 |

+

embed_dims=_dim_,

|

| 237 |

+

num_levels=1),

|

| 238 |

+

],

|

| 239 |

+

feedforward_channels=_ffn_dim_,

|

| 240 |

+

ffn_dropout=0.1,

|

| 241 |

+

operation_order=('self_attn', 'norm', 'cross_attn', 'norm',

|

| 242 |

+

'ffn', 'norm')))),

|

| 243 |

+

bbox_coder=dict(

|

| 244 |

+

type='CustomNMSFreeCoder',

|

| 245 |

+

post_center_range=[-20, -35, -10.0, 20, 35, 10.0],

|

| 246 |

+

pc_range=point_cloud_range,

|

| 247 |

+

max_num=100,

|

| 248 |

+

voxel_size=voxel_size,

|

| 249 |

+

num_classes=num_classes),

|

| 250 |

+

map_bbox_coder=dict(

|

| 251 |

+

type='MapNMSFreeCoder',

|

| 252 |

+

post_center_range=[-20, -35, -20, -35, 20, 35, 20, 35],

|

| 253 |

+

pc_range=point_cloud_range,

|

| 254 |

+

max_num=50,

|

| 255 |

+

voxel_size=voxel_size,

|

| 256 |

+

num_classes=map_num_classes),

|

| 257 |

+

positional_encoding=dict(

|

| 258 |

+

type='LearnedPositionalEncoding',

|

| 259 |

+

num_feats=_pos_dim_,

|

| 260 |

+

row_num_embed=bev_h_,

|

| 261 |

+

col_num_embed=bev_w_,

|

| 262 |

+

),

|

| 263 |

+

loss_cls=dict(

|

| 264 |

+

type='FocalLoss',

|

| 265 |

+

use_sigmoid=True,

|

| 266 |

+

gamma=2.0,

|

| 267 |

+

alpha=0.25,

|

| 268 |

+

loss_weight=2.0),

|

| 269 |

+

loss_bbox=dict(type='L1Loss', loss_weight=0.25),

|

| 270 |

+

loss_traj=dict(type='L1Loss', loss_weight=0.2),

|

| 271 |

+

loss_traj_cls=dict(

|

| 272 |

+

type='FocalLoss',

|

| 273 |

+

use_sigmoid=True,

|

| 274 |

+

gamma=2.0,

|

| 275 |

+

alpha=0.25,

|

| 276 |

+

loss_weight=0.2),

|

| 277 |

+

loss_iou=dict(type='GIoULoss', loss_weight=0.0),

|

| 278 |

+

loss_map_cls=dict(

|

| 279 |

+

type='FocalLoss',

|

| 280 |

+

use_sigmoid=True,

|

| 281 |

+

gamma=2.0,

|

| 282 |

+

alpha=0.25,

|

| 283 |

+

loss_weight=2.0),

|

| 284 |

+

loss_map_bbox=dict(type='L1Loss', loss_weight=0.0),

|

| 285 |

+

loss_map_iou=dict(type='GIoULoss', loss_weight=0.0),

|

| 286 |

+

loss_map_pts=dict(type='PtsL1Loss', loss_weight=1.0),

|

| 287 |

+

loss_map_dir=dict(type='PtsDirCosLoss', loss_weight=0.005),

|

| 288 |

+

loss_plan_reg=dict(type='L1Loss', loss_weight=1.0),

|

| 289 |

+

loss_plan_bound=dict(type='PlanMapBoundLoss', loss_weight=1.0, dis_thresh=1.0),

|

| 290 |

+

loss_plan_col=dict(type='PlanCollisionLoss', loss_weight=1.0),

|

| 291 |

+

loss_plan_dir=dict(type='PlanMapDirectionLoss', loss_weight=0.5),

|

| 292 |

+

loss_vae_gen=dict(type='ProbabilisticLoss', loss_weight=1.0),

|

| 293 |

+

loss_diff_gen=dict(type='DiffusionLoss', loss_weight=0.5)),

|

| 294 |

+

# model training and testing settings

|

| 295 |

+

train_cfg=dict(pts=dict(

|

| 296 |

+

grid_size=[512, 512, 1],

|

| 297 |

+

voxel_size=voxel_size,

|

| 298 |

+

point_cloud_range=point_cloud_range,

|

| 299 |

+

out_size_factor=4,

|

| 300 |

+

assigner=dict(

|

| 301 |

+

type='HungarianAssigner3D',

|

| 302 |

+

cls_cost=dict(type='FocalLossCost', weight=2.0),

|

| 303 |

+

reg_cost=dict(type='BBox3DL1Cost', weight=0.25),

|

| 304 |

+

iou_cost=dict(type='IoUCost', weight=0.0), # Fake cost. This is just to make it compatible with DETR head.

|

| 305 |

+

pc_range=point_cloud_range),

|

| 306 |

+

map_assigner=dict(

|

| 307 |

+

type='MapHungarianAssigner3D',

|

| 308 |

+

cls_cost=dict(type='FocalLossCost', weight=2.0),

|

| 309 |

+

reg_cost=dict(type='BBoxL1Cost', weight=0.0, box_format='xywh'),

|

| 310 |

+

iou_cost=dict(type='IoUCost', iou_mode='giou', weight=0.0),

|

| 311 |

+

pts_cost=dict(type='OrderedPtsL1Cost', weight=1.0),

|

| 312 |

+

pc_range=point_cloud_range))))

|

| 313 |

+

|

| 314 |

+

dataset_type = 'VADCustomNuScenesDataset'

|

| 315 |

+

data_root = 'xxx/nuscenes/'

|

| 316 |

+

file_client_args = dict(backend='disk')

|

| 317 |

+

|

| 318 |

+

train_pipeline = [

|

| 319 |

+

dict(type='LoadMultiViewImageFromFiles', to_float32=True),

|

| 320 |

+

dict(type='PhotoMetricDistortionMultiViewImage'),

|

| 321 |

+

dict(type='LoadAnnotations3D', with_bbox_3d=True, with_label_3d=True, with_attr_label=True),

|

| 322 |

+

dict(type='CustomObjectRangeFilter', point_cloud_range=point_cloud_range),

|

| 323 |

+

dict(type='CustomObjectNameFilter', classes=class_names),

|

| 324 |

+

dict(type='NormalizeMultiviewImage', **img_norm_cfg),

|

| 325 |

+

dict(type='RandomScaleImageMultiViewImage', scales=[0.4]),

|

| 326 |

+

dict(type='PadMultiViewImage', size_divisor=32),

|

| 327 |

+

dict(type='CustomDefaultFormatBundle3D', class_names=class_names, with_ego=True),

|

| 328 |

+

dict(type='CustomCollect3D',

|

| 329 |

+

keys=['gt_bboxes_3d', 'gt_labels_3d', 'img', 'ego_his_trajs',

|

| 330 |

+

'ego_fut_trajs', 'ego_fut_masks', 'ego_fut_cmd', 'ego_lcf_feat', 'gt_attr_labels'])

|

| 331 |

+

]

|

| 332 |

+

|

| 333 |

+

test_pipeline = [

|

| 334 |

+

dict(type='LoadMultiViewImageFromFiles', to_float32=True),

|